openmcp-client

An all-in-one vscode plugin for MCP developers

Stars: 503

OpenMCP is an integrated plugin for MCP server debugging in vscode/trae/cursor that combines development and testing. It includes tools for testing MCP resources, managing large model interactions, and project-level management, and it supports multiple large models. The openmcp-sdk lets you deploy an MCP as an agent app with simple configuration and task execution. The project follows a modular design, so it can be assembled in different modes on different platforms.

README:


An all-in-one vscode/trae/cursor plugin for MCP server debugging.

Integrates the Inspector with basic MCP client functions, combining development and testing in one place.

Test MCP tools, prompts, and resources with a variety of tools.

Tested tools can be placed in the "Interactive Testing" module for large model interaction testing.

Complete project-level management panel for easier MCP project management at both project and global levels.

Supports multiple large models.

Supports XML mode and customized options for tool selection.
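
As a rough illustration of what an XML mode can involve, the sketch below pulls tool calls out of a plain-text model reply instead of relying on native function calling. It assumes that XML mode means the model writes its tool calls as XML inside the reply. The tag names (`tool_call`, `name`, `arguments`) and the `parseXmlToolCalls` helper are hypothetical and are not OpenMCP's actual format.

```typescript
// Hypothetical XML tool-call format: the tag names are illustrative
// assumptions, not the format OpenMCP actually uses.
interface ToolCall {
    name: string;
    args: Record<string, unknown>;
}

// Extract every well-formed tool call embedded in a model reply.
function parseXmlToolCalls(reply: string): ToolCall[] {
    // A fresh regex per call avoids sharing `lastIndex` state between calls.
    const pattern =
        /<tool_call>\s*<name>([\s\S]*?)<\/name>\s*<arguments>([\s\S]*?)<\/arguments>\s*<\/tool_call>/g;
    const calls: ToolCall[] = [];
    let match: RegExpExecArray | null;
    while ((match = pattern.exec(reply)) !== null) {
        let parsed: unknown;
        try {
            parsed = JSON.parse(match[2]);
        } catch {
            continue; // malformed JSON arguments: skip rather than guess
        }
        // Only a plain JSON object is accepted as an argument map.
        if (typeof parsed !== "object" || parsed === null || Array.isArray(parsed)) {
            continue;
        }
        calls.push({ name: match[1].trim(), args: parsed as Record<string, unknown> });
    }
    return calls;
}
```

Calls with malformed arguments are dropped here; a real client would more likely report them back to the model so it can retry.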

Once everything is tested and verified in openmcp-client, you can quickly and easily deploy your MCP as an agent app with openmcp-sdk:

npm install openmcp-sdk

Then deploy your agent with just a few lines of code:

import { OmAgent } from 'openmcp-sdk/service/sdk';

// create Agent

const agent = new OmAgent();

// Load configuration, which can be automatically generated after debugging with openmcp client

agent.loadMcpConfig('./mcpconfig.json');

// Read the debugged prompt

const prompt = await agent.getPrompt('hacknews', { topn: '5' });

// Execute the task

const res = await agent.ainvoke({ messages: prompt });

console.log('⚙️ Agent Response', res);

Output:

[2025/6/20 20:47:31] 🚀 [crawl4ai-mcp] 1.9.1 connected

[2025/6/20 20:47:35] 🤖 Agent wants to use these tools get_web_markdown

[2025/6/20 20:47:35] 🔧 using tool get_web_markdown

[2025/6/20 20:47:39] ✓ use tools success

[2025/6/20 20:47:46] 🤖 Agent wants to use these tools get_web_markdown, get_web_markdown, get_web_markdown

[2025/6/20 20:47:46] 🔧 using tool get_web_markdown

[2025/6/20 20:47:48] ✓ use tools success

[2025/6/20 20:47:48] 🔧 using tool get_web_markdown

[2025/6/20 20:47:54] ✓ use tools success

[2025/6/20 20:47:54] 🔧 using tool get_web_markdown

[2025/6/20 20:47:57] ✓ use tools success

⚙️ Agent Response

⌨️ Today's Tech Article Roundup

📌 How to Detect or Observe Passing Gravitational Waves?

Summary: This article explores the physics of gravitational waves, explaining their effects on space-time and how humans might perceive or observe this cosmic phenomenon.

Author: ynoxinul

Posted: 2 hours ago

Link: https://physics.stackexchange.com/questions/338912/how-would-a-passing-gravitational-wave-look-or-feel

📌 Learn Makefile Tutorial

Summary: A comprehensive Makefile tutorial for beginners and advanced users, covering basic syntax, variables, automatic rules, and advanced features to help developers manage project builds efficiently.

Author: dsego

Posted: 4 hours ago

Link: https://makefiletutorial.com/

📌 Hurl: Run and Test HTTP Requests in Plain Text

Summary: Hurl is a command-line tool that allows defining and executing HTTP requests in plain text format, ideal for data fetching and HTTP session testing. It supports chained requests, value capture, and response queries, making it perfect for testing REST, SOAP, and GraphQL APIs.

Author: flykespice

Posted: 8 hours ago

Link: https://github.com/Orange-OpenSource/hurl
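
The log above suggests a loop: the model asks for tools, each tool runs in turn, and the results go back to the model until it produces a final answer. The sketch below shows that general pattern with a pluggable model and tool set. `runTaskLoop`, `Model`, `Tool`, and `maxRounds` are hypothetical names chosen for illustration; this is not openmcp-sdk's implementation.

```typescript
// All names here are hypothetical; this is a generic tool loop,
// not the code behind OmAgent.ainvoke.
interface ToolRequest {
    name: string;
    args: Record<string, unknown>;
}

interface ModelTurn {
    text: string;
    toolRequests: ToolRequest[];
}

type Model = (history: string[]) => Promise<ModelTurn>;
type Tool = (args: Record<string, unknown>) => Promise<string>;

async function runTaskLoop(
    model: Model,
    tools: Record<string, Tool>,
    prompt: string,
    maxRounds = 8
): Promise<string> {
    const history: string[] = [prompt];
    for (let round = 0; round < maxRounds; round++) {
        const turn = await model(history);
        if (turn.toolRequests.length === 0) {
            return turn.text; // no tools requested: this is the final answer
        }
        // Run the requested tools one after another, as in the log above.
        for (const request of turn.toolRequests) {
            const tool = tools[request.name];
            const result = tool
                ? await tool(request.args)
                : `error: unknown tool ${request.name}`;
            history.push(`[${request.name}] ${result}`);
        }
    }
    // Bound the loop so a model that never stops asking cannot run forever.
    throw new Error(`no final answer after ${maxRounds} rounds`);
}
```

The round limit is a design choice of this sketch: without it, a model that keeps requesting tools would never return.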

Click here to learn how to contribute to OpenMCP.

- Email: [email protected]

- QQ: 782833642

- Wechat: contact lstmkirigaya

- Discord: https://discord.gg/SKTZRf6NzU

| Module | Feature | Priority | Status | Fix Priority |
|---|---|---|---|---|
| all | Complete basic infrastructure | Full Version | 100% | Done |
| render | Support cost analysis in chat mode | Iteration | 100% | Done |
| ext | Support basic MCP project management | Iteration | 100% | P0 |
| service | Support custom OpenAI-compatible large model integration | Full Version | 100% | Done |
| service | Support custom protocol large model integration | MVP | 0% | P1 |
| all | Support debugging multiple MCP Servers simultaneously | MVP | 100% | P0 |
| all | Support online verification via large models | Iteration | 100% | Done |
| all | Support saving user's server debugging work | Iteration | 100% | Done |
| render | High-risk operation permission confirmation | MVP | 0% | P1 |
| service | Hot update for connected MCP servers | MVP | 0% | P1 |
| service | Cloud sync for system configuration | MVP | 0% | P1 |
| all | System prompt management module | Iteration | 100% | Done |
| service | Tool-wise logging system | MVP | 0% | P1 |
| service | MCP security checks (prevent prompt injection, etc.) | MVP | 0% | P1 |
| service | Built-in OCR for character recognition | Iteration | 100% | Done |

OpenMCP adopts a layered modular design. By assembling different modules, it can be implemented in different modes on different platforms.

flowchart TD

subgraph OpenMCP Core Components

renderer[Renderer]

openmcpservice[OpenMCPService]

end

subgraph OpenMCP_Web["OpenMCP Web"]

renderer

openmcpservice

nginx[Nginx]

end

subgraph OpenMCP_Plugin["OpenMCP Plugin"]

renderer

openmcpservice

vscode[VSCode Plugin Code]

end

subgraph OpenMCP_App["OpenMCP App"]

renderer

openmcpservice

electron[Electron Code]

end

subgraph QQBot["OpenMCP-based QQ Bot"]

lagrange[Lagrange.OneBot]

openmcpservice

end

%% Dependencies

OpenMCP_Web -->|Frontend Rendering| renderer

OpenMCP_Web -->|Backend Service| openmcpservice

OpenMCP_Web -->|Reverse Proxy| nginx

OpenMCP_Plugin -->|UI Interface| renderer

OpenMCP_Plugin -->|Core Logic| openmcpservice

OpenMCP_Plugin -->|IDE Integration| vscode

OpenMCP_App -->|Frontend UI| renderer

OpenMCP_App -->|Local Service| openmcpservice

OpenMCP_App -->|Desktop Packaging| electron

QQBot -->|Protocol Adaptation| lagrange

QQBot -->|Business Logic| openmcpservice

- renderer: Frontend UI definitions

- service: Test components for renderer, including a simple forwarding layer

- src: VSCode plugin definitions
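
The assemblies in the flowchart above can also be written down as data, which makes it easy to see what every mode shares. This is a small sketch: the module and product names are taken from the flowchart, while the `Module` type, the `ASSEMBLIES` table, and the `sharedModules` helper are hypothetical and not part of the OpenMCP codebase.

```typescript
// Module names mirror the flowchart; the type and helper are hypothetical.
type Module =
    | "renderer"
    | "openmcpservice"
    | "nginx"
    | "vscode"
    | "electron"
    | "lagrange";

const ASSEMBLIES: Record<string, Module[]> = {
    "OpenMCP Web": ["renderer", "openmcpservice", "nginx"],
    "OpenMCP Plugin": ["renderer", "openmcpservice", "vscode"],
    "OpenMCP App": ["renderer", "openmcpservice", "electron"],
    "OpenMCP-based QQ Bot": ["lagrange", "openmcpservice"],
};

// Return the modules that appear in every assembly.
function sharedModules(assemblies: Record<string, Module[]>): Module[] {
    const lists = Object.keys(assemblies).map((name) => assemblies[name]);
    if (lists.length === 0) {
        return [];
    }
    return lists[0].filter((mod) =>
        lists.every((list) => list.indexOf(mod) !== -1)
    );
}
```

Because the QQ bot has no renderer, `openmcpservice` is the only module common to all four assemblies in this table.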

flowchart LR

D[renderer] <--> A[Dev Server] <--ws--> B[service]

B <--mcp--> m(MCP Server)

Project setup:

npm run setup

Start dev server:

npm run serve

flowchart LR

D[renderer] <--> A[extention.ts] <--> B[service]

B <--mcp--> m(MCP Server)

Build for deployment:

npm run build

Build vscode extension:

npm run build:plugin

Then just press F5, いただきます (Let's begin)
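
The two flowcharts above differ only in what sits between the renderer and the service: a dev server with a WebSocket in one case, the extension host in the other. One way to keep the renderer unaware of that difference is a small bridge interface over a swappable transport. The sketch below assumes JSON-encoded messages; `MessageBridge`, `Transport`, `createBridge`, and `echoTransport` are hypothetical names, and OpenMCP's real bridge code may look quite different.

```typescript
// Hypothetical names throughout; this only illustrates the idea of
// hiding the transport behind one interface.
interface Message {
    command: string;
    data: unknown;
}

type Handler = (message: Message) => void;

// What the renderer codes against, regardless of the host.
interface MessageBridge {
    post(message: Message): void;
    onMessage(handler: Handler): void;
}

// A raw string channel: a WebSocket in dev mode, the extension host in plugin mode.
interface Transport {
    send(raw: string): void;
    receive(listener: (raw: string) => void): void;
}

function createBridge(transport: Transport): MessageBridge {
    const handlers: Handler[] = [];
    transport.receive((raw) => {
        const message = JSON.parse(raw) as Message;
        handlers.forEach((handler) => handler(message));
    });
    return {
        post: (message) => transport.send(JSON.stringify(message)),
        onMessage: (handler) => {
            handlers.push(handler);
        },
    };
}

// In-memory stand-in that echoes every message back, used instead of a real socket.
function echoTransport(): Transport {
    let listener: (raw: string) => void = () => {};
    return {
        send: (raw) => listener(raw),
        receive: (next) => {
            listener = next;
        },
    };
}
```

With this shape, switching between dev mode and plugin mode means passing a different `Transport` to `createBridge`, while the renderer code stays the same.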

✅ npm run build ✅ npm run build:task-loop ✅ openmcp-client UT ✅ openmcp-sdk UT ✅ vscode extension UT

Alternative AI tools for openmcp-client

Similar Open Source Tools

PDFMathTranslate

PDFMathTranslate is a tool designed for translating scientific papers and conducting bilingual comparisons. It preserves formulas, charts, table of contents, and annotations. The tool supports multiple languages and diverse translation services. It provides a command-line tool, interactive user interface, and Docker deployment. Users can try the application through online demos. The tool offers various installation methods including command-line, portable, graphic user interface, and Docker. Advanced options allow users to customize translation settings. Additionally, the tool supports secondary development through APIs for Python and HTTP. Future plans include parsing layout with DocLayNet based models, fixing page rotation and format issues, supporting non-PDF/A files, and integrating plugins for Zotero and Obsidian.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

slidev-mcp

slidev-mcp is an intelligent slide generation tool based on Slidev that integrates large language model technology, allowing users to automatically generate professional online PPT presentations with simple descriptions. It dramatically lowers the barrier to using Slidev, provides natural language interactive slide creation, and offers automated generation of professional presentations. The tool also includes various features for environment and project management, slide content management, and utility tools to enhance the slide creation process.

ovos-installer

The ovos-installer is a simple and multilingual tool designed to install Open Voice OS and HiveMind using Bash, Whiptail, and Ansible. It supports various Linux distributions and provides an automated installation process. Users can easily start and stop services, update their Open Voice OS instance, and uninstall the tool if needed. The installer also allows for non-interactive installation through scenario files. It offers a user-friendly way to set up Open Voice OS on different systems.

llmvision-card

LLM Vision Timeline Card is a custom card designed to display the LLM Vision Timeline on your Home Assistant Dashboard. It requires LLM Vision set up in Home Assistant, Timeline provider set up in LLM Vision, and Blueprint or Automation to add events to the timeline. The card allows users to show events that occurred within a specified number of hours and customize the display based on categories and colors. It supports multiple languages for UI and icon generation.

chonkie

Chonkie is a feature-rich, easy-to-use, fast, lightweight, and wide-support chunking library designed to efficiently split texts into chunks. It integrates with various tokenizers, embedding models, and APIs, supporting 56 languages and offering cloud-ready functionality. Chonkie provides a modular pipeline approach called CHOMP for text processing, chunking, post-processing, and exporting. With multiple chunkers, refineries, porters, and handshakes, Chonkie offers a comprehensive solution for text chunking needs. It includes 24+ integrations, 3+ LLM providers, 2+ refineries, 2+ porters, and 4+ vector database connections, making it a versatile tool for text processing and analysis.

atlas-mcp-server

ATLAS (Adaptive Task & Logic Automation System) is a high-performance Model Context Protocol server designed for LLMs to manage complex task hierarchies. Built with TypeScript, it features ACID-compliant storage, efficient task tracking, and intelligent template management. ATLAS provides LLM Agents task management through a clean, flexible tool interface. The server implements the Model Context Protocol (MCP) for standardized communication between LLMs and external systems, offering hierarchical task organization, task state management, smart templates, enterprise features, and performance optimization.

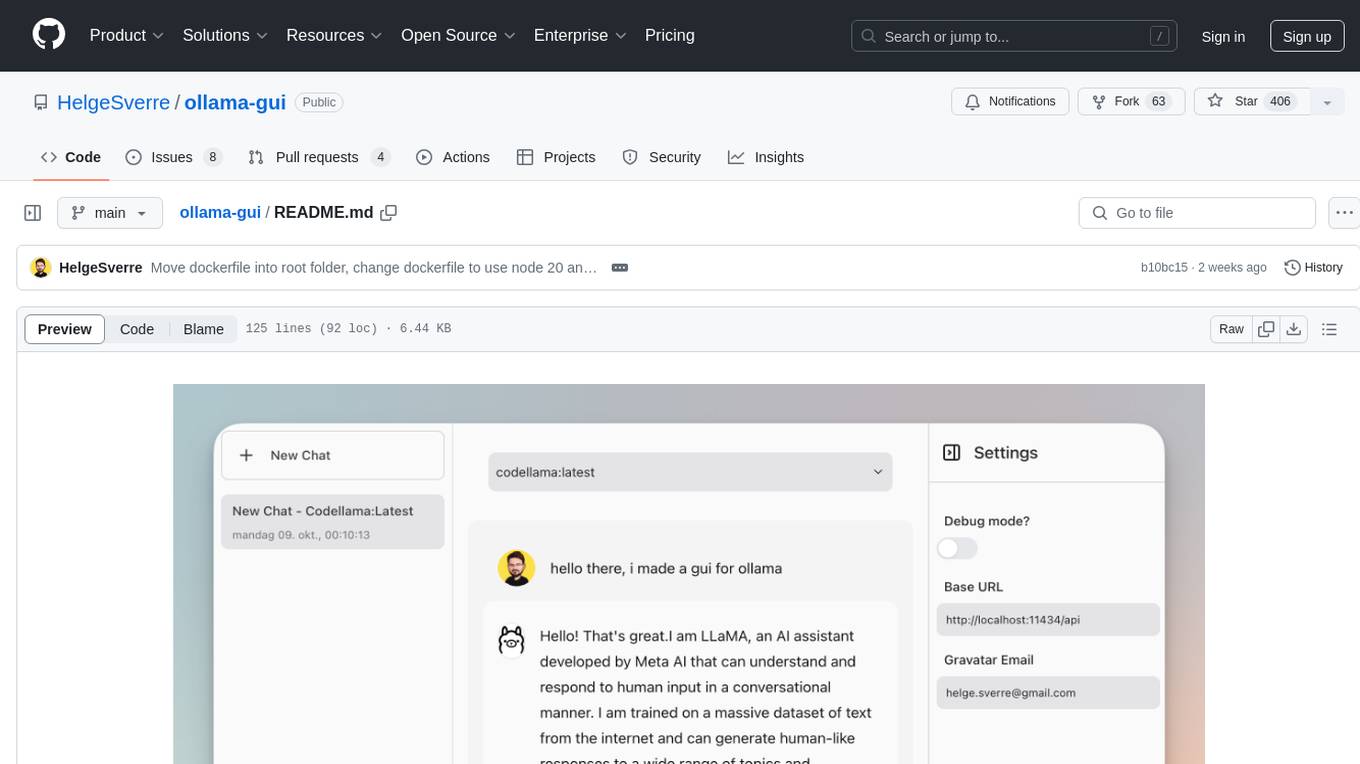

ollama-gui

Ollama GUI is a web interface for ollama.ai, a tool that enables running Large Language Models (LLMs) on your local machine. It provides a user-friendly platform for chatting with LLMs and accessing various models for text generation. Users can easily interact with different models, manage chat history, and explore available models through the web interface. The tool is built with Vue.js, Vite, and Tailwind CSS, offering a modern and responsive design for seamless user experience.

optillm

optillm is an OpenAI API compatible optimizing inference proxy implementing state-of-the-art techniques to enhance accuracy and performance of LLMs, focusing on reasoning over coding, logical, and mathematical queries. By leveraging additional compute at inference time, it surpasses frontier models across diverse tasks.

aiosmb

aiosmb is a fully asynchronous SMB library written in pure Python, supporting Python 3.7 and above. It offers various authentication methods such as Kerberos, NTLM, SSPI, and NEGOEX. The library supports connections over TCP and QUIC protocols, with proxy support for SOCKS4 and SOCKS5. Users can specify an SMB connection using a URL format, making it easier to authenticate and connect to SMB hosts. The project aims to implement DCERPC features, VSS mountpoint operations, and other enhancements in the future. It is inspired by Impacket and AzureADJoinedMachinePTC projects.

scrape-it-now

Scrape It Now is a versatile tool for scraping websites with features like decoupled architecture, CLI functionality, idempotent operations, and content storage options. The tool includes a scraper component for efficient scraping, ad blocking, link detection, markdown extraction, dynamic content loading, and anonymity features. It also offers an indexer component for creating AI search indexes, chunking content, embedding chunks, and enabling semantic search. The tool supports various configurations for Azure services and local storage, providing flexibility and scalability for web scraping and indexing tasks.

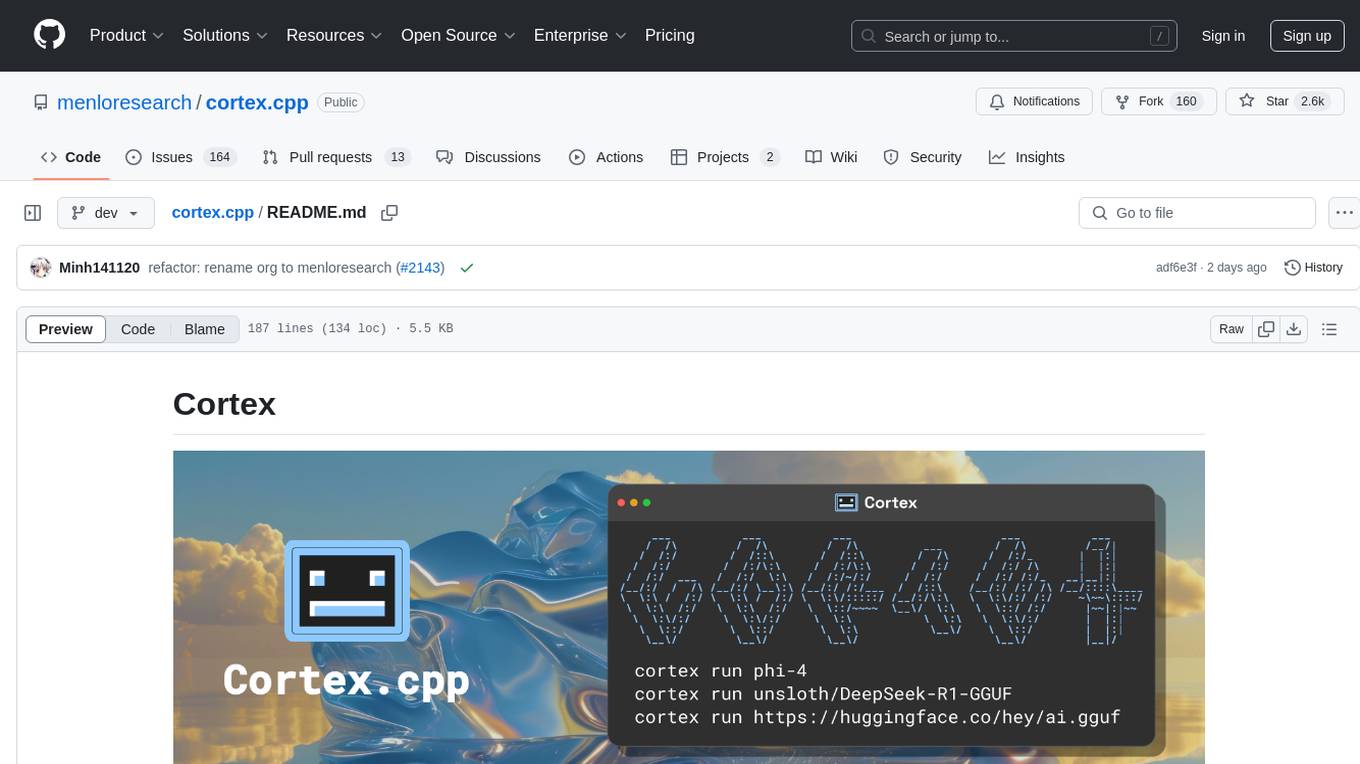

cortex.cpp

Cortex.cpp is an open-source platform designed as the brain for robots, offering functionalities such as vision, speech, language, tabular data processing, and action. It provides an AI platform for running AI models with multi-engine support, hardware optimization with automatic GPU detection, and an OpenAI-compatible API. Users can download models from the Hugging Face model hub, run models, manage resources, and access advanced features like multiple quantizations and engine management. The tool is under active development, promising rapid improvements for users.

gollama

Gollama is a delightful tool that brings Ollama, your offline conversational AI companion, directly into your terminal. It provides a fun and interactive way to generate responses from various models without needing internet connectivity. Whether you're brainstorming ideas, exploring creative writing, or just looking for inspiration, Gollama is here to assist you. The tool offers an interactive interface, customizable prompts, multiple models selection, and visual feedback to enhance user experience. It can be installed via different methods like downloading the latest release, using Go, running with Docker, or building from source. Users can interact with Gollama through various options like specifying a custom base URL, prompt, model, and enabling raw output mode. The tool supports different modes like interactive, piped, CLI with image, and TUI with image. Gollama relies on third-party packages like bubbletea, glamour, huh, and lipgloss. The roadmap includes implementing piped mode, support for extracting codeblocks, copying responses/codeblocks to clipboard, GitHub Actions for automated releases, and downloading models directly from Ollama using the rest API. Contributions are welcome, and the project is licensed under the MIT License.

wa_llm

WhatsApp Group Summary Bot is an AI-powered tool that joins WhatsApp groups, tracks conversations, and generates intelligent summaries. It features automated group chat responses, LLM-based conversation summaries, knowledge base integration, persistent message history with PostgreSQL, support for multiple message types, group management, and a REST API with Swagger docs. Prerequisites include Docker, Python 3.12+, PostgreSQL with pgvector extension, Voyage AI API key, and a WhatsApp account for the bot. The tool can be quickly set up by cloning the repository, configuring environment variables, starting services, and connecting devices. It offers API usage for loading new knowledge base topics and generating & dispatching summaries to managed groups. The project architecture includes FastAPI backend, WhatsApp Web API client, PostgreSQL database with vector storage, and AI-powered message processing.

vicinity

Vicinity is a lightweight, low-dependency vector store that provides a unified interface for nearest neighbor search with support for different backends and evaluation. It simplifies the process of comparing and evaluating different nearest neighbors packages by offering a simple and intuitive API. Users can easily experiment with various indexing methods and distance metrics to choose the best one for their use case. Vicinity also allows for measuring performance metrics like queries per second and recall.

For similar tasks

multi-agent-orchestrator

Multi-Agent Orchestrator is a flexible and powerful framework for managing multiple AI agents and handling complex conversations. It intelligently routes queries to the most suitable agent based on context and content, supports dual language implementation in Python and TypeScript, offers flexible agent responses, context management across agents, extensible architecture for customization, universal deployment options, and pre-built agents and classifiers. It is suitable for various applications, from simple chatbots to sophisticated AI systems, accommodating diverse requirements and scaling efficiently.

WindowsAgentArena

Windows Agent Arena (WAA) is a scalable Windows AI agent platform designed for testing and benchmarking multi-modal, desktop AI agents. It provides researchers and developers with a reproducible and realistic Windows OS environment for AI research, enabling testing of agentic AI workflows across various tasks. WAA supports deploying agents at scale using Azure ML cloud infrastructure, allowing parallel running of multiple agents and delivering quick benchmark results for hundreds of tasks in minutes.

Upsonic

Upsonic offers a cutting-edge enterprise-ready framework for orchestrating LLM calls, agents, and computer use to complete tasks cost-effectively. It provides reliable systems, scalability, and a task-oriented structure for real-world cases. Key features include production-ready scalability, task-centric design, MCP server support, tool-calling server, computer use integration, and easy addition of custom tools. The framework supports client-server architecture and allows seamless deployment on AWS, GCP, or locally using Docker.

AutoAgent

AutoAgent is a fully-automated and zero-code framework that enables users to create and deploy LLM agents through natural language alone. It is a top performer on the GAIA Benchmark, equipped with a native self-managing vector database, and allows for easy creation of tools, agents, and workflows without any coding. AutoAgent seamlessly integrates with a wide range of LLMs and supports both function-calling and ReAct interaction modes. It is designed to be dynamic, extensible, customized, and lightweight, serving as a personal AI assistant.

agent-starter-pack

The agent-starter-pack is a collection of production-ready Generative AI Agent templates built for Google Cloud. It accelerates development by providing a holistic, production-ready solution, addressing common challenges in building and deploying GenAI agents. The tool offers pre-built agent templates, evaluation tools, production-ready infrastructure, and customization options. It also provides CI/CD automation and data pipeline integration for RAG agents. The starter pack covers all aspects of agent development, from prototyping and evaluation to deployment and monitoring. It is designed to simplify project creation, template selection, and deployment for agent development on Google Cloud.

kagent

Kagent is a Kubernetes native framework for building AI agents, designed to be easy to understand and use. It provides a flexible and powerful way to build, deploy, and manage AI agents in Kubernetes. The framework consists of agents, tools, and model configurations defined as Kubernetes custom resources, making them easy to manage and modify. Kagent is extensible, flexible, observable, declarative, testable, and has core components like a controller, UI, engine, and CLI.

motia

Motia is an AI agent framework designed for software engineers to create, test, and deploy production-ready AI agents quickly. It provides a code-first approach, allowing developers to write agent logic in familiar languages and visualize execution in real-time. With Motia, developers can focus on business logic rather than infrastructure, offering zero infrastructure headaches, multi-language support, composable steps, built-in observability, instant APIs, and full control over AI logic. Ideal for building sophisticated agents and intelligent automations, Motia's event-driven architecture and modular steps enable the creation of GenAI-powered workflows, decision-making systems, and data processing pipelines.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.