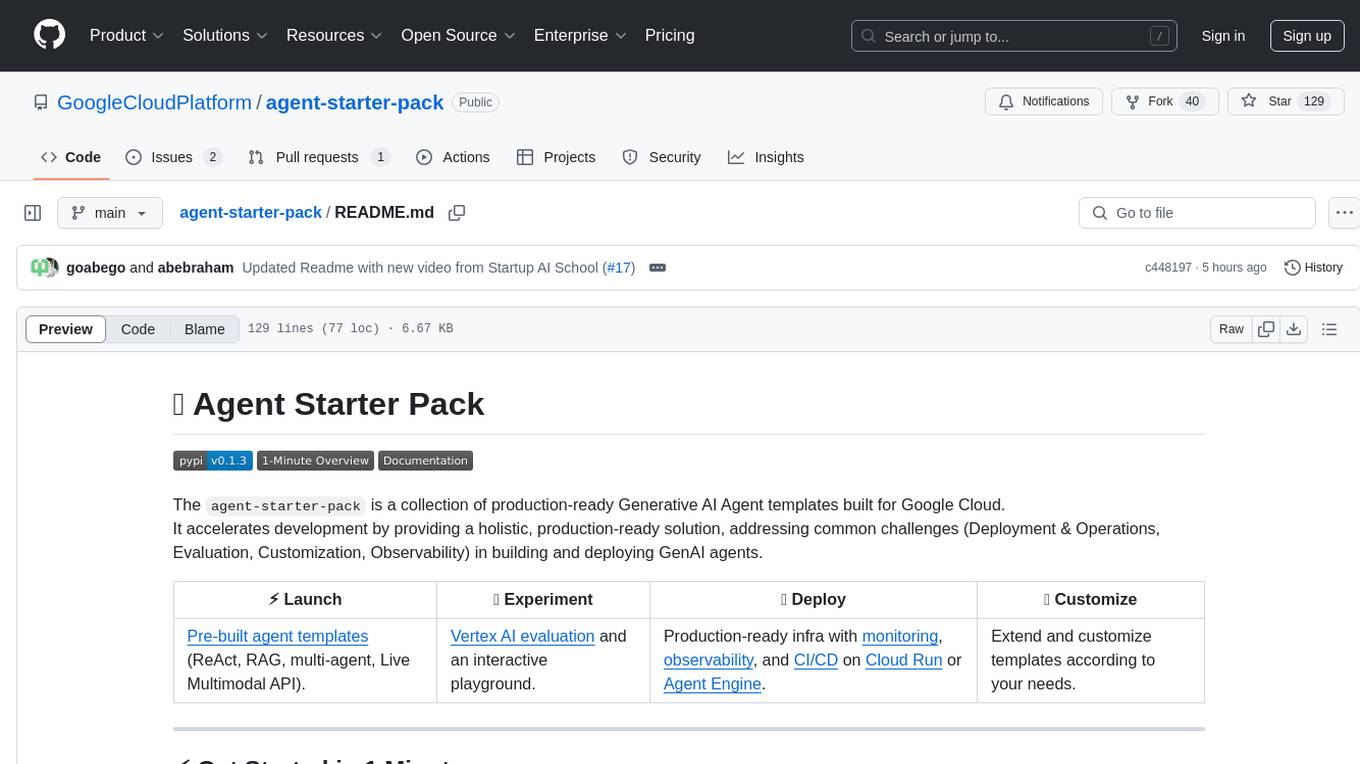

agent-starter-pack

Ship AI Agents to Google Cloud in minutes, not months. Production-ready templates with built-in CI/CD, evaluation, and observability.

Stars: 5683

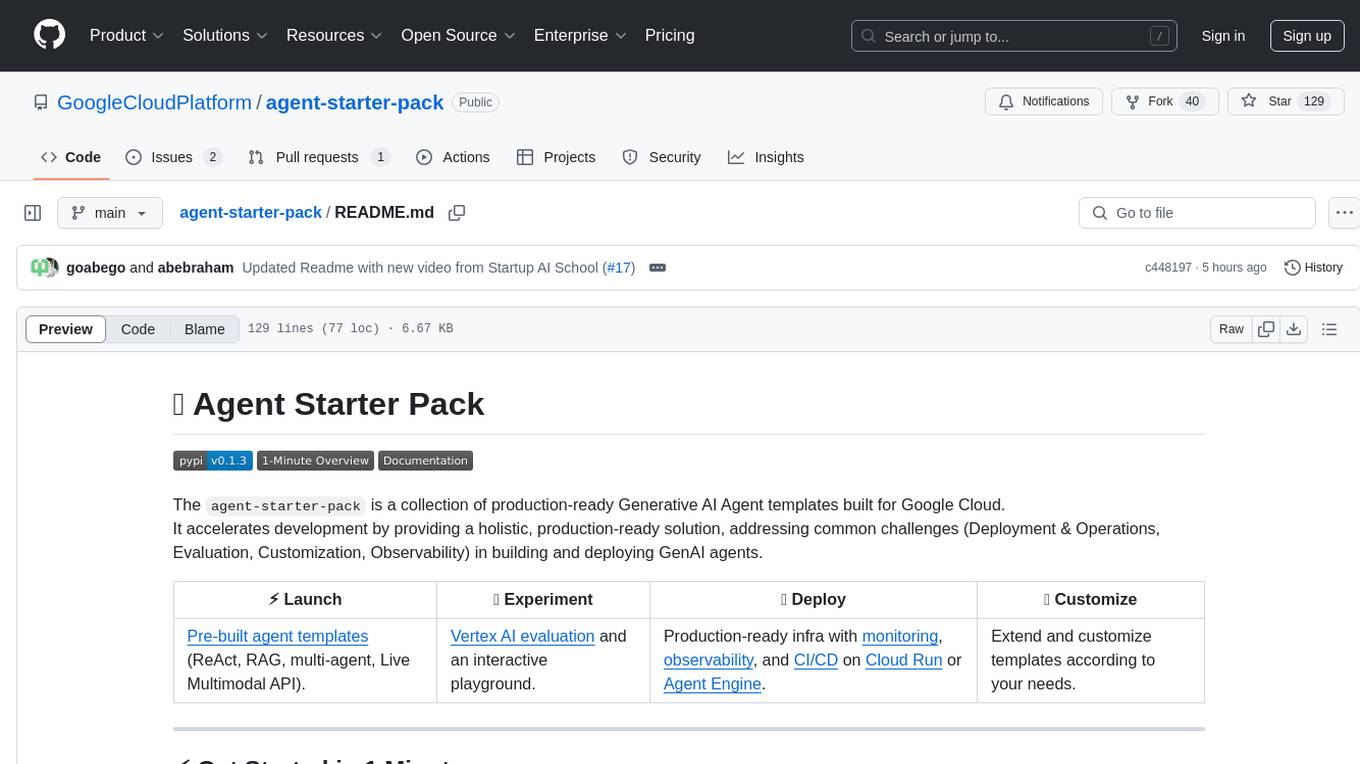

The agent-starter-pack is a collection of production-ready Generative AI Agent templates built for Google Cloud. It accelerates development by providing a holistic, production-ready solution, addressing common challenges in building and deploying GenAI agents. The tool offers pre-built agent templates, evaluation tools, production-ready infrastructure, and customization options. It also provides CI/CD automation and data pipeline integration for RAG agents. The starter pack covers all aspects of agent development, from prototyping and evaluation to deployment and monitoring. It is designed to simplify project creation, template selection, and deployment for agent development on Google Cloud.

README:

A Python package that provides production-ready templates for GenAI agents on Google Cloud.

Focus on your agent logic—the starter pack provides everything else: infrastructure, CI/CD, observability, and security.

| ⚡️ Launch | 🧪 Experiment | ✅ Deploy | 🛠️ Customize |
|---|---|---|---|
| Pre-built agent templates (ReAct, RAG, multi-agent, Live API). | Vertex AI evaluation and an interactive playground. | Production-ready infra with monitoring, observability, and CI/CD on Cloud Run or Agent Engine. | Extend and customize templates according to your needs. 🆕 Now integrating with Gemini CLI |

From zero to production-ready agent in 60 seconds using uv:

    uvx agent-starter-pack create

✨ Alternative: Using pip

If you don't have uv installed, you can use pip:

    # Create and activate a Python virtual environment
    python -m venv .venv && source .venv/bin/activate

    # Install the agent starter pack
    pip install --upgrade agent-starter-pack

    # Create a new agent project
    agent-starter-pack create

That's it! You now have a fully functional agent project, complete with backend, frontend, and deployment infrastructure, ready for you to explore and customize.

Already have an agent? Add production-ready deployment and infrastructure by running this command in your project's root folder:

    uvx agent-starter-pack enhance

See the Installation Guide for more options, or try it with zero setup in Firebase Studio or Cloud Shell.
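The choice described above, `uvx` when uv is installed and a pip-installed CLI otherwise, can be sketched as a small Python helper. This is an illustrative sketch rather than part of the starter pack: `build_command` is a hypothetical name, and the helper only assembles the command line using the `create` and `enhance` subcommands shown above. It does not run anything.

```python
import shutil
from typing import Callable, Optional


def build_command(
    subcommand: str = "create",
    which: Callable[[str], Optional[str]] = shutil.which,
) -> list[str]:
    """Return the argv for an agent-starter-pack subcommand.

    Hypothetical helper: prefers `uvx` when it is on PATH; otherwise it
    assumes the package was installed with pip and that the
    `agent-starter-pack` executable is available.
    """
    # Only the two subcommands mentioned in this README are accepted.
    if subcommand not in {"create", "enhance"}:
        raise ValueError(f"unsupported subcommand: {subcommand}")
    if which("uvx"):
        return ["uvx", "agent-starter-pack", subcommand]
    return ["agent-starter-pack", subcommand]


if __name__ == "__main__":
    # Print the command instead of executing it.
    print(" ".join(build_command("create")))
```

The `which` parameter exists only so the lookup can be swapped out in tests; in normal use the default `shutil.which` inspects your `PATH`.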

| Agent Name | Description |
|---|---|
| `adk` | A base ReAct agent implemented using Google's Agent Development Kit |
| `adk_a2a` | An ADK agent with Agent2Agent (A2A) Protocol support for distributed agent communication and interoperability |
| `agentic_rag` | A RAG agent for document retrieval and Q&A, supporting Vertex AI Search and Vector Search |
| `langgraph` | A base ReAct agent implemented using LangChain's LangGraph |
| `adk_live` | A real-time multimodal RAG agent powered by Gemini, supporting audio/video/text chat |

More agents are on the way! We are continuously expanding our agent library. Have a specific agent type in mind? Raise an issue as a feature request!

🔍 ADK Samples

Looking to explore more ADK examples? Check out the ADK Samples Repository for additional examples and use cases demonstrating ADK's capabilities.

Explore amazing projects built with the Agent Starter Pack!

The agent-starter-pack offers key features to accelerate and simplify the development of your agent:

- 🔄 CI/CD Automation - A single command to set up a complete CI/CD pipeline for all environments, supporting both Google Cloud Build and GitHub Actions.

- 📥 Data Pipeline for RAG with Terraform/CI-CD - Seamlessly integrate a data pipeline to process embeddings for RAG into your agent system, with support for Vertex AI Search and Vector Search.

- Remote Templates - Create and share your own agent starter pack templates from any Git repository.

- 🤖 Gemini CLI Integration - Use the Gemini CLI and the included `GEMINI.md` context file to ask questions about your template, agent architecture, and the path to production. Get instant guidance and code examples directly in your terminal.

This starter pack covers all aspects of Agent development, from prototyping and evaluation to deployment and monitoring.

- Python 3.10+

- Google Cloud SDK

- Terraform (for deployment)

- Make (for development tasks)
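A quick way to confirm the prerequisites listed above is a short Python check. This is a minimal sketch, not something shipped with the starter pack: `missing_prerequisites` is a hypothetical name, and the executable names (`gcloud`, `terraform`, `make`) are assumptions about how each tool appears on your `PATH`.

```python
import shutil
import sys
from typing import Callable, Optional

# Assumed executable names for each prerequisite; adjust for your platform.
REQUIRED_TOOLS = {
    "Google Cloud SDK": "gcloud",
    "Terraform": "terraform",
    "Make": "make",
}
MIN_PYTHON = (3, 10)


def missing_prerequisites(
    python_version: tuple = tuple(sys.version_info[:2]),
    which: Callable[[str], Optional[str]] = shutil.which,
) -> list[str]:
    """Return labels of prerequisites that were not found."""
    missing = []
    # Compare (major, minor) against the documented minimum.
    if tuple(python_version) < MIN_PYTHON:
        missing.append("Python 3.10+")
    # Look up each tool's executable on PATH.
    for label, executable in REQUIRED_TOOLS.items():
        if which(executable) is None:
            missing.append(label)
    return missing


if __name__ == "__main__":
    problems = missing_prerequisites()
    print("All prerequisites found." if not problems else f"Missing: {problems}")
```

An empty list means everything was found. Terraform is only needed for deployment and Make only for development tasks, so a missing entry there may be acceptable depending on what you plan to do.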

Visit our documentation site for comprehensive guides and references!

🔍 New to the codebase? Explore the CodeWiki for AI-powered code understanding and navigation.

- Getting Started Guide - First steps with agent-starter-pack

- Installation Guide - Setting up your environment

- Deployment Guide - Taking your agent to production

- Agent Templates Overview - Explore available agent patterns

- CLI Reference - Command-line tool documentation

- From Demo to Production with Agent Starter Pack: Learn how the Agent Starter Pack acts as an Automated Architect, building the professional infrastructure for your AI project in seconds. Covers why most AI projects fail at deployment and how ASP automates Terraform, CI/CD, and observability.

- 6-minute introduction (April 2024): Explaining the Agent Starter Pack and demonstrating its key features. Part of the Kaggle GenAI intensive course.

Looking for more examples and resources for Generative AI on Google Cloud? Check out the GoogleCloudPlatform/generative-ai repository for notebooks, code samples, and more!

Contributions are welcome! See the Contributing Guide.

We value your input! Your feedback helps us improve this starter pack and make it more useful for the community.

If you encounter any issues or have specific suggestions, please first consider raising an issue on our GitHub repository.

For other types of feedback, or if you'd like to share a positive experience or success story using this starter pack, we'd love to hear from you! You can reach out to us at [email protected].

Thank you for your contributions!

This repository is for demonstrative purposes only and is not an officially supported Google product.

The agent-starter-pack templating CLI and the templates in this starter pack leverage Google Cloud APIs. When you use this starter pack, you'll be deploying resources in your own Google Cloud project and will be responsible for those resources. Please review the Google Cloud Service Terms for details on the terms of service associated with these APIs.


Alternative AI tools for agent-starter-pack

Similar Open Source Tools


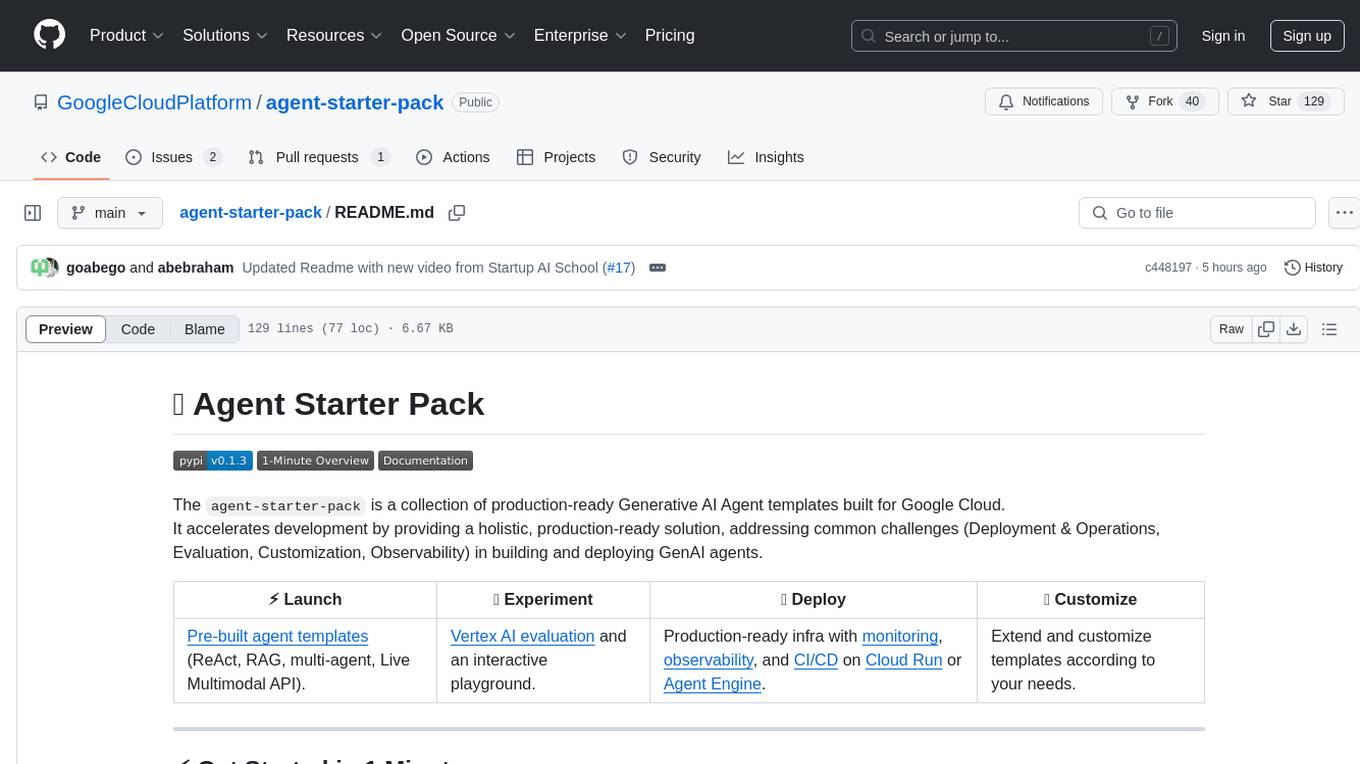

adk-python

Agent Development Kit (ADK) is an open-source, code-first Python toolkit for building, evaluating, and deploying sophisticated AI agents with flexibility and control. It is a flexible and modular framework optimized for Gemini and the Google ecosystem, but also compatible with other frameworks. ADK aims to make agent development feel more like software development, enabling developers to create, deploy, and orchestrate agentic architectures ranging from simple tasks to complex workflows.

nexent

Nexent is a powerful tool for analyzing and visualizing network traffic data. It provides comprehensive insights into network behavior, helping users to identify patterns, anomalies, and potential security threats. With its user-friendly interface and advanced features, Nexent is suitable for network administrators, cybersecurity professionals, and anyone looking to gain a deeper understanding of their network infrastructure.

langwatch

LangWatch is a monitoring and analytics platform designed to track, visualize, and analyze interactions with Large Language Models (LLMs). It offers real-time telemetry to optimize LLM cost and latency, a user-friendly interface for deep insights into LLM behavior, user analytics for engagement metrics, detailed debugging capabilities, and guardrails to monitor LLM outputs for issues like PII leaks and toxic language. The platform supports OpenAI and LangChain integrations, simplifying the process of tracing LLM calls and generating API keys for usage. LangWatch also provides documentation for easy integration and self-hosting options for interested users.

synthora

Synthora is a lightweight and extensible framework for LLM-driven Agents and ALM research. It aims to simplify the process of building, testing, and evaluating agents by providing essential components. The framework allows for easy agent assembly with a single config, reducing the effort required for tuning and sharing agents. Although in early development stages with unstable APIs, Synthora welcomes feedback and contributions to enhance its stability and functionality.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include:

1. Workflow: Build and test powerful AI workflows on a visual canvas.
2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions.
3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features.
4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval.
5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools.
6. LLMOps: Monitor and analyze application logs and performance over time.
7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

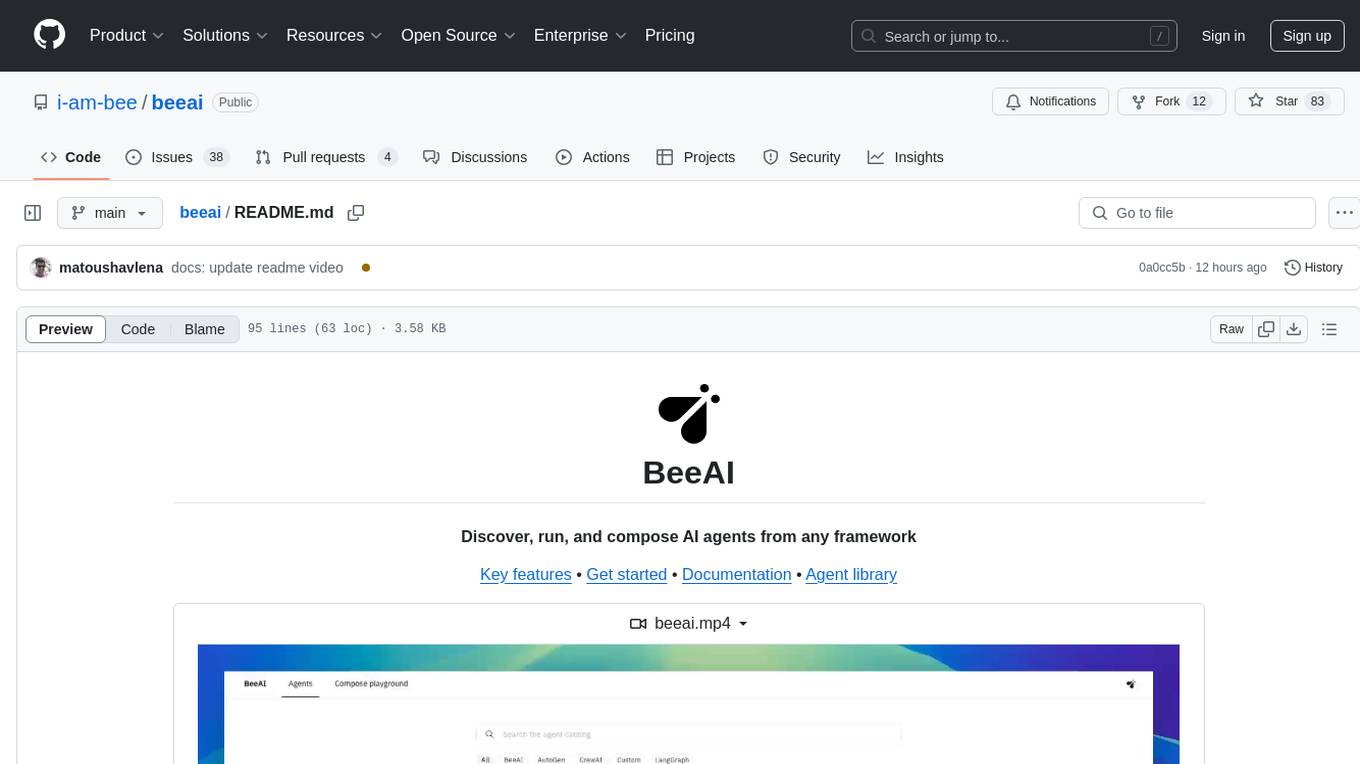

beeai

BeeAI is an open platform that helps users discover, run, and compose AI agents from any framework and language. It offers a framework-agnostic approach, allowing seamless integration of AI agents regardless of the language or platform. Users can build complex workflows using simple building blocks, explore a catalog of powerful agents with integrated search, and benefit from the BeeAI ecosystem with first-class support for Python and TypeScript agent developers.

ai-platform-engineering

The AI Platform Engineering repository provides a collection of tools and resources for building and deploying AI models. It includes libraries for data preprocessing, model training, and model serving. The repository also contains example code and tutorials to help users get started with AI development. Whether you are a beginner or an experienced AI engineer, this repository offers valuable insights and best practices to streamline your AI projects.

coze-studio

Coze Studio is an all-in-one AI agent development tool that offers the most convenient AI agent development environment, from development to deployment. It provides core technologies for AI agent development, complete app templates, and build frameworks. Coze Studio aims to simplify creating, debugging, and deploying AI agents through visual design and build tools, enabling powerful AI app development and customized business logic. The tool is developed using Golang for the backend, React + TypeScript for the frontend, and follows microservices architecture based on domain-driven design principles.

beeai-platform

BeeAI is an open-source platform that simplifies the discovery, running, and sharing of AI agents across different frameworks. It addresses challenges such as framework fragmentation, deployment complexity, and discovery issues by providing a standardized platform for individuals and teams to access agents easily. With features like a centralized agent catalog, framework-agnostic interfaces, containerized agents, and consistent user experiences, BeeAI aims to streamline the process of working with AI agents for both developers and teams.

enterprise-commerce

Enterprise Commerce is a Next.js commerce starter that helps you launch your high-performance Shopify storefront in minutes, not weeks. It leverages the power of Vector Search and AI to deliver a superior online shopping experience without the development headaches.

AgentUp

AgentUp is an active development tool that provides a developer-first agent framework for creating AI agents with enterprise-grade infrastructure. It allows developers to define agents with configuration, ensuring consistent behavior across environments. The tool offers secure design, configuration-driven architecture, extensible ecosystem for customizations, agent-to-agent discovery, asynchronous task architecture, deterministic routing, and MCP support. It supports multiple agent types like reactive agents and iterative agents, making it suitable for chatbots, interactive applications, research tasks, and more. AgentUp is built by experienced engineers from top tech companies and is designed to make AI agents production-ready, secure, and reliable.

refly

Refly.AI is an open-source AI-native creation engine that empowers users to transform ideas into production-ready content. It features a free-form canvas interface with multi-threaded conversations, knowledge base integration, contextual memory, intelligent search, WYSIWYG AI editor, and more. Users can leverage AI-powered capabilities, context memory, knowledge base integration, quotes, and AI document editing to enhance their content creation process. Refly offers both cloud and self-hosting options, making it suitable for individuals, enterprises, and organizations. The tool is designed to facilitate human-AI collaboration and streamline content creation workflows.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

appwrite

Appwrite is a best-in-class, developer-first platform that provides everything needed to create scalable, stable, and production-ready software quickly. It is an end-to-end platform for building Web, Mobile, Native, or Backend apps, packaged as Docker microservices. Appwrite abstracts the complexity of building modern apps and allows users to build secure, full-stack applications faster. It offers features like user authentication, database management, storage, file management, image manipulation, Cloud Functions, messaging, and more services.

For similar tasks

Agently

Agently is a development framework that helps developers build AI-agent-native applications quickly. You can create an AI agent instance and then interact with it like calling a function, in very little code:

    # Import and init settings
    import Agently
    agent = Agently.create_agent()
    agent\
        .set_settings("current_model", "OpenAI")\
        .set_settings("model.OpenAI.auth", {"api_key": ""})

    # Interact with the agent instance like calling a function
    result = agent\
        .input("Give me 3 words")\
        .output([("String", "one word")])\
        .start()
    print(result)

    ['apple', 'banana', 'carrot']

Note that the printed value of `result` is a `list`, matching the format passed to `.output()`. The Agently framework does a lot of work like this to make it easier for application developers to integrate agent instances into their business code, so they can focus on building business logic instead of figuring out how to cater to language models.

alan-sdk-web

Alan AI is a comprehensive AI solution that acts as a 'unified brain' for enterprises, interconnecting applications, APIs, and data sources to streamline workflows. It offers tools like Alan AI Studio for designing dialog scenarios, lightweight SDKs for embedding AI Agents, and a backend powered by advanced AI technologies. With Alan AI, users can create conversational experiences with minimal UI changes, benefit from a serverless environment, receive on-the-fly updates, and access dialog testing and analytics tools. The platform supports various frameworks like JavaScript, React, Angular, Vue, Ember, and Electron, and provides example web apps for different platforms. Users can also explore Alan AI SDKs for iOS, Android, Flutter, Ionic, Apache Cordova, and React Native.

archgw

Arch is an intelligent Layer 7 gateway designed to protect, observe, and personalize AI agents with APIs. It handles tasks related to prompts, including detecting jailbreak attempts, calling backend APIs, routing between LLMs, and managing observability. Built on Envoy Proxy, it offers features like function calling, prompt guardrails, traffic management, and observability. Users can build fast, observable, and personalized AI agents using Arch to improve speed, security, and personalization of GenAI apps.

llm-dev

The 'llm-dev' repository contains source code and resources for the book 'Practical Projects of Large Models: Multi-Domain Intelligent Application Development'. It covers topics such as language model basics, application architecture, working modes, environment setup, model installation, fine-tuning, quantization, multi-modal model applications, chat applications, programming large model applications, VS Code plugin development, enhanced generation applications, translation applications, intelligent agent applications, speech model applications, digital human applications, model training applications, and AI town applications.


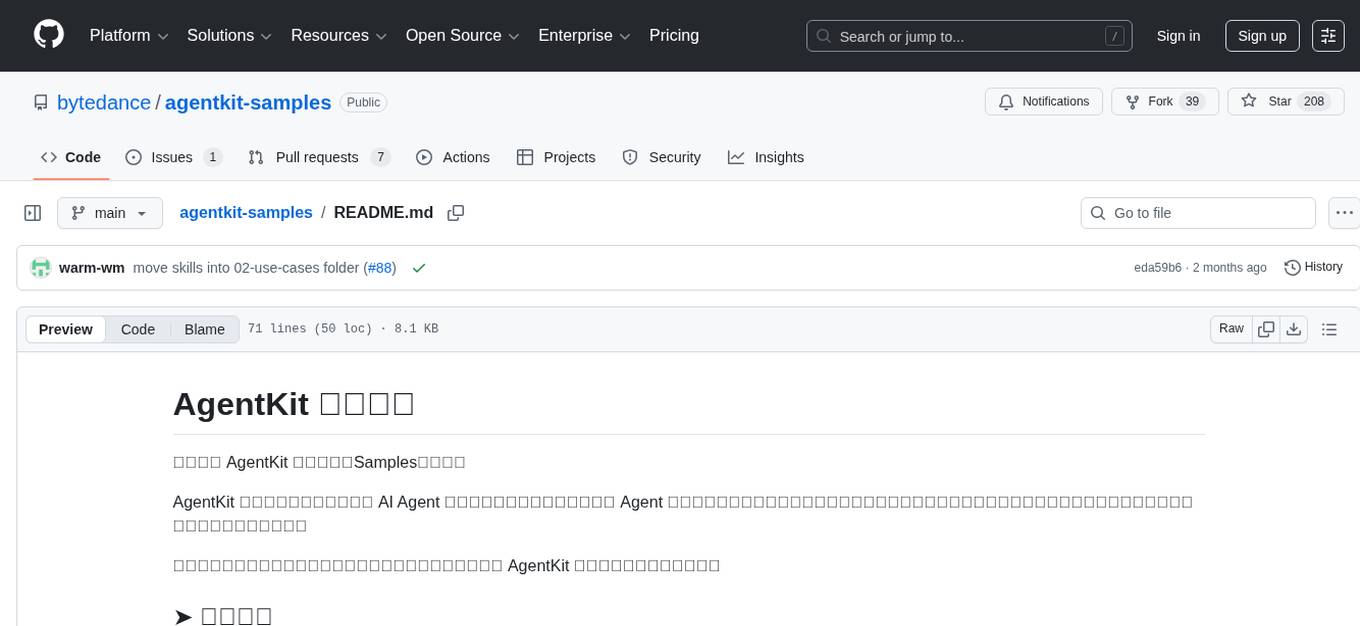

agentkit-samples

AgentKit Samples is a repository containing a series of examples and tutorials to help users understand, implement, and integrate various functionalities of AgentKit into their applications. The platform offers a complete solution for building, deploying, and maintaining AI agents, significantly reducing the complexity of developing intelligent applications. The repository provides different levels of examples and tutorials, including basic tutorials for understanding AgentKit's concepts and use cases, as well as more complex examples for experienced developers.

multi-agent-orchestrator

Multi-Agent Orchestrator is a flexible and powerful framework for managing multiple AI agents and handling complex conversations. It intelligently routes queries to the most suitable agent based on context and content, supports dual language implementation in Python and TypeScript, offers flexible agent responses, context management across agents, extensible architecture for customization, universal deployment options, and pre-built agents and classifiers. It is suitable for various applications, from simple chatbots to sophisticated AI systems, accommodating diverse requirements and scaling efficiently.

WindowsAgentArena

Windows Agent Arena (WAA) is a scalable Windows AI agent platform designed for testing and benchmarking multi-modal, desktop AI agents. It provides researchers and developers with a reproducible and realistic Windows OS environment for AI research, enabling testing of agentic AI workflows across various tasks. WAA supports deploying agents at scale using Azure ML cloud infrastructure, allowing parallel running of multiple agents and delivering quick benchmark results for hundreds of tasks in minutes.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.