archgw

The smart edge and AI gateway for agents. Arch is a high-performance proxy server that handles the low-level work in building agents, such as applying guardrails, routing prompts to the right agent, and unifying access to LLMs. Natively designed to process prompts, it's framework-agnostic and helps you build agents faster.

Stars: 3637

Arch is an intelligent Layer 7 gateway designed to protect, observe, and personalize AI agents with APIs. It handles tasks related to prompts, including detecting jailbreak attempts, calling backend APIs, routing between LLMs, and managing observability. Built on Envoy Proxy, it offers features like function calling, prompt guardrails, traffic management, and observability. Users can build fast, observable, and personalized AI agents using Arch to improve speed, security, and personalization of GenAI apps.

README:

Arch is a smart proxy server designed as a modular edge and AI gateway for agents.

Arch handles the pesky low-level work in building agentic apps — like applying guardrails, clarifying vague user input, routing prompts to the right agent, and unifying access to any LLM. It’s a language and framework friendly infrastructure layer designed to help you build and ship agentic apps faster.


AI demos are easy to hack. But once you move past a prototype, you’re stuck building and maintaining low-level plumbing code that slows down real innovation. For example:

- Routing & orchestration. Put routing in code and you’ve got two choices: maintain it yourself or live with a framework’s baked-in logic. Either way, keeping routing consistent means pushing code changes across all your agents, slowing iteration and turning every policy tweak into a refactor instead of a config flip.

- Model integration churn. Frameworks wire LLM integrations directly into code abstractions, making it hard to add or swap models without touching application code. You end up doing a codebase-wide search and replace every time you want to experiment with a new model or version.

- Observability & governance. Logging, tracing, and guardrails are baked in as tightly coupled features, so bringing in best-of-breed solutions is painful and often requires digging through the guts of a framework.

- Prompt engineering overhead. Input validation, clarifying vague user input, and coercing outputs into the right schema all pile up, turning what should be design work into low-level plumbing work.

- Brittle upgrades. Every change (new model, new guardrail, new trace format) means patching and redeploying application servers. Contrast that with bouncing a central proxy—one upgrade, instantly consistent everywhere.

With Arch, you can move faster by focusing on higher-level objectives in a language and framework agnostic way. Arch was built by the contributors of Envoy Proxy with the belief that:

Prompts are nuanced and opaque user requests, which require the same capabilities as traditional HTTP requests, including secure handling, intelligent routing, robust observability, and integration with backend (API) systems to improve speed and accuracy for common agentic scenarios, all outside core application logic.

Core Features:

- 🚦 Route to Agents: Engineered with purpose-built LLMs for fast (<100ms) agent routing and hand-off.

- 🔗 Route to LLMs: Unify access to LLMs with support for dynamic routing. Model aliases coming soon.

- ⛨ Guardrails: Centrally configure guardrails to prevent harmful outcomes and ensure safe user interactions.

- ⚡ Tool Use: For common agentic scenarios, let Arch instantly clarify and convert prompts to tool/API calls.

- 🕵 Observability: W3C-compatible request tracing and LLM metrics that plug in instantly with popular tools.

- 🧱 Built on Envoy: Arch runs alongside app servers as a containerized process, and builds on top of Envoy's proven HTTP management and scalability features to handle ingress and egress traffic related to prompts and LLMs.

Jump to our docs to learn how you can use Arch to improve the speed, security and personalization of your GenAI apps.

[!IMPORTANT] Today, the function-calling LLM (Arch-Function) designed for agentic and RAG scenarios is hosted free of charge in the US-central region. To offer consistent latency and throughput, and to manage our expenses, we will soon enable access to the hosted version via developer keys and give you the option to run that LLM locally. For more details, see issue #258.

To get in touch with us, please join our discord server. We will be monitoring that actively and offering support there.

- Sample App: Weather Forecast Agent - A sample agentic weather forecasting app that highlights core function calling capabilities of Arch.

- Sample App: Network Operator Agent - A simple network device switch operator agent that can retrieve device statistics and reboot devices.

- Use Case: Connecting to SaaS APIs - Connect 3rd party SaaS APIs to your agentic chat experience.

Follow this quickstart guide to use Arch as a router for local or hosted LLMs, including dynamic routing. Later in this section we will see how you can use Arch to build highly capable agentic applications and to provide end-to-end observability.

Before you begin, ensure you have the following:

- Docker System (v24)

- Docker compose (v2.29)

- Python (v3.13)
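If you want to check these prerequisites from a script, here is a minimal, stdlib-only Python sketch. It assumes that `docker --version` and `docker compose version` print strings like `Docker version 24.0.7, build ...` and `Docker Compose version v2.29.1`; the helper names are hypothetical, and the minimum versions simply mirror the list above.

```python
import re
import shutil
import subprocess
import sys

# Minimum versions taken from the prerequisites list above.
MINIMUMS = {"docker": (24,), "docker compose": (2, 29), "python": (3, 13)}


def parse_version(text):
    """Extract the first dotted version number (e.g. 24.0.7) from a string as a tuple of ints."""
    match = re.search(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups() if part is not None)


def meets_minimum(found, minimum):
    """Tuple comparison: (2, 29, 1) >= (2, 29) is True, (23, 0, 6) >= (24,) is False."""
    return found is not None and found >= minimum


def command_version(args):
    """Run a version command and parse its output; return None if the tool is missing or fails."""
    if shutil.which(args[0]) is None:
        return None
    result = subprocess.run(args, capture_output=True, text=True, check=False)
    return parse_version(result.stdout) if result.returncode == 0 else None


def check_prerequisites():
    """Return {tool: True/False} telling whether each prerequisite meets its minimum version."""
    found = {
        "docker": command_version(["docker", "--version"]),
        "docker compose": command_version(["docker", "compose", "version"]),
        "python": tuple(sys.version_info[:3]),
    }
    return {name: meets_minimum(found[name], MINIMUMS[name]) for name in MINIMUMS}


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'ok' if ok else 'missing or too old'}")
```

Parsing the version text rather than trusting exit codes alone keeps the check useful when an older Docker is installed but still on the PATH.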

Arch's CLI allows you to manage and interact with the Arch gateway efficiently. To install the CLI, simply run the following command:

[!TIP] We recommend that developers create a new Python virtual environment to isolate dependencies before installing Arch. This ensures that archgw and its dependencies do not interfere with other packages on your system.

```console
$ python3 -m venv venv
$ source venv/bin/activate   # On Windows, use: venv\Scripts\activate
$ pip install archgw==0.3.10
```

Arch supports two primary routing strategies for LLMs: model-based routing and preference-based routing.

Model-based routing lets you configure static model names for routing. This is useful when you always want to use a specific model for certain tasks, or want to swap between models manually. Below is an example configuration for model-based routing; follow our usage guide to get it working.

```yaml
version: v0.1.0

listeners:
  egress_traffic:
    address: 0.0.0.0
    port: 12000
    message_format: openai
    timeout: 30s

llm_providers:
  - access_key: $OPENAI_API_KEY
    model: openai/gpt-4o
    default: true

  - access_key: $MISTRAL_API_KEY
    model: mistral/mistral-3b-latest
```

Preference-based routing is designed for more dynamic and intelligent selection of models. Instead of static model names, you write plain-language routing policies that describe the type of task or preference, for example:

```yaml
version: v0.1.0

listeners:
  egress_traffic:
    address: 0.0.0.0
    port: 12000
    message_format: openai
    timeout: 30s

llm_providers:
  - model: openai/gpt-4.1
    access_key: $OPENAI_API_KEY
    default: true
    routing_preferences:
      - name: code generation
        description: generating new code snippets, functions, or boilerplate based on user prompts or requirements

  - model: openai/gpt-4o-mini
    access_key: $OPENAI_API_KEY
    routing_preferences:
      - name: code understanding
        description: understand and explain existing code snippets, functions, or libraries
```

Arch uses a lightweight 1.5B-parameter autoregressive model to map prompts (and conversation context) to these policies. This approach adapts to intent drift, supports multi-turn conversations, and avoids the brittleness of embedding-based classifiers or manual if/else chains. No retraining is required when adding new models or updating policies; routing is governed entirely by human-readable rules. You can learn more about the design, benchmarks, and methodology behind preference-based routing in our paper.
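To make the relationship between routing preferences, models, and the `default: true` provider concrete, here is a toy Python sketch. It is not how Arch routes: Arch uses its 1.5B router model, whereas this sketch swaps in a naive word-overlap score purely to show the data flow from a matched policy name to a model, with a fallback to the default provider when nothing matches. The dict layout, function names, and the `min_overlap` threshold are all hypothetical.

```python
import re

# Hypothetical Python mirror of the llm_providers section in the preference-based YAML.
LLM_PROVIDERS = [
    {
        "model": "openai/gpt-4.1",
        "default": True,
        "routing_preferences": [
            {"name": "code generation",
             "description": "generating new code snippets, functions, or boilerplate "
                            "based on user prompts or requirements"},
        ],
    },
    {
        "model": "openai/gpt-4o-mini",
        "routing_preferences": [
            {"name": "code understanding",
             "description": "understand and explain existing code snippets, functions, or libraries"},
        ],
    },
]


def build_policy_table(providers):
    """Map each routing preference name to (description, model) and find the default model."""
    policies, default_model = {}, None
    for provider in providers:
        if provider.get("default"):
            default_model = provider["model"]
        for pref in provider.get("routing_preferences", []):
            policies[pref["name"]] = (pref["description"], provider["model"])
    return policies, default_model


def words(text):
    """Lowercased alphabetic tokens of a string, as a set."""
    return set(re.findall(r"[a-z]+", text.lower()))


def toy_match(prompt, policies, min_overlap=2):
    """Toy stand-in for Arch's router model: pick the policy with the largest word overlap."""
    best_name, best_score = None, 0
    for name, (description, _model) in policies.items():
        score = len(words(prompt) & words(description))
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= min_overlap else None


def select_model(prompt, providers):
    """Return the model for the matched policy, or the default provider if nothing matches."""
    policies, default_model = build_policy_table(providers)
    name = toy_match(prompt, policies)
    return policies[name][1] if name is not None else default_model


if __name__ == "__main__":
    print(select_model("please explain this existing code snippet", LLM_PROVIDERS))  # openai/gpt-4o-mini
    print(select_model("write new boilerplate functions for a parser", LLM_PROVIDERS))  # openai/gpt-4.1
    print(select_model("what is the weather today", LLM_PROVIDERS))  # openai/gpt-4.1 (default)
```

The point of the sketch is the shape of the lookup: policies are keyed by their plain-language names, so adding a model or a policy means editing configuration, not the selection code.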

In the following quickstart we will show you how easy it is to build an AI agent with Arch gateway. We will build a currency exchange agent in a few simple steps. For this demo we will use https://api.frankfurter.dev/ to fetch the latest exchange rates, with USD as the base currency.

Create an arch_config.yaml file with the following content:

```yaml
version: v0.1.0

listeners:
  ingress_traffic:
    address: 0.0.0.0
    port: 10000
    message_format: openai
    timeout: 30s

llm_providers:
  - access_key: $OPENAI_API_KEY
    model: openai/gpt-4o

system_prompt: |
  You are a helpful assistant.

prompt_guards:
  input_guards:
    jailbreak:
      on_exception:
        message: Looks like you're curious about my abilities, but I can only provide assistance for currency exchange.

prompt_targets:
  - name: currency_exchange
    description: Get currency exchange rate from USD to other currencies
    parameters:
      - name: currency_symbol
        description: the currency that needs conversion
        required: true
        type: str
        in_path: true
    endpoint:
      name: frankfurther_api
      path: /v1/latest?base=USD&symbols={currency_symbol}
    system_prompt: |
      You are a helpful assistant. Show me the currency symbol you want to convert from USD.

  - name: get_supported_currencies
    description: Get list of supported currencies for conversion
    endpoint:
      name: frankfurther_api
      path: /v1/currencies

endpoints:
  frankfurther_api:
    endpoint: api.frankfurter.dev:443
    protocol: https
```

Start the gateway with this configuration:

```console
$ archgw up arch_config.yaml
2024-12-05 16:56:27,979 - cli.main - INFO - Starting archgw cli version: 0.3.10
2024-12-05 16:56:28,485 - cli.utils - INFO - Schema validation successful!
2024-12-05 16:56:28,485 - cli.main - INFO - Starting arch model server and arch gateway
2024-12-05 16:56:51,647 - cli.core - INFO - Container is healthy!
```

Once the gateway is up, you can interact with it on port 10000 using the OpenAI chat completions API.
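Because the ingress listener speaks the OpenAI chat completions format (`message_format: openai`), any HTTP client can talk to it. Below is a minimal sketch using only the Python standard library; it uses the same URL, JSON body shape, and `"model": "none"` value as the curl examples in this quickstart, and reads the reply from `choices[0].message.content`, the same field those examples extract with jq. The helper names `build_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Ingress listener from arch_config.yaml (port 10000), OpenAI-style chat completions path.
GATEWAY_URL = "http://localhost:10000/v1/chat/completions"


def build_request(question, url=GATEWAY_URL):
    """Build a POST request with the same JSON body shape as the curl examples."""
    body = {"messages": [{"role": "user", "content": question}], "model": "none"}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question, url=GATEWAY_URL, timeout=30.0):
    """Send the question to the gateway and return the assistant's reply text."""
    with urllib.request.urlopen(build_request(question, url), timeout=timeout) as resp:
        payload = json.load(resp)
    # Same field the curl examples extract with jq: .choices[0].message.content
    return payload["choices"][0]["message"]["content"]
```

With the gateway running, `ask("what is currency rate for gbp?")` returns the assistant's reply as a string. Keeping request construction separate from sending makes it easy to inspect or log the exact payload before it hits the gateway.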

Sample queries you can ask include "what is currency rate for gbp?" and "show me list of currencies for conversion".

Here is a sample curl command you can use:

```console
$ curl --header 'Content-Type: application/json' \
  --data '{"messages": [{"role": "user","content": "what is exchange rate for gbp"}], "model": "none"}' \
  http://localhost:10000/v1/chat/completions | jq ".choices[0].message.content"
```

"As of the date provided in your context, December 5, 2024, the exchange rate for GBP (British Pound) from USD (United States Dollar) is 0.78558. This means that 1 USD is equivalent to 0.78558 GBP."

And to get the list of supported currencies:

```console
$ curl --header 'Content-Type: application/json' \
  --data '{"messages": [{"role": "user","content": "show me list of currencies that are supported for conversion"}], "model": "none"}' \
  http://localhost:10000/v1/chat/completions | jq ".choices[0].message.content"
```

"Here is a list of the currencies that are supported for conversion from USD, along with their symbols:\n\n1. AUD - Australian Dollar\n2. BGN - Bulgarian Lev\n3. BRL - Brazilian Real\n4. CAD - Canadian Dollar\n5. CHF - Swiss Franc\n6. CNY - Chinese Renminbi Yuan\n7. CZK - Czech Koruna\n8. DKK - Danish Krone\n9. EUR - Euro\n10. GBP - British Pound\n11. HKD - Hong Kong Dollar\n12. HUF - Hungarian Forint\n13. IDR - Indonesian Rupiah\n14. ILS - Israeli New Sheqel\n15. INR - Indian Rupee\n16. ISK - Icelandic Króna\n17. JPY - Japanese Yen\n18. KRW - South Korean Won\n19. MXN - Mexican Peso\n20. MYR - Malaysian Ringgit\n21. NOK - Norwegian Krone\n22. NZD - New Zealand Dollar\n23. PHP - Philippine Peso\n24. PLN - Polish Złoty\n25. RON - Romanian Leu\n26. SEK - Swedish Krona\n27. SGD - Singapore Dollar\n28. THB - Thai Baht\n29. TRY - Turkish Lira\n30. USD - United States Dollar\n31. ZAR - South African Rand\n\nIf you want to convert USD to any of these currencies, you can select the one you are interested in."
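The two exchanges above correspond to the currency_exchange and get_supported_currencies prompt targets in arch_config.yaml, both served by the frankfurther_api endpoint. The sketch below illustrates, under our own assumptions, how such a target could be turned into an upstream URL: parameters marked `in_path` are substituted into the `{placeholder}` in the path, and required parameters must be present. This is not Arch's implementation; the function name and the exact substitution and percent-encoding rules are hypothetical.

```python
from urllib.parse import quote

# Hypothetical Python mirror of the endpoints and prompt_targets sections of arch_config.yaml.
ENDPOINTS = {"frankfurther_api": {"endpoint": "api.frankfurter.dev:443", "protocol": "https"}}

PROMPT_TARGETS = {
    "currency_exchange": {
        "parameters": [{"name": "currency_symbol", "required": True, "in_path": True}],
        "endpoint": {"name": "frankfurther_api",
                     "path": "/v1/latest?base=USD&symbols={currency_symbol}"},
    },
    "get_supported_currencies": {
        "parameters": [],
        "endpoint": {"name": "frankfurther_api", "path": "/v1/currencies"},
    },
}


def upstream_url(target_name, arguments):
    """Build the upstream URL for a prompt target from extracted arguments (illustrative only)."""
    target = PROMPT_TARGETS[target_name]
    for param in target["parameters"]:
        if param.get("required") and param["name"] not in arguments:
            raise ValueError(f"missing required parameter: {param['name']}")
    # Percent-encode values for parameters marked in_path, then fill the {placeholders}.
    in_path = {
        p["name"]: quote(str(arguments[p["name"]]), safe="")
        for p in target["parameters"]
        if p.get("in_path") and p["name"] in arguments
    }
    path = target["endpoint"]["path"].format_map(in_path)
    endpoint = ENDPOINTS[target["endpoint"]["name"]]
    return f"{endpoint['protocol']}://{endpoint['endpoint']}{path}"
```

For example, `upstream_url("currency_exchange", {"currency_symbol": "GBP"})` yields `https://api.frankfurter.dev:443/v1/latest?base=USD&symbols=GBP`, while omitting `currency_symbol` raises a `ValueError`, which is the situation where a clarifying question back to the user makes sense.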

Arch is designed to support best-in-class observability by supporting open standards. Please read our docs on observability for more details on tracing, metrics, and logs. The screenshot below is from our integration with Signoz (among others).

When debugging issues or errors, application logs and access logs provide key context on what is going on in the system. Arch gateway runs at the info log level, and the following is typical output you could see during an interaction between a developer and Arch gateway:

```console
$ archgw up --service archgw --foreground
...
[2025-03-26 18:32:01.350][26][info] prompt_gateway: on_http_request_body: sending request to model server
[2025-03-26 18:32:01.851][26][info] prompt_gateway: on_http_call_response: model server response received
[2025-03-26 18:32:01.852][26][info] prompt_gateway: on_http_call_response: dispatching api call to developer endpoint: weather_forecast_service, path: /weather, method: POST
[2025-03-26 18:32:01.882][26][info] prompt_gateway: on_http_call_response: developer api call response received: status code: 200
[2025-03-26 18:32:01.882][26][info] prompt_gateway: on_http_call_response: sending request to upstream llm
[2025-03-26 18:32:01.883][26][info] llm_gateway: on_http_request_body: provider: gpt-4o-mini, model requested: None, model selected: gpt-4o-mini
[2025-03-26 18:32:02.818][26][info] llm_gateway: on_http_response_body: time to first token: 1468ms
[2025-03-26 18:32:04.532][26][info] llm_gateway: on_http_response_body: request latency: 3183ms
...
```
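When scanning longer runs of these logs, it can help to pull the latency figures out programmatically. The following Python sketch parses lines in the format shown above (`[timestamp][thread][level] component: callback: message`) and collects the time to first token and request latency values in milliseconds. The line format is inferred from this sample output only and may differ between Arch versions; the regexes and function names are our own.

```python
import re

# Matches lines like:
# [2025-03-26 18:32:04.532][26][info] llm_gateway: on_http_response_body: request latency: 3183ms
LINE_RE = re.compile(
    r"^\[(?P<timestamp>[^\]]+)\]\[(?P<thread>\d+)\]\[(?P<level>\w+)\] "
    r"(?P<component>\w+): (?P<callback>\w+): (?P<message>.*)$"
)
METRIC_RE = re.compile(r"(?P<name>time to first token|request latency): (?P<ms>\d+)ms")


def parse_line(line):
    """Return the fields of one gateway log line as a dict, or None if it does not match."""
    match = LINE_RE.match(line.strip())
    return match.groupdict() if match else None


def latency_metrics(lines):
    """Collect 'time to first token' and 'request latency' values (in ms) from llm_gateway lines."""
    metrics = {}
    for line in lines:
        fields = parse_line(line)
        if fields is None or fields["component"] != "llm_gateway":
            continue  # skip non-matching lines such as "..." and prompt_gateway entries
        found = METRIC_RE.search(fields["message"])
        if found:
            metrics.setdefault(found["name"], []).append(int(found["ms"]))
    return metrics
```

Feeding it the captured output of `archgw up --service archgw --foreground` gives per-request lists you can average or plot; lines that do not match the assumed format are simply ignored.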

Log level can be changed to debug to get more details. To enable debug logs, edit [supervisord.conf](arch/supervisord.conf) and change the log level from --component-log-level wasm:info to --component-log-level wasm:debug. After that, rebuild the Docker image and restart Arch gateway using the following commands:

```console
# make sure you are at the root of the repo
$ archgw build

# go to the directory of your service that contains arch_config.yaml, then run:
$ archgw up --service archgw --foreground
```
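If you prefer to script the supervisord.conf edit described above rather than making it by hand, a small Python helper like the following could perform the flag swap before you run archgw build. It only rewrites the `--component-log-level wasm:info` string quoted in the instructions; the default file path and the function names are assumptions, so adjust them to your checkout.

```python
from pathlib import Path

INFO_FLAG = "--component-log-level wasm:info"
DEBUG_FLAG = "--component-log-level wasm:debug"


def enable_wasm_debug(text):
    """Return the config text with the wasm component log level switched from info to debug."""
    if INFO_FLAG not in text:
        raise ValueError(f"{INFO_FLAG!r} not found; the file may already be at debug level")
    return text.replace(INFO_FLAG, DEBUG_FLAG)


def enable_wasm_debug_in_file(path="arch/supervisord.conf"):
    """Rewrite the file in place (path assumed relative to the repo root)."""
    conf = Path(path)
    conf.write_text(enable_wasm_debug(conf.read_text()))
```

Raising instead of silently doing nothing makes it obvious when the flag has already been changed or the file layout differs from what the instructions describe.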

We would love feedback on our Roadmap and we welcome contributions to Arch! Whether you're fixing bugs, adding new features, improving documentation, or creating tutorials, your help is much appreciated. Please visit our Contribution Guide for more details.


Alternative AI tools for archgw

Similar Open Source Tools


deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

kubeai

KubeAI is a highly scalable AI platform that runs on Kubernetes, serving as a drop-in replacement for OpenAI with API compatibility. It can operate OSS model servers like vLLM and Ollama, with zero dependencies and additional OSS addons included. Users can configure models via Kubernetes Custom Resources and interact with models through a chat UI. KubeAI supports serving various models like Llama v3.1, Gemma2, and Qwen2, and has plans for model caching, LoRA finetuning, and image generation.

Revornix

Revornix is an information management tool designed for the AI era. It allows users to conveniently integrate all visible information and generates comprehensive reports at specific times. The tool offers cross-platform availability, all-in-one content aggregation, document transformation & vectorized storage, native multi-tenancy, localization & open-source features, smart assistant & built-in MCP, seamless LLM integration, and multilingual & responsive experience for users.

HeyGem.ai

Heygem is an open-source, affordable alternative to Heygen, offering a fully offline video synthesis tool for Windows systems. It enables precise appearance and voice cloning, allowing users to digitalize their image and drive virtual avatars through text and voice for video production. With core features like efficient video synthesis and multi-language support, Heygem ensures a user-friendly experience with fully offline operation and support for multiple models. The tool leverages advanced AI algorithms for voice cloning, automatic speech recognition, and computer vision technology to enhance the virtual avatar's performance and synchronization.

OpenLLM

OpenLLM is a platform that helps developers run any open-source Large Language Models (LLMs) as OpenAI-compatible API endpoints, locally and in the cloud. It supports a wide range of LLMs, provides state-of-the-art serving and inference performance, and simplifies cloud deployment via BentoML. Users can fine-tune, serve, deploy, and monitor any LLMs with ease using OpenLLM. The platform also supports various quantization techniques, serving fine-tuning layers, and multiple runtime implementations. OpenLLM seamlessly integrates with other tools like OpenAI Compatible Endpoints, LlamaIndex, LangChain, and Transformers Agents. It offers deployment options through Docker containers, BentoCloud, and provides a community for collaboration and contributions.

SalesGPT

SalesGPT is an open-source AI agent designed for sales, utilizing context-awareness and LLMs to work across various communication channels like voice, email, and texting. It aims to enhance sales conversations by understanding the stage of the conversation and providing tools like product knowledge base to reduce errors. The agent can autonomously generate payment links, handle objections, and close sales. It also offers features like automated email communication, meeting scheduling, and integration with various LLMs for customization. SalesGPT is optimized for low latency in voice channels and ensures human supervision where necessary. The tool provides enterprise-grade security and supports LangSmith tracing for monitoring and evaluation of intelligent agents built on LLM frameworks.

OpenAdapt

OpenAdapt is an open-source software adapter between Large Multimodal Models (LMMs) and traditional desktop and web Graphical User Interfaces (GUIs). It aims to automate repetitive GUI workflows by leveraging the power of LMMs. OpenAdapt records user input and screenshots, converts them into tokenized format, and generates synthetic input via transformer model completions. It also analyzes recordings to generate task trees and replay synthetic input to complete tasks. OpenAdapt is model agnostic and generates prompts automatically by learning from human demonstration, ensuring that agents are grounded in existing processes and mitigating hallucinations. It works with all types of desktop GUIs, including virtualized and web, and is open source under the MIT license.

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

TaskWeaver

TaskWeaver is a code-first agent framework designed for planning and executing data analytics tasks. It interprets user requests through code snippets, coordinates various plugins to execute tasks in a stateful manner, and preserves both chat history and code execution history. It supports rich data structures, customized algorithms, domain-specific knowledge incorporation, stateful execution, code verification, easy debugging, security considerations, and easy extension. TaskWeaver is easy to use with CLI and WebUI support, and it can be integrated as a library. It offers detailed documentation, demo examples, and citation guidelines.

DemoGPT

DemoGPT is an all-in-one agent library that provides tools, prompts, frameworks, and LLM models for streamlined agent development. It leverages GPT-3.5-turbo to generate LangChain code, creating interactive Streamlit applications. The tool is designed for creating intelligent, interactive, and inclusive solutions in LLM-based application development. It offers model flexibility, iterative development, and a commitment to user engagement. Future enhancements include integrating Gorilla for autonomous API usage and adding a publicly available database for refining the generation process.

depthai

This repository contains a demo application for DepthAI, a tool that can load different networks, create pipelines, record video, and more. It provides documentation for installation and usage, including running programs through Docker. Users can explore DepthAI features via command line arguments or a clickable QT interface. Supported models include various AI models for tasks like face detection, human pose estimation, and object detection. The tool collects anonymous usage statistics by default, which can be disabled. Users can report issues to the development team for support and troubleshooting.

ChatDev

ChatDev is a virtual software company powered by intelligent agents like CEO, CPO, CTO, programmer, reviewer, tester, and art designer. These agents collaborate to revolutionize the digital world through programming. The platform offers an easy-to-use, highly customizable, and extendable framework based on large language models, ideal for studying collective intelligence. ChatDev introduces innovative methods like Iterative Experience Refinement and Experiential Co-Learning to enhance software development efficiency. It supports features like incremental development, Docker integration, Git mode, and Human-Agent-Interaction mode. Users can customize ChatChain, Phase, and Role settings, and share their software creations easily. The project is open-source under the Apache 2.0 License and utilizes data licensed under CC BY-NC 4.0.

agentok

Agentok Studio is a tool built upon AG2, a powerful agent framework from Microsoft, offering intuitive visual tools to streamline the creation and management of complex agent-based workflows. It simplifies the process for creators and developers by generating native Python code with minimal dependencies, enabling users to create self-contained code that can be executed anywhere. The tool is currently under development and not recommended for production use, but contributions are welcome from the community to enhance its capabilities and functionalities.

letmedoit

LetMeDoIt AI is a virtual assistant designed to revolutionize the way you work. It goes beyond being a mere chatbot by offering a unique and powerful capability: the ability to execute commands and perform computing tasks on your behalf. With LetMeDoIt AI, you can access OpenAI ChatGPT-4, Google Gemini Pro, Microsoft AutoGen, and local LLMs, all in one place, to enhance your productivity.

axoned

Axone is a public dPoS layer 1 designed for connecting, sharing, and monetizing resources in the AI stack. It is an open network for collaborative AI workflow management compatible with any data, model, or infrastructure, allowing sharing of data, algorithms, storage, compute, APIs, both on-chain and off-chain. The 'axoned' node of the AXONE network is built on Cosmos SDK & Tendermint consensus, enabling companies & individuals to define on-chain rules, share off-chain resources, and create new applications. Validators secure the network by maintaining uptime and staking $AXONE for rewards. The blockchain supports various platforms and follows Semantic Versioning 2.0.0. A docker image is available for quick start, with documentation on querying networks, creating wallets, starting nodes, and joining networks. Development involves Go and Cosmos SDK, with smart contracts deployed on the AXONE blockchain. The project provides a Makefile for building, installing, linting, and testing. Community involvement is encouraged through Discord, open issues, and pull requests.

For similar tasks

Agently

Agently is a development framework that helps developers build AI-agent-native applications really fast. You can use and build AI agents in your code in an extremely simple way: create an AI agent instance, then interact with it like calling a function, in very little code:

```python
# Import and Init Settings
import Agently
agent = Agently.create_agent()
agent\
    .set_settings("current_model", "OpenAI")\
    .set_settings("model.OpenAI.auth", {"api_key": ""})
# Interact with the agent instance like calling a function
result = agent\
    .input("Give me 3 words")\
    .output([("String", "one word")])\
    .start()
print(result)
```

```
['apple', 'banana', 'carrot']
```

You may notice that when we print the value of `result`, the value is a `list`, just like the format of the parameter we passed to `.output()`. In the Agently framework we've done a lot of work like this to make it easier for application developers to integrate Agent instances into their business code, so they can focus on building business logic instead of figuring out how to cater to language models or keep models satisfied.

alan-sdk-web

Alan AI is a comprehensive AI solution that acts as a 'unified brain' for enterprises, interconnecting applications, APIs, and data sources to streamline workflows. It offers tools like Alan AI Studio for designing dialog scenarios, lightweight SDKs for embedding AI Agents, and a backend powered by advanced AI technologies. With Alan AI, users can create conversational experiences with minimal UI changes, benefit from a serverless environment, receive on-the-fly updates, and access dialog testing and analytics tools. The platform supports various frameworks like JavaScript, React, Angular, Vue, Ember, and Electron, and provides example web apps for different platforms. Users can also explore Alan AI SDKs for iOS, Android, Flutter, Ionic, Apache Cordova, and React Native.


llm-dev

The 'llm-dev' repository contains source code and resources for the book 'Practical Projects of Large Models: Multi-Domain Intelligent Application Development'. It covers topics such as language model basics, application architecture, working modes, environment setup, model installation, fine-tuning, quantization, multi-modal model applications, chat applications, programming large model applications, VS Code plugin development, enhanced generation applications, translation applications, intelligent agent applications, speech model applications, digital human applications, model training applications, and AI town applications.

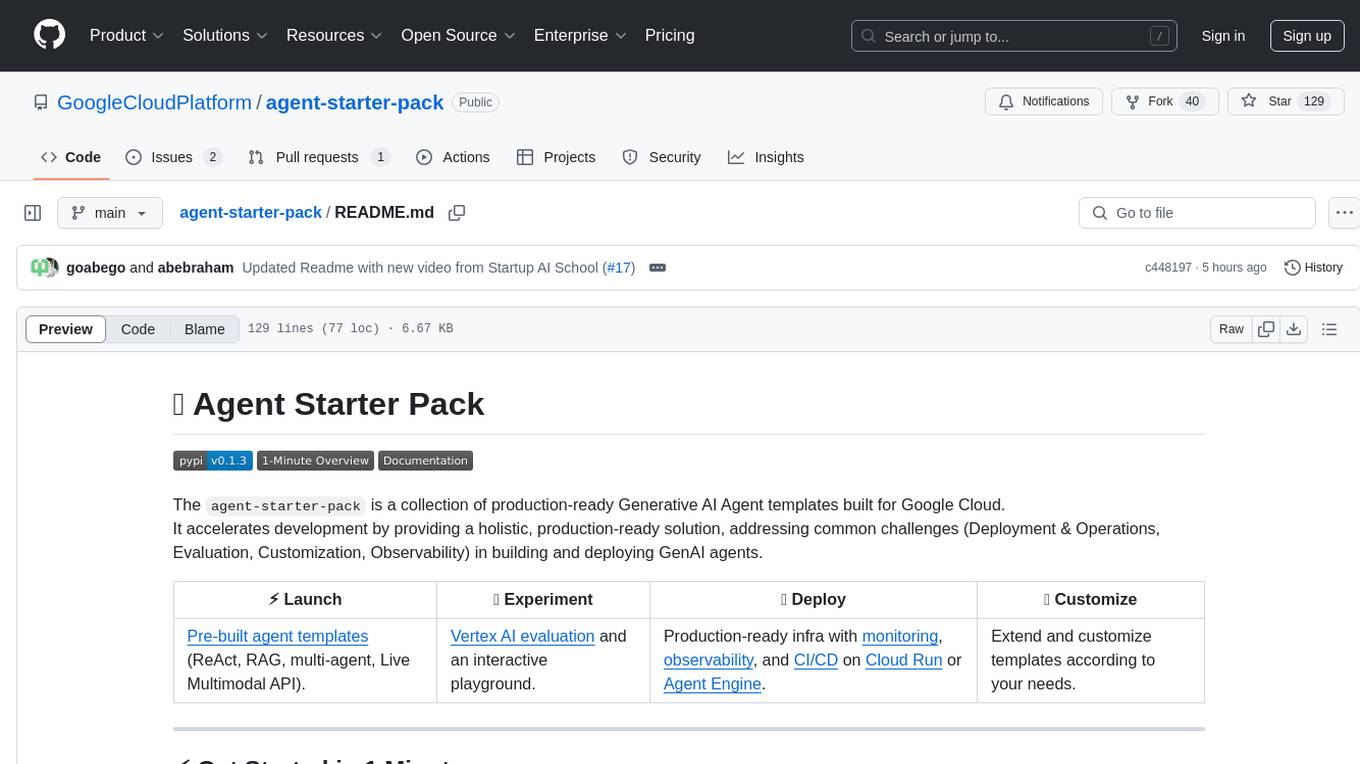

agent-starter-pack

The agent-starter-pack is a collection of production-ready Generative AI Agent templates built for Google Cloud. It accelerates development by providing a holistic, production-ready solution, addressing common challenges in building and deploying GenAI agents. The tool offers pre-built agent templates, evaluation tools, production-ready infrastructure, and customization options. It also provides CI/CD automation and data pipeline integration for RAG agents. The starter pack covers all aspects of agent development, from prototyping and evaluation to deployment and monitoring. It is designed to simplify project creation, template selection, and deployment for agent development on Google Cloud.

agentkit-samples

AgentKit Samples is a repository containing a series of examples and tutorials to help users understand, implement, and integrate various functionalities of AgentKit into their applications. The platform offers a complete solution for building, deploying, and maintaining AI agents, significantly reducing the complexity of developing intelligent applications. The repository provides different levels of examples and tutorials, including basic tutorials for understanding AgentKit's concepts and use cases, as well as more complex examples for experienced developers.

openclaw-mini

OpenClaw Mini is a simplified reproduction of the core architecture of OpenClaw, designed for learning system-level design of AI agents. It focuses on understanding the Agent Loop, session persistence, context management, long-term memory, skill systems, and active awakening. The project provides a minimal implementation to help users grasp the core design concepts of a production-level AI agent system.

paig

PAIG is an open-source project focused on protecting Generative AI applications by ensuring security, safety, and observability. It offers a versatile framework to address the latest security challenges and integrate point security solutions without rewriting applications. The project aims to provide a secure environment for developing and deploying GenAI applications.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.