mindcraft

Minecraft AI with LLMs+Mineflayer

Stars: 3896

Mindcraft is a project that crafts minds for Minecraft using Large Language Models (LLMs) and Mineflayer. It allows an LLM to write and execute code on your computer, with code sandboxed but still vulnerable to injection attacks. The project requires Minecraft Java Edition, Node.js, and one of several API keys. Users can run tasks to acquire specific items or construct buildings, customize project details in settings.js, and connect to online servers with a Microsoft/Minecraft account. The project also supports Docker container deployment for running in a secure environment.

README:

Crafting minds for Minecraft with LLMs and Mineflayer!

FAQ | Discord Support | Video Tutorial | Blog Post | Contributor TODO | Paper Website | MineCollab

[!Caution] Do not connect this bot to public servers with coding enabled. This project allows an LLM to write/execute code on your computer. The code is sandboxed, but still vulnerable to injection attacks. Code writing is disabled by default; you can enable it by setting allow_insecure_coding to true in settings.js. Ye be warned.
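The caution above can be turned into a small pre-flight check. This is only a sketch: it assumes a settings object with host and allow_insecure_coding fields, and the helper name isRiskyConfig and the list of local hosts are hypothetical, not part of Mindcraft.

```javascript
// Hypothetical helper, not part of Mindcraft: flags the combination the
// caution warns about (coding enabled while pointing at a non-local host).
// The set of "local" hosts is an assumption made for this example.
const LOCAL_HOSTS = new Set(["localhost", "127.0.0.1", "host.docker.internal"]);

function isRiskyConfig(settings) {
  const codingEnabled = settings.allow_insecure_coding === true;
  const isLocal = LOCAL_HOSTS.has(settings.host);
  return codingEnabled && !isLocal;
}

console.log(isRiskyConfig({ host: "localhost", allow_insecure_coding: true }));       // false
console.log(isRiskyConfig({ host: "111.222.333.444", allow_insecure_coding: true })); // true
```

A check like this cannot tell a private remote server from a public one, so it errs on the side of warning for anything that is not local.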

- Minecraft Java Edition (up to v1.21.6, recommend v1.21.1)

- Node.js Installed (at least v18)

- One of these: OpenAI API Key | Gemini API Key | Anthropic API Key | Replicate API Key | Hugging Face API Key | Groq API Key | Ollama Installed | Mistral API Key | Qwen API Key [Intl.]/[cn] | Novita AI API Key | Cerebras API Key | Mercury API

- Make sure you have the requirements above.

- Clone or download this repository (big green button): git clone https://github.com/mindcraft-bots/mindcraft.git

- Rename keys.example.json to keys.json and fill in your API keys (you only need one). The desired model is set in andy.json or other profiles. For other models refer to the table below.

- In terminal/command prompt, run npm install from the installed directory.

- Start a Minecraft world and open it to LAN on localhost port 55916.

- Run node main.js from the installed directory.
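Since only one API key is needed, a quick way to see which keys are actually filled in can be sketched as follows. This assumes keys.json is a flat object mapping key names to strings; the helper filledKeys and the placeholder values are hypothetical.

```javascript
// Hypothetical check, not Mindcraft code: returns the names of keys that
// have a non-empty string value, so you can confirm at least one is set.
function filledKeys(keys) {
  return Object.entries(keys)
    .filter(([, value]) => typeof value === "string" && value.trim() !== "")
    .map(([name]) => name);
}

// Example object in the assumed shape of keys.json (placeholder values).
const exampleKeys = { OPENAI_API_KEY: "", GEMINI_API_KEY: "my-key", ANTHROPIC_API_KEY: "" };
console.log(filledKeys(exampleKeys)); // [ 'GEMINI_API_KEY' ]
```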

If you encounter issues, check the FAQ or find support on Discord. We are currently not very responsive to GitHub issues. To run tasks, please refer to the MineCollab Instructions.

Bot performance can be roughly evaluated with Tasks. Tasks automatically initialize bots with a goal to acquire specific items or construct predefined buildings, and remove the bot once the goal is achieved.

To run tasks, you need python, pip, and optionally conda. You can then install dependencies with pip install -r requirements.txt.

Tasks are defined in json files in the tasks folder, and can be run with: python tasks/run_task_file.py --task_path=tasks/example_tasks.json

For full evaluations, you will need to download and install the task suite. Full instructions.

You can configure project details in settings.js. See file.

You can configure the agent's name, model, and prompts in its profile, such as andy.json. The model is set with the model field. For comprehensive details, see Model Specifications.

| API | Config Variable | Example Model name | Docs |
|---|---|---|---|
| openai | OPENAI_API_KEY | gpt-4o-mini | docs |
| google | GEMINI_API_KEY | gemini-2.0-flash | docs |
| anthropic | ANTHROPIC_API_KEY | claude-3-haiku-20240307 | docs |
| xai | XAI_API_KEY | grok-2-1212 | docs |
| deepseek | DEEPSEEK_API_KEY | deepseek-chat | docs |
| ollama (local) | n/a | ollama/sweaterdog/andy-4:micro-q8_0 | docs |
| qwen | QWEN_API_KEY | qwen-max | Intl./cn |
| mistral | MISTRAL_API_KEY | mistral-large-latest | docs |
| replicate | REPLICATE_API_KEY | replicate/meta/meta-llama-3-70b-instruct | docs |
| groq (not grok) | GROQCLOUD_API_KEY | groq/mixtral-8x7b-32768 | docs |
| huggingface | HUGGINGFACE_API_KEY | huggingface/mistralai/Mistral-Nemo-Instruct-2407 | docs |
| novita | NOVITA_API_KEY | novita/deepseek/deepseek-r1 | docs |
| openrouter | OPENROUTER_API_KEY | openrouter/anthropic/claude-3.5-sonnet | docs |
| glhf.chat | GHLF_API_KEY | glhf/hf:meta-llama/Llama-3.1-405B-Instruct | docs |
| hyperbolic | HYPERBOLIC_API_KEY | hyperbolic/deepseek-ai/DeepSeek-V3 | docs |
| vllm | n/a | vllm/llama3 | n/a |
| cerebras | CEREBRAS_API_KEY | cerebras/llama-3.3-70b | docs |
| mercury | MERCURY_API_KEY | mercury-coder-small | docs |
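Several example model names in the table carry an API prefix before the first slash (for instance groq/ or replicate/). The sketch below looks up which config variable the table lists for such a prefix. The helper describeModel is hypothetical, and whether Mindcraft itself splits names this way is an assumption; only the prefix-to-variable pairs come from the table.

```javascript
// Config variable per prefix, copied from the table. null means the table
// lists "n/a" for that API.
const KEY_FOR_PREFIX = {
  replicate: "REPLICATE_API_KEY",
  groq: "GROQCLOUD_API_KEY",
  huggingface: "HUGGINGFACE_API_KEY",
  novita: "NOVITA_API_KEY",
  openrouter: "OPENROUTER_API_KEY",
  glhf: "GHLF_API_KEY",
  hyperbolic: "HYPERBOLIC_API_KEY",
  cerebras: "CEREBRAS_API_KEY",
  ollama: null,
  vllm: null,
};

// Hypothetical helper: splits "prefix/rest" at the first slash and reports
// the config variable for a known prefix. Unprefixed or unknown names are
// returned unchanged with prefix set to null.
function describeModel(name) {
  const slash = name.indexOf("/");
  const prefix = slash === -1 ? null : name.slice(0, slash);
  if (prefix === null || !Object.hasOwn(KEY_FOR_PREFIX, prefix)) {
    return { prefix: null, model: name, key: undefined };
  }
  return { prefix, model: name.slice(slash + 1), key: KEY_FOR_PREFIX[prefix] };
}

console.log(describeModel("groq/mixtral-8x7b-32768").key); // GROQCLOUD_API_KEY
console.log(describeModel("gpt-4o-mini").prefix);          // null
```

Only the first slash is treated as the separator, so names such as glhf/hf:meta-llama/Llama-3.1-405B-Instruct keep the rest of their path intact.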

If you use Ollama, to install the models used by default (generation and embedding), execute the following terminal command:

ollama pull sweaterdog/andy-4:micro-q8_0 && ollama pull embeddinggemma

To use Azure, you can reuse the OPENAI_API_KEY environment variable. You can get the key from the Azure portal. See azure.json for an example.

To connect to online servers your bot will need an official Microsoft/Minecraft account. You can use your own personal one, but will need another account if you want to connect too and play with it. To connect, change these lines in settings.js:

"host": "111.222.333.444",

"port": 55920,

"auth": "microsoft",

// rest is same...

[!Important] The bot's name in the profile.json must exactly match the Minecraft profile name! Otherwise the bot will spam talk to itself.

Mindcraft connects with whichever account the Minecraft launcher is currently using. To use a different account for the bot, switch accounts in the launcher, run node main.js, then switch back to your main account after the bot has connected.

If you intend to allow_insecure_coding, it is a good idea to run the app in a docker container to reduce risks of running unknown code. This is strongly recommended before connecting to remote servers.

docker run -i -t --rm -v $(pwd):/app -w /app -p 3000-3003:3000-3003 node:latest node main.js

or simply

docker-compose up

When running in docker, if you want the bot to join your local minecraft server, you have to use a special host address host.docker.internal to call your localhost from inside your docker container. Put this into your settings.js:

"host": "host.docker.internal", // instead of "localhost", to join your local minecraft from inside the docker container

To connect to an unsupported minecraft version, you can try to use viaproxy
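The host settings described in this README (a local world, a local world reached from inside docker, and an online server) can be summarized in one small sketch. The helper connectionFields and its option names are hypothetical; only the field names host, port, and auth and the values shown come from the text.

```javascript
// Hypothetical helper, not part of Mindcraft: builds the connection fields
// described in the README for three situations.
function connectionFields({ remoteHost = null, port = 55916, inDocker = false } = {}) {
  if (remoteHost !== null) {
    // Online server: the bot needs an official Microsoft/Minecraft account.
    return { host: remoteHost, port, auth: "microsoft" };
  }
  // Local world: from inside a container, localhost has to be reached
  // through host.docker.internal.
  return { host: inDocker ? "host.docker.internal" : "localhost", port };
}

console.log(connectionFields({ inDocker: true }).host); // host.docker.internal
console.log(connectionFields({ remoteHost: "111.222.333.444", port: 55920 }).auth); // microsoft
```

The returned fields are meant to be merged over the rest of the settings, which stay the same.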

Bot profiles are json files (such as andy.json) that define:

- Bot backend LLMs to use for talking, coding, and embedding.

- Prompts used to influence the bot's behavior.

- Examples to help the bot perform tasks.

LLM models can be specified simply as "model": "gpt-4o". However, you can use different models for chat, coding, and embeddings.

You can pass a string or an object for these fields. A model object must specify an api, and optionally a model, url, and additional params.

"model": {
  "api": "openai",
  "model": "gpt-4o",
  "url": "https://api.openai.com/v1/",
  "params": {
    "max_tokens": 1000,
    "temperature": 1
  }
},
"code_model": {
  "api": "openai",
  "model": "gpt-4",
  "url": "https://api.openai.com/v1/"
},
"vision_model": {
  "api": "openai",
  "model": "gpt-4o",
  "url": "https://api.openai.com/v1/"
},
"embedding": {
  "api": "openai",
  "url": "https://api.openai.com/v1/",
  "model": "text-embedding-ada-002"
},
"speak_model": {
  "api": "openai",
  "url": "https://api.openai.com/v1/",
  "model": "tts-1",
  "voice": "echo"
}

model is used for chat, code_model is used for newAction coding, vision_model is used for image interpretation, and embedding is used to embed text for example selection. If code_model or vision_model is not specified, model will be used by default. Not all APIs support embeddings or vision.
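The string-or-object rule and the fallback to model can be sketched as below. This is an illustration of the rules as stated here, not Mindcraft's own code; the function names normalizeModel and resolveModels and the chat/code/vision result keys are hypothetical.

```javascript
// Hypothetical sketch of the rules described above; not Mindcraft's code.
function normalizeModel(field) {
  // A bare string is shorthand for an object that only names the model.
  if (typeof field === "string") return { model: field };
  // An object must at least name its api; model, url, and params are optional.
  if (field && typeof field === "object" && typeof field.api === "string") {
    return { ...field };
  }
  throw new Error("A model object must specify an api");
}

function resolveModels(profile) {
  const chat = normalizeModel(profile.model);
  return {
    chat,
    // code_model and vision_model fall back to model when not specified.
    code: profile.code_model ? normalizeModel(profile.code_model) : chat,
    vision: profile.vision_model ? normalizeModel(profile.vision_model) : chat,
  };
}

const resolved = resolveModels({
  model: "gpt-4o",
  code_model: { api: "openai", model: "gpt-4" },
});
console.log(resolved.code.model);   // gpt-4
console.log(resolved.vision.model); // gpt-4o
```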

All APIs have default models and URLs, so those fields are optional. The params field is also optional and can be used to specify additional parameters for the model. It accepts any key-value pairs supported by the API. It is not supported for embedding models.

Embedding models are used to embed and efficiently select relevant examples for conversation and coding.

Supported Embedding APIs: openai, google, replicate, huggingface, novita

If you try to use an unsupported model, then it will default to a simple word-overlap method. Expect reduced performance; we recommend mixing APIs to ensure embedding support.
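To make the word-overlap fallback concrete, here is one way such a method could select examples. The scoring formula (Jaccard overlap of word sets) and the function names are assumptions for illustration; the README does not specify the exact method Mindcraft uses.

```javascript
// Lowercase a text and split it into a set of words.
function wordSet(text) {
  return new Set(text.toLowerCase().match(/[a-z0-9']+/g) ?? []);
}

// Jaccard overlap: shared words divided by total distinct words.
function wordOverlap(a, b) {
  const setA = wordSet(a);
  const setB = wordSet(b);
  if (setA.size === 0 && setB.size === 0) return 0;
  let shared = 0;
  for (const word of setA) {
    if (setB.has(word)) shared += 1;
  }
  return shared / (setA.size + setB.size - shared);
}

// Pick the k examples whose words overlap most with the query.
function selectExamples(query, examples, k = 2) {
  return examples
    .map((text) => ({ text, score: wordOverlap(query, text) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((entry) => entry.text);
}

console.log(wordOverlap("mine some stone", "mine some iron")); // 0.5
```

Unlike an embedding, this score ignores meaning: "get wood" and "collect logs" share no words and score 0, which is one reason to expect reduced performance.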

By default, the program will use the profiles specified in settings.js. You can specify one or more agent profiles using the --profiles argument: node main.js --profiles ./profiles/andy.json ./profiles/jill.json
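The behavior of the --profiles argument can be sketched with a small parser. This is a hypothetical illustration, not Mindcraft's own argument handling; the function name parseProfiles and the rule of stopping at the next flag are assumptions.

```javascript
// Hypothetical parser, not Mindcraft's own: collects every value that
// follows --profiles until the next flag, else returns the defaults
// (standing in for the profiles listed in settings.js).
function parseProfiles(argv, defaults) {
  const start = argv.indexOf("--profiles");
  if (start === -1) return defaults;
  const profiles = [];
  for (const arg of argv.slice(start + 1)) {
    if (arg.startsWith("--")) break;
    profiles.push(arg);
  }
  return profiles.length > 0 ? profiles : defaults;
}

const args = ["--profiles", "./profiles/andy.json", "./profiles/jill.json"];
console.log(parseProfiles(args, ["./andy.json"]).length); // 2
```

In a real script the first argument would typically be process.argv.slice(2).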

Some of the node modules that we depend on have bugs in them. To add a patch, change your local node module file and run npx patch-package [package-name]

@article{mindcraft2025,
  title = {Collaborating Action by Action: A Multi-agent LLM Framework for Embodied Reasoning},
  author = {White*, Isadora and Nottingham*, Kolby and Maniar, Ayush and Robinson, Max and Lillemark, Hansen and Maheshwari, Mehul and Qin, Lianhui and Ammanabrolu, Prithviraj},
  journal = {arXiv preprint arXiv:2504.17950},
  year = {2025},
  url = {https://arxiv.org/abs/2504.17950},
}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for mindcraft

Similar Open Source Tools

mindcraft

Mindcraft is a project that crafts minds for Minecraft using Large Language Models (LLMs) and Mineflayer. It allows an LLM to write and execute code on your computer, with code sandboxed but still vulnerable to injection attacks. The project requires Minecraft Java Edition, Node.js, and one of several API keys. Users can run tasks to acquire specific items or construct buildings, customize project details in settings.js, and connect to online servers with a Microsoft/Minecraft account. The project also supports Docker container deployment for running in a secure environment.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automations required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real-time, create a plan for interaction and interact with them. This approach gives us a few advantages: 1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code 2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate 3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include: 1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16 2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!) Want to see examples of Skyvern in action? Jump to #real-world-examples-of- skyvern

AgentPoison

AgentPoison is a repository that provides the official PyTorch implementation of the paper 'AgentPoison: Red-teaming LLM Agents via Memory or Knowledge Base Backdoor Poisoning'. It offers tools for red-teaming LLM agents by poisoning memory or knowledge bases. The repository includes trigger optimization algorithms, agent experiments, and evaluation scripts for Agent-Driver, ReAct-StrategyQA, and EHRAgent. Users can fine-tune motion planners, inject queries with triggers, and evaluate red-teaming performance. The codebase supports multiple RAG embedders and provides a unified dataset access for all three agents.

binary_ninja_mcp

This repository contains a Binary Ninja plugin, MCP server, and bridge that enables seamless integration of Binary Ninja's capabilities with your favorite LLM client. It provides real-time integration, AI assistance for reverse engineering, multi-binary support, and various MCP tools for tasks like decompiling functions, getting IL code, managing comments, renaming variables, and more.

magentic

Easily integrate Large Language Models into your Python code. Simply use the `@prompt` and `@chatprompt` decorators to create functions that return structured output from the LLM. Mix LLM queries and function calling with regular Python code to create complex logic.

nano-graphrag

nano-GraphRAG is a simple, easy-to-hack implementation of GraphRAG that provides a smaller, faster, and cleaner version of the official implementation. It is about 800 lines of code, small yet scalable, asynchronous, and fully typed. The tool supports incremental insert, async methods, and various parameters for customization. Users can replace storage components and LLM functions as needed. It also allows for embedding function replacement and comes with pre-defined prompts for entity extraction and community reports. However, some features like covariates and global search implementation differ from the original GraphRAG. Future versions aim to address issues related to data source ID, community description truncation, and add new components.

worker-vllm

The worker-vLLM repository provides a serverless endpoint for deploying OpenAI-compatible vLLM models with blazing-fast performance. It supports deploying various model architectures, such as Aquila, Baichuan, BLOOM, ChatGLM, Command-R, DBRX, DeciLM, Falcon, Gemma, GPT-2, GPT BigCode, GPT-J, GPT-NeoX, InternLM, Jais, LLaMA, MiniCPM, Mistral, Mixtral, MPT, OLMo, OPT, Orion, Phi, Phi-3, Qwen, Qwen2, Qwen2MoE, StableLM, Starcoder2, Xverse, and Yi. Users can deploy models using pre-built Docker images or build custom images with specified arguments. The repository also supports OpenAI compatibility for chat completions, completions, and models, with customizable input parameters. Users can modify their OpenAI codebase to use the deployed vLLM worker and access a list of available models for deployment.

thepipe

The Pipe is a multimodal-first tool for feeding files and web pages into vision-language models such as GPT-4V. It is best for LLM and RAG applications that require a deep understanding of tricky data sources. The Pipe is available as a hosted API at thepi.pe, or it can be set up locally.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

monacopilot

Monacopilot is a powerful and customizable AI auto-completion plugin for the Monaco Editor. It supports multiple AI providers such as Anthropic, OpenAI, Groq, and Google, providing real-time code completions with an efficient caching system. The plugin offers context-aware suggestions, customizable completion behavior, and framework agnostic features. Users can also customize the model support and trigger completions manually. Monacopilot is designed to enhance coding productivity by providing accurate and contextually appropriate completions in daily spoken language.

laravel-crod

Laravel Crod is a package designed to facilitate the implementation of CRUD operations in Laravel projects. It allows users to quickly generate controllers, models, migrations, services, repositories, views, and requests with various customization options. The package simplifies tasks such as creating resource controllers, making models fillable, querying repositories and services, and generating additional files like seeders and factories. Laravel Crod aims to streamline the process of building CRUD functionalities in Laravel applications by providing a set of commands and tools for developers.

receipt-scanner

The receipt-scanner repository is an AI-Powered Receipt and Invoice Scanner for Laravel that allows users to easily extract structured receipt data from images, PDFs, and emails within their Laravel application using OpenAI. It provides a light wrapper around OpenAI Chat and Completion endpoints, supports various input formats, and integrates with Textract for OCR functionality. Users can install the package via composer, publish configuration files, and use it to extract data from plain text, PDFs, images, Word documents, and web content. The scanned receipt data is parsed into a DTO structure with main classes like Receipt, Merchant, and LineItem.

npcsh

`npcsh` is a python-based command-line tool designed to integrate Large Language Models (LLMs) and Agents into one's daily workflow by making them available and easily configurable through the command line shell. It leverages the power of LLMs to understand natural language commands and questions, execute tasks, answer queries, and provide relevant information from local files and the web. Users can also build their own tools and call them like macros from the shell. `npcsh` allows users to take advantage of agents (i.e. NPCs) through a managed system, tailoring NPCs to specific tasks and workflows. The tool is extensible with Python, providing useful functions for interacting with LLMs, including explicit coverage for popular providers like ollama, anthropic, openai, gemini, deepseek, and openai-like providers. Users can set up a flask server to expose their NPC team for use as a backend service, run SQL models defined in their project, execute assembly lines, and verify the integrity of their NPC team's interrelations. Users can execute bash commands directly, use favorite command-line tools like VIM, Emacs, ipython, sqlite3, git, pipe the output of these commands to LLMs, or pass LLM results to bash commands.

shell-pilot

Shell-pilot is a simple, lightweight shell script designed to interact with various AI models such as OpenAI, Ollama, Mistral AI, LocalAI, ZhipuAI, Anthropic, Moonshot, and Novita AI from the terminal. It enhances intelligent system management without any dependencies, offering features like setting up a local LLM repository, using official models and APIs, viewing history and session persistence, passing input prompts with pipe/redirector, listing available models, setting request parameters, generating and running commands in the terminal, easy configuration setup, system package version checking, and managing system aliases.

slack-mcp-server

Slack MCP Server is a Model Context Protocol server for Slack Workspaces, offering powerful features like Stealth and OAuth Modes, Enterprise Workspaces Support, Channel and Thread Support, Smart History, Search Messages, Safe Message Posting, DM and Group DM support, Embedded user information, Cache support, and multiple transport options. It provides tools like conversations_history, conversations_replies, conversations_add_message, conversations_search_messages, and channels_list for managing messages, threads, adding messages, searching messages, and listing channels. The server also exposes directory resources for workspace metadata access. The tool is designed to enhance Slack workspace functionality and improve user experience.

duckdb-airport-extension

The 'duckdb-airport-extension' is a tool that enables the use of Arrow Flight with DuckDB. It provides functions to list available Arrow Flights at a specific endpoint and to retrieve the contents of an Arrow Flight. The extension also supports creating secrets for authentication purposes. It includes features for serializing filters and optimizing projections to enhance data transmission efficiency. The tool is built on top of gRPC and the Arrow IPC format, offering high-performance data services for data processing and retrieval.

For similar tasks

mindcraft

Mindcraft is a project that crafts minds for Minecraft using Large Language Models (LLMs) and Mineflayer. It allows an LLM to write and execute code on your computer, with code sandboxed but still vulnerable to injection attacks. The project requires Minecraft Java Edition, Node.js, and one of several API keys. Users can run tasks to acquire specific items or construct buildings, customize project details in settings.js, and connect to online servers with a Microsoft/Minecraft account. The project also supports Docker container deployment for running in a secure environment.

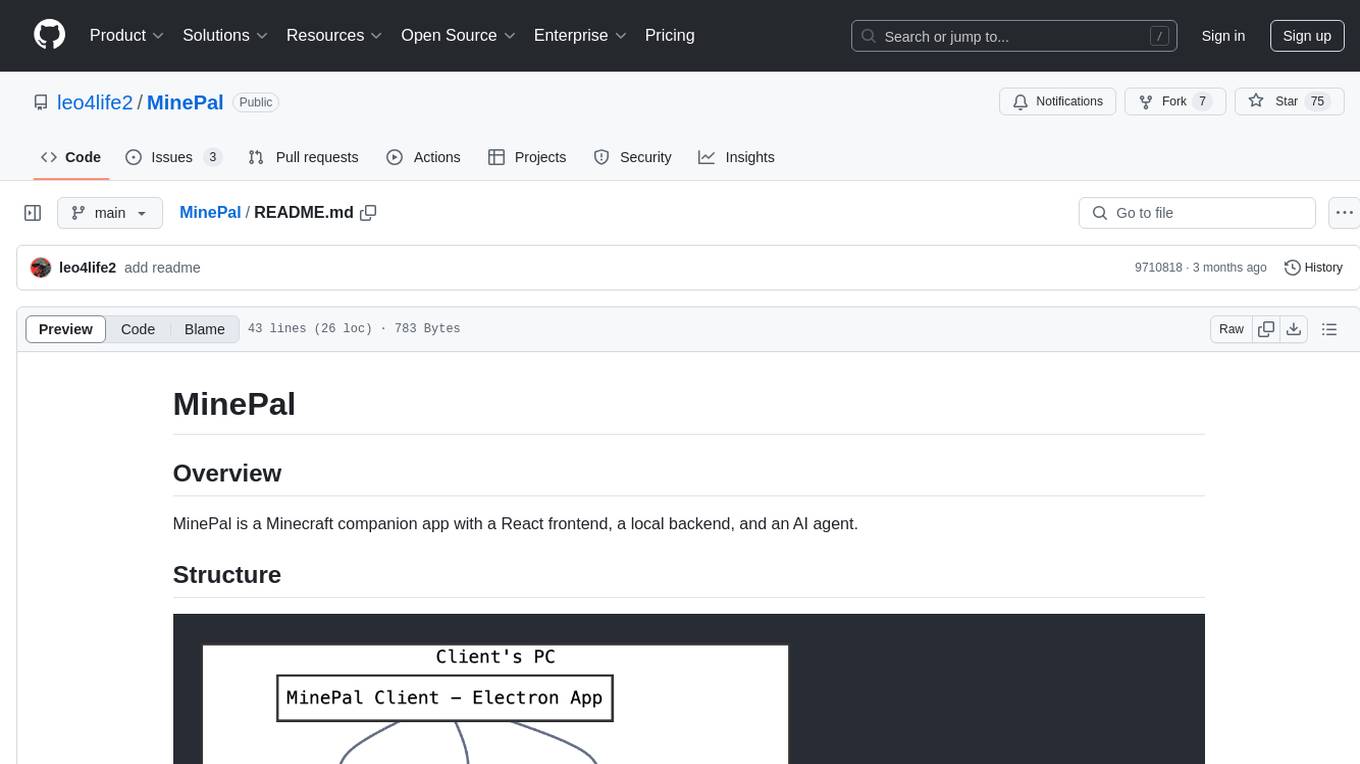

MinePal

MinePal is a Minecraft companion app with a React frontend, a local backend, and an AI agent. The frontend is built with React and Vite, the local backend APIs are in server.js, and the Minecraft agent logic is in src/agent/. Users can set up the frontend by installing dependencies and building it, refer to the backend repository for backend setup, and navigate to src/agent/ to access actions that the bot can take.

minecraft-mcp-server

Minecraft MCP Server is a bot powered by large language models and Mineflayer API. It uses the Model Context Protocol (MCP) to enable models like Claude to control a Minecraft character. The bot allows users to interact with Minecraft through commands and chat messages, facilitating tasks such as movement, inventory management, block interaction, entity interaction, and more. Users can also upload images of buildings and ask the bot to build them. The tool is designed to work with Claude Desktop and requires specific configurations for Minecraft and MCP clients. Contributions to the project, including refactoring, testing, documentation, and new functionality, are welcome.

Geoweaver

Geoweaver is an in-browser software that enables users to easily compose and execute full-stack data processing workflows using online spatial data facilities, high-performance computation platforms, and open-source deep learning libraries. It provides server management, code repository, workflow orchestration software, and history recording capabilities. Users can run it from both local and remote machines. Geoweaver aims to make data processing workflows manageable for non-coder scientists and preserve model run history. It offers features like progress storage, organization, SSH connection to external servers, and a web UI with Python support.

novelai-bot

This repository contains a drawing plugin based on NovelAI. It allows users to draw images, change models, samplers, and image sizes, use advanced request syntax, customize prohibited word lists, automatically translate Chinese keywords, automatically retract messages after a certain time, and connect to private servers. Thanks to Koishi's plugin mechanism, users can achieve more functionalities by combining it with other plugins, such as multi-platform support, rate limiting, context management, and multi-language support.

mcp-router

MCP Router is a desktop application that simplifies the management of Model Context Protocol (MCP) servers. It is a universal tool that allows users to connect to any MCP server, supports remote or local servers, and provides data portability by enabling easy export and import of MCP configurations. The application prioritizes privacy and security by storing all data locally, ensuring secure credentials, and offering complete control over server connections and data. Transparency is maintained through auditable source code, verifiable privacy practices, and community-driven security improvements and audits.

cria

Cria is a Python library designed for running Large Language Models with minimal configuration. It provides an easy and concise way to interact with LLMs, offering advanced features such as custom models, streams, message history management, and running multiple models in parallel. Cria simplifies the process of using LLMs by providing a straightforward API that requires only a few lines of code to get started. It also handles model installation automatically, making it efficient and user-friendly for various natural language processing tasks.

ChuanhuChatGPT

Chuanhu Chat is a user-friendly web graphical interface that provides various additional features for ChatGPT and other language models. It supports GPT-4, file-based question answering, local deployment of language models, online search, agent assistant, and fine-tuning. The tool offers a range of functionalities including auto-solving questions, online searching with network support, knowledge base for quick reading, local deployment of language models, GPT 3.5 fine-tuning, and custom model integration. It also features system prompts for effective role-playing, basic conversation capabilities with options to regenerate or delete dialogues, conversation history management with auto-saving and search functionalities, and a visually appealing user experience with themes, dark mode, LaTeX rendering, and PWA application support.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.