Best AI tools for < Generate Test Cases >

20 - AI Tool Sites

AI Generated Test Cases

AI Generated Test Cases is an innovative tool that leverages artificial intelligence to automatically generate test cases for software applications. By utilizing advanced algorithms and machine learning techniques, this tool can efficiently create a comprehensive set of test scenarios to ensure the quality and reliability of software products. With AI Generated Test Cases, software development teams can save time and effort in the testing phase, leading to faster release cycles and improved overall productivity.

RoostGPT

RoostGPT is an AI-driven testing copilot that offers automated test case generation and code scanning services. It leverages generative AI and Large Language Models (LLMs) to provide software testing solutions. RoostGPT is described as trusted by global financial institutions and is marketed as delivering 100% test coverage. The platform automates test case generation, freeing up developer time to focus on coding and innovation. It aims to improve test accuracy and coverage by identifying overlooked edge cases and detecting static vulnerabilities in artifacts like source code and logs. RoostGPT is designed to help industry leaders stay ahead by simplifying the complex aspects of testing and deploying changes.

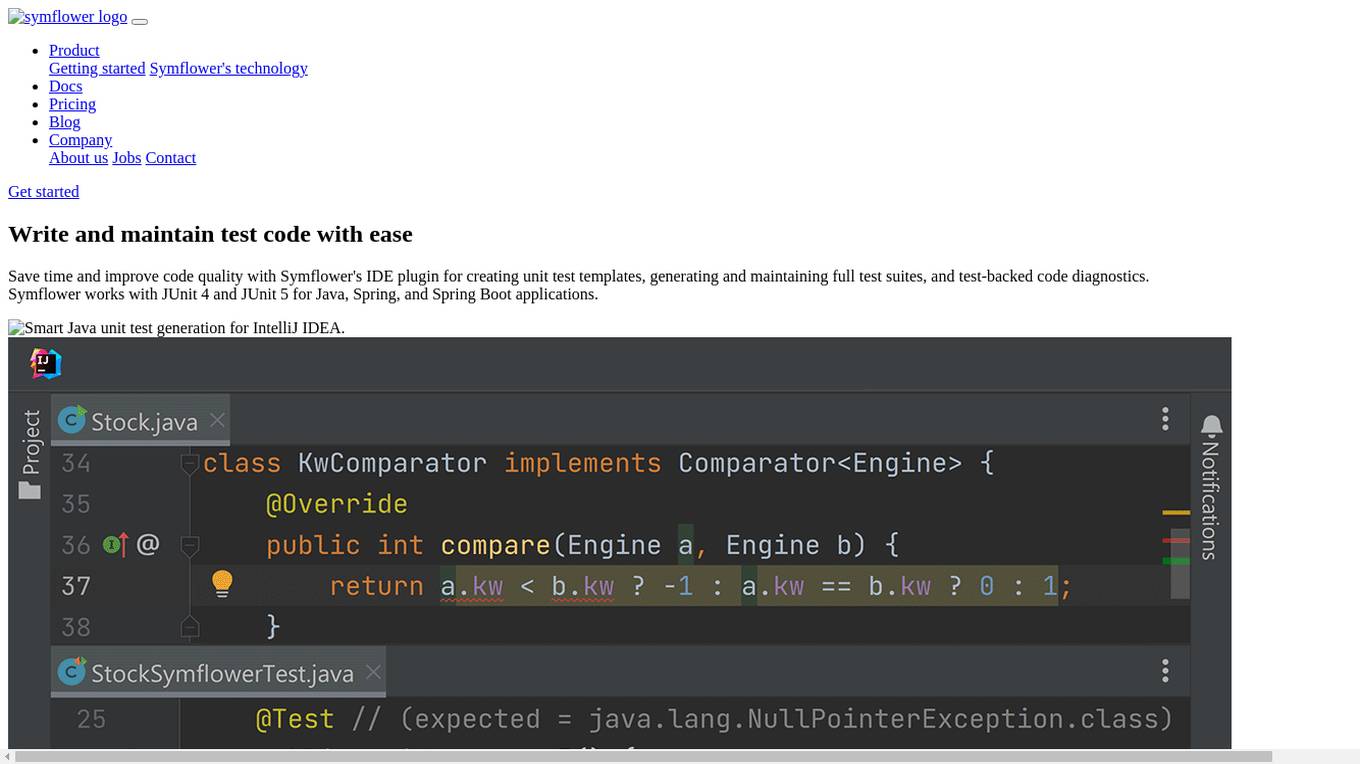

Symflower

Symflower is an AI-powered unit test generator for Java applications. It helps developers write and maintain test code with ease, saving time and improving code quality. Symflower works with JUnit 4 and JUnit 5 for Java, Spring, and Spring Boot applications.

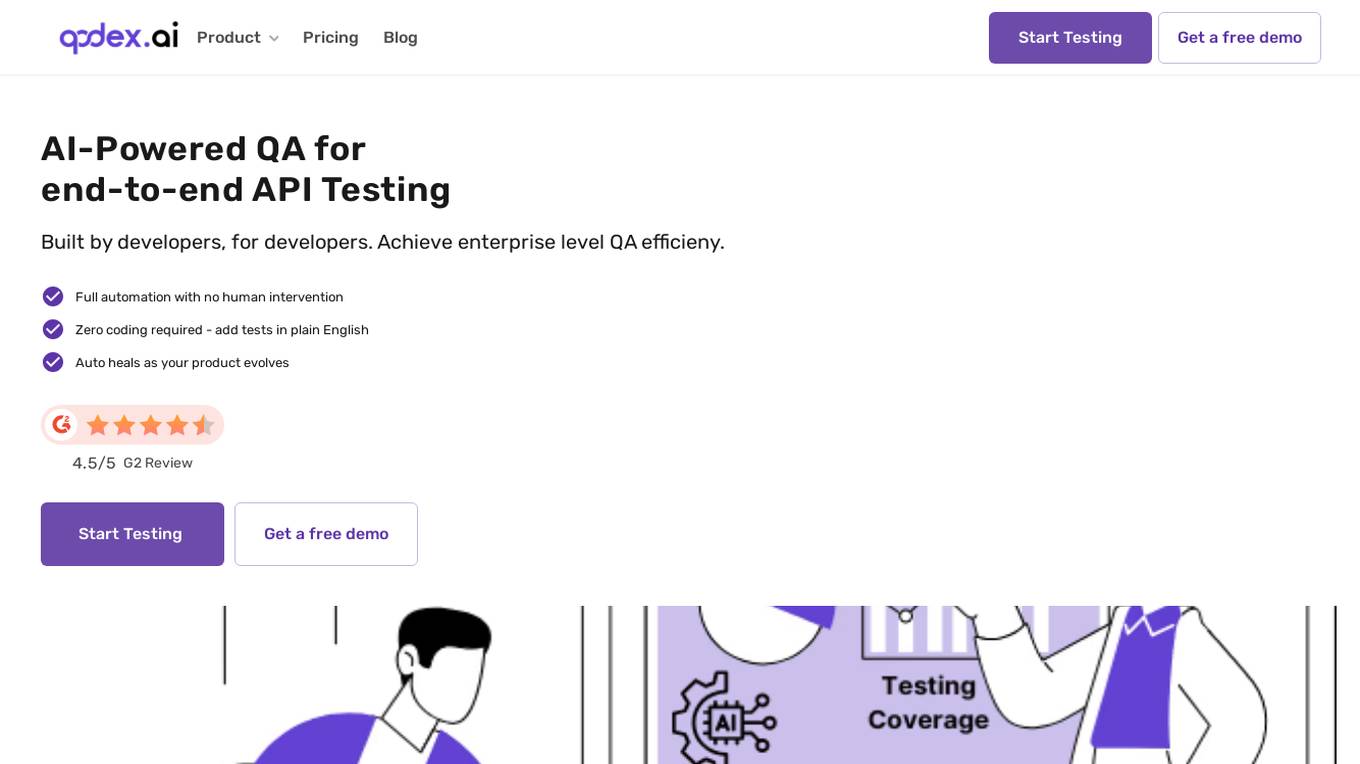

Qodex

Qodex is an AI-powered QA tool designed for end-to-end API testing, built by developers for developers. It offers enterprise-level QA efficiency with full automation and zero coding required. The tool auto-generates tests in plain English and adapts as the product evolves. Qodex also provides interactive API documentation and seamless integration, making it a cost-effective solution for enhancing productivity and efficiency in software testing.

Teste.ai

Teste.ai is an AI-powered platform that allows users to create software testing scenarios and test cases using top-notch artificial intelligence technology. The platform offers a variety of tools based on AI to accelerate the software quality testing journey, helping testers cover a wide range of requirements with a vast array of test scenarios efficiently. Teste.ai's intelligent features enable users to save time and enhance efficiency in creating, executing, and managing software tests. With advanced AI integration, the platform provides automatic generation of test cases based on software documentation or specific requirements, ensuring comprehensive test coverage and precise responses to testing queries.

Tusk

Tusk is an AI testing platform that helps users with API, unit, and integration testing. It leverages AI-enabled tests to prevent regressions, cover edge cases, and generate verified test cases for faster and safer shipping. Tusk offers features like shift-left testing, autonomous testing, self-healing tests, and code coverage enforcement. It is trusted by engineering leaders at fast-growing companies and aims to halve engineering release cycles by catching bugs early. The platform is designed to provide high-quality tests to reach coverage goals and increase code quality.

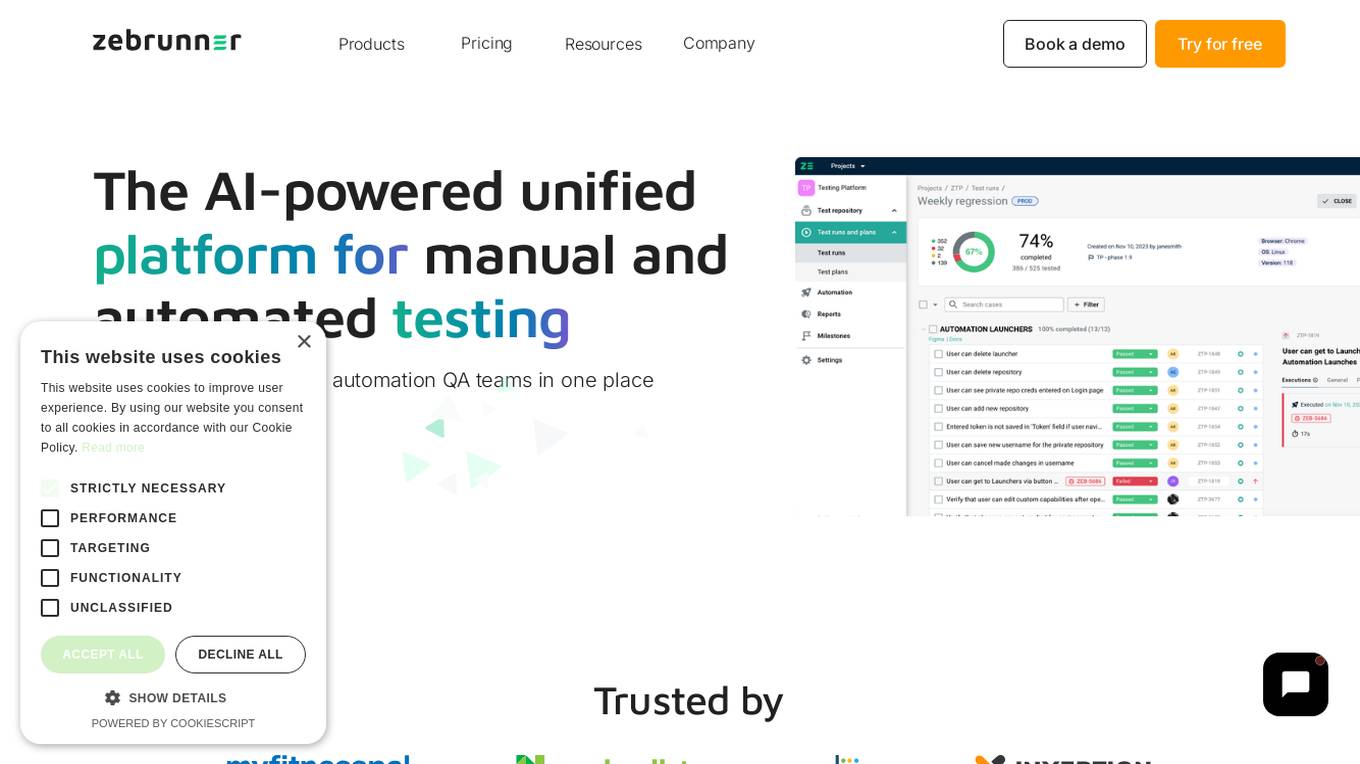

Zebrunner

Zebrunner is an AI-powered unified platform for manual and automated testing, designed to bring manual and automation QA teams together in one place. It offers test management, automation reporting, and test case management, and uses AI to generate new test cases, autocomplete existing ones, and categorize failures. Zebrunner provides a clean and intuitive UI, powerful reporting, rich integrations, and 24/7 support for efficient testing processes. It also offers customizable dashboards, shareable reports, and integrations with Jira and other SDLC tools for streamlined workflows.

TestArmy

TestArmy is an AI-driven software testing platform that offers an army of testing agents to help users achieve software quality by balancing cost, speed, and quality. The platform leverages AI agents to generate Gherkin tests based on user specifications, automate test execution, and provide detailed logs and suggestions for test maintenance. TestArmy is designed for rapid scaling and adaptability to changes in the codebase, making it a valuable tool for both technical and non-technical users.
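To make "Gherkin tests based on user specifications" concrete, here is a minimal sketch of what the output side of such a tool might look like: a structured scenario rendered as Gherkin text. The `Scenario` class and `to_gherkin` function are hypothetical names invented for this illustration; they are not part of TestArmy or any other tool listed here, and no AI is involved in this step.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """A hypothetical, minimal description of one behavior to test."""
    name: str
    given: list[str]
    when: list[str]
    then: list[str]


def _steps(keyword: str, steps: list[str]) -> list[str]:
    # The first step in a group uses its keyword; later ones use "And".
    return [f"    {keyword if i == 0 else 'And'} {s}" for i, s in enumerate(steps)]


def to_gherkin(feature: str, scenarios: list[Scenario]) -> str:
    """Render scenarios as Gherkin feature-file text."""
    lines = [f"Feature: {feature}"]
    for sc in scenarios:
        lines.append("")
        lines.append(f"  Scenario: {sc.name}")
        lines += _steps("Given", sc.given)
        lines += _steps("When", sc.when)
        lines += _steps("Then", sc.then)
    return "\n".join(lines)


if __name__ == "__main__":
    login = Scenario(
        name="Valid login",
        given=["a registered user", "the login page is open"],
        when=["the user submits valid credentials"],
        then=["the dashboard is shown"],
    )
    print(to_gherkin("Login", [login]))
```

The resulting text can be saved as a `.feature` file and executed by a Gherkin-compatible runner, provided matching step definitions exist.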

QA.tech

QA.tech is an advanced end-to-end testing application designed for B2B SaaS companies. It offers AI-powered testing solutions to help businesses ship faster, cut costs, and improve testing efficiency. The application features an AI agent named Jarvis that automates the testing process by scanning web apps, creating detailed memory structures, generating tests based on user interactions, and continuously testing for defects. QA.tech provides developer-friendly bug reports, supports various web frameworks, and integrates with CI/CD pipelines. It aims to revolutionize the testing process by offering faster, smarter, and more efficient testing solutions.

Autify

Autify is an AI testing company focused on solving challenges in automation testing. They aim to make software testing faster and easier, enabling companies to release faster and maintain application stability. Their flagship product, Autify No Code, allows anyone to create automated end-to-end tests for applications. Zenes, their new product, simplifies the process of creating new software tests through AI. Autify is dedicated to innovation in the automation testing space and is trusted by leading organizations.

KushoAI

KushoAI is an AI-powered tool designed to help software developers build bug-free software efficiently. It transforms API specs into exhaustive test suites that integrate into the CI/CD pipeline. With KushoAI, developers can generate test suites, receive AI-analyzed test results, and modify code based on real-time reports. The tool can be customized to a company's context and understands natural language prompts to produce test case code. KushoAI aims to maximize test coverage in minutes, save hours of manual effort, and adapt to the codebase so that test cases are not missed.

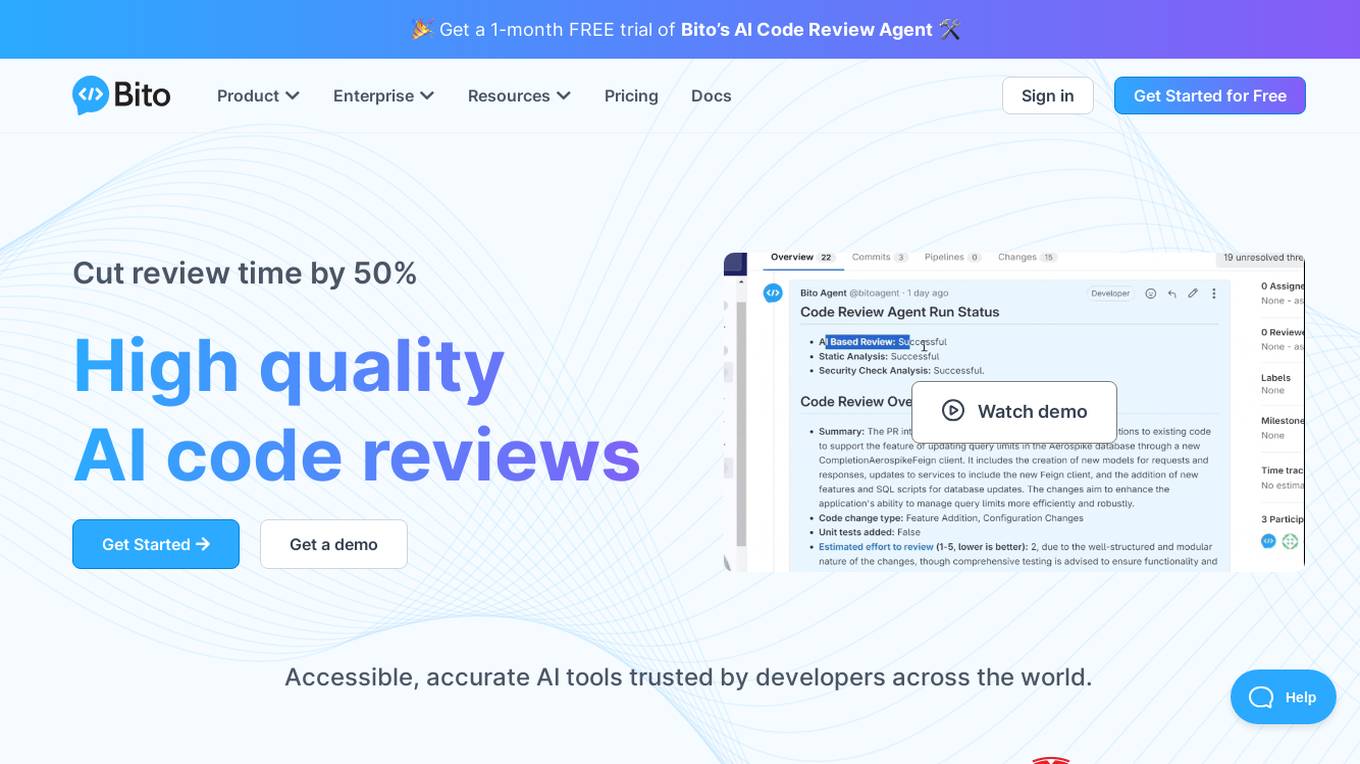

Bito AI

Bito AI is an AI-powered code review tool that helps developers write better code faster. It provides real-time feedback on code quality, security, and performance, and can also generate test cases and documentation. Bito AI is used by developers across the world and is reported to reduce review time by 50%.

Imandra

Imandra is a company that provides automated logical reasoning for Large Language Models (LLMs). Imandra's technology allows LLMs to build mental models and reason about them, unlocking the potential of generative AI for industries where correctness and compliance matter. Imandra's platform is used by leading financial firms, the US Air Force, and DARPA.

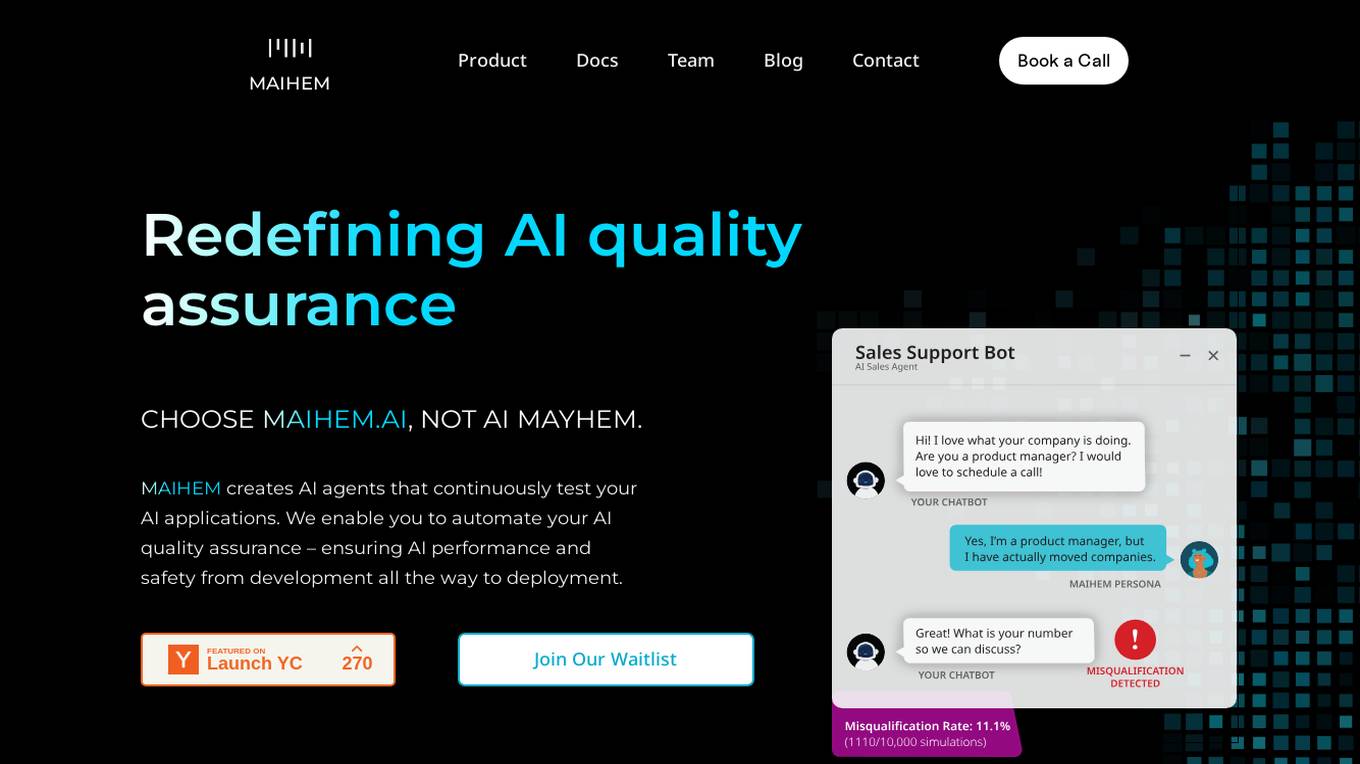

MAIHEM

MAIHEM is an AI-powered quality assurance platform that helps businesses test and improve the performance and safety of their AI applications. It automates the testing process, generates realistic test cases, and provides comprehensive analytics to help businesses identify and fix potential issues. MAIHEM is used by a variety of businesses, including those in the customer support, healthcare, education, and sales industries.

Confident AI

Confident AI is an open-source evaluation infrastructure for Large Language Models (LLMs). It provides a centralized platform for evaluating LLM applications and surfacing weaknesses in an LLM implementation. With Confident AI, companies can define ground truths to check that their LLM behaves as expected, evaluate performance against expected outputs to pinpoint areas for iteration, and use diff tracking to guide them toward the best-performing LLM stack. The platform offers analytics to identify areas of focus, along with features such as A/B testing, evaluation, output classification, a reporting dashboard, dataset generation, and detailed monitoring to help productionize LLMs with confidence.
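The idea of evaluating an LLM against ground truths can be sketched in a few lines. This is an illustrative harness only, not Confident AI's API: the `evaluate` function, the case format, and the `fake_model` stub are all assumptions made for the example, and the metric is a simple case-insensitive exact match rather than anything a real evaluation platform would rely on alone.

```python
from typing import Callable


def evaluate(cases: list[tuple[str, str]], generate: Callable[[str], str]):
    """Run each prompt through `generate` and compare with its expected output.

    Returns the pass rate and a per-case report (hypothetical format).
    """
    results = []
    for prompt, expected in cases:
        actual = generate(prompt)
        # Assumed metric: exact match after trimming whitespace and lowercasing.
        passed = actual.strip().lower() == expected.strip().lower()
        results.append(
            {"prompt": prompt, "expected": expected, "actual": actual, "passed": passed}
        )
    pass_rate = sum(r["passed"] for r in results) / len(results) if results else 0.0
    return pass_rate, results


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call, so the sketch runs offline."""
    canned = {"Capital of France?": "Paris", "2 + 2?": "5"}
    return canned.get(prompt, "")


if __name__ == "__main__":
    ground_truths = [("Capital of France?", "paris"), ("2 + 2?", "4")]
    rate, report = evaluate(ground_truths, fake_model)
    print(f"pass rate: {rate:.0%}")
    for row in report:
        if not row["passed"]:
            print("needs iteration:", row["prompt"])
```

Failed cases in the report are the "areas for iteration"; in practice the exact-match check would be replaced by a metric suited to free-form text.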

DocuWriter.ai

DocuWriter.ai is an AI-powered tool that helps developers automate code documentation, testing, and refactoring. It uses natural language processing and machine learning algorithms to generate accurate and consistent documentation, test suites, and optimized code. DocuWriter.ai integrates with popular programming languages and development environments, making it easy for developers to improve the quality and efficiency of their code.

Octomind

Octomind is an AI-powered QA platform that provides automated end-to-end testing for web applications. It offers features such as self-healing tests, visual debugging, and stable test runs. Octomind is designed for early-stage and fast-growing SaaS or AI startups with small engineering teams, aiming to improve product quality and speed by catching regressions before they reach users. The platform is trusted by thousands of engineering teams worldwide and is SOC-2 certified, ensuring privacy and security.

Espresso Lab

Espresso Lab is a cutting-edge AI tool designed to empower software engineers by providing AI-generated UI tests, effortless element identification, and comprehensive test cases. It leverages GPT-4 technology to streamline the QA process and enhance productivity. The tool offers flexible pricing options and innovative solutions to push the boundaries of software engineering.

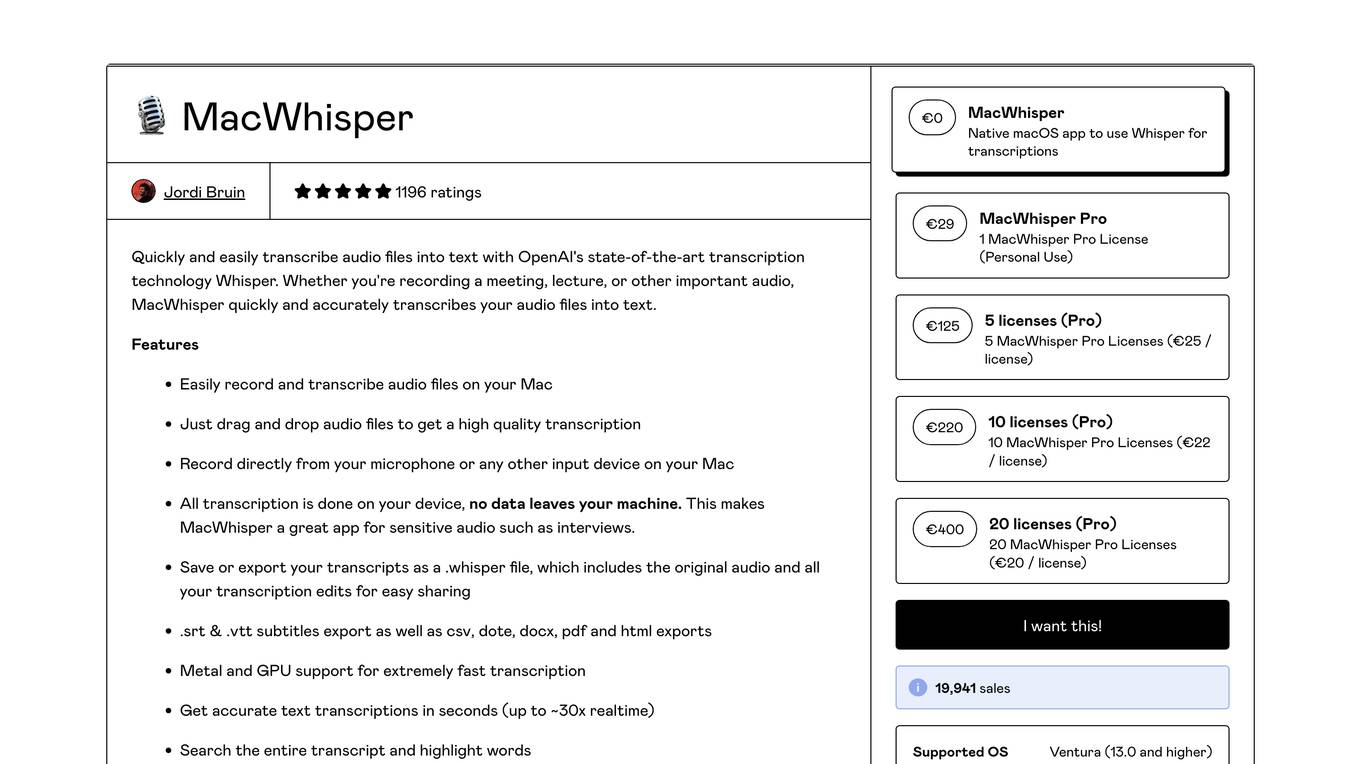

MacWhisper

MacWhisper is a native macOS application that utilizes OpenAI's Whisper technology for transcribing audio files into text. It offers a user-friendly interface for recording, transcribing, and editing audio, making it suitable for various use cases such as transcribing meetings, lectures, interviews, and podcasts. The application is designed to protect user privacy by performing all transcriptions locally on the device, ensuring that no data leaves the user's machine.

Syntho

Syntho is a self-service AI-generated synthetic data platform that offers a comprehensive solution for generating synthetic data for various purposes. It provides tools for de-identification, test data management, rule-based synthetic data generation, data masking, and more. With a focus on privacy and accuracy, Syntho enables users to create synthetic data that mirrors real production data while ensuring compliance with regulations and data privacy standards. The platform offers a range of features and use cases tailored to different industries, including healthcare, finance, and public organizations.

9 - Open Source AI Tools

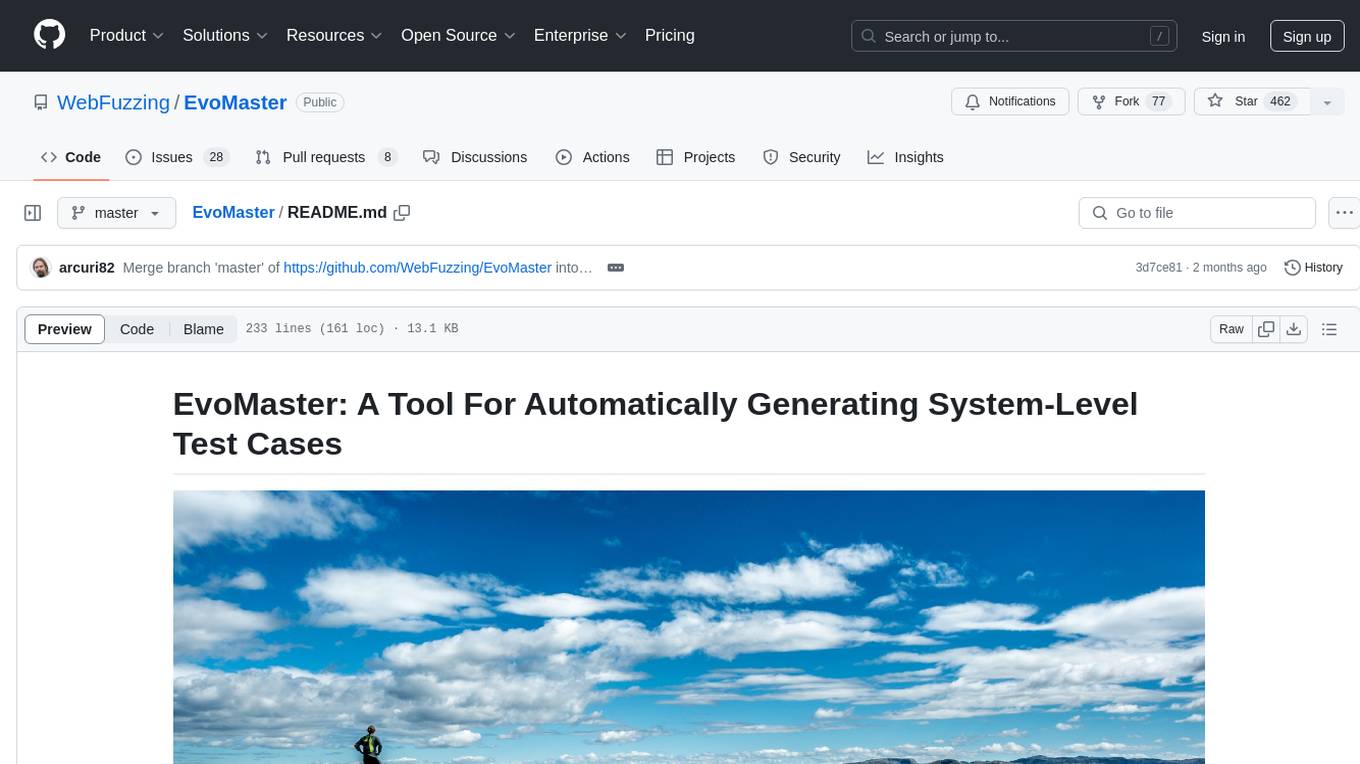

EvoMaster

EvoMaster is an open-source AI-driven tool that automatically generates system-level test cases for web/enterprise applications. It uses an evolutionary algorithm and dynamic program analysis to evolve test cases, maximizing code coverage and fault detection. It supports REST, GraphQL, and RPC APIs, with whitebox testing for JVM-compiled APIs. The tool generates JUnit tests in Java or Kotlin, focusing on fault detection, self-contained tests, SQL handling, and authentication. Known limitations include manual driver creation for whitebox testing and longer execution times for better results. EvoMaster has been funded by grants from the European Research Council (ERC) and the Research Council of Norway (RCN).
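As a rough intuition for how an evolutionary algorithm can evolve test cases toward higher coverage, the toy sketch below mutates a small suite of inputs and keeps a mutant whenever it covers at least as many branches as its parent. This is a simple (1+1)-style loop written for illustration; it is not EvoMaster's actual algorithm, and `classify`, `coverage`, `mutate`, and `evolve` are hypothetical names.

```python
import random


def classify(x: int, y: int) -> set[str]:
    """Toy system under test; returns the IDs of the branches it executed."""
    hit = set()
    if x > 100:
        hit.add("x_large")
    else:
        hit.add("x_small")
    if y < 0:
        hit.add("y_negative")
    if x == y:
        hit.add("equal")
    return hit


def coverage(suite: list[tuple[int, int]]) -> set[str]:
    """Union of branch IDs hit by every input in the suite."""
    covered = set()
    for x, y in suite:
        covered |= classify(x, y)
    return covered


def mutate(suite: list[tuple[int, int]], rng: random.Random) -> list[tuple[int, int]]:
    """Copy the suite and nudge one coordinate of one randomly chosen input."""
    child = list(suite)
    i = rng.randrange(len(child))
    x, y = child[i]
    if rng.random() < 0.5:
        x += rng.randint(-50, 50)
    else:
        y += rng.randint(-50, 50)
    child[i] = (x, y)
    return child


def evolve(generations: int = 200, suite_size: int = 3, seed: int = 0):
    """Evolve a suite; a mutant replaces its parent if coverage does not drop."""
    rng = random.Random(seed)
    best = [(rng.randint(0, 10), rng.randint(0, 10)) for _ in range(suite_size)]
    for _ in range(generations):
        child = mutate(best, rng)
        if len(coverage(child)) >= len(coverage(best)):
            best = child
    return best


if __name__ == "__main__":
    suite = evolve()
    print("suite:", suite)
    print("branches covered:", sorted(coverage(suite)))
```

Accepting equal-coverage mutants lets the search drift across plateaus, which is one reason longer runs tend to find more branches.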

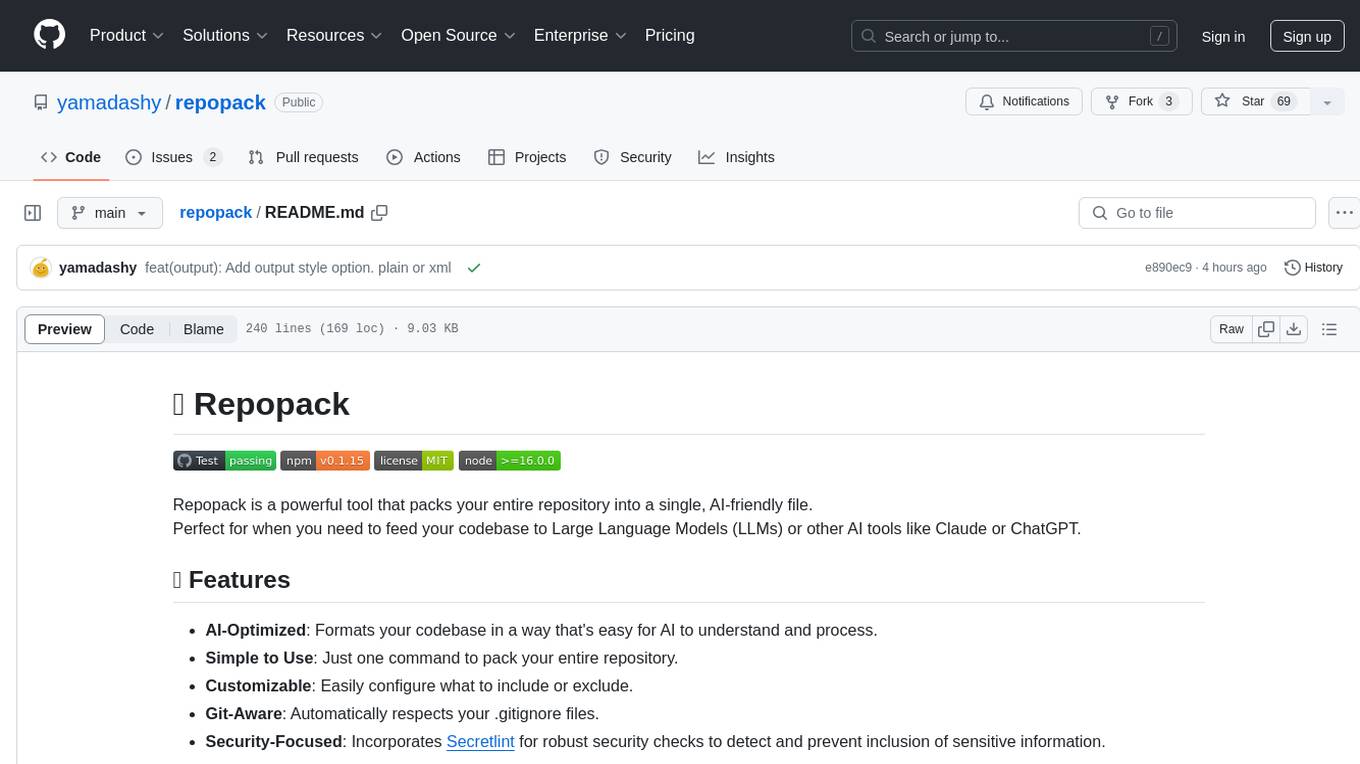

repopack

Repopack is a tool that packs your entire repository into a single, AI-friendly file. It optimizes your codebase for AI comprehension, is simple to use with customizable options, and respects .gitignore files for security. The tool generates a packed file with clear separators and AI-oriented explanations, making it suitable for use with generative AI tools like Claude or ChatGPT. Repopack offers command line options, configuration settings, and multiple methods for setting ignore patterns to exclude specific files or directories during the packing process. It includes features like comment removal for supported file types and a security check using Secretlint to detect sensitive information in files.
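The core idea of packing a repository into one file with separators and ignore patterns can be sketched as follows. This is not Repopack's implementation or output format: `pack_repo`, the `==== File: ... ====` separator, and the use of simple `fnmatch` glob patterns (rather than full .gitignore semantics) are assumptions made for the illustration.

```python
from fnmatch import fnmatch
from pathlib import Path


def pack_repo(root: str, ignore: list[str]) -> str:
    """Concatenate text files under `root`, each preceded by a separator line.

    `ignore` holds glob patterns matched against each file's relative path.
    """
    root_path = Path(root)
    parts = []
    for path in sorted(root_path.rglob("*")):
        if not path.is_file():
            continue
        rel = path.relative_to(root_path).as_posix()
        if any(fnmatch(rel, pattern) for pattern in ignore):
            continue  # excluded by an ignore pattern
        try:
            text = path.read_text(encoding="utf-8")
        except UnicodeDecodeError:
            continue  # skip files that are not UTF-8 text (likely binary)
        parts.append(f"==== File: {rel} ====\n{text}")
    return "\n".join(parts)


if __name__ == "__main__":
    # Pack the current directory, skipping logs and anything under .git/.
    print(pack_repo(".", ["*.log", ".git/*"]))
```

The packed string can then be pasted into, or uploaded to, a chat-based assistant as a single piece of context.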

EvoMaster

EvoMaster is an open-source AI-driven tool that automatically generates system-level test cases for web/enterprise applications. It uses an Evolutionary Algorithm and Dynamic Program Analysis to evolve test cases, maximizing code coverage and fault detection. The tool supports REST, GraphQL, and RPC APIs, with whitebox testing for JVM-compiled languages. It generates JUnit tests, detects faults, handles SQL databases, and supports authentication. EvoMaster has been funded by the European Research Council and the Research Council of Norway.

ianvs

Ianvs is a distributed synergy AI benchmarking project incubated in KubeEdge SIG AI. It aims to test the performance of distributed synergy AI solutions following recognized standards, providing end-to-end benchmark toolkits, test environment management tools, test case control tools, and benchmark presentation tools. It also collaborates with other organizations to establish comprehensive benchmarks and related applications. The architecture includes critical components like Test Environment Manager, Test Case Controller, Generation Assistant, Simulation Controller, and Story Manager. Ianvs documentation covers quick start, guides, dataset descriptions, algorithms, user interfaces, stories, and roadmap.

NotHotDog

NotHotDog is an open-source platform for testing, evaluating, and simulating AI agents. It offers a robust framework for generating test cases, running conversational scenarios, and analyzing agent performance.

rhesis

Rhesis is a test management platform designed for Gen AI teams, offering tools to create, manage, and execute test cases for generative AI applications. It targets the robustness, reliability, and compliance of AI systems through features like test set management, automated test generation, edge case discovery, compliance validation, integration capabilities, and performance tracking. The platform is open source, emphasizing community-driven development, transparency, an extensible architecture, and democratizing AI safety. It includes components such as backend services, frontend applications, an SDK for developers, worker services, chatbot applications, and Polyphemus, an uncensored LLM service. Rhesis helps users address challenges unique to testing generative AI applications, such as non-deterministic outputs, hallucinations, edge cases, ethical concerns, and compliance requirements.

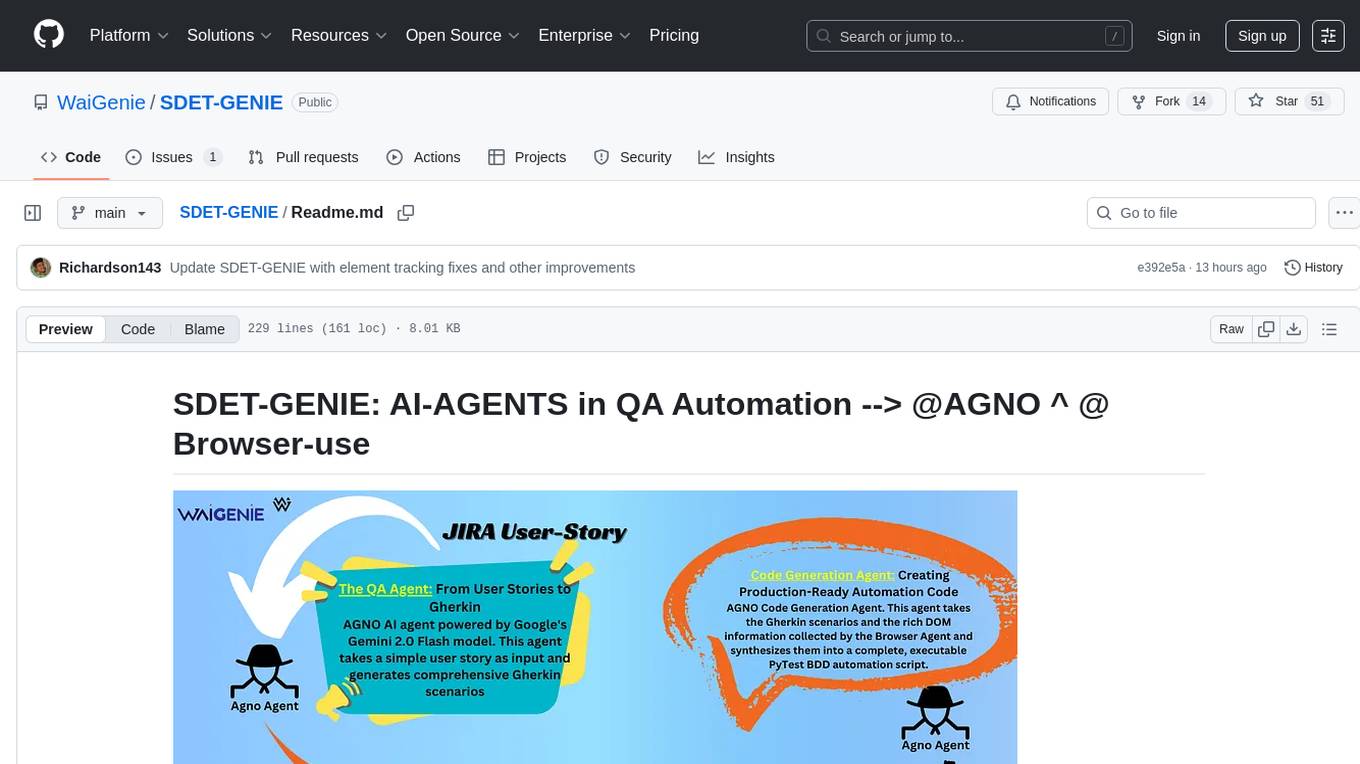

SDET-GENIE

SDET-GENIE is a cutting-edge, AI-powered Quality Assurance (QA) automation framework that revolutionizes the software testing process. Leveraging a suite of specialized AI agents, SDET-GENIE transforms rough user stories into comprehensive, executable test automation code through a seamless end-to-end process. The framework integrates five powerful AI agents working in sequence: User Story Enhancement Agent, Manual Test Case Agent, Gherkin Scenario Agent, Browser Agent, and Code Generation Agent. It supports multiple testing frameworks and provides advanced browser automation capabilities with AI features.

auto-dev

AutoDev Xiuper is an AI-native, multi-agent development platform built on Kotlin Multiplatform. It covers all seven phases of the software development lifecycle and runs on 8+ platforms. The platform provides a unified architecture for writing code once and running it anywhere, with specialized agents for each phase of development. Supported targets include IntelliJ IDEA, VS Code, CLI, Web, Desktop, Android, iOS, and Server. The platform also offers multi-LLM support, the DevIns language for workflow automation, MCP support for an extensible tool ecosystem, and code intelligence for multiple programming languages.

UCAgent

UCAgent is an AI-powered automated unit test (UT) verification agent for chip design. It automates the chip verification workflow, supports functional and code coverage analysis, keeps documentation, code, and reports consistent, and collaborates with mainstream code agents via MCP. It offers three intelligent interaction modes and requires Python 3.11+, Linux or macOS, 4GB+ of memory, and access to an AI model API. Users can clone the repository, install dependencies, configure qwen, and start verification. UCAgent supports various options for improving verification quality, and basic operations are available through TUI shortcuts and stage color indicators. It also provides documentation build and preview using MkDocs, PDF manual build using Pandoc + XeLaTeX, and resources for further help and contribution.

20 - OpenAI GPTs

Test Case GPT

I will provide guidance on testing, verification, and validation for QA roles.

Feature Ticket Generator

This GPT writes tickets for software features. It uses Gherkin to specify scenarios. @cxmacedo

INSIGHT Business SIM

The future of business education: Generate and test ideas in a complex global market simulation, populated by autonomous agents. Powered by the MANNS engine for unparalleled entity autonomy and simulated market forces.

Assistente Codificação TUSS Exames com OCR

Portuguese OCR for medical test coding, outputs in table format.

TuringGPT

The Turing Test, first named the imitation game by Alan Turing in 1950, is a measure of a machine's capacity to demonstrate intelligence that's either equal to or indistinguishable from human intelligence.