CodeGPT

A CLI written in Go that writes git commit messages or produces a brief code review for you using ChatGPT AI (gpt-4o, gpt-4-turbo, gpt-3.5-turbo models) and automatically installs a git prepare-commit-msg hook.

Stars: 1350

CodeGPT is a CLI tool written in Go that helps you write git commit messages or produce a brief code review using ChatGPT AI (gpt-3.5-turbo, gpt-4 models) and automatically installs a git prepare-commit-msg hook. It supports Azure OpenAI Service or OpenAI API, the conventional commits specification, the Git prepare-commit-msg hook, customizing the number of lines of context in diffs, excluding files from the git diff command, translating commit messages into different languages, using socks or custom network HTTP proxies, specifying model lists, and doing brief code reviews.

README:


A CLI written in Go that writes git commit messages or provides a code review summary for you using ChatGPT AI (gpt-4o, gpt-4 models) and automatically installs a git prepare-commit-msg hook.

- Supports Azure OpenAI Service, OpenAI API, Gemini, Anthropic, Ollama, Groq, and OpenRouter.

- Supports conventional commits specification.

- Supports Git prepare-commit-msg Hook, see the Git Hooks documentation.

- Supports customizing generated diffs with n lines of context (the default is three).

- Supports excluding files from the git diff command.

- Supports commit message translation into another language (supports en, zh-tw, or zh-cn).

- Supports socks proxy or custom network HTTP proxy.

- Supports model lists like gpt-4, gpt-4o, etc.

- Supports generating a brief code review.
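The diff-related features above (n lines of context, excluded files) map naturally onto standard `git diff` options. The following is a minimal sketch, not CodeGPT's actual implementation: `buildDiffArgs` is a hypothetical helper that assumes the staged diff is produced with `--unified=<n>` and git's `:(exclude)` pathspec syntax.

```go
package main

import (
	"fmt"
	"strconv"
)

// buildDiffArgs returns arguments for `git diff` over staged changes.
// contextLines becomes --unified=<n>; each entry in exclude becomes an
// ":(exclude)" pathspec. Hypothetical helper, not taken from CodeGPT.
func buildDiffArgs(contextLines int, exclude []string) []string {
	args := []string{"diff", "--staged", "--unified=" + strconv.Itoa(contextLines)}
	if len(exclude) > 0 {
		// Pathspecs follow "--"; "." selects everything, then exclusions apply.
		args = append(args, "--", ".")
		for _, pattern := range exclude {
			args = append(args, ":(exclude)"+pattern)
		}
	}
	return args
}

func main() {
	// Prints the argument list that would be passed to the git binary.
	fmt.Println(buildDiffArgs(3, []string{"go.sum", "vendor"}))
}
```

The resulting slice could be handed to `exec.Command("git", args...)`; building it separately keeps the option handling easy to check without running git.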

Install from Homebrew on macOS

brew tap appleboy/tap

brew install codegpt

Install from Chocolatey on Windows

choco install codegpt

The pre-compiled binaries can be downloaded from the release page. Change the binary permissions to 755 and copy the binary to the system bin directory. Use the codegpt command as shown below.

$ codegpt version

version: v0.4.3 commit: xxxxxxx

Install from source code:

go install github.com/appleboy/CodeGPT/cmd/codegpt@latest

Please first create your OpenAI API Key. The OpenAI Platform allows you to generate a new API Key.

An environment variable is a variable that is set on your operating system, rather than within your application. It consists of a name and value. We recommend that you set the name of the variable to OPENAI_API_KEY.

See the Best Practices for API Key Safety.

export OPENAI_API_KEY=sk-xxxxxxx

or store your API key in a custom config file.

codegpt config set openai.api_key sk-xxxxxxx

This will create a .codegpt.yaml file in your home directory ($HOME/.config/codegpt/.codegpt.yaml). The following options are available.

| Option | Description |
|---|---|
| openai.base_url | Replaces the default base URL (https://api.openai.com/v1). |
| openai.api_key | API key, generated from the OpenAI platform page. |
| openai.org_id | Identifier for your organization, sometimes used in API requests. See organization settings. Only for the OpenAI service. |
| openai.model | Default model is gpt-4o; you can change it to another custom model (for example, from the Groq or OpenRouter provider). |
| openai.proxy | HTTP/HTTPS client proxy. |
| openai.socks | SOCKS client proxy. |
| openai.timeout | Default HTTP timeout is 10s (ten seconds). |
| openai.max_tokens | Default max tokens is 300. See reference max_tokens. |
| openai.temperature | Default temperature is 1. See reference temperature. |
| git.diff_unified | Generate diffs with <n> lines of context; default is 3. |
| git.exclude_list | Excludes files from the git diff command. |
| openai.provider | Default service provider is openai; you can change it to azure. |
| output.lang | Default language is en; available languages are zh-tw, zh-cn, and ja. |
| openai.top_p | Default top_p is 1.0. See reference top_p. |
| openai.frequency_penalty | Default frequency_penalty is 0.0. See reference frequency_penalty. |
| openai.presence_penalty | Default presence_penalty is 0.0. See reference presence_penalty. |
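As a rough illustration of how the documented defaults fit together, the sketch below collects a subset of them in a Go struct and applies `key value` overrides in the style of `codegpt config set`. The `Config` type, `defaultConfig`, and `Set` are hypothetical names for this example only, and the assumption that the timeout is written as a Go duration string (such as `10s`) is inferred from the table, not confirmed by the source.

```go
package main

import (
	"fmt"
	"strconv"
	"time"
)

// Config holds a subset of the documented options.
// Hypothetical type; CodeGPT's real configuration code may differ.
type Config struct {
	Provider    string
	BaseURL     string
	Model       string
	Timeout     time.Duration
	MaxTokens   int
	Temperature float64
	TopP        float64
	DiffUnified int
	Lang        string
}

// defaultConfig returns the default values listed in the options table.
func defaultConfig() Config {
	return Config{
		Provider:    "openai",
		BaseURL:     "https://api.openai.com/v1",
		Model:       "gpt-4o",
		Timeout:     10 * time.Second,
		MaxTokens:   300,
		Temperature: 1,
		TopP:        1.0,
		DiffUnified: 3,
		Lang:        "en",
	}
}

// Set applies one override using the documented option names.
func (c *Config) Set(key, value string) error {
	switch key {
	case "openai.provider":
		c.Provider = value
	case "openai.base_url":
		c.BaseURL = value
	case "openai.model":
		c.Model = value
	case "output.lang":
		c.Lang = value
	case "openai.timeout":
		// Assumption: the value is a Go duration string such as "10s".
		d, err := time.ParseDuration(value)
		if err != nil {
			return fmt.Errorf("openai.timeout: %w", err)
		}
		c.Timeout = d
	case "openai.max_tokens":
		n, err := strconv.Atoi(value)
		if err != nil {
			return fmt.Errorf("openai.max_tokens: %w", err)
		}
		c.MaxTokens = n
	case "git.diff_unified":
		n, err := strconv.Atoi(value)
		if err != nil {
			return fmt.Errorf("git.diff_unified: %w", err)
		}
		c.DiffUnified = n
	default:
		return fmt.Errorf("unsupported option %q", key)
	}
	return nil
}

func main() {
	cfg := defaultConfig()
	if err := cfg.Set("openai.model", "llama3-8b-8192"); err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", cfg)
}
```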

Get the API key, endpoint, and model deployments list from the left menu of the Azure Resource Management Portal.

Update your config file.

codegpt config set openai.provider azure

codegpt config set openai.base_url https://xxxxxxxxx.openai.azure.com/

codegpt config set openai.api_key xxxxxxxxxxxxxxxx

codegpt config set openai.model xxxxx-gpt-35-turbo

Support Gemini API Service

To build with the Gemini API, see the Gemini API documentation. Update the provider and api_key in your config file. Please create an API key from the Gemini API page.

codegpt config set openai.provider gemini

codegpt config set openai.api_key xxxxxxx

codegpt config set openai.model gemini-1.5-flash-latest

Support Anthropic API Service

To build with the Anthropic API, see the Anthropic API documentation. Update the provider and api_key in your config file. Please create an API key from the Anthropic API page.

codegpt config set openai.provider anthropic

codegpt config set openai.api_key xxxxxxx

codegpt config set openai.model claude-3-5-sonnet-20241022

See the model list from the Anthropic API documentation.

How to change to Groq API Service

Get an API key from the Groq API Service. Update the base_url and api_key in your config file.

codegpt config set openai.provider openai

codegpt config set openai.base_url https://api.groq.com/openai/v1

codegpt config set openai.api_key gsk_xxxxxxxxxxxxxx

codegpt config set openai.model llama3-8b-8192

GroqCloud currently supports the following models:

You can use the Llama3 model through the Ollama API Service. Update the base_url in your config file.

# pull llama3 8b model

ollama pull llama3

ollama cp llama3 gpt-4o

Try to use the Ollama API Service.

curl http://localhost:11434/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gpt-4o",
    "messages": [
      {
        "role": "user",
        "content": "Hello!"
      }
    ]
  }'

Update the base_url in your config file. You don't need to set the api_key in the config file.

codegpt config set openai.base_url http://localhost:11434/v1

How to change to OpenRouter API Service

See the supported models list. Model usage can be paid by users, developers, or both, and models may shift in availability. You can also fetch models, prices, and limits via the API.

The following example uses a free model, such as meta-llama/llama-3-8b-instruct:free:

codegpt config set openai.provider openai

codegpt config set openai.base_url https://openrouter.ai/api/v1

codegpt config set openai.api_key sk-or-v1-xxxxxxxxxxxxxxxx

codegpt config set openai.model google/learnlm-1.5-pro-experimental:free

To include your app in the openrouter.ai rankings and set how it is shown there, you can set openai.headers in your config file.

codegpt config set openai.headers "HTTP-Referer=https://github.com/appleboy/CodeGPT X-Title=CodeGPT"

- HTTP-Referer: Optional, for including your app on openrouter.ai rankings.

- X-Title: Optional, the title shown for your app in rankings on openrouter.ai.
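The provider sections above all follow the same pattern: an OpenAI-compatible base URL, an API key, a model name, and optionally extra headers. The sketch below shows how such settings could be turned into a chat completions request in Go. It is an illustration under stated assumptions, not CodeGPT's code: `parseHeaders` and `newChatRequest` are hypothetical helpers, the header string is assumed to be whitespace-separated `Key=Value` pairs as in the example command, and the request is only built, never sent.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"strings"
)

// parseHeaders splits whitespace-separated Key=Value pairs into an
// http.Header. Values containing spaces are not supported by this sketch.
func parseHeaders(raw string) (http.Header, error) {
	h := http.Header{}
	for _, pair := range strings.Fields(raw) {
		key, value, ok := strings.Cut(pair, "=")
		if !ok || key == "" {
			return nil, fmt.Errorf("invalid header %q, want Key=Value", pair)
		}
		h.Set(key, value)
	}
	return h, nil
}

type message struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

type chatRequest struct {
	Model    string    `json:"model"`
	Messages []message `json:"messages"`
}

// newChatRequest builds a POST to <baseURL>/chat/completions with the same
// JSON shape as the curl example for the Ollama endpoint.
func newChatRequest(baseURL, apiKey, model, prompt string, extra http.Header) (*http.Request, error) {
	body, err := json.Marshal(chatRequest{
		Model:    model,
		Messages: []message{{Role: "user", Content: prompt}},
	})
	if err != nil {
		return nil, err
	}
	endpoint := strings.TrimRight(baseURL, "/") + "/chat/completions"
	req, err := http.NewRequest(http.MethodPost, endpoint, bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	if apiKey != "" {
		// Local services such as Ollama do not need a key.
		req.Header.Set("Authorization", "Bearer "+apiKey)
	}
	for key, values := range extra {
		for _, v := range values {
			req.Header.Add(key, v)
		}
	}
	return req, nil
}

func main() {
	headers, err := parseHeaders("HTTP-Referer=https://github.com/appleboy/CodeGPT X-Title=CodeGPT")
	if err != nil {
		panic(err)
	}
	req, err := newChatRequest("https://openrouter.ai/api/v1", "sk-or-v1-xxxxxxxxxxxxxxxx",
		"meta-llama/llama-3-8b-instruct:free", "Hello!", headers)
	if err != nil {
		panic(err)
	}
	// The request is only constructed here; nothing is sent over the network.
	fmt.Println(req.Method, req.URL)
}
```

Switching providers in this sketch only changes the `baseURL`, key, and model arguments, which mirrors how the config commands above differ from one service to the next.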

There are two methods for generating a commit message using the codegpt command: CLI mode and Git Hook.

You can call codegpt directly to generate a commit message for your staged changes:

git add <files...>

codegpt commit --preview

The commit message is shown below.

Summarize the commit message use gpt-4o model

We are trying to summarize a git diff

We are trying to summarize a title for pull request

================Commit Summary====================

feat: Add preview flag and remove disableCommit flag in commit command and template file.

- Add a `preview` flag to the `commit` command

- Remove the `disbaleCommit` flag from the `prepare-commit-msg` template file

==================================================

Write the commit message to .git/COMMIT_EDITMSG file

or translate all git commit messages into a different language (Traditional Chinese, Simplified Chinese or Japanese)

codegpt commit --lang zh-tw --preview

Consider the following outcome:

Summarize the commit message use gpt-4o model

We are trying to summarize a git diff

We are trying to summarize a title for pull request

We are trying to translate a git commit message to Traditional Chinese language

================Commit Summary====================

功能:重構 codegpt commit 命令標記

- 將「codegpt commit」命令新增「預覽」標記

- 從「codegpt commit」命令中移除「--disableCommit」標記

==================================================

Write the commit message to .git/COMMIT_EDITMSG file

You can replace the tip of the current branch by creating a new commit. Just use the --amend flag:

codegpt commit --amend

The default commit message template is as follows:

{{ .summarize_prefix }}: {{ .summarize_title }}

{{ .summarize_message }}
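The placeholders in the default template have the form used by Go's `text/template` package. Assuming that is indeed the engine behind them (the source does not say so explicitly), the following sketch shows how the three documented variables could be rendered. The `render` helper and the sample values are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// defaultTemplate reproduces the default commit message template.
const defaultTemplate = "{{ .summarize_prefix }}: {{ .summarize_title }}\n\n{{ .summarize_message }}\n"

// render executes a template string against a map of variables.
// Map keys are looked up by name, so lowercase keys work as-is.
func render(tmpl string, vars map[string]string) (string, error) {
	t, err := template.New("commit").Parse(tmpl)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := t.Execute(&sb, vars); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	msg, err := render(defaultTemplate, map[string]string{
		"summarize_prefix":  "feat",
		"summarize_title":   "Add preview flag to commit command",
		"summarize_message": "- Add a `preview` flag to the `commit` command",
	})
	if err != nil {
		panic(err)
	}
	fmt.Print(msg)
}
```

Because the variables are passed as a map, extra keys can be added without changing the helper, and a key that is absent simply renders as an empty string.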

Change the format with a template string using the --template_string parameter:

codegpt commit --preview --template_string \
  "[{{ .summarize_prefix }}]: {{ .summarize_title }}"

Change the format with a template file using the --template_file parameter:

codegpt commit --preview --template_file your_file_path

Add a custom variable to the git commit message template:

{{ .summarize_prefix }}: {{ .summarize_title }}

{{ .summarize_message }}

{{ if .JIRA_URL }}{{ .JIRA_URL }}{{ end }}

Set the custom variable using the --template_vars parameter:

codegpt commit --preview --template_file your_file_path --template_vars \
  JIRA_URL=https://jira.example.com/ABC-123

Load custom variables from a file using the --template_vars_file parameter:

codegpt commit --preview --template_file your_file_path --template_vars_file your_file_path

The template_vars_file format is as follows:

JIRA_URL=https://jira.example.com/ABC-123

You can also use the prepare-commit-msg hook to integrate codegpt with Git. This allows you to use Git normally and edit the commit message before committing.

To install the hook in the Git repository:

codegpt hook install

To remove the hook from the Git repository:

codegpt hook uninstall

Stage your files and commit after installation:

git add <files...>

git commit

codegpt will generate the commit message for you and pass it back to Git. Git will open it with the configured editor for you to review/edit it. Then, to commit, save and close the editor!
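For readers unfamiliar with the hook mechanism: Git invokes prepare-commit-msg with the path of the commit message file as its first argument, and whatever the hook writes there is what the editor opens. The sketch below illustrates that hand-off in Go. It is not the hook that codegpt installs; `prependMessage` and the placeholder message are hypothetical.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// prependMessage places a generated message before whatever Git already
// put in the file (typically commented-out status lines).
func prependMessage(generated, existing string) string {
	generated = strings.TrimRight(generated, "\n")
	if existing == "" {
		return generated + "\n"
	}
	return generated + "\n\n" + existing
}

func main() {
	// Git passes the commit message file path as the first argument.
	if len(os.Args) < 2 {
		fmt.Fprintln(os.Stderr, "usage: prepare-commit-msg <commit-msg-file> [source] [sha]")
		return
	}
	path := os.Args[1]

	existing, err := os.ReadFile(path)
	if err != nil && !os.IsNotExist(err) {
		fmt.Fprintln(os.Stderr, "read commit message:", err)
		os.Exit(1)
	}

	// Hypothetical placeholder; a real hook would derive this from the staged diff.
	generated := "feat: describe the staged changes"

	out := prependMessage(generated, string(existing))
	if err := os.WriteFile(path, []byte(out), 0o644); err != nil {
		fmt.Fprintln(os.Stderr, "write commit message:", err)
		os.Exit(1)
	}
}
```

Keeping the existing file content after the generated text preserves Git's own comment lines, so the editor still shows the usual status summary below the proposed message.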

$ git commit

Summarize the commit message use gpt-4o model

We are trying to summarize a git diff

We are trying to summarize a title for pull request

================Commit Summary====================

Improve user experience and documentation for OpenAI tools

- Add download links for pre-compiled binaries

- Include instructions for setting up OpenAI API key

- Add a CLI mode for generating commit messages

- Provide references for OpenAI Chat completions and ChatGPT/Whisper APIs

==================================================

Write the commit message to .git/COMMIT_EDITMSG file

[main 6a9e879] Improve user experience and documentation for OpenAI tools

1 file changed, 56 insertions(+)

You can use codegpt to generate a code review message for your staged changes:

codegpt review

or translate all code review messages into a different language (Traditional Chinese, Simplified Chinese or Japanese)

codegpt review --lang zh-tw

See the following result:

Code review your changes using gpt-4o model

We are trying to review code changes

PromptTokens: 1021, CompletionTokens: 200, TotalTokens: 1221

We are trying to translate core review to Traditional Chinese language

PromptTokens: 287, CompletionTokens: 199, TotalTokens: 486

================Review Summary====================

總體而言,此程式碼修補似乎在增加 Review 指令的功能,允許指定輸出語言並在必要時進行翻譯。以下是需要考慮的潛在問題:

- 輸出語言沒有進行輸入驗證。如果指定了無效的語言代碼,程式可能會崩潰或產生意外結果。

- 此使用的翻譯 API 未指定,因此不清楚是否存在任何安全漏洞。

- 無法處理翻譯 API 調用的錯誤。如果翻譯服

==================================================

Another PHP code example:

<?php

if( isset( $_POST[ 'Submit' ] ) ) {
    // Get input
    $target = $_REQUEST[ 'ip' ];

    // Determine OS and execute the ping command.
    if( stristr( php_uname( 's' ), 'Windows NT' ) ) {
        // Windows
        $cmd = shell_exec( 'ping ' . $target );
    }
    else {
        // *nix
        $cmd = shell_exec( 'ping -c 4 ' . $target );
    }

    // Feedback for the end user
    $html .= "<pre>{$cmd}</pre>";
}

?>

Code review result:

================Review Summary====================

Code review:

1. Security: The code is vulnerable to command injection attacks as the user input is directly used in the shell_exec() function. An attacker can potentially execute malicious commands on the server by injecting them into the 'ip' parameter.

2. Error handling: There is no error handling in the code. If the ping command fails, the error message is not displayed to the user.

3. Input validation: There is no input validation for the 'ip' parameter. It should be validated to ensure that it is a valid IP address or domain name.

4. Cross-platform issues: The code assumes that the server is either running Windows or *nix operating systems. It may not work correctly on other platforms.

Suggestions for improvement:

1. Use escapeshellarg() function to sanitize the user input before passing it to shell_exec() function to prevent command injection.

2. Implement error handling to display error messages to the user if the ping command fails.

3. Use a regular expression to validate the 'ip' parameter to ensure that it is a valid IP address or domain name.

4. Use a more robust method to determine the operating system, such as the PHP_OS constant, which can detect a wider range of operating systems.

==================================================

Run the following command to test the code:

make test


Alternative AI tools for CodeGPT

Similar Open Source Tools


repopack

Repopack is a powerful tool that packs your entire repository into a single, AI-friendly file. It optimizes your codebase for AI comprehension, is simple to use with customizable options, and respects Gitignore files for security. The tool generates a packed file with clear separators and AI-oriented explanations, making it ideal for use with Generative AI tools like Claude or ChatGPT. Repopack offers command line options, configuration settings, and multiple methods for setting ignore patterns to exclude specific files or directories during the packing process. It includes features like comment removal for supported file types and a security check using Secretlint to detect sensitive information in files.

python-repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It formats your codebase for easy AI comprehension, provides token counts, is simple to use with one command, customizable, git-aware, security-focused, and offers advanced code compression. It supports multiprocessing or threading for faster analysis, automatically handles various file encodings, and includes built-in security checks. Repomix can be used with uvx, pipx, or Docker. It offers various configuration options for output style, security checks, compression modes, ignore patterns, and remote repository processing. The tool can be used for code review, documentation generation, test case generation, code quality assessment, library overview, API documentation review, code architecture analysis, and configuration analysis. Repomix can also run as an MCP server for AI assistants like Claude, providing tools for packaging codebases, reading output files, searching within outputs, reading files from the filesystem, listing directory contents, generating Claude Agent Skills, and more.

mcp-client-cli

MCP CLI client is a simple CLI program designed to run LLM prompts and act as an alternative client for Model Context Protocol (MCP). Users can interact with MCP-compatible servers from their terminal, including LLM providers like OpenAI, Groq, or local LLM models via llama. The tool supports various functionalities such as running prompt templates, analyzing image inputs, triggering tools, continuing conversations, utilizing clipboard support, and additional options like listing tools and prompts. Users can configure LLM and MCP servers via a JSON config file and contribute to the project by submitting issues and pull requests for enhancements or bug fixes.

dvc

DVC, or Data Version Control, is a command-line tool and VS Code extension that helps you develop reproducible machine learning projects. With DVC, you can version your data and models, iterate fast with lightweight pipelines, track experiments in your local Git repo, compare any data, code, parameters, model, or performance plots, and share experiments and automatically reproduce anyone's experiment.

cipher

Cipher is a versatile encryption and decryption tool designed to secure sensitive information. It offers a user-friendly interface with various encryption algorithms to choose from, ensuring data confidentiality and integrity. With Cipher, users can easily encrypt text or files using strong encryption methods, making it suitable for protecting personal data, confidential documents, and communication. The tool also supports decryption of encrypted data, providing a seamless experience for users to access their secured information. Cipher is a reliable solution for individuals and organizations looking to enhance their data security measures.

repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It is designed to format your codebase for easy understanding by AI tools like Large Language Models (LLMs), Claude, ChatGPT, and Gemini. Repomix offers features such as AI optimization, token counting, simplicity in usage, customization options, Git awareness, and security-focused checks using Secretlint. It allows users to pack their entire repository or specific directories/files using glob patterns, and even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown formats, with options for including/excluding files, removing comments, and performing security checks. Repomix also provides a global configuration option, custom instructions for AI context, and a security check feature to detect sensitive information in files.

llm.nvim

llm.nvim is a plugin for Neovim that enables code completion using LLM models. It supports 'ghost-text' code completion similar to Copilot and allows users to choose their model for code generation via HTTP requests. The plugin interfaces with multiple backends like Hugging Face, Ollama, Open AI, and TGI, providing flexibility in model selection and configuration. Users can customize the behavior of suggestions, tokenization, and model parameters to enhance their coding experience. llm.nvim also includes commands for toggling auto-suggestions and manually requesting suggestions, making it a versatile tool for developers using Neovim.

grammar-llm

GrammarLLM is an AI-powered grammar correction tool that utilizes fine-tuned language models to fix grammatical errors in text. It offers real-time grammar and spelling correction with individual suggestion acceptance. The tool features a clean and responsive web interface, a FastAPI backend integrated with llama.cpp, and support for multiple grammar models. Users can easily deploy the tool using Docker Compose and interact with it through a web interface or REST API. The default model, GRMR-V3-G4B-Q8_0, provides grammar correction, spelling correction, punctuation fixes, and style improvements without requiring a GPU. The tool also includes endpoints for applying single or multiple suggestions to text, a health check endpoint, and detailed documentation for functionality and model details. Testing and verification steps are provided for manual and Docker testing, along with community guidelines for contributing, reporting issues, and getting support.

model.nvim

model.nvim is a tool designed for Neovim users who want to utilize AI models for completions or chat within their text editor. It allows users to build prompts programmatically with Lua, customize prompts, experiment with multiple providers, and use both hosted and local models. The tool supports features like provider agnosticism, programmatic prompts in Lua, async and multistep prompts, streaming completions, and chat functionality in 'mchat' filetype buffer. Users can customize prompts, manage responses, and context, and utilize various providers like OpenAI ChatGPT, Google PaLM, llama.cpp, ollama, and more. The tool also supports treesitter highlights and folds for chat buffers.

avante.nvim

avante.nvim is a Neovim plugin that emulates the behavior of the Cursor AI IDE, providing AI-driven code suggestions and enabling users to apply recommendations to their source files effortlessly. It offers AI-powered code assistance and one-click application of suggested changes, streamlining the editing process and saving time. The plugin is still in early development, with functionalities like setting API keys, querying AI about code, reviewing suggestions, and applying changes. Key bindings are available for various actions, and the roadmap includes enhancing AI interactions, stability improvements, and introducing new features for coding tasks.

opencode.nvim

Opencode.nvim is a neovim frontend for Opencode, a terminal-based AI coding agent. It provides a chat interface between neovim and the Opencode AI agent, capturing editor context to enhance prompts. The plugin maintains persistent sessions for continuous conversations with the AI assistant, similar to Cursor AI.

parrot.nvim

Parrot.nvim is a Neovim plugin that prioritizes a seamless out-of-the-box experience for text generation. It simplifies functionality and focuses solely on text generation, excluding integration of DALLE and Whisper. It supports persistent conversations as markdown files, custom hooks for inline text editing, multiple providers like Anthropic API, perplexity.ai API, OpenAI API, Mistral API, and local/offline serving via ollama. It allows custom agent definitions, flexible API credential support, and repository-specific instructions with a `.parrot.md` file. It does not have autocompletion or hidden requests in the background to analyze files.

minuet-ai.nvim

Minuet AI is a Neovim plugin that integrates with nvim-cmp to provide AI-powered code completion using multiple AI providers such as OpenAI, Claude, Gemini, Codestral, and Huggingface. It offers customizable configuration options and streaming support for completion delivery. Users can manually invoke completion or use cost-effective models for auto-completion. The plugin requires API keys for supported AI providers and allows customization of system prompts. Minuet AI also supports changing providers, toggling auto-completion, and provides solutions for input delay issues. Integration with lazyvim is possible, and future plans include implementing RAG on the codebase and virtual text UI support.

deepgram-js-sdk

Deepgram JavaScript SDK. Power your apps with world-class speech and Language AI models.

mdream

Mdream is a lightweight and user-friendly markdown editor designed for developers and writers. It provides a simple and intuitive interface for creating and editing markdown files with real-time preview. The tool offers syntax highlighting, markdown formatting options, and the ability to export files in various formats. Mdream aims to streamline the writing process and enhance productivity for individuals working with markdown documents.

For similar tasks


sourcegraph

Sourcegraph is a code search and navigation tool that helps developers read, write, and fix code in large, complex codebases. It provides features such as code search across all repositories and branches, code intelligence for navigation and refactoring, and the ability to fix and refactor code across multiple repositories at once.

pr-agent

PR-Agent is a tool that helps to efficiently review and handle pull requests by providing AI feedback and suggestions. It supports various commands such as generating PR descriptions, providing code suggestions, answering questions about the PR, and updating the CHANGELOG.md file. PR-Agent can be used via CLI, GitHub Action, GitHub App, Docker, and supports multiple git providers and models. It emphasizes real-life practical usage, with each tool having a single GPT-4 call for quick and affordable responses. The PR Compression strategy enables effective handling of both short and long PRs, while the JSON prompting strategy allows for modular and customizable tools. PR-Agent Pro, the hosted version by CodiumAI, provides additional benefits such as full management, improved privacy, priority support, and extra features.

code-review-gpt

Code Review GPT uses Large Language Models to review code in your CI/CD pipeline. It helps streamline the code review process by providing feedback on code that may have issues or areas for improvement. It should pick up on common issues such as exposed secrets, slow or inefficient code, and unreadable code. It can also be run locally in your command line to review staged files. Code Review GPT is in alpha and should be used for fun only. It may provide useful feedback but please check any suggestions thoroughly.

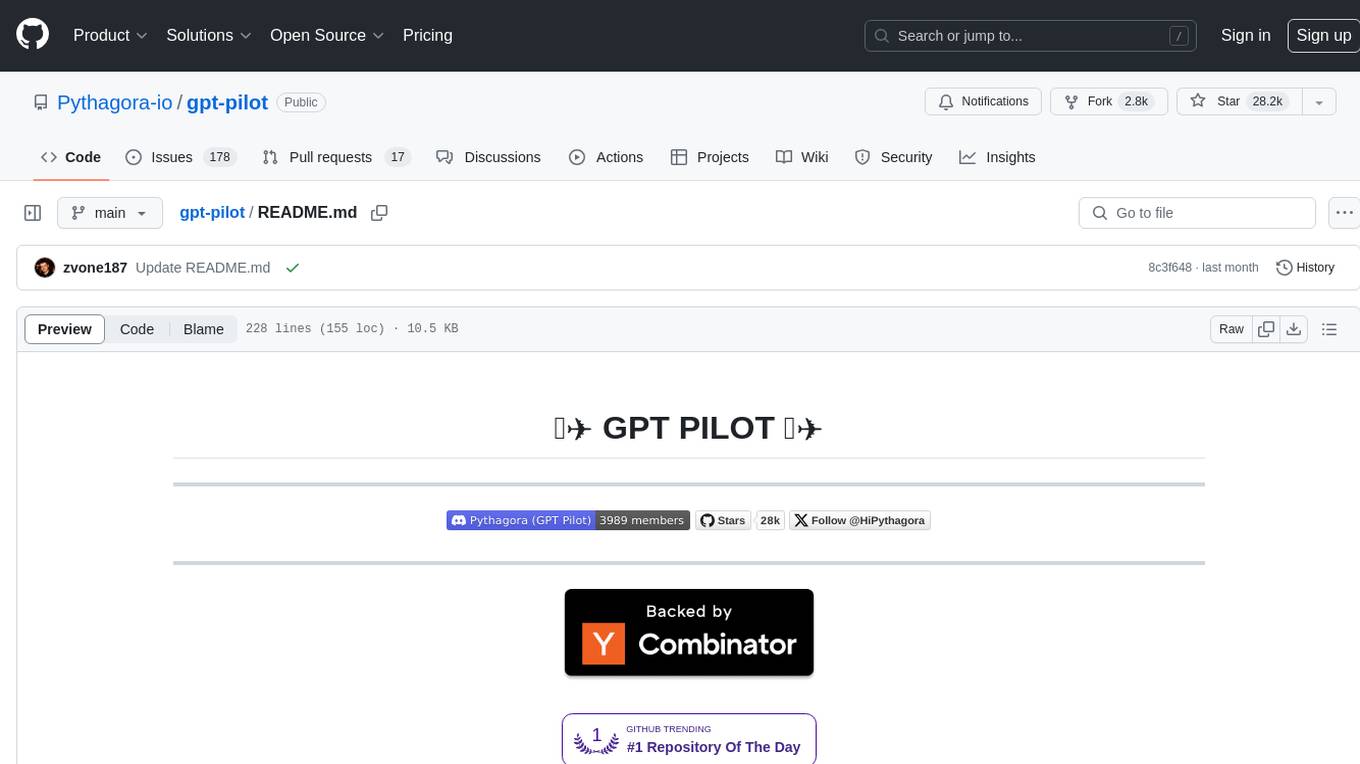

gpt-pilot

GPT Pilot is a core technology for the Pythagora VS Code extension, aiming to provide the first real AI developer companion. It goes beyond autocomplete, helping with writing full features, debugging, issue discussions, and reviews. The tool utilizes LLMs to generate production-ready apps, with developers overseeing the implementation. GPT Pilot works step by step like a developer, debugging issues as they arise. It can work at any scale, filtering out code to show only relevant parts to the AI during tasks. Contributions are welcome, with debugging and telemetry being key areas of focus for improvement.

DevoxxGenieIDEAPlugin

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

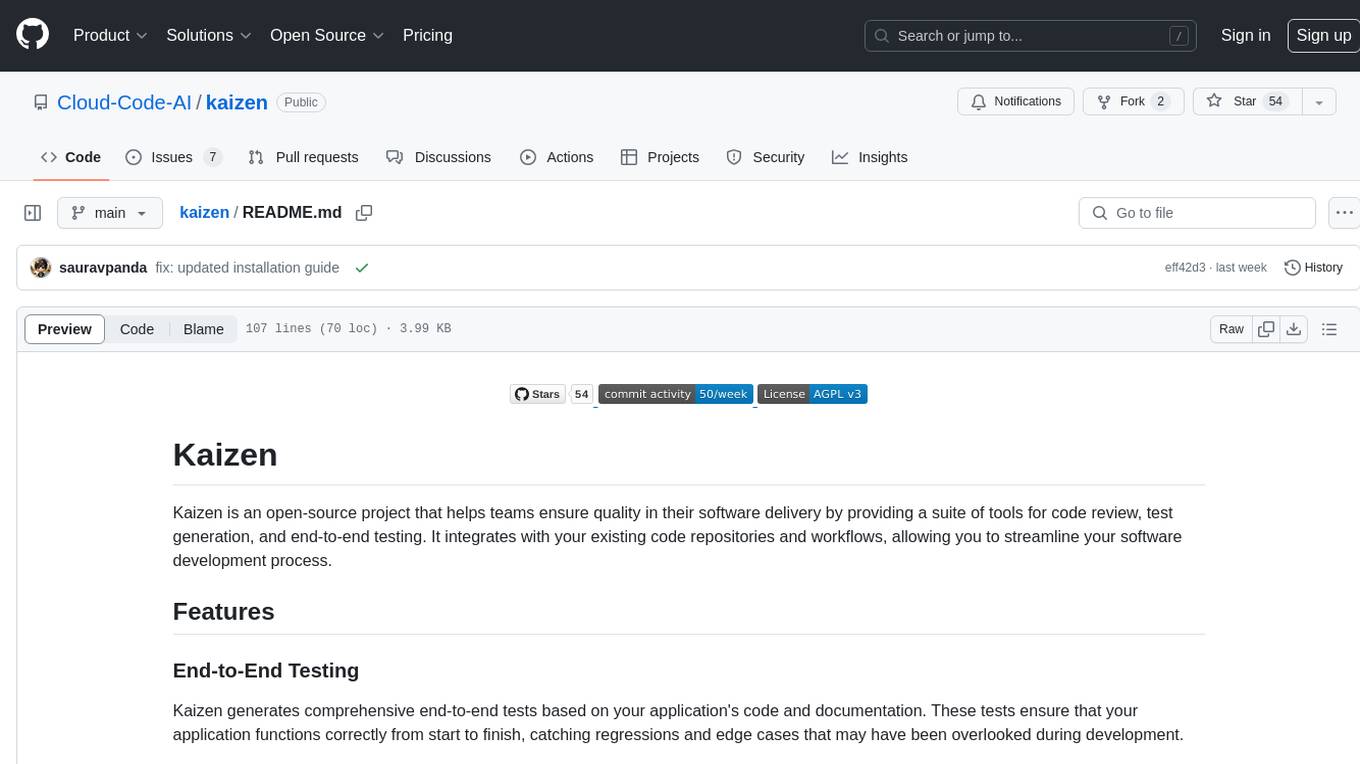

kaizen

Kaizen is an open-source project that helps teams ensure quality in their software delivery by providing a suite of tools for code review, test generation, and end-to-end testing. It integrates with your existing code repositories and workflows, allowing you to streamline your software development process. Kaizen generates comprehensive end-to-end tests, provides UI testing and review, and automates code review with insightful feedback. The file structure includes components for API server, logic, actors, generators, LLM integrations, documentation, and sample code. Getting started involves installing the Kaizen package, generating tests for websites, and executing tests. The tool also runs an API server for GitHub App actions. Contributions are welcome under the AGPL License.

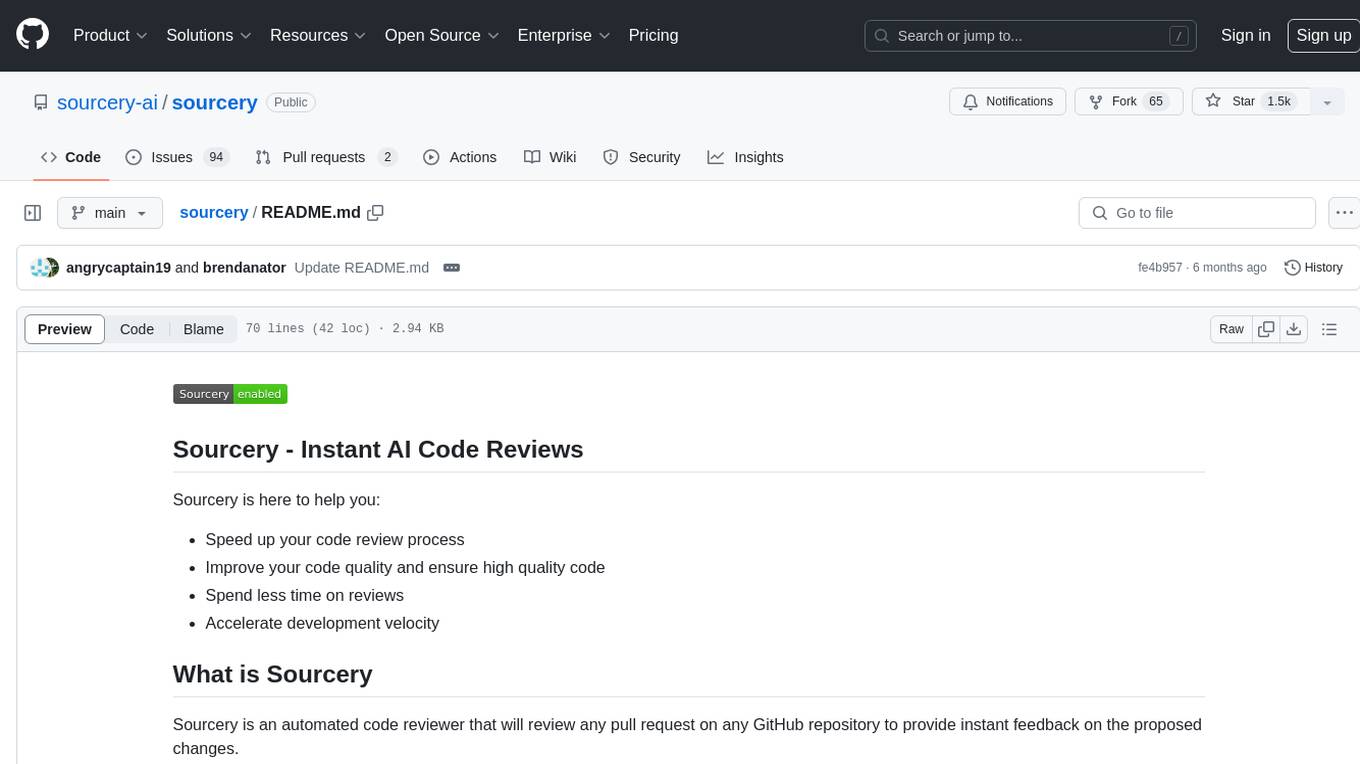

sourcery

Sourcery is an automated code reviewer tool that provides instant feedback on pull requests, helping to speed up the code review process, improve code quality, and accelerate development velocity. It offers high-level feedback, line-by-line suggestions, and aims to mimic the type of code review one would expect from a colleague. Sourcery can also be used as an IDE coding assistant to understand existing code, add unit tests, optimize code, and improve code quality with instant suggestions. It is free for public repos/open source projects and offers a 14-day trial for private repos.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.