AirGym

A high-performance quadrotor deep reinforcement learning platform built upon IsaacGym.

AirGym is an open source Python quadrotor simulator based on IsaacGym, providing a high-fidelity dynamics and Deep Reinforcement Learning (DRL) framework for quadrotor robot learning research. It offers a lightweight and customizable platform with strict alignment with PX4 logic, multiple control modes, and Sim-to-Real toolkits. Users can perform tasks such as Hovering, Balloon, Tracking, Avoid, and Planning, with the ability to create customized environments and tasks. The tool also supports training from scratch, visual encoding approaches, playing and testing of trained models, and customization of new tasks and assets.

README:

v0.5.1 released on 2025.09.27: Fix bugs: the planning task resets at start when loading the trained model planning_cnn_rate.pth.

v0.5.0 released on 2025.07.21: Update VAE model.

v0.4.0 released on 2025.04.21: Update reward functions for planning. Upload pretrained model for task planning.

v0.3.0 released on 2025.04.16: Fixed bugs in the environment registry; every task has been tested.

v0.2.0 released on 2025.04.05: This version has bugs! Re-implement the asset manager to make it clear and efficient. Move the algorithm out of /airgym.

v0.1.0dev released: first release.

AirGym is an open source Python quadrotor simulator based on IsaacGym and one part of the AirGym series Sim-to-Real workflow. It provides high-fidelity dynamics and a Deep Reinforcement Learning (DRL) framework for quadrotor robot learning research. We also provide toolkits for transferring policies from the AirGym simulator to the real quadrotor emNavi-X152b, making Sim-to-Real possible. The documentation website is at https://emnavi.tech/AirGym/.

The Sim-to-Real working flow of AirGym series is broken down into four parts:

- AirGym: the quadrotor simulation platform described in this repository, providing environments and basic training algorithm.

- rlPx4Controller: a flight geometric controller that maintains strict control logic alignment with the open-source PX4, for a better Sim-to-Real.

- AirGym-Real: an onboard Sim-to-Real module compatible with AirGym, enabling direct loading of pretrained models, supporting onboard visual-inertial pose estimation, ROS topic publishing, and one-shot scripted deployment.

- control_for_gym: a middleware layer based on MAVROS for forwarding control commands at various levels to PX4 autopilot. It includes a finite state machine to facilitate switching between DRL models and traditional control algorithms.

AirGym is more lightweight and has a clearer file structure compared to other simulators, because it was designed from the beginning with the goal of achieving Sim-to-Real transfer.

- Lightweight & Customizable: AirGym is extremely lightweight yet highly extensible, allowing you to quickly set up your own customized training task.

- Strict Alignment with PX4 Logic: Flight control in AirGym is supported by rlPx4Controller. It maintains strict control logic alignment with the open-source PX4, for a better Sim-to-Real.

- Multiple Control Modes: AirGym provides various control modes including PY (position & yaw), LV (linear velocity & yaw), CTA (collective thrust & attitude angle), CTBR (collective thrust & body rate), SRT (single-rotor thrust).

- Sim-to-Real Toolkits: The AirGym series covers the complete robot learning Sim-to-Real flow and makes it possible to transfer well-trained policies to a physical device.
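The control modes listed above are selected on the command line through --ctl_mode, whose accepted values are 'pos', 'vel', 'atti', 'rate', and 'prop'. The sketch below pairs those flag values with the PY/LV/CTA/CTBR/SRT abbreviations. This pairing is an assumption inferred from the matching order of the two lists, and `CTL_MODES` and `describe_ctl_mode` are hypothetical names used only for illustration, not part of AirGym.

```python
# Hypothetical lookup table: the pairing of flag values to control modes is
# assumed from the order in which both lists appear in this README.
CTL_MODES = {
    "pos": ("PY", "position & yaw"),
    "vel": ("LV", "linear velocity & yaw"),
    "atti": ("CTA", "collective thrust & attitude angle"),
    "rate": ("CTBR", "collective thrust & body rate"),
    "prop": ("SRT", "single-rotor thrust"),
}


def describe_ctl_mode(flag: str) -> str:
    """Return a readable description for a --ctl_mode value."""
    if flag not in CTL_MODES:
        raise ValueError(
            f"unknown ctl_mode {flag!r}; expected one of {sorted(CTL_MODES)}"
        )
    abbreviation, meaning = CTL_MODES[flag]
    return f"{flag} -> {abbreviation} ({meaning})"


if __name__ == "__main__":
    for flag in CTL_MODES:
        print(describe_ctl_mode(flag))
```

A lookup like this is mainly useful for validating a flag early and printing a clear error before a simulation is launched.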

AirGym provides four basic tasks (Hovering, Balloon, Tracking, and Avoid) and a higher-level task, Planning. All tasks are implemented on the X152b quadrotor frame, since this is our Sim-to-Real device.

Task Hovering: the quadrotor is initialized randomly inside a cube with a side length of 2 meters, then converges to the center and hovers until the end of the episode. This task can also be used as a "go to waypoint" task if a target waypoint is specified.
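The random initialization described for Hovering can be pictured with a few lines of plain Python. This is a minimal sketch, not AirGym's reset code: it assumes a uniform distribution and a cube centered on the hover target, and `sample_initial_positions` and `distance_to_center` are hypothetical helper names.

```python
import random


def sample_initial_positions(num_envs, side=2.0, center=(0.0, 0.0, 0.0), seed=None):
    """Draw one start position per environment, uniformly inside a cube.

    Assumption: the cube is axis-aligned and centered on the hover target.
    """
    rng = random.Random(seed)
    half = side / 2.0
    return [
        tuple(c + rng.uniform(-half, half) for c in center)
        for _ in range(num_envs)
    ]


def distance_to_center(position, center=(0.0, 0.0, 0.0)):
    """Euclidean distance from a position to the hover target."""
    return sum((p - c) ** 2 for p, c in zip(position, center)) ** 0.5


if __name__ == "__main__":
    for start in sample_initial_positions(num_envs=4, seed=0):
        print(start, round(distance_to_center(start), 3))
```

Passing a different `center` corresponds to the "go to waypoint" use mentioned above, where the target is a chosen waypoint.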

Task Balloon: also called target reaching. It is essentially a variation of the hovering task, but with a key difference: the quadrotor moves at a higher speed, rapidly dashing toward the balloon (the target).

Task Tracking: track a sequence of waypoints that is played back as a trajectory. The tracking speed is determined by the trajectory playback speed.
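One way to read "played back as a trajectory" is that the reference point advances along the waypoint sequence at a rate set by the playback speed. The sketch below illustrates that idea with linear interpolation. It is an assumption about how such playback could work, not AirGym's implementation; `target_at` and the one-waypoint-per-second spacing are hypothetical.

```python
def target_at(waypoints, t, play_speed=1.0):
    """Linearly interpolate the reference position at time t (seconds).

    Assumption: waypoints are spaced one second apart when play_speed == 1.0,
    so a larger play_speed moves the reference along the path faster.
    """
    if not waypoints:
        raise ValueError("waypoints must not be empty")
    progress = max(0.0, t * play_speed)
    last = len(waypoints) - 1
    if progress >= last:
        return tuple(waypoints[last])  # hold the final waypoint
    i = int(progress)
    frac = progress - i
    return tuple(a + frac * (b - a) for a, b in zip(waypoints[i], waypoints[i + 1]))


if __name__ == "__main__":
    path = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (1.0, 1.0, 1.0)]
    for speed in (0.5, 1.0, 2.0):
        print(speed, target_at(path, t=0.5, play_speed=speed))
```

With this reading, doubling `play_speed` halves the time the quadrotor has to reach each waypoint, which is why the tracking speed follows the playback speed.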

Task Avoid: hover and try to avoid a cube or a ball with random throwing velocity and angle. This task provides depth image as input.

Task Planning: a drone navigates and flies through a randomly generated, woods-like area using only depth information as input. No global information is used in this task, which means better adaptation to GNSS-denied environments and operation without a VIO.

Furthermore, you can build a customized environment and even task upon AirGym. Here is a simple demo of random assets generation.

Note: this repository has been tested on Ubuntu 20.04 with PyTorch 2.0.0 and CUDA 11.8 on an RTX 4090.

Run configuration.sh to download and install NVIDIA Isaac Gym Preview 4 and rlPx4Controller at ~, and to create a new conda environment named airgym. Note that sudo is required to install apt packages:

chmod +x configuration.sh

./configuration.sh

You can run the example script, which illustrates quadrotor position control in 4096 parallel environments:

conda activate airgym

python airgym/scripts/example.py --task hovering --ctl_mode pos --num_envs 4

The default ctl_mode is position control.

AirGym/
│
├── airgym/                         # source code
│   ├── envs/                       # envs definition
│   │   ├── base/                   # base envs
│   │   └── task/                   # tasks
│   │       ├── planning.py         # task env file
│   │       ├── planning_config.py  # task env config file
│   │       ├── ...
│   │       ...
│   │
│   ├── assets/                     # assets
│   │   ├── env_assets/             # env assets
│   │   └── robots/                 # robot assets
│   ├── scripts/                    # debug scripts
│   └── utils/                      # others
│
├── doc/                            # doc assets
│
├── lib/                            # RL algorithm
│
├── scripts/                        # program scripts
│   ├── config/                     # config file for training
│   ├── runner.py                   # program entry
│   └── ...
│
└── README.md                       # introduction

We train the model with a customized PPO modeled on rl_games (rl_games itself was dropped after version 0.0.1beta because it was too complex to use). The training algorithm is at airgym/lib, modified from rl_games-1.6.1. Of course, you can use any other RL library for training.

Training from scratch can be done within minutes. The running log files and checkpoints are saved under runs. Using Hovering as an example:

python scripts/runner.py --ctl_mode rate --headless --task hovering

Algorithm-related parameters can be edited in .yaml files. Environment- and simulator-related parameters are located in ENV_config files such as hovering_config.py. The ctl_mode must be specified. TensorBoard can be used for debugging.

The input arguments can be overridden:

| args | explanation |
|---|---|
| --play | Play (test) a trained network. |
| --task | Specify a task: 'hovering', 'avoid', 'balloon', 'planning', 'tracking'. |
| --num_envs | Specify the number of parallel running environments. |
| --headless | Run in headless mode or not. |
| --ctl_mode | Specify a control level mode from 'pos', 'vel', 'atti', 'rate', 'prop'. |
| --checkpoint | Load a trained checkpoint model from path. |
| --file | Specify an algorithm config file for training. |
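The argument table above maps naturally onto a standard argparse parser. The following is an illustrative stand-in, not the parser in scripts/runner.py: the defaults, the use of `choices`, and the name `build_parser` are assumptions, and only the flag names and their meanings come from the table. `--ctl_mode` is marked required here because the text states it must be specified.

```python
import argparse

TASKS = ["hovering", "avoid", "balloon", "planning", "tracking"]
CTL_MODES = ["pos", "vel", "atti", "rate", "prop"]


def build_parser() -> argparse.ArgumentParser:
    """Build a parser mirroring the documented flags (defaults are assumed)."""
    parser = argparse.ArgumentParser(description="Illustrative runner arguments")
    parser.add_argument("--play", action="store_true",
                        help="Play (test) a trained network.")
    parser.add_argument("--task", choices=TASKS, default=None,
                        help="Task to run.")
    parser.add_argument("--num_envs", type=int, default=None,
                        help="Number of parallel running environments.")
    parser.add_argument("--headless", action="store_true",
                        help="Run without a viewer.")
    parser.add_argument("--ctl_mode", choices=CTL_MODES, required=True,
                        help="Control level mode.")
    parser.add_argument("--checkpoint", type=str, default=None,
                        help="Path to a trained checkpoint.")
    parser.add_argument("--file", type=str, default=None,
                        help="Algorithm config file for training.")
    return parser


if __name__ == "__main__":
    # Parse a fixed example instead of sys.argv so the sketch is reproducible.
    example = ["--ctl_mode", "rate", "--headless", "--task", "hovering"]
    print(build_parser().parse_args(example))
```

Restricting `--task` and `--ctl_mode` with `choices` makes a misspelled value fail at startup rather than after the simulator has been created.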

We provide two types of depth encoding methods: cnn and vae. Using cnn trains an end-to-end model from scratch, including the image encoder, which is often very hard. Using vae as the encoder is recommended, and we provide a pretrained depth vae model with depth input as (212,120). The vae model is frozen by default.

To select the type of encoding, edit the cfg file in scripts/config/ppo_planning:

# cnn:
#   output_dim: 30
vae:
  latent_dims: 64
  model_folder: "/home/kjaebye/isaac/AirGym/trained" # path to your trained model directory
  model_file: "vae_model.pth"
  image_res: [120, 212]
  interpolation_mode: "bilinear"
  return_sampled_latent: False

Loading a trained checkpoint is straightforward:

python scripts/runner.py --play --task hovering --num_envs 64 --ctl_mode rate --checkpoint <path-to-ckpt>

Using the planning task as an example, load and play the trained model from the trained directory:

python scripts/runner.py --play --ctl_mode rate --num_envs 4 --task planning --checkpoint trained/planning_cnn_rate.pth

Important: emNavi provides a general quadrotor sim2real approach; please refer to AirGym-Real at https://github.com/emNavi/AirGym-Real.

- Create a new .py file in the directory airgym/envs/task. Usually, we use <task_name>.py to describe an environment and <task_name>_config.py to describe basic environment settings. You can follow this rule.

- Register the new task in airgym/envs/task/__init__.py. This is the registration for the AirGym environment. Note that registration for the vectorized parallel environments and the training agent is handled automatically. Using task Planning as an example:

TASK_CONFIGS = [
    {
        'name': 'planning',
        'config_module': 'task.planning_config',
        'config_class': 'PlanningConfig',
        'task_module': 'task.planning',
        'task_class': 'Planning'
    }
]

We recommend that users inherit from task hovering (no camera) and customized (depth).
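A registration entry like the one above names modules and classes as strings, and a common way to turn such strings into classes is dynamic import. The sketch below shows that general technique. It is not AirGym's loader: `resolve_task` is a hypothetical helper, and the demo entry points at standard-library modules so the code runs without AirGym installed.

```python
import importlib


def resolve_task(entry):
    """Import the config and task classes named by one registry entry."""
    config_module = importlib.import_module(entry["config_module"])
    task_module = importlib.import_module(entry["task_module"])
    return (
        getattr(config_module, entry["config_class"]),
        getattr(task_module, entry["task_class"]),
    )


# Stand-in entry: standard-library modules replace 'task.planning_config' and
# 'task.planning' purely for illustration.
DEMO_ENTRY = {
    "name": "demo",
    "config_module": "collections",
    "config_class": "OrderedDict",
    "task_module": "fractions",
    "task_class": "Fraction",
}

if __name__ == "__main__":
    config_cls, task_cls = resolve_task(DEMO_ENTRY)
    print(DEMO_ENTRY["name"], config_cls.__name__, task_cls.__name__)
```

Keeping the entry as plain strings means a new task can be added by editing one list, without importing every task module up front.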

We use an asset register to manage assets, and an asset manager to load assets and create environments quickly.

Before adding assets into the environment, users should register their own assets at airgym/assets/__init__.py by using function registry.register_asset(asset_name, override_params, asset_type).

| params | explanation |
|---|---|
| asset_name | The identifying name of the asset in airgym. |
| override_params | Customized asset parameters that override the default settings in airgym/assets/asset_register.py. |
| asset_type | Asset type: "single" or "group". single: one asset defined in a .urdf file. group: a set of assets with similar features, such as cubes of multiple shapes (cube1x1, cube1x4, etc.). If the asset type is "group", the key "path" in override_params should be a folder. |

Here we use robot X152b as an example:

registry.register_asset(
    "X152b",
    override_params={
        "path": f"{AIRGYM_ROOT_DIR}/airgym/assets/robots/X152b/model.urdf",
        "name": "X152b",
        "base_link_name": "base_link",
        "foot_name": None,
        "penalize_contacts_on": [],
        "terminate_after_contacts_on": [],
        "disable_gravity": False,
        "collapse_fixed_joints": True,
        "fix_base_link": False,
        "collision_mask": 1,
        "density": -1,
        "angular_damping": 0.0,
        "linear_damping": 0.0,
        "replace_cylinder_with_capsule": False,
        "flip_visual_attachments": False,
        "max_angular_velocity": 100.,
        "max_linear_velocity": 100.,
        "armature": 0.001,
    },
    asset_type="single"
)

Because asset settings change frequently, we use a simple but clear method to include your custom assets in a task: define them in the <task_name>_config.py file. Here is an example:

class asset_config:
    include_robot = {
        "X152b": {
            "num_assets": 1,
            "enable_onboard_cameras": True,
            "collision_mask": 1,
        }
    }

    include_single_asset = {
        "cubes/1x1": {
            "collision_mask": 0,
            "num_assets": 1,
        },
    }

    include_group_asset = {
        "cubes": {
            "num_assets": 10,
            "collision_mask": 0,
        },
    }

    include_boundary = {
        "18x18ground": {
            "num_assets": 1,
        },
    }

By defining these four dictionaries, you can add pre-registered assets to a task. include_single_asset includes one specified type of asset. include_group_asset randomly includes assets from a group of one type. include_boundary includes a ground or any boundary such as a wall. Robots are included through include_robot.

IMPORTANT: to debug asset features quickly, you can add and edit asset params in these dictionaries to override the original settings, which is extremely efficient during development.
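The override behaviour described above involves three layers of parameters: the defaults in asset_register.py, the override_params given at registration, and the per-task dictionaries. A minimal sketch of one plausible precedence rule follows, in which later layers win. That order is an assumption, and `merge_asset_params` and the sample values are hypothetical rather than taken from AirGym.

```python
def merge_asset_params(defaults, registered_overrides, task_overrides):
    """Layer three parameter sources; later sources win on key clashes.

    Assumed precedence: defaults < registration overrides < task overrides.
    """
    return {**defaults, **registered_overrides, **task_overrides}


if __name__ == "__main__":
    # Illustrative values only; they are not AirGym's real defaults.
    defaults = {"collision_mask": 1, "disable_gravity": False, "num_assets": 1}
    registered = {"name": "X152b", "collision_mask": 1}
    task_level = {"num_assets": 1, "enable_onboard_cameras": True, "collision_mask": 0}

    merged = merge_asset_params(defaults, registered, task_level)
    for key in sorted(merged):
        print(f"{key}: {merged[key]}")
```

Because the merge builds a new dictionary, the registered settings stay untouched and each task can experiment with its own values.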

The Python file asset_manager.py provides clear but powerful functions to load assets, create assets, and quickly override params. Here are the key functions in class AssetManager:

func load_asset(self: AssetManager, gym: gymapi.acquire_gym(), sim: gym.create_sim()):

Load and prepare assets from ENV_config.py, overriding parameters and saving them into lists to avoid loading assets repeatedly.

func create_asset(self: AssetManager, env_handle: gym.create_env(), start_pose: gymapi.Transform(), env_id: int):

Create isaacgym actors according to prepared lists, to add assets into task.
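The two functions above follow a "prepare once, instantiate per environment" pattern. The toy class below sketches that pattern without calling Isaac Gym. `ToyAssetManager` and its fields are hypothetical, and its simplified signatures intentionally omit the gym, sim, env_handle, and start_pose arguments of the real functions.

```python
class ToyAssetManager:
    """Hypothetical stand-in for the load/create split; no Isaac Gym calls."""

    def __init__(self, include_config):
        self.include_config = include_config
        self.prepared = []      # flat list of asset records, filled once
        self.load_calls = 0     # counts how often real loading work happened
        self._loaded = False

    def load_asset(self):
        """Expand the config into prepared records; repeat calls are no-ops."""
        if self._loaded:
            return self.prepared
        self.load_calls += 1
        for name, params in self.include_config.items():
            for _ in range(params.get("num_assets", 1)):
                self.prepared.append({"name": name, **params})
        self._loaded = True
        return self.prepared

    def create_asset(self, env_id):
        """Build the actor records for one environment from the prepared list."""
        return [{"env_id": env_id, **record} for record in self.prepared]


if __name__ == "__main__":
    manager = ToyAssetManager({"X152b": {"num_assets": 1}, "cubes": {"num_assets": 3}})
    manager.load_asset()
    for env_id in range(2):
        print(env_id, [actor["name"] for actor in manager.create_asset(env_id)])
```

Separating the two steps keeps the expensive work out of the per-environment loop, which matters when thousands of environments are created.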

Add multiple drone training tasks.

The hardware of emNavi-X152b is going to be open-sourced soon.

TBC

We build the project upon BSD 3-Clause (see the LICENSE file) and acknowledge the contributions of those who came before us.

We thank our colleagues at emNavi Tech (https://emnavi.tech/), who develop and maintain the outstanding hardware device emNavi-X152b for Sim-to-Real tasks.

We also thank the excellent work of Aerial Gym Simulator (https://github.com/ntnu-arl/aerial_gym_simulator), licensed under the BSD 3-Clause License. AirGym is modified from and greatly improved upon Aerial Gym Simulator, especially for Sim-to-Real tasks.

@article{huang2025general,
  title={A General Infrastructure and Workflow for Quadrotor Deep Reinforcement Learning and Reality Deployment},
  author={Huang, Kangyao and Wang, Hao and Luo, Yu and Chen, Jingyu and Chen, Jintao and Zhang, Xiangkui and Ji, Xiangyang and Liu, Huaping},
  journal={arXiv preprint arXiv:2504.15129},
  year={2025}
}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AirGym

Similar Open Source Tools

AirGym

AirGym is an open source Python quadrotor simulator based on IsaacGym, providing a high-fidelity dynamics and Deep Reinforcement Learning (DRL) framework for quadrotor robot learning research. It offers a lightweight and customizable platform with strict alignment with PX4 logic, multiple control modes, and Sim-to-Real toolkits. Users can perform tasks such as Hovering, Balloon, Tracking, Avoid, and Planning, with the ability to create customized environments and tasks. The tool also supports training from scratch, visual encoding approaches, playing and testing of trained models, and customization of new tasks and assets.

embodied-agents

Embodied Agents is a toolkit for integrating large multi-modal models into existing robot stacks with just a few lines of code. It provides consistency, reliability, scalability, and is configurable to any observation and action space. The toolkit is designed to reduce complexities involved in setting up inference endpoints, converting between different model formats, and collecting/storing datasets. It aims to facilitate data collection and sharing among roboticists by providing Python-first abstractions that are modular, extensible, and applicable to a wide range of tasks. The toolkit supports asynchronous and remote thread-safe agent execution for maximal responsiveness and scalability, and is compatible with various APIs like HuggingFace Spaces, Datasets, Gymnasium Spaces, Ollama, and OpenAI. It also offers automatic dataset recording and optional uploads to the HuggingFace hub.

exospherehost

Exosphere is an open source infrastructure designed to run AI agents at scale for large data and long running flows. It allows developers to define plug and playable nodes that can be run on a reliable backbone in the form of a workflow, with features like dynamic state creation at runtime, infinite parallel agents, persistent state management, and failure handling. This enables the deployment of production agents that can scale beautifully to build robust autonomous AI workflows.

kwaak

Kwaak is a tool that allows users to run a team of autonomous AI agents locally from their own machine. It enables users to write code, improve test coverage, update documentation, and enhance code quality while focusing on building innovative projects. Kwaak is designed to run multiple agents in parallel, interact with codebases, answer questions about code, find examples, write and execute code, create pull requests, and more. It is free and open-source, allowing users to bring their own API keys or models via Ollama. Kwaak is part of the bosun.ai project, aiming to be a platform for autonomous code improvement.

AgentFly

AgentFly is an extensible framework for building LLM agents with reinforcement learning. It supports multi-turn training by adapting traditional RL methods with token-level masking. It features a decorator-based interface for defining tools and reward functions, enabling seamless extension and ease of use. To support high-throughput training, it implemented asynchronous execution of tool calls and reward computations, and designed a centralized resource management system for scalable environment coordination. A suite of prebuilt tools and environments are provided.

memobase

Memobase is a user profile-based memory system designed to enhance Generative AI applications by enabling them to remember, understand, and evolve with users. It provides structured user profiles, scalable profiling, easy integration with existing LLM stacks, batch processing for speed, and is production-ready. Users can manage users, insert data, get memory profiles, and track user preferences and behaviors. Memobase is ideal for applications that require user analysis, tracking, and personalized interactions.

gfm-rag

The GFM-RAG is a graph foundation model-powered pipeline that combines graph neural networks to reason over knowledge graphs and retrieve relevant documents for question answering. It features a knowledge graph index, efficiency in multi-hop reasoning, generalizability to unseen datasets, transferability for fine-tuning, compatibility with agent-based frameworks, and interpretability of reasoning paths. The tool can be used for conducting retrieval and question answering tasks using pre-trained models or fine-tuning on custom datasets.

marqo

Marqo is more than a vector database, it's an end-to-end vector search engine for both text and images. Vector generation, storage and retrieval are handled out of the box through a single API. No need to bring your own embeddings.

swe-rl

SWE-RL is the official codebase for the paper 'SWE-RL: Advancing LLM Reasoning via Reinforcement Learning on Open Software Evolution'. It is the first approach to scale reinforcement learning based LLM reasoning for real-world software engineering, leveraging open-source software evolution data and rule-based rewards. The code provides prompt templates and the implementation of the reward function based on sequence similarity. Agentless Mini, a part of SWE-RL, builds on top of Agentless with improvements like fast async inference, code refactoring for scalability, and support for using multiple reproduction tests for reranking. The tool can be used for localization, repair, and reproduction test generation in software engineering tasks.

agentlang

AgentLang is an open-source programming language and framework designed for solving complex tasks with the help of AI agents. It allows users to build business applications rapidly from high-level specifications, making it more efficient than traditional programming languages. The language is data-oriented and declarative, with a syntax that is intuitive and closer to natural languages. AgentLang introduces innovative concepts such as first-class AI agents, graph-based hierarchical data model, zero-trust programming, declarative dataflow, resolvers, interceptors, and entity-graph-database mapping.

lionagi

LionAGI is a powerful intelligent workflow automation framework that introduces advanced ML models into any existing workflows and data infrastructure. It can interact with almost any model, run interactions in parallel for most models, produce structured pydantic outputs with flexible usage, automate workflow via graph based agents, use advanced prompting techniques, and more. LionAGI aims to provide a centralized agent-managed framework for "ML-powered tools coordination" and to dramatically lower the barrier of entries for creating use-case/domain specific tools. It is designed to be asynchronous only and requires Python 3.10 or higher.

bigcodebench

BigCodeBench is an easy-to-use benchmark for code generation with practical and challenging programming tasks. It aims to evaluate the true programming capabilities of large language models (LLMs) in a more realistic setting. The benchmark is designed for HumanEval-like function-level code generation tasks, but with much more complex instructions and diverse function calls. BigCodeBench focuses on the evaluation of LLM4Code with diverse function calls and complex instructions, providing precise evaluation & ranking and pre-generated samples to accelerate code intelligence research. It inherits the design of the EvalPlus framework but differs in terms of execution environment and test evaluation.

flow-prompt

Flow Prompt is a dynamic library for managing and optimizing prompts for large language models. It facilitates budget-aware operations, dynamic data integration, and efficient load distribution. Features include CI/CD testing, dynamic prompt development, multi-model support, real-time insights, and prompt testing and evolution.

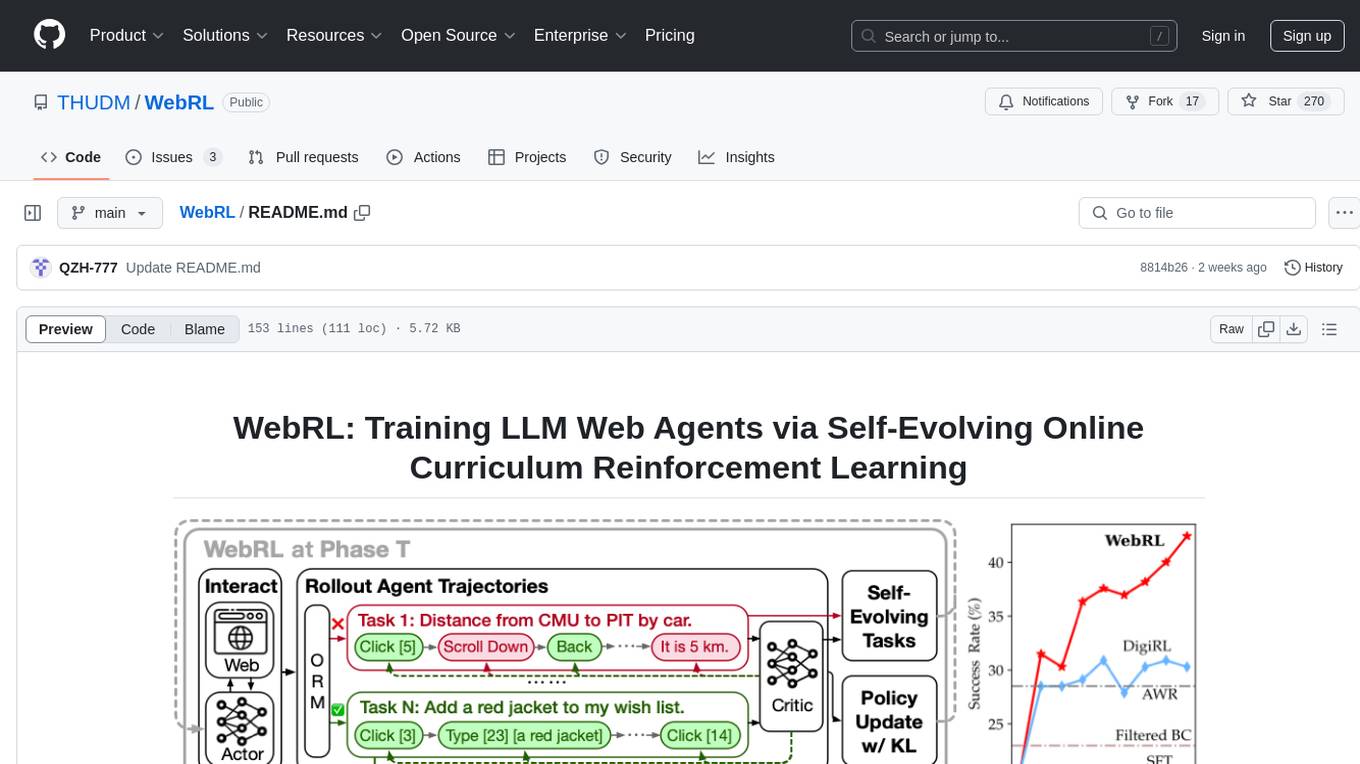

WebRL

WebRL is a self-evolving online curriculum learning framework designed for training web agents in the WebArena environment. It provides model checkpoints, training instructions, and evaluation processes for training the actor and critic models. The tool enables users to generate new instructions and interact with WebArena to configure tasks for training and evaluation.

curator

Bespoke Curator is an open-source tool for data curation and structured data extraction. It provides a Python library for generating synthetic data at scale, with features like programmability, performance optimization, caching, and integration with HuggingFace Datasets. The tool includes a Curator Viewer for dataset visualization and offers a rich set of functionalities for creating and refining data generation strategies.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

For similar tasks

Tools4AI

Tools4AI is a Java-based Agentic Framework for building AI agents to integrate with enterprise Java applications. It enables the conversion of natural language prompts into actionable behaviors, streamlining user interactions with complex systems. By leveraging AI capabilities, it enhances productivity and innovation across diverse applications. The framework allows for seamless integration of AI with various systems, such as customer service applications, to interpret user requests, trigger actions, and streamline workflows. Prompt prediction anticipates user actions based on input prompts, enhancing user experience by proactively suggesting relevant actions or services based on context.

AirGym

AirGym is an open source Python quadrotor simulator based on IsaacGym, providing a high-fidelity dynamics and Deep Reinforcement Learning (DRL) framework for quadrotor robot learning research. It offers a lightweight and customizable platform with strict alignment with PX4 logic, multiple control modes, and Sim-to-Real toolkits. Users can perform tasks such as Hovering, Balloon, Tracking, Avoid, and Planning, with the ability to create customized environments and tasks. The tool also supports training from scratch, visual encoding approaches, playing and testing of trained models, and customization of new tasks and assets.

ezlocalai

ezlocalai is an artificial intelligence server that simplifies running multimodal AI models locally. It handles model downloading and server configuration based on hardware specs. It offers OpenAI Style endpoints for integration, voice cloning, text-to-speech, voice-to-text, and offline image generation. Users can modify environment variables for customization. Supports NVIDIA GPU and CPU setups. Provides demo UI and workflow visualization for easy usage.

BotSharp-UI

BotSharp UI is a web app for managing agents and conversations. It allows users to build new AI assistants quickly using a Node-based Agent building experience. The project is written in SvelteKit v2 and utilizes BotSharp as the LLM services.

stable-diffusion-webui

Stable Diffusion WebUI Docker Image allows users to run Automatic1111 WebUI in a docker container locally or in the cloud. The images do not bundle models or third-party configurations, requiring users to use a provisioning script for container configuration. It supports NVIDIA CUDA, AMD ROCm, and CPU platforms, with additional environment variables for customization and pre-configured templates for Vast.ai and Runpod.io. The service is password protected by default, with options for version pinning, startup flags, and service management using supervisorctl.

ai-accelerator

The AI Accelerator project source code is designed to initialize an OpenShift cluster with a recommended set of operators and components for training, deploying, serving, and monitoring Machine Learning models. It provides core OpenShift features for Data Science environments and can be customized for specific scenarios. The project automates IT infrastructure using GitOps practices, including Git, code review, and CI/CD. ArgoCD Application objects are used to manage the installation of operators on the cluster.

vllm

vLLM is a fast and easy-to-use library for LLM inference and serving. It is designed to be efficient, flexible, and easy to use. vLLM can be used to serve a variety of LLM models, including Hugging Face models. It supports a variety of decoding algorithms, including parallel sampling, beam search, and more. vLLM also supports tensor parallelism for distributed inference and streaming outputs. It is open-source and available on GitHub.

bce-qianfan-sdk

The Qianfan SDK provides best practices for large model toolchains, allowing AI workflows and AI-native applications to access the Qianfan large model platform elegantly and conveniently. The core capabilities of the SDK include three parts: large model reasoning, large model training, and general and extension: * `Large model reasoning`: Implements interface encapsulation for reasoning of Yuyan (ERNIE-Bot) series, open source large models, etc., supporting dialogue, completion, Embedding, etc. * `Large model training`: Based on platform capabilities, it supports end-to-end large model training process, including training data, fine-tuning/pre-training, and model services. * `General and extension`: General capabilities include common AI development tools such as Prompt/Debug/Client. The extension capability is based on the characteristics of Qianfan to adapt to common middleware frameworks.

For similar jobs

Autono

A highly robust autonomous agent framework based on the ReAct paradigm, designed for adaptive decision making and multi-agent collaboration. It dynamically generates next actions during agent execution, enhancing robustness. Features a timely abandonment strategy and memory transfer mechanism for multi-agent collaboration. The framework allows developers to balance conservative and exploratory tendencies in agent execution strategies, improving adaptability and task execution efficiency in complex environments. Supports external tool integration, modular design, and MCP protocol compatibility for flexible action space expansion. Multi-agent collaboration mechanism enables agents to focus on specific task components, improving execution efficiency and quality.

ProjectAirSim

Project AirSim is a simulation platform for drones, robots, and autonomous systems. Leveraging Unreal Engine 5, it provides photo-realistic visuals and a simulation framework for custom physics, controllers, actuators, and sensors. It consists of three main layers: Sim Libs, Plugin, and Client Library. It supports Windows 11 and Ubuntu 22, inviting collaboration and enterprise support. Users can join the community, contribute to the roadmap, and get started with pre-built binaries or building from source. It offers headless running options and references for configuration settings, API, controllers, sensors, scene, physics, and FAQ.

AirGym

AirGym is an open source Python quadrotor simulator based on IsaacGym, providing a high-fidelity dynamics and Deep Reinforcement Learning (DRL) framework for quadrotor robot learning research. It offers a lightweight and customizable platform with strict alignment with PX4 logic, multiple control modes, and Sim-to-Real toolkits. Users can perform tasks such as Hovering, Balloon, Tracking, Avoid, and Planning, with the ability to create customized environments and tasks. The tool also supports training from scratch, visual encoding approaches, playing and testing of trained models, and customization of new tasks and assets.

Awesome-Trustworthy-Embodied-AI

The Awesome Trustworthy Embodied AI repository focuses on the development of safe and trustworthy Embodied Artificial Intelligence (EAI) systems. It addresses critical challenges related to safety and trustworthiness in EAI, proposing a unified research framework and defining levels of safety and resilience. The repository provides a comprehensive review of state-of-the-art solutions, benchmarks, and evaluation metrics, aiming to bridge the gap between capability advancement and safety mechanisms in EAI development.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.