vllm

A high-throughput and memory-efficient inference and serving engine for LLMs

Stars: 59085

vLLM is a fast and easy-to-use library for LLM inference and serving. It is designed to be efficient, flexible, and easy to use. vLLM can be used to serve a variety of LLM models, including Hugging Face models. It supports a variety of decoding algorithms, including parallel sampling, beam search, and more. vLLM also supports tensor parallelism for distributed inference and streaming outputs. It is open-source and available on GitHub.

README:

| Documentation | Blog | Paper | Twitter/X | User Forum | Developer Slack |

Join us at the PyTorch Conference, October 22-23 and Ray Summit, November 3-5 in San Francisco for our latest updates on vLLM and to meet the vLLM team! Register now for the largest vLLM community events of the year!

Latest News 🔥

- [2025/09] We hosted vLLM Toronto Meetup focused on tackling inference at scale and speculative decoding with speakers from NVIDIA and Red Hat! Please find the meetup slides here.

- [2025/08] We hosted vLLM Shenzhen Meetup focusing on the ecosystem around vLLM! Please find the meetup slides here.

- [2025/08] We hosted vLLM Singapore Meetup. We shared V1 updates, disaggregated serving and MLLM speedups with speakers from Embedded LLM, AMD, WekaIO, and A*STAR. Please find the meetup slides here.

- [2025/08] We hosted vLLM Shanghai Meetup focusing on building, developing, and integrating with vLLM! Please find the meetup slides here.

- [2025/05] vLLM is now a hosted project under PyTorch Foundation! Please find the announcement here.

- [2025/01] We are excited to announce the alpha release of vLLM V1: A major architectural upgrade with 1.7x speedup! Clean code, optimized execution loop, zero-overhead prefix caching, enhanced multimodal support, and more. Please check out our blog post here.

Previous News

- [2025/08] We hosted vLLM Korea Meetup with Red Hat and Rebellions! We shared the latest advancements in vLLM along with project spotlights from the vLLM Korea community. Please find the meetup slides here.

- [2025/08] We hosted vLLM Beijing Meetup focusing on large-scale LLM deployment! Please find the meetup slides here and the recording here.

- [2025/05] We hosted NYC vLLM Meetup! Please find the meetup slides here.

- [2025/04] We hosted Asia Developer Day! Please find the meetup slides from the vLLM team here.

- [2025/03] We hosted vLLM x Ollama Inference Night! Please find the meetup slides from the vLLM team here.

- [2025/03] We hosted the first vLLM China Meetup! Please find the meetup slides from vLLM team here.

- [2025/03] We hosted the East Coast vLLM Meetup! Please find the meetup slides here.

- [2025/02] We hosted the ninth vLLM meetup with Meta! Please find the meetup slides from vLLM team here and AMD here. The slides from Meta will not be posted.

- [2025/01] We hosted the eighth vLLM meetup with Google Cloud! Please find the meetup slides from vLLM team here, and Google Cloud team here.

- [2024/12] vLLM joins pytorch ecosystem! Easy, Fast, and Cheap LLM Serving for Everyone!

- [2024/11] We hosted the seventh vLLM meetup with Snowflake! Please find the meetup slides from vLLM team here, and Snowflake team here.

- [2024/10] We have just created a developer slack (slack.vllm.ai) focusing on coordinating contributions and discussing features. Please feel free to join us there!

- [2024/10] Ray Summit 2024 held a special track for vLLM! Please find the opening talk slides from the vLLM team here. Learn more from the talks from other vLLM contributors and users!

- [2024/09] We hosted the sixth vLLM meetup with NVIDIA! Please find the meetup slides here.

- [2024/07] We hosted the fifth vLLM meetup with AWS! Please find the meetup slides here.

- [2024/07] In partnership with Meta, vLLM officially supports Llama 3.1 with FP8 quantization and pipeline parallelism! Please check out our blog post here.

- [2024/06] We hosted the fourth vLLM meetup with Cloudflare and BentoML! Please find the meetup slides here.

- [2024/04] We hosted the third vLLM meetup with Roblox! Please find the meetup slides here.

- [2024/01] We hosted the second vLLM meetup with IBM! Please find the meetup slides here.

- [2023/10] We hosted the first vLLM meetup with a16z! Please find the meetup slides here.

- [2023/08] We would like to express our sincere gratitude to Andreessen Horowitz (a16z) for providing a generous grant to support the open-source development and research of vLLM.

- [2023/06] We officially released vLLM! FastChat-vLLM integration has powered LMSYS Vicuna and Chatbot Arena since mid-April. Check out our blog post.

vLLM is a fast and easy-to-use library for LLM inference and serving.

Originally developed in the Sky Computing Lab at UC Berkeley, vLLM has evolved into a community-driven project with contributions from both academia and industry.

vLLM is fast with:

- State-of-the-art serving throughput

- Efficient management of attention key and value memory with PagedAttention

- Continuous batching of incoming requests

- Fast model execution with CUDA/HIP graph

- Quantizations: GPTQ, AWQ, AutoRound, INT4, INT8, and FP8

- Optimized CUDA kernels, including integration with FlashAttention and FlashInfer

- Speculative decoding

- Chunked prefill

vLLM is flexible and easy to use with:

- Seamless integration with popular Hugging Face models

- High-throughput serving with various decoding algorithms, including parallel sampling, beam search, and more

- Tensor, pipeline, data and expert parallelism support for distributed inference

- Streaming outputs

- OpenAI-compatible API server

- Support for NVIDIA GPUs, AMD CPUs and GPUs, Intel CPUs and GPUs, PowerPC CPUs, and TPU. Additionally, support for diverse hardware plugins such as Intel Gaudi, IBM Spyre and Huawei Ascend.

- Prefix caching support

- Multi-LoRA support

vLLM seamlessly supports most popular open-source models on HuggingFace, including:

- Transformer-like LLMs (e.g., Llama)

- Mixture-of-Expert LLMs (e.g., Mixtral, Deepseek-V2 and V3)

- Embedding Models (e.g., E5-Mistral)

- Multi-modal LLMs (e.g., LLaVA)

Find the full list of supported models here.

Install vLLM with pip or from source:

pip install vllmVisit our documentation to learn more.

We welcome and value any contributions and collaborations. Please check out Contributing to vLLM for how to get involved.

vLLM is a community project. Our compute resources for development and testing are supported by the following organizations. Thank you for your support!

Cash Donations:

- a16z

- Dropbox

- Sequoia Capital

- Skywork AI

- ZhenFund

Compute Resources:

- Alibaba Cloud

- AMD

- Anyscale

- AWS

- Crusoe Cloud

- Databricks

- DeepInfra

- Google Cloud

- Intel

- Lambda Lab

- Nebius

- Novita AI

- NVIDIA

- Replicate

- Roblox

- RunPod

- Trainy

- UC Berkeley

- UC San Diego

Slack Sponsor: Anyscale

We also have an official fundraising venue through OpenCollective. We plan to use the fund to support the development, maintenance, and adoption of vLLM.

If you use vLLM for your research, please cite our paper:

@inproceedings{kwon2023efficient,

title={Efficient Memory Management for Large Language Model Serving with PagedAttention},

author={Woosuk Kwon and Zhuohan Li and Siyuan Zhuang and Ying Sheng and Lianmin Zheng and Cody Hao Yu and Joseph E. Gonzalez and Hao Zhang and Ion Stoica},

booktitle={Proceedings of the ACM SIGOPS 29th Symposium on Operating Systems Principles},

year={2023}

}- For technical questions and feature requests, please use GitHub Issues

- For discussing with fellow users, please use the vLLM Forum

- For coordinating contributions and development, please use Slack

- For security disclosures, please use GitHub's Security Advisories feature

- For collaborations and partnerships, please contact us at [email protected]

- If you wish to use vLLM's logo, please refer to our media kit repo

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for vllm

Similar Open Source Tools

vllm

vLLM is a fast and easy-to-use library for LLM inference and serving. It is designed to be efficient, flexible, and easy to use. vLLM can be used to serve a variety of LLM models, including Hugging Face models. It supports a variety of decoding algorithms, including parallel sampling, beam search, and more. vLLM also supports tensor parallelism for distributed inference and streaming outputs. It is open-source and available on GitHub.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

LightLLM

LightLLM is a lightweight library for linear and logistic regression models. It provides a simple and efficient way to train and deploy machine learning models for regression tasks. The library is designed to be easy to use and integrate into existing projects, making it suitable for both beginners and experienced data scientists. With LightLLM, users can quickly build and evaluate regression models using a variety of algorithms and hyperparameters. The library also supports feature engineering and model interpretation, allowing users to gain insights from their data and make informed decisions based on the model predictions.

ml-retreat

ML-Retreat is a comprehensive machine learning library designed to simplify and streamline the process of building and deploying machine learning models. It provides a wide range of tools and utilities for data preprocessing, model training, evaluation, and deployment. With ML-Retreat, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to optimize their models. The library is built with a focus on scalability, performance, and ease of use, making it suitable for both beginners and experienced machine learning practitioners.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

Fast-LLM

Fast-LLM is an open-source library designed for training large language models with exceptional speed, scalability, and flexibility. Built on PyTorch and Triton, it offers optimized kernel efficiency, reduced overheads, and memory usage, making it suitable for training models of all sizes. The library supports distributed training across multiple GPUs and nodes, offers flexibility in model architectures, and is easy to use with pre-built Docker images and simple configuration. Fast-LLM is licensed under Apache 2.0, developed transparently on GitHub, and encourages contributions and collaboration from the community.

Awesome-Efficient-MoE

Awesome Efficient MoE is a GitHub repository that provides an implementation of Mixture of Experts (MoE) models for efficient deep learning. The repository includes code for training and using MoE models, which are neural network architectures that combine multiple expert networks to improve performance on complex tasks. MoE models are particularly useful for handling diverse data distributions and capturing complex patterns in data. The implementation in this repository is designed to be efficient and scalable, making it suitable for training large-scale MoE models on modern hardware. The code is well-documented and easy to use, making it accessible for researchers and practitioners interested in leveraging MoE models for their deep learning projects.

deepteam

Deepteam is a powerful open-source tool designed for deep learning projects. It provides a user-friendly interface for training, testing, and deploying deep neural networks. With Deepteam, users can easily create and manage complex models, visualize training progress, and optimize hyperparameters. The tool supports various deep learning frameworks and allows seamless integration with popular libraries like TensorFlow and PyTorch. Whether you are a beginner or an experienced deep learning practitioner, Deepteam simplifies the development process and accelerates model deployment.

DelhiLM

DelhiLM is a natural language processing tool for building and training language models. It provides a user-friendly interface for text processing tasks such as tokenization, lemmatization, and language model training. With DelhiLM, users can easily preprocess text data and train custom language models for various NLP applications. The tool supports different languages and allows for fine-tuning pre-trained models to suit specific needs. DelhiLM is designed to be flexible, efficient, and easy to use for both beginners and experienced NLP practitioners.

pdr_ai_v2

pdr_ai_v2 is a Python library for implementing machine learning algorithms and models. It provides a wide range of tools and functionalities for data preprocessing, model training, evaluation, and deployment. The library is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data scientists. With pdr_ai_v2, users can easily build and deploy machine learning models for various applications, such as classification, regression, clustering, and more.

open-webui-tools

Open WebUI Tools Collection is a set of tools for structured planning, arXiv paper search, Hugging Face text-to-image generation, prompt enhancement, and multi-model conversations. It enhances LLM interactions with academic research, image generation, and conversation management. Tools include arXiv Search Tool and Hugging Face Image Generator. Function Pipes like Planner Agent offer autonomous plan generation and execution. Filters like Prompt Enhancer improve prompt quality. Installation and configuration instructions are provided for each tool and pipe.

graphbit

GraphBit is an industry-grade agentic AI framework built for developers and AI teams that demand stability, scalability, and low resource usage. It is written in Rust for maximum performance and safety, delivering significantly lower CPU usage and memory footprint compared to leading alternatives. The framework is designed to run multi-agent workflows in parallel, persist memory across steps, recover from failures, and ensure 100% task success under load. With lightweight architecture, observability, and concurrency support, GraphBit is suitable for deployment in high-scale enterprise environments and low-resource edge scenarios.

GraphLLM

GraphLLM is a graph-based framework designed to process data using LLMs. It offers a set of tools including a web scraper, PDF parser, YouTube subtitles downloader, Python sandbox, and TTS engine. The framework provides a GUI for building and debugging graphs with advanced features like loops, conditionals, parallel execution, streaming of results, hierarchical graphs, external tool integration, and dynamic scheduling. GraphLLM is a low-level framework that gives users full control over the raw prompt and output of models, with a steeper learning curve. It is tested with llama70b and qwen 32b, under heavy development with breaking changes expected.

FLAME

FLAME is a lightweight and efficient deep learning framework designed for edge devices. It provides a simple and user-friendly interface for developing and deploying deep learning models on resource-constrained devices. With FLAME, users can easily build and optimize neural networks for tasks such as image classification, object detection, and natural language processing. The framework supports various neural network architectures and optimization techniques, making it suitable for a wide range of applications in the field of edge computing.

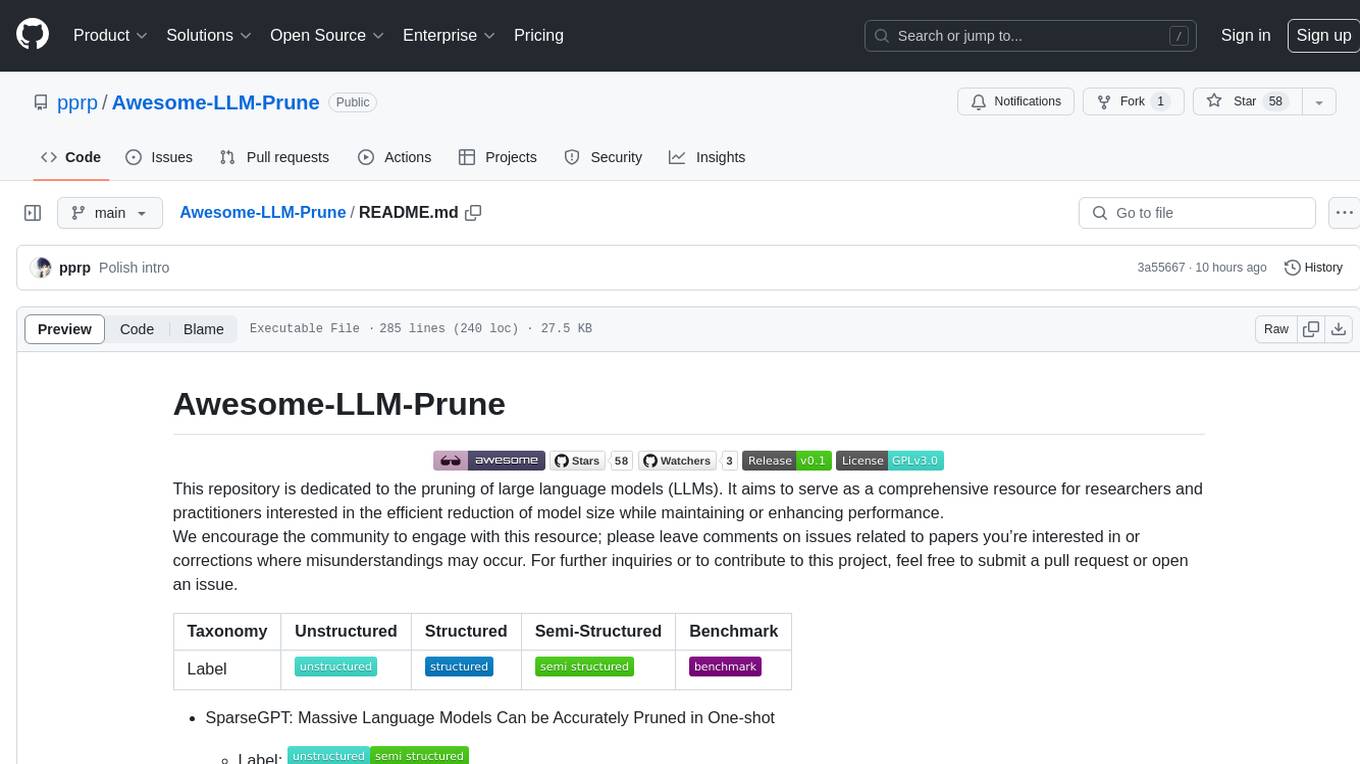

Awesome-LLM-Prune

This repository is dedicated to the pruning of large language models (LLMs). It aims to serve as a comprehensive resource for researchers and practitioners interested in the efficient reduction of model size while maintaining or enhancing performance. The repository contains various papers, summaries, and links related to different pruning approaches for LLMs, along with author information and publication details. It covers a wide range of topics such as structured pruning, unstructured pruning, semi-structured pruning, and benchmarking methods. Researchers and practitioners can explore different pruning techniques, understand their implications, and access relevant resources for further study and implementation.

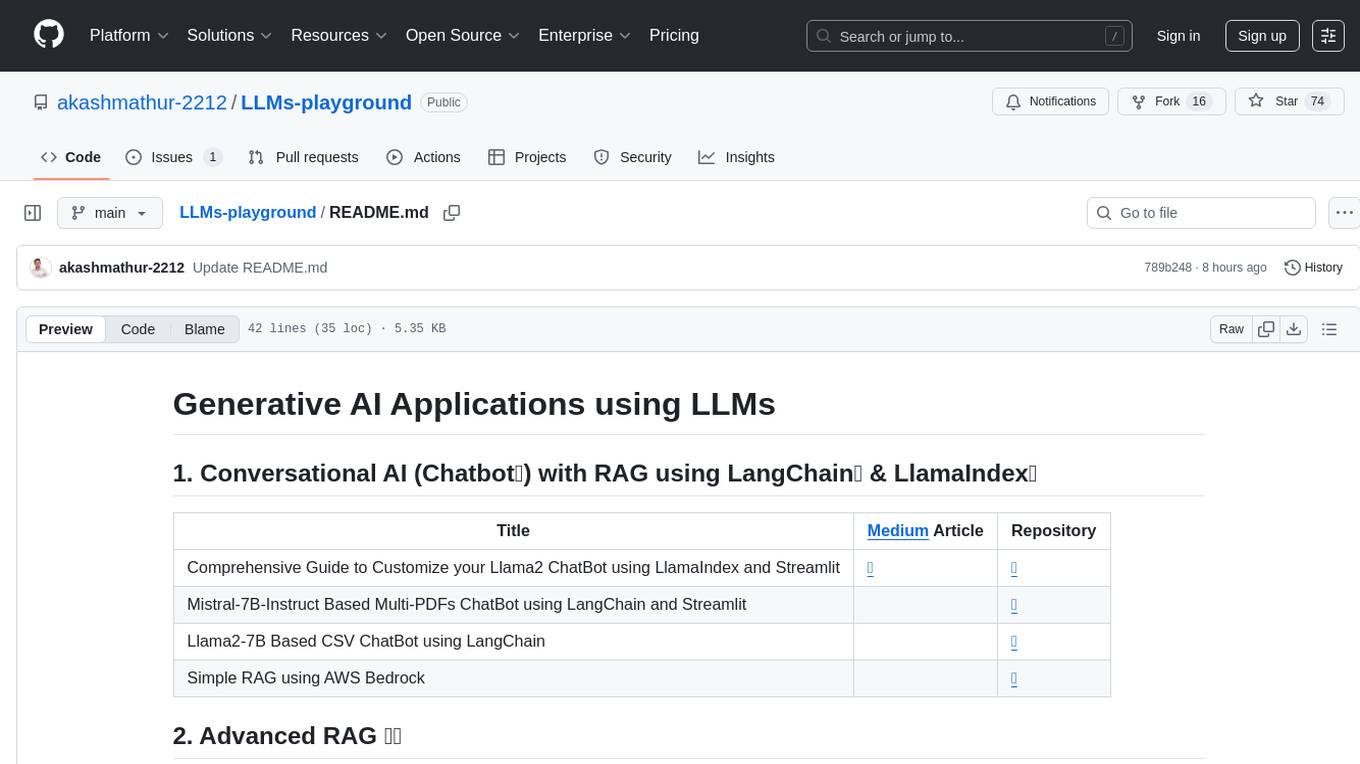

LLMs-playground

LLMs-playground is a repository containing code examples and tutorials for learning and experimenting with Large Language Models (LLMs). It provides a hands-on approach to understanding how LLMs work and how to fine-tune them for specific tasks. The repository covers various LLM architectures, pre-training techniques, and fine-tuning strategies, making it a valuable resource for researchers, students, and practitioners interested in natural language processing and machine learning. By exploring the code and following the tutorials, users can gain practical insights into working with LLMs and apply their knowledge to real-world projects.

For similar tasks

vllm

vLLM is a fast and easy-to-use library for LLM inference and serving. It is designed to be efficient, flexible, and easy to use. vLLM can be used to serve a variety of LLM models, including Hugging Face models. It supports a variety of decoding algorithms, including parallel sampling, beam search, and more. vLLM also supports tensor parallelism for distributed inference and streaming outputs. It is open-source and available on GitHub.

bce-qianfan-sdk

The Qianfan SDK provides best practices for large model toolchains, allowing AI workflows and AI-native applications to access the Qianfan large model platform elegantly and conveniently. The core capabilities of the SDK include three parts: large model reasoning, large model training, and general and extension: * `Large model reasoning`: Implements interface encapsulation for reasoning of Yuyan (ERNIE-Bot) series, open source large models, etc., supporting dialogue, completion, Embedding, etc. * `Large model training`: Based on platform capabilities, it supports end-to-end large model training process, including training data, fine-tuning/pre-training, and model services. * `General and extension`: General capabilities include common AI development tools such as Prompt/Debug/Client. The extension capability is based on the characteristics of Qianfan to adapt to common middleware frameworks.

dstack

Dstack is an open-source orchestration engine for running AI workloads in any cloud. It supports a wide range of cloud providers (such as AWS, GCP, Azure, Lambda, TensorDock, Vast.ai, CUDO, RunPod, etc.) as well as on-premises infrastructure. With Dstack, you can easily set up and manage dev environments, tasks, services, and pools for your AI workloads.

tiny-llm-zh

Tiny LLM zh is a project aimed at building a small-parameter Chinese language large model for quick entry into learning large model-related knowledge. The project implements a two-stage training process for large models and subsequent human alignment, including tokenization, pre-training, instruction fine-tuning, human alignment, evaluation, and deployment. It is deployed on ModeScope Tiny LLM website and features open access to all data and code, including pre-training data and tokenizer. The project trains a tokenizer using 10GB of Chinese encyclopedia text to build a Tiny LLM vocabulary. It supports training with Transformers deepspeed, multiple machine and card support, and Zero optimization techniques. The project has three main branches: llama2_torch, main tiny_llm, and tiny_llm_moe, each with specific modifications and features.

examples

Cerebrium's official examples repository provides practical, ready-to-use examples for building Machine Learning / AI applications on the platform. The repository contains self-contained projects demonstrating specific use cases with detailed instructions on deployment. Examples cover a wide range of categories such as getting started, advanced concepts, endpoints, integrations, large language models, voice, image & video, migrations, application demos, batching, and Python apps.

HuaTuoAI

HuaTuoAI is an artificial intelligence image classification system specifically designed for traditional Chinese medicine. It utilizes deep learning techniques, such as Convolutional Neural Networks (CNN), to accurately classify Chinese herbs and ingredients based on input images. The project aims to unlock the secrets of plants, depict the unknown realm of Chinese medicine using technology and intelligence, and perpetuate ancient cultural heritage.

vector-inference

This repository provides an easy-to-use solution for running inference servers on Slurm-managed computing clusters using vLLM. All scripts in this repository run natively on the Vector Institute cluster environment. Users can deploy models as Slurm jobs, check server status and performance metrics, and shut down models. The repository also supports launching custom models with specific configurations. Additionally, users can send inference requests and set up an SSH tunnel to run inference from a local device.

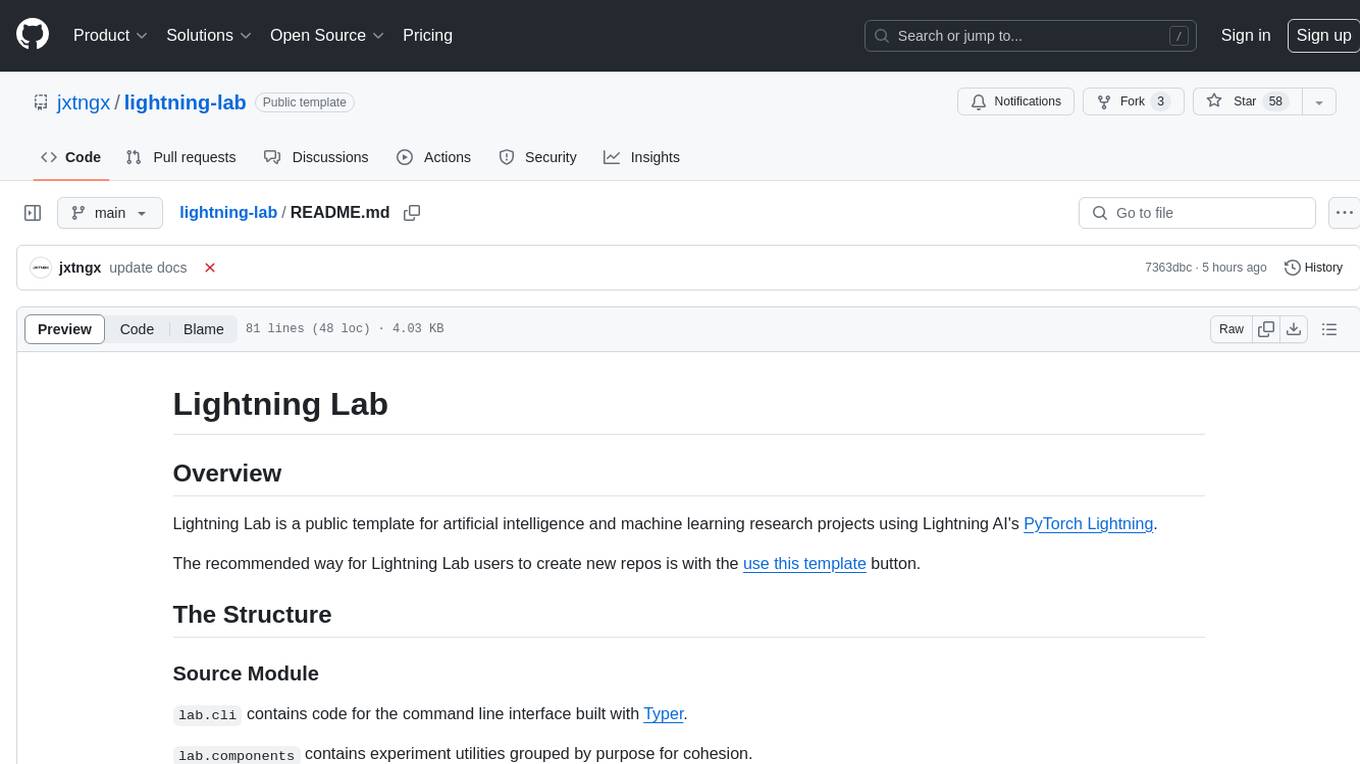

lightning-lab

Lightning Lab is a public template for artificial intelligence and machine learning research projects using Lightning AI's PyTorch Lightning. It provides a structured project layout with modules for command line interface, experiment utilities, Lightning Module and Trainer, data acquisition and preprocessing, model serving APIs, project configurations, training checkpoints, technical documentation, logs, notebooks for data analysis, requirements management, testing, and packaging. The template simplifies the setup of deep learning projects and offers extras for different domains like vision, text, audio, reinforcement learning, and forecasting.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.