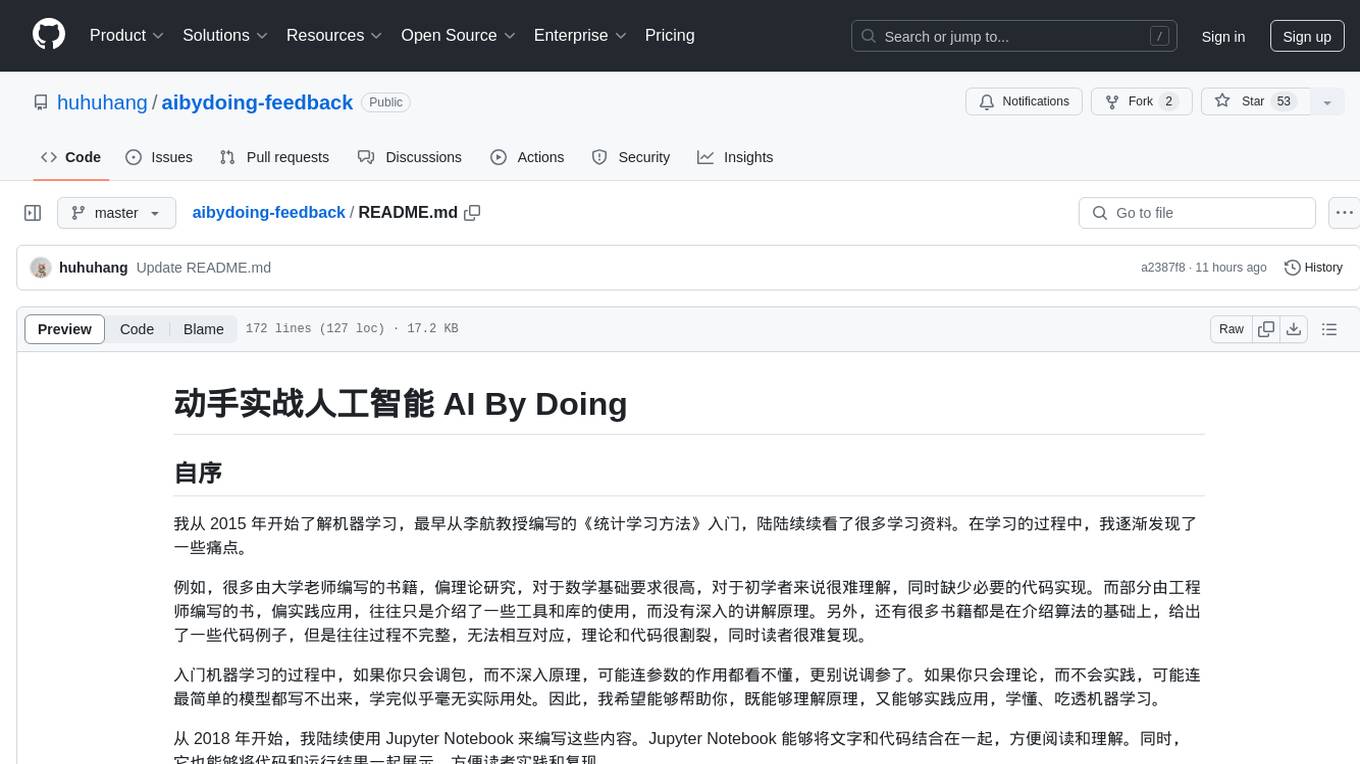

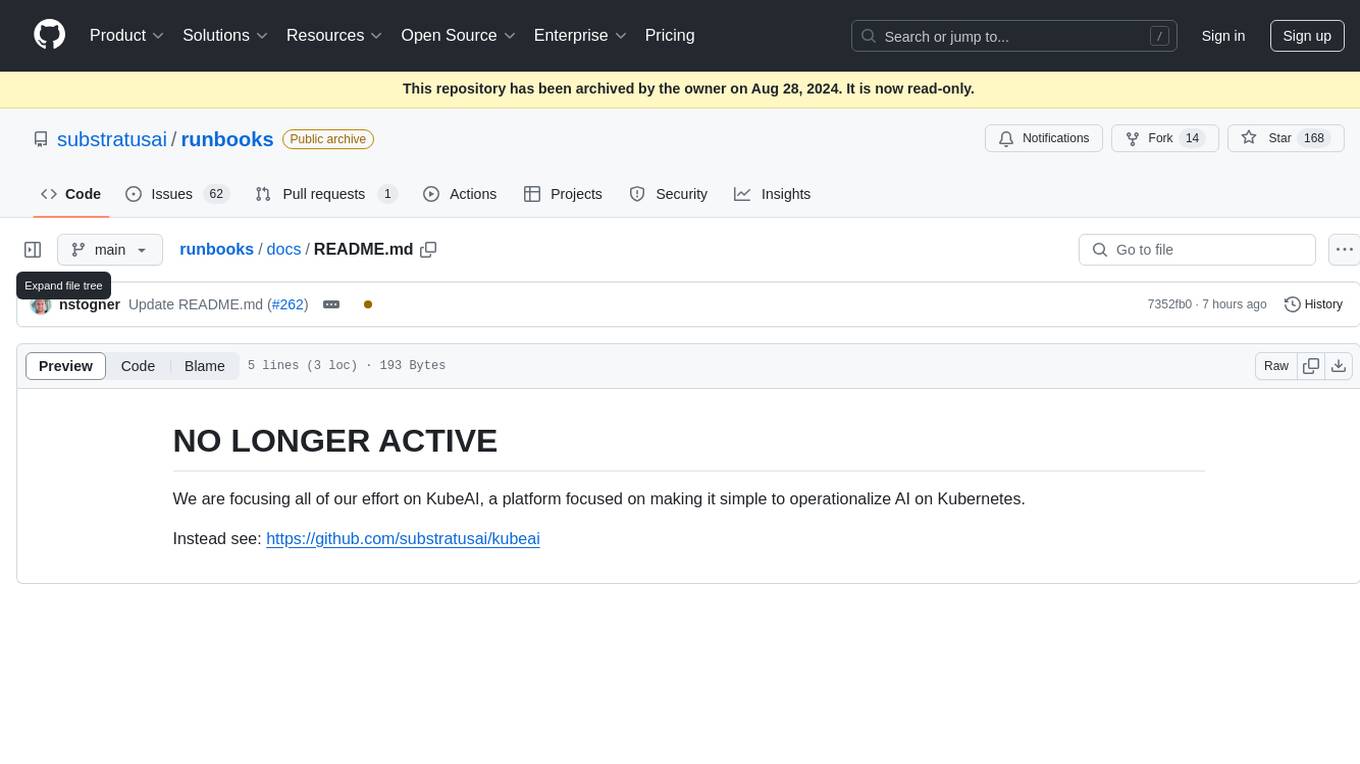

runbooks

Finetune LLMs on K8s by using Runbooks

Stars: 168

Runbooks is a repository that is no longer active. The project has been deprecated in favor of KubeAI, a platform designed to simplify the operationalization of AI on Kubernetes. For more information, please refer to the new repository at https://github.com/substratusai/kubeai.

README:

We are focusing all of our effort on KubeAI, a platform focused on making it simple to operationalize AI on Kubernetes.

Instead see: https://github.com/substratusai/kubeai

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for runbooks

Similar Open Source Tools

runbooks

Runbooks is a repository that is no longer active. The project has been deprecated in favor of KubeAI, a platform designed to simplify the operationalization of AI on Kubernetes. For more information, please refer to the new repository at https://github.com/substratusai/kubeai.

dust

Dust is a platform that provides customizable and secure AI assistants to amplify your team's potential. With Dust, you can build and deploy AI assistants that are tailored to your specific needs, without the need for extensive technical expertise. Dust's platform is easy to use and provides a variety of features to help you get started quickly, including a library of pre-built blocks, a developer platform, and an API reference.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

foundationallm

FoundationaLLM is a platform designed for deploying, scaling, securing, and governing generative AI in enterprises. It allows users to create AI agents grounded in enterprise data, integrate REST APIs, experiment with large language models, centrally manage AI agents and assets, deploy scalable vectorization data pipelines, enable non-developer users to create their own AI agents, control access with role-based access controls, and harness capabilities from Azure AI and Azure OpenAI. The platform simplifies integration with enterprise data sources, provides fine-grain security controls, load balances across multiple endpoints, and is extensible to new data sources and orchestrators. FoundationaLLM addresses the need for customized copilots or AI agents that are secure, licensed, flexible, and suitable for enterprise-scale production.

metaflow

Metaflow is a user-friendly library designed to assist scientists and engineers in developing and managing real-world data science projects. Initially created at Netflix, Metaflow aimed to enhance the productivity of data scientists working on diverse projects ranging from traditional statistics to cutting-edge deep learning. For further information, refer to Metaflow's website and documentation.

intelligent-app-workshop

Welcome to the envisioning workshop designed to help you build your own custom Copilot using Microsoft's Copilot stack. This workshop aims to rethink user experience, architecture, and app development by leveraging reasoning engines and semantic memory systems. You will utilize Azure AI Foundry, Prompt Flow, AI Search, and Semantic Kernel. Work with Miyagi codebase, explore advanced capabilities like AutoGen and GraphRag. This workshop guides you through the entire lifecycle of app development, including identifying user needs, developing a production-grade app, and deploying on Azure with advanced capabilities. By the end, you will have a deeper understanding of leveraging Microsoft's tools to create intelligent applications.

MaiBot

MaiBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, with LLM providing conversation abilities, MongoDB for data persistence support, and NapCat as the QQ protocol endpoint support. The project is in active development stage, with features like chat functionality, emoji functionality, schedule management, memory function, knowledge base function, and relationship function planned for future updates. The project aims to create a 'life form' active in QQ group chats, focusing on companionship and creating a more human-like presence rather than a perfect assistant. The application generates content from AI models, so users are advised to discern carefully and not use it for illegal purposes.

dioptra

Dioptra is a software test platform for assessing the trustworthy characteristics of artificial intelligence (AI). It supports the NIST AI Risk Management Framework by providing functionality to assess, analyze, and track identified AI risks. Dioptra provides a REST API and can be controlled via a web interface or Python client for designing, managing, executing, and tracking experiments. It aims to be reproducible, traceable, extensible, interoperable, modular, secure, interactive, shareable, and reusable.

gdx-ai

An artificial intelligence framework entirely written in Java for game development with libGDX. It is a high-performance framework providing common AI techniques used in the game industry, covering movement AI, pathfinding, decision making, and infrastructure. The framework is designed to be used with libGDX but can be used independently. Current features include steering behaviors, formation motion, A* pathfinding, hierarchical pathfinding, behavior trees, state machine, message handling, and scheduling.

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.

InferenceMAX

InferenceMAX™ is an open-source benchmarking tool designed to track real-time performance improvements in popular open-source inference frameworks and models. It runs a suite of benchmarks every night to capture progress in near real-time, providing a live indicator of inference performance. The tool addresses the challenge of rapidly evolving software ecosystems by benchmarking the latest software packages, ensuring that benchmarks do not go stale. InferenceMAX™ is supported by industry leaders and contributors, providing transparent and reproducible benchmarks that help the ML community make informed decisions about hardware and software performance.

husky

Husky is a research-focused programming language designed for next-generation computing. It aims to provide a powerful and ergonomic development experience for various tasks, including system level programming, web/native frontend development, parser/compiler tasks, game development, formal verification, machine learning, and more. With a strong type system and support for human-in-the-loop programming, Husky enables users to tackle complex tasks such as explainable image classification, natural language processing, and reinforcement learning. The language prioritizes debugging, visualization, and human-computer interaction, offering agile compilation and evaluation, multiparadigm support, and a commitment to a good ecosystem.

GenAI-Showcase

The Generative AI Use Cases Repository showcases a wide range of applications in generative AI, including Retrieval-Augmented Generation (RAG), AI Agents, and industry-specific use cases. It provides practical notebooks and guidance on utilizing frameworks such as LlamaIndex and LangChain, and demonstrates how to integrate models from leading AI research companies like Anthropic and OpenAI.

AI-Engineer-Headquarters

AI Engineer Headquarters is a comprehensive learning resource designed to help individuals master scientific methods, processes, algorithms, and systems to build stories and models in the field of Data and AI. The repository provides in-depth content through video sessions and text materials, catering to individuals aspiring to be in the top 1% of Data and AI experts. It covers various topics such as AI engineering foundations, large language models, retrieval-augmented generation, fine-tuning LLMs, reinforcement learning, ethical AI, agentic workflows, and career acceleration. The learning approach emphasizes action-oriented drills and routines, encouraging consistent effort and dedication to excel in the AI field.

ai_gallery

AI Gallery is a showcase site built using React and Nextjs for static site generation, featuring interactive visualizations of classic algorithms, classic games implementation, and various interesting widgets. The project utilizes AI assistance from Claude 3.5 and GPT-4 to create components and enhance the development process. It aims to continually add more components with AI assistance, providing a platform for contributors to leverage AI in frontend development.

PentestGPT

PentestGPT provides advanced AI and integrated tools to help security teams conduct comprehensive penetration tests effortlessly. Scan, exploit, and analyze web applications, networks, and cloud environments with ease and precision, without needing expert skills. The tool utilizes Supabase for data storage and management, and Vercel for hosting the frontend. It offers a local quickstart guide for running the tool locally and a hosted quickstart guide for deploying it in the cloud. PentestGPT aims to simplify the penetration testing process for security professionals and enthusiasts alike.

For similar tasks

runbooks

Runbooks is a repository that is no longer active. The project has been deprecated in favor of KubeAI, a platform designed to simplify the operationalization of AI on Kubernetes. For more information, please refer to the new repository at https://github.com/substratusai/kubeai.

katib

Katib is a Kubernetes-native project for automated machine learning (AutoML). Katib supports Hyperparameter Tuning, Early Stopping and Neural Architecture Search. Katib is the project which is agnostic to machine learning (ML) frameworks. It can tune hyperparameters of applications written in any language of the users’ choice and natively supports many ML frameworks, such as TensorFlow, Apache MXNet, PyTorch, XGBoost, and others. Katib can perform training jobs using any Kubernetes Custom Resources with out of the box support for Kubeflow Training Operator, Argo Workflows, Tekton Pipelines and many more.

cog

Cog is an open-source tool that lets you package machine learning models in a standard, production-ready container. You can deploy your packaged model to your own infrastructure, or to Replicate.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

web-llm

WebLLM is a modular and customizable javascript package that directly brings language model chats directly onto web browsers with hardware acceleration. Everything runs inside the browser with no server support and is accelerated with WebGPU. WebLLM is fully compatible with OpenAI API. That is, you can use the same OpenAI API on any open source models locally, with functionalities including json-mode, function-calling, streaming, etc. We can bring a lot of fun opportunities to build AI assistants for everyone and enable privacy while enjoying GPU acceleration.

data-scientist-roadmap2024

The Data Scientist Roadmap2024 provides a comprehensive guide to mastering essential tools for data science success. It includes programming languages, machine learning libraries, cloud platforms, and concepts categorized by difficulty. The roadmap covers a wide range of topics from programming languages to machine learning techniques, data visualization tools, and DevOps/MLOps tools. It also includes web development frameworks and specific concepts like supervised and unsupervised learning, NLP, deep learning, reinforcement learning, and statistics. Additionally, it delves into DevOps tools like Airflow and MLFlow, data visualization tools like Tableau and Matplotlib, and other topics such as ETL processes, optimization algorithms, and financial modeling.

aibydoing-feedback

AI By Doing is a hands-on artificial intelligence tutorial series that aims to help beginners understand the principles of machine learning and deep learning while providing practical applications. The content covers various supervised and unsupervised learning algorithms, machine learning engineering, deep learning fundamentals, frameworks like TensorFlow and PyTorch, and applications in computer vision and natural language processing. The tutorials are written in Jupyter Notebook format, combining theory, mathematical derivations, and Python code implementations to facilitate learning and understanding.

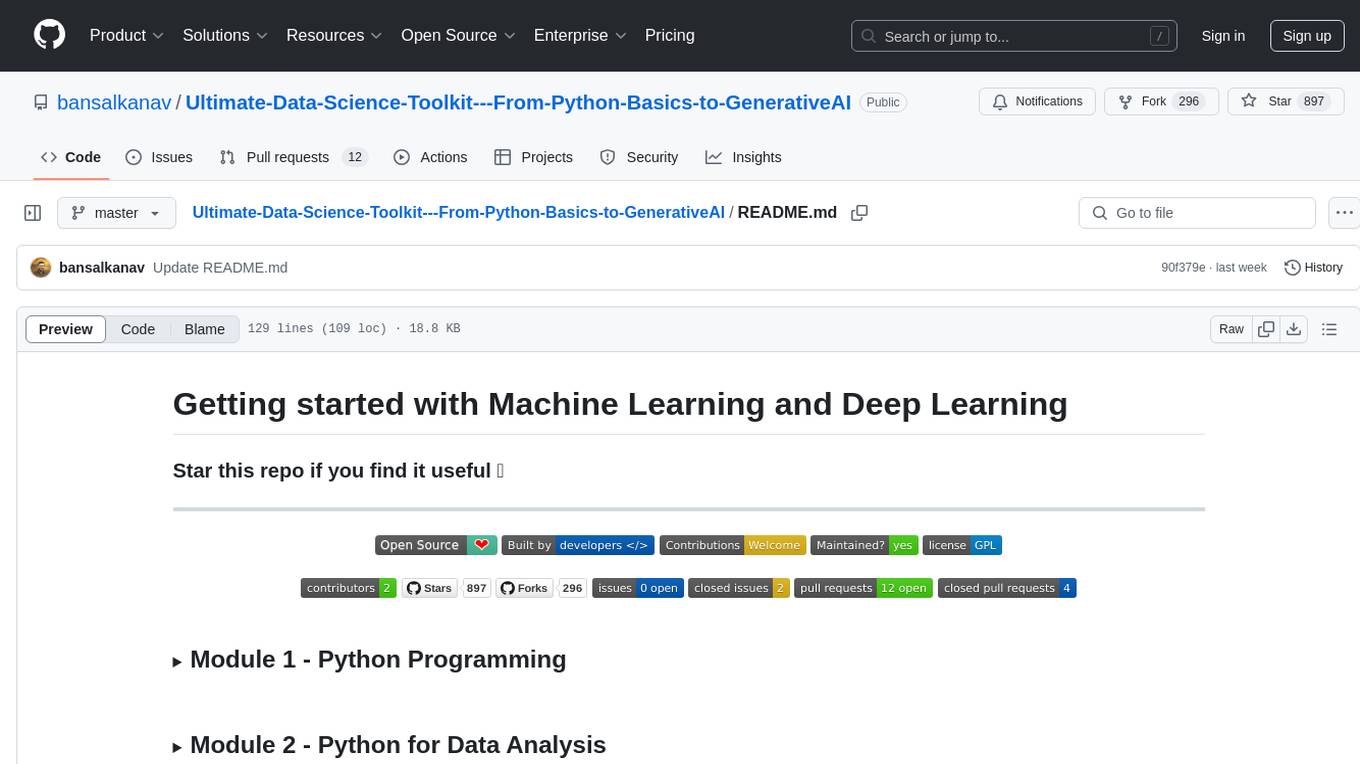

Ultimate-Data-Science-Toolkit---From-Python-Basics-to-GenerativeAI

Ultimate Data Science Toolkit is a comprehensive repository covering Python basics to Generative AI. It includes modules on Python programming, data analysis, statistics, machine learning, MLOps, case studies, and deep learning. The repository provides detailed tutorials on various topics such as Python data structures, control statements, functions, modules, object-oriented programming, exception handling, file handling, web API, databases, list comprehension, lambda functions, Pandas, Numpy, data visualization, statistical analysis, supervised and unsupervised machine learning algorithms, model serialization, ML pipeline orchestration, case studies, and deep learning concepts like neural networks and autoencoders.

For similar jobs

runbooks

Runbooks is a repository that is no longer active. The project has been deprecated in favor of KubeAI, a platform designed to simplify the operationalization of AI on Kubernetes. For more information, please refer to the new repository at https://github.com/substratusai/kubeai.

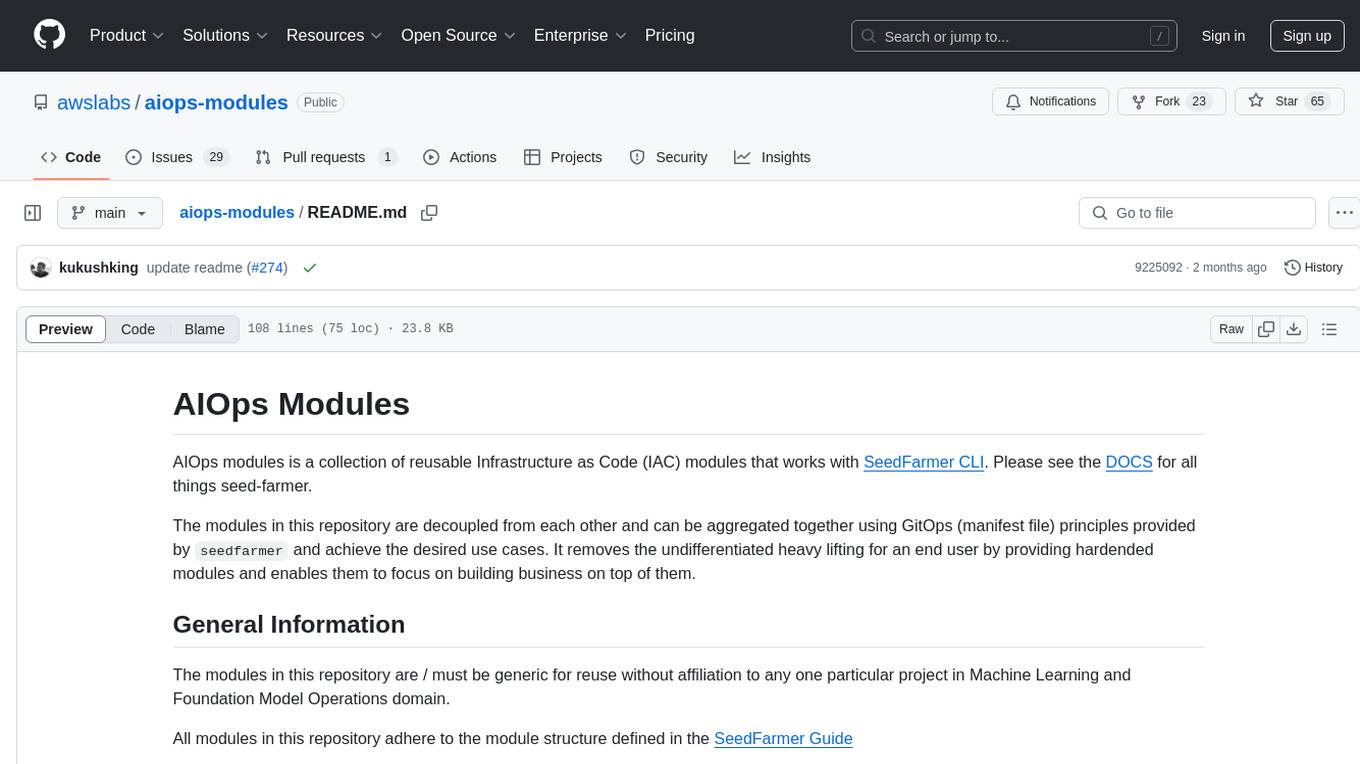

aiops-modules

AIOps Modules is a collection of reusable Infrastructure as Code (IAC) modules that work with SeedFarmer CLI. The modules are decoupled and can be aggregated using GitOps principles to achieve desired use cases, removing heavy lifting for end users. They must be generic for reuse in Machine Learning and Foundation Model Operations domain, adhering to SeedFarmer Guide structure. The repository includes deployment steps, project manifests, and various modules for SageMaker, Mlflow, FMOps/LLMOps, MWAA, Step Functions, EKS, and example use cases. It also supports Industry Data Framework (IDF) and Autonomous Driving Data Framework (ADDF) Modules.

Awesome-LLMOps

Awesome-LLMOps is a curated list of the best LLMOps tools, providing a comprehensive collection of frameworks and tools for building, deploying, and managing large language models (LLMs) and AI agents. The repository includes a wide range of tools for tasks such as building multimodal AI agents, fine-tuning models, orchestrating applications, evaluating models, and serving models for inference. It covers various aspects of the machine learning operations (MLOps) lifecycle, from training to deployment and observability. The tools listed in this repository cater to the needs of developers, data scientists, and machine learning engineers working with large language models and AI applications.

skyflo

Skyflo.ai is an AI agent designed for Cloud Native operations, providing seamless infrastructure management through natural language interactions. It serves as a safety-first co-pilot with a human-in-the-loop design. The tool offers flexible deployment options for both production and local Kubernetes environments, supporting various LLM providers and self-hosted models. Users can explore the architecture of Skyflo.ai and contribute to its development following the provided guidelines and Code of Conduct. The community engagement includes Discord, Twitter, YouTube, and GitHub Discussions.

AI-CloudOps

AI+CloudOps is a cloud-native operations management platform designed for enterprises. It aims to integrate artificial intelligence technology with cloud-native practices to significantly improve the efficiency and level of operations work. The platform offers features such as AIOps for monitoring data analysis and alerts, multi-dimensional permission management, visual CMDB for resource management, efficient ticketing system, deep integration with Prometheus for real-time monitoring, and unified Kubernetes management for cluster optimization.

kubectl-mcp-server

Control your entire Kubernetes infrastructure through natural language conversations with AI. Talk to your clusters like you talk to a DevOps expert. Debug crashed pods, optimize costs, deploy applications, audit security, manage Helm charts, and visualize dashboards—all through natural language. The tool provides 253 powerful tools, 8 workflow prompts, 8 data resources, and works with all major AI assistants. It offers AI-powered diagnostics, built-in cost optimization, enterprise-ready features, zero learning curve, universal compatibility, visual insights, and production-grade deployment options. From debugging crashed pods to optimizing cluster costs, kubectl-mcp-server is your AI-powered DevOps companion.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources