APIPark

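🦄 Cloud-native, ultra-high-performance AI & API gateway, LLM API management and distribution system, and open platform. It supports all AI APIs, including but not limited to OpenAI, Azure, Anthropic Claude, Google Gemini, DeepSeek, ByteDance Doubao, ChatGLM, ERNIE Bot (文心一言), iFlytek Spark, Tongyi Qianwen, 360 Zhinao, Tencent Hunyuan, and other mainstream models. It provides unified API requests and responses, API application and approval, call statistics, load balancing, and multi-model disaster recovery. One-click deployment, ready to use out of the box.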

Stars: 996

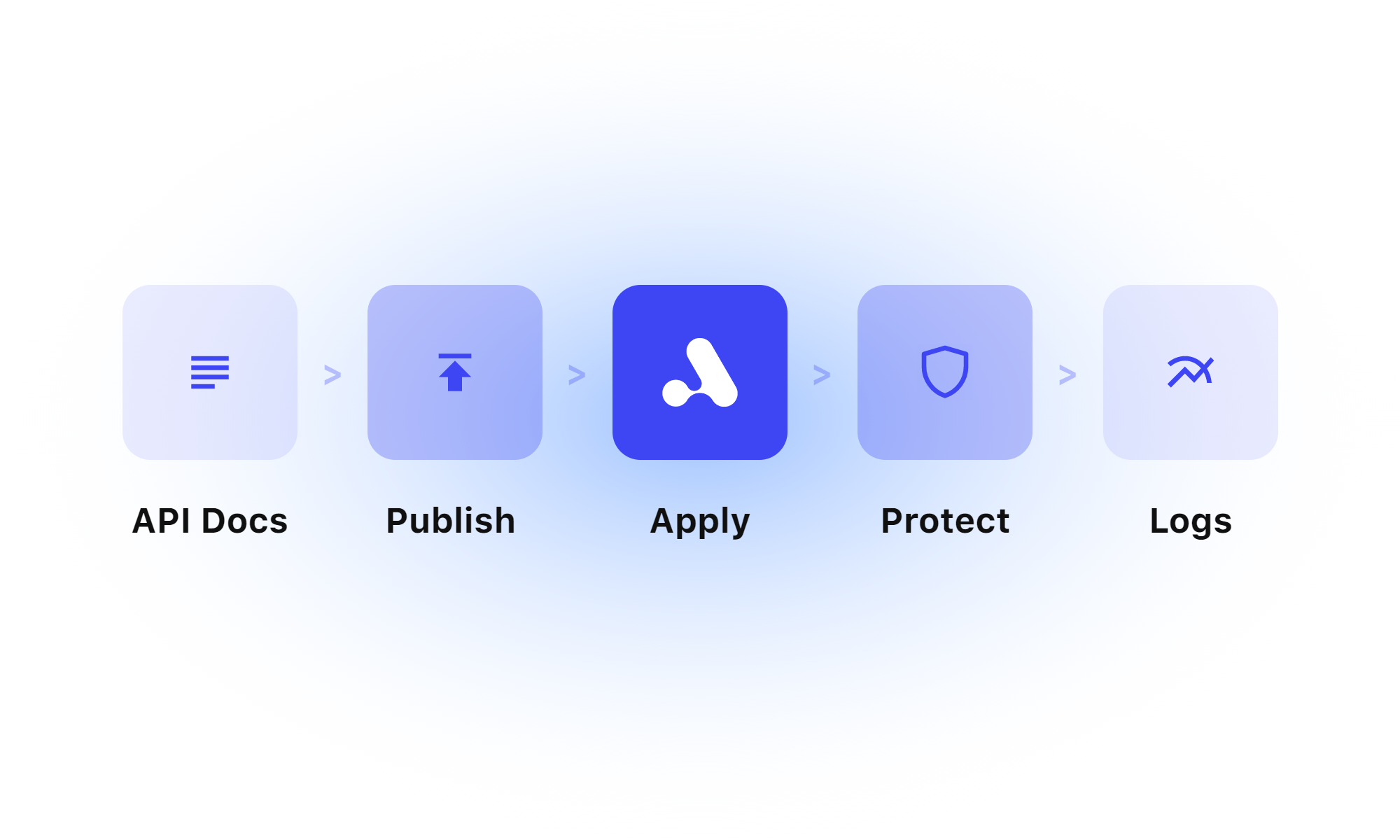

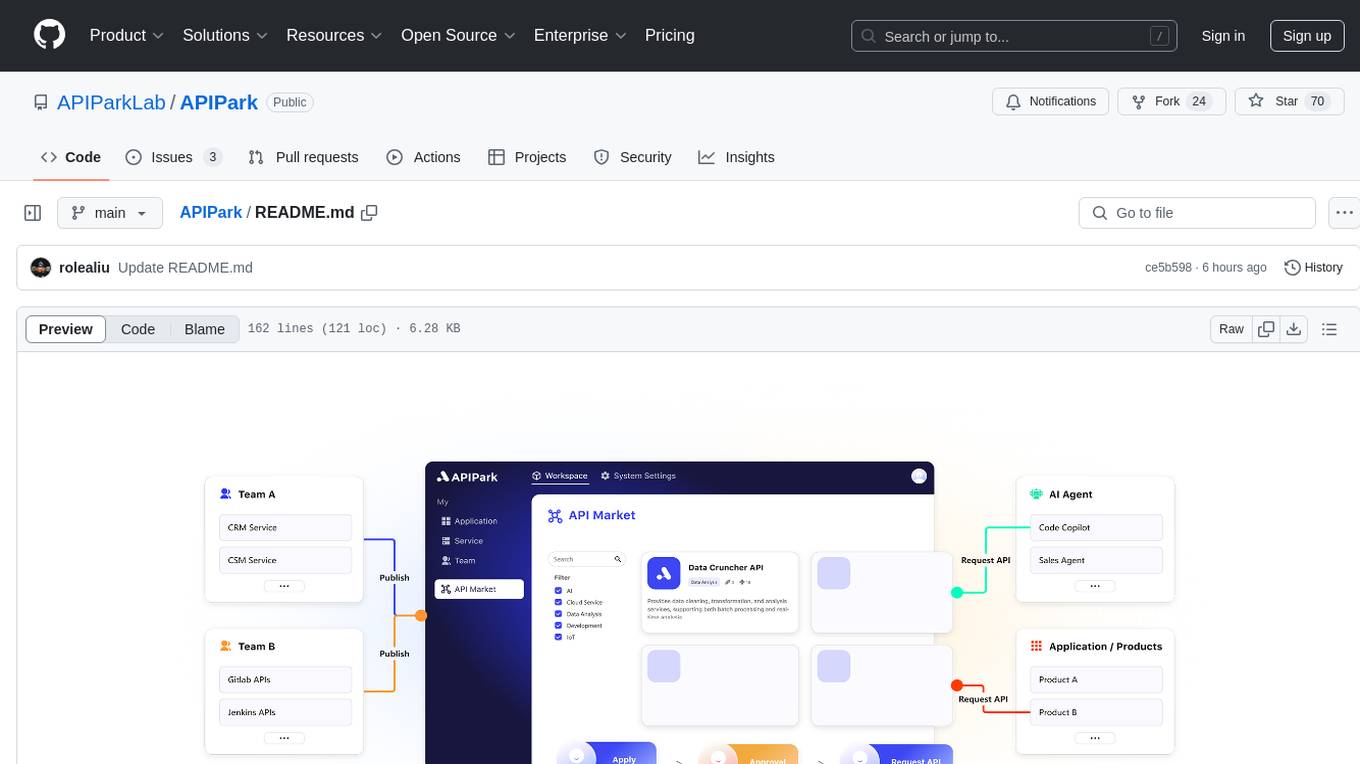

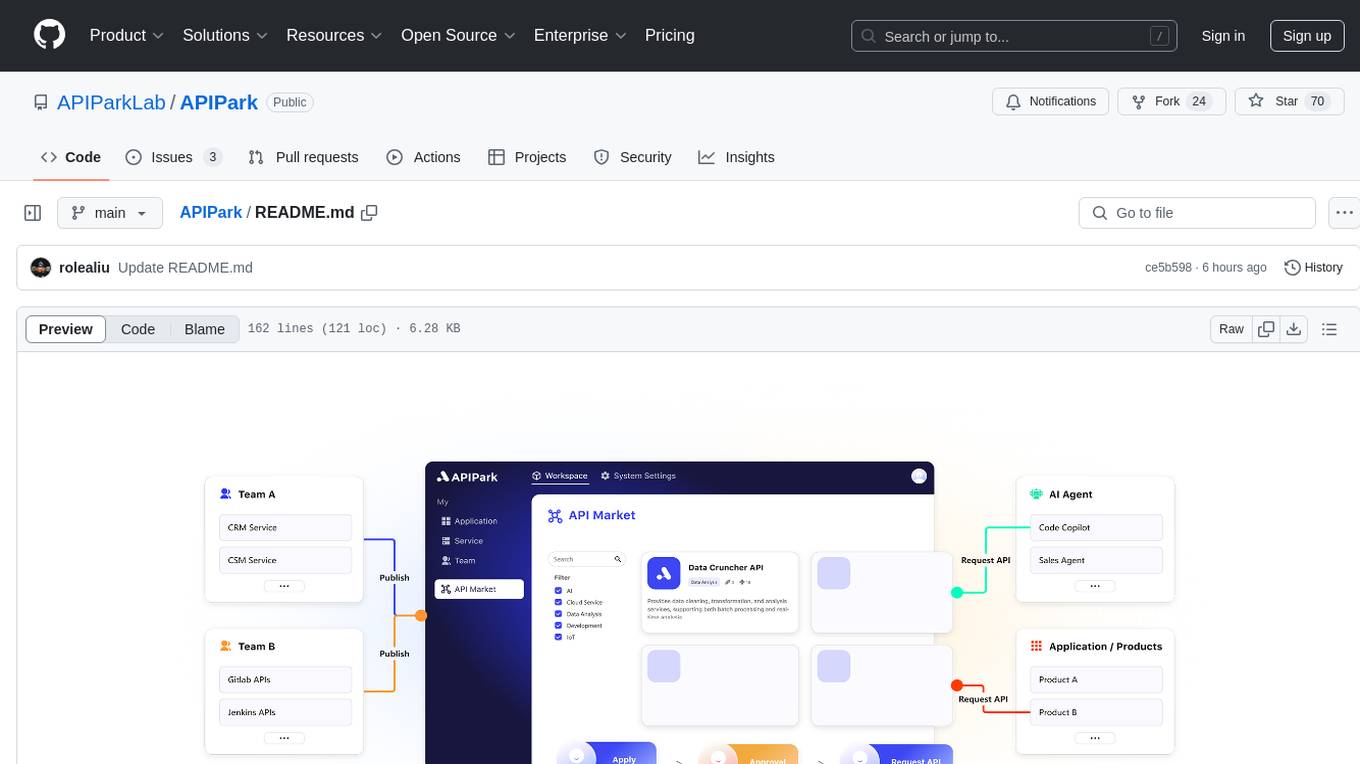

APIPark is an open-source AI Gateway and Developer Portal that enables users to easily manage, integrate, and deploy AI and API services. It provides robust API management features, including creation, monitoring, and access control, to help developers efficiently and securely develop and manage their APIs. The platform aims to solve challenges such as connecting to powerful AI models, managing complex AI & API call relationships, overseeing API creation and security, simplifying fault detection and troubleshooting, and enhancing the visibility and valuation of data assets.

README:

🦄 APIPark is an open-source, all-in-one AI gateway and API developer portal, helping developers and enterprises easily manage, integrate, and deploy AI services. APIPark is open-sourced under the Apache 2.0 license, which means it's free for commercial use!

✨ With APIPark, you can:

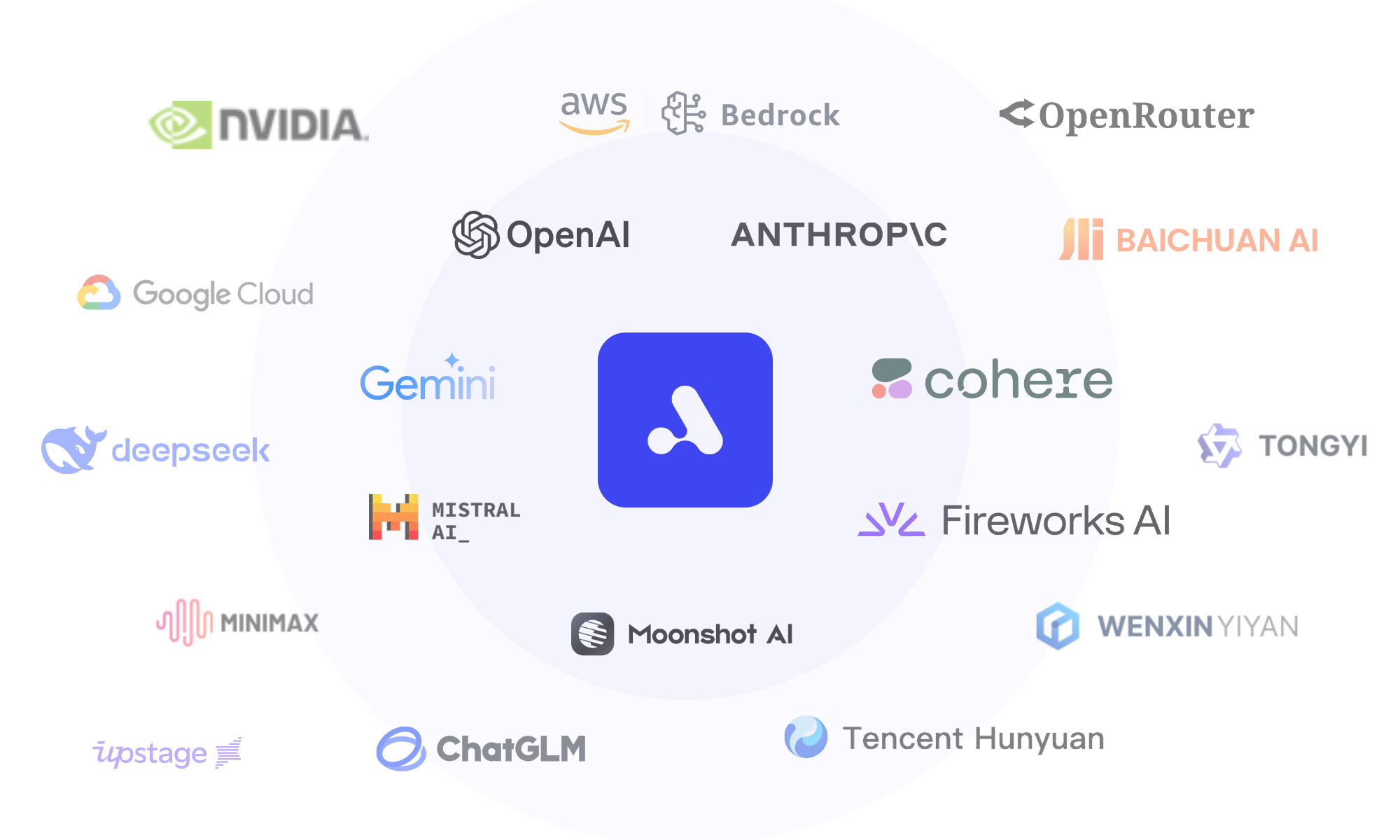

- Quickly connect to 100+ AI models from all mainstream AI companies.

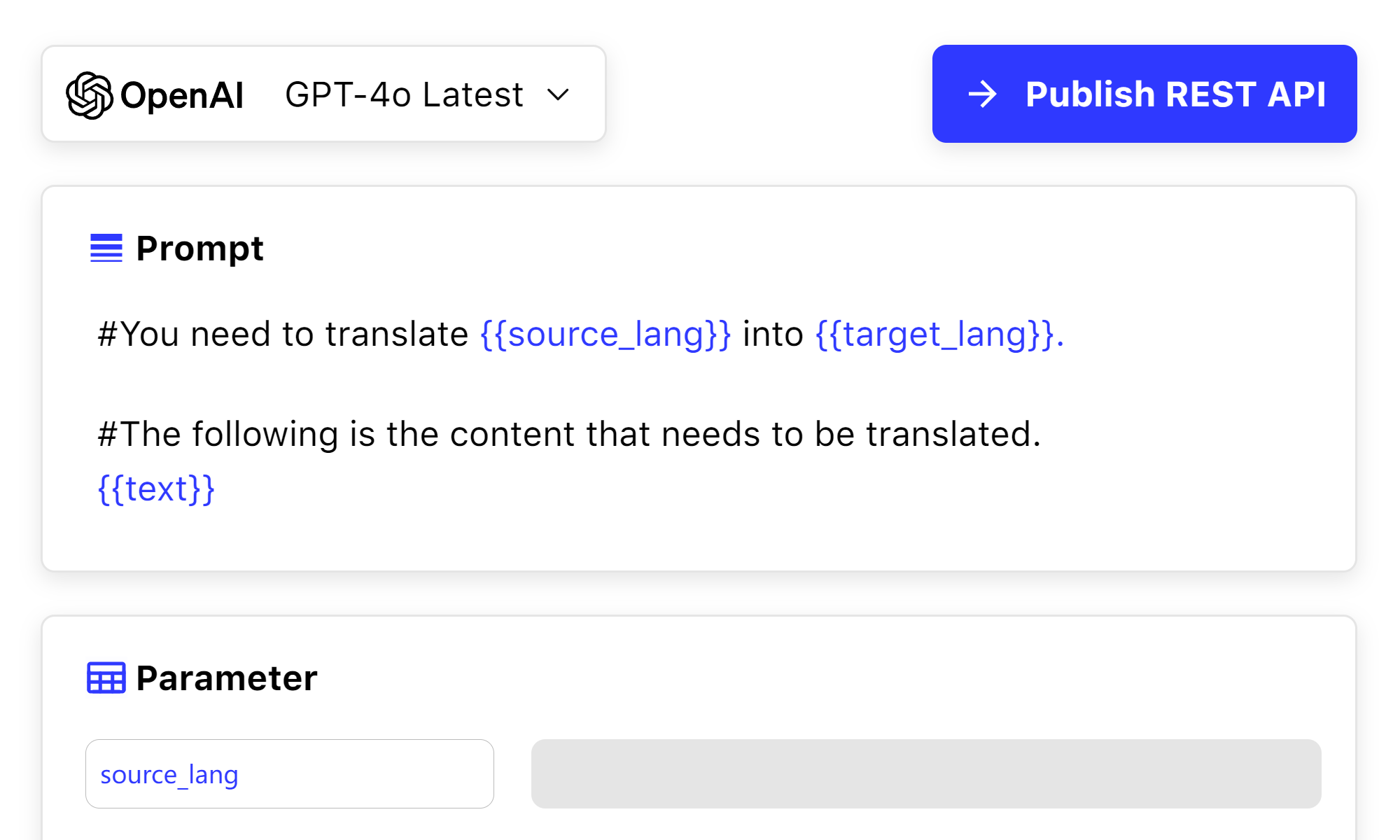

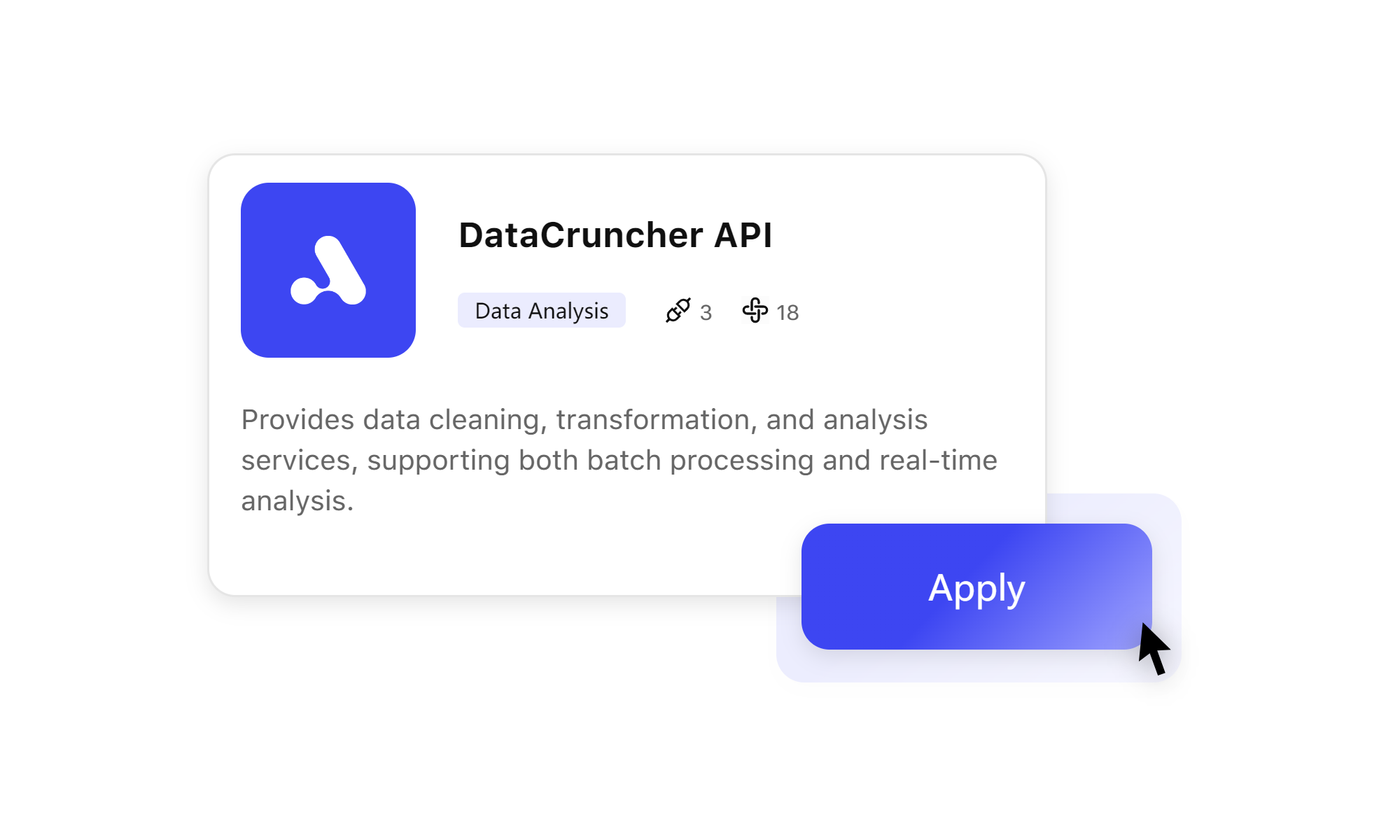

- Combine AI models and prompt templates into APIs, such as creating a sentiment analysis API, translation API, or data analysis API based on OpenAI GPT-4 and some custom prompts.

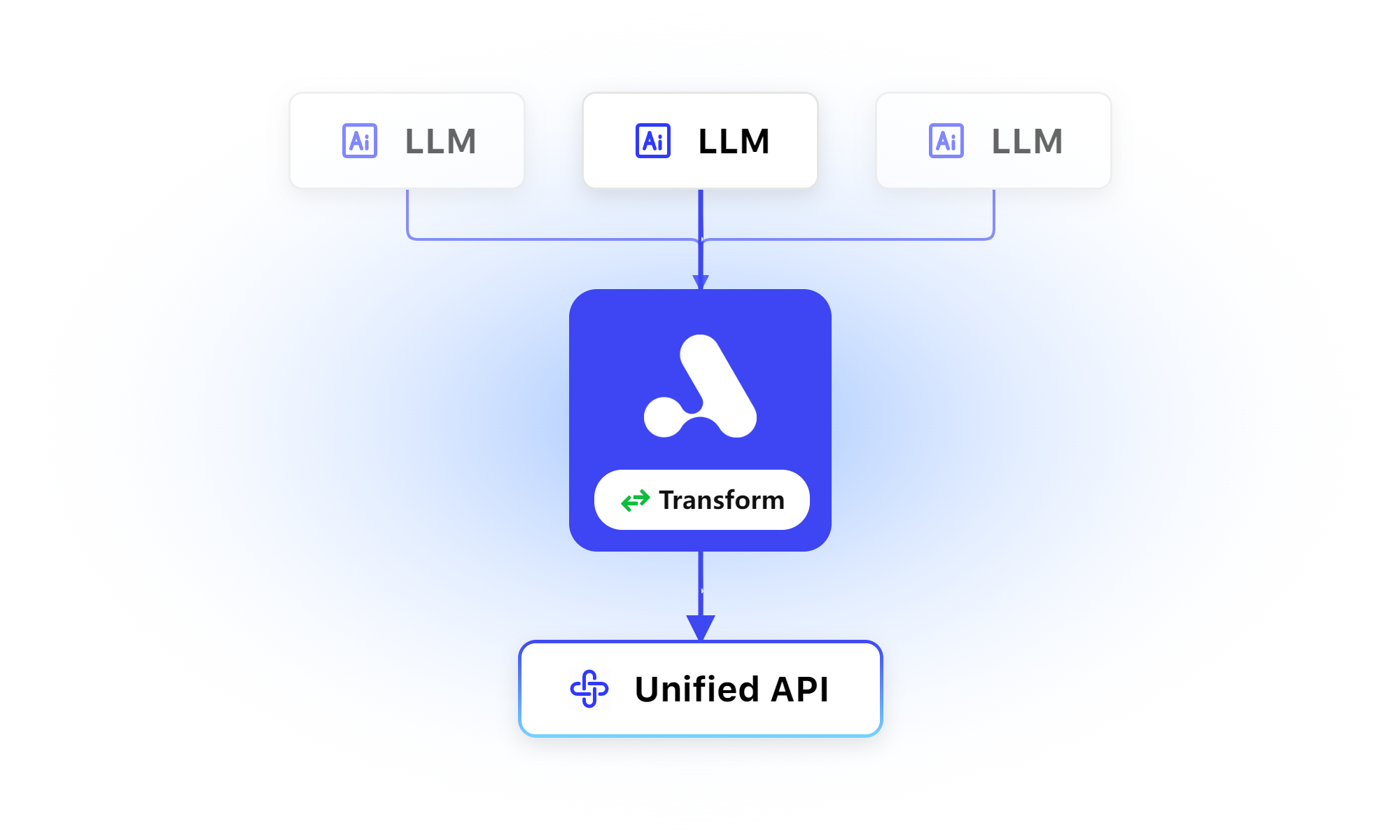

- Standardize the data format of all AI API requests, so switching AI models or modifying prompts won’t affect your app or microservices. This simplifies AI usage and reduces maintenance costs.

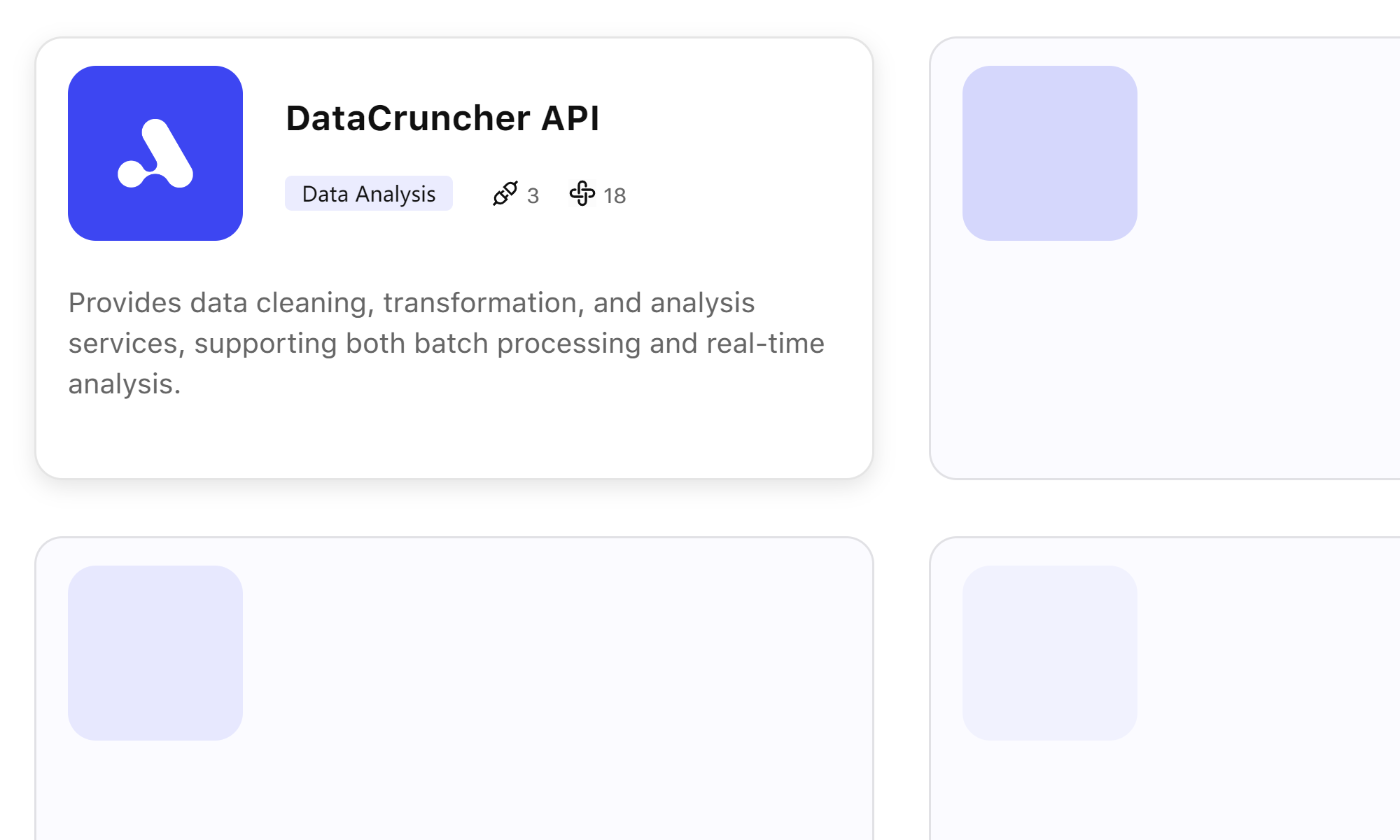

- Share APIs within the team through APIPark's developer portal.

- Manage calling applications and API keys to ensure your API's security and stability.

- Monitor your AI API usage with clear charts.

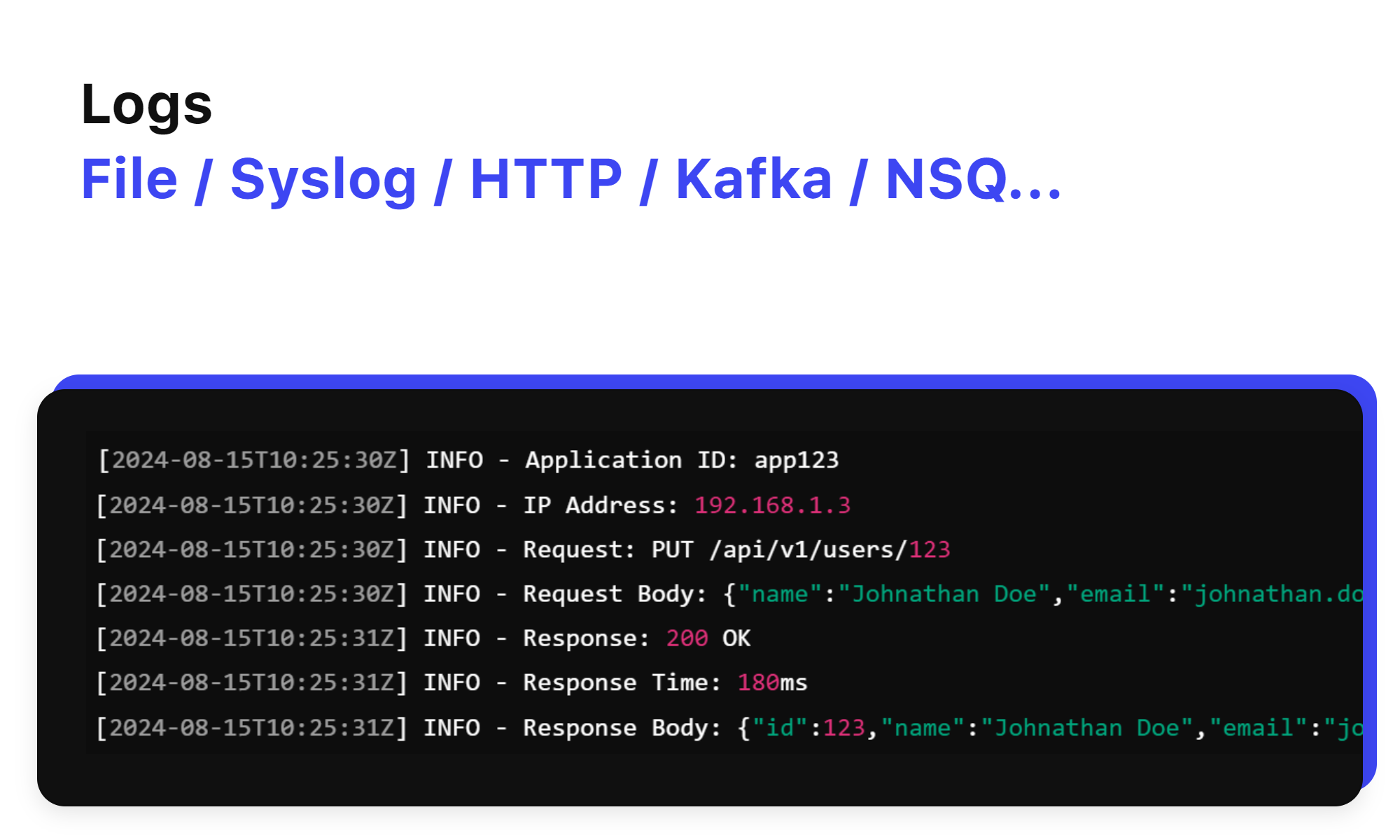

- Quickly export API request logs to third-party logging platforms.
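To make the prompt-template and standardized-format ideas above more concrete, here is a minimal Python sketch. It is not APIPark's implementation or API: `UnifiedRequest`, the adapter functions, the `provider/model` naming scheme, and the `anthropic/example-model` name are all hypothetical and exist only for illustration. The only external facts assumed are the general shapes of OpenAI-style chat bodies (a `messages` list with a `system` entry) and Anthropic-style message bodies (a top-level `system` field).

```python
from dataclasses import dataclass
from string import Template


@dataclass
class UnifiedRequest:
    """Provider-neutral request shape (hypothetical, for illustration only)."""
    model: str          # "<provider>/<model>", e.g. "openai/gpt-4"
    system_prompt: str  # instructions rendered from a prompt template
    user_input: str     # the caller's own text
    max_tokens: int = 256


def to_openai_payload(req: UnifiedRequest) -> dict:
    """Map the unified request onto an OpenAI-style chat completion body."""
    return {
        "model": req.model.split("/", 1)[1],
        "max_tokens": req.max_tokens,
        "messages": [
            {"role": "system", "content": req.system_prompt},
            {"role": "user", "content": req.user_input},
        ],
    }


def to_anthropic_payload(req: UnifiedRequest) -> dict:
    """Map the unified request onto an Anthropic-style messages body."""
    return {
        "model": req.model.split("/", 1)[1],
        "max_tokens": req.max_tokens,
        "system": req.system_prompt,
        "messages": [{"role": "user", "content": req.user_input}],
    }


# One adapter per provider; the caller never sees provider-specific shapes.
ADAPTERS = {"openai": to_openai_payload, "anthropic": to_anthropic_payload}

# A prompt template turns a general-purpose model into a "sentiment analysis API".
SENTIMENT_TEMPLATE = Template(
    "Classify the sentiment of the user's text as $labels. Reply with one word."
)


def build_sentiment_call(text: str, model: str) -> dict:
    """Render the template and produce the provider-specific request body."""
    system_prompt = SENTIMENT_TEMPLATE.substitute(
        labels="positive, negative, or neutral"
    )
    req = UnifiedRequest(model=model, system_prompt=system_prompt, user_input=text)
    provider = model.split("/", 1)[0]
    return ADAPTERS[provider](req)


if __name__ == "__main__":
    # Same caller code, two different upstream formats.
    print(build_sentiment_call("I love this product", "openai/gpt-4"))
    print(build_sentiment_call("I love this product", "anthropic/example-model"))
```

Because the caller only ever builds a `UnifiedRequest`, swapping the model string or editing the template does not change the calling application, which is the property the list above describes.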

✨ APIPark is also a powerful cloud-native API gateway:

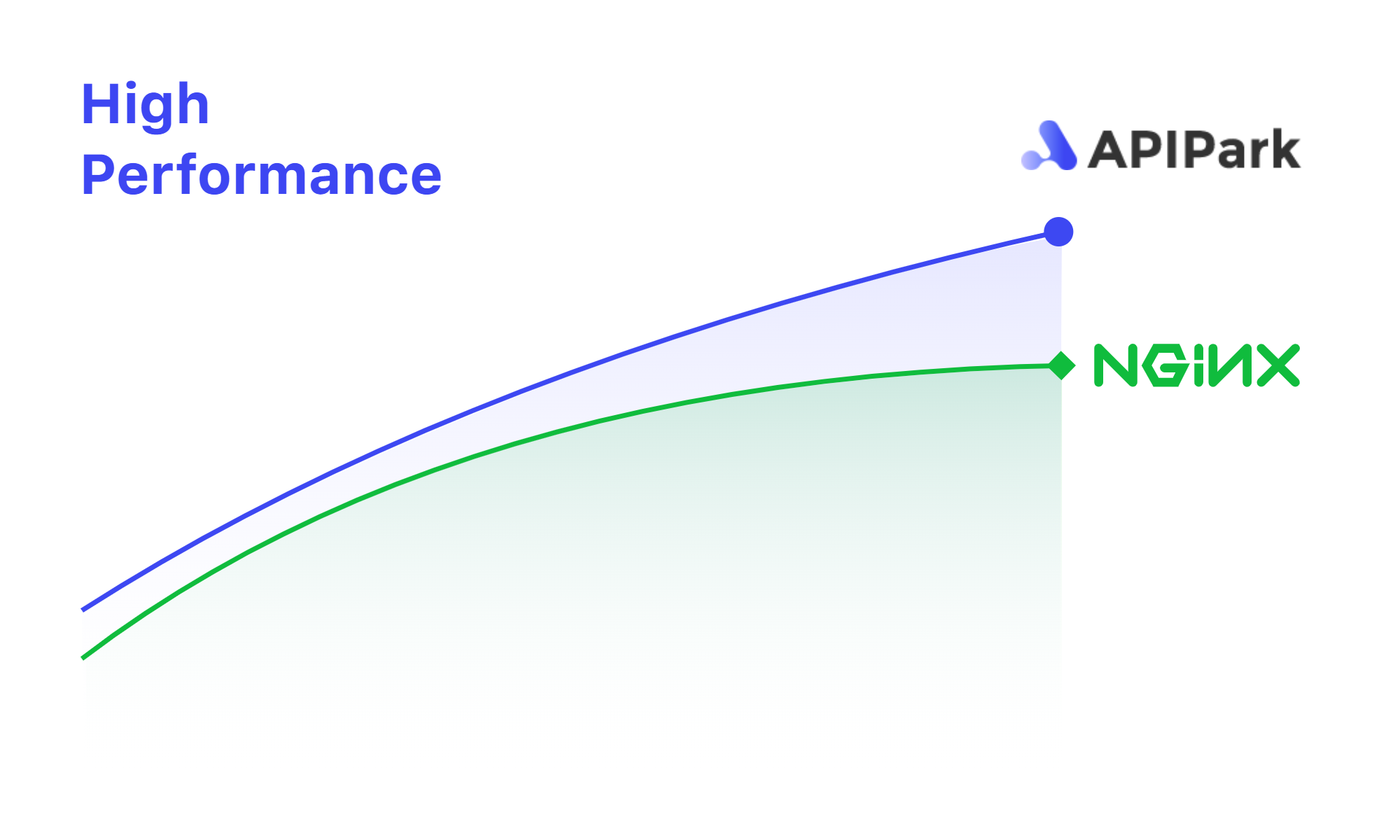

- It delivers higher performance than Nginx, supports cluster deployment, and handles large-scale traffic.

- Share REST APIs within the team, manage API call relationships, and avoid the management overhead and data breaches caused by disorganized API calls.
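The project description also mentions load balancing and multi-model disaster recovery. The sketch below shows one common way a gateway can combine the two: round-robin selection with failover to the next backend on error. It is an illustration under stated assumptions, not APIPark's actual algorithm; `FailoverBalancer`, `AllBackendsFailed`, and the stub backends are hypothetical names.

```python
from itertools import cycle
from typing import Callable, Dict, List


class AllBackendsFailed(Exception):
    """Raised when every configured backend has been tried and failed."""


class FailoverBalancer:
    """Round-robin over backends, falling through to the next one on error."""

    def __init__(self, backends: Dict[str, Callable[[str], str]]):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = backends
        self._order = cycle(list(backends))  # endless round-robin iterator
        self._size = len(backends)

    def call(self, prompt: str) -> str:
        errors: List[str] = []
        # Try each backend at most once per request.
        for _ in range(self._size):
            name = next(self._order)
            try:
                return self._backends[name](prompt)
            except Exception as exc:  # a real gateway would filter error types
                errors.append(f"{name}: {exc}")
        raise AllBackendsFailed("; ".join(errors))


# Stub backends standing in for real model providers (hypothetical).
def flaky_model(prompt: str) -> str:
    raise TimeoutError("upstream timed out")


def healthy_model(prompt: str) -> str:
    return f"echo: {prompt}"


if __name__ == "__main__":
    balancer = FailoverBalancer({"primary": flaky_model, "backup": healthy_model})
    print(balancer.call("hello"))
```

A production gateway would typically add health checks, timeouts, and weighting; this sketch keeps only the selection and fallback logic.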

Before building APIPark, we spent seven years developing an API development and automated testing platform with over 1 million developer users, 500+ enterprise customers, and multi-million-dollar investment from Sequoia Capital.

As AI and agents evolved, we noticed that many enterprises wanted to integrate AI into both internal and third-party APIs, enabling AI agents to perform more complex tasks than knowledge-based Q&A. So we built APIPark, an all-in-one AI gateway and API developer portal that accelerates AI API development and helps you quickly build your product or AI agent.

APIPark is designed to solve the following problems:

- Seamlessly connect to various AI models and package these AI capabilities into APIs for easy calling, significantly reducing the barrier to using AI models.

- Manage complex AI & API call relationships.

- Manage API creation, monitoring, and security.

- Fault detection and troubleshooting: Simplifying system issue identification and resolution.

- Quantify data asset value: Enhance the visibility and valuation of data assets.

😍 Deploying APIPark is simple. With a single command, you can deploy your AI gateway and API developer portal in under 5 minutes:

curl -sSO https://download.apipark.com/install/quick-start.sh ; bash quick-start.sh

- Connect to 100+ major models from all mainstream AI vendors, with standardized API calls requiring no additional adaptation work.

- Combine AI models and prompt templates to create new AI APIs, simplifying AI API development.

- Quickly share AI APIs within the team.

- Quickly build an internal API developer portal.

- Efficiently manage and call APIs.

- Reduce complex system call relationships.

- Powerful service governance and compliance management features.

- Granular permission management for application calls.

- Ensure API call security and compliance, reducing enterprise risk.

- Use monitoring and diagnostic tools to quickly identify and resolve issues.

- Reduce downtime and improve system stability.

- Supports multi-tenant management to meet different business unit needs.

- Flexible subscription and approval processes simplify API usage and management.

- Real-time monitoring and tracking of API usage.

- Fully understand data flow to enhance data usage transparency.
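The subscription, approval, and per-application permission points above can be pictured with a small Python sketch. It is a conceptual model only and does not reflect APIPark's data model or API: `Portal`, `Status`, and the application and API names are hypothetical.

```python
import secrets
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Tuple


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Portal:
    """Toy developer portal: apps subscribe to APIs, an admin approves."""
    # (application, api) -> subscription status
    subscriptions: Dict[Tuple[str, str], Status] = field(default_factory=dict)
    # api key -> application that owns it
    keys: Dict[str, str] = field(default_factory=dict)

    def issue_key(self, app: str) -> str:
        """Create a random API key bound to one calling application."""
        key = secrets.token_urlsafe(24)
        self.keys[key] = app
        return key

    def subscribe(self, app: str, api: str) -> None:
        """An application requests access; it stays pending until reviewed."""
        self.subscriptions[(app, api)] = Status.PENDING

    def review(self, app: str, api: str, approve: bool) -> None:
        """An administrator approves or rejects a pending subscription."""
        if (app, api) not in self.subscriptions:
            raise KeyError(f"no subscription request from {app} for {api}")
        self.subscriptions[(app, api)] = (
            Status.APPROVED if approve else Status.REJECTED
        )

    def is_allowed(self, key: str, api: str) -> bool:
        """Gateway-side check: known key and an approved subscription."""
        app = self.keys.get(key)
        if app is None:
            return False
        return self.subscriptions.get((app, api)) is Status.APPROVED


if __name__ == "__main__":
    portal = Portal()
    key = portal.issue_key("billing-app")
    portal.subscribe("billing-app", "sentiment-api")
    print(portal.is_allowed(key, "sentiment-api"))  # pending, so not allowed
    portal.review("billing-app", "sentiment-api", approve=True)
    print(portal.is_allowed(key, "sentiment-api"))  # approved
```

Keeping the key-to-application mapping separate from the subscription table means a key can be rotated without touching approvals, and an approval can be revoked without reissuing keys.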

We’ve set exciting goals for APIPark: enabling everyone to quickly create their own products and AI agents using AI and APIs!

To achieve this goal, we plan to add new features to APIPark, including:

- Integrating with API marketplaces such as Postman, RapidAPI, APISpace, APILayer, etc. You can directly use APIs from various API marketplaces through APIPark and make them smarter using AI.

- Integrating AI Agents such as Langchain, AgentGPT, Auto-GPT, Dify, and more, allowing AI Agents to access your internal or third-party APIs through APIPark to complete more complex tasks.

- Intelligent API orchestration: APIPark will provide a unified API entry point and automatically orchestrate multiple APIs to fulfill your requests based on the API content.

Visit APIPark Documentation for detailed installation guides, API references, and usage instructions.

APIPark uses the Apache 2.0 License. For more details, please refer to the LICENSE file.

For enterprise-level features and professional technical support, contact our pre-sales experts for personalized demos, customized solutions, and pricing.

- Website: https://apipark.com

- Email: [email protected]

🙏 A big thanks to everyone who helped shape APIPark. We are thrilled to hear the community’s thoughts! Let’s make the world of APIs and AI stronger and more fun together. 🎉

Alternative AI tools for APIPark

Similar Open Source Tools

CSGHub

CSGHub is an open-source, trustworthy large model asset management platform that helps users govern the assets involved in the lifecycle of LLMs and LLM applications (datasets, model files, code, etc.). With CSGHub, users can upload, download, store, verify, and distribute LLM assets through a web interface, the Git command line, or a natural-language chatbot. The platform also provides microservice submodules and standardized OpenAPIs that can be easily integrated with users' own systems. CSGHub is committed to providing an asset management platform that is natively designed for large models and can be deployed on-premise for fully offline operation. It offers functionality similar to a private, on-premise Hugging Face, managing LLM assets much as OpenStack Glance manages virtual machine images, Harbor manages container images, and Sonatype Nexus manages artifacts.

yao

Yao is an open-source application engine written in Go, suitable for developing business systems, website and app APIs, admin panels, and self-built low-code platforms. It adopts a flow-based programming model in which functions are implemented by writing Yao DSL or JavaScript, and it supports multiple ways to extend the data stream processor. Developers create web services from processes: they define a database model, write API services, and describe dashboard interfaces in JSON, for both web and hardware targets; the project claims a 10x productivity gain. Yao has a built-in data management system, which makes it suitable for quickly building admin backends, CRM, ERP, and other internal enterprise systems. The project describes itself as highly versatile and efficient, and claims better performance than PHP, Java, and other languages.

spring-ai-alibaba

Spring AI Alibaba is an AI application framework for Java developers that integrates with Alibaba Cloud Qwen LLM services and cloud-native infrastructure. It provides support for various AI models, a high-level AI agent abstraction, function calling, and RAG. The framework aims to simplify the development, evaluation, deployment, and observability of AI-native Java applications. It offers an open-source framework and ecosystem integrations for features such as prompt template management and event-driven AI applications.

AgentUp

AgentUp is a developer-first agent framework, currently under active development, for creating AI agents with enterprise-grade infrastructure. It allows developers to define agents with configuration, ensuring consistent behavior across environments. The tool offers secure design, configuration-driven architecture, an extensible ecosystem for customizations, agent-to-agent discovery, asynchronous task architecture, deterministic routing, and MCP support. It supports multiple agent types, such as reactive agents and iterative agents, making it suitable for chatbots, interactive applications, research tasks, and more. AgentUp is built by experienced engineers from top tech companies and is designed to make AI agents production-ready, secure, and reliable.

genkit

Firebase Genkit (beta) is a framework with powerful tooling to help app developers build, test, deploy, and monitor AI-powered features with confidence. Genkit is cloud optimized and code-centric, integrating with many services that have free tiers to get started. It provides unified API for generation, context-aware AI features, evaluation of AI workflow, extensibility with plugins, easy deployment to Firebase or Google Cloud, observability and monitoring with OpenTelemetry, and a developer UI for prototyping and testing AI features locally. Genkit works seamlessly with Firebase or Google Cloud projects through official plugins and templates.

aitour26-WRK541-real-world-code-migration-with-github-copilot-agent-mode

Microsoft AI Tour 2026 WRK541 is a workshop focused on real-world code migration using GitHub Copilot Agent Mode. The session is designed for technologists interested in applying AI pair-programming techniques to challenging tasks like migrating or translating code between different programming languages. Participants will learn advanced GitHub Copilot techniques, differences between Python and C#, JSON serialization and deserialization in C#, developing and validating endpoints, integrating Swagger/OpenAPI documentation, and writing unit tests with MSTest. The workshop aims to help users gain hands-on experience in using GitHub Copilot for real-world code migration scenarios.

get-started-with-ai-agents

The 'Getting Started with Agents Using Azure AI Foundry' repository provides a solution that deploys a web-based chat application with an AI agent running in Azure Container App. The agent leverages Azure AI services for knowledge retrieval from uploaded files, enabling it to generate responses with citations. The solution includes built-in monitoring capabilities for easier troubleshooting and optimized performance. Users can deploy AI models, customize the agent, and evaluate its performance. The repository offers flexible deployment options through GitHub Codespaces, VS Code Dev Containers, or local environments.

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

apicat

ApiCat is an API documentation management tool that is fully compatible with the OpenAPI specification. With ApiCat, you can freely and efficiently manage your APIs. It integrates the capabilities of LLM, which not only helps you automatically generate API documentation and data models but also creates corresponding test cases based on the API content. Using ApiCat, you can quickly accomplish anything outside of coding, allowing you to focus your energy on the code itself.

bytechef

ByteChef is an open-source, low-code, extendable API integration and workflow automation platform. It provides an intuitive UI Workflow Editor, event-driven & scheduled workflows, multiple flow controls, built-in code editor supporting Java, JavaScript, Python, and Ruby, rich component ecosystem, extendable with custom connectors, AI-ready with built-in AI components, developer-ready to expose workflows as APIs, version control friendly, self-hosted, scalable, and resilient. It allows users to build and visualize workflows, automate tasks across SaaS apps, internal APIs, and databases, and handle millions of workflows with high availability and fault tolerance.

Genkit

Genkit is an open-source framework for building full-stack AI-powered applications, used in production by Google's Firebase. It provides SDKs for JavaScript/TypeScript (Stable), Go (Beta), and Python (Alpha) with unified interface for integrating AI models from providers like Google, OpenAI, Anthropic, Ollama. Rapidly build chatbots, automations, and recommendation systems using streamlined APIs for multimodal content, structured outputs, tool calling, and agentic workflows. Genkit simplifies AI integration with open-source SDK, unified APIs, and offers text and image generation, structured data generation, tool calling, prompt templating, persisted chat interfaces, AI workflows, and AI-powered data retrieval (RAG).

bedrock-agentcore-starter-toolkit

Amazon Bedrock AgentCore Starter Toolkit enables developers to deploy and operate highly effective AI agents securely at scale using any framework and model. It provides tools and capabilities to make agents more effective and capable, purpose-built infrastructure to securely scale agents, and controls to operate trustworthy agents. The toolkit includes modular services like Runtime, Memory, Gateway, Code Interpreter, Browser, Observability, Identity, and Import Agent for seamless migration of existing agents. It is currently in public preview and offers enterprise-grade security and reliability for accelerating AI agent development.

admyral

Admyral is an open-source Cybersecurity Automation & Investigation Assistant that provides a unified console for investigations and incident handling, workflow automation creation, automatic alert investigation, and next step suggestions for analysts. It aims to tackle alert fatigue and automate security workflows effectively by offering features like workflow actions, AI actions, case management, alert handling, and more. Admyral combines security automation and case management to streamline incident response processes and improve overall security posture. The tool is open-source, transparent, and community-driven, allowing users to self-host, contribute, and collaborate on integrations and features.

craftgen

Craftgen.ai is an innovative AI platform designed for both technical and non-technical users. It's built on a foundation of graph architecture for scalability and the Actor Model for efficient concurrent operations. A key aspect of Craftgen.ai is its modular AI approach, allowing users to assemble and customize AI components like building blocks to fit their specific needs. The platform's robustness is enhanced by its event-driven architecture, ensuring reliable data processing and featuring browser web technologies for universal access. Craftgen.ai excels in dynamic tool and workflow generation, with strong offline capabilities for secure environments and plans for desktop application integration. A unique and valuable feature of Craftgen.ai is its marketplace, where users can access a variety of pre-built AI solutions. This marketplace not only accelerates the deployment of AI tools but also fosters a community of sharing and innovation. Users can contribute to and leverage this repository of solutions, enhancing the platform's versatility and practicality. Craftgen.ai uses JSON schema for industry-standard alignment, enabling seamless integration with any API following the OpenAPI spec. This allows for a broad range of applications, from automating data analysis to streamlining content management. The platform is designed to bridge the gap between advanced AI technology and practical usability. It's a flexible, secure, and intuitive platform that empowers users, from developers seeking to create custom AI solutions to businesses looking to automate routine tasks. Craftgen.ai's goal is to make AI technology an integral, seamless part of everyday problem-solving and innovation, providing a platform where modular AI and a thriving marketplace converge to meet the diverse needs of its users.

psychic

Psychic is a tool that provides a platform for users to access psychic readings and services. It offers a range of features such as tarot card readings, astrology consultations, and spiritual guidance. Users can connect with experienced psychics and receive personalized insights and advice on various aspects of their lives. The platform is designed to be user-friendly and intuitive, making it easy for users to navigate and explore the different services available. Whether you're looking for guidance on love, career, or personal growth, Psychic has you covered.

For similar tasks

higress

Higress is an open-source cloud-native API gateway built on the core of Istio and Envoy, based on Alibaba's internal practice of Envoy Gateway. It is designed as an AI-native API gateway and serves AI businesses such as the Tongyi Qianwen app, the Bailian Big Model API, and the Machine Learning PAI platform. Higress provides capabilities to interface with LLM vendors, AI observability, multi-model load balancing and fallback, AI token flow control, and AI caching. It can act as an AI gateway, a Kubernetes Ingress gateway, a microservices gateway, and a security protection gateway, with advantages in production-level scalability, stream processing, extensibility, and ease of use.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.