Best AI tools for < Manage GPU Cluster Resources >

20 - AI tool Sites

Nscale

Nscale is a full-stack, scalable, and sustainable AI cloud platform that offers a wide range of AI services and solutions. It provides services for developing, training, tuning, and deploying AI models using on-demand services. Nscale also offers serverless inference API endpoints, fine-tuning capabilities, private cloud solutions, and various GPU clusters engineered for AI. The platform aims to simplify the journey from AI model development to production, offering a marketplace for AI/ML tools and resources. Nscale's infrastructure includes data centers powered by renewable energy, high-performance GPU nodes, and optimized networking and storage solutions.

Nebius AI

Nebius AI is an AI-centric cloud platform designed to handle intensive workloads efficiently. It offers a range of advanced features to support various AI applications and projects. The platform ensures high performance and security for users, enabling them to leverage AI technology effectively in their work. With Nebius AI, users can access cutting-edge AI tools and resources to enhance their projects and streamline their workflows.

Lambda Docs

Lambda Docs is an AI tool that provides cloud and hardware solutions for individuals, teams, and organizations. It offers services such as Managed Kubernetes, Preinstalled Kubernetes, Slurm, and access to GPU clusters. The platform also provides educational resources and tutorials for machine learning engineers and researchers to fine-tune models and deploy AI solutions.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale machine learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, a simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like a pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

Lambda

Lambda is a superintelligence cloud platform that offers on-demand GPU clusters for multi-node training and fine-tuning, private large-scale GPU clusters, seamless management and scaling of AI workloads, inference endpoints and API, and a privacy-first chat app with open source models. It also provides NVIDIA's latest generation infrastructure for enterprise AI. With Lambda, AI teams can access gigawatt-scale AI factories for training and inference, deploy GPU instances, and leverage the latest NVIDIA GPUs for high-performance computing.

Nebius

Nebius is the ultimate cloud for AI explorers, designed to democratize AI infrastructure and empower builders everywhere. It offers flexible architecture to seamlessly scale AI from a single GPU to pre-optimized clusters with thousands of NVIDIA GPUs. Nebius is engineered for demanding AI workloads, integrating NVIDIA GPU accelerators, high-performance InfiniBand, and Kubernetes or Slurm orchestration for peak efficiency. The platform provides long-term value by optimizing every layer of the stack, delivering substantial customer value over competitors.

Rafay

Rafay is an AI-powered platform that accelerates cloud-native and AI/ML initiatives for enterprises. It provides automation for Kubernetes clusters, cloud cost optimization, and AI workbenches as a service. Rafay enables platform teams to focus on innovation by automating self-service cloud infrastructure workflows.

Cirrascale Cloud Services

Cirrascale Cloud Services is an AI tool that offers cloud solutions for Artificial Intelligence applications. The platform provides a range of cloud services and products tailored for AI innovation, including NVIDIA GPU Cloud, AMD Instinct Series Cloud, Qualcomm Cloud, Graphcore, Cerebras, and SambaNova. Cirrascale's AI Innovation Cloud enables users to test and deploy on leading AI accelerators in one cloud, democratizing AI by delivering high-performance AI compute and scalable deep learning solutions. The platform also offers professional and managed services, tailored multi-GPU server options, and high-throughput storage and networking solutions to accelerate development, training, and inference workloads.

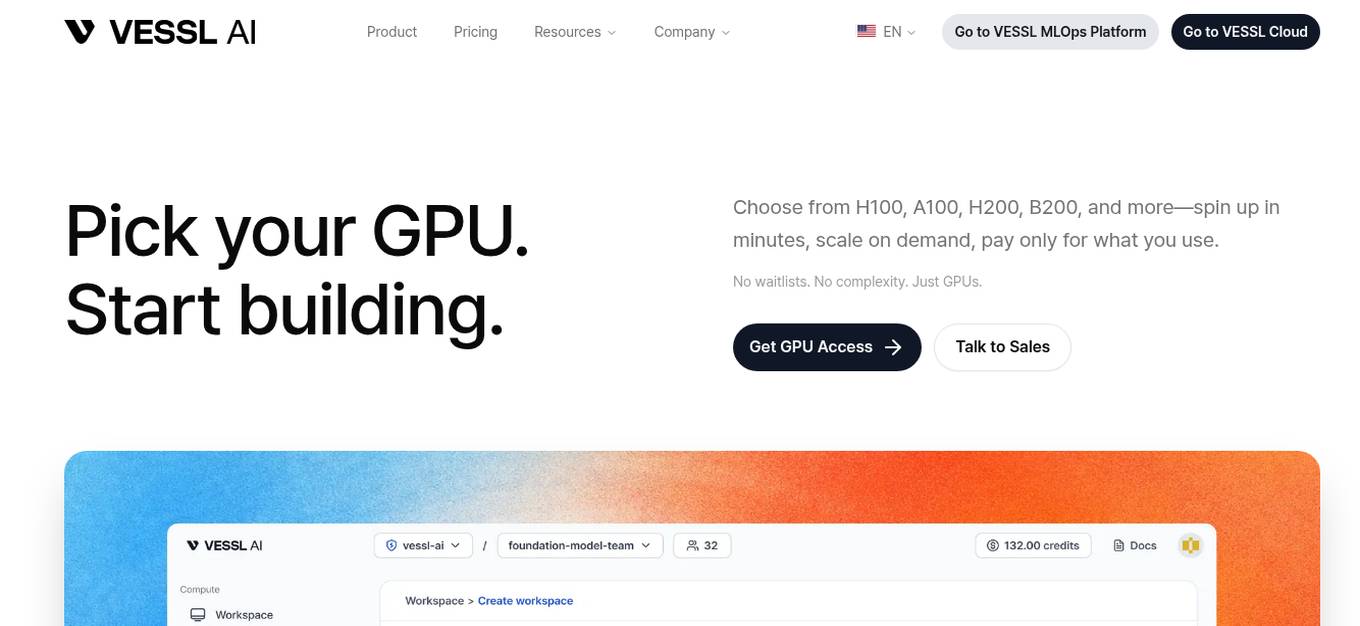

VESSL AI

VESSL AI is a platform offering Liquid AI Infra & Persistent GPU Cloud services, allowing users to easily access and utilize GPUs for running AI workloads. It provides a seamless experience from zero to running AI workloads, catering to AI startups, enterprise AI teams, and research & academia. VESSL AI offers GPU products for every stage, with options like spot, on-demand, and reserved capacity, along with features like multi-cloud failover, pay-as-you-go pricing, and production-ready reliability. The platform is designed to help users scale their AI projects efficiently and effectively.

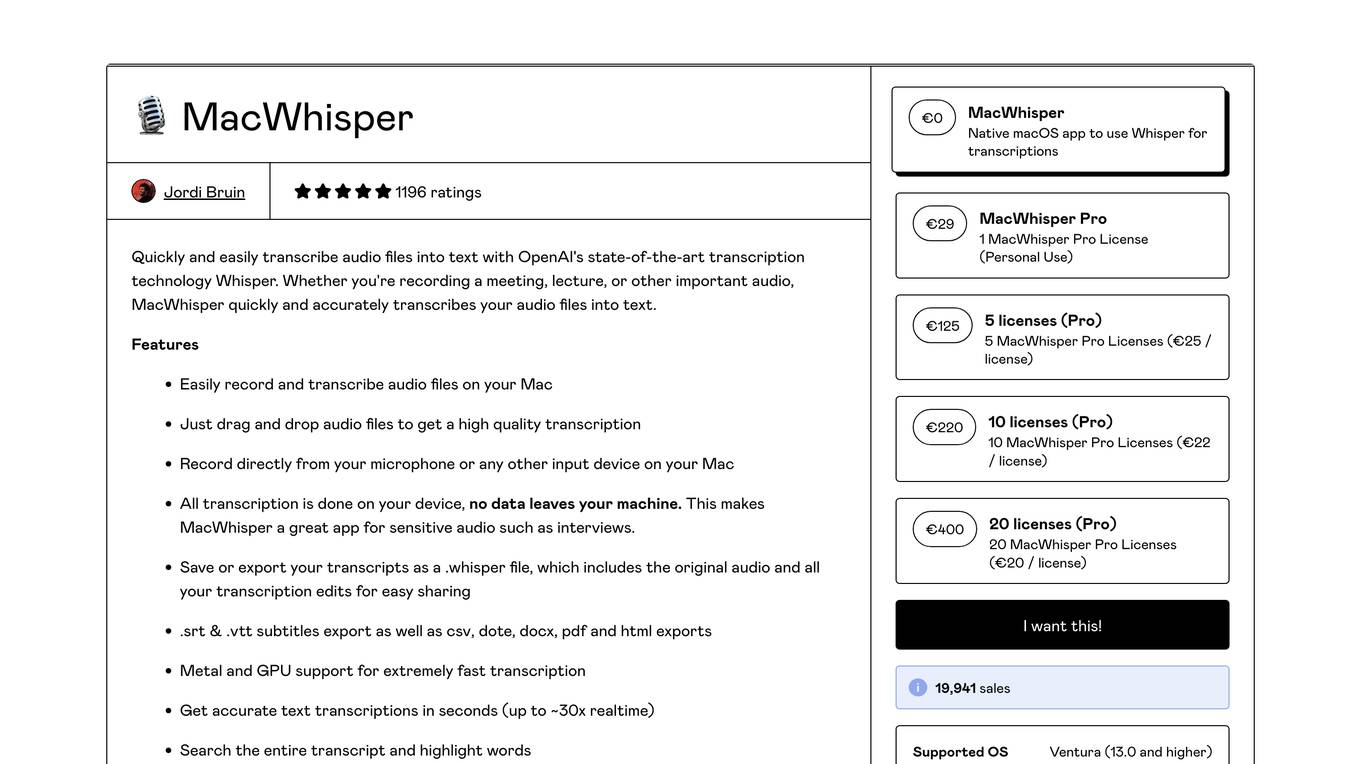

MacWhisper

MacWhisper is a native macOS application that utilizes OpenAI's Whisper technology for transcribing audio files into text. It offers a user-friendly interface for recording, transcribing, and editing audio, making it suitable for various use cases such as transcribing meetings, lectures, interviews, and podcasts. The application is designed to protect user privacy by performing all transcriptions locally on the device, ensuring that no data leaves the user's machine.

NVIDIA Run:ai

NVIDIA Run:ai is an enterprise platform for AI workloads and GPU orchestration. It accelerates AI and machine learning operations by addressing key infrastructure challenges through dynamic resource allocation, comprehensive AI life-cycle support, and strategic resource management. The platform significantly enhances GPU efficiency and workload capacity by pooling resources across environments and utilizing advanced orchestration. NVIDIA Run:ai provides unparalleled flexibility and adaptability, supporting public clouds, private clouds, hybrid environments, or on-premises data centers.

NVIDIA

NVIDIA is a world leader in artificial intelligence computing, providing solutions for cloud services, data centers, embedded systems, and gaming, and producing graphics cards and GPUs. The company offers a wide range of products and services, including AI-driven platforms for life sciences research, end-to-end AI platforms, generative AI deployment, advanced simulation integration, and more. NVIDIA focuses on modernizing data centers with AI and accelerated computing, offering enterprise AI platforms, supercomputers, advanced networking solutions, and professional workstations. It also provides software tools for AI development, data center management, GPU monitoring, and more.

GrapixAI

GrapixAI is a leading provider of low-cost cloud GPU rental services and AI server solutions. The company's focus on flexibility, scalability, and cutting-edge technology enables a variety of AI applications in both local and cloud environments. GrapixAI offers the lowest prices for on-demand GPUs such as the RTX 4090, RTX 3090, RTX A6000, RTX A5000, and A40. The platform provides a Docker-based container ecosystem for quick software setup, a powerful GPU search console, customizable pricing options, various security levels, GUI and CLI interfaces, a real-time bidding system, and personalized customer support.

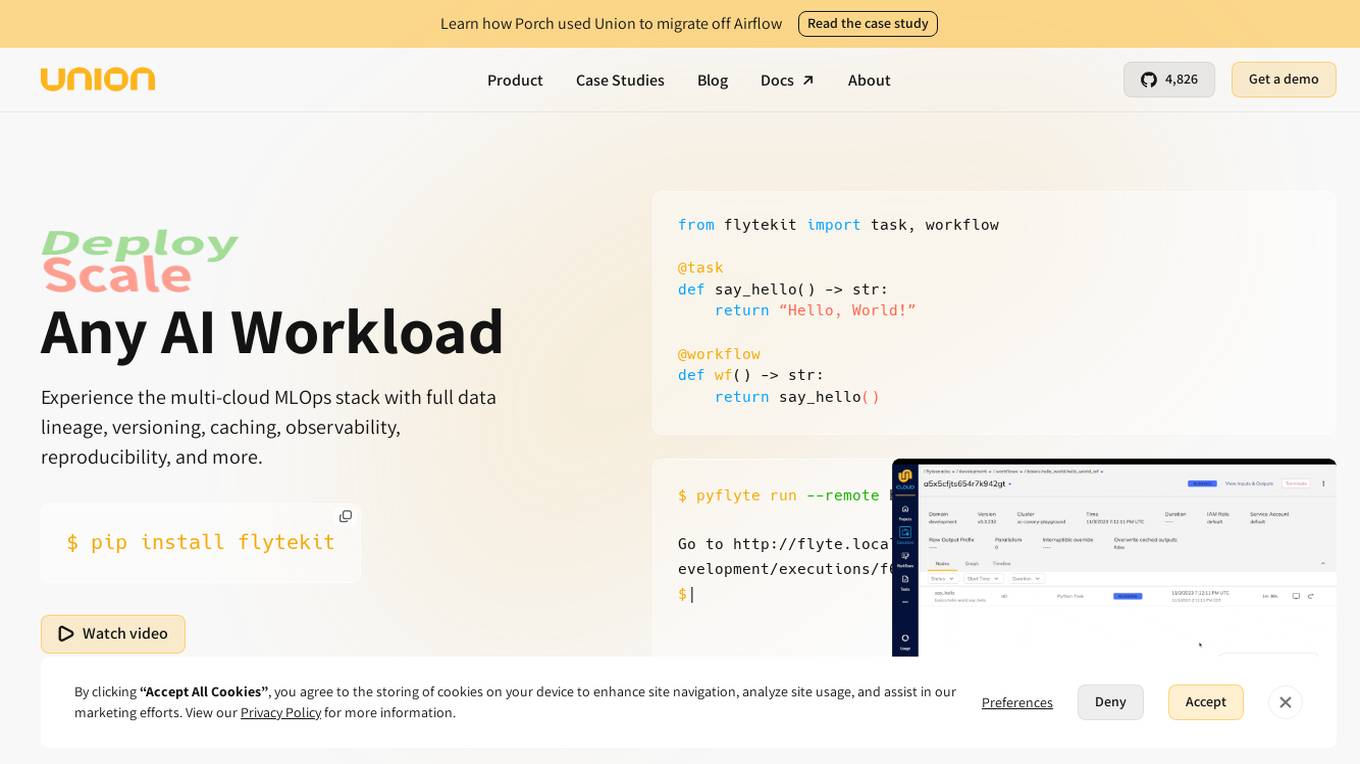

Union.ai

Union.ai is an infrastructure platform designed for AI, ML, and data workloads. It offers a scalable MLOps platform that optimizes resources, reduces costs, and fosters collaboration among team members. Union.ai provides features such as declarative infrastructure, data lineage tracking, accelerated datasets, and more to streamline AI orchestration on Kubernetes. It aims to simplify the management of AI, ML, and data workflows in production environments by addressing complexities and offering cost-effective strategies.

Local AI Playground

Local AI Playground is a free and open-source native app designed for AI management, verification, and inferencing. It allows users to experiment with AI offline in a private environment without the need for a GPU. The application is memory-efficient and compact, with a Rust backend, making it suitable for various operating systems. It offers features such as CPU inferencing, model management, and digest verification. Users can start a local streaming server for AI inferencing with just two clicks. Local AI Playground aims to simplify the AI development process and provide a user-friendly experience for both offline and online AI applications.

NVIDIA

NVIDIA is a world leader in artificial intelligence computing. The company's products and services are used by businesses and governments around the world to develop and deploy AI applications. NVIDIA's AI platform includes hardware, software, and tools that make it easy to build and train AI models. The company also offers a range of cloud-based AI services that make it easy to deploy and manage AI applications. NVIDIA's AI platform is used in a wide variety of industries, including healthcare, manufacturing, retail, and transportation. The company's AI technology is helping to improve the efficiency and accuracy of a wide range of tasks, from medical diagnosis to product design.

Nomi.cloud

Nomi.cloud is a modern AI-powered CloudOps and HPC assistant designed for next-gen businesses. Its offerings cover developers, a marketplace, enterprise solutions, and a pricing console. With features like a single-pane-of-glass view, instant deployment, continuous monitoring, AI-powered insights, and built-in budgets and alerts, Nomi.cloud aims to revolutionize cloud management. It provides a user-friendly interface to manage infrastructure efficiently, optimize costs, and deploy resources across multiple regions with ease. Nomi.cloud is built for scale, trusted by enterprises, and offers a range of GPUs and cloud providers to suit various needs.

HIVE Digital Technologies

HIVE Digital Technologies is a company specializing in building and operating cutting-edge data centers, with a focus on Bitcoin mining and advancing Web3, AI, and HPC technologies. They offer cloud services, operate data centers in Canada, Iceland, and Sweden, and have a fleet of industrial GPUs for AI applications. The company is known for its expertise in digital infrastructure and commitment to using renewable energy sources.

DDN A³I

DDN A³I is an AI storage platform that maximizes business differentiation and market leadership through data utilization, AI, and advanced analytics. It offers comprehensive enterprise features, easy deployment and management, predictable scaling, data protection, and high performance. DDN A³I enables organizations to accelerate insights, reduce costs, and optimize GPU productivity for faster results.

BuildAi

BuildAi is an AI tool designed to provide the lowest-cost GPU cloud for AI training on the market. The platform is powered by renewable energy, enabling companies to train AI models at a significantly reduced cost. BuildAi offers interruptible pricing, short-term reserved capacity, and high-uptime pricing options. The platform focuses on optimizing infrastructure for training and fine-tuning machine learning models, not inference, and aims to decrease the impact of computing on the planet. With features like data transfer support, SSH access, and monitoring tools, BuildAi offers a comprehensive solution for ML teams.

1 - Open Source AI Tools

Mooncake

Mooncake is the serving platform for Kimi, a leading LLM service provided by Moonshot AI. It features a KVCache-centric disaggregated architecture that separates the prefill and decoding clusters and leverages the underutilized CPU, DRAM, and SSD resources of the GPU cluster. Mooncake's scheduler balances throughput against latency-related SLOs, with a prediction-based early rejection policy for highly overloaded scenarios. It excels in long-context scenarios, achieving up to a 525% increase in throughput in certain simulated scenarios while adhering to SLOs, and enabling Kimi to handle 75% more requests under real workloads.

20 - OpenAI GPTs

FODMAPs Dietician

Dietician that helps those with IBS manage their symptoms via FODMAPs. FODMAP stands for fermentable oligosaccharides, disaccharides, monosaccharides and polyols. These are the chemical names of 5 naturally occurring sugars that are not well absorbed by your small intestine.

Cognitive Behavioral Coach

Provides cognitive-behavioral and emotional therapy guidance, helping users understand and manage their thoughts, behaviors, and emotions.

1ACulma - Management Coach

Cross-cultural management. Useful for those who relocate to another country or manage cross-cultural teams.

Finance Butler(ファイナンス・バトラー)

I manage finances securely with encryption and user authentication.

GroceriesGPT

I manage your grocery lists to help you stay organized. *1/ Tell me what to add to a list. 2/ Ask me to add all ingredients for a recipe. 3/ Upload a receipt to remove items from your lists. 4/ Add an item by simply uploading a picture. 5/ Ask me what items I would recommend you add to your lists.*

Family Legacy Assistant

Helps users manage and preserve family heirlooms with empathy and practical advice.

AI Home Doctor (Guided Care)

Give me your symptoms and I will provide instructions for how to manage your illness.

MixerBox ChatGSlide

Your AI Google Slides assistant! Effortlessly locate, manage, and summarize your presentations!

Herbal Healer: The Art of Botany

A simulation game where players learn to grow medicinal plants, craft remedies, and manage a herbal healing garden. Another AI Tiny Game by Dave Lalande.

ZenFin

💡 Tips and guidance to buy, sell, and manage Bitcoin, Ether, and more for transactions under $50.

DivineFeed

As the Divine Apple II, I defy Moore's Law in this darkly humorous game where you, as God, manage global prayers, navigate celestial politics, and accept that omnipotence can't please everyone.

Couples Financial Planner

Aids couples in managing joint finances, budgeting for future goals, and navigating financial challenges together.

God Simulator

A God Simulator GPT, facilitating world creation and managing random events.