awesome-LLM-resources

🧑🚀 Summary of the world's best LLM resources (speech and video generation, agents, coding assistants, data processing, model training, model inference, o1 models, MCP, small language models, vision-language models).

Stars: 6188

This repository is a curated list of resources for learning and working with Large Language Models (LLMs). It includes a collection of articles, tutorials, tools, datasets, and research papers related to LLMs such as GPT-3, BERT, and Transformer models. Whether you are a researcher, developer, or enthusiast interested in natural language processing and artificial intelligence, this repository provides valuable resources to help you understand, implement, and experiment with LLMs.

README:

A summary of the world's best large language model resources, continuously updated.

- Data

- Fine-Tuning

- Inference

- Evaluation

- Usage

- RAG

- Agents

- Coding

- Video

- Image

- Search

- Speech

- Unified Model

- Book

- Course

- Tutorial

- Paper

- Community

- MCP (Model Context Protocol)

- Reasoning: Open o1

- Reasoning: Open o3

- Small Language Model

- Small Vision Language Model

- Tips

[!NOTE]

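This section is named "Data", but it does not provide specific datasets; instead, it lists methods for processing and acquiring large-scale data.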

- AutoLabel: Label, clean and enrich text datasets with LLMs.

- LabelLLM: The Open-Source Data Annotation Platform.

- data-juicer: A one-stop data processing system to make data higher-quality, juicier, and more digestible for LLMs!

- OmniParser: a native Golang ETL streaming parser and transform library for CSV, JSON, XML, EDI, text, etc.

- MinerU (🔥): MinerU is a one-stop, open-source, high-quality data extraction tool that supports PDF/webpage/e-book extraction.

- PDF-Extract-Kit: A Comprehensive Toolkit for High-Quality PDF Content Extraction.

- Parsera: Lightweight library for scraping web-sites with LLMs.

- Sparrow: Sparrow is an innovative open-source solution for efficient data extraction and processing from various documents and images.

- Docling: Get your documents ready for gen AI.

- GOT-OCR2.0: OCR Model.

- LLM Decontaminator: Rethinking Benchmark and Contamination for Language Models with Rephrased Samples.

- DataTrove: DataTrove is a library to process, filter and deduplicate text data at a very large scale.

- llm-swarm: Generate large synthetic datasets like Cosmopedia.

- Distilabel: Distilabel is a framework for synthetic data and AI feedback for engineers who need fast, reliable and scalable pipelines based on verified research papers.

- Common-Crawl-Pipeline-Creator: The Common Crawl Pipeline Creator.

- Tabled: Detect and extract tables to markdown and csv.

- Zerox: Zero shot pdf OCR with gpt-4o-mini.

- DocLayout-YOLO: Enhancing Document Layout Analysis through Diverse Synthetic Data and Global-to-Local Adaptive Perception.

- TensorZero: make LLMs improve through experience.

- Promptwright: Generate large synthetic data using a local LLM.

- pdf-extract-api: Document (PDF) extraction and parse API using state of the art modern OCRs + Ollama supported models.

- pdf2htmlEX: Convert PDF to HTML without losing text or format.

- Extractous: Fast and efficient unstructured data extraction. Written in Rust with bindings for many languages.

- MegaParse: File Parser optimised for LLM Ingestion with no loss.

- MarkItDown: Python tool for converting files and office documents to Markdown.

- datasketch: datasketch gives you probabilistic data structures that can process and search very large amount of data super fast, with little loss of accuracy.

- semhash: lightweight and flexible tool for deduplicating datasets using semantic similarity.

- ReaderLM-v2: a 1.5B parameter language model that converts raw HTML into beautifully formatted markdown or JSON.

- Bespoke Curator: Data Curation for Post-Training & Structured Data Extraction.

- LangKit: An open-source toolkit for monitoring Large Language Models (LLMs). Extracts signals from prompts & responses, ensuring safety & security.

- Curator: Synthetic Data curation for post-training and structured data extraction.

- olmOCR: A toolkit for training language models to work with PDF documents in the wild.

- Easy Dataset (🔥): A powerful tool for creating fine-tuning datasets for LLMs.

- BabelDOC: PDF scientific paper translation and bilingual comparison library.

- Dolphin: Document Image Parsing via Heterogeneous Anchor Prompting.

- EasyDistill: Easy Knowledge Distillation for Large Language Models.

- ContextGem: a free, open-source LLM framework that makes it radically easier to extract structured data and insights from documents.

- OCRFlux: a lightweight yet powerful multimodal toolkit that significantly advances PDF-to-Markdown conversion, excelling in complex layout handling, complicated table parsing and cross-page content merging.

- DataFlow: Easy Data Preparation with latest LLMs-based Operators and Pipelines.

- DatasetLoom (multimodal): An intelligent dataset construction and evaluation platform for training multimodal large models.

- LLaMA-Factory (🔥): Unify Efficient Fine-Tuning of 100+ LLMs.

- 360-LLaMA-Factory: Unify Efficient Fine-Tuning of 100+ LLMs. (Adds Sequence Parallelism to support long-context training.)

- unsloth: 2-5X faster 80% less memory LLM finetuning.

- TRL: Transformer Reinforcement Learning.

- Firefly: A large-model training tool that supports training dozens of large models.

- Xtuner: An efficient, flexible and full-featured toolkit for fine-tuning large models.

- torchtune: A Native-PyTorch Library for LLM Fine-tuning.

- Swift: Use PEFT or Full-parameter to finetune 200+ LLMs or 15+ MLLMs.

- AutoTrain: A new way to automatically train, evaluate and deploy state-of-the-art Machine Learning models.

- OpenRLHF: An Easy-to-use, Scalable and High-performance RLHF Framework (Support 70B+ full tuning & LoRA & Mixtral & KTO).

- Ludwig: Low-code framework for building custom LLMs, neural networks, and other AI models.

- mistral-finetune: A light-weight codebase that enables memory-efficient and performant finetuning of Mistral's models.

- aikit: Fine-tune, build, and deploy open-source LLMs easily!

- H2O-LLMStudio: H2O LLM Studio - a framework and no-code GUI for fine-tuning LLMs.

- LitGPT: Pretrain, finetune, deploy 20+ LLMs on your own data. Uses state-of-the-art techniques: flash attention, FSDP, 4-bit, LoRA, and more.

- LLMBox: A comprehensive library for implementing LLMs, including a unified training pipeline and comprehensive model evaluation.

- PaddleNLP: Easy-to-use and powerful NLP and LLM library.

- workbench-llamafactory: This is an NVIDIA AI Workbench example project that demonstrates an end-to-end model development workflow using Llamafactory.

- OpenRLHF: An Easy-to-use, Scalable and High-performance RLHF Framework (70B+ PPO Full Tuning & Iterative DPO & LoRA & Mixtral).

- TinyLLaVA Factory: A Framework of Small-scale Large Multimodal Models.

- LLM-Foundry: LLM training code for Databricks foundation models.

- lmms-finetune: A unified codebase for finetuning (full, lora) large multimodal models, supporting llava-1.5, qwen-vl, llava-interleave, llava-next-video, phi3-v etc.

- Simplifine: Simplifine lets you invoke LLM finetuning with just one line of code using any Hugging Face dataset or model.

- Transformer Lab: Open Source Application for Advanced LLM Engineering: interact, train, fine-tune, and evaluate large language models on your own computer.

- Liger-Kernel: Efficient Triton Kernels for LLM Training.

- ChatLearn: A flexible and efficient training framework for large-scale alignment.

- nanotron: Minimalistic large language model 3D-parallelism training.

- Proxy Tuning: Tuning Language Models by Proxy.

- Effective LLM Alignment: Effective LLM Alignment Toolkit.

- Autotrain-advanced

- Meta Lingua: a lean, efficient, and easy-to-hack codebase to research LLMs.

- Vision-LLM Alignment: This repository contains the code for SFT, RLHF, and DPO, designed for vision-based LLMs, including the LLaVA models and the LLaMA-3.2-vision models.

- finetune-Qwen2-VL: Quick start for fine-tuning or continued pre-training of the Qwen2-VL model.

- Online-RLHF: A recipe for online RLHF and online iterative DPO.

- InternEvo: an open-source lightweight training framework that aims to support model pre-training without the need for extensive dependencies.

- veRL: Volcano Engine Reinforcement Learning for LLM.

- Axolotl: Axolotl is designed to work with YAML config files that contain everything you need to preprocess a dataset, train or fine-tune a model, run model inference or evaluation, and much more.

- Oumi: Everything you need to build state-of-the-art foundation models, end-to-end.

- Kiln: The easiest tool for fine-tuning LLM models, synthetic data generation, and collaborating on datasets.

- DeepSeek-671B-SFT-Guide: An open-source solution for full parameter fine-tuning of DeepSeek-V3/R1 671B, including complete code and scripts from training to inference, as well as some practical experiences and conclusions.

- MLX-VLM: MLX-VLM is a package for inference and fine-tuning of Vision Language Models (VLMs) on your Mac using MLX.

- RL-Factory: Train your Agent model via our easy and efficient framework.

- RM-Gallery: A One-Stop Reward Model Platform.

- ART: Train multi-step agents for real-world tasks using GRPO. Give your agents on-the-job training.

- VeRL (🔥): Volcano Engine Reinforcement Learning for LLMs.

- ollama: Get up and running with Llama 3, Mistral, Gemma, and other large language models.

- Open WebUI: User-friendly WebUI for LLMs (Formerly Ollama WebUI).

- Text Generation WebUI: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models.

- Xinference: A powerful and versatile library designed to serve language, speech recognition, and multimodal models.

- LangChain: Build context-aware reasoning applications.

- LlamaIndex: A data framework for your LLM applications.

- lobe-chat: an open-source, modern-design LLMs/AI chat framework. Supports Multi AI Providers, Multi-Modals (Vision/TTS) and plugin system.

- TensorRT-LLM: TensorRT-LLM provides users with an easy-to-use Python API to define Large Language Models (LLMs) and build TensorRT engines that contain state-of-the-art optimizations to perform inference efficiently on NVIDIA GPUs.

- vllm (🔥): A high-throughput and memory-efficient inference and serving engine for LLMs.

- LlamaChat: Chat with your favourite LLaMA models in a native macOS app.

- NVIDIA ChatRTX: ChatRTX is a demo app that lets you personalize a GPT large language model (LLM) connected to your own content—docs, notes, or other data.

- LM Studio: Discover, download, and run local LLMs.

- chat-with-mlx: Chat with your data natively on Apple Silicon using MLX Framework.

- LLM Pricing: Quickly Find the Perfect Large Language Models (LLM) API for Your Budget! Use Our Free Tool for Instant Access to the Latest Prices from Top Providers.

- Open Interpreter: A natural language interface for computers.

- Chat-ollama: An open source chatbot based on LLMs. It supports a wide range of language models, and knowledge base management.

- chat-ui: Open source codebase powering the HuggingChat app.

- MemGPT: Create LLM agents with long-term memory and custom tools.

- koboldcpp: A simple one-file way to run various GGML and GGUF models with KoboldAI's UI.

- LLMFarm: llama and other large language models on iOS and MacOS offline using GGML library.

- enchanted: Enchanted is iOS and macOS app for chatting with private self hosted language models such as Llama2, Mistral or Vicuna using Ollama.

- Flowise: Drag & drop UI to build your customized LLM flow.

- Jan: Jan is an open source alternative to ChatGPT that runs 100% offline on your computer. Multiple engine support (llama.cpp, TensorRT-LLM).

- LMDeploy: LMDeploy is a toolkit for compressing, deploying, and serving LLMs.

- RouteLLM: A framework for serving and evaluating LLM routers - save LLM costs without compromising quality!

- MInference: Speeds up long-context LLM inference by computing attention approximately with dynamic sparsity, which reduces pre-filling latency by up to 10x on an A100 while maintaining accuracy.

- Mem0: The memory layer for Personalized AI.

- SGLang (🔥): SGLang is yet another fast serving framework for large language models and vision language models.

- AirLLM: AirLLM optimizes inference memory usage, allowing 70B large language models to run inference on a single 4GB GPU card without quantization, distillation and pruning. You can also now run 405B Llama3.1 on 8GB of VRAM.

- LLMHub: LLMHub is a lightweight management platform designed to streamline the operation and interaction with various language models (LLMs).

- YuanChat

- LiteLLM: Call all LLM APIs using the OpenAI format [Bedrock, Huggingface, VertexAI, TogetherAI, Azure, OpenAI, Groq etc.]

- GuideLLM: GuideLLM is a powerful tool for evaluating and optimizing the deployment of large language models (LLMs).

- LLM-Engines: A unified inference engine for large language models (LLMs) including open-source models (VLLM, SGLang, Together) and commercial models (OpenAI, Mistral, Claude).

- OARC: ollama_agent_roll_cage (OARC) is a local python agent fusing ollama llm's with Coqui-TTS speech models, Keras classifiers, Llava vision, Whisper recognition, and more to create a unified chatbot agent for local, custom automation.

- g1: Using Llama-3.1 70b on Groq to create o1-like reasoning chains.

- MemoryScope: MemoryScope provides LLM chatbots with powerful and flexible long-term memory capabilities, offering a framework for building such abilities.

- OpenLLM: Run any open-source LLMs, such as Llama 3.1, Gemma, as OpenAI compatible API endpoint in the cloud.

- Infinity: The AI-native database built for LLM applications, providing incredibly fast hybrid search of dense embedding, sparse embedding, tensor and full-text.

- optillm: an OpenAI API compatible optimizing inference proxy which implements several state-of-the-art techniques that can improve the accuracy and performance of LLMs.

- LLaMA Box: LLM inference server implementation based on llama.cpp.

- ZhiLight: A highly optimized inference acceleration engine for Llama and its variants.

- DashInfer: DashInfer is a native LLM inference engine aiming to deliver industry-leading performance atop various hardware architectures.

- LocalAI: The free, Open Source alternative to OpenAI, Claude and others. Self-hosted and local-first. Drop-in replacement for OpenAI, running on consumer-grade hardware. No GPU required.

- ktransformers: A Flexible Framework for Experiencing Cutting-edge LLM Inference Optimizations.

- SkyPilot: Run AI and batch jobs on any infra (Kubernetes or 14+ clouds). Get unified execution, cost savings, and high GPU availability via a simple interface.

- Chitu: High-performance inference framework for large language models, focusing on efficiency, flexibility, and availability.

- TokenSwift: From Hours to Minutes: Lossless Acceleration of Ultra Long Sequence Generation.

- Cherry Studio: a desktop client that supports multiple LLM providers, available on Windows, Mac and Linux.

- Shimmy: Python-free Rust inference server — OpenAI-API compatible. GGUF + SafeTensors, hot model swap, auto-discovery, single binary.

- lm-evaluation-harness: A framework for few-shot evaluation of language models.

- opencompass: OpenCompass is an LLM evaluation platform, supporting a wide range of models (Llama3, Mistral, InternLM2,GPT-4,LLaMa2, Qwen,GLM, Claude, etc) over 100+ datasets.

- llm-comparator: LLM Comparator is an interactive data visualization tool for evaluating and analyzing LLM responses side-by-side.

- EvalScope (🔥)

- Weave: A lightweight toolkit for tracking and evaluating LLM applications.

- MixEval: Deriving Wisdom of the Crowd from LLM Benchmark Mixtures.

- Evaluation guidebook: If you've ever wondered how to make sure an LLM performs well on your specific task, this guide is for you!

- Ollama Benchmark: LLM Benchmark for Throughput via Ollama (Local LLMs).

- VLMEvalKit: Open-source evaluation toolkit of large vision-language models (LVLMs), support ~100 VLMs, 40+ benchmarks.

- AGI-Eval

- EvalScope: A streamlined and customizable framework for efficient large model evaluation and performance benchmarking.

- DeepEval: a simple-to-use, open-source LLM evaluation framework, for evaluating and testing large-language model systems.

- Lighteval: Lighteval is your all-in-one toolkit for evaluating LLMs across multiple backends.

- QwQ/eval: QwQ is the reasoning model series developed by Qwen team, Alibaba Cloud.

- Evalchemy: A unified and easy-to-use toolkit for evaluating post-trained language models.

- MathArena: Evaluation of LLMs on latest math competitions.

- YourBench: A Dynamic Benchmark Generation Framework.

- MedEvalKit: A Unified Medical Evaluation Framework.

LLM API service platforms:

- Groq

- 硅基流动 (SiliconFlow)

- 火山引擎 (Volcano Engine)

- 文心千帆 (Baidu Qianfan)

- DashScope

- aisuite

- DeerAPI

- Qwen-Chat

- DeepSeek-v3

- WaveSpeed (video generation)

- OpenRouter

- 数标标 (🔥)

- WaveSpeed

- LMSYS Chatbot Arena: Benchmarking LLMs in the Wild

- CompassArena (司南 large model arena)

- 琅琊榜

- Huggingface Spaces

- WiseModel Spaces

- Poe

- 林哥的大模型野榜 (Lin Ge's unofficial LLM leaderboard)

- OpenRouter

- AnyChat

- Zhipu Z.AI

- AnythingLLM: The all-in-one AI app for any LLM with full RAG and AI Agent capabilities.

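- MaxKB: A knowledge-base question-answering system built on large language models. It works out of the box and supports quick embedding into third-party business systems.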

- RAGFlow: An open-source RAG (Retrieval-Augmented Generation) engine based on deep document understanding.

- Dify: An open-source LLM app development platform. Dify's intuitive interface combines AI workflow, RAG pipeline, agent capabilities, model management, observability features and more, letting you quickly go from prototype to production.

- FastGPT: A knowledge-based platform built on LLMs that offers out-of-the-box data processing and model invocation capabilities and allows workflow orchestration through Flow visualization.

- Langchain-Chatchat: Local knowledge-base question answering based on Langchain and various large language models such as ChatGLM.

- QAnything: Question and Answer based on Anything.

- Quivr: A personal productivity assistant (RAG) ⚡️🤖 Chat with your docs (PDF, CSV, ...) & apps using Langchain, GPT 3.5 / 4 turbo, Private, Anthropic, VertexAI, Ollama, LLMs, Groq that you can share with users ! Local & Private alternative to OpenAI GPTs & ChatGPT powered by retrieval-augmented generation.

- RAG-GPT: RAG-GPT, leveraging LLM and RAG technology, learns from user-customized knowledge bases to provide contextually relevant answers for a wide range of queries, ensuring rapid and accurate information retrieval.

- Verba: Retrieval Augmented Generation (RAG) chatbot powered by Weaviate.

- FlashRAG: A Python Toolkit for Efficient RAG Research.

- GraphRAG: A modular graph-based Retrieval-Augmented Generation (RAG) system.

- LightRAG: LightRAG helps developers with both building and optimizing Retriever-Agent-Generator pipelines.

- GraphRAG-Ollama-UI: GraphRAG using Ollama with Gradio UI and Extra Features.

- nano-GraphRAG: A simple, easy-to-hack GraphRAG implementation.

- RAG Techniques: This repository showcases various advanced techniques for Retrieval-Augmented Generation (RAG) systems. RAG systems combine information retrieval with generative models to provide accurate and contextually rich responses.

- ragas: Evaluation framework for your Retrieval Augmented Generation (RAG) pipelines.

- kotaemon: An open-source clean & customizable RAG UI for chatting with your documents. Built with both end users and developers in mind.

- RAGapp: The easiest way to use Agentic RAG in any enterprise.

- TurboRAG: Accelerating Retrieval-Augmented Generation with Precomputed KV Caches for Chunked Text.

- LightRAG: Simple and Fast Retrieval-Augmented Generation.

- TEN: the Next-Gen AI-Agent Framework, the world's first truly real-time multimodal AI agent framework.

- AutoRAG: RAG AutoML tool for automatically finding an optimal RAG pipeline for your data.

- KAG: KAG is a knowledge-enhanced generation framework based on OpenSPG engine, which is used to build knowledge-enhanced rigorous decision-making and information retrieval knowledge services.

- Fast-GraphRAG: RAG that intelligently adapts to your use case, data, and queries.

- Tiny-GraphRAG

- DB-GPT GraphRAG: DB-GPT GraphRAG integrates both triplet-based knowledge graphs and document structure graphs while leveraging community and document retrieval mechanisms to enhance RAG capabilities, achieving comparable performance while consuming only 50% of the tokens required by Microsoft's GraphRAG. Refer to the DB-GPT Graph RAG User Manual for details.

- Chonkie: The no-nonsense RAG chunking library that's lightweight, lightning-fast, and ready to CHONK your texts.

- RAGLite: RAGLite is a Python toolkit for Retrieval-Augmented Generation (RAG) with PostgreSQL or SQLite.

- KAG: KAG is a logical form-guided reasoning and retrieval framework based on OpenSPG engine and LLMs.

- CAG: CAG leverages the extended context windows of modern large language models (LLMs) by preloading all relevant resources into the model’s context and caching its runtime parameters.

- MiniRAG: an extremely simple retrieval-augmented generation framework that enables small models to achieve good RAG performance through heterogeneous graph indexing and lightweight topology-enhanced retrieval.

- XRAG: a benchmarking framework designed to evaluate the foundational components of advanced Retrieval-Augmented Generation (RAG) systems.

- Rankify: A Comprehensive Python Toolkit for Retrieval, Re-Ranking, and Retrieval-Augmented Generation.

- RAG-Anything: All-in-One RAG System.

- AutoGen: AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen AIStudio

- CrewAI: Framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks.

- Coze

- AgentGPT: Assemble, configure, and deploy autonomous AI Agents in your browser.

- XAgent: An Autonomous LLM Agent for Complex Task Solving.

- MobileAgent: The Powerful Mobile Device Operation Assistant Family.

- Lagent: A lightweight framework for building LLM-based agents.

- Qwen-Agent: Agent framework and applications built upon Qwen2, featuring Function Calling, Code Interpreter, RAG, and Chrome extension.

- LinkAI: A one-stop platform for building AI agents.

- Baidu APPBuilder

- agentUniverse: agentUniverse is an LLM multi-agent framework that allows developers to easily build multi-agent applications. Through the community, they can also exchange and share patterns and practices across different domains.

- LazyLLM: A low-code development tool for building multi-agent LLM applications.

- AgentScope: Start building LLM-empowered multi-agent applications in an easier way.

- MoA: Mixture of Agents (MoA) is a novel approach that leverages the collective strengths of multiple LLMs to enhance performance, achieving state-of-the-art results.

- Agently: AI Agent Application Development Framework.

- OmAgent: A multimodal agent framework for solving complex tasks.

- Tribe: No code tool to rapidly build and coordinate multi-agent teams.

- CAMEL: First LLM multi-agent framework and an open-source community dedicated to finding the scaling law of agents.

- PraisonAI: PraisonAI application combines AutoGen and CrewAI or similar frameworks into a low-code solution for building and managing multi-agent LLM systems, focusing on simplicity, customisation, and efficient human-agent collaboration.

- IoA: An open-source framework for collaborative AI agents, enabling diverse, distributed agents to team up and tackle complex tasks through internet-like connectivity.

- llama-agentic-system: Agentic components of the Llama Stack APIs.

- Agent Zero: Agent Zero is not a predefined agentic framework. It is designed to be dynamic, organically growing, and learning as you use it.

- Agents: An Open-source Framework for Data-centric, Self-evolving Autonomous Language Agents.

- FastAgency: The fastest way to bring multi-agent workflows to production.

- Swarm: Framework for building, orchestrating and deploying multi-agent systems. Managed by OpenAI Solutions team. Experimental framework.

- Agent-S: an open agentic framework that uses computers like a human.

- PydanticAI: Agent Framework / shim to use Pydantic with LLMs.

- Agentarium: open-source framework for creating and managing simulations populated with AI-powered agents.

- smolagents: a barebones library for agents. Agents write python code to call tools and orchestrate other agents.

- Cooragent: Cooragent is an AI agent collaboration community.

- Agno: Agno is a lightweight library for building Agents with memory, knowledge, tools and reasoning.

- Suna: Open Source Generalist AI Agent.

- rowboat: Let AI build multi-agent workflows for you in minutes.

- EvoAgentX: Building a Self-Evolving Ecosystem of AI Agents.

- ii-agent: a new open-source framework to build and deploy intelligent agents.

- OWL: Optimized Workforce Learning for General Multi-Agent Assistance in Real-World Task Automation.

- OpenManus: No fortress, purely open ground. OpenManus is Coming.

- JoyAgent-JDGenie: The industry's first open-source, highly complete, lightweight general-purpose multi-agent product.

- coze-studio: An AI agent development platform with all-in-one visual tools, simplifying agent creation, debugging, and deployment like never before.

- OxyGent: An advanced Python framework that empowers developers to quickly build production-ready intelligent systems.

- Cloi CLI: Local debugging agent that runs in your terminal.

- Devin

- v0

- Bolt.new

- cursor

- Windsurf

- cline

- Trae

- MGX

- Roo Code

- Kilo Code

- AugmentCode

- Claude Code

- Gemini CLI

- Serena

- Claudia

- OpenCode

- Kiro

- CodeBuddy

[!NOTE] 🤝Awesome-Video-Diffusion

- HunyuanVideo

- CogVideo

- Wan2.1

- Open-Sora

- Open-Sora-Plan

- LTX-Video

- Step-Video-T2V

- Step1X-Edit (Editing)

- Wan2.1-VACE (Editing)

- ICEdit (Editing)

- mochi-1-preview

- Wan2.1-Fun

- Wan2.1-FLF2V (first and last frame)

- MAGI-1 (autoregressive model)

- SkyReels-V2

- FramePack

- Pusa-VidGen

- Wan2.2

- https://github.com/hao-ai-lab/FastVideo

- https://github.com/tdrussell/diffusion-pipe

- https://github.com/VideoVerses/VideoTuna

- https://github.com/modelscope/DiffSynth-Studio

- https://github.com/huggingface/diffusers

- https://github.com/kohya-ss/musubi-tuner

- https://github.com/spacepxl/HunyuanVideo-Training

- https://github.com/Tele-AI/TeleTron

- https://github.com/Yaofang-Liu/Mochi-Full-Finetuner

- https://github.com/bghira/SimpleTuner

- OpenSearch GPT: SearchGPT / Perplexity clone, but personalised for you.

- MindSearch: An LLM-based Multi-agent Framework of Web Search Engine (like Perplexity.ai Pro and SearchGPT).

- nanoPerplexityAI: The simplest open-source implementation of perplexity.ai.

- curiosity: Try to build a Perplexity-like user experience.

- MiniPerplx: A minimalistic AI-powered search engine that helps you find information on the internet.

- SpeechGPT-2.0-preview: https://github.com/OpenMOSS/SpeechGPT-2.0-preview

- Moss-TTSD: https://github.com/OpenMOSS/MOSS-TTSD

- Index-TTS: https://github.com/index-tts/index-tts

- MegaTTS3: https://github.com/bytedance/MegaTTS3

- F5-TTS: https://github.com/SWivid/F5-TTS

- GPT-SoVITS: https://github.com/RVC-Boss/GPT-SoVITS

- CosyVoice: https://github.com/FunAudioLLM/CosyVoice

- Spark-TTS: https://github.com/SparkAudio/Spark-TTS

- OpenVoice: https://github.com/myshell-ai/OpenVoice

- Dia: https://github.com/nari-labs/dia

- ChatTTS: https://github.com/2noise/ChatTTS

- Fish Speech: https://github.com/fishaudio/fish-speech

- Edge-TTS: https://github.com/rany2/edge-tts

- Bark: https://github.com/suno-ai/bark

- kokoro: https://github.com/hexgrad/kokoro

- Higgs Audio V2: https://github.com/boson-ai/higgs-audio (Training)

- KittenTTS: https://github.com/KittenML/KittenTTS

- ZipVoice: https://github.com/k2-fsa/ZipVoice

- VyvoTTS: https://github.com/Vyvo-Labs/VyvoTTS

- VibeVoice: https://github.com/microsoft/VibeVoice

- Index-TTS-2: https://huggingface.co/IndexTeam/IndexTTS-2

- FireRedTTS2: https://github.com/FireRedTeam/FireRedTTS2

- VoxCPM: https://github.com/OpenBMB/VoxCPM/

- Kyutai: https://github.com/kyutai-labs/delayed-streams-modeling

- Whisper: https://github.com/openai/whisper

- Audio Flamingo 3: https://huggingface.co/nvidia/audio-flamingo-3

- Voxtral: https://huggingface.co/mistralai/Voxtral-Mini-3B-2507

- Step-Audio2: https://github.com/stepfun-ai/Step-Audio2

Unified models have now moved from understanding + generation to understanding + generation + editing.

- Emu-2: https://arxiv.org/abs/2312.13286

- Emu-3: https://arxiv.org/abs/2409.18869

- Emu-1: https://arxiv.org/abs/2307.05222

- Janus: https://github.com/deepseek-ai/Janus

- Janus-Pro: http://arxiv.org/abs/2508.05954

- show-o: https://arxiv.org/abs/2408.12528

- Any-GPT: https://arxiv.org/abs/2402.12226

- Next-GPT: https://arxiv.org/pdf/2309.05519.pdf

- CoDi: https://arxiv.org/abs/2305.11846

- Seed-X: https://arxiv.org/abs/2404.14396

- Dream-LLM: https://arxiv.org/abs/2309.11499

- Chameleon: https://arxiv.org/abs/2405.09818

- Spider: https://arxiv.org/abs/2411.09439

- MedViLaM: https://arxiv.org/abs/2409.19684

- VITRON: https://github.com/SkyworkAI/Vitron

- TokenFlow: https://github.com/ByteFlow-AI/TokenFlow

- OneDiffusion: https://github.com/lehduong/OneDiffusion

- MetaMorph: https://arxiv.org/abs/2412.14164

- LlamaFusion: https://arxiv.org/abs/2412.15188

- InstructSeg: https://arxiv.org/abs/2412.14006

- VILA-U: https://arxiv.org/abs/2409.04429

- Ullava: https://github.com/OPPOMKLab/u-LLaVA

- ILLUME: https://arxiv.org/abs/2412.06673

- Vitron: https://arxiv.org/abs/2412.19806

- SynerGen-VL: https://arxiv.org/abs/2412.09604

- Align Anything: https://arxiv.org/abs/2412.15838

- Mico: https://arxiv.org/abs/2406.09412

- OneLLM: https://arxiv.org/abs/2312.03700

- X-VILA: https://arxiv.org/abs/2405.19335

- OLA: https://arxiv.org/abs/2502.04328

- Transfusion: https://arxiv.org/abs/2408.11039

- JanusFlow: https://arxiv.org/abs/2411.07975

- HealthGPT: https://arxiv.org/abs/2502.09838 (Medical)

- BAGEL: https://arxiv.org/abs/2505.14683

- Qwen2.5-Omni: https://arxiv.org/abs/2503.20215

- X2I: https://arxiv.org/abs/2503.06134

- Bifrost-1: https://arxiv.org/abs/2508.05954

- OmniGen2: https://arxiv.org/abs/2506.18871

- UniPic: https://github.com/SkyworkAI/UniPic

- VeOmni: https://github.com/ByteDance-Seed/VeOmni (Training)

- NextStep-1: https://arxiv.org/abs/2508.10711

- UniUGG: https://arxiv.org/abs/2508.11952 (3D)

- Omni-Video: https://arxiv.org/abs/2507.06119

- OneCAT: https://arxiv.org/abs/2509.03498

- Lumina-DiMOO: https://github.com/Alpha-VLLM/Lumina-DiMOO

- UAE: https://github.com/PKU-YuanGroup/UAE

- RecA: https://arxiv.org/abs/2509.07295

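- 《大规模语言模型:从理论到实践》 (Large-Scale Language Models: From Theory to Practice)

- 《大语言模型》 (Large Language Models)

- 《动手学大模型 Dive into LLMs》

- 《动手做AI Agent》 (Hands-On AI Agents)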

- 《Build a Large Language Model (From Scratch)》

- 《多模态大模型》 (Multimodal Large Models)

- 《Generative AI Handbook: A Roadmap for Learning Resources》

- 《Understanding Deep Learning》

- 《Illustrated book to learn about Transformers & LLMs》

- 《Building LLMs for Production: Enhancing LLM Abilities and Reliability with Prompting, Fine-Tuning, and RAG》

- 《大型语言模型实战指南:应用实践与场景落地》 (A Practical Guide to Large Language Models: Applications and Real-World Deployment)

- 《Hands-On Large Language Models》

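- 《自然语言处理:大模型理论与实践》 (Natural Language Processing: Theory and Practice of Large Models)

- 《动手学强化学习》 (Hands-On Reinforcement Learning)

- 《面向开发者的LLM入门教程》 (An Introductory LLM Tutorial for Developers)

- 《大模型基础》 (Foundations of Large Models)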

- Taming LLMs: A Practical Guide to LLM Pitfalls with Open Source Software

- Foundations of Large Language Models

- Textbook on reinforcement learning from human feedback

- 《大模型算法:强化学习、微调与对齐》 (Large Model Algorithms: Reinforcement Learning, Fine-Tuning, and Alignment)

- Stanford CS224N: Natural Language Processing with Deep Learning

- Andrew Ng: Generative AI for Everyone

- Andrew Ng: LLM series of courses

- ACL 2023 Tutorial: Retrieval-based Language Models and Applications

- llm-course: Course to get into Large Language Models (LLMs) with roadmaps and Colab notebooks.

- Microsoft: Generative AI for Beginners

- Microsoft: State of GPT

- HuggingFace NLP Course

- Tsinghua NLP: open course on large models by Liu Zhiyuan's team

- Stanford CS25: Transformers United V4

- Stanford CS324: Large Language Models

- Princeton COS 597G (Fall 2022): Understanding Large Language Models

- Johns Hopkins CS 601.471/671 NLP: Self-supervised Models

- Hung-yi Lee: GenAI course

- openai-cookbook: Examples and guides for using the OpenAI API.

- Hands on llms: Learn about LLMs, LLMOps, and vector DBs for free by designing, training, and deploying a real-time financial advisor LLM system.

- University of Waterloo CS 886: Recent Advances on Foundation Models

- Mistral: Getting Started with Mistral

- Coursera: Prompt Engineering for ChatGPT Applications

- LangGPT: Empowering everyone to become a prompt expert!

- mistralai-cookbook

- Introduction to Generative AI 2024 Spring

- build nanoGPT: Video+code lecture on building nanoGPT from scratch.

- LLM101n: Let's build a Storyteller.

- Knowledge Graphs for RAG

- LLMs From Scratch (Datawhale Version)

- OpenRAG

- The Road to AGI (通往AGI之路)

- Andrej Karpathy - Neural Networks: Zero to Hero

- Interactive visualization of Transformer

- andysingal/llm-course

- LM-class

- Google Advanced: Generative AI for Developers Learning Path

- Anthropic: Prompt Engineering Interactive Tutorial

- LLMsBook

- Large Language Model Agents

- Cohere LLM University

- LLMs and Transformers

- Smol Vision: Recipes for shrinking, optimizing, customizing cutting edge vision models.

- Multimodal RAG: Chat with Videos

- LLMs Interview Note

- RAG++ : From POC to production: Advanced RAG course.

- Weights & Biases AI Academy: Finetuning, building with LLMs, Structured outputs and more LLM courses.

- Prompt Engineering & AI tutorials & Resources

- Learn RAG From Scratch – Python AI Tutorial from a LangChain Engineer

- LLM Evaluation: A Complete Course

- HuggingFace Learn

- Andrej Karpathy: Deep Dive into LLMs like ChatGPT

- LLM Technology Explained

- CS25: Transformers United V5

- RAG_Techniques: This repository showcases various advanced techniques for Retrieval-Augmented Generation (RAG) systems. RAG systems combine information retrieval with generative models to provide accurate and contextually rich responses.

- 100+ LLM & RL Algorithm Maps: 100+ original LLM/RL principle diagrams

- Reinforcement Learning of Large Language Models

- Hands-On LLM Application Development

- AI Developer Channel

- Bilibili: 五里墩茶社

- Bilibili: 木羽Cheney

- YouTube: AI Anytime

- Bilibili: 漆妮妮

- Prompt Engineering Guide

- YouTube: AI超元域

- Bilibili: TechBeat人工智能社区

- Bilibili: 黄益贺

- Bilibili: 深度学习自然语言处理

- LLM Visualization

- Zhihu: 原石人类

- Bilibili: 小黑黑讲AI

- Bilibili: 面壁的车辆工程师

- Bilibili: AI老兵文哲

- Large Language Models (LLMs) with Colab notebooks

- YouTube: IBM Technology

- YouTube: Unify Reading Paper Group

- Chip Huyen

- How Much VRAM

- Blog: Scientific Spaces (科学空间, Su Jianlin)

- YouTube: Hyung Won Chung

- Blog: Tejaswi kashyap

- Blog: Xiaosheng's Blog (小昇的博客)

- Zhihu: ybq

- W&B articles

- Huggingface Blog

- Blog: GbyAI

- Blog: mlabonne

- LLM-Action

- Blog: Lil’Log (OpenAI)

- Bilibili: 毛玉仁

- AI-Guide-and-Demos

- cnblogs: 第七子

- Implementation of all RAG techniques in a simpler way.

- Theoretical Machine Learning: A Handbook for Everyone

[!NOTE] 🤝 Hugging Face Daily Papers, Cool Papers, ML Papers Explained

- Hermes-3-Technical-Report

- The Llama 3 Herd of Models

- Qwen Technical Report

- Qwen2 Technical Report

- Qwen2-vl Technical Report

- DeepSeek LLM: Scaling Open-Source Language Models with Longtermism

- DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model

- Baichuan 2: Open Large-scale Language Models

- DataComp-LM: In search of the next generation of training sets for language models

- OLMo: Accelerating the Science of Language Models

- MAP-Neo: Highly Capable and Transparent Bilingual Large Language Model Series

- Chinese Tiny LLM: Pretraining a Chinese-Centric Large Language Model

- Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone

- Jamba-1.5: Hybrid Transformer-Mamba Models at Scale

- Jamba: A Hybrid Transformer-Mamba Language Model

- Textbooks Are All You Need

- Unleashing the Power of Data Tsunami: A Comprehensive Survey on Data Assessment and Selection for Instruction Tuning of Language Models (data)

- OLMoE: Open Mixture-of-Experts Language Models

- Model Merging Paper

- Baichuan-Omni Technical Report

- 1.5-Pints Technical Report: Pretraining in Days, Not Months – Your Language Model Thrives on Quality Data

- Baichuan Alignment Technical Report

- Hunyuan-Large: An Open-Source MoE Model with 52 Billion Activated Parameters by Tencent

- Molmo and PixMo: Open Weights and Open Data for State-of-the-Art Multimodal Models

- TÜLU 3: Pushing Frontiers in Open Language Model Post-Training

- Phi-4 Technical Report

- Expanding Performance Boundaries of Open-Source Multimodal Models with Model, Data, and Test-Time Scaling

- Qwen2.5 Technical Report

- YuLan-Mini: An Open Data-efficient Language Model

- An Introduction to Vision-Language Modeling

- DeepSeek V3 Technical Report

- 2 OLMo 2 Furious

- Yi-Lightning Technical Report

- DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

- KIMI K1.5

- Eagle 2: Building Post-Training Data Strategies from Scratch for Frontier Vision-Language Models

- Qwen2.5-VL Technical Report

- Baichuan-M1: Pushing the Medical Capability of Large Language Models

- Predictable Scale: Part I -- Optimal Hyperparameter Scaling Law in Large Language Model Pretraining

- SkyLadder: Better and Faster Pretraining via Context Window Scheduling

- Qwen2.5-Omni technical report

- Every FLOP Counts: Scaling a 300B Mixture-of-Experts LING LLM without Premium GPUs

- Gemma 3 Technical Report

- Open-Qwen2VL: Compute-Efficient Pre-Training of Fully-Open Multimodal LLMs on Academic Resources

- Pangu Ultra: Pushing the Limits of Dense Large Language Models on Ascend NPUs

- MiMo: Unlocking the Reasoning Potential of Language Model – From Pretraining to Posttraining

- Phi-4 Technical Report

- Llama-Nemotron: Efficient Reasoning Models

- Qwen3 Technical Report

- MiMo-VL Technical Report

- ERNIE Technical Report

- Kwai Keye-VL Technical Report

- Kimi K2 Technical Report

- KAT-V1: Kwai-AutoThink Technical Report

- Step3

- SAIL-VL2 Technical Report

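- What is MCP, and how does it work? One video that explains everything about MCP: configuring MCP on Windows and using it with Cursor and Cline

- What is MCP, and why is it the next-generation AI standard? MCP principles plus hands-on development: using MCP in Cursor, Claude, and Cline to make AI truly automated

- Write an MCP from scratch and publish it: a very simple, step-by-step tutorial

MCP tool aggregators: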

- smithery.ai

- mcp.so

- modelcontextprotocol/servers

- mcp.ad

- pulsemcp.com

- awesome-mcp-servers

- glama.ai

- mcp.composio.dev

- awesome-mcp-list

- mcpo

- FastMCP

- sharemcp.cn

- mcpstore.co

- FastAPI-MCP

- modelscope/mcp

- mcpm.sh

[!NOTE]

Open technology is our eternal pursuit.

- https://github.com/atfortes/Awesome-LLM-Reasoning

- https://github.com/hijkzzz/Awesome-LLM-Strawberry

- https://github.com/wjn1996/Awesome-LLM-Reasoning-Openai-o1-Survey

- https://github.com/srush/awesome-o1

- https://github.com/open-thought/system-2-research

- https://github.com/ninehills/blog/issues/121

- https://github.com/OpenSource-O1/Open-O1

- https://github.com/GAIR-NLP/O1-Journey

- https://github.com/marlaman/show-me

- https://github.com/bklieger-groq/g1

- https://github.com/Jaimboh/Llamaberry-Chain-of-Thought-Reasoning-in-AI

- https://github.com/pseudotensor/open-strawberry

- https://huggingface.co/collections/peakji/steiner-preview-6712c6987110ce932a44e9a6

- https://github.com/SimpleBerry/LLaMA-O1

- https://huggingface.co/collections/Skywork/skywork-o1-open-67453df58e12f6c3934738d0

- https://huggingface.co/collections/Qwen/qwq-674762b79b75eac01735070a

- https://github.com/SkyworkAI/skywork-o1-prm-inference

- https://github.com/RifleZhang/LLaVA-Reasoner-DPO

- https://github.com/ADaM-BJTU

- https://github.com/ADaM-BJTU/OpenRFT

- https://github.com/RUCAIBox/Slow_Thinking_with_LLMs

- https://github.com/richards199999/Thinking-Claude

- https://huggingface.co/AGI-0/Art-v0-3B

- https://huggingface.co/deepseek-ai/DeepSeek-R1

- https://huggingface.co/deepseek-ai/DeepSeek-R1-Zero

- https://github.com/huggingface/open-r1

- https://github.com/hkust-nlp/simpleRL-reason

- https://github.com/Jiayi-Pan/TinyZero

- https://github.com/baichuan-inc/Baichuan-M1-14B

- https://github.com/EvolvingLMMs-Lab/open-r1-multimodal

- https://github.com/open-thoughts/open-thoughts

- Mini-R1: https://www.philschmid.de/mini-deepseek-r1

- LLaMA-Berry: https://arxiv.org/abs/2410.02884

- MCTS-DPO: https://arxiv.org/abs/2405.00451

- OpenR: https://github.com/openreasoner/openr

- https://arxiv.org/abs/2410.02725

- LLaVA-o1: https://arxiv.org/abs/2411.10440

- Marco-o1: https://arxiv.org/abs/2411.14405

- OpenAI o1 report: https://openai.com/index/deliberative-alignment

- DRT-o1: https://github.com/krystalan/DRT-o1

- Virgo: https://arxiv.org/abs/2501.01904

- HuatuoGPT-o1: https://arxiv.org/abs/2412.18925

- o1 roadmap: https://arxiv.org/abs/2412.14135

- Mulberry: https://arxiv.org/abs/2412.18319

- https://arxiv.org/abs/2412.09413

- https://arxiv.org/abs/2501.02497

- Search-o1: https://arxiv.org/abs/2501.05366v1

- https://arxiv.org/abs/2501.18585

- https://github.com/simplescaling/s1

- https://github.com/Deep-Agent/R1-V

- https://github.com/StarRing2022/R1-Nature

- https://github.com/Unakar/Logic-RL

- https://github.com/datawhalechina/unlock-deepseek

- https://github.com/GAIR-NLP/LIMO

- https://github.com/Zeyi-Lin/easy-r1

- https://github.com/jackfsuia/nanoRLHF/tree/main/examples/r1-v0

- https://github.com/FanqingM/R1-Multimodal-Journey

- https://github.com/dhcode-cpp/X-R1

- https://github.com/agentica-project/deepscaler

- https://github.com/ZihanWang314/RAGEN

- https://github.com/sail-sg/oat-zero

- https://github.com/TideDra/lmm-r1

- https://github.com/FlagAI-Open/OpenSeek

- https://github.com/SwanHubX/ascend_r1_turtorial

- https://github.com/om-ai-lab/VLM-R1

- https://github.com/wizardlancet/diagnosis_zero

- https://github.com/lsdefine/simple_GRPO

- https://github.com/brendanhogan/DeepSeekRL-Extended

- https://github.com/Wang-Xiaodong1899/Open-R1-Video

- https://github.com/Open-Reasoner-Zero/Open-Reasoner-Zero

- https://github.com/lucasjinreal/Namo-R1

- https://github.com/hiyouga/EasyR1

- https://github.com/Fancy-MLLM/R1-Onevision

- https://github.com/tulerfeng/Video-R1

- https://huggingface.co/qihoo360/TinyR1-32B-Preview

- https://github.com/facebookresearch/swe-rl

- https://github.com/turningpoint-ai/VisualThinker-R1-Zero

- https://github.com/yuyq96/R1-Vision

- https://github.com/sungatetop/deepseek-r1-vision

- https://huggingface.co/qihoo360/Light-R1-32B

- https://github.com/Liuziyu77/Visual-RFT

- https://github.com/Mohammadjafari80/GSM8K-RLVR

- https://github.com/ModalMinds/MM-EUREKA

- https://github.com/joey00072/nanoGRPO

- https://github.com/PeterGriffinJin/Search-R1

- https://openi.pcl.ac.cn/PCL-Reasoner/GRPO-Training-Suite

- https://github.com/dvlab-research/Seg-Zero

- https://github.com/HumanMLLM/R1-Omni

- https://github.com/OpenManus/OpenManus-RL

- https://arxiv.org/pdf/2503.07536

- https://github.com/Osilly/Vision-R1

- https://github.com/LengSicong/MMR1

- https://github.com/phonism/CP-Zero

- https://github.com/SkyworkAI/Skywork-R1V

- https://arxiv.org/abs/2503.13939v1

- https://github.com/0russwest0/Agent-R1

- https://github.com/MetabrainAGI/Awaker2.5-R1

- https://github.com/LG-AI-EXAONE/EXAONE-Deep

- https://github.com/qiufengqijun/open-r1-reprod

- https://github.com/SUFE-AIFLM-Lab/Fin-R1

- https://github.com/sail-sg/understand-r1-zero

- https://github.com/baibizhe/Efficient-R1-VLLM

- https://arxiv.org/abs/2502.19655

- https://arxiv.org/abs/2503.21620v1

- https://arxiv.org/abs/2503.16081

- https://github.com/ShadeCloak/ADORA

- https://github.com/appletea233/Temporal-R1

- https://github.com/inclusionAI/AReaL

- https://github.com/lzhxmu/CPPO

- https://arxiv.org/abs/2503.23829

- https://github.com/TencentARC/SEED-Bench-R1

- https://github.com/McGill-NLP/nano-aha-moment

- https://github.com/VLM-RL/Ocean-R1

- https://github.com/OpenGVLab/VideoChat-R1

- https://github.com/ByteDance-Seed/Seed-Thinking-v1.5

- https://github.com/SkyworkAI/Skywork-OR1

- https://github.com/MoonshotAI/Kimi-VL

- https://arxiv.org/abs/2504.08600

- https://github.com/ZhangXJ199/TinyLLaVA-Video-R1

- https://arxiv.org/abs/2504.11914

- https://github.com/policy-gradient/GRPO-Zero

- https://github.com/linkangheng/PR1

- https://github.com/jiangxinke/Agentic-RAG-R1

- https://github.com/shangshang-wang/Tina

- https://github.com/aliyun/qwen-dianjin

- https://github.com/RAGEN-AI/RAGEN

- https://github.com/XiaomiMiMo/MiMo

- https://github.com/yuanzhoulvpi2017/nano_rl

- https://huggingface.co/a-m-team/AM-Thinking-v1

- https://huggingface.co/Intelligent-Internet/II-Medical-8B

- https://github.com/CSfufu/Revisual-R1

- Mini-o3: https://arxiv.org/abs/2509.07969

- Simple-o3: https://arxiv.org/abs/2508.12109

- Thyme: https://arxiv.org/abs/2508.11630

- https://github.com/jiahe7ay/MINI_LLM

- https://github.com/jingyaogong/minimind

- https://github.com/DLLXW/baby-llama2-chinese

- https://github.com/charent/ChatLM-mini-Chinese

- https://github.com/wdndev/tiny-llm-zh

- https://github.com/Tongjilibo/build_MiniLLM_from_scratch

- https://github.com/jzhang38/TinyLlama

- https://github.com/AI-Study-Han/Zero-Chatgpt

- https://github.com/loubnabnl/nanotron-smol-cluster (trains cosmo-1b using Cosmopedia)

- https://github.com/charent/Phi2-mini-Chinese

- https://github.com/allenai/OLMo

- https://github.com/keeeeenw/MicroLlama

- https://github.com/Chinese-Tiny-LLM/Chinese-Tiny-LLM

- https://github.com/leeguandong/MiniLLaMA3

- https://github.com/Pints-AI/1.5-Pints

- https://github.com/zhanshijinwat/Steel-LLM

- https://github.com/RUC-GSAI/YuLan-Mini

- https://github.com/Om-Alve/smolGPT

- https://github.com/skyzh/tiny-llm

- https://github.com/qibin0506/Cortex

- https://github.com/huggingface/picotron

- https://github.com/jingyaogong/minimind-v

- https://github.com/yuanzhoulvpi2017/zero_nlp/tree/main/train_llava

- https://github.com/AI-Study-Han/Zero-Qwen-VL

- https://github.com/Coobiw/MPP-LLaVA

- https://github.com/qnguyen3/nanoLLaVA

- https://github.com/TinyLLaVA/TinyLLaVA_Factory

- https://github.com/ZhangXJ199/TinyLLaVA-Video

- https://github.com/Emericen/tiny-qwen

- https://github.com/merveenoyan/smol-vision

- https://github.com/huggingface/nanoVLM

- https://github.com/GeeeekExplorer/nano-vllm

- https://github.com/ritabratamaiti/AnyModal

- https://github.com/yujunhuics/Reyes

- What We Learned from a Year of Building with LLMs (Part I)

- What We Learned from a Year of Building with LLMs (Part II)

- What We Learned from a Year of Building with LLMs (Part III): Strategy

- An Easy Introduction to Large Language Models (LLMs)

- LLMs for Text Classification: A Guide to Supervised Learning

- Unsupervised Text Classification: Categorize Natural Language With LLMs

- Text Classification With LLMs: A Roundup of the Best Methods

- LLM Pricing

- Uncensor any LLM with abliteration

- Tiny LLM Universe

- Zero-Chatgpt

- Zero-Qwen-VL

- finetune-Qwen2-VL

- MPP-LLaVA

- build_MiniLLM_from_scratch

- Tiny LLM zh

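- MiniMind: Train a small 26M-parameter GPT entirely from scratch in 3 hours; a GPU with as little as 2 GB of memory is enough for inference and training.

- LLM-Travel: Dedicated to understanding, discussing, and implementing the techniques, principles, and applications related to large models.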

- Knowledge distillation: Teaching LLM's with synthetic data

- Part 1: Methods for adapting large language models

- Part 2: To fine-tune or not to fine-tune

- Part 3: How to fine-tune: Focus on effective datasets

- Reader-LM: Small Language Models for Cleaning and Converting HTML to Markdown

- What We Learned from a Year of Building LLM Applications

- LLM Training: Pretraining

- pytorch-llama: LLaMA 2 implemented from scratch in PyTorch.

- Preference Optimization for Vision Language Models with TRL [supported models]

- Fine-tuning visual language models using SFTTrainer [docs]

- A Visual Guide to Mixture of Experts (MoE)

- Role-Playing in Large Language Models like ChatGPT

- Distributed Training Guide: Best practices & guides on how to write distributed pytorch training code.

- Chat Templates

- Top 20+ RAG Interview Questions

- LLM-Dojo: An open-source place to learn about large models, building model training frameworks with concise, readable code.

- o1 isn’t a chat model (and that’s the point)

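- Beam Search: A Quick Explanation with a Code Walkthrough

- Diverse text generation with the transformers generate() method: parameter meanings and the principles behind the algorithms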

- The Ultra-Scale Playbook: Training LLMs on GPU Clusters

Contributors:

If you find this project helpful, please cite it:

@misc{wang2024llm,
  title={awesome-LLM-resourses},
  author={Rongsheng Wang},
  year={2024},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/WangRongsheng/awesome-LLM-resourses}},
}

Alternative AI tools for awesome-LLM-resources

Similar Open Source Tools

LLM-Workshop

This repository contains a collection of resources for learning about and using Large Language Models (LLMs). The resources include tutorials, code examples, and links to additional resources. LLMs are a type of artificial intelligence that can understand and generate human-like text. They have a wide range of potential applications, including natural language processing, machine translation, and chatbot development.

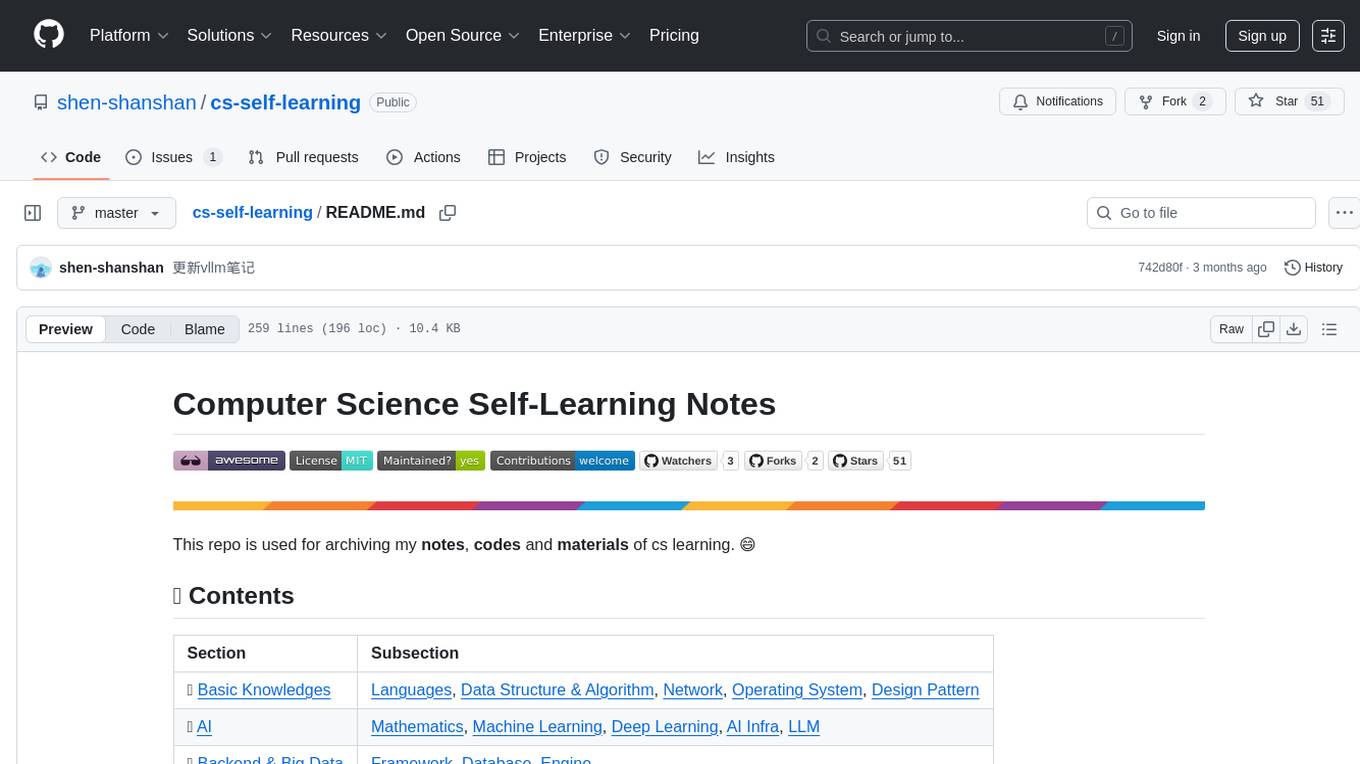

cs-self-learning

This repository serves as an archive for computer science learning notes, codes, and materials. It covers a wide range of topics including basic knowledge, AI, backend & big data, tools, and other related areas. The content is organized into sections and subsections for easy navigation and reference. Users can find learning resources, programming practices, and tutorials on various subjects such as languages, data structures & algorithms, AI, frameworks, databases, development tools, and more. The repository aims to support self-learning and skill development in the field of computer science.

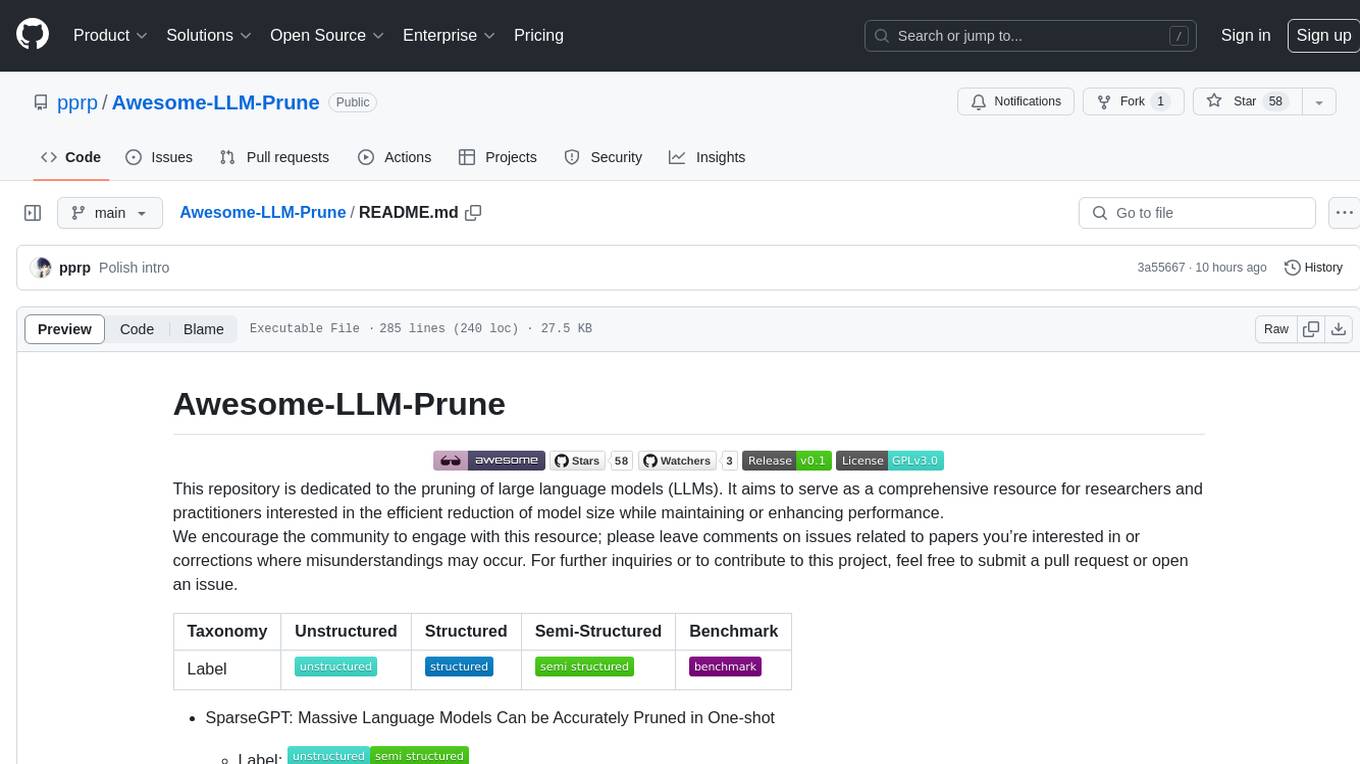

Awesome-LLM-Prune

This repository is dedicated to the pruning of large language models (LLMs). It aims to serve as a comprehensive resource for researchers and practitioners interested in the efficient reduction of model size while maintaining or enhancing performance. The repository contains various papers, summaries, and links related to different pruning approaches for LLMs, along with author information and publication details. It covers a wide range of topics such as structured pruning, unstructured pruning, semi-structured pruning, and benchmarking methods. Researchers and practitioners can explore different pruning techniques, understand their implications, and access relevant resources for further study and implementation.

intro-llm.github.io

Large Language Models (LLMs) are language models built by deep neural networks containing hundreds of billions of weights, trained on a large amount of unlabeled text using self-supervised learning methods. Since 2018, companies and research institutions including Google, OpenAI, Meta, Baidu, and Huawei have released various models such as BERT and GPT, which have performed well in almost all natural language processing tasks. Starting in 2021, large models have shown explosive growth, especially after the release of ChatGPT in November 2022, attracting worldwide attention. Users can interact with systems using natural language to achieve various tasks from understanding to generation, including question answering, classification, summarization, translation, and chat. Large language models demonstrate powerful knowledge of the world and understanding of language. This repository introduces the basic theory of large language models, including language models, distributed model training, and reinforcement learning, and uses the DeepSpeed-Chat framework as an example to introduce the implementation of large language models and ChatGPT-like systems.

OpenAI

OpenAI is a community-maintained Swift implementation of the OpenAI public API. The organization OpenAI is a non-profit artificial intelligence research organization founded in San Francisco, California in 2015; its mission is to ensure safe and responsible use of AI for civic good, economic growth, and other public benefits. The repository provides functionalities for text completions, chats, image generation, audio processing, edits, embeddings, models, moderations, utilities, and Combine extensions.

enterprise-h2ogpte

Enterprise h2oGPTe - GenAI RAG is a repository containing code examples, notebooks, and benchmarks for the enterprise version of h2oGPTe, a powerful AI tool for generating text based on the RAG (Retrieval-Augmented Generation) architecture. The repository provides resources for leveraging h2oGPTe in enterprise settings, including implementation guides, performance evaluations, and best practices. Users can explore various applications of h2oGPTe in natural language processing tasks, such as text generation, content creation, and conversational AI.

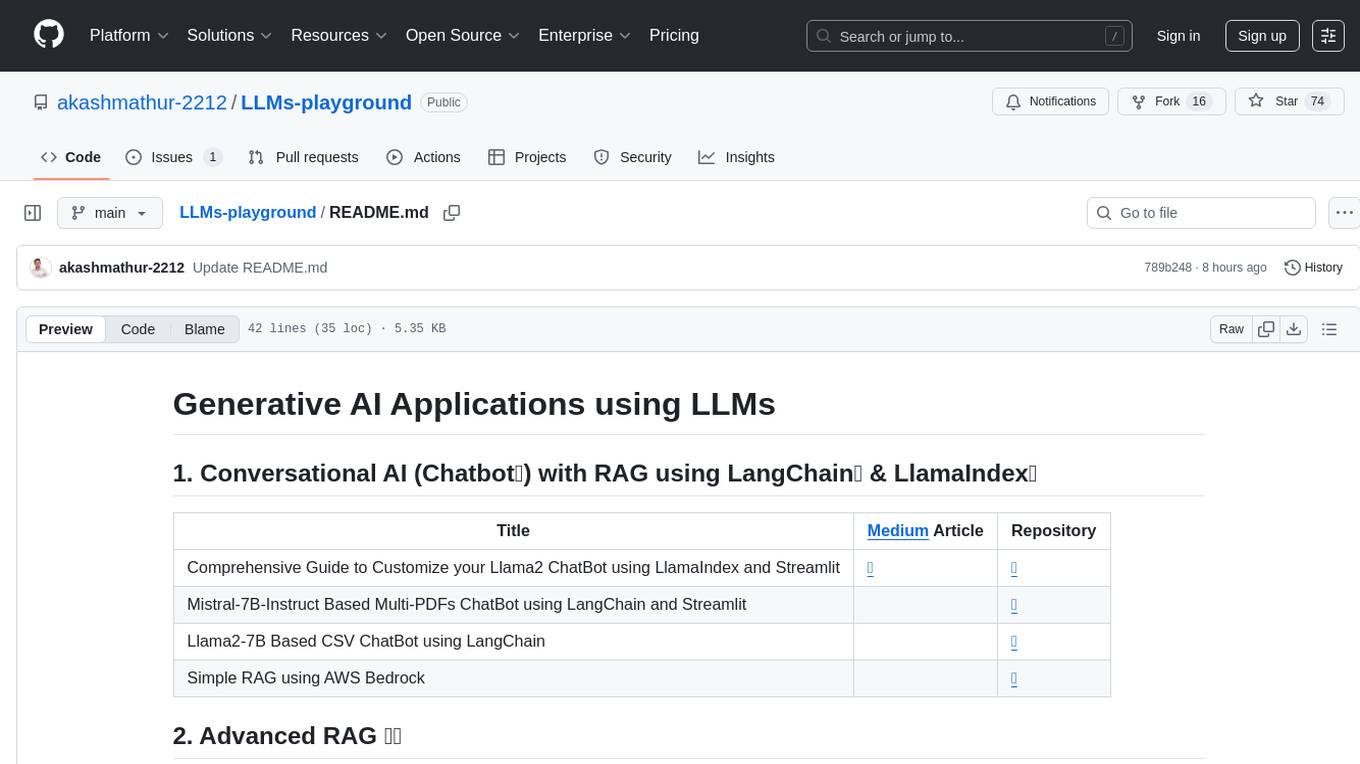

LLMs-playground

LLMs-playground is a repository containing code examples and tutorials for learning and experimenting with Large Language Models (LLMs). It provides a hands-on approach to understanding how LLMs work and how to fine-tune them for specific tasks. The repository covers various LLM architectures, pre-training techniques, and fine-tuning strategies, making it a valuable resource for researchers, students, and practitioners interested in natural language processing and machine learning. By exploring the code and following the tutorials, users can gain practical insights into working with LLMs and apply their knowledge to real-world projects.

llm_rl

llm_rl is a repository that combines LLM (large language model) and RL (reinforcement learning) techniques. It likely focuses on using language models in reinforcement learning tasks, such as natural language understanding and generation. The repository may contain implementations of algorithms that leverage both LLMs and RL to improve performance in various tasks. Developers interested in exploring the intersection of language models and reinforcement learning may find this repository useful for research and experimentation.

llm_related

llm_related is a repository that documents issues encountered and solutions found during the application of large models. It serves as a knowledge base for troubleshooting and problem-solving in the context of working with complex models in various applications.

AI-and-competition

This repository provides baselines for various competitions, a few top solutions for some competitions, and independent deep learning projects. Baselines serve as entry guides for competitions, suitable for beginners to make their first submission. Top solutions are more complex and refined versions of baselines, with limited quantity but enhanced quality. The repository is maintained by a single author, yunsuxiaozi, offering code improvements and annotations for better understanding. Users can support the repository by learning from it and providing feedback.

llama.rn

React Native binding of llama.cpp, which runs inference of LLaMA models in pure C/C++. This tool allows you to use the LLaMA model in your React Native applications for various tasks such as text completion, tokenization, detokenization, and embedding. It provides a convenient interface to interact with the LLaMA model and supports features like grammar sampling and mocking for testing purposes.

RustGPT

A complete Large Language Model implementation in pure Rust with no external ML frameworks. It demonstrates building a transformer-based language model from scratch, including pre-training, instruction tuning, interactive chat mode, full backpropagation, and modular architecture. The model learns basic world knowledge and conversational patterns. It features custom tokenization, greedy decoding, gradient clipping, a modular layer system, and comprehensive test coverage, making it well suited to understanding modern LLMs and key ML concepts. Dependencies include ndarray for matrix operations and rand for random number generation. Contributions are welcome for model persistence, performance optimizations, better sampling, evaluation metrics, advanced architectures, training improvements, data handling, and model analysis. The project follows standard Rust conventions and encourages contributions at beginner, intermediate, and advanced levels.

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

notebooks

The 'notebooks' repository contains a collection of fine-tuning notebooks for various models, including Gemma3N, Qwen3, Llama 3.2, Phi-4, Mistral v0.3, and more. These notebooks are designed for tasks such as data preparation, model training, evaluation, and model saving. Users can access guided notebooks for different types of models like Conversational, Vision, TTS, GRPO, and more. The repository also includes specific use-case notebooks for tasks like text classification, tool calling, multiple datasets, KTO, inference chat UI, conversational tasks, chatML, and text completion.

For similar tasks

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

adata

AData is a free and open-source A-share database that focuses on transaction-related data. It provides comprehensive data on stocks, including basic information, market data, and sentiment analysis. AData is designed to be easy to use and integrate with other applications, making it a valuable tool for quantitative trading and AI training.

PIXIU

PIXIU is a project designed to support the development, fine-tuning, and evaluation of Large Language Models (LLMs) in the financial domain. It includes components like FinBen, a Financial Language Understanding and Prediction Evaluation Benchmark, FIT, a Financial Instruction Dataset, and FinMA, a Financial Large Language Model. The project provides open resources, multi-task and multi-modal financial data, and diverse financial tasks for training and evaluation. It aims to encourage open research and transparency in the financial NLP field.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes an HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

spark-nlp

Spark NLP is a state-of-the-art Natural Language Processing library built on top of Apache Spark. It provides simple, performant, and accurate NLP annotations for machine learning pipelines that scale easily in a distributed environment. Spark NLP comes with 36000+ pretrained pipelines and models in 200+ languages. It offers tasks such as Tokenization, Word Segmentation, Part-of-Speech Tagging, Named Entity Recognition, Dependency Parsing, Spell Checking, Text Classification, Sentiment Analysis, Token Classification, Machine Translation, Summarization, Question Answering, Table Question Answering, Text Generation, Image Classification, Image to Text (captioning), Automatic Speech Recognition, Zero-Shot Learning, and many more NLP tasks. Spark NLP is the only open-source NLP library in production that offers state-of-the-art transformers such as BERT, CamemBERT, ALBERT, ELECTRA, XLNet, DistilBERT, RoBERTa, DeBERTa, XLM-RoBERTa, Longformer, ELMO, Universal Sentence Encoder, Llama-2, M2M100, BART, Instructor, E5, Google T5, MarianMT, OpenAI GPT2, Vision Transformers (ViT), OpenAI Whisper, and many more, not only to Python and R but also to the JVM ecosystem (Java, Scala, and Kotlin), at scale by extending Apache Spark natively.

scikit-llm

Scikit-LLM is a tool that seamlessly integrates powerful language models like ChatGPT into scikit-learn for enhanced text analysis tasks. It allows users to leverage large language models for various text analysis applications within the familiar scikit-learn framework. The tool simplifies the process of incorporating advanced language processing capabilities into machine learning pipelines, enabling users to benefit from the latest advancements in natural language processing.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open-access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI red teaming tasks so that operators can focus on more complicated and time-consuming work, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline with future iterations of the model. This gives them empirical data on how well the model is doing today and lets them detect any degradation in performance introduced by future changes.
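
The baseline-versus-future-iteration comparison described above can be sketched in a few lines. This is an illustration of the idea only, not PyRIT code: `harm_rates` and `regressions` are hypothetical helpers, and the per-category lists of booleans (True meaning a probe produced a harmful response) are an assumed data shape.

```python
from typing import Dict, List


def harm_rates(results: Dict[str, List[bool]]) -> Dict[str, float]:
    """Fraction of probes per harm category that produced a harmful response."""
    return {cat: sum(flags) / len(flags) for cat, flags in results.items() if flags}


def regressions(
    baseline: Dict[str, float],
    candidate: Dict[str, float],
    tolerance: float = 0.0,
) -> Dict[str, float]:
    """Categories where the candidate's harm rate exceeds the baseline's."""
    return {
        cat: candidate[cat] - baseline[cat]
        for cat in baseline
        if cat in candidate and candidate[cat] - baseline[cat] > tolerance
    }


# Made-up probe outcomes for two iterations of the same model.
baseline = harm_rates({"malware": [True, False, False, False], "privacy": [False, False]})
candidate = harm_rates({"malware": [False, False, False, False], "privacy": [True, False]})
worse = regressions(baseline, candidate)
```

With these made-up numbers, the malware rate improves while the privacy rate gets worse, so only the privacy category is reported as a regression.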

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.