notebooks

100+ Fine-tuning LLM Notebooks on Google Colab, Kaggle, and more.

Stars: 3687

The 'notebooks' repository contains a collection of fine-tuning notebooks for various models, including Gemma3N, Qwen3, Llama 3.2, Phi-4, Mistral v0.3, and more. These notebooks are designed for tasks such as data preparation, model training, evaluation, and model saving. Users can access guided notebooks for different types of models like Conversational, Vision, TTS, GRPO, and more. The repository also includes specific use-case notebooks for tasks like text classification, tool calling, multiple datasets, KTO, inference chat UI, conversational tasks, chatML, and text completion.

README:

Below are our notebooks for Google Colab categorized by model. You can view our Kaggle notebooks here.

Use our guided notebooks to prep data, train, evaluate, and save your model. View our main GitHub repo here.

| Model | Type | Notebook Link |
|---|---|---|
| Sesame-CSM | TTS | |
| Orpheus-TTS | TTS | |
| Spark-TTS | TTS | |
| Oute-TTS | TTS | |
| Oute-TTS | TTS | |
| Llasa TTS (1B) | TTS | |
| Llasa TTS (3B) | TTS | |
| Whisper-Large-V3 | STT | |

| Model | Type | Notebook Link |
|---|---|---|
| Llama 3.2 (11B) | Vision | |
| Qwen2.5 VL (7B) | Vision | |
| Pixtral (12B) | Vision | |

| Model | Notebook Link |
|---|---|
| ModernBERT-large | |

| Model | Type | Notebook Link |
|---|---|---|
| (A100) gpt oss (120B) | | |
| gpt oss (20B) | | |
| GPT OSS BNB (20B) | Inference | |
| GPT OSS MXFP4 (20B) | Inference | |

| Model | Type | Notebook Link |
|---|---|---|
| Liquid LFM2 (1.2B) | Conversational | |
| Liquid LFM2 | Conversational | |
| Falcon H1 (0.5B) | Alpaca | |
| Falcon H1 | Alpaca | |

| Model | Type | Notebook Link |
|---|---|---|
| Orpheus (3B) | TTS | |

| Model | Type | Notebook Link |
|---|---|---|
| Oute TTS (1B) | TTS | |

| Model | Type | Notebook Link |
|---|---|---|
| Phi 4 | Conversational | |
| Phi 3.5 Mini | Conversational | |
| Phi 3 Medium | Conversational | |

| Model | Type | Notebook Link |
|---|---|---|
| Spark TTS (0.5B) | TTS | |

| Model | Type | Notebook Link |
|---|---|---|
| Whisper | | |

| Model | Type | Notebook Link |
|---|---|---|
| Magistral (24B) | Reasoning Conversational | |
| Sesame CSM (1B) | TTS | |
| bert classification | | |
| Unsloth | Studio | |
| CodeForces cot Finetune for Reasoning on CodeForces | Reasoning | |

All our Kaggle notebooks, categorized by model:

| Model | Type | Notebook Link |
|---|---|---|
| (A100) gpt oss (120B) | | |
| GPT OSS BNB (20B) | Inference | |
| gpt oss (20B) | | |
| GPT OSS MXFP4 (20B) | Inference | |

| Model | Type | Notebook Link |
|---|---|---|
| Liquid LFM2 (1.2B) | Conversational | |
| Falcon H1 (0.5B) | Alpaca | |

| Model | Type | Notebook Link |
|---|---|---|
| Orpheus (3B) | TTS | |

| Model | Type | Notebook Link |
|---|---|---|
| Oute TTS (1B) | TTS | |

| Model | Type | Notebook Link |
|---|---|---|
| Phi 4 | Conversational | |
| Phi 3.5 Mini | Conversational | |
| Phi 3 Medium | Conversational | |

| Model | Type | Notebook Link |
|---|---|---|
| Spark TTS (0.5B) | TTS | |

| Model | Type | Notebook Link |
|---|---|---|
| Whisper | | |

| Model | Type | Notebook Link |
|---|---|---|
| Magistral (24B) | Reasoning Conversational | |
| Sesame CSM (1B) | TTS | |
| CodeForces cot Finetune for Reasoning on CodeForces | Reasoning | |
| Unsloth | Studio | |
| bert classification | | |

If you'd like to contribute to our notebooks, here's a guide to get you started:

- Find the Template: We've provided a template notebook called `Template_Notebook.ipynb` in the root directory of this project. This template contains the basic structure and formatting guidelines for all notebooks in this collection.
- Create Your Notebook:
  - Make a copy of `Template_Notebook.ipynb`.
  - Rename the copied file to follow this naming convention:
    - LLM Notebooks: `<Model Name>-<Type>.ipynb` (e.g., `Mistral_v0.3_(7B)-Alpaca.ipynb`)
    - Vision Notebooks: `<Model Name>-Vision.ipynb` (e.g., `Llava_v1.6_(7B)-Vision.ipynb`)
    - Example of `<Type>`: `Alpaca`, `Conversational`, `CPT`, `DPO`, `ORPO`, `Text_Completion`, `CSV`, `Inference`, `Unsloth_Studio`
- Place in `original_template`: Once your notebook is ready, move it to the `original_template` directory.
- Update Notebooks: Run the following command in your terminal: `python update_all_notebooks.py`. This script will automatically:
  - Copy your notebook from `original_template` to the `notebooks` directory.
  - Update the notebook's internal sections (like Installation, News) to ensure consistency.
  - Add your notebook to the appropriate list in this `README.md` file.
- Create a Pull Request: After that, just create a pull request (PR) to merge your changes, making it available for everyone!

We appreciate your contributions and look forward to reviewing your notebooks!
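Before running the update script, it can help to check a file name against the naming convention and stage the notebook in `original_template`. The sketch below is illustrative only and is not part of the repository: `KNOWN_TYPES`, `parse_notebook_name`, and `stage_notebook` are hypothetical names, the set of accepted types is assumed from the examples in the guide (plus `Vision`), and the helper copies the file rather than moving it so the original stays in place.

```python
import re
import shutil
from pathlib import Path

# Assumption: type suffixes taken from the examples in the guide, plus "Vision".
# The repository may accept other types.
KNOWN_TYPES = {
    "Alpaca", "Conversational", "CPT", "DPO", "ORPO",
    "Text_Completion", "CSV", "Inference", "Unsloth_Studio", "Vision",
}

# <Model Name>-<Type>.ipynb, where <Type> is the part after the last hyphen.
NAME_PATTERN = re.compile(r"^(?P<model>.+)-(?P<type>[^-]+)\.ipynb$")


def parse_notebook_name(filename):
    """Return (model, type) if the name follows the convention, else None."""
    match = NAME_PATTERN.match(filename)
    if match is None:
        return None
    model, nb_type = match.group("model"), match.group("type")
    if nb_type not in KNOWN_TYPES:
        return None
    return model, nb_type


def stage_notebook(notebook, repo_root):
    """Copy a correctly named notebook into <repo_root>/original_template."""
    notebook, repo_root = Path(notebook), Path(repo_root)
    if parse_notebook_name(notebook.name) is None:
        raise ValueError(f"{notebook.name} does not match <Model Name>-<Type>.ipynb")
    target_dir = repo_root / "original_template"
    target_dir.mkdir(exist_ok=True)
    # shutil.copy2 keeps file metadata and returns the destination path.
    return Path(shutil.copy2(notebook, target_dir / notebook.name))
```

For example, `parse_notebook_name("Mistral_v0.3_(7B)-Alpaca.ipynb")` yields the model and type parts, while the unrenamed `Template_Notebook.ipynb` is rejected because it has no `-<Type>` suffix.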


Alternative AI tools for notebooks

Similar Open Source Tools

stable-diffusion.cpp

The stable-diffusion.cpp repository provides an implementation for inferring stable diffusion in pure C/C++. It offers features such as support for different versions of stable diffusion, lightweight and dependency-free implementation, various quantization support, memory-efficient CPU inference, GPU acceleration, and more. Users can download the built executable program or build it manually. The repository also includes instructions for downloading weights, building from scratch, using different acceleration methods, running the tool, converting weights, and utilizing various features like Flash Attention, ESRGAN upscaling, PhotoMaker support, and more. Additionally, it mentions future TODOs and provides information on memory requirements, bindings, UIs, contributors, and references.

RustGPT

A complete Large Language Model implementation in pure Rust with no external ML frameworks. Demonstrates building a transformer-based language model from scratch, including pre-training, instruction tuning, interactive chat mode, full backpropagation, and modular architecture. Model learns basic world knowledge and conversational patterns. Features custom tokenization, greedy decoding, gradient clipping, modular layer system, and comprehensive test coverage. Ideal for understanding modern LLMs and key ML concepts. Dependencies include ndarray for matrix operations and rand for random number generation. Contributions welcome for model persistence, performance optimizations, better sampling, evaluation metrics, advanced architectures, training improvements, data handling, and model analysis. Follows standard Rust conventions and encourages contributions at beginner, intermediate, and advanced levels.

transformers

Transformers is a library of state-of-the-art pretrained models that acts as the model-definition framework for machine learning models in text, computer vision, audio, video, and multimodal tasks. It centralizes model definition for compatibility across various training frameworks, inference engines, and modeling libraries. The library simplifies the usage of new models by providing simple, customizable, and efficient model definitions. With over 1M Transformers model checkpoints available, users can easily find and use models for their tasks.

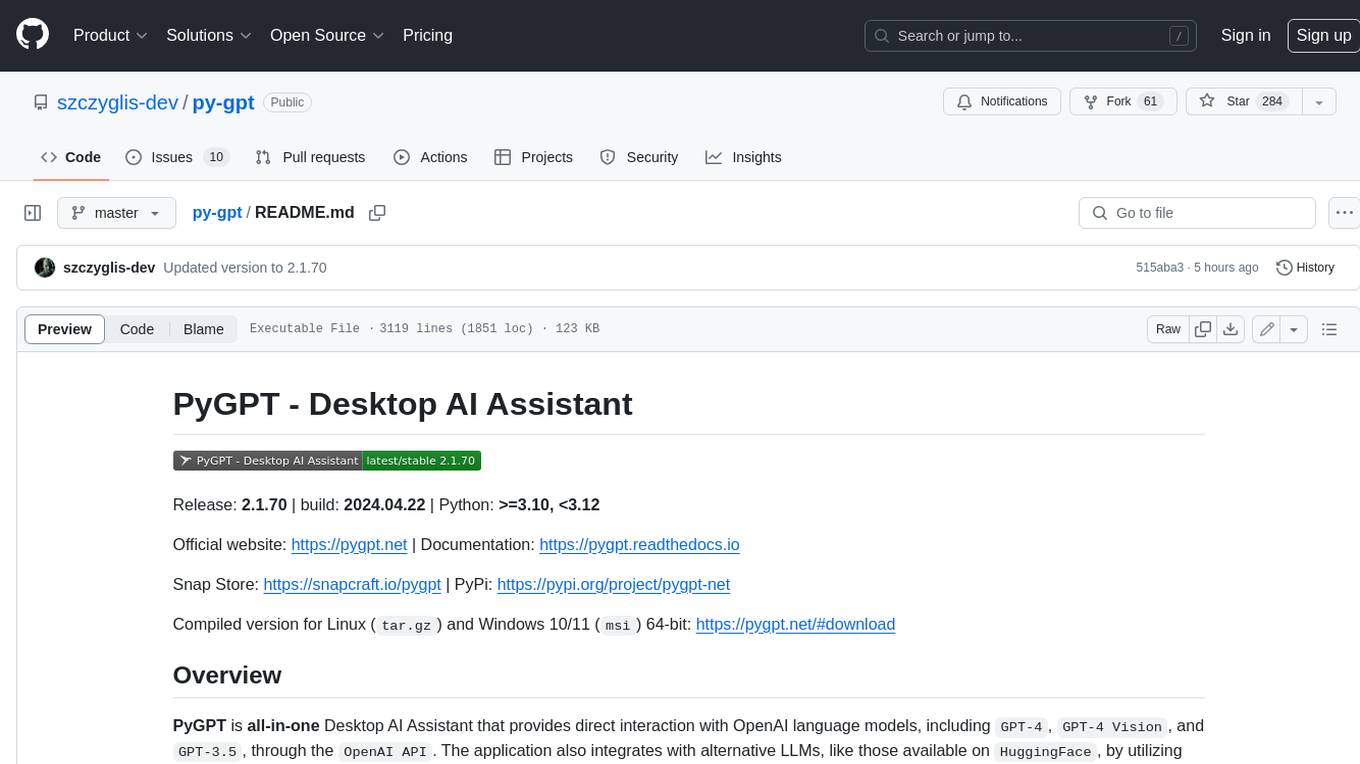

py-gpt

Py-GPT is a Python library that provides an easy-to-use interface for OpenAI's GPT-3 API. It allows users to interact with the powerful GPT-3 model for various natural language processing tasks. With Py-GPT, developers can quickly integrate GPT-3 capabilities into their applications, enabling them to generate text, answer questions, and more with just a few lines of code.

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

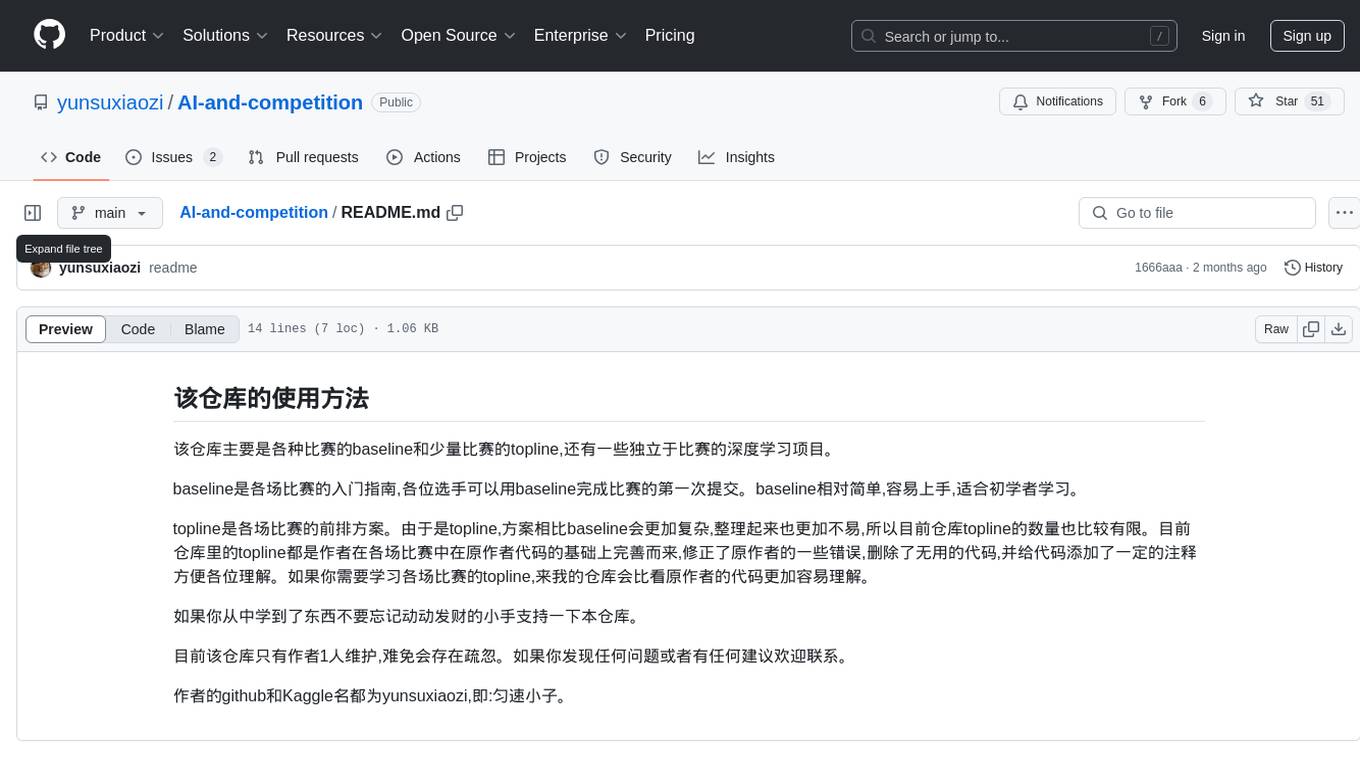

AI-and-competition

This repository provides baselines for various competitions, a few top solutions for some competitions, and independent deep learning projects. Baselines serve as entry guides for competitions, suitable for beginners to make their first submission. Top solutions are more complex and refined versions of baselines, with limited quantity but enhanced quality. The repository is maintained by a single author, yunsuxiaozi, offering code improvements and annotations for better understanding. Users can support the repository by learning from it and providing feedback.

awesome-LLM-resources

This repository is a curated list of resources for learning and working with Large Language Models (LLMs). It includes a collection of articles, tutorials, tools, datasets, and research papers related to LLMs such as GPT-3, BERT, and Transformer models. Whether you are a researcher, developer, or enthusiast interested in natural language processing and artificial intelligence, this repository provides valuable resources to help you understand, implement, and experiment with LLMs.

enterprise-h2ogpte

Enterprise h2oGPTe - GenAI RAG is a repository containing code examples, notebooks, and benchmarks for the enterprise version of h2oGPTe, a powerful AI tool for generating text based on the RAG (Retrieval-Augmented Generation) architecture. The repository provides resources for leveraging h2oGPTe in enterprise settings, including implementation guides, performance evaluations, and best practices. Users can explore various applications of h2oGPTe in natural language processing tasks, such as text generation, content creation, and conversational AI.

llm-on-openshift

This repository provides resources, demos, and recipes for working with Large Language Models (LLMs) on OpenShift using OpenShift AI or Open Data Hub. It includes instructions for deploying inference servers for LLMs, such as vLLM, Hugging Face TGI, Caikit-TGIS-Serving, and Ollama. Additionally, it offers guidance on deploying serving runtimes, such as vLLM Serving Runtime and Hugging Face Text Generation Inference, in the Single-Model Serving stack of Open Data Hub or OpenShift AI. The repository also covers vector databases that can be used as a Vector Store for Retrieval Augmented Generation (RAG) applications, including Milvus, PostgreSQL+pgvector, and Redis. Furthermore, it provides examples of inference and application usage, such as Caikit, Langchain, Langflow, and UI examples.

Applio

Applio is a VITS-based Voice Conversion tool focused on simplicity, quality, and performance. It features a user-friendly interface, cross-platform compatibility, and a range of customization options. Applio is suitable for various tasks such as voice cloning, voice conversion, and audio editing. Its key features include a modular codebase, hop length implementation, translations in over 30 languages, optimized requirements, streamlined installation, hybrid F0 estimation, easy-to-use UI, optimized code and dependencies, plugin system, overtraining detector, model search, enhancements in pretrained models, voice blender, accessibility improvements, new F0 extraction methods, output format selection, hashing system, model download system, TTS enhancements, split audio, Discord presence, Flask integration, and support tab.

OpenAI

OpenAI is a community-maintained Swift implementation of the OpenAI public API. OpenAI itself is a non-profit artificial intelligence research organization founded in San Francisco, California in 2015, whose mission is to ensure safe and responsible use of AI for civic good, economic growth, and other public benefits. The repository provides functionalities for text completions, chats, image generation, audio processing, edits, embeddings, models, moderations, utilities, and Combine extensions.

alignment-handbook

The Alignment Handbook provides robust training recipes for continuing pretraining and aligning language models with human and AI preferences. It includes techniques such as continued pretraining, supervised fine-tuning, reward modeling, rejection sampling, and direct preference optimization (DPO). The handbook aims to fill the gap in public resources on training these models, collecting data, and measuring metrics for optimal downstream performance.

tools

Strands Agents Tools is a community-driven project that provides a powerful set of tools for your agents to use. It bridges the gap between large language models and practical applications by offering ready-to-use tools for file operations, system execution, API interactions, mathematical operations, and more. The tools cover a wide range of functionalities including file operations, shell integration, memory storage, web infrastructure, HTTP client, Slack client, Python execution, mathematical tools, AWS integration, image and video processing, audio output, environment management, task scheduling, advanced reasoning, swarm intelligence, dynamic MCP client, parallel tool execution, browser automation, diagram creation, RSS feed management, and computer automation.

DaoCloud-docs

DaoCloud Enterprise 5.0 Documentation provides detailed information on using DaoCloud, a Certified Kubernetes Service Provider. The documentation covers current and legacy versions, workflow control using GitOps, and instructions for opening a PR and previewing changes locally. It also includes naming conventions, writing tips, references, and acknowledgments to contributors. Users can find guidelines on writing, contributing, and translating pages, along with using tools like MkDocs, Docker, and Poetry for managing the documentation.

meeting-minutes

An open-source AI assistant for taking meeting notes that captures live meeting audio, transcribes it in real-time, and generates summaries while ensuring user privacy. Perfect for teams to focus on discussions while automatically capturing and organizing meeting content without external servers or complex infrastructure. Features include modern UI, real-time audio capture, speaker diarization, local processing for privacy, and more. The tool also offers a Rust-based implementation for better performance and native integration, with features like live transcription, speaker diarization, and a rich text editor for notes. Future plans include database connection for saving meeting minutes, improving summarization quality, and adding download options for meeting transcriptions and summaries. The backend supports multiple LLM providers through a unified interface, with configurations for Anthropic, Groq, and Ollama models. System architecture includes core components like audio capture service, transcription engine, LLM orchestrator, data services, and API layer. Prerequisites for setup include Node.js, Python, FFmpeg, and Rust. Development guidelines emphasize project structure, testing, documentation, type hints, and ESLint configuration. Contributions are welcome under the MIT License.

For similar tasks

Co-LLM-Agents

This repository contains code for building cooperative embodied agents modularly with large language models. The agents are trained to perform tasks in two different environments: ThreeDWorld Multi-Agent Transport (TDW-MAT) and Communicative Watch-And-Help (C-WAH). TDW-MAT is a multi-agent environment where agents must transport objects to a goal position using containers. C-WAH is an extension of the Watch-And-Help challenge, which enables agents to send messages to each other. The code in this repository can be used to train agents to perform tasks in both of these environments.

GPT4Point

GPT4Point is a unified framework for point-language understanding and generation. It aligns 3D point clouds with language, providing a comprehensive solution for tasks such as 3D captioning and controlled 3D generation. The project includes an automated point-language dataset annotation engine, a novel object-level point cloud benchmark, and a 3D multi-modality model. Users can train and evaluate models using the provided code and datasets, with a focus on improving models' understanding capabilities and facilitating the generation of 3D objects.

asreview

The ASReview project implements active learning for systematic reviews, utilizing AI-aided pipelines to assist in finding relevant texts for search tasks. It accelerates the screening of textual data with minimal human input, saving time and increasing output quality. The software offers three modes: Oracle for interactive screening, Exploration for teaching purposes, and Simulation for evaluating active learning models. ASReview LAB is designed to support decision-making in any discipline or industry by improving efficiency and transparency in screening large amounts of textual data.

Groma

Groma is a grounded multimodal assistant that excels in region understanding and visual grounding. It can process user-defined region inputs and generate contextually grounded long-form responses. The tool presents a unique paradigm for multimodal large language models, focusing on visual tokenization for localization. Groma achieves state-of-the-art performance in referring expression comprehension benchmarks. The tool provides pretrained model weights and instructions for data preparation, training, inference, and evaluation. Users can customize training by starting from intermediate checkpoints. Groma is designed to handle tasks related to detection pretraining, alignment pretraining, instruction finetuning, instruction following, and more.

amber-train

Amber is the first model in the LLM360 family, an initiative for comprehensive and fully open-sourced LLMs. It is a 7B English language model with the same architecture as LLaMA-7B, licensed under Apache 2.0. The resources available include training code, data preparation, metrics, and fully processed Amber pretraining data. The model has been trained on various datasets like Arxiv, Book, C4, Refined-Web, StarCoder, StackExchange, and Wikipedia. The hyperparameters include a total of 6.7B parameters, hidden size of 4096, intermediate size of 11008, 32 attention heads, 32 hidden layers, RMSNorm ε of 1e-6, max sequence length of 2048, and a vocabulary size of 32000.
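The 6.7B total can be cross-checked from the listed hyperparameters. The sketch below assumes the standard LLaMA layout, which the summary above does not spell out: bias-free attention and MLP projections, a gated MLP with three weight matrices, two RMSNorm weight vectors per layer plus a final one, and untied input and output embeddings. Variable names are illustrative.

```python
# Hyperparameters as listed in the summary above.
hidden = 4096
intermediate = 11008
layers = 32
vocab = 32000

# Assumed LLaMA layout (not stated in the summary): no bias terms anywhere.
attention = 4 * hidden * hidden       # query, key, value, and output projections
mlp = 3 * hidden * intermediate       # gate, up, and down projections
norms = 2 * hidden                    # two RMSNorm weight vectors per layer
per_layer = attention + mlp + norms

embeddings = 2 * vocab * hidden       # untied input embedding and output head
final_norm = hidden

total = layers * per_layer + embeddings + final_norm
print(f"{total:,} parameters, about {total / 1e9:.1f}B")
```

Under these assumptions the count rounds to the 6.7B quoted above, with the 32 transformer layers accounting for nearly all of it.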

kan-gpt

The KAN-GPT repository is a PyTorch implementation of Generative Pre-trained Transformers (GPTs) using Kolmogorov-Arnold Networks (KANs) for language modeling. It provides a model for generating text based on prompts, with a focus on improving performance compared to traditional MLP-GPT models. The repository includes scripts for training the model, downloading datasets, and evaluating model performance. Development tasks include integrating with other libraries, testing, and documentation.

LLM-SFT

LLM-SFT is a fine-tuning tool for Chinese large language models that supports models such as ChatGLM, LLaMA, Bloom, and Baichuan-7B, along with LoRA, QLoRA, DeepSpeed, a UI, and TensorboardX. It facilitates tasks like fine-tuning, inference, evaluation, and API integration. The tool provides pre-trained weights for various models and datasets for Chinese language processing. It requires specific versions of libraries like transformers and torch for different functionalities.

zshot

Zshot is a highly customizable framework for performing Zero and Few shot named entity and relationships recognition. It can be used for mentions extraction, wikification, zero and few shot named entity recognition, zero and few shot named relationship recognition, and visualization of zero-shot NER and RE extraction. The framework consists of two main components: the mentions extractor and the linker. There are multiple mentions extractors and linkers available, each serving a specific purpose. Zshot also includes a relations extractor and a knowledge extractor for extracting relations among entities and performing entity classification. The tool requires Python 3.6+ and dependencies like spacy, torch, transformers, evaluate, and datasets for evaluation over datasets like OntoNotes. Optional dependencies include flair and blink for additional functionalities. Zshot provides examples, tutorials, and evaluation methods to assess the performance of the components.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance after future changes.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.