Best AI tools for Decode Text

20 - AI tool Sites

Typly

Typly is an AI writing assistant that uses large language models (LLMs) such as GPT, ChatGPT, and LLaMA to generate reply suggestions that match the context of a conversation. It is designed to help users write unique responses, decode complex text, summarize articles, polish emails, and enrich conversations with sentence bundles drawn from various sources. Typly also offers extras such as a Dating Function for finding matches on dating apps and Typly Translate for translation. The app targets the burden of handling large numbers of messages and notifications by providing AI-generated responses that make communication faster and more effective.

Dataku.ai

Dataku.ai is an AI-powered data extraction and analysis tool. It extracts insights from documents and text, turning unstructured data into structured, actionable information. It offers extraction solutions tailored to specific needs, such as resume extraction to streamline recruitment, review analysis to decode customer sentiment, and customer-data analysis for personalizing experiences. With features such as market trend analysis and financial document analysis, Dataku.ai is meant to help users make strategic decisions based on accurate data. The tool is pitched as precise, efficient, and scalable, and it is available under several pricing plans for different user needs.

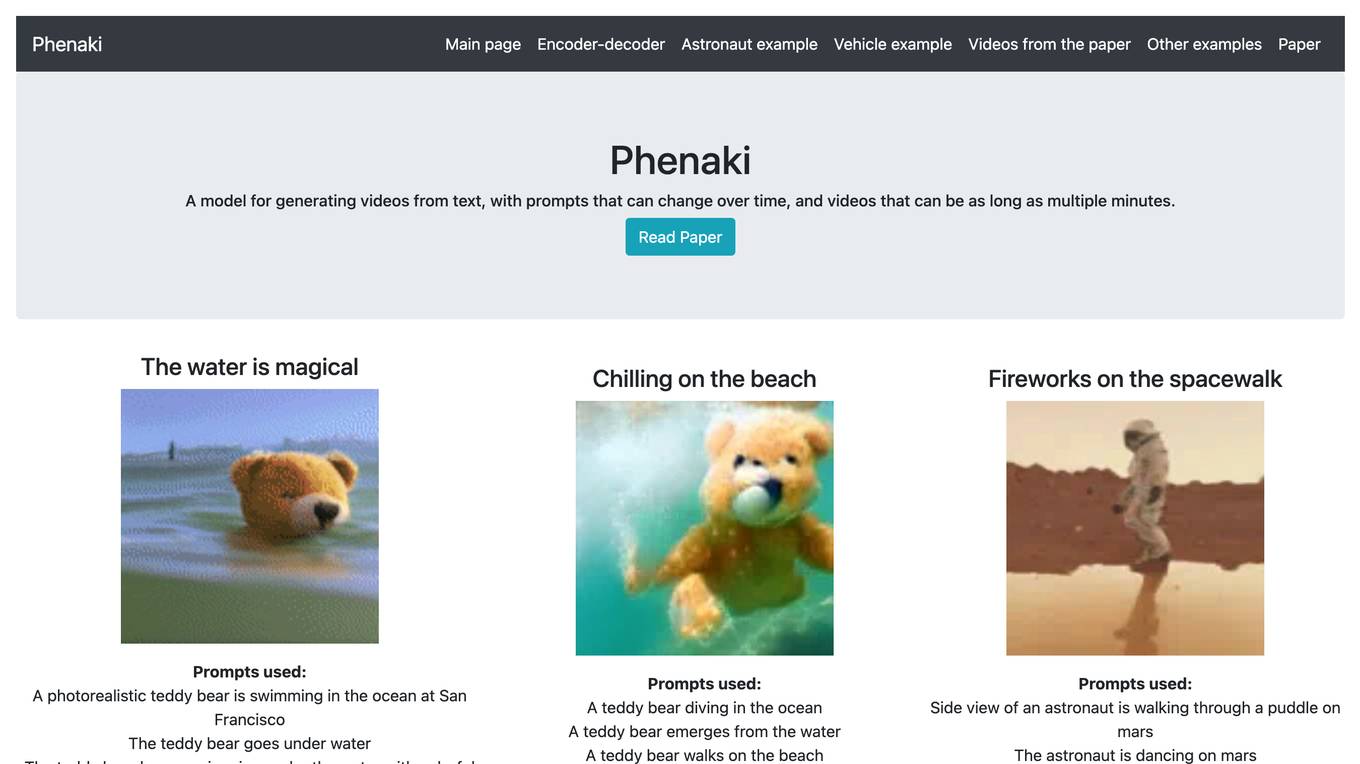

Phenaki

Phenaki is a model that generates realistic videos from a sequence of text prompts. Generating video from text is especially hard because of the computational cost, the limited amount of high-quality text-video data, and the variable length of videos. To address these issues, Phenaki introduces a new causal model for learning video representations, which compresses a video into a small set of discrete tokens. This tokenizer uses causal attention in time, which lets it handle variable-length videos. To generate video tokens from text, Phenaki uses a bidirectional masked transformer conditioned on pre-computed text tokens; the generated video tokens are then de-tokenized to produce the actual video. To address the scarcity of data, Phenaki shows that joint training on a large corpus of image-text pairs plus a smaller number of video-text examples can generalize beyond what the video datasets alone contain. Unlike previous video generation methods, Phenaki can generate arbitrarily long videos in an open domain, conditioned on a sequence of prompts (that is, time-variable text or a story). According to its authors, this is the first paper to study generating videos from time-variable prompts. They also report that the proposed video encoder-decoder outperforms all per-frame baselines then used in the literature, both in spatio-temporal quality and in the number of tokens per video.
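
The causal-in-time idea can be illustrated with an attention mask. The TypeScript sketch below is a simplified illustration, not Phenaki's actual architecture: it assumes each frame is represented by a fixed number of tokens laid out frame by frame (`tokensPerFrame`, `blockCausalMask`, and `isPrefixOf` are hypothetical names), and it lets each token attend only to its own frame and earlier frames. Because nothing looks ahead in time, the mask for a shorter clip is exactly the top-left corner of the mask for a longer clip, which is what allows the same model to process videos of any length.

```typescript
// Block-causal temporal attention mask (illustrative sketch, not Phenaki's code).
// Assumption: tokens are laid out frame by frame, `tokensPerFrame` per frame.
// mask[i][j] === true means query token i may attend to key token j.
function blockCausalMask(numFrames: number, tokensPerFrame: number): boolean[][] {
  const n = numFrames * tokensPerFrame;
  const mask: boolean[][] = [];
  for (let i = 0; i < n; i++) {
    const frameI = Math.floor(i / tokensPerFrame);
    const row: boolean[] = [];
    for (let j = 0; j < n; j++) {
      const frameJ = Math.floor(j / tokensPerFrame);
      // Same frame or an earlier frame: allowed. Later frame: blocked.
      row.push(frameJ <= frameI);
    }
    mask.push(row);
  }
  return mask;
}

// True if `short` equals the top-left corner of `long`.
function isPrefixOf(short: boolean[][], long: boolean[][]): boolean {
  return short.every((row, i) => row.every((v, j) => long[i][j] === v));
}

// A 2-frame mask is a corner of the 3-frame mask: adding frames never
// changes how earlier tokens are encoded.
const m2 = blockCausalMask(2, 3);
const m3 = blockCausalMask(3, 3);
console.log(isPrefixOf(m2, m3)); // true
```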

Decode Investing

Decode Investing is an AI tool designed to automate stock research for users. The platform offers a range of features to help investors analyze stocks, earnings calls, SEC filings, and more. With a user-friendly interface, Decode Investing aims to simplify the investment process and provide valuable insights to users. The tool is constantly updated to ensure accurate and timely information for informed decision-making in the stock market.

Decode Health

Decode Health is an AI and analytics platform that accelerates precision healthcare by supporting healthcare teams in launching machine learning and advanced analytics projects. The platform collaborates with pharmaceutical companies to enhance patient selection, biomarker identification, diagnostics development, data asset creation, and analysis. Decode Health offers modules for biomarker discovery, patient recruitment, next-generation sequencing, data analysis, and clinical decision support. The platform aims to provide fast, accurate, and actionable insights for acute and chronic disease management. Decode Health's custom-built modules are designed to work together to solve complex data problems efficiently.

Entropik

Entropik is a unified insights platform that uses AI to decode consumer behavior and emotions. It replaces traditional market research methods with AI-powered analysis that measures emotion, attention, and intent. Its offering spans consumer insights, user research, AI creative insights, and an insights hub. Entropik says it is trusted by over 150 industry leaders and cites benefits such as faster decision-making, lower costs, campaign uplift, and standardized testing. The platform also offers an AI Moderator tool that runs qualitative conversations automatically. Entropik helps businesses in various industries predict and optimize for attention, clarity, and consumer behavior.

Mendel AI

Mendel AI is an advanced clinical AI tool that deciphers clinical data with clinician-like logic. It offers a fully integrated suite of clinical-specific data processing products, combining OCR, de-identification, and clinical reasoning to interpret medical records. Users can ask questions in plain English and receive accurate answers from health records in seconds. Mendel's technology goes beyond traditional AI by understanding patient-level data and ensuring consistency and explainability of results in healthcare.

MeowTalk

MeowTalk is an AI tool that lets users decode their cat's meows and understand what their cat is trying to communicate. It analyzes the sound patterns of a cat's meows and translates them into human language, offering an interpretation of the cat's needs and feelings. The app's stated goal is to bridge the communication gap between owners and their cats, leading to a deeper understanding and a stronger bond.

Runix

Runix is an AI-driven tool designed to help businesses decode successful advertising strategies. Its features include discovering viral ads, AI content analysis, and generating result-driven blog content. With Runix, users can replicate successful ad campaigns, identify the patterns behind their success, and draw inspiration from millions of pieces of AI-decoded content. The platform provides real-time insights into viral ads across platforms such as TikTok, YouTube, and Facebook, helping users stay ahead of market trends.

Insitro

Insitro is a drug discovery and development company that uses machine learning and data to identify and develop new medicines. The company's platform integrates in vitro cellular data produced in its labs with human clinical data to help redefine disease. Insitro's pipeline includes wholly-owned and partnered therapeutic programs in metabolism, oncology, and neuroscience.

Buena.ai

Buena.ai is an AI-powered outreach platform designed to transform sales processes by leveraging AI agents to drive personalized outreach and pipeline growth. The platform empowers sales teams to focus on strategic tasks while AI agents handle automated lead generation, personalized engagement, and multi-channel outreach. Buena.ai offers advanced analytics, real-time data insights, and scalable solutions to enhance sales efficiency and productivity.

AI Synapse

AI Synapse is a go-to-market (GTM) platform built around AI workers to raise outbound conversion rates and sales efficiency. It uses AI-driven research, personalization, and automation to optimize sales processes, cut the time spent on sales tools, and improve open, click, and reply rates. The company claims users can match the output of a 30-person sales team in just 4 to 6 hours, increasing productivity and revenue. AI Synapse emphasizes scalability, cost efficiency, advanced personalization, time savings, higher conversion rates, and predictable lead flow, positioning itself as a tool for sales teams and businesses looking to streamline their outbound strategies.

Mind-Video

Mind-Video is an AI tool that focuses on high-quality video reconstruction from brain activity data. It bridges the gap between image and video brain decoding by combining masked brain modeling, multimodal contrastive learning, spatiotemporal attention, and co-training with an augmented Stable Diffusion model. The tool aims to recover accurate semantic information from fMRI signals, enabling it to generate realistic videos from brain activity.

Shen-Shu

Shen-Shu is a free online BaZi calculator that offers fortune analysis for 2025. The site aims to simplify BaZi readings and present personalized results as clear, actionable insights. Users enter their solar-calendar birth date and birth time to receive an interactive reading tailored to them. Shen-Shu uses BaZi (Eight Characters) astrology, which examines an individual's destiny through the Five Elements, the Heavenly Stems, the Earthly Branches, and their dynamic interactions. The platform aims to help users understand their BaZi charts and major luck cycles, with guidance at each step.
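
The Heavenly Stems and Earthly Branches combine in a 60-year cycle, and the year pillar of a chart can be found with modular arithmetic. The TypeScript sketch below shows that arithmetic under a stated simplification: it uses the Gregorian year number directly, whereas BaZi switches the year pillar at Lichun (around 4 February), so birthdays in January and early February would need adjusting. It is not Shen-Shu's implementation; the pinyin spellings and the function names `yearPillar` and `stemElement` are this example's own.

```typescript
// Year pillar of the sexagenary (stem-branch) cycle: a minimal sketch.
// Simplification: ignores the Lichun boundary and uses the Gregorian year.
const HEAVENLY_STEMS = ["Jia", "Yi", "Bing", "Ding", "Wu", "Ji", "Geng", "Xin", "Ren", "Gui"] as const;
const EARTHLY_BRANCHES = ["Zi", "Chou", "Yin", "Mao", "Chen", "Si", "Wu", "Wei", "Shen", "You", "Xu", "Hai"] as const;
// Consecutive stem pairs share an element: Jia/Yi Wood, Bing/Ding Fire, etc.
const ELEMENTS = ["Wood", "Fire", "Earth", "Metal", "Water"] as const;

// Non-negative modulo so very early years still map into range.
function mod(a: number, n: number): number {
  return ((a % n) + n) % n;
}

function yearPillar(year: number): string {
  // Year 4 CE is a Jia-Zi year, the first year of a 60-year cycle.
  const stem = HEAVENLY_STEMS[mod(year - 4, 10)];
  const branch = EARTHLY_BRANCHES[mod(year - 4, 12)];
  return `${stem}-${branch}`;
}

function stemElement(year: number): string {
  return ELEMENTS[Math.floor(mod(year - 4, 10) / 2)];
}

console.log(yearPillar(1984)); // Jia-Zi
console.log(yearPillar(2025), stemElement(2025)); // Yi-Si Wood
```

Because 60 is the least common multiple of 10 and 12, the same stem-branch pair recurs every 60 years (1984 and 2044 are both Jia-Zi).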

ResearchFlow

ResearchFlow is an AI-powered research engine that lets users conduct in-depth research, connect ideas, and organize their work in visual mind maps. It uses AI to search scholarly databases, decode complex charts, and answer questions from trusted sources. Its interactive mind maps and AI-powered analysis are meant to make complex topics easier to explore, navigate, and understand.

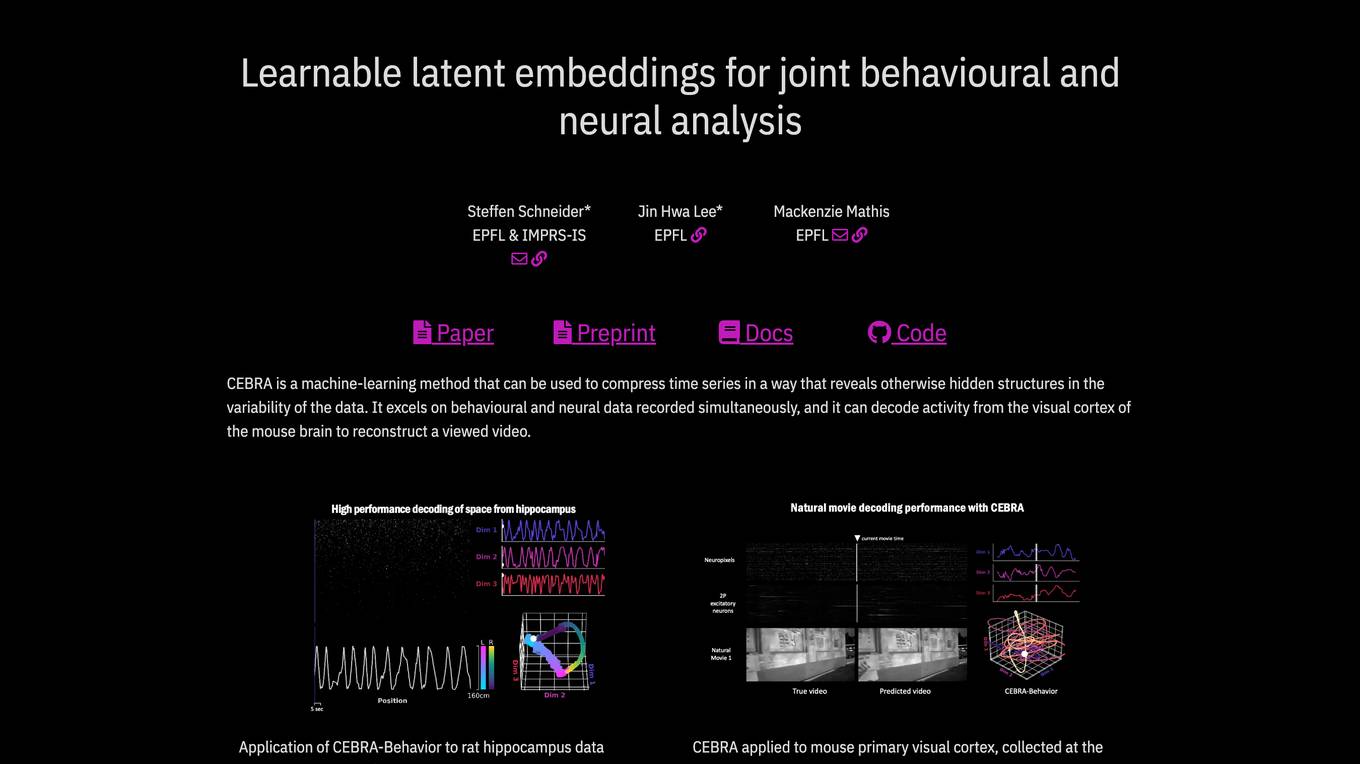

CEBRA

CEBRA is a self-supervised learning algorithm that provides interpretable embeddings of high-dimensional recordings using auxiliary variables. It excels in compressing time series data to reveal hidden structures, particularly in behavioral and neural data. The algorithm can decode activity from the visual cortex, reconstruct viewed videos, decode trajectories, and determine position during navigation. CEBRA is a valuable tool for joint behavioral and neural analysis, offering consistent and high-performance latent spaces for hypothesis testing and label-free usage across various datasets and species.
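
CEBRA's self-supervised training is contrastive. The TypeScript sketch below shows a generic InfoNCE loss, a common form of contrastive objective, for a single anchor embedding. It is a minimal illustration under assumptions: the embedding vectors are made up, cosine similarity and the temperature value are this example's choices, and CEBRA's actual way of choosing positive and negative samples from time or auxiliary variables is not modeled.

```typescript
// Generic InfoNCE loss for one anchor (illustrative sketch, not CEBRA's code).
function dot(a: number[], b: number[]): number {
  return a.reduce((s, x, i) => s + x * b[i], 0);
}

// Scale a vector to unit length (assumes a non-zero vector).
function normalize(v: number[]): number[] {
  const norm = Math.sqrt(dot(v, v));
  return v.map((x) => x / norm);
}

function cosine(a: number[], b: number[]): number {
  return dot(normalize(a), normalize(b));
}

// loss = -log( exp(s_pos / t) / sum over {pos, negs} of exp(s / t) )
function infoNCE(anchor: number[], positive: number[], negatives: number[][], temperature = 1.0): number {
  const logits = [positive, ...negatives].map((v) => cosine(anchor, v) / temperature);
  // Log-sum-exp with the max subtracted for numerical stability.
  const maxLogit = Math.max(...logits);
  const logSumExp = maxLogit + Math.log(logits.reduce((s, l) => s + Math.exp(l - maxLogit), 0));
  return logSumExp - logits[0]; // logits[0] is the positive pair
}

const anchor = [1, 0];
// Positive close to the anchor: low loss.
const lowLoss = infoNCE(anchor, [0.9, 0.1], [[-1, 0], [0, 1]], 0.5);
// Positive pointing away from the anchor: high loss.
const highLoss = infoNCE(anchor, [-1, 0], [[0.9, 0.1], [0, 1]], 0.5);
console.log(lowLoss < highLoss); // true
```

Minimizing this loss pulls the anchor toward its positive and pushes it away from the negatives, which is how contrastive methods shape an embedding space.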

BabySleepBot™

BabySleepBot™ is an AI-powered online DIY program designed to help parents teach their babies to sleep through the night and take longer daytime naps. The training is personalized to different parenting styles and to each baby's individual needs. The program includes audio clips, personalized training, a companion guide, lessons on decoding a baby's tired cues, and custom routines, and it promises results within three weeks. It is led by Jennifer, described as Australia's leading baby sleep consultant, who has more than 22 years of experience and has helped thousands of families achieve better sleep.

Vexa

Vexa is a real-time AI meeting assistant that helps users stay focused, grasp context, decode industry-specific terms, and capture critical information during business meetings. Its features include instant context recovery, support for project execution, industry terminology decoding, focus and productivity aids, and ADHD-friendly meeting assistance. Vexa helps users stay sharp in long meetings, record agreements accurately, clarify industry jargon, and manage time-sensitive information. It integrates with Google Meet and Zoom, uses the GPT-4 Chat API for several of its features, and protects privacy with end-to-end encryption and other data protection measures.

implicator.ai

implicator.ai is an AI tool that provides a daily newsletter focusing on AI-related news, politics, coding trends, startups, research funding, and AI tools. The platform offers insights into the latest developments in the AI industry, including new models, acquisitions, legal battles, and market trends. With a team of tech journalists and analysts, implicator.ai decodes complex AI topics and delivers concise, informative content for readers interested in staying updated on the fast-paced world of artificial intelligence.

Hint

Hint is a hyper-personalized astrology app that combines NASA data with guidance from professional astrologers to deliver personalized insights. It offers one-on-one guidance, horoscopes, compatibility reports, and chart decoding. Hint describes itself as a recognized leader in digital astrological services, trusted by some of the world's leading companies.

2 - Open Source AI Tools

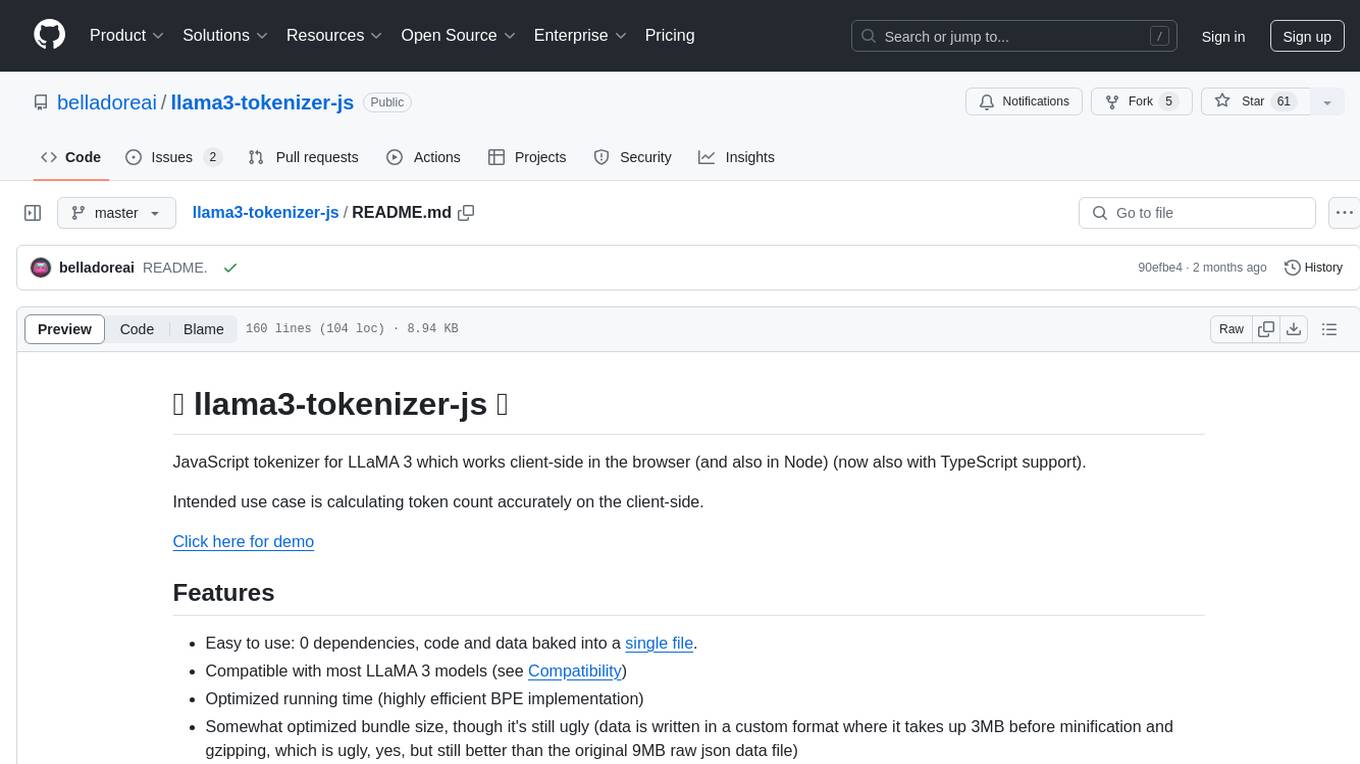

llama3-tokenizer-js

A JavaScript tokenizer for LLaMA 3, designed for client-side use in the browser and in Node, with TypeScript support. It calculates token counts accurately, has zero dependencies, an optimized running time, and a somewhat optimized bundle size. It can encode and decode text, but training is not supported. In the browser, it adds `llama3Tokenizer` to the global namespace. It is compatible with most LLaMA 3 models, specifically those released by Meta (Facebook) in April 2024, and can be adapted for incompatible models by passing custom vocab and merge data. It handles special tokens and fine-tunes. Developed by belladore.ai with contributions from xenova, blaze2004, imoneoi, and ConProgramming.
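
To make "encode and decode" concrete, here is a toy byte-pair-encoding (BPE) sketch in TypeScript. It is not the library's code or API and does not use LLaMA 3's vocabulary: it works on characters rather than bytes, returns string pieces instead of integer token IDs, and takes a small hand-written merge list (the `merges` parameter loosely mirrors the idea of supplying custom merge data). Encoding repeatedly applies the adjacent-pair merge with the lowest rank; decoding concatenates the pieces.

```typescript
// Toy character-level BPE (illustrative; real LLaMA 3 tokenization is
// byte-level with a large learned vocabulary and integer token IDs).
type Merge = [string, string];

function encode(text: string, merges: Merge[]): string[] {
  // Lower index = learned earlier = higher priority.
  const rank = new Map<string, number>();
  merges.forEach(([a, b], i) => rank.set(`${a}\u0000${b}`, i));

  let pieces = Array.from(text); // start from single characters
  for (;;) {
    // Find the adjacent pair with the best (lowest) merge rank.
    let bestIdx = -1;
    let bestRank = Infinity;
    for (let i = 0; i < pieces.length - 1; i++) {
      const r = rank.get(`${pieces[i]}\u0000${pieces[i + 1]}`);
      if (r !== undefined && r < bestRank) {
        bestRank = r;
        bestIdx = i;
      }
    }
    if (bestIdx === -1) break; // no applicable merge left
    pieces = [
      ...pieces.slice(0, bestIdx),
      pieces[bestIdx] + pieces[bestIdx + 1],
      ...pieces.slice(bestIdx + 2),
    ];
  }
  return pieces;
}

// Decoding is concatenation, so decode(encode(x)) === x.
function decode(pieces: string[]): string {
  return pieces.join("");
}

const merges: Merge[] = [["l", "o"], ["lo", "w"], ["e", "r"]];
const tokens = encode("lower", merges);
console.log(tokens.join(" | ")); // low | er
console.log(decode(tokens)); // lower
```

Here "lower" is merged in rank order: `l`+`o`, then `lo`+`w`, then `e`+`r`, leaving two pieces.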

Tiktoken

Tiktoken is a high-performance implementation focused on token count operations. It provides various encodings like o200k_base, cl100k_base, r50k_base, p50k_base, and p50k_edit. Users can easily encode and decode text using the provided API. The repository also includes a benchmark console app for performance tracking. Contributions in the form of PRs are welcome.
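
Token counts are only meaningful relative to an encoding: o200k_base and cl100k_base, for example, can split the same text into different numbers of tokens. The TypeScript sketch below illustrates that point with a deliberately simple stand-in, greedy longest-match tokenization over two made-up vocabularies, rather than the byte-level BPE those encodings actually use. It does not call Tiktoken or reproduce its API, and `countTokens` is a hypothetical name.

```typescript
// Toy token counter: greedy longest-match against a fixed vocabulary.
// Assumption: characters not covered by the vocabulary cost one token each
// (real byte-level encodings fall back to bytes instead).
function countTokens(text: string, vocab: Set<string>): number {
  const maxLen = Math.max(1, ...Array.from(vocab, (t) => t.length));
  let count = 0;
  let i = 0;
  while (i < text.length) {
    let len = Math.min(maxLen, text.length - i);
    // Shrink the window until it matches a vocabulary entry or is 1 char.
    while (len > 1 && !vocab.has(text.slice(i, i + len))) len--;
    i += len;
    count++;
  }
  return count;
}

// Two hypothetical "encodings": the larger one has longer entries.
const smallVocab = new Set(["to", "ken", " "]);
const largeVocab = new Set(["token", "tokens", " count", " "]);

const text = "tokens count";
console.log(countTokens(text, smallVocab)); // 9
console.log(countTokens(text, largeVocab)); // 2
```

The same 12-character string costs 9 tokens under the small vocabulary and 2 under the larger one, which is why a token count should always be reported together with the encoding that produced it.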

20 - OpenAI GPTs

N.A.R.C. Bott

This app decodes texts from narcissists and gives advice for any life situation. Navigate. Analyze. Recognize. Communicate.

What a Girl Says Translator

Simply tell me what the girl texted you or said to you, and I will respond with what she means. 💋

Emoji GPT

🌟 Discover the Charm of EmojiGPT! 🤖💬🎉 Dive into a world where emojis reign supreme with EmojiGPT, your whimsical AI companion that speaks the universal language of emojis. Get ready to decode delightful emoji messages, laugh at clever combinations, and express yourself like never before! 🤔

Dieselpunk Cthulhu, a text adventure game

Madness or diesel fumes. You decide. Let me entertain you with this interactive horror game set in the Cthulhu mythos, lovingly illustrated in the style of gritty, cinematic dieselpunk.

操纵转世系统 reincarnation system

This is a text game that simulates a reincarnation system. It provides a list of people awaiting reincarnation, and you decide how their next lives unfold.

Andrew Schulz

Experience a conversation with the most hilarious, fun-to-be-around character of our decade.

OGAA (Oil and Gas Acronym Assistant)

I decode acronyms from the oil & gas industry, asking for context if needed.

Paper Interpreter (international)

Automatically structure and decode academic papers with ease - simply upload a PDF!

🧬GenoCode Wizard🔬

Unlock the secrets of DNA with 🧬GenoCode Wizard🔬! Dive into genetic analysis, decode sequences, and explore bioinformatics with ease. Perfect for researchers and students!

Social Navigator

A specialist in explaining social cues and cultural norms to bring clarity to conversations.