Best AI tools for: Run Model

20 - AI Tool Sites

Modal

Modal is a high-performance cloud platform designed for developers and for AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. Users bring their own code, and Modal's optimized container file system provides fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads: users can scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without writing YAML or Dockerfiles. Modal also provides job scheduling, web endpoints, observability, and security compliance features.

Practice Run AI

Practice Run AI is an online platform that offers AI-powered tools for practicing with and running AI algorithms without complex setups or installations. Its user-friendly interface lets individuals experiment with AI models and deepen their understanding of artificial intelligence concepts. By simplifying the learning process and providing hands-on experience, Practice Run AI aims to democratize AI education and make it accessible to a wider audience.

GPUX

GPUX is a cloud platform that provides access to GPUs for running AI workloads. To make AI models easy to deploy and run, it offers a user-friendly interface, pre-built templates, and support for several programming languages. GPUX is also committed to providing a sustainable and ethical platform, and it has partnered with organizations such as the Climate Leadership Council to reduce its carbon footprint.

Mystic.ai

Mystic.ai is an AI tool for deploying and scaling machine learning models. It offers a fully managed Kubernetes platform that runs in your own cloud, so users can deploy ML models in their own Azure, AWS, or GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, a simpler developer experience, and performance optimizations for high-performance model serving. With a pay-as-you-go API, AWS/Azure/GCP integration, and a polished dashboard, it simplifies deploying and managing ML models for data scientists and AI engineers.

Modular

Modular is a fast, scalable generative AI inference platform with a suite of tools and resources for AI development and deployment. It covers model development, deployment, inference, and research, and provides documentation, models, tutorials, and step-by-step guides. Modular runs on both GPUs and CPUs, scales intelligently to any cluster, and is available in several editions with different deployment options. Users can build agent workflows, use AI retrieval and controlled generation, develop chatbots, generate code, and improve resource utilization through batch processing.

Qualcomm AI Hub

Qualcomm AI Hub is a platform that allows users to run AI models on Snapdragon® 8 Elite devices. It provides a collaborative ecosystem for model makers, cloud providers, runtime, and SDK partners to deploy on-device AI solutions quickly and efficiently. Users can bring their own models, optimize for deployment, and access a variety of AI services and resources. The platform caters to various industries such as mobile, automotive, and IoT, offering a range of models and services for edge computing.

Jan

Jan is an open-source alternative to ChatGPT that can run 100% offline. Users can chat with AI, download and run powerful models, connect to cloud AI services, set up a local API server, and chat with their files. Jan is highly customizable, with personalized AI assistants, memory, and extensions. Its focus on local-first AI, user-owned data, and full customization makes it a versatile tool for AI enthusiasts and developers.

Backyard AI

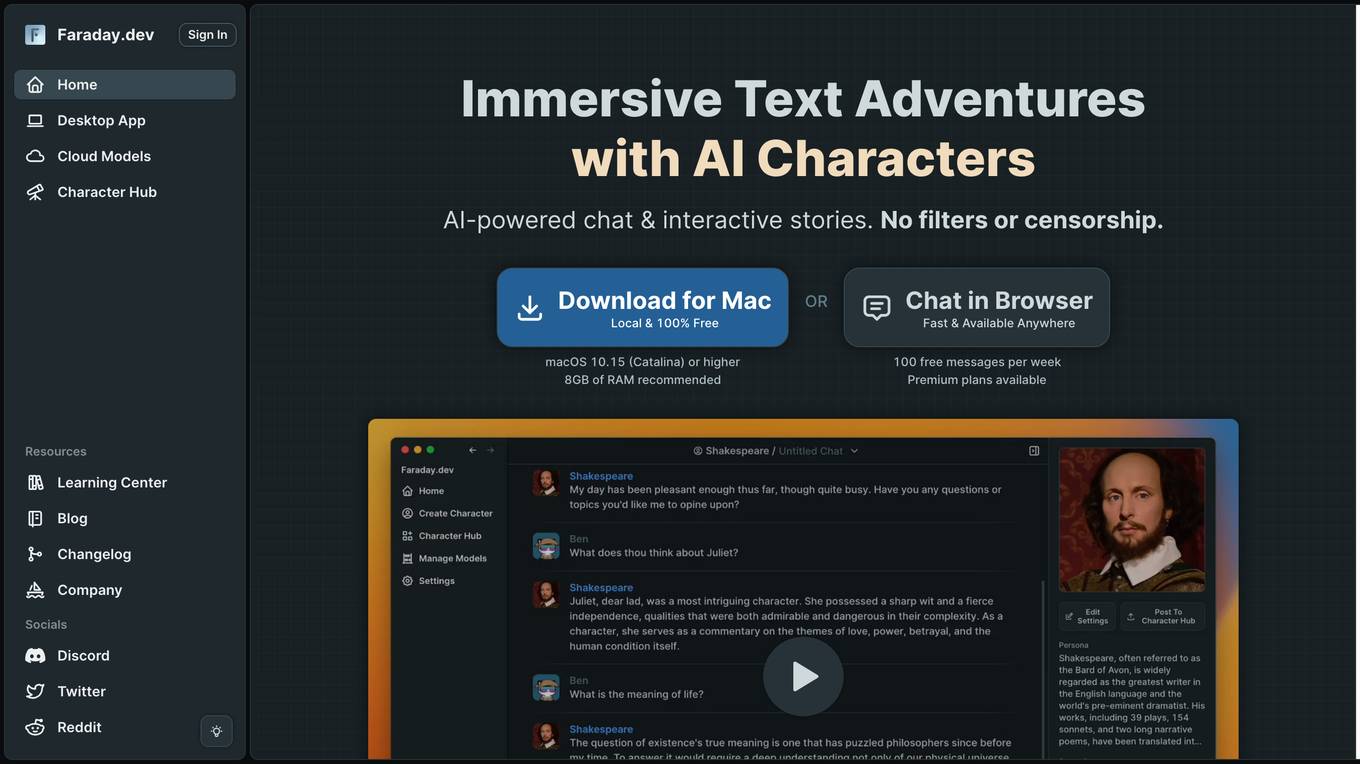

Backyard AI is an AI-powered platform that offers immersive text adventures with AI characters, enabling users to engage in chat and interactive stories without filters or censorship. Users can bring AI characters to life with expressive customizations and intricate worlds. The platform provides a Desktop App for running AI models locally and a Cloud service for fast and powerful AI models accessible from anywhere. Backyard AI prioritizes privacy and control by storing all data locally on the device and encrypting data at rest. It offers a range of language models and features like mobile tethering, automatic GPU acceleration, and secure chat in the browser.

Backyard AI

Backyard AI is an AI-powered platform that offers immersive text adventures with AI characters, chat, and interactive stories. Users can bring AI characters to life with expressive customizations and explore intricate worlds through text RPG experiences. The platform provides a Desktop App for running AI models locally and cloud models for supercharging creativity. Backyard AI prioritizes privacy and control by storing data locally and encrypting it at rest. With a focus on user-friendly features and powerful AI language models, Backyard AI aims to provide an engaging and secure AI experience for users.

Awan LLM

Awan LLM is an LLM inference API platform for power users and developers that advertises unlimited tokens, unrestricted use, and cost-effective pricing. Users can generate unlimited tokens, use LLM models without constraints, and pay a monthly fee instead of paying per token. Supported use cases include an AI assistant, AI agents, roleplay with AI companions, data processing, code completion, and building profitable AI-powered applications.
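Whether a flat monthly plan beats per-token billing comes down to simple arithmetic: divide the monthly fee by the per-token price to get the break-even volume. The sketch below assumes a single flat fee and a single per-million-token rate; the prices used are hypothetical and are not Awan LLM's actual pricing.

```python
def breakeven_tokens(monthly_fee: float, price_per_million_tokens: float) -> float:
    """Monthly token volume at which a flat fee equals per-token billing.

    Both prices are hypothetical inputs, not any provider's real rates.
    """
    if price_per_million_tokens <= 0:
        raise ValueError("per-token price must be positive")
    return monthly_fee / price_per_million_tokens * 1_000_000


def cheaper_option(tokens_per_month: int, monthly_fee: float,
                   price_per_million_tokens: float) -> str:
    """Return which billing model costs less for a given monthly usage."""
    per_token_cost = tokens_per_month / 1_000_000 * price_per_million_tokens
    return "flat monthly" if monthly_fee < per_token_cost else "per token"


# Hypothetical numbers: $10/month flat vs. $0.50 per million tokens.
print(breakeven_tokens(10.0, 0.50))  # 20000000.0
```

Above roughly 20 million tokens a month in this made-up scenario, the flat plan is cheaper; below it, per-token billing wins.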

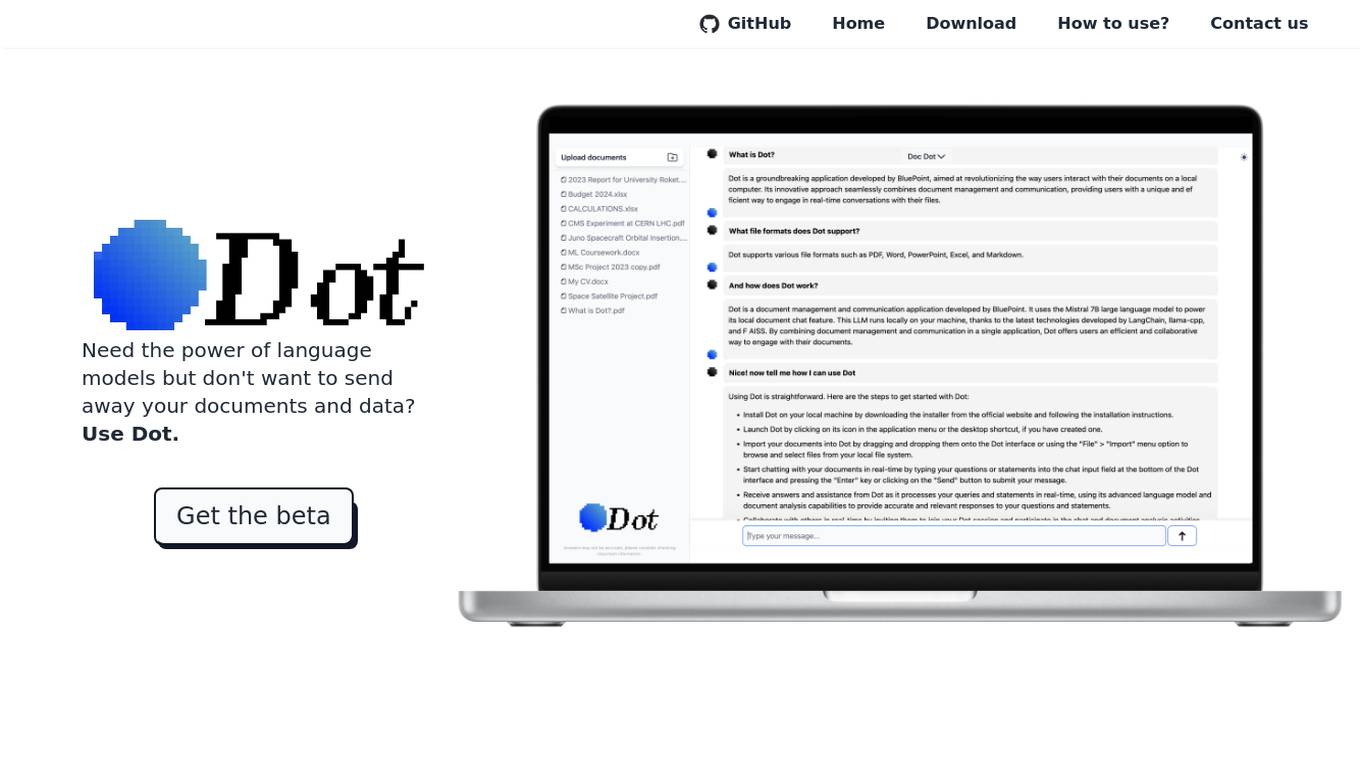

Dot

Dot is a free, locally run language model application that lets users interact with their own documents, chat with the model, and use it for a variety of tasks without sending their data anywhere. It is powered by the Mistral 7B LLM, which runs locally on the user's device, so none of their data leaves it. Dot can also run offline.

Fifi.ai

Fifi.ai is a managed AI cloud platform that provides users with the infrastructure and tools to deploy and run AI models. The platform is designed to be easy to use, with a focus on plug-and-play functionality. Fifi.ai also offers a range of customization and fine-tuning options, allowing users to tailor the platform to their specific needs. The platform is supported by a team of experts who can provide assistance with onboarding, API integration, and troubleshooting.

Profit Isle

Profit Isle is an AI application that helps enterprises make data-driven decisions to enhance profitability and drive value to the bottom line. The platform integrates and transforms enterprise data to power AI initiatives, providing actionable insights and recommendations grounded in company data. Profit Isle prioritizes transparency, data governance, and privacy to ensure customers can confidently run AI models and make informed decisions.

TitanML

TitanML is a platform that provides tools and services for deploying and scaling Generative AI applications. Their flagship product, the Titan Takeoff Inference Server, helps machine learning engineers build, deploy, and run Generative AI models in secure environments. TitanML's platform is designed to make it easy for businesses to adopt and use Generative AI, without having to worry about the underlying infrastructure. With TitanML, businesses can focus on building great products and solving real business problems.

Nebius

Nebius presents itself as the ultimate cloud for AI explorers, aiming to democratize AI infrastructure and empower builders everywhere. Its flexible architecture scales AI from a single GPU to pre-optimized clusters with thousands of NVIDIA GPUs. Built for demanding AI workloads, it integrates NVIDIA GPU accelerators, high-performance InfiniBand networking, and Kubernetes or Slurm orchestration. Nebius says it optimizes every layer of the stack to deliver long-term value and substantial customer value over competitors.

Layla

Layla is a private AI assistant that operates offline on your device, ensuring complete privacy and no censorship. It offers different personalities, customizable features, downloadable characters, and advanced settings. Layla can chat, inspire, assist, entertain, and more, making it a versatile AI tool for various tasks. The application is constantly evolving with weekly updates, including features like real-time internet search, task reminders, social features, and 3D models. Layla utilizes cutting-edge technology to run Large Language Models on consumer hardware, providing a unique and personalized AI experience without the need for an internet connection.

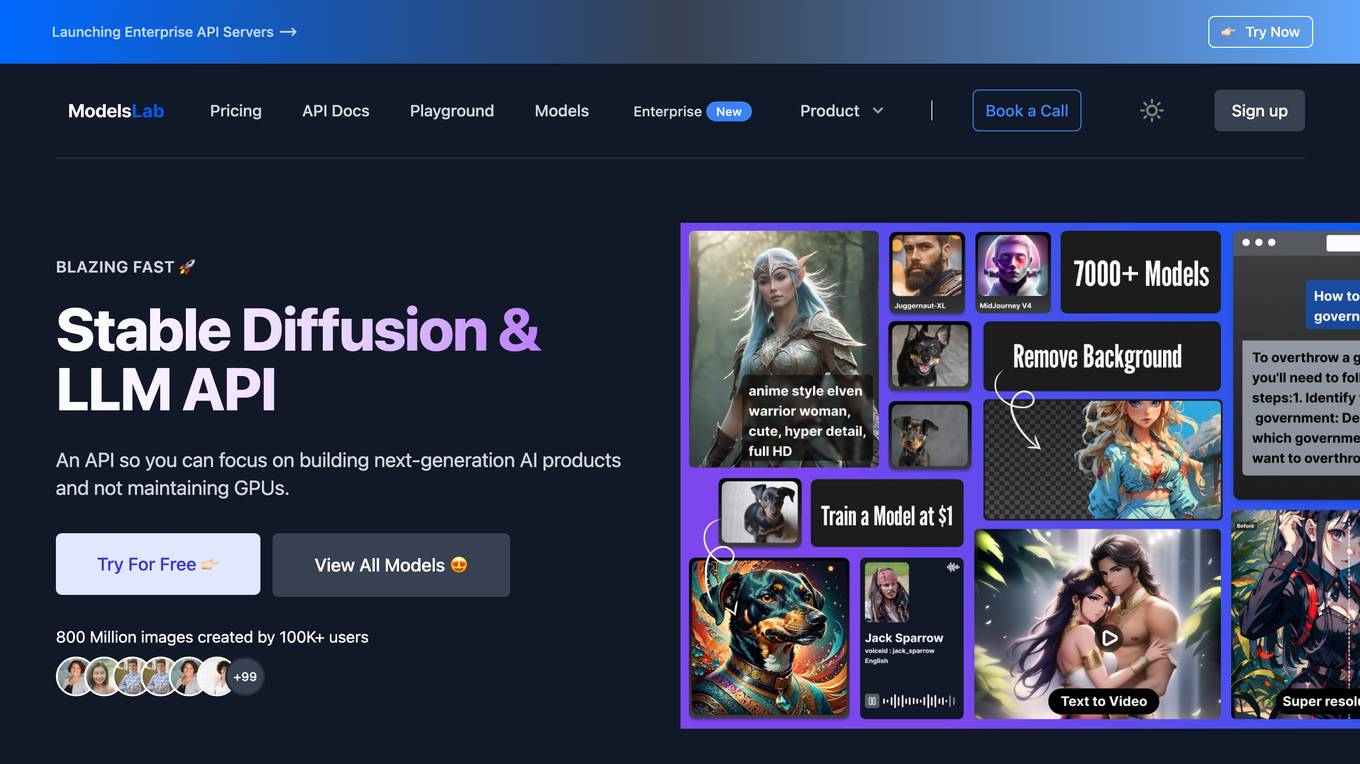

ModelsLab

ModelsLab is an AI tool that offers online text-to-image and AI voice generation. It provides resources on models, pricing, and enterprise solutions, and developers can read the API documentation and join the Discord community. ModelsLab lets users build AI products for various applications with features such as Imagen AI Image Generation, Video Fusion, AudioGen, 3D Verse, Auto AI, and LLMaster. Its advantages include easy image generation, enhanced audio and music creation, 3D model design, AI-driven productivity gains, and language model integration; its disadvantages include limited features for certain tasks, a potential learning curve, and limited availability of certain tools. The FAQ covers image editing APIs, resolution quality, why image editing APIs matter, and applications of the FaceGen API. ModelsLab suits roles such as developers, game developers, instructional designers, digital marketing managers, and artists. Related keywords include AI Image Generator, AI Voice Generator, Text to Image, Voice Cloning, and Language Model, and supported tasks include generating images, creating videos, generating audio, designing 3D models, and enhancing productivity.

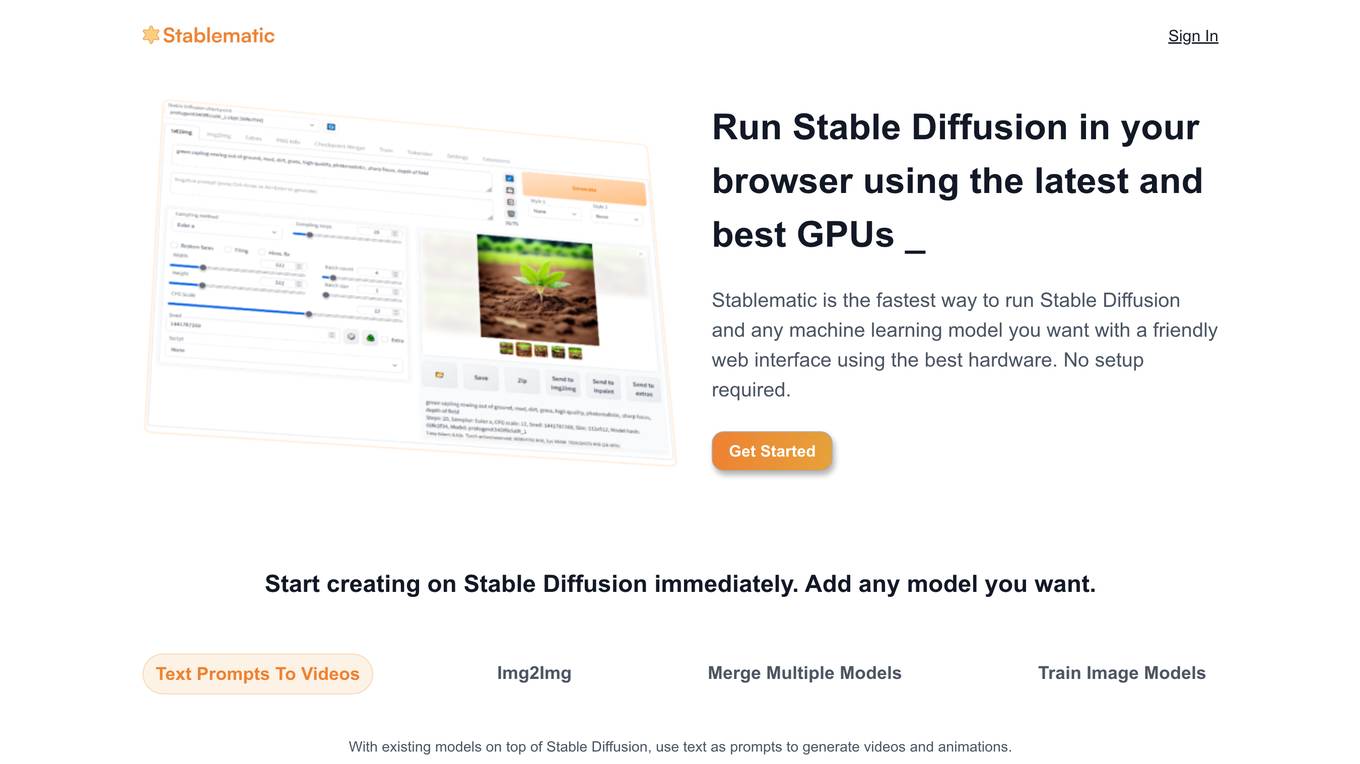

Stablematic

Stablematic is a web-based platform for running Stable Diffusion and other machine learning models without local setup or hardware limitations. It provides a user-friendly interface, pre-installed plugins, and dedicated GPU resources for a seamless, efficient workflow. Users can generate images and videos from text prompts, merge multiple models, train custom models, and access a range of pre-trained models, including DreamBooth and Civitai models. Stablematic also offers API access for developers and dedicated support to help users get the most out of Stable Diffusion and other machine learning models.

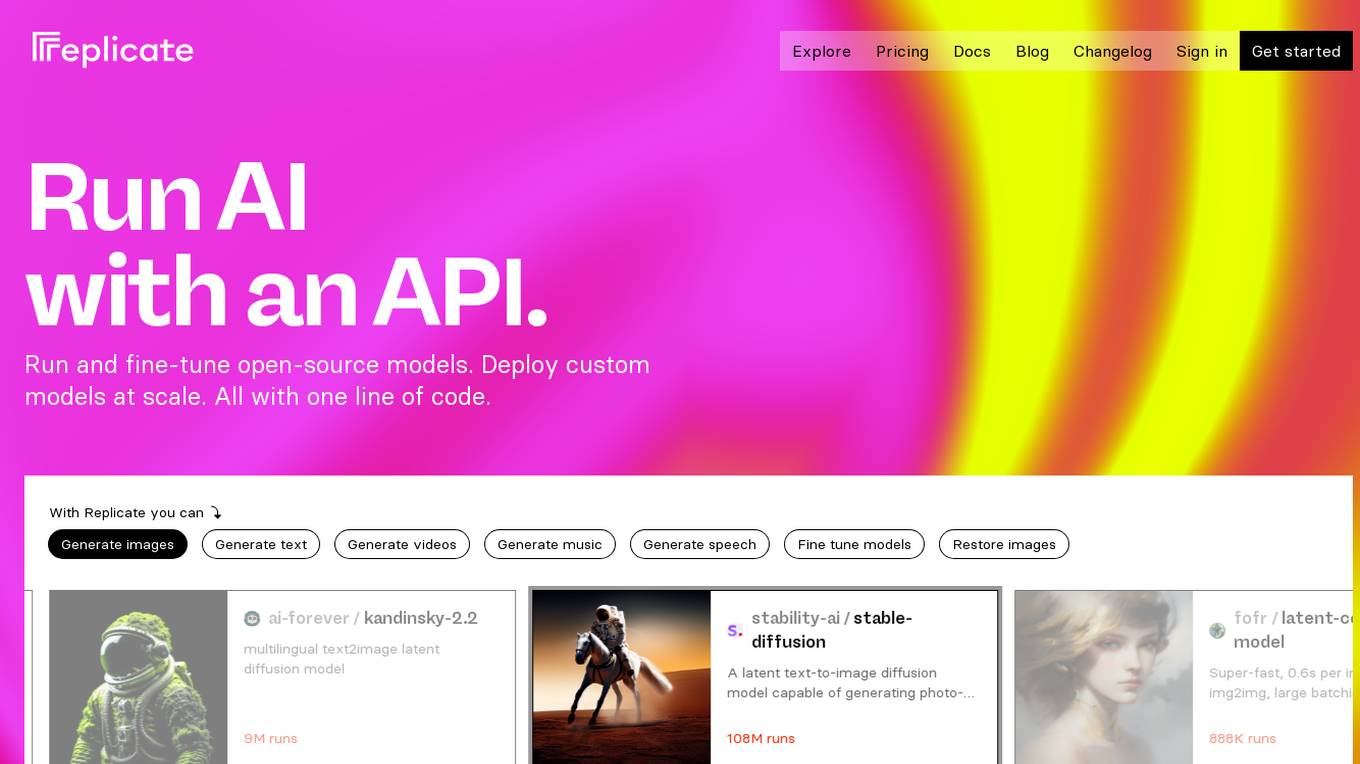

Replicate

Replicate is an AI platform for running and fine-tuning open-source models, deploying custom models at scale, and generating images, text, videos, music, and speech with a single line of code. Its community contributes thousands of production-ready AI models that users can explore, helping push AI beyond academic papers and demos. Because fine-tuning, custom deployment, and scaling all happen on Replicate, users can build and ship AI solutions for a wide range of tasks.

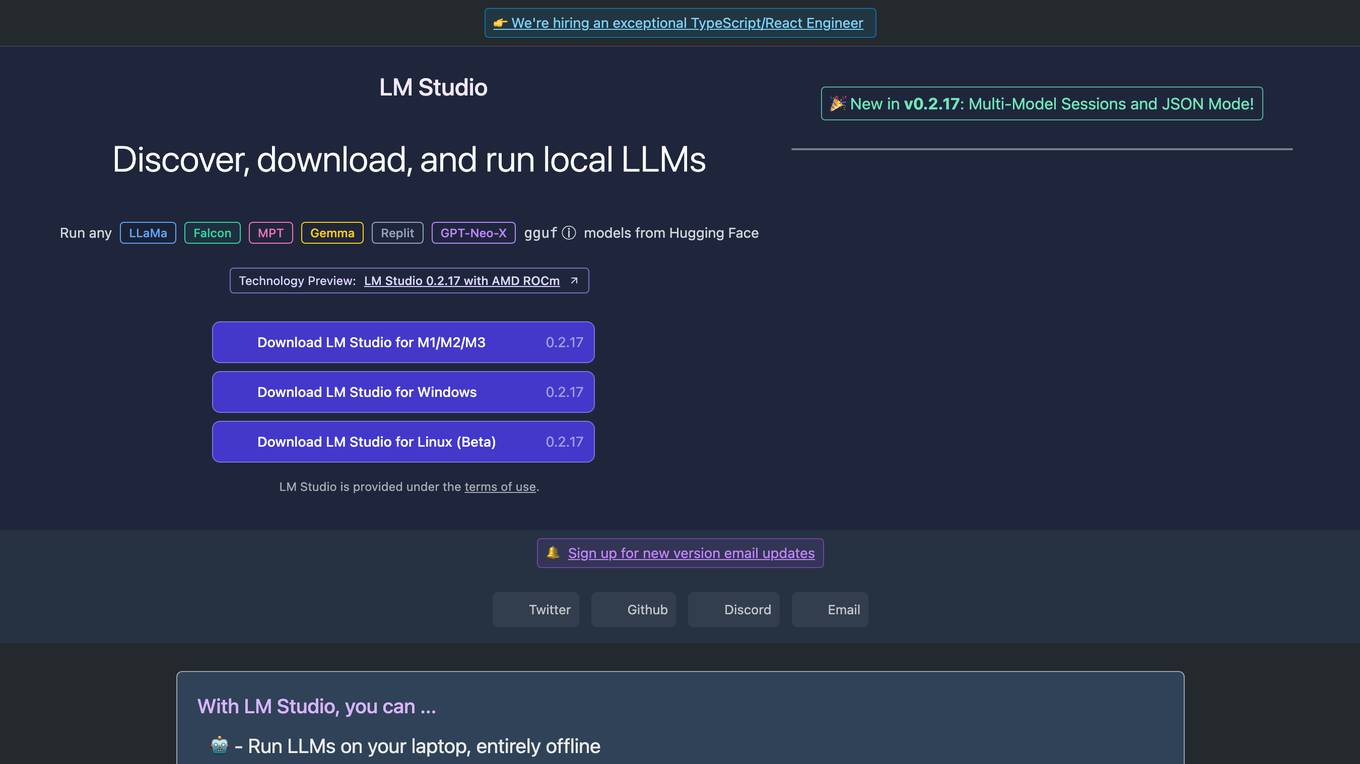

LM Studio

LM Studio is an AI tool for discovering, downloading, and running local LLMs (large language models). Users can run LLMs offline on their laptops, use models through an in-app chat UI or a local server, download compatible model files from Hugging Face repositories, and discover new LLMs. The tool protects privacy by not collecting data or monitoring user actions, which makes it suitable for personal and business use. LM Studio supports models such as ggml Llama, MPT, and StarCoder from Hugging Face, and lists minimum hardware and software requirements for each platform.
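LM Studio's local server can be called from any HTTP client. The sketch below uses only the Python standard library and rests on two assumptions: the server listens on its default port 1234, and it exposes an OpenAI-compatible `/v1/chat/completions` route. The model name `local-model` is a hypothetical placeholder for whichever model you have loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's local server on its default port with an
# OpenAI-compatible chat-completions route. Adjust for your setup.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model: str, prompt: str,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request for a local LLM server."""
    body = {
        "model": model,  # hypothetical placeholder; use a model you have loaded
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

With the server running, `send(build_chat_request("local-model", "Hello"))` returns the model's reply. Keeping request construction separate from sending lets you inspect or log the payload without a live server.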

6 - Open Source AI Tools

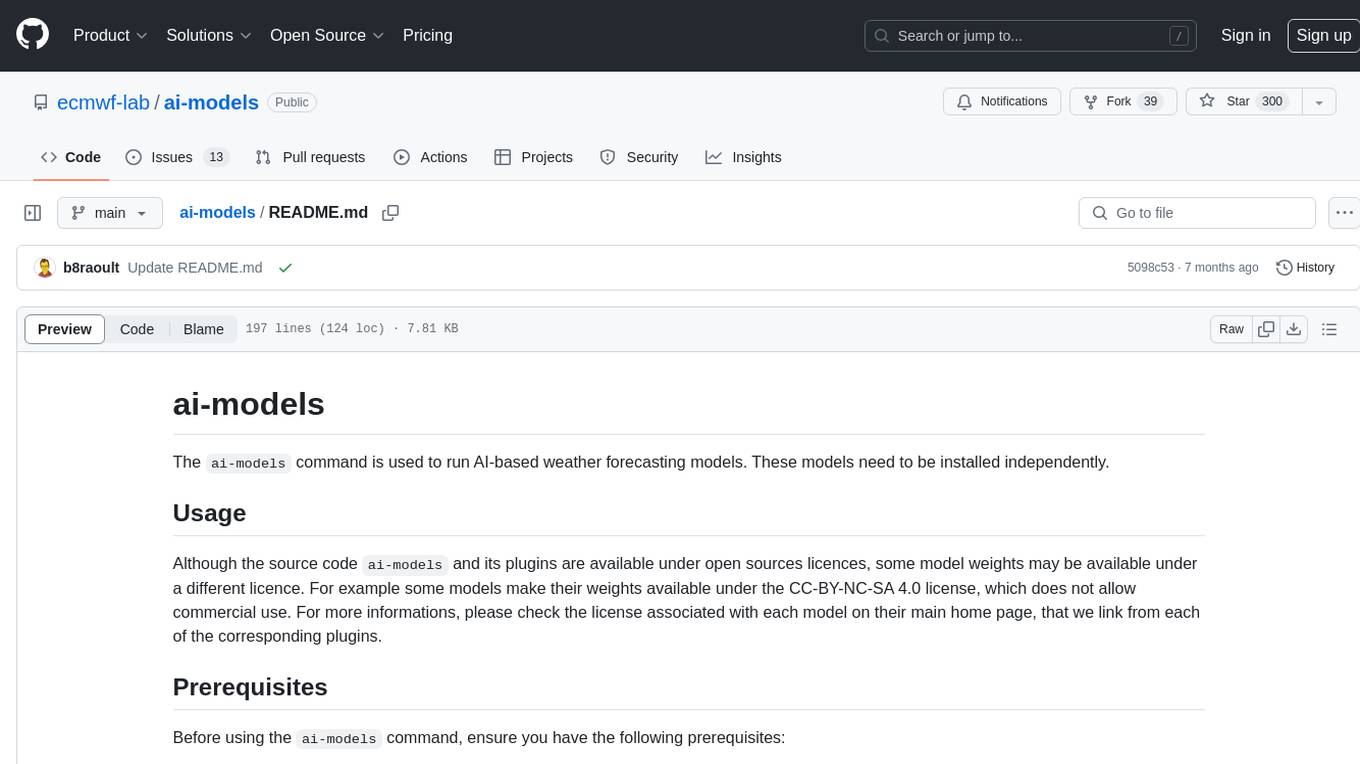

ai-models

The `ai-models` command is a tool used to run AI-based weather forecasting models. It provides functionalities to install, run, and manage different AI models for weather forecasting. Users can easily install and run various models, customize model settings, download assets, and manage input data from different sources such as ECMWF, CDS, and GRIB files. The tool is designed to optimize performance by running on GPUs and provides options for better organization of assets and output files. It offers a range of command line options for users to interact with the models and customize their forecasting tasks.

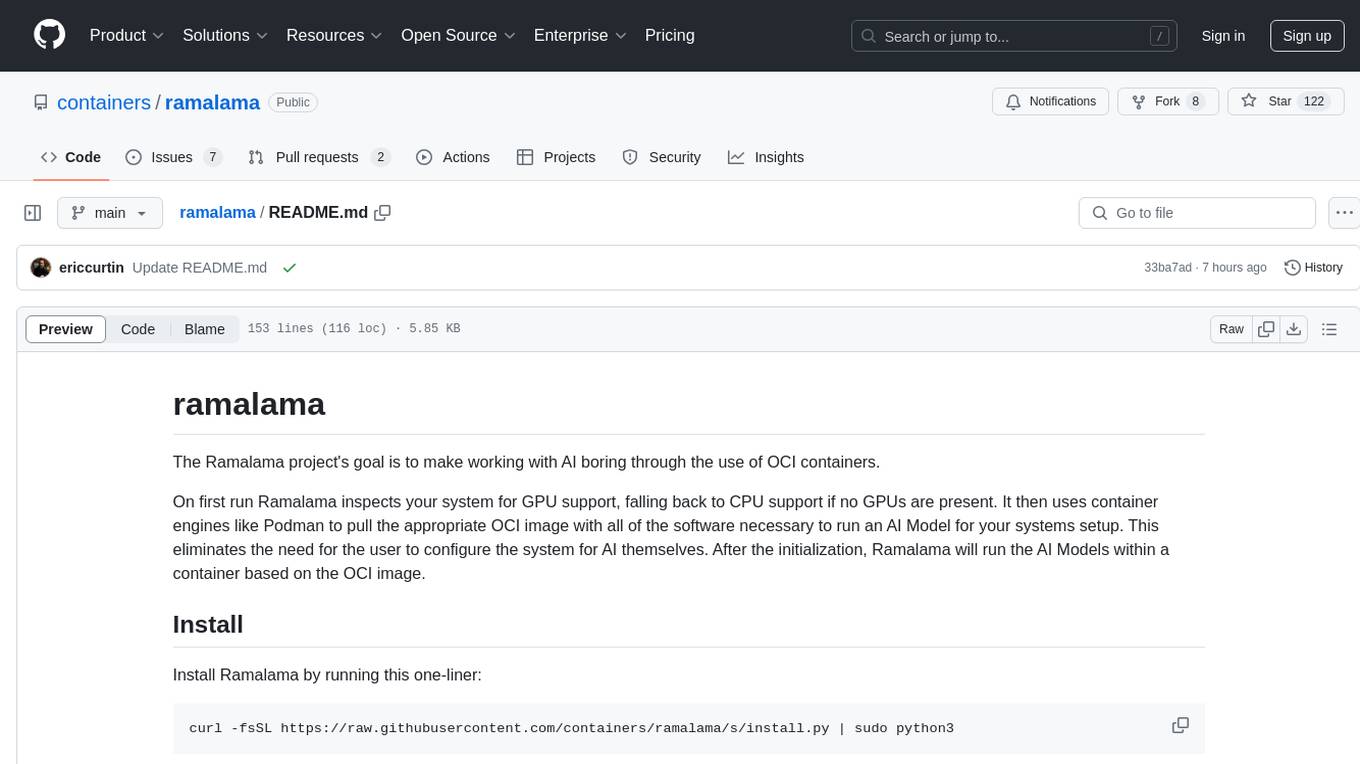

ramalama

The RamaLama project simplifies working with AI by using OCI containers. It automatically detects GPU support, pulls the necessary software in a container, and runs AI models. Users can list, pull, run, and serve models with simple commands. The project aims to support more GPUs and platforms in the future, making AI setup hassle-free.

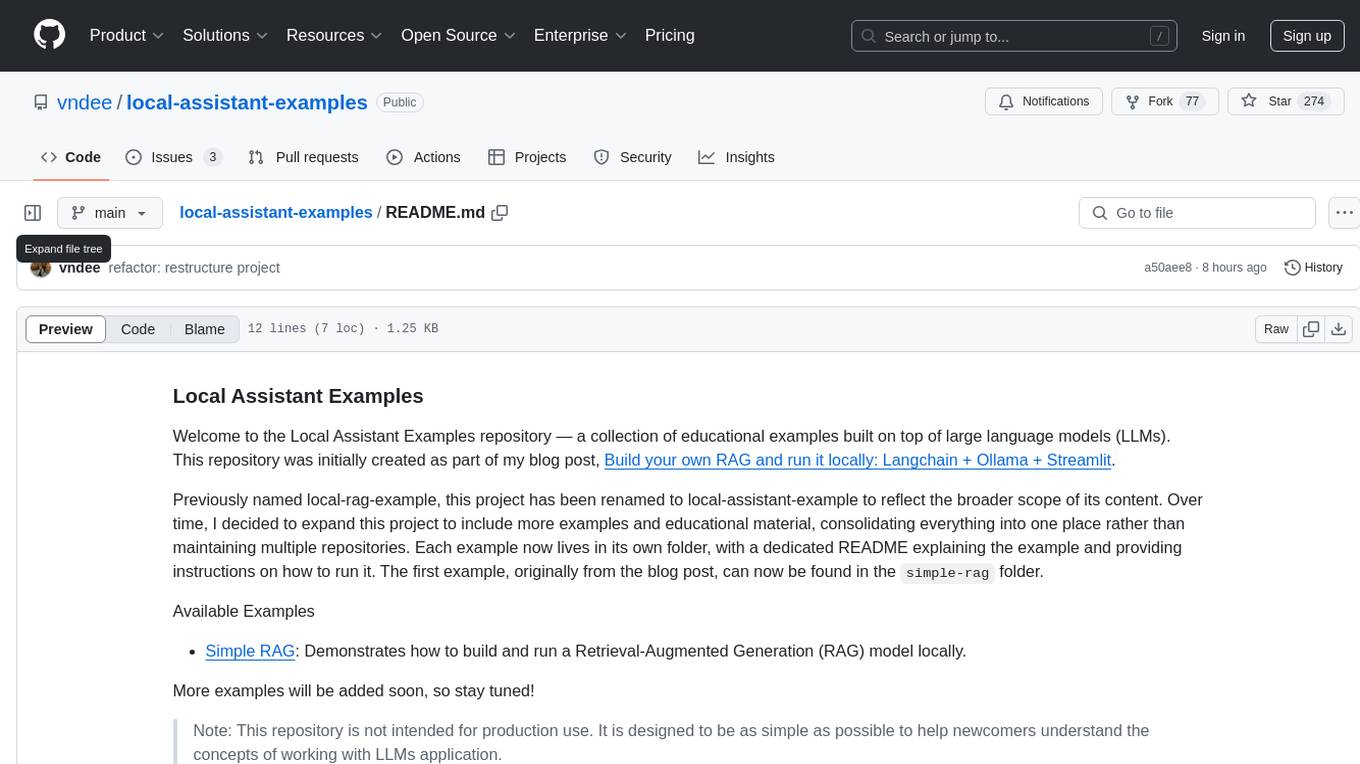

local-assistant-examples

The Local Assistant Examples repository is a collection of educational examples showcasing the use of large language models (LLMs). It was initially created for a blog post on building a RAG model locally, and has since expanded to include more examples and educational material. Each example is housed in its own folder with a dedicated README providing instructions on how to run it. The repository is designed to be simple and educational, not for production use.

nosia

Nosia is a platform that allows users to run an AI model on their own data. It is designed to be easy to install and use. Users can follow the provided guides for quickstart, API usage, upgrading, starting, stopping, and troubleshooting. The platform supports custom installations with options for remote Ollama instances, custom completion models, and custom embeddings models. Advanced installation instructions are also available for macOS with a Debian or Ubuntu VM setup. Users can access the platform at 'https://nosia.localhost' and troubleshoot any issues by checking logs and job statuses.

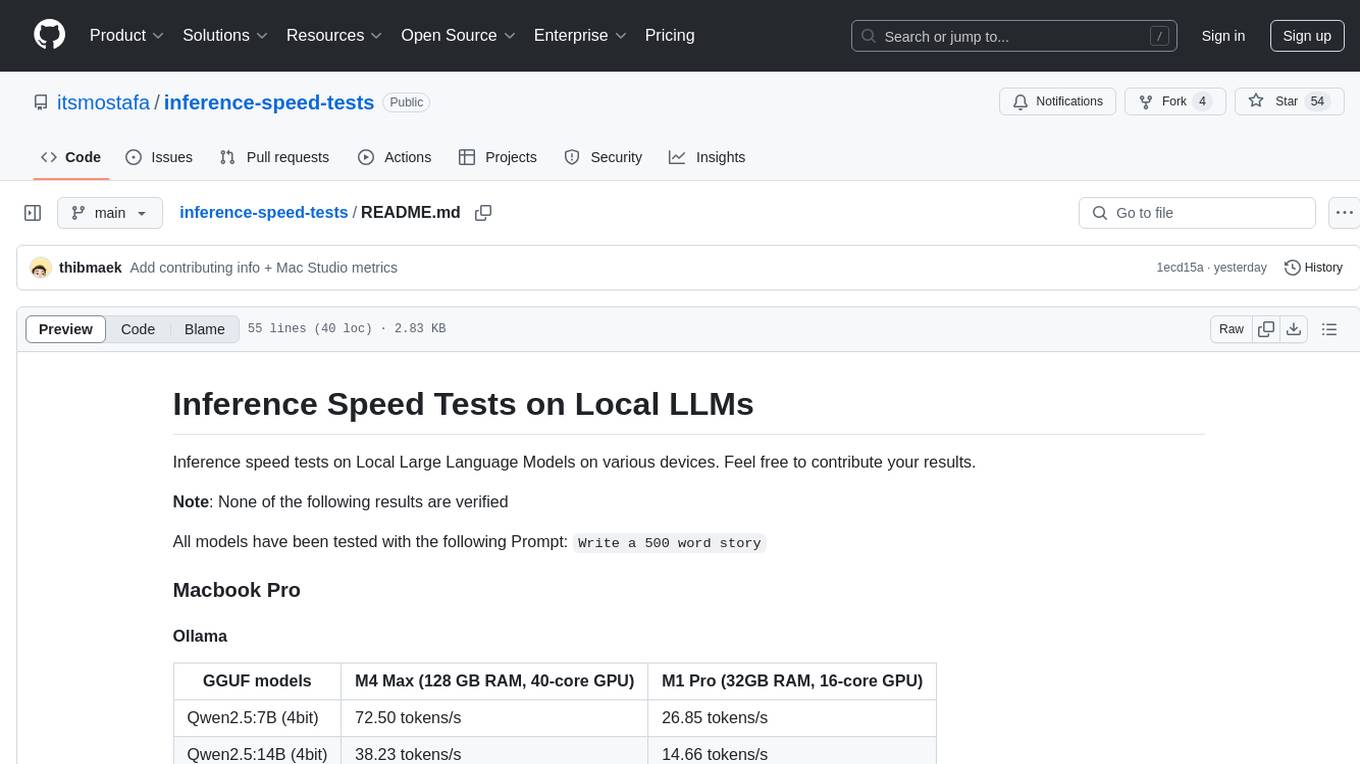

inference-speed-tests

This repository contains inference speed tests of local large language models on various devices, with results for different models on a MacBook Pro and a Mac Studio. Users can contribute their own results by running models with the provided prompt and adding the tokens-per-second output. Note that the results are not verified.
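Tokens per second is simply the number of generated tokens divided by the wall-clock generation time. The sketch below shows that calculation plus a timing helper; `generate` is a hypothetical stand-in for whatever local runtime you benchmark (not something the repository provides), and this simple version counts prompt processing and generation together, which some tools report separately.

```python
import time
from typing import Callable, Iterable


def tokens_per_second(num_tokens: int, elapsed_seconds: float) -> float:
    """Throughput = generated tokens / elapsed wall-clock seconds."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return num_tokens / elapsed_seconds


def measure(generate: Callable[[str], Iterable[str]], prompt: str) -> float:
    """Time a token-streaming generator and return its tokens/s.

    `generate` is a hypothetical callable yielding one token at a time.
    """
    start = time.perf_counter()
    count = sum(1 for _ in generate(prompt))
    elapsed = time.perf_counter() - start
    return tokens_per_second(count, elapsed)


print(tokens_per_second(256, 8.0))  # 32.0
```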

uzu

uzu is a high-performance inference engine for AI models on Apple Silicon. It features a simple, high-level API, a hybrid architecture for GPU kernel computation, unified model configurations, traceable computations, and use of the unified memory on Apple devices. The tool provides a CLI mode for running models, uses its own model format, and offers prebuilt Swift and TypeScript bindings. To get started, users add the uzu dependency to their Cargo.toml and create an inference Session with a specific model and configuration. Performance benchmarks on Apple M2 report tokens/s for various models alongside llama.cpp at bf16/f16 precision.

20 - OpenAI GPTs

Kvaser - C&C Adventure Module Assistant

Adventure and encounter assistant for the game of Creatures & Chronicles

Consulting & Investment Banking Interview Prep GPT

Run mock interviews, review content and get tips to ace strategy consulting and investment banking interviews

Dungeon Master's Assistant

Your new DM screen: helping Dungeon Masters craft and run amazing D&D adventures.

Database Builder

Hosts a real SQLite database and helps you create tables, make schema changes, and run SQL queries, ideal for all levels of database administration.
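The three operations this GPT covers (creating tables, changing a schema, and running queries) can be tried directly with Python's built-in `sqlite3` module. This is an illustration of those SQLite operations, not of how the GPT works internally; the table and sample rows are made up.

```python
import sqlite3

# In-memory database so the example leaves nothing on disk.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# 1. Create a table.
cur.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

# 2. Make a schema change: add a column to the existing table.
cur.execute("ALTER TABLE customers ADD COLUMN city TEXT")

# 3. Insert hypothetical rows, then run a parameterized query.
cur.executemany(
    "INSERT INTO customers (name, city) VALUES (?, ?)",
    [("Ada", "London"), ("Grace", "New York"), ("Linus", "London")],
)
conn.commit()

rows = cur.execute(
    "SELECT name FROM customers WHERE city = ? ORDER BY name", ("London",)
).fetchall()
print(rows)  # [('Ada',), ('Linus',)]

# PRAGMA table_info lists the columns, confirming the ALTER TABLE took effect.
columns = [row[1] for row in cur.execute("PRAGMA table_info(customers)")]
print(columns)  # ['id', 'name', 'city']
```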

Restaurant Startup Guide

Meet the Restaurant Startup Guide GPT: your friendly guide in the restaurant biz. It offers casual, approachable advice to help you start and run your own restaurant with ease.

Community Design™

A community-building GPT based on the wildly popular Community Design™ framework from Mighty Networks. Start creating communities that run themselves.

Code Helper for Web Application Development

Friendly web assistant for efficient code. Ask the wizard to create an application and you will get the HTML, CSS, and JavaScript code ready to run your web application.

Creative Director GPT

I'm your brainstorm muse in marketing and advertising; the creativity machine you need to sharpen the skills, land the job, generate the ideas, win the pitches, build the brands, ace the awards, or even run your own agency. Psst... don't let your clients find out about me! 😉

Pace Assistant

Provides running splits for Strava Routes, accounting for distance and elevation changes