QodeAssist

QodeAssist is an AI-powered coding assistant plugin for Qt Creator

Stars: 252

QodeAssist is an AI-powered coding assistant plugin for Qt Creator, offering intelligent code completion and suggestions for C++ and QML. It uses large language models, served through providers such as Ollama, to enhance coding productivity with context-aware AI assistance directly in the Qt development environment. The plugin supports multiple LLM providers, an extensive library of model-specific templates, and easy configuration.

README:

QodeAssist is an AI-powered coding assistant plugin for Qt Creator. It provides intelligent code completion and suggestions for C++ and QML, leveraging large language models through local providers like Ollama. Enhance your coding productivity with context-aware AI assistance directly in your Qt development environment.

When using paid providers like Claude, OpenRouter or OpenAI-compatible services:

- These services will consume API tokens which may result in charges to your account

- The QodeAssist developer bears no responsibility for any charges incurred

- Please carefully review the provider's pricing and your account settings before use

- Overview

- Install plugin to QtCreator

- Configure for Anthropic Claude

- Configure for OpenAI

- Configure for Mistral AI

- Configure for Google AI

- Configure for Ollama

- Configure for llama.cpp

- System Prompt Configuration

- File Context Feature

- Quick Refactoring Feature

- QtCreator Version Compatibility

- Development Progress

- Hotkeys

- Ignoring Files

- Troubleshooting

- Support the Development

- How to Build

Overview

- AI-powered code completion

- Sharing files opened in the IDE with the model context (disabled by default; must be enabled in settings)

- Quick code refactoring via a fast chat command and opened files

- Chat functionality:

  - Side and bottom panels (must be enabled in chat settings; kept off for stability reasons because of a QQuickWidget problem)

  - Chat in an additional popup window with pinning (recommended)

  - Chat history autosave and restore

  - Token usage monitoring and management

  - Attach files for one-time code analysis

  - Link files for persistent context with automatic updates in conversations

  - Automatic syncing with open editor files (optional)

- Support for multiple LLM providers:

  - Ollama

  - llama.cpp

  - OpenAI

  - Anthropic Claude

  - LM Studio

  - Mistral AI

  - Google AI

  - OpenAI-compatible providers (e.g., llama.cpp, https://openrouter.ai)

- Extensive library of model-specific templates

- Easy configuration and model selection

Join our Discord Community: Have questions or want to discuss QodeAssist? Join our Discord server to connect with other users and get support!

Install plugin to QtCreator

- Install the latest Qt Creator

- Download the QodeAssist plugin for your Qt Creator version

- If an older version of the plugin is already installed, remove it:

  - on macOS for Qt Creator 16: ~/Library/Application Support/QtProject/Qt Creator/plugins/16.0.0/petrmironychev.qodeassist

  - on Windows for Qt Creator 16: C:\Users\<user>\AppData\Local\QtProject\qtcreator\plugins\16.0.0\petrmironychev.qodeassist\lib\qtcreator\plugins

- Launch Qt Creator and install the plugin:

  - Go to:

    - macOS: Qt Creator -> About Plugins...

    - Windows/Linux: Help -> About Plugins...

  - Click on "Install Plugin..."

  - Select the downloaded QodeAssist plugin archive file

Configure for Anthropic Claude

- Open Qt Creator settings and navigate to the QodeAssist section

- Go to the Provider Settings tab and configure the Claude API key

- Return to the General tab and configure:

  - Set "Claude" as the provider for code completion and/or the chat assistant

  - Set the Claude URL (https://api.anthropic.com)

  - Select your preferred model (e.g., claude-3-5-sonnet-20241022)

  - Choose the Claude template for code completion and/or chat

Configure for OpenAI

- Open Qt Creator settings and navigate to the QodeAssist section

- Go to the Provider Settings tab and configure the OpenAI API key

- Return to the General tab and configure:

  - Set "OpenAI" as the provider for code completion and/or the chat assistant

  - Set the OpenAI URL (https://api.openai.com)

  - Select your preferred model (e.g., gpt-4o)

  - Choose the OpenAI template for code completion and/or chat

Configure for Mistral AI

- Open Qt Creator settings and navigate to the QodeAssist section

- Go to the Provider Settings tab and configure the Mistral AI API key

- Return to the General tab and configure:

  - Set "Mistral AI" as the provider for code completion and/or the chat assistant

  - Set the Mistral AI URL (https://api.mistral.ai)

  - Select your preferred model (e.g., mistral-large-latest)

  - Choose the Mistral AI template for code completion and/or chat

Configure for Google AI

- Open Qt Creator settings and navigate to the QodeAssist section

- Go to the Provider Settings tab and configure the Google AI API key

- Return to the General tab and configure:

  - Set "Google AI" as the provider for code completion and/or the chat assistant

  - Set the Google AI URL (https://generativelanguage.googleapis.com/v1beta)

  - Select your preferred model (e.g., gemini-2.0-flash)

  - Choose the Google AI template

Configure for Ollama

- Install Ollama. Make sure to review the system requirements before installation.

- Install a language model in Ollama via the terminal. For example, you can run:

For standard computers (minimum 8GB RAM):

ollama run qwen2.5-coder:7b

For better performance (16GB+ RAM):

ollama run qwen2.5-coder:14b

For high-end systems (32GB+ RAM):

ollama run qwen2.5-coder:32b

- Open Qt Creator settings (Edit > Preferences on Linux/Windows, Qt Creator > Preferences on macOS)

- Navigate to the "QodeAssist" tab

- On the "General" page, verify:

  - Ollama is selected as your LLM provider

  - The URL is set to http://localhost:11434

  - Your installed model appears in the model selection

  - The prompt template is Ollama Auto FIM, or Ollama Auto Chat for chat assistance. If the automatic template does not work correctly, select a specific template manually

- Click Apply if you made any changes

You're all set! QodeAssist is now ready to use in Qt Creator.
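To double-check from outside Qt Creator that Ollama is reachable and that your model is installed (the same things the General page should show), you can query Ollama's local HTTP API. The sketch below uses only the Python standard library and assumes Ollama's `/api/tags` endpoint, which lists locally installed models; the function names are illustrative and are not part of QodeAssist.

```python
from __future__ import annotations

import json
import urllib.request

DEFAULT_OLLAMA_URL = "http://localhost:11434"  # default URL from the settings above


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from an /api/tags JSON response."""
    return [model["name"] for model in payload.get("models", [])]


def list_ollama_models(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask a running Ollama server which models are installed locally."""
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_model_names(json.load(response))
```

With Ollama running, `list_ollama_models()` should include a name such as `qwen2.5-coder:7b` if you pulled that model earlier. If the call raises a connection error, Ollama is not listening at the configured URL.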

Configure for llama.cpp

- Open Qt Creator settings and navigate to the QodeAssist section

- Go to the General tab and configure:

  - Set "llama.cpp" as the provider for code completion and/or the chat assistant

  - Set the llama.cpp URL (e.g., http://localhost:8080)

  - Fill in the model name

  - Choose a template for the model (e.g., llama.cpp FIM for any model with FIM support)

System Prompt Configuration

The plugin comes with default system prompts optimized for chat and instruct models, as these currently provide better results for code assistance. If you prefer using FIM (Fill-in-the-Middle) models, you can customize the system prompt in the settings.

File Context Feature

QodeAssist provides two powerful ways to include source code files in your chat conversations: Attachments and Linked Files. Each serves a distinct purpose and helps provide better context for the AI assistant.

Attachments are designed for one-time code analysis and specific queries:

- Files are included only in the current message

- Content is discarded after the message is processed

- Ideal for:

  - Getting specific feedback on code changes

  - Code review requests

  - Analyzing isolated code segments

  - Quick implementation questions

- Files can be attached using the paperclip icon in the chat interface

- Multiple files can be attached to a single message

Linked files provide persistent context throughout the conversation:

- Files remain accessible for the entire chat session

- Content is included in every message exchange

- Files are automatically refreshed, so the latest content from disk is always used

- Perfect for:

  - Long-term refactoring discussions

  - Complex architectural changes

  - Multi-file implementations

  - Maintaining context across related questions

- Can be managed using the link icon in the chat interface

- Supports automatic syncing with open editor files (can be enabled in settings)

- Files can be added or removed at any time during the conversation
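The difference between the two mechanisms comes down to when file contents are read and how long they stay in the conversation. The Python sketch below models that behavior with a hypothetical `ChatContext` class; the class, its method names, and the message layout are illustrative assumptions, not QodeAssist's internal API (the plugin itself is written in C++).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class ChatContext:
    """Illustrative model of attachments vs. linked files (not QodeAssist's code)."""

    linked_files: set[Path] = field(default_factory=set)

    def link(self, path: Path) -> None:
        self.linked_files.add(path)

    def unlink(self, path: Path) -> None:
        self.linked_files.discard(path)

    def build_message(self, text: str, attachments: list[Path] | None = None) -> str:
        parts = [text]
        # Linked files are re-read from disk for every message, so edits are picked up.
        for path in sorted(self.linked_files):
            parts.append(f"Linked file {path.name}:\n{path.read_text()}")
        # Attachments exist only for this call; nothing about them is stored.
        for path in attachments or []:
            parts.append(f"Attached file {path.name}:\n{path.read_text()}")
        return "\n\n".join(parts)
```

In this model, an attached file appears in exactly one message, while a linked file appears in every message with whatever content is on disk at that moment.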

Quick Refactoring Feature

Since Quick Refactor is essentially a small chat with redirected output, the main provider, model, and template settings are taken from the chat settings.

The request to the model consists of the instruction, the selected code, and the cursor position. If the text field is empty, the default instruction is sent: "Refactor the code to improve its quality and maintainability." There are also buttons for quickly calling instructions:

- Repeat the latest instruction (activates after the first request is sent in the current Qt Creator session)

- Improve the currently selected code

- Suggest an alternative variant of the selected code

- Other instructions [TBD]
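The request flow described above (instruction plus selected code plus cursor position, a default instruction when the field is empty, and a "repeat" button that only works after a first request) can be sketched as follows. `RefactorRequest` and `QuickRefactor` are hypothetical names used for illustration; the plugin's actual request format is not documented here.

```python
from __future__ import annotations

from dataclasses import dataclass

# Default instruction quoted from the section above.
DEFAULT_INSTRUCTION = "Refactor the code to improve its quality and maintainability."


@dataclass
class RefactorRequest:
    """Hypothetical shape of a Quick Refactor request (illustration only)."""

    instruction: str
    selected_code: str
    cursor_line: int
    cursor_column: int


class QuickRefactor:
    def __init__(self) -> None:
        # "Repeat latest instruction" has nothing to repeat until a request is sent.
        self.last_instruction: str | None = None

    def build(self, typed: str, selected_code: str, line: int, column: int) -> RefactorRequest:
        # An empty (or whitespace-only) text field falls back to the default instruction.
        instruction = typed.strip() or DEFAULT_INSTRUCTION
        self.last_instruction = instruction
        return RefactorRequest(instruction, selected_code, line, column)

    def repeat(self, selected_code: str, line: int, column: int) -> RefactorRequest:
        if self.last_instruction is None:
            raise RuntimeError("No instruction has been sent in this session yet")
        return RefactorRequest(self.last_instruction, selected_code, line, column)
```

Keeping `last_instruction` on a per-session object mirrors the note that the repeat button only activates after the first request in a Qt Creator session.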

QtCreator Version Compatibility

- Qt Creator 17.0.0: QodeAssist 0.6.0 to 0.x.x

- Qt Creator 16.0.2: QodeAssist 0.5.13 to 0.x.x

- Qt Creator 16.0.1: QodeAssist 0.5.7 to 0.5.13

- Qt Creator 16.0.0: QodeAssist 0.5.2 to 0.5.6

- Qt Creator 15.0.1: QodeAssist 0.4.8 to 0.5.1

- Qt Creator 15.0.0: QodeAssist 0.4.0 to 0.4.7

- Qt Creator 14.0.2: QodeAssist 0.2.3 to 0.3.x

- Qt Creator 14.0.1: QodeAssist 0.2.2 and below

Development Progress

- [x] Basic plugin with code autocomplete functionality

- [x] Improve and automate settings

- [x] Add chat functionality

- [x] Sharing diff with model

- [ ] Sharing project source with model

- [ ] Support for more providers and models

- [ ] Support MCP

Hotkeys

All hotkeys can be changed in Qt Creator settings. The default hotkeys are:

- To open the LLM chat in a separate window:

  - on Mac: Option + Command + W

  - on Windows: Ctrl + Alt + W

  - on Linux: Ctrl + Alt + W

- To close the LLM chat in a separate window:

  - on Mac: Option + Command + S

  - on Windows: Ctrl + Alt + S

  - on Linux: Ctrl + Alt + S

- To manually request a suggestion (this can also be changed in settings):

  - on Mac: Option + Command + Q

  - on Windows: Ctrl + Alt + Q

  - on Linux with KDE Plasma: Ctrl + Alt + Q

- To insert the full suggestion, use the Tab key

- To insert one word of the suggestion, use Alt + Right Arrow on Windows/Linux, or Option + Right Arrow on Mac

- To open the Quick Refactor dialog, select some code or place the cursor and press:

  - on Mac: Option + Command + R

  - on Windows: Ctrl + Alt + R

  - on Linux with KDE Plasma: Ctrl + Alt + R

Ignoring Files

QodeAssist can ignore files in context using a .qodeassistignore file. This lets you exclude specific files from the context during code completion and in the chat assistant, which is especially useful for large projects.

- Create a .qodeassistignore file in the root directory of your project, next to CMakeLists.txt or the .pro file.

- Add patterns for files and directories that should be excluded from the context.

- QodeAssist will automatically detect this file and apply the exclusion rules.

The file format is similar to .gitignore:

- Each pattern is written on a separate line

- Empty lines are ignored

- Lines starting with # are considered comments

- Standard wildcards work the same as in .gitignore

- To negate a pattern, use ! at the beginning of the line

Example .qodeassistignore file:

# Ignore all files in the build directory

/build

*.tmp

# Ignore a specific file

src/generated/autogen.cpp
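The matching rules listed above can be approximated in a few lines. This is a simplified sketch assuming gitignore-like semantics: later rules override earlier ones, `!` re-includes a path, a pattern with a leading or inner `/` is matched against the path from the project root, and other patterns match a file or directory name at any depth. It is not QodeAssist's actual matcher and does not reproduce every .gitignore rule (for example, `*` here can also match across `/`).

```python
from __future__ import annotations

from fnmatch import fnmatchcase


def parse_ignore_file(text: str) -> list[tuple[str, bool]]:
    """Return (pattern, negated) pairs, skipping blank lines and # comments."""
    rules = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        negated = line.startswith("!")
        rules.append((line[1:] if negated else line, negated))
    return rules


def _matches(pattern: str, path: str) -> bool:
    anchored = pattern.startswith("/") or "/" in pattern.rstrip("/")
    pattern = pattern.strip("/")
    parts = path.split("/")
    # Test the path and each parent directory, so "/build" also covers build/main.o.
    for end in range(1, len(parts) + 1):
        if anchored:
            if fnmatchcase("/".join(parts[:end]), pattern):
                return True
        elif fnmatchcase(parts[end - 1], pattern):
            return True
    return False


def is_ignored(path: str, rules: list[tuple[str, bool]]) -> bool:
    """Apply rules in order; the last matching rule decides, and '!' re-includes."""
    ignored = False
    for pattern, negated in rules:
        if _matches(pattern, path):
            ignored = not negated
    return ignored
```

With the example file above, `build/main.o`, `src/a/cache.tmp`, and `src/generated/autogen.cpp` are ignored, while `src/build/x.o` is not, because `/build` is anchored to the project root.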

Troubleshooting

If QodeAssist is having problems connecting to the LLM provider, please check the following:

- Verify the IP address and port:

  - For Ollama, the default is usually http://localhost:11434

  - For LM Studio, the default is usually http://localhost:1234

- Confirm that the selected model and template are compatible:

  - Ensure you've chosen the correct model in the "Select Models" option

  - Verify that the selected prompt template matches the model you're using

- On Linux, the prebuilt binaries support only Ubuntu 22.04+ or similar distributions. If you need compatibility with another OS, you have to build the plugin manually. Our experiments and resolution are documented here: https://github.com/Palm1r/QodeAssist/issues/48
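For the first check (IP address and port), a quick probe outside Qt Creator can tell you whether anything is listening at the configured URL. This is a minimal standard-library sketch; `endpoint_reachable` and `DEFAULT_URLS` are illustrative names, and the URLs are simply the defaults quoted above.

```python
from __future__ import annotations

import socket
from urllib.parse import urlsplit

# Default endpoints quoted in the troubleshooting list above.
DEFAULT_URLS = {
    "Ollama": "http://localhost:11434",
    "LM Studio": "http://localhost:1234",
}


def endpoint_reachable(url: str, timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections at the URL's host and port."""
    parts = urlsplit(url)
    port = parts.port or (443 if parts.scheme == "https" else 80)
    try:
        with socket.create_connection((parts.hostname, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, looping over `DEFAULT_URLS` and printing `endpoint_reachable(url)` for each entry gives a quick yes/no per provider. A successful connection only proves that something is listening on that port; it does not confirm that the server is Ollama or LM Studio, or that the selected model is loaded.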

If you're still experiencing issues with QodeAssist, you can try resetting the settings to their default values:

- Open Qt Creator settings

- Navigate to the "QodeAssist" tab

- Select the settings page you want to reset

- Click the "Reset Page to Defaults" button

- Note that the API key is not reset

- Select your model again after the reset

Support the Development

If you find QodeAssist helpful, there are several ways you can support the project:

- Report Issues: If you encounter any bugs or have suggestions for improvements, please open an issue on our GitHub repository.

- Contribute: Feel free to submit pull requests with bug fixes or new features.

- Spread the Word: Star our GitHub repository and share QodeAssist with your fellow developers.

- Financial Support: If you'd like to support the development financially, you can make a donation using one of the following:

  - Bitcoin (BTC): bc1qndq7f0mpnlya48vk7kugvyqj5w89xrg4wzg68t

  - Ethereum (ETH): 0xA5e8c37c94b24e25F9f1f292a01AF55F03099D8D

  - Litecoin (LTC): ltc1qlrxnk30s2pcjchzx4qrxvdjt5gzuervy5mv0vy

  - USDT (TRC20): THdZrE7d6epW6ry98GA3MLXRjha1DjKtUx

Every contribution, no matter how small, is greatly appreciated and helps keep the project alive!

How to Build

Create a build directory and run:

cmake -DCMAKE_PREFIX_PATH=<path_to_qtcreator> -DCMAKE_BUILD_TYPE=RelWithDebInfo <path_to_plugin_source>

cmake --build .

where <path_to_qtcreator> is the relative or absolute path to a Qt Creator build directory, or to a combined binary and development package (Windows / Linux), or to the Qt Creator.app/Contents/Resources/ directory of a combined binary and development package (macOS), and <path_to_plugin_source> is the relative or absolute path to this plugin directory.

QML code style: preferably follow the guidelines at https://github.com/Furkanzmc/QML-Coding-Guide (thanks to @Furkanzmc for collecting them). C++ code style: use the .clang-format file in the project.


Alternative AI tools for QodeAssist

Similar Open Source Tools


langgraph-mcp-agents

LangGraph Agent with MCP is a toolkit provided by LangChain AI that enables AI agents to interact with external tools and data sources through the Model Context Protocol (MCP). It offers a user-friendly interface for deploying ReAct agents to access various data sources and APIs through MCP tools. The toolkit includes features such as a Streamlit Interface for interaction, Tool Management for adding and configuring MCP tools dynamically, Streaming Responses in real-time, and Conversation History tracking.

ai-research-assistant

Aria is a Zotero plugin that serves as an AI Research Assistant powered by Large Language Models (LLMs). It offers features like drag-and-drop referencing, autocompletion for creators and tags, visual analysis using GPT-4 Vision, and saving chats as notes and annotations. Aria requires the OpenAI GPT-4 model family and provides a configurable interface through preferences. Users can install Aria by downloading the latest release from GitHub and activating it in Zotero. The tool allows users to interact with Zotero library through conversational AI and probabilistic models, with the ability to troubleshoot errors and provide feedback for improvement.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

BotServer

General Bot is a chat bot server that accelerates bot development by providing code base, resources, deployment to the cloud, and templates for creating new bots. It allows modification of bot packages without code through a database and service backend. Users can develop bot packages using custom code in editors like Visual Studio Code, Atom, or Brackets. The tool supports creating bots by copying and pasting files and using favorite tools from Office or Photoshop. It also enables building custom dialogs with BASIC for extending bots.

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include: 1. Workflow: Build and test powerful AI workflows on a visual canvas. 2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions. 3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features. 4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval. 5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools. 6. LLMOps: Monitor and analyze application logs and performance over time. 7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

Stable-Diffusion-Android

Stable Diffusion AI is an easy-to-use app for generating images from text or other images. It allows communication with servers powered by various AI technologies like AI Horde, Hugging Face Inference API, OpenAI, StabilityAI, and LocalDiffusion. The app supports Txt2Img and Img2Img modes, positive and negative prompts, dynamic size and sampling methods, unique seed input, and batch image generation. Users can also inpaint images, select faces from gallery or camera, and export images. The app offers settings for server URL, SD Model selection, auto-saving images, and clearing cache.

Delphi-AI-Developer

Delphi AI Developer is a plugin that enhances the Delphi IDE with AI capabilities from OpenAI, Gemini, and Groq APIs. It assists in code generation, refactoring, and speeding up development by providing code suggestions and predefined questions. Users can interact with AI chat and databases within the IDE, customize settings, and access documentation. The plugin is open-source and under the MIT License.

kalavai-client

Kalavai is an open-source platform that transforms everyday devices into an AI supercomputer by aggregating resources from multiple machines. It facilitates matchmaking of resources for large AI projects, making AI hardware accessible and affordable. Users can create local and public pools, connect with the community's resources, and share computing power. The platform aims to be a management layer for research groups and organizations, enabling users to unlock the power of existing hardware without needing a devops team. Kalavai CLI tool helps manage both versions of the platform.

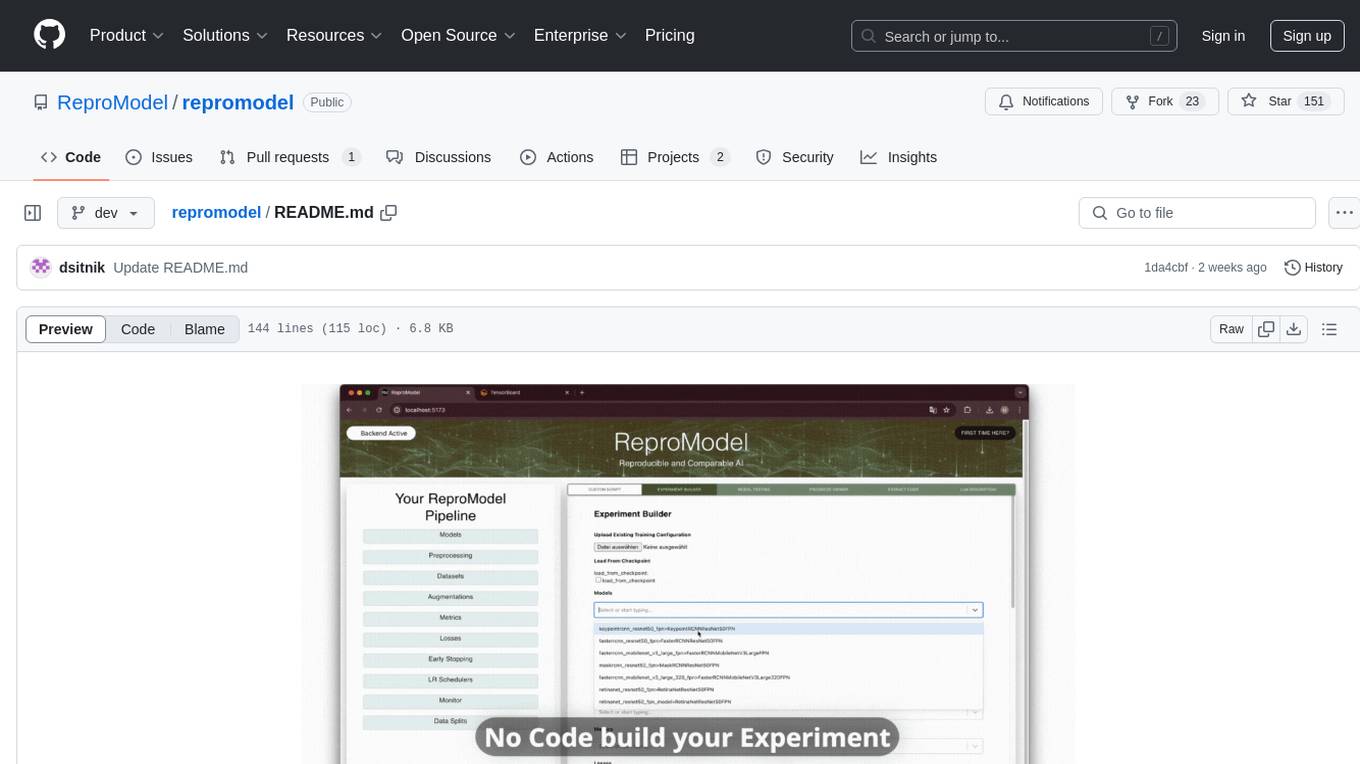

repromodel

ReproModel is an open-source toolbox designed to boost AI research efficiency by enabling researchers to reproduce, compare, train, and test AI models faster. It provides standardized models, dataloaders, and processing procedures, allowing researchers to focus on new datasets and model development. With a no-code solution, users can access benchmark and SOTA models and datasets, utilize training visualizations, extract code for publication, and leverage an LLM-powered automated methodology description writer. The toolbox helps researchers modularize development, compare pipeline performance reproducibly, and reduce time for model development, computation, and writing. Future versions aim to facilitate building upon state-of-the-art research by loading previously published study IDs with verified code, experiments, and results stored in the system.

docq

Docq is a private and secure GenAI tool designed to extract knowledge from business documents, enabling users to find answers independently. It allows data to stay within organizational boundaries, supports self-hosting with various cloud vendors, and offers multi-model and multi-modal capabilities. Docq is extensible, open-source (AGPLv3), and provides commercial licensing options. The tool aims to be a turnkey solution for organizations to adopt AI innovation safely, with plans for future features like more data ingestion options and model fine-tuning.

arbigent

Arbigent (Arbiter-Agent) is an AI agent testing framework designed to make AI agent testing practical for modern applications. It addresses challenges faced by traditional UI testing frameworks and AI agents by breaking down complex tasks into smaller, dependent scenarios. The framework is customizable for various AI providers, operating systems, and form factors, empowering users with extensive customization capabilities. Arbigent offers an intuitive UI for scenario creation and a powerful code interface for seamless test execution. It supports multiple form factors, optimizes UI for AI interaction, and is cost-effective by utilizing models like GPT-4o mini. With a flexible code interface and open-source nature, Arbigent aims to revolutionize AI agent testing in modern applications.

gptme

GPTMe is a tool that allows users to interact with an LLM assistant directly in their terminal in a chat-style interface. The tool provides features for the assistant to run shell commands, execute code, read/write files, and more, making it suitable for various development and terminal-based tasks. It serves as a local alternative to ChatGPT's 'Code Interpreter,' offering flexibility and privacy when using a local model. GPTMe supports code execution, file manipulation, context passing, self-correction, and works with various AI models like GPT-4. It also includes a GitHub Bot for requesting changes and operates entirely in GitHub Actions. In progress features include handling long contexts intelligently, a web UI and API for conversations, web and desktop vision, and a tree-based conversation structure.

colors_ai

Colors AI is a cross-platform color scheme generator that uses deep learning from public API providers. It is available for all mainstream operating systems, including mobile. Features:

- Choose from open APIs, with the ability to set up custom settings

- Export section with many export formats to save or clipboard copy

- URL providers to other static color generators

- Localized to several languages

- Dark and light theme

- Material Design 3

- Data encryption

- Accessibility

- And much more

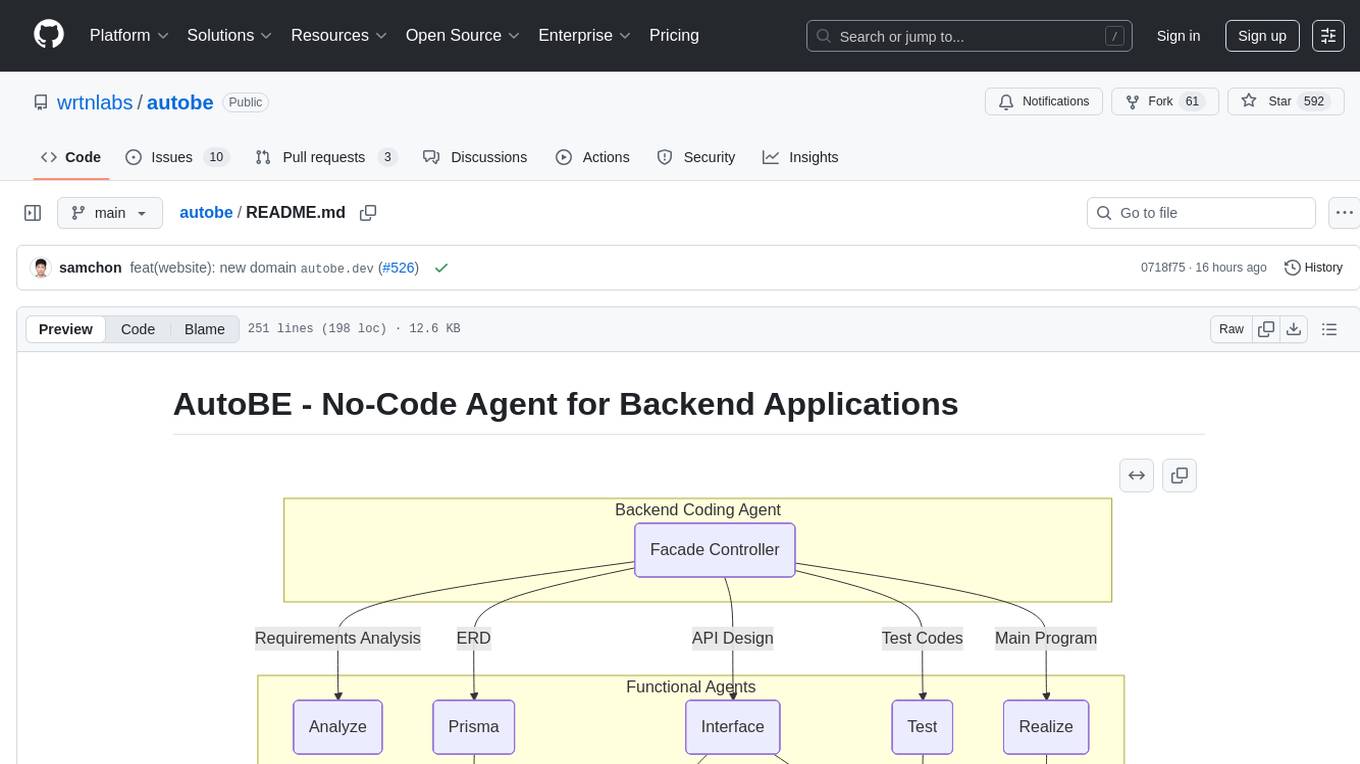

autobe

AutoBE is an AI-powered no-code agent that builds backend applications, enhanced by compiler feedback. It automatically generates backend applications using TypeScript, NestJS, and Prisma following a waterfall development model. The generated code is validated by review agents and OpenAPI/TypeScript/Prisma compilers, ensuring 100% working code. The tool aims to enable anyone to build backend servers, AI chatbots, and frontend applications without coding knowledge by conversing with AI.

For similar tasks


monacopilot

Monacopilot is a powerful and customizable AI auto-completion plugin for the Monaco Editor. It supports multiple AI providers such as Anthropic, OpenAI, Groq, and Google, providing real-time code completions with an efficient caching system. The plugin offers context-aware suggestions, customizable completion behavior, and framework agnostic features. Users can also customize the model support and trigger completions manually. Monacopilot is designed to enhance coding productivity by providing accurate and contextually appropriate completions in daily spoken language.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.