ai-assisted-devops

Learn how DevOps engineers can use generative AI to improve their productivity in day-to-day tasks.

Stars: 204

AI-Assisted DevOps is a 10-day course focusing on integrating artificial intelligence (AI) technologies into DevOps practices. The course covers various topics such as AI for observability, incident response, CI/CD pipeline optimization, security, and FinOps. Participants will learn about running large language models (LLMs) locally, making API calls, AI-powered shell scripting, and using AI agents for self-healing infrastructure. Hands-on activities include creating GitHub repositories with bash scripts, generating Docker manifests, predicting server failures using AI, and building AI agents to monitor deployments. The course culminates in a capstone project where learners implement AI-assisted DevOps automation and receive peer feedback on their projects.

README:

- Why AI is a game-changer for DevOps

- Overview of Generative AI and LLMs (without deep theory)

- Popular AI tools for DevOps Engineers

- Hands-on: Create a GitHub repository that contains a Bash script. When executed, the script checks the health of a virtual machine by examining parameters such as CPU, disk space, and memory. The script should also support a command-line argument named "explain" that, when passed, prints a detailed summary of the health status.

- Try the hands-on demonstration explained in the video.

- Fundamentals: Tokens, temperature and max tokens.

- Techniques: Zero-shot, few-shot, n-shot, and Chain-of-Thought (CoT) prompting

- Writing structured prompts for DevOps use cases

- AI-generated regex, Bash scripts, Terraform, and CI/CD configurations

- Live Demo: Demonstrate an example of few-shot prompting in real time.

- Running LLMs locally (Ollama, LM Studio, GPT4All)

- Calling AI via APIs (OpenAI, Mistral, Llama, DeepSeek, etc.)

- Python script to invoke the Ollama API

- Dockerfile generation: "Call the Ollama endpoint to auto-generate Docker manifests using the llama3 model"

- "Call an AI API to auto-generate Kubernetes manifests using the llama3 model"

- Using AI to improve Bash/Python scripting

- AI-assisted Shell Scripting

- Mini-Challenge: "Generate a shell script to create VPC in AWS with all the best practices"

- Introduction to AIOps

- What AIOps is and what it is not

- AI-powered monitoring with Enterprise Observability Platforms

- AIOps recap

- AI-powered Log Analysis

- Using AI for anomaly detection (Python)

- Demo: "Use AI to predict server failures or app failures based on logs"

- AI-powered automation in Jenkins, GitHub Actions, GitLab CI/CD

- AI-assisted YAML validation and error fixing

- Mini-Challenge: "Generate a GitHub Actions pipeline using AI and debug an error"

- What are AI Agents? How do they work?

- AI-powered self-healing infrastructure

- Project: Build a simple AI agent that monitors a deployment and suggests fixes

- AI-assisted vulnerability scanning (Trivy, Snyk, Checkov)

- AI-powered cloud cost optimization (FinOps)

- AI-generated compliance reports (CIS, NIST, PCI-DSS)

- Mini-Challenge: "Use AI to scan a container image for vulnerabilities"

- Live Demo: Running an AI-powered cloud cost analysis

- AI trends in DevOps (AI-powered SRE, AIOps, FinOps)

- Final Capstone Project: Implement an AI-assisted DevOps automation

- Peer Review: Learners give feedback on each other's projects
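
Two of the syllabus items above, few-shot prompting and the Python script that invokes the Ollama API, can be sketched together. This is a minimal illustration, not the course's own script: it assumes Ollama is serving on its default local port with the `llama3` model already pulled, and the helper names (`build_few_shot_prompt`, `build_payload`, `generate`) and the example pairs are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"

# Hypothetical worked examples for few-shot prompting: (task, answer) pairs.
FEW_SHOT_EXAMPLES = [
    ("Base image for a Python 3.12 app", "FROM python:3.12-slim"),
    ("Base image for a Node.js 20 app", "FROM node:20-alpine"),
]


def build_few_shot_prompt(task, examples=FEW_SHOT_EXAMPLES):
    """Prepend worked examples so the model can infer the answer format."""
    lines = ["Answer with a single Dockerfile instruction.", ""]
    for question, answer in examples:
        lines += [f"Task: {question}", f"Answer: {answer}", ""]
    # The real task comes last, with the answer left open for the model.
    lines += [f"Task: {task}", "Answer:"]
    return "\n".join(lines)


def build_payload(prompt, model="llama3", temperature=0.2, max_tokens=200):
    """Build the JSON body for a non-streaming generate request."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a stream
        "options": {"temperature": temperature, "num_predict": max_tokens},
    }


def generate(prompt, url=OLLAMA_URL):
    """POST the prompt to Ollama and return the generated text."""
    data = json.dumps(build_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["response"]
```

With the server running, `print(generate(build_few_shot_prompt("Base image for a Go 1.22 app")))` would send the prompt and print the model's reply. The low temperature and the `num_predict` cap correspond to the "temperature" and "max tokens" fundamentals listed earlier in the syllabus.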

Alternative AI tools for ai-assisted-devops

Similar Open Source Tools

aigne-hub

AIGNE Hub is a unified AI gateway that manages connections to multiple LLM and AIGC providers, eliminating the complexity of handling API keys, usage tracking, and billing across different AI services. It provides self-hosting capabilities, multi-provider management, unified security, usage analytics, flexible billing, and seamless integration with the AIGNE framework. The tool supports various AI providers and deployment scenarios, catering to both enterprise self-hosting and service provider modes. Users can easily deploy and configure AI providers, enable billing, and utilize core capabilities such as chat completions, image generation, embeddings, and RESTful APIs. AIGNE Hub ensures secure access, encrypted API key management, user permissions, and audit logging. Built with modern technologies like AIGNE Framework, Node.js, TypeScript, React, SQLite, and Blocklet for cloud-native deployment.

ai-performance-engineering

This repository is a comprehensive resource for AI Systems Performance Engineering, providing code examples, tools, and resources for GPU optimization, distributed training, inference scaling, and performance tuning. It covers a wide range of topics such as performance tuning mindset, system architecture, GPU programming, memory optimization, and the latest profiling tools. The focus areas include GPU architecture, PyTorch, CUDA programming, distributed training, memory optimization, and multi-node scaling strategies.

Zettelgarden

Zettelgarden is a human-centric, open-source personal knowledge management system that helps users develop and maintain their understanding of the world. It focuses on creating and connecting atomic notes, thoughtful AI integration, and scalability from personal notes to company knowledge bases. The project is actively evolving, with features subject to change based on community feedback and development priorities.

pipecat-examples

Pipecat-examples is a collection of example applications built with Pipecat, an open-source framework for building voice and multimodal AI applications. It includes various examples demonstrating telephony & voice calls, web & client applications, realtime APIs, multimodal & creative solutions, translation & localization tasks, support, educational & specialized use cases, advanced features, deployment & infrastructure setups, monitoring & analytics tools, and testing & development scenarios.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

AionUi

AionUi is a user interface library for building modern and responsive web applications. It provides a set of customizable components and styles to create visually appealing user interfaces. With AionUi, developers can easily design and implement interactive web interfaces that are both functional and aesthetically pleasing. The library is built using the latest web technologies and follows best practices for performance and accessibility. Whether you are working on a personal project or a professional application, AionUi can help you streamline the UI development process and deliver a seamless user experience.

finite-monkey-engine

FiniteMonkey is an advanced vulnerability mining engine powered purely by GPT, requiring no prior knowledge base or fine-tuning. Its effectiveness significantly surpasses most current related research approaches. The tool is task-driven, prompt-driven, and focuses on prompt design, leveraging 'deception' and hallucination as key mechanics. It has helped identify vulnerabilities worth over $60,000 in bounties. The tool requires PostgreSQL database, OpenAI API access, and Python environment for setup. It supports various languages like Solidity, Rust, Python, Move, Cairo, Tact, Func, Java, and Fake Solidity for scanning. FiniteMonkey is best suited for logic vulnerability mining in real projects, not recommended for academic vulnerability testing. GPT-4-turbo is recommended for optimal results with an average scan time of 2-3 hours for medium projects. The tool provides detailed scanning results guide and implementation tips for users.

kitchenai

KitchenAI is an open-source toolkit designed to simplify AI development by serving as an AI backend and LLMOps solution. It aims to empower developers to focus on delivering results without being bogged down by AI infrastructure complexities. With features like simplifying AI integration, providing an AI backend, and empowering developers, KitchenAI streamlines the process of turning AI experiments into production-ready APIs. It offers built-in LLMOps features, is framework-agnostic and extensible, and enables faster time-to-production. KitchenAI is suitable for application developers, AI developers & data scientists, and platform & infra engineers, allowing them to seamlessly integrate AI into apps, deploy custom AI techniques, and optimize AI services with a modular framework. The toolkit eliminates the need to build APIs and infrastructure from scratch, making it easier to deploy AI code as production-ready APIs in minutes. KitchenAI also provides observability, tracing, and evaluation tools, and offers a Docker-first deployment approach for scalability and confidence.

persistent-ai-memory

Persistent AI Memory System is a comprehensive tool that offers persistent, searchable storage for AI assistants. It includes features like conversation tracking, MCP tool call logging, and intelligent scheduling. The system supports multiple databases, provides enhanced memory management, and offers various tools for memory operations, schedule management, and system health checks. It also integrates with various platforms like LM Studio, VS Code, Koboldcpp, Ollama, and more. The system is designed to be modular, platform-agnostic, and scalable, allowing users to handle large conversation histories efficiently.

sparka

Sparka AI is a multi-provider AI chat tool that allows users to access various AI models like Claude, GPT-5, Gemini, and Grok through a single interface. It offers features such as document analysis, image generation, code execution, and research tools without the need for multiple subscriptions. The tool is open-source, production-ready, and provides capabilities for collaboration, secure authentication, attachment support, AI-powered image generation, syntax highlighting, resumable streams, chat branching, chat sharing, deep research, code execution, document creation, and web analytics. Built with modern technologies for scalability and performance, Sparka AI integrates with Vercel AI SDK, tRPC, Drizzle ORM, PostgreSQL, Redis, and AI SDK Gateway.

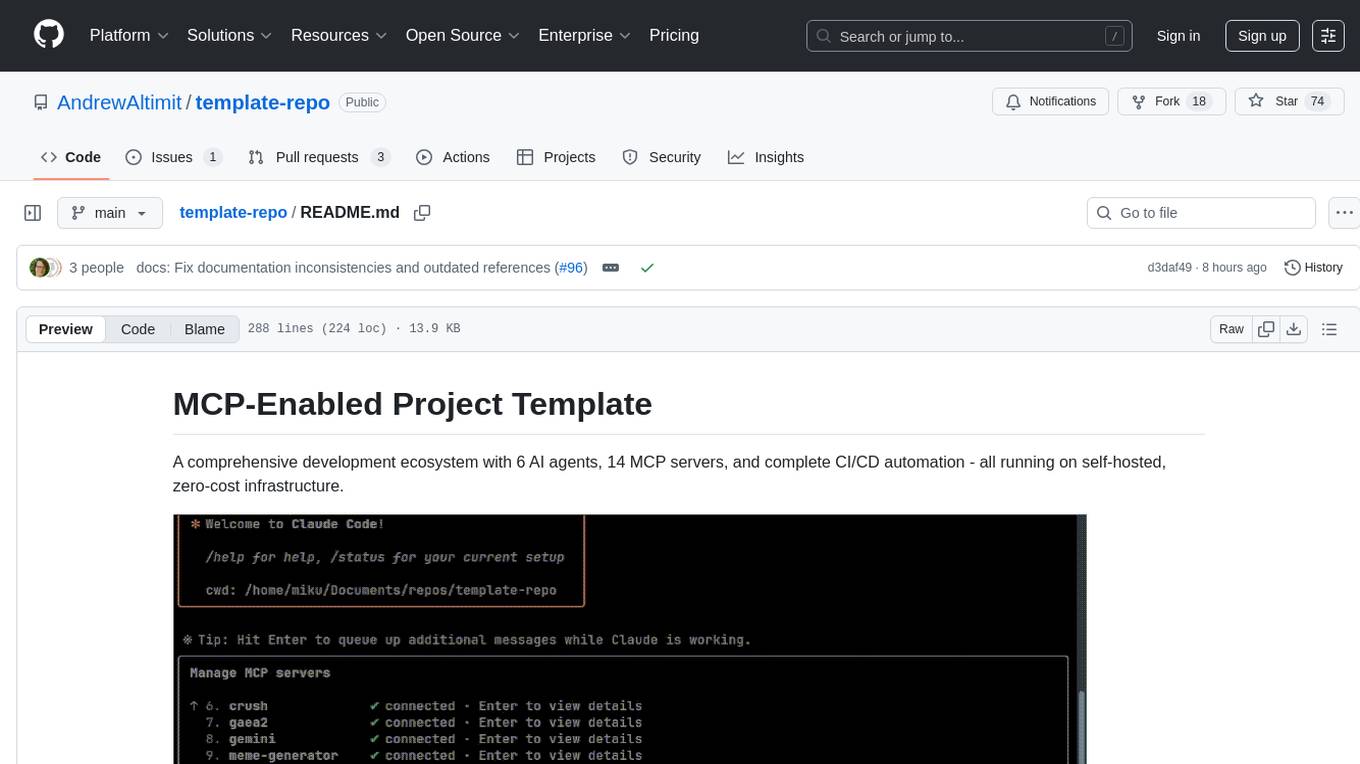

template-repo

The template-repo is a comprehensive development ecosystem with 6 AI agents, 14 MCP servers, and complete CI/CD automation running on self-hosted, zero-cost infrastructure. It follows a container-first approach, with all tools and operations running in Docker containers, zero external dependencies, self-hosted infrastructure, single maintainer design, and modular MCP architecture. The repo provides AI agents for development and automation, features 14 MCP servers for various tasks, and includes security measures, safety training, and sleeper detection system. It offers features like video editing, terrain generation, 3D content creation, AI consultation, image generation, and more, with a focus on maximum portability and consistency.

scrapegraph-sdk

Official SDKs for the ScrapeGraph AI API - Intelligent web scraping and search powered by AI. Extract structured data from any webpage or perform AI-powered web searches with natural language prompts. The SDK offers features such as SmartScraper for data extraction, SearchScraper for AI-powered web search, Markdownify for converting webpages to markdown, SmartCrawler for intelligent crawling, AgenticScraper for automated browser actions, and more. It provides seamless integration with popular frameworks and tools, supports Python and JavaScript SDKs, LLM frameworks, low-code platforms, and offers core features like AI-powered extraction, structured output, multiple data formats, high performance, and enterprise-grade security.

ai-dj

OBSIDIAN-Neural is a real-time AI music generation VST3 plugin designed for live performance. It allows users to type words and instantly receive musical loops, enhancing creative flow. The plugin features an 8-track sampler with MIDI triggering, 4 pages per track for easy variation switching, perfect DAW sync, real-time generation without pre-recorded samples, and stems separation for isolated drums, bass, and vocals. Users can generate music by typing specific keywords and trigger loops with MIDI while jamming. The tool offers different setups for server + GPU, local models for offline use, and a free API option with no setup required. OBSIDIAN-Neural is actively developed and has received over 110 GitHub stars, with ongoing updates and bug fixes. It is dual licensed under GNU Affero General Public License v3.0 and offers a commercial license option for interested parties.

bifrost

Bifrost is a high-performance AI gateway that unifies access to multiple providers through a single OpenAI-compatible API. It offers features like automatic failover, load balancing, semantic caching, and enterprise-grade functionalities. Users can deploy Bifrost in seconds with zero configuration, benefiting from its core infrastructure, advanced features, enterprise and security capabilities, and developer experience. The repository structure is modular, allowing for maximum flexibility. Bifrost is designed for quick setup, easy configuration, and seamless integration with various AI models and tools.

forge

Forge is a free and open-source digital collectible card game (CCG) engine written in Java. It is designed to be easy to use and extend, and it comes with a variety of features that make it a great choice for developers who want to create their own CCGs. Forge is used by a number of popular CCGs, including Ascension, Dominion, and Thunderstone.

For similar tasks

watsonx-ai-samples

Sample notebooks for IBM Watsonx.ai for IBM Cloud and IBM Watsonx.ai software product. The notebooks demonstrate capabilities such as running experiments on model building using AutoAI or Deep Learning, deploying third-party models as web services or batch jobs, monitoring deployments with OpenScale, managing model lifecycles, inferencing Watsonx.ai foundation models, and integrating LangChain with Watsonx.ai. Notebooks with Python code and the Python SDK can be found in the `python_sdk` folder. The REST API examples are organized in the `rest_api` folder.

optscale

OptScale is an open-source FinOps and MLOps platform that provides cloud cost optimization for all types of organizations, along with MLOps capabilities such as experiment tracking, model versioning, and ML leaderboards.

openops

OpenOps is a No-Code FinOps automation platform designed to help organizations reduce cloud costs and streamline financial operations. It offers customizable workflows for automating key FinOps processes, comes with its own Excel-like database and visualization system, and enables collaboration between different teams. OpenOps integrates seamlessly with major cloud providers, third-party FinOps tools, communication platforms, and project management tools, providing a comprehensive solution for efficient cost-saving measures implementation.

For similar jobs

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

askui

AskUI is a reliable end-to-end automation tool that depends only on what is shown on your screen, not on the technology or platform you are running on.

bots

The 'bots' repository is a collection of guides, tools, and example bots for programming bots to play video games. It provides resources on running bots live, installing the BotLab client, debugging bots, testing bots in simulated environments, and more. The repository also includes example bots for games like EVE Online, Tribal Wars 2, and Elvenar. Users can learn about developing bots for specific games, syntax of the Elm programming language, and tools for memory reading development. Additionally, there are guides on bot programming, contributing to BotLab, and exploring Elm syntax and core library.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

LaVague

LaVague is an open-source Large Action Model framework that uses advanced AI techniques to compile natural language instructions into browser automation code. It leverages Selenium or Playwright for browser actions. Users can interact with LaVague through an interactive Gradio interface to automate web interactions. The tool requires an OpenAI API key for default examples and offers a Playwright integration guide. Contributors can help by working on outlined tasks, submitting PRs, and engaging with the community on Discord. The project roadmap is available to track progress, but users should exercise caution when executing LLM-generated code using 'exec'.

robocorp

Robocorp is a platform that allows users to create, deploy, and operate Python automations and AI actions. It provides an easy way to extend the capabilities of AI agents, assistants, and copilots with custom actions written in Python. Users can create and deploy tools, skills, loaders, and plugins that securely connect any AI Assistant platform to their data and applications. The Robocorp Action Server makes Python scripts compatible with ChatGPT and LangChain by automatically creating and exposing an API based on function declaration, type hints, and docstrings. It simplifies the process of developing and deploying AI actions, enabling users to interact with AI frameworks effortlessly.

Open-Interface

Open Interface is self-driving software that automates computer tasks by sending user requests to a language model backend (e.g., GPT-4V) and simulating keyboard and mouse inputs to execute the steps. It course-corrects by sending current screenshots to the language model. The tool supports macOS, Linux, and Windows, and requires an OpenAI API key for access to GPT-4V. It can automate tasks such as creating meal plans, and it supports custom language model backends. Open Interface is currently weak at accurate spatial reasoning, tracking itself in tabular contexts, and navigating complex GUI-rich applications. Future improvements aim to enhance the tool's capabilities with better models trained on video walkthroughs. User requests cost between $0.05 and $0.20 each, and the tool offers features such as interrupting the app and primary display visibility in multi-monitor setups.

AI-Case-Sorter-CS7.1

AI-Case-Sorter-CS7.1 is a project focused on building a case sorter using machine vision and machine learning AI to sort cases by headstamp. The repository includes Arduino code and 3D models necessary for the project.