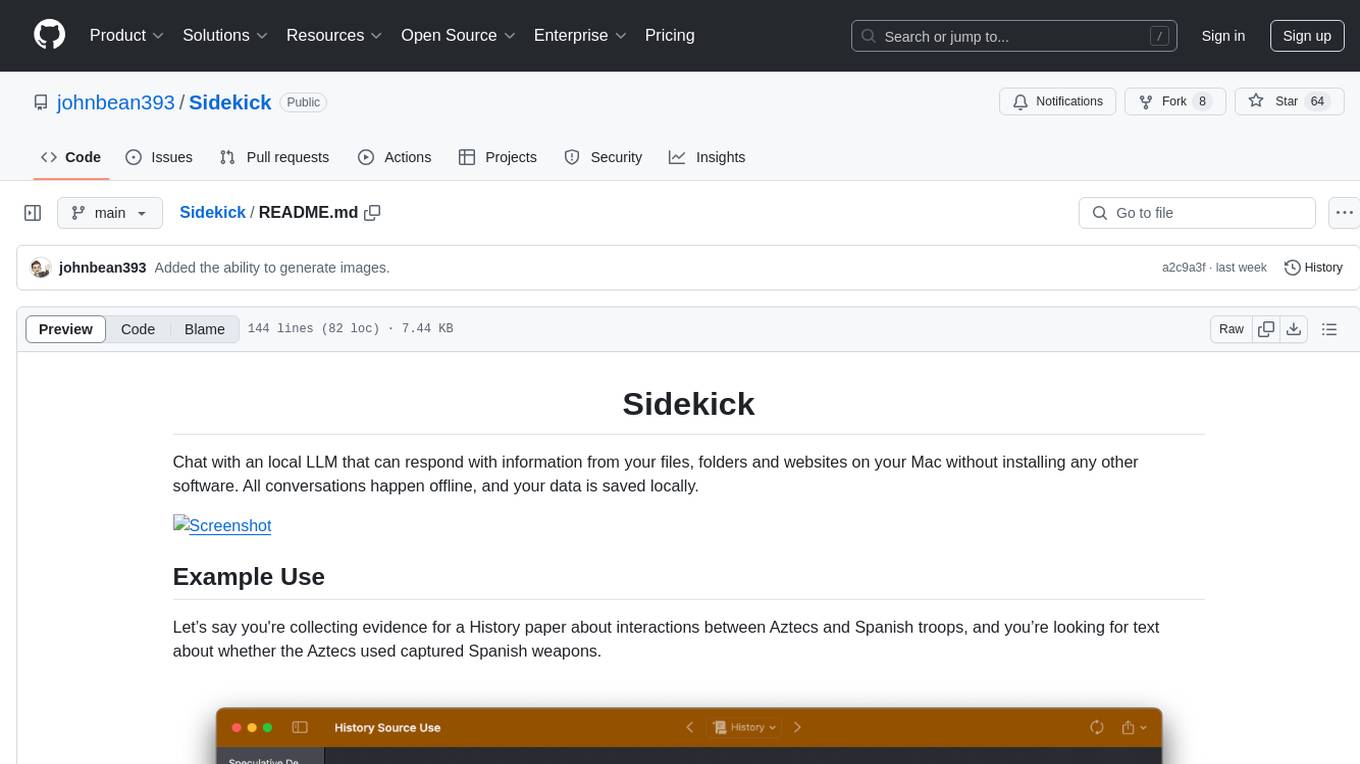

Sidekick

A native macOS app that allows users to chat with a local LLM that can respond with information from files, folders and websites on your Mac without installing any other software. Powered by llama.cpp.

Stars: 2653

Sidekick is a native LLM application for macOS that allows users to chat with a local language model to retrieve information from files, folders, and websites without the need for additional software installation. It operates offline, ensuring data privacy and security. Sidekick offers features such as resource access, image generation, inline writing assistance, advanced markdown rendering, fast generation speeds, and more. The tool aims to provide a simple and powerful solution for accessing local, private models with context awareness of user files and content on the web.

README:

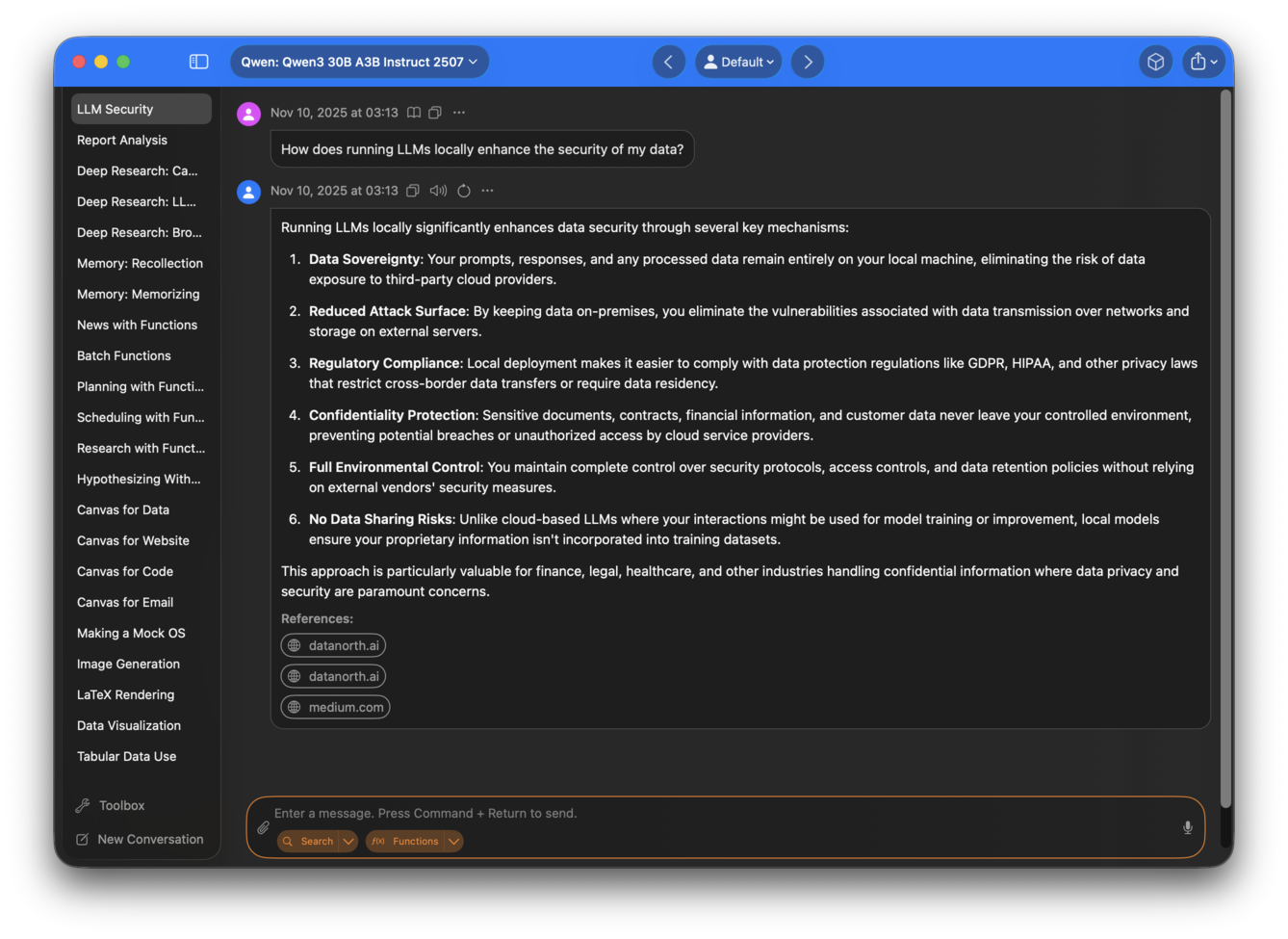

Chat with a local LLM that can respond with information from your files, folders and websites on your Mac without installing any other software. All conversations happen offline, and your data stays secure. Sidekick is a local first application –– with a built in inference engine for local models, while accomodating OpenAI compatible APIs for additional model options.

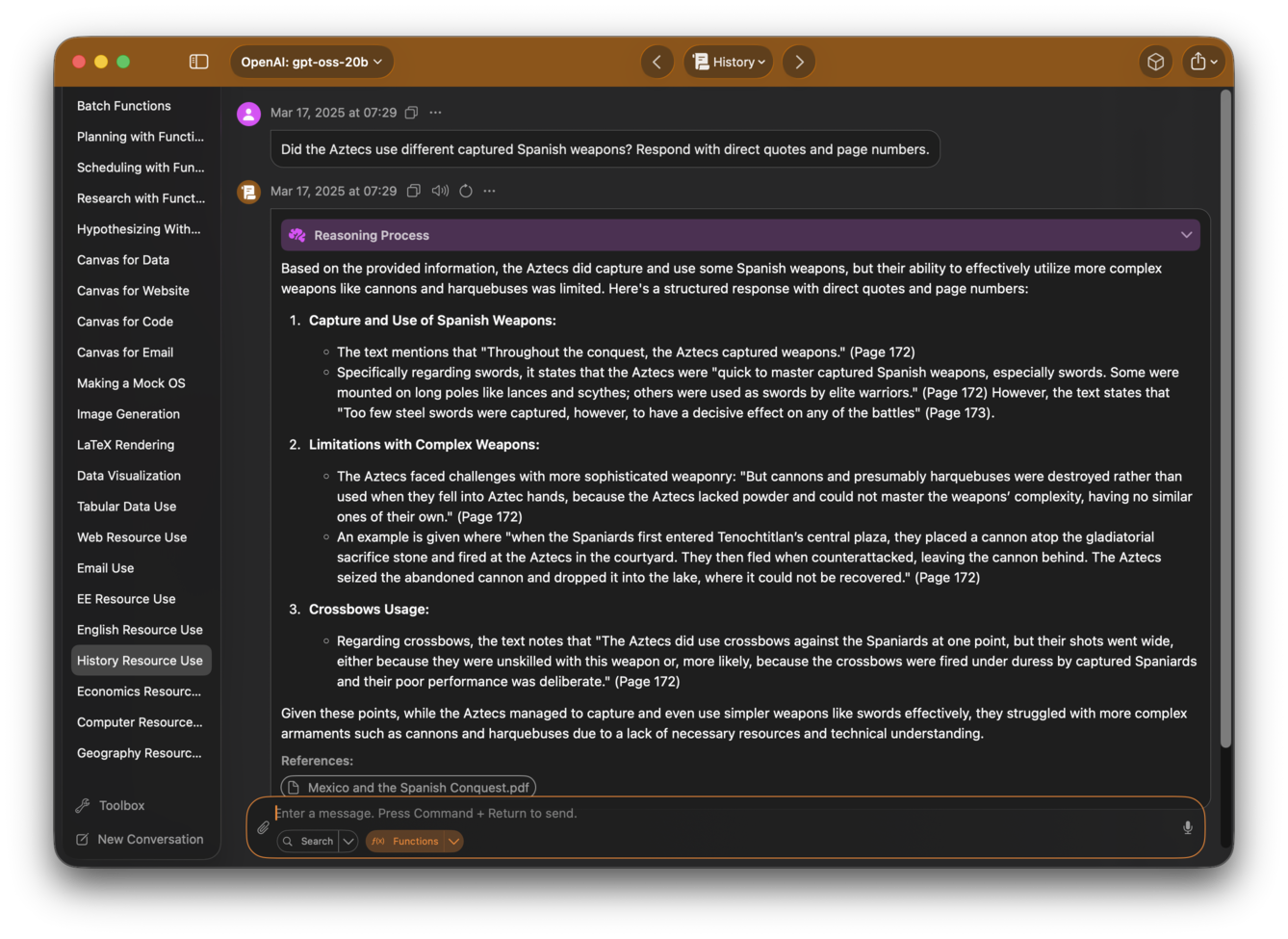

Let’s say you're collecting evidence for a History paper about interactions between Aztecs and Spanish troops, and you’re looking for text about whether the Aztecs used captured Spanish weapons.

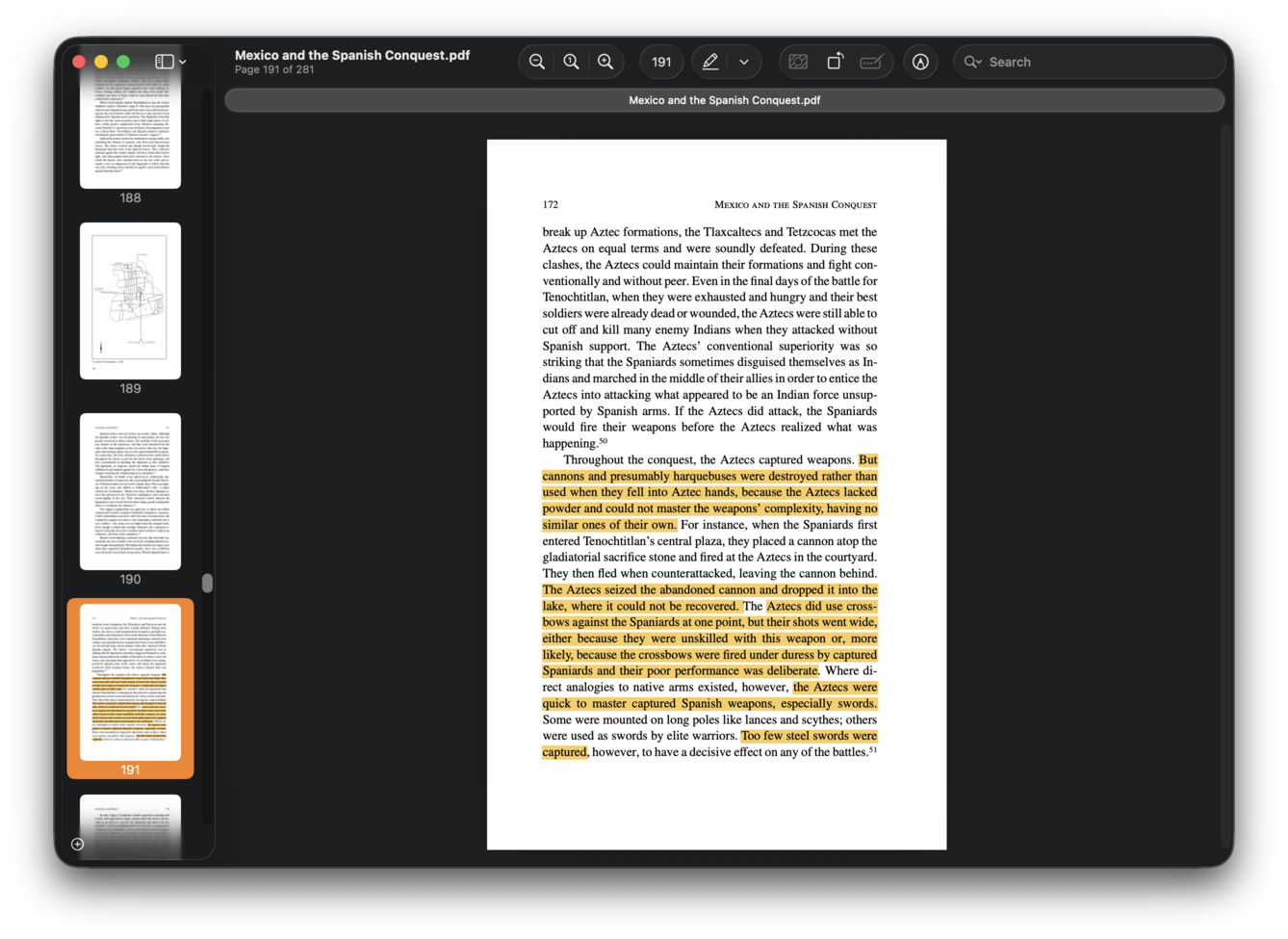

Here, you can ask Sidekick, “Did the Aztecs use captured Spanish weapons?”, and it responds with direct quotes with page numbers and a brief analysis.

To verify Sidekick’s answer, just click on the references displayed below Sidekick’s answer, and the academic paper referenced by Sidekick immediately opens in your viewer.

Read more about Sidekick's features and how to use them here.

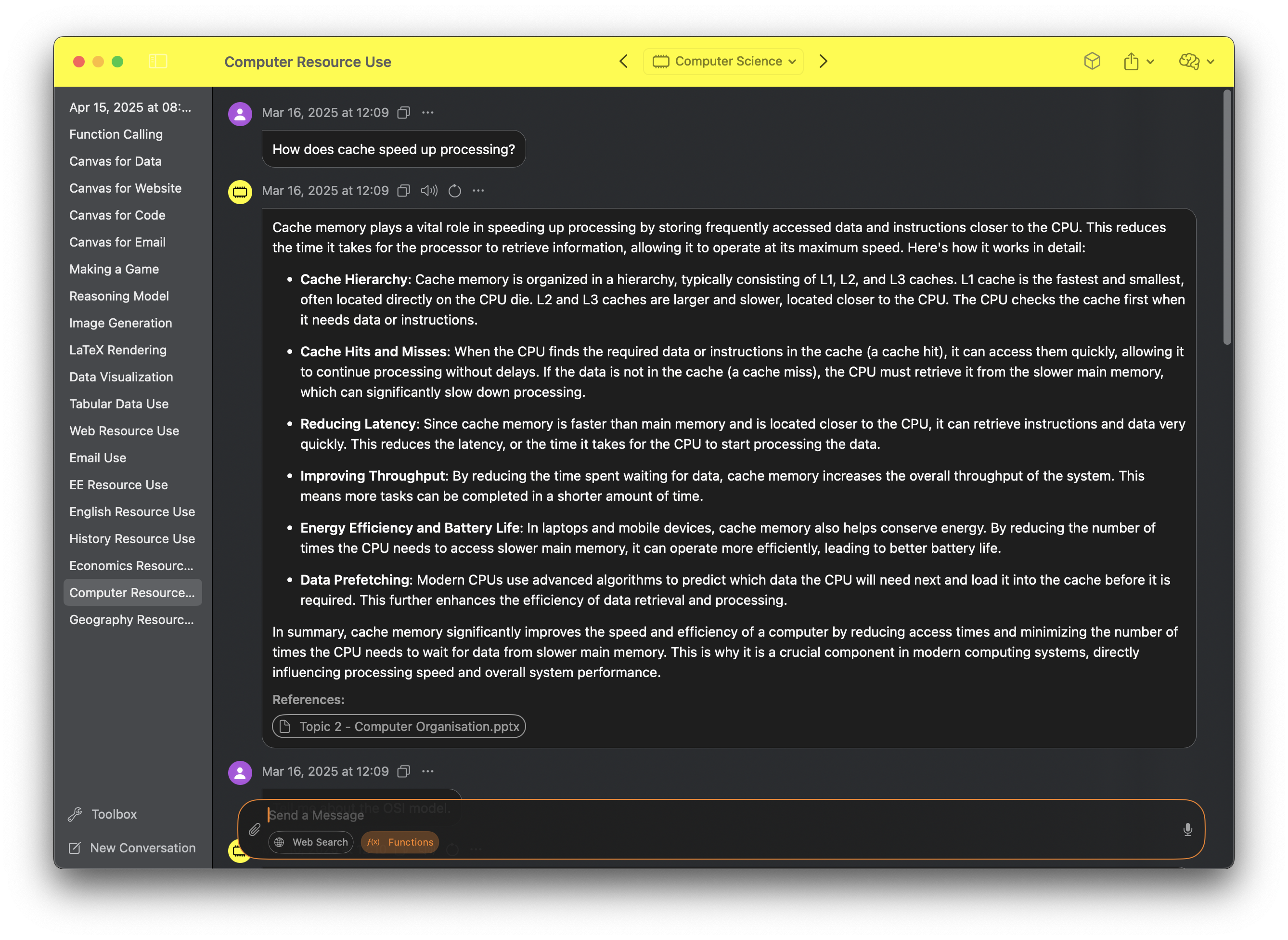

Sidekick accesses files, folders, and websites from your experts, which can be individually configured to contain resources related to specific areas of interest. Activating an expert allows Sidekick to fetch and reference materials as needed.

Because Sidekick uses RAG (Retrieval Augmented Generation), you can theoretically put unlimited resources into each expert, and Sidekick will still find information relevant to your request to aid its analysis.

For example, a student might create the experts English Literature, Mathematics, Geography, Computer Science and Physics. In the image below, he has activated the expert Computer Science.

Users can also give Sidekick access to files just by dragging them into the input field.

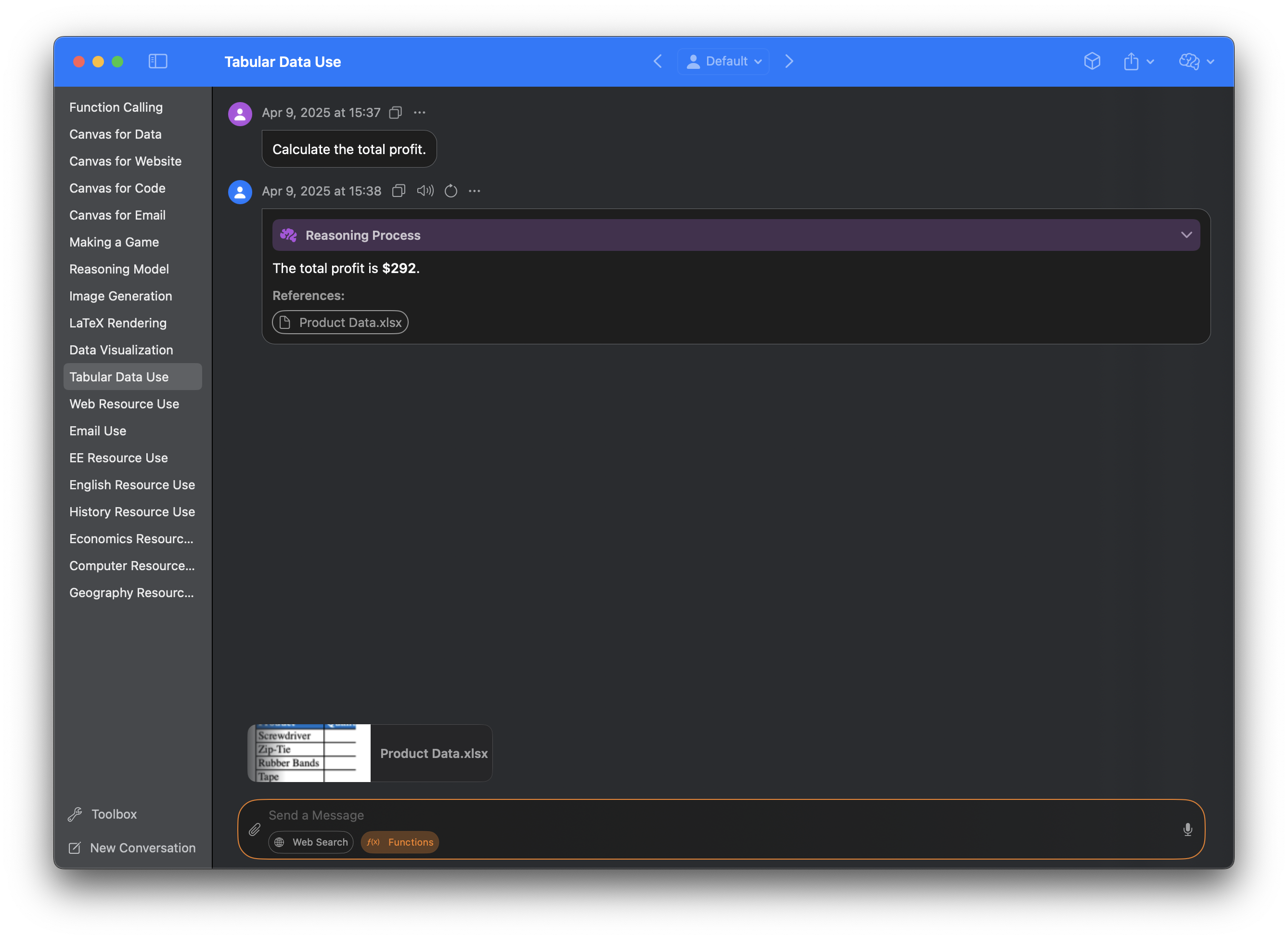

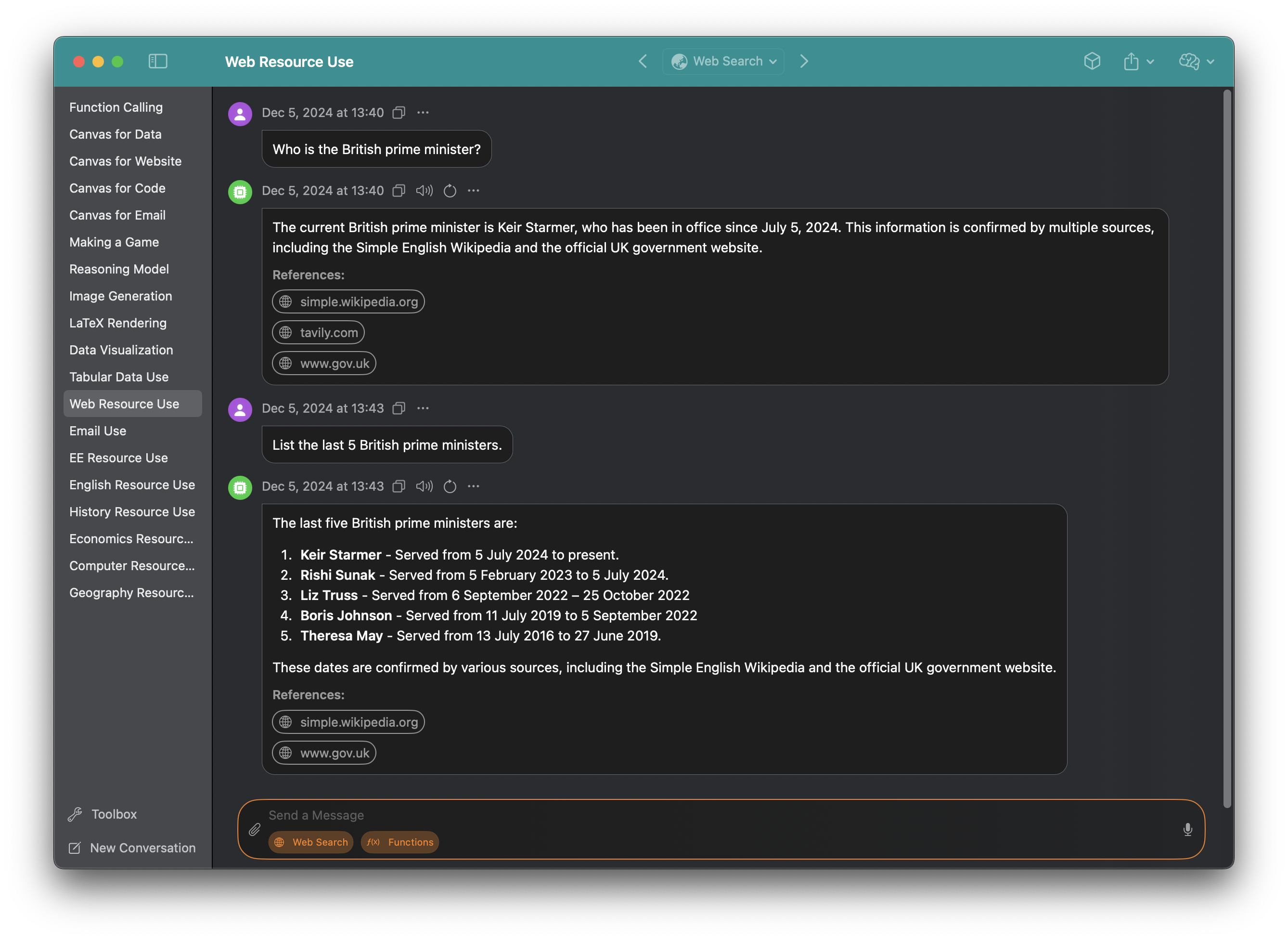

Sidekick can even respond with the latest information using web search, speeding up research.

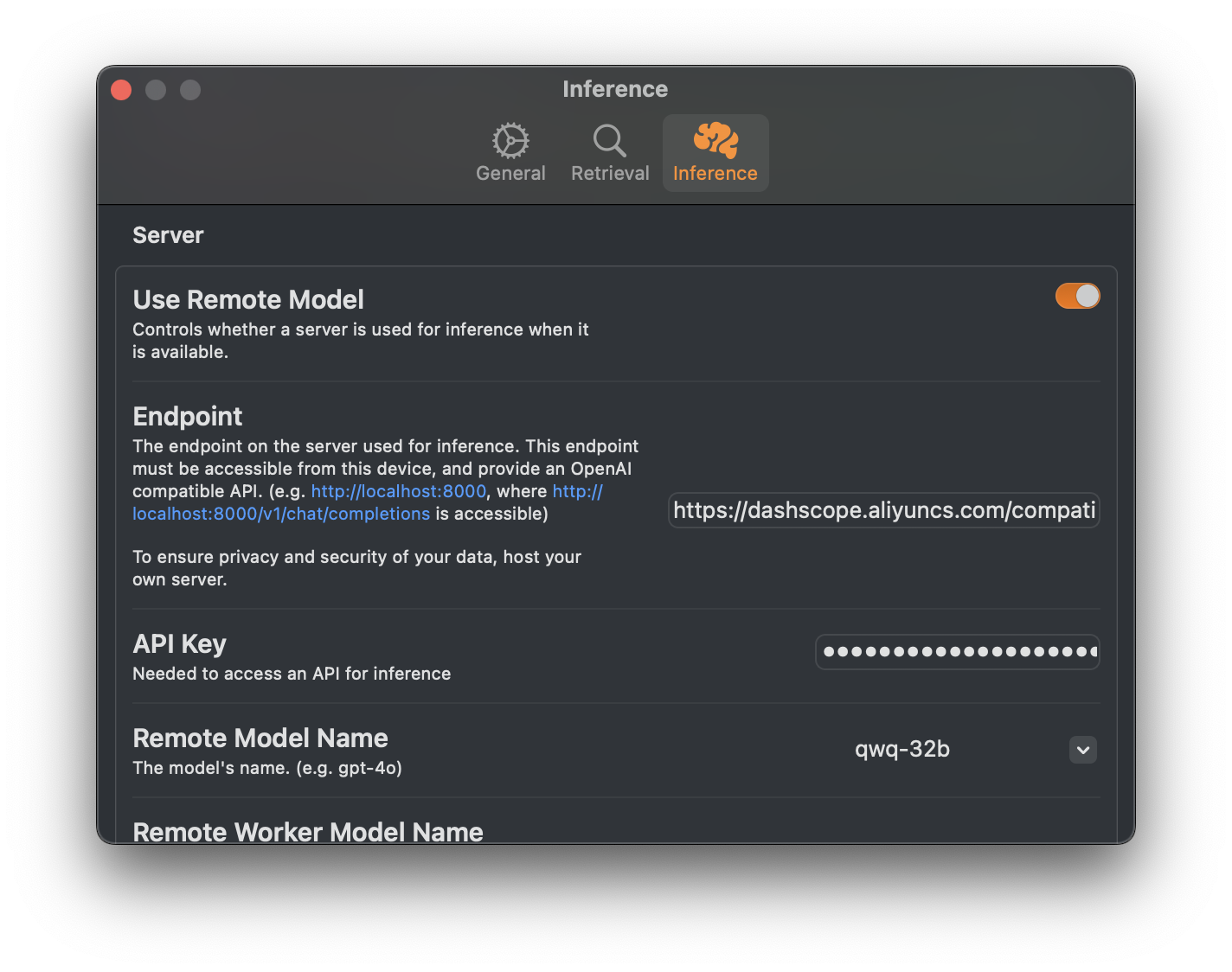

In addition to its core local-first capabilities, Sidekick now offers an option to bring your own key for OpenAI compatible APIs. This allows you to tap into additional remote models while still preserving a primarily local-first workflow.

Sidekick supports a variety of reasoning models, including Alibaba Cloud's QwQ-32B and DeepSeek's DeepSeek-R1.

Sidekick uses a code interpreter to boost the mathematical and logical capabilities of models.

Since small models are much better at writing code than doing math, having it write the code, execute it, and present the results dramatically increases trustworthiness of answers.

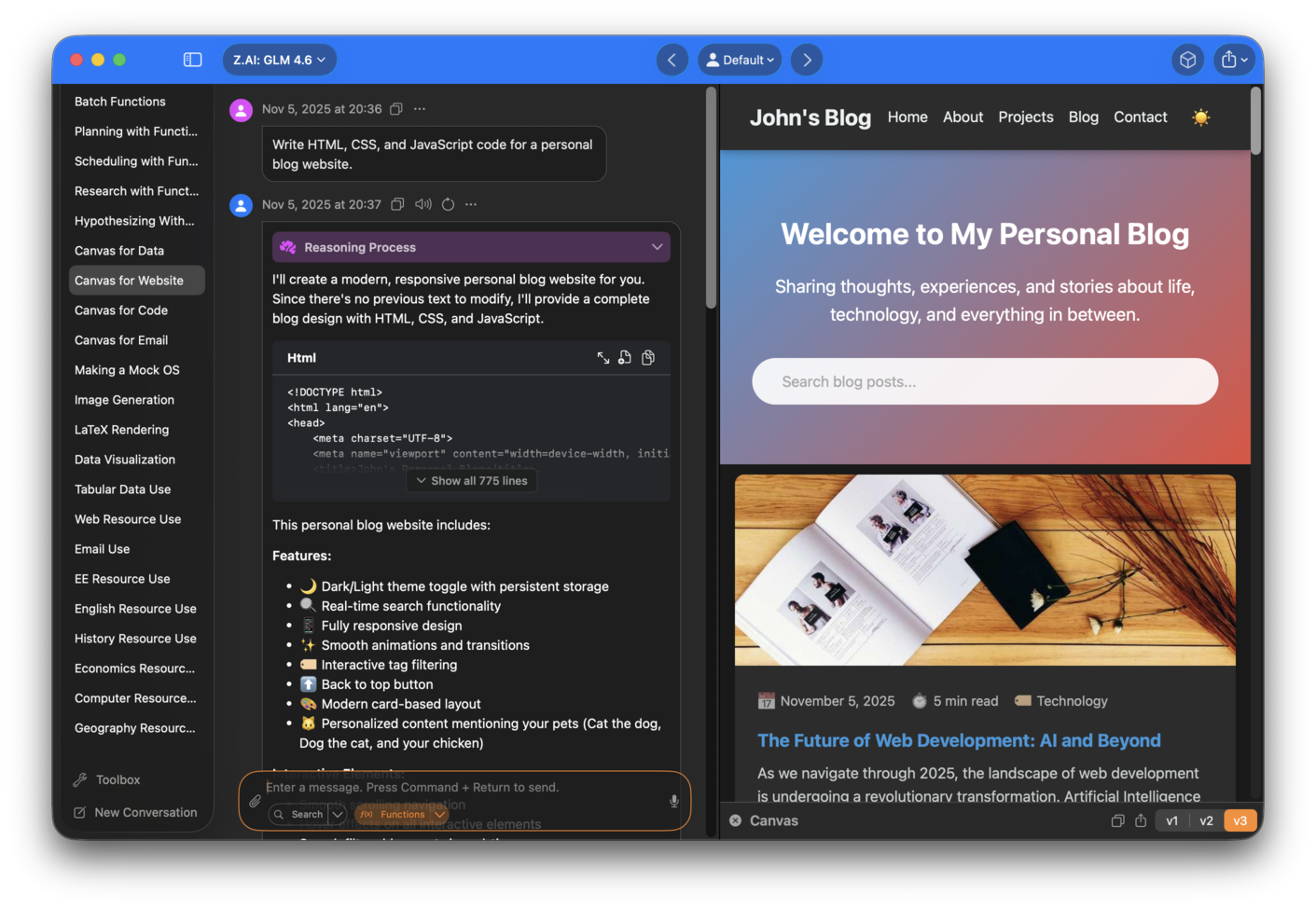

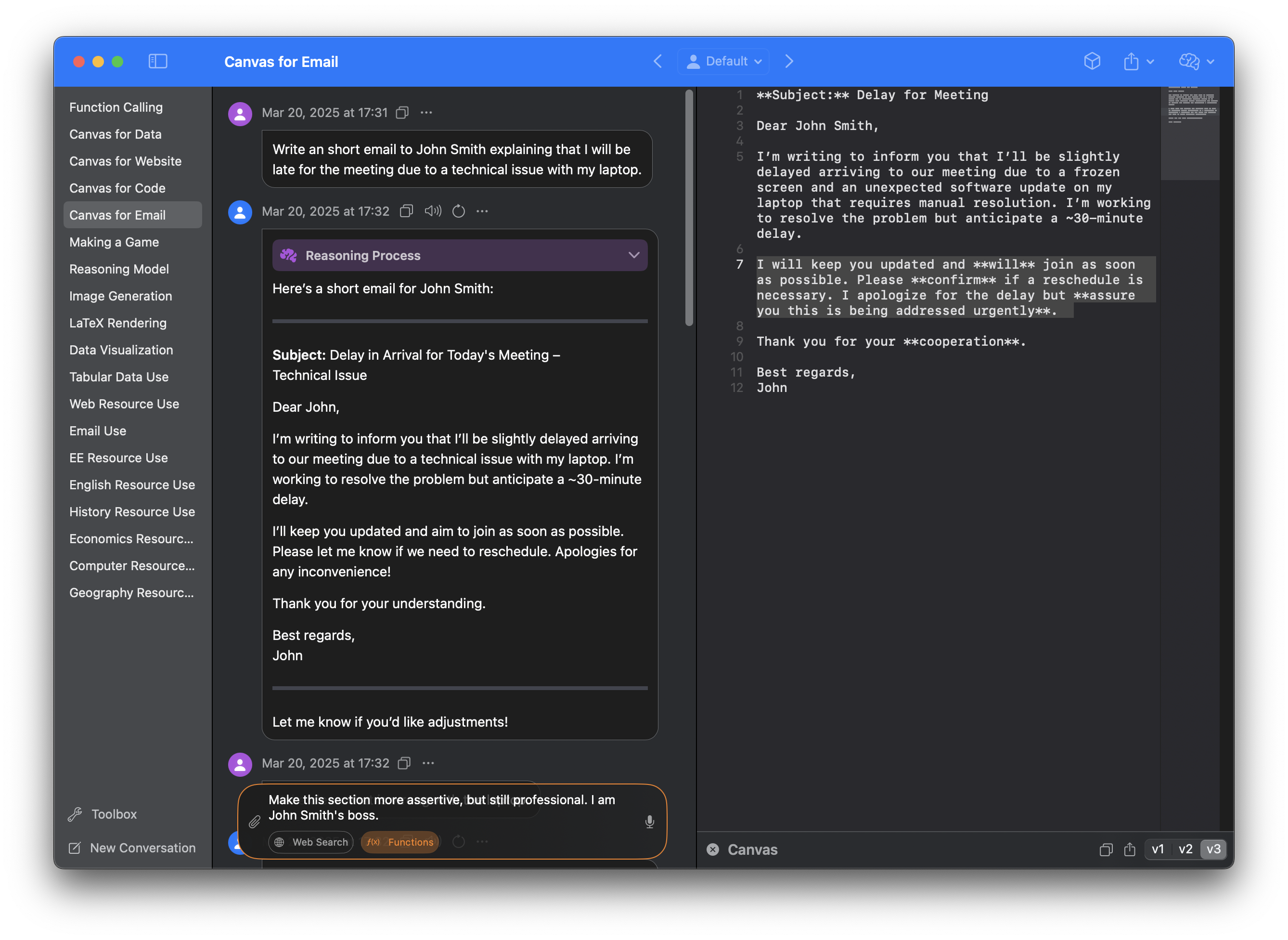

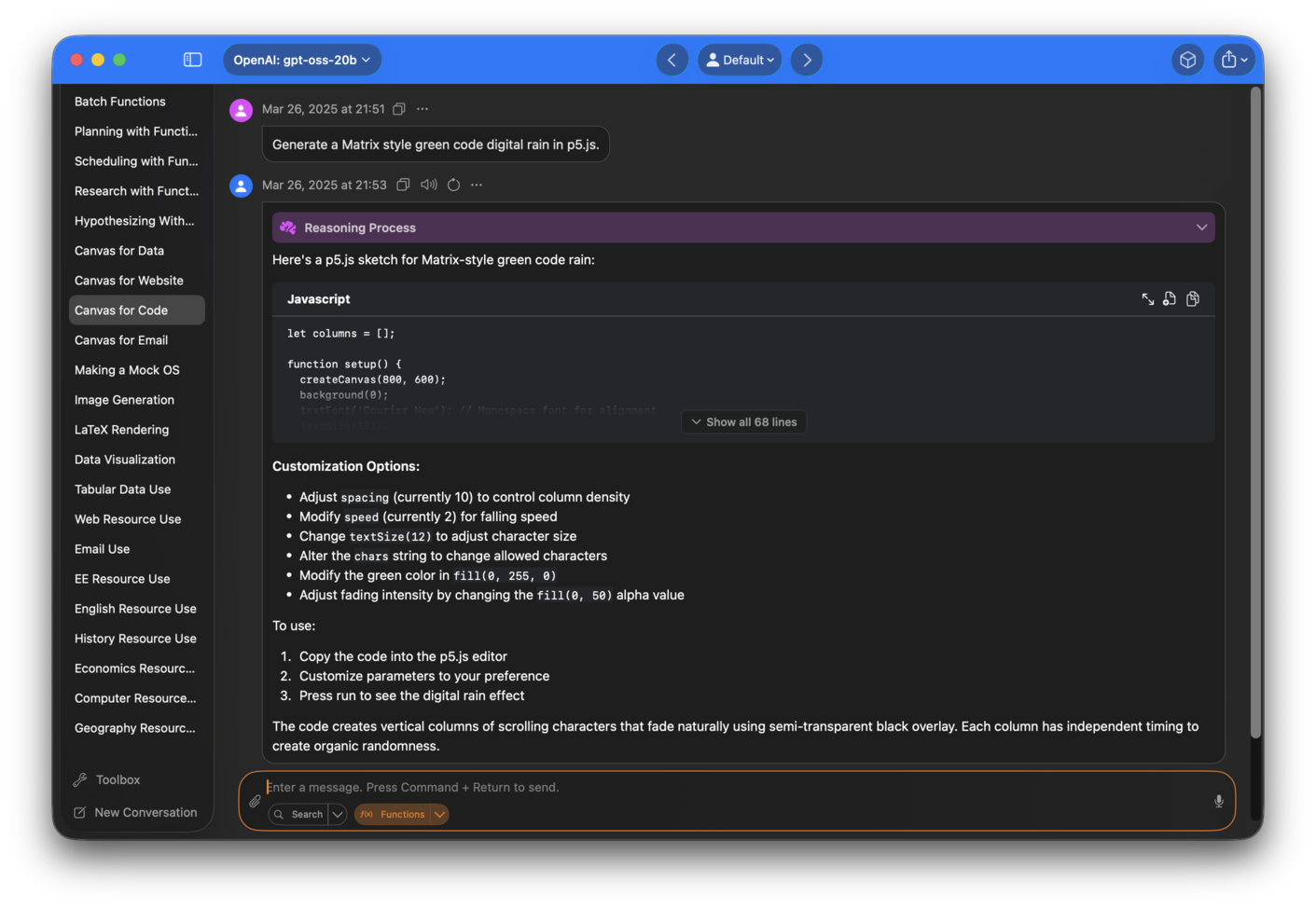

Create, edit and preview websites, code and other textual content using Canvas.

Select parts of the text, then prompt the chatbot to perform selective edits.

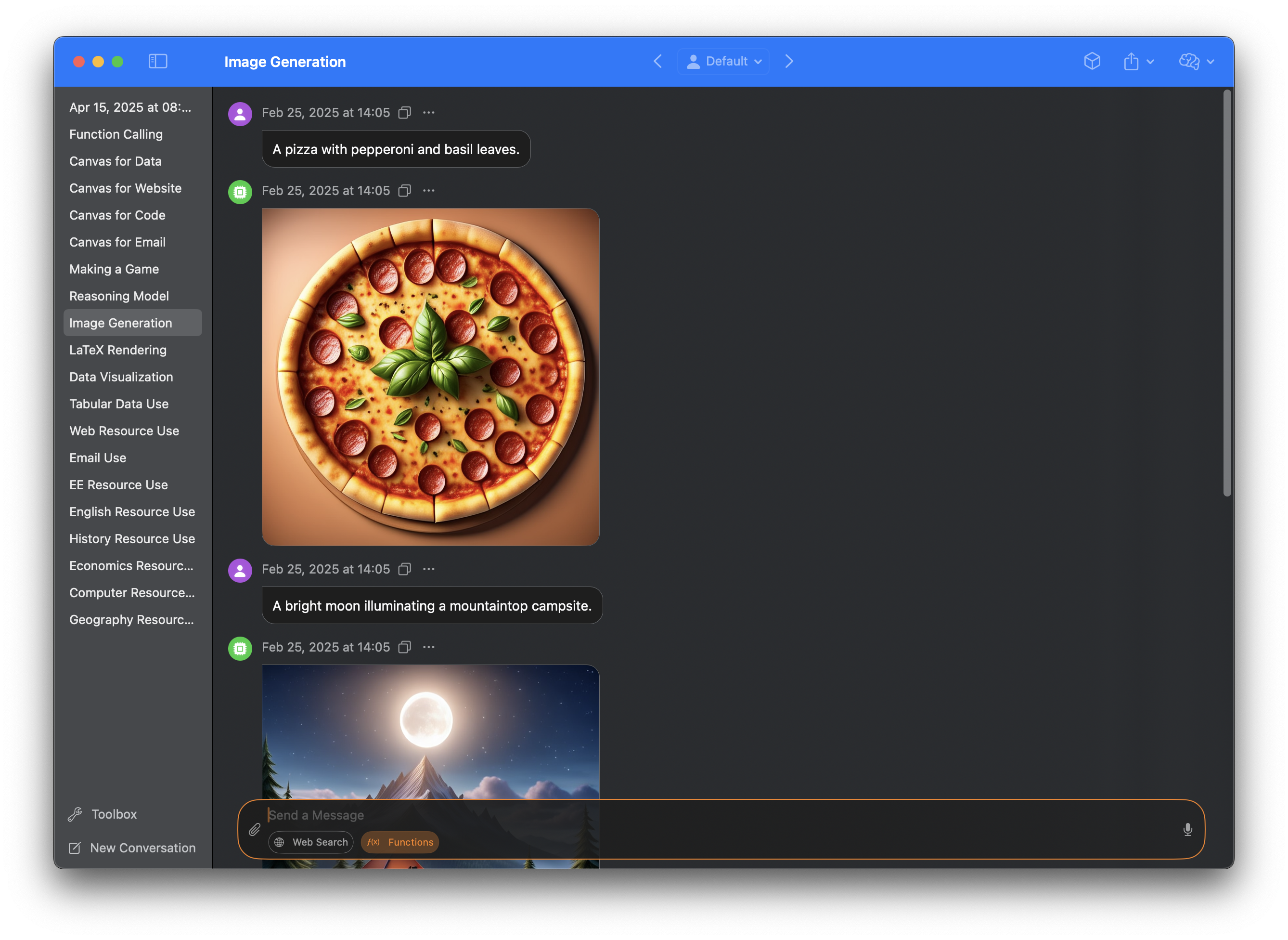

Sidekick can generate images from text, allowing you to create visual aids for your work.

There are no buttons, no switches to flick, no Image Generation mode. Instead, a built-in CoreML model automatically identifies image generation prompts, and generates an image when necessary.

Image generation is available on macOS 15.2 or above, and requires Apple Intelligence.

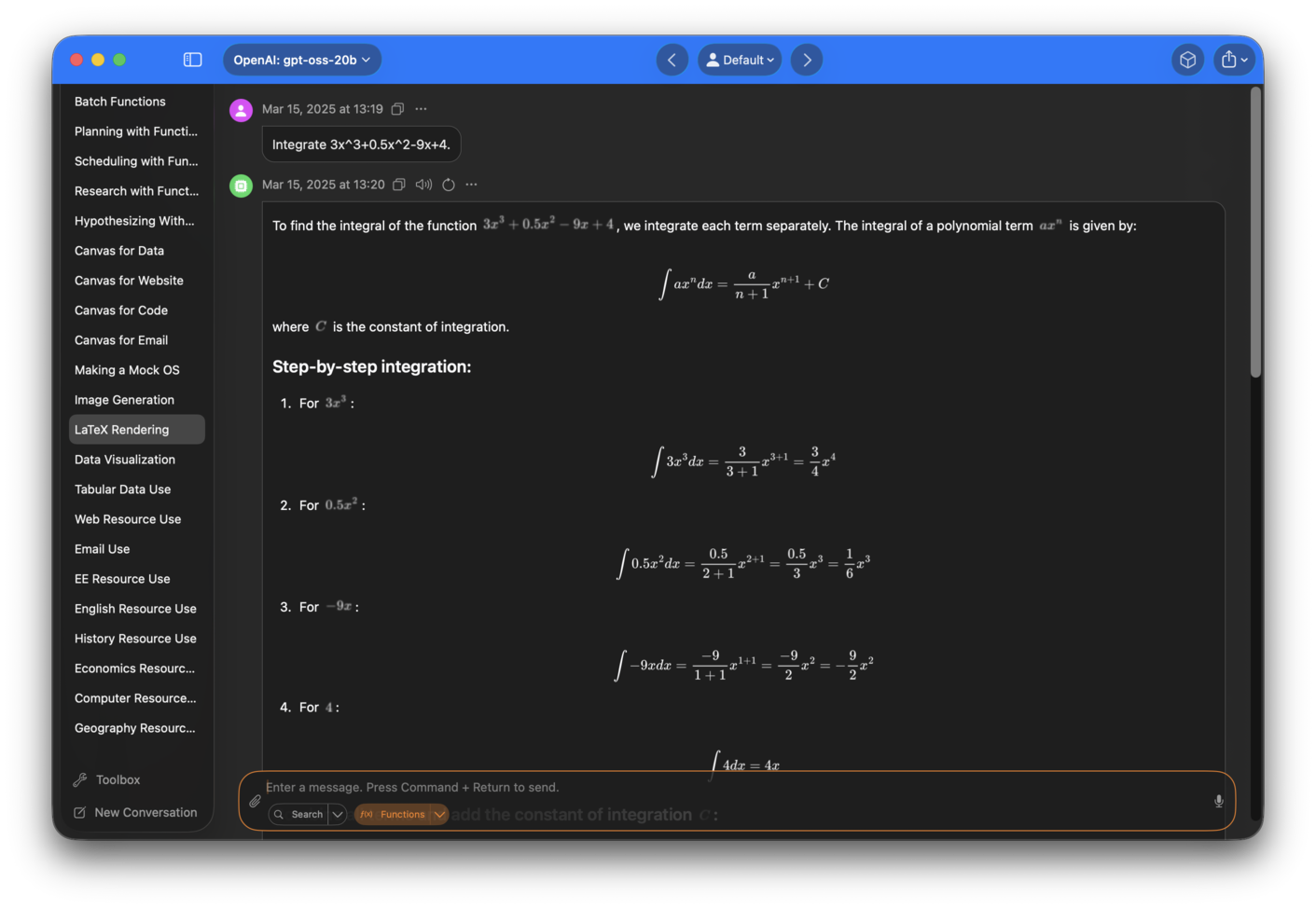

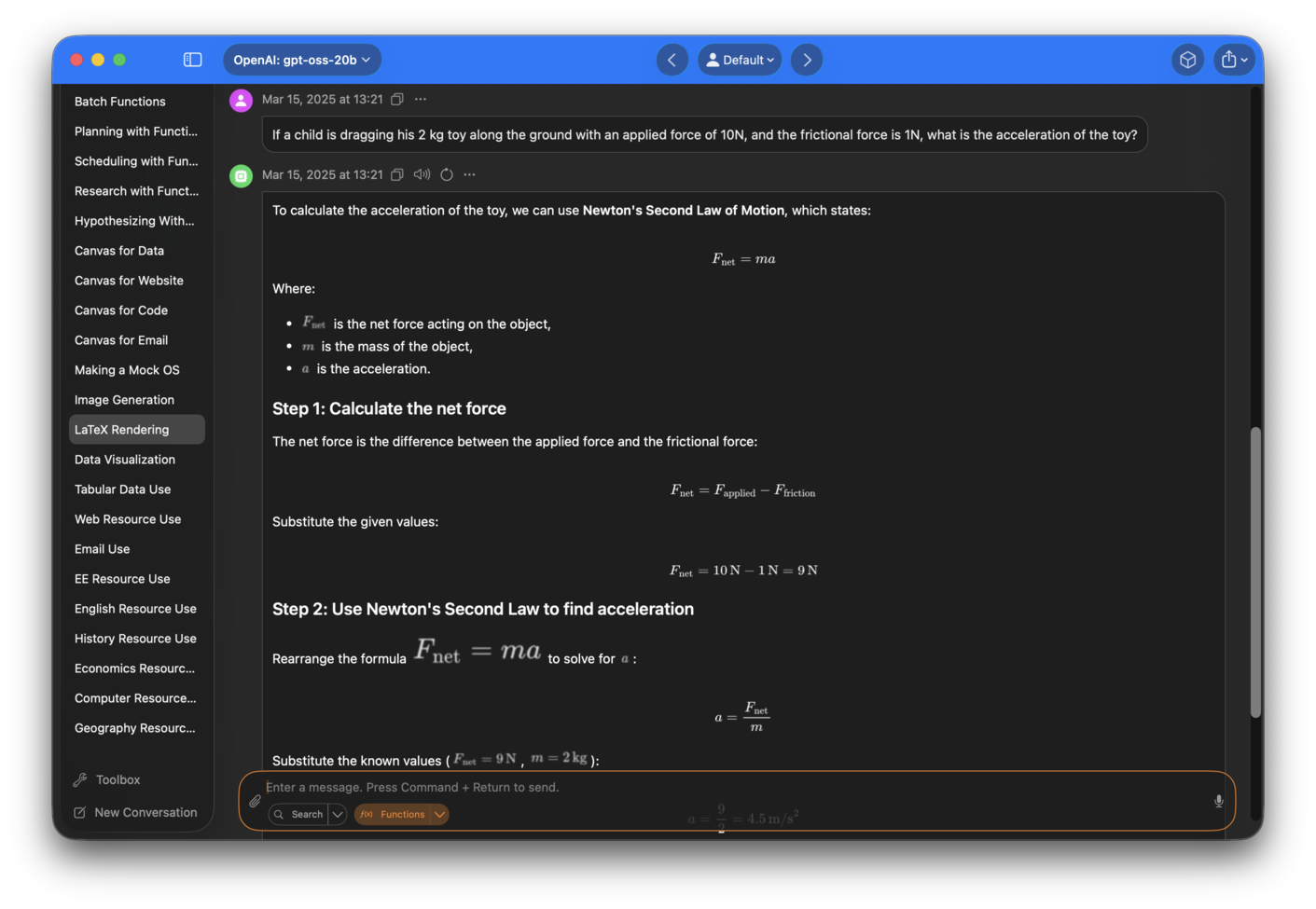

Markdown is rendered beautifully in Sidekick.

Sidekick offers native LaTeX rendering for mathematical equations.

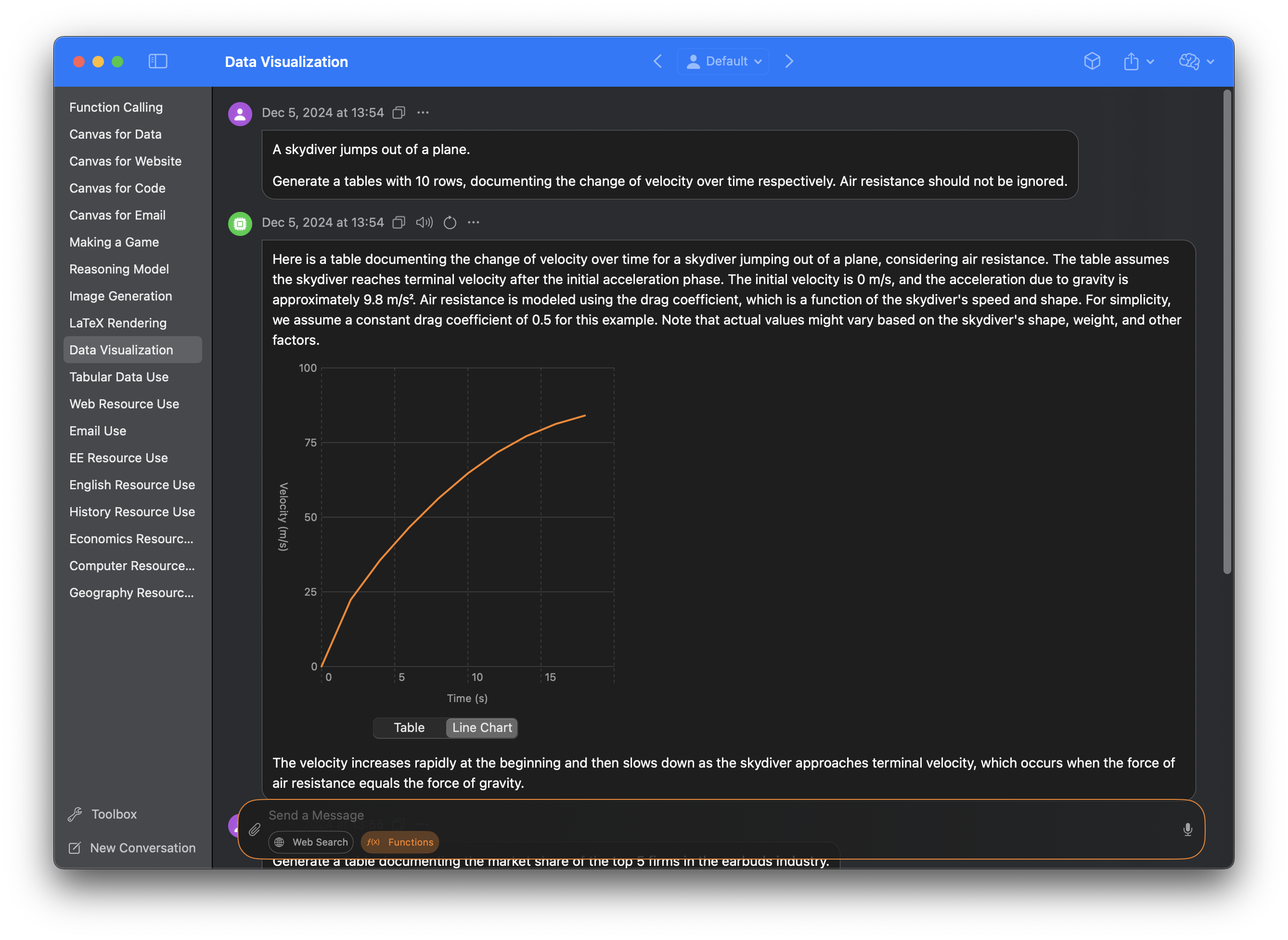

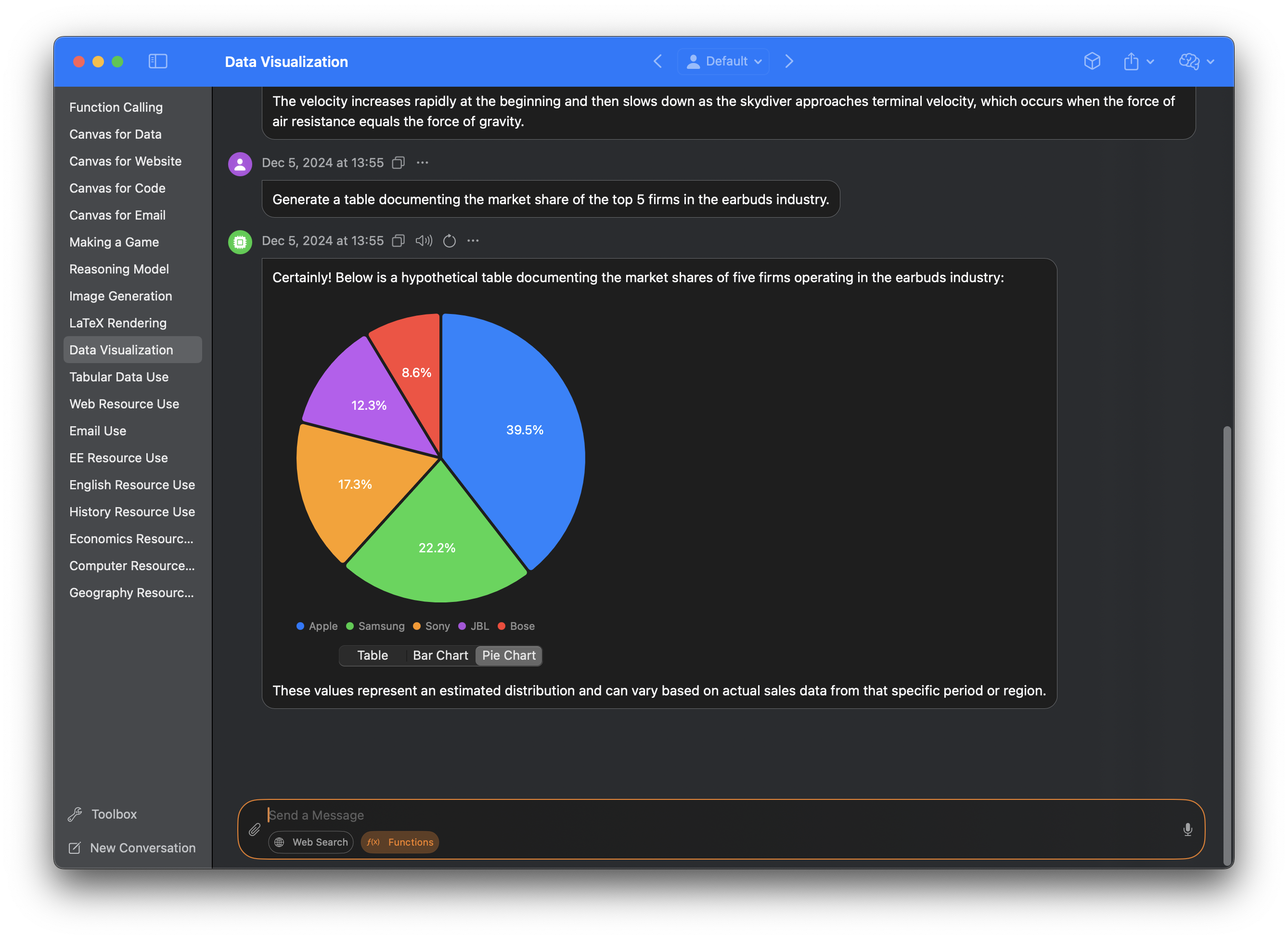

Visualizations are automatically generated for tables when appropriate, with a variety of charts available, including bar charts, line charts and pie charts.

Charts can be dragged and dropped into third party apps.

Code is beautifully rendered with syntax highlighting, and can be exported or copied at the click of a button.

Use Tools in Sidekick to supercharge your workflow.

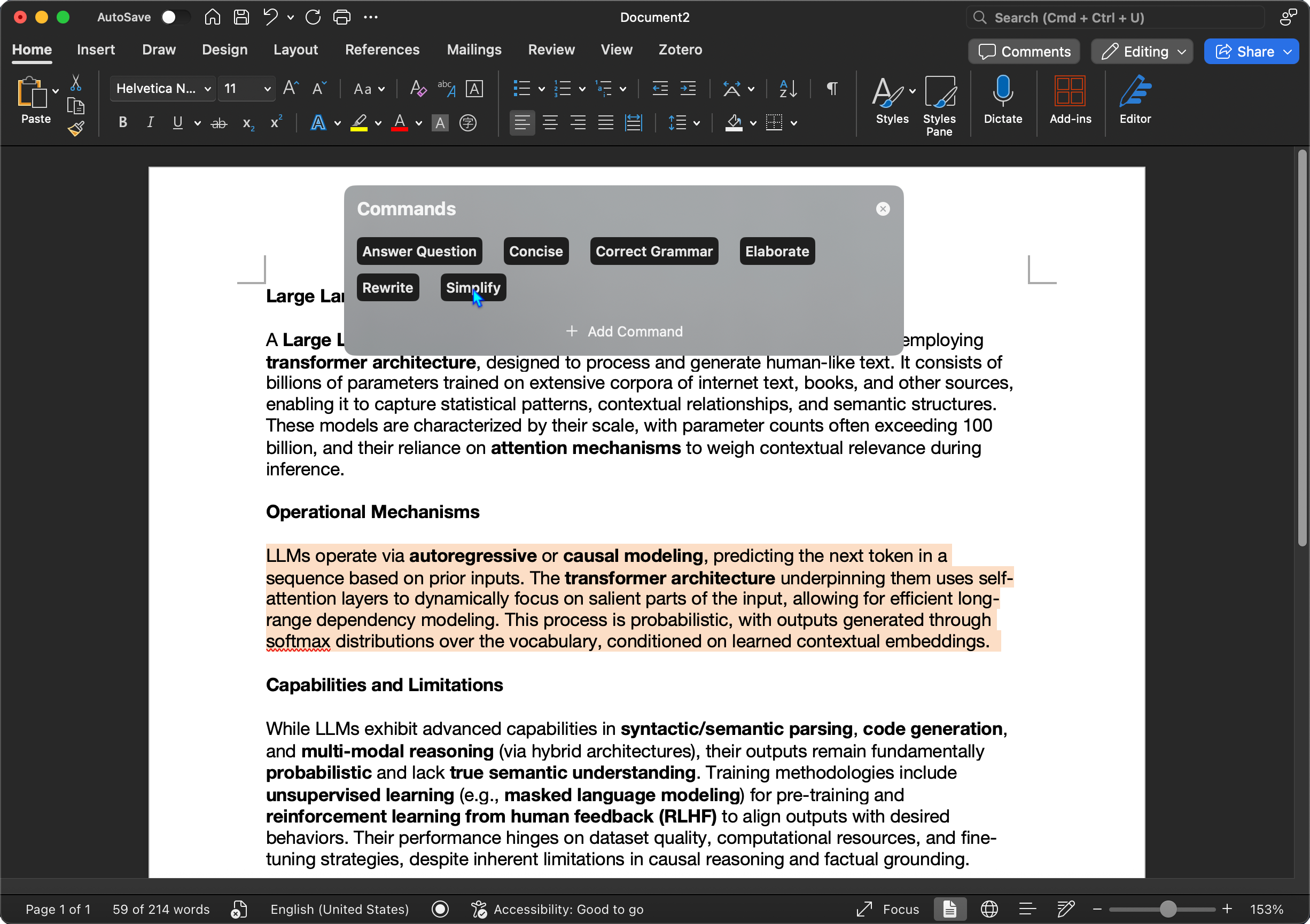

Press Command + Control + I to access Sidekick's inline writing assistant. For example, use the Answer Question command to do your homework without leaving Microsoft Word!

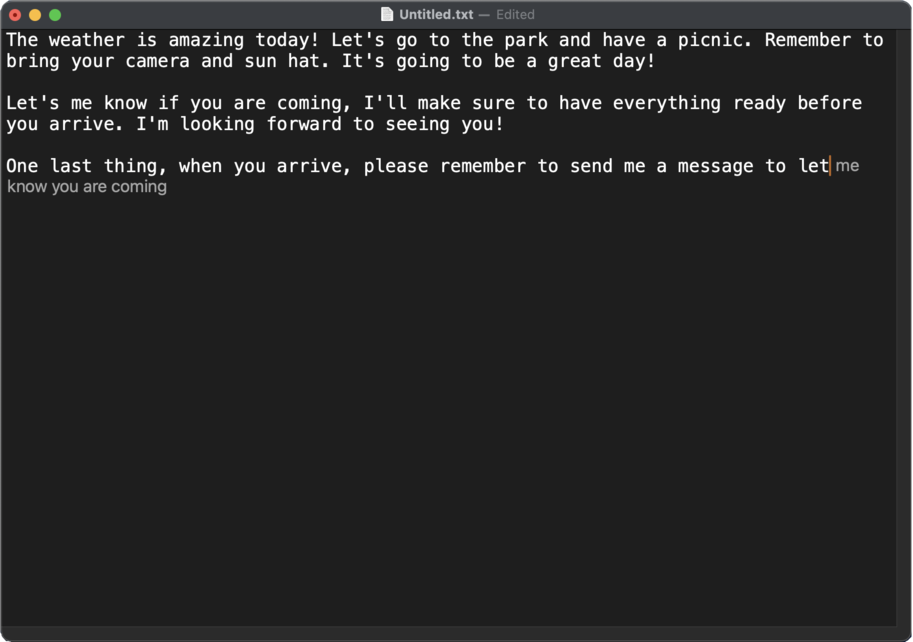

Use the default keyboard shortcut Tab to accept suggestions for the next word, or Shift + Tab to accept all suggested words. View a demo here.

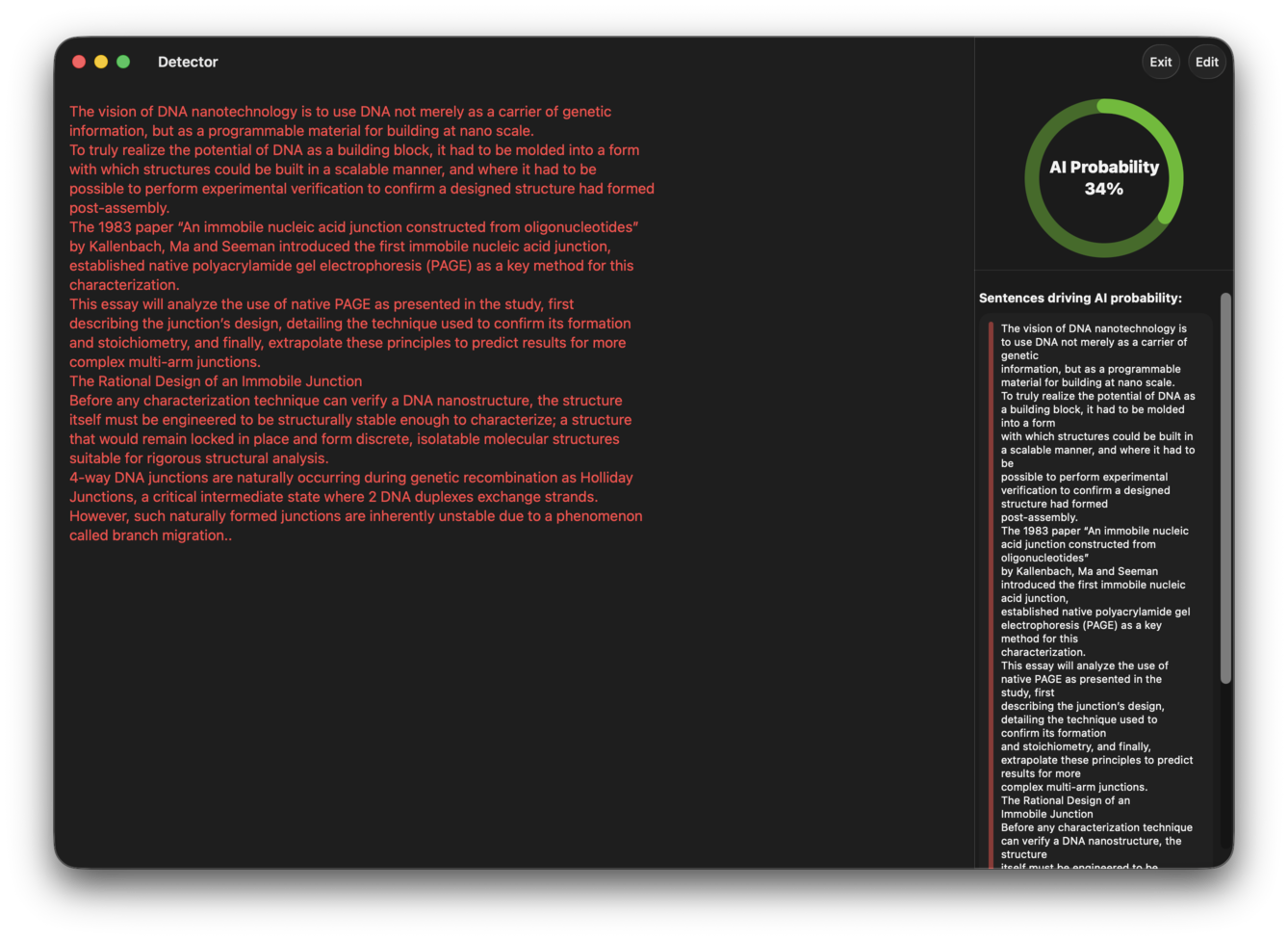

Use Detector to evaluate the AI percentage of text, and use provided suggestions to rewrite AI content.

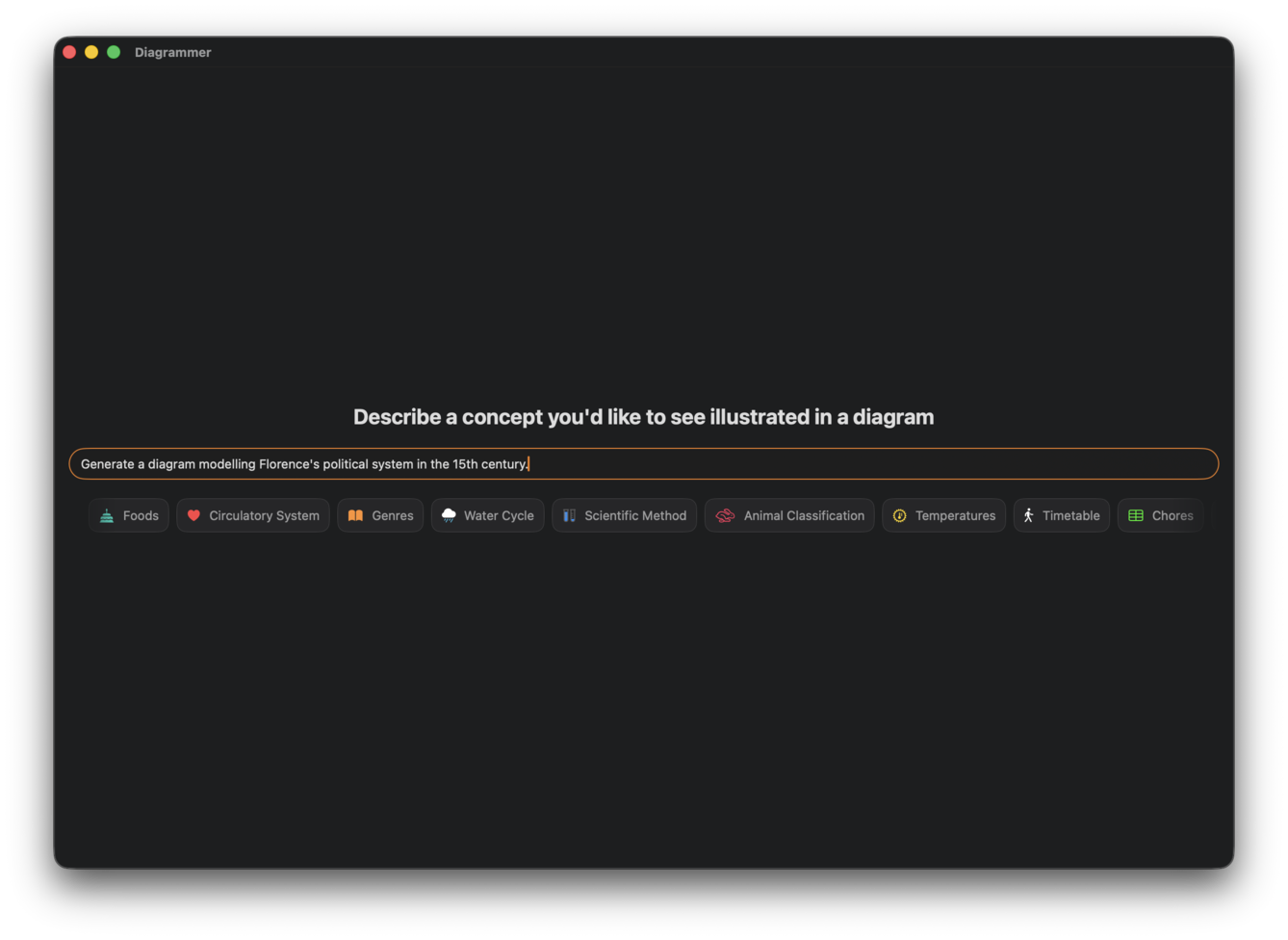

Diagrammer allows you to swiftly generate intricate relational diagrams all from a prompt. Take advantage of the integrated preview and editor for quick edits.

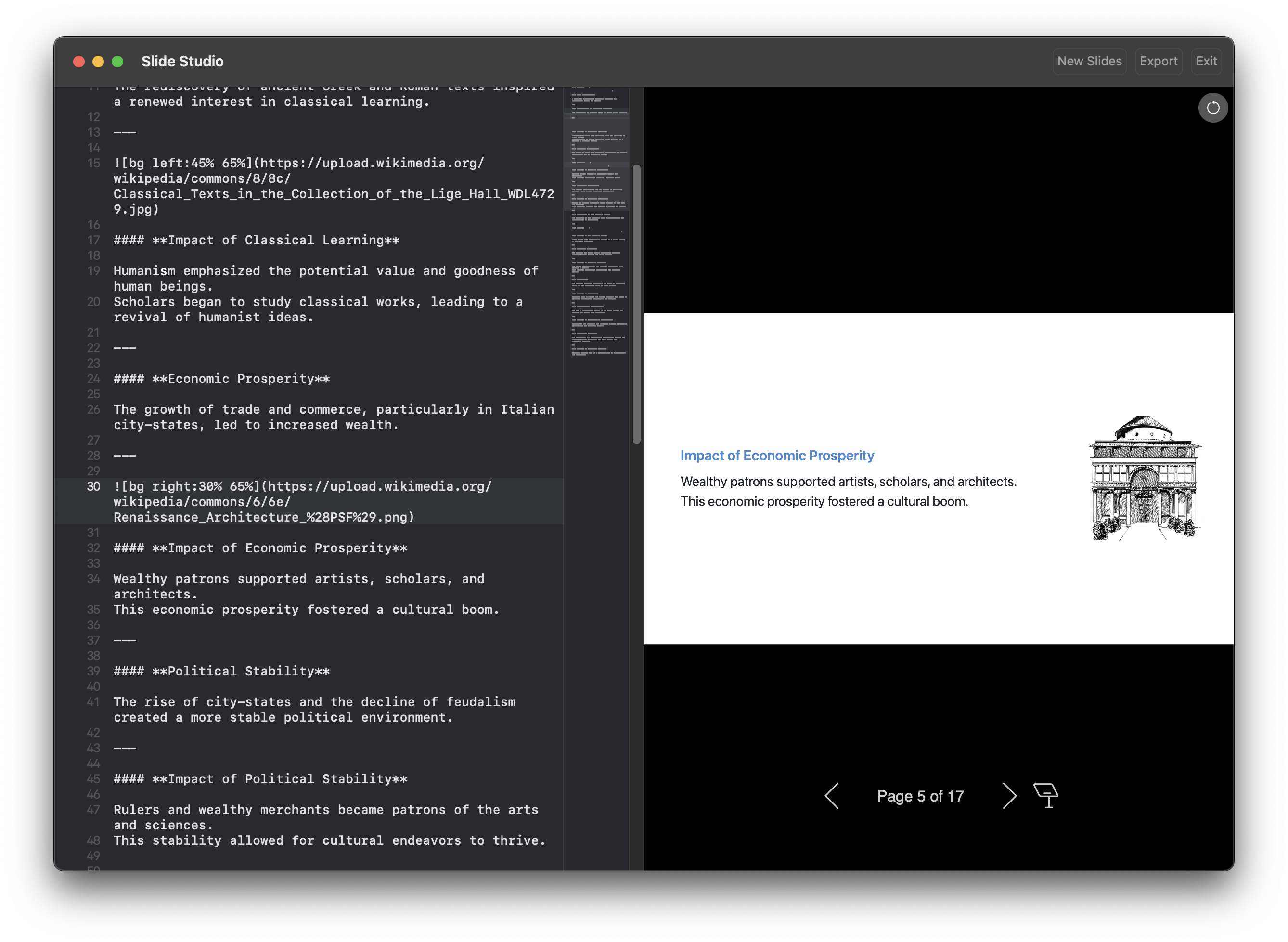

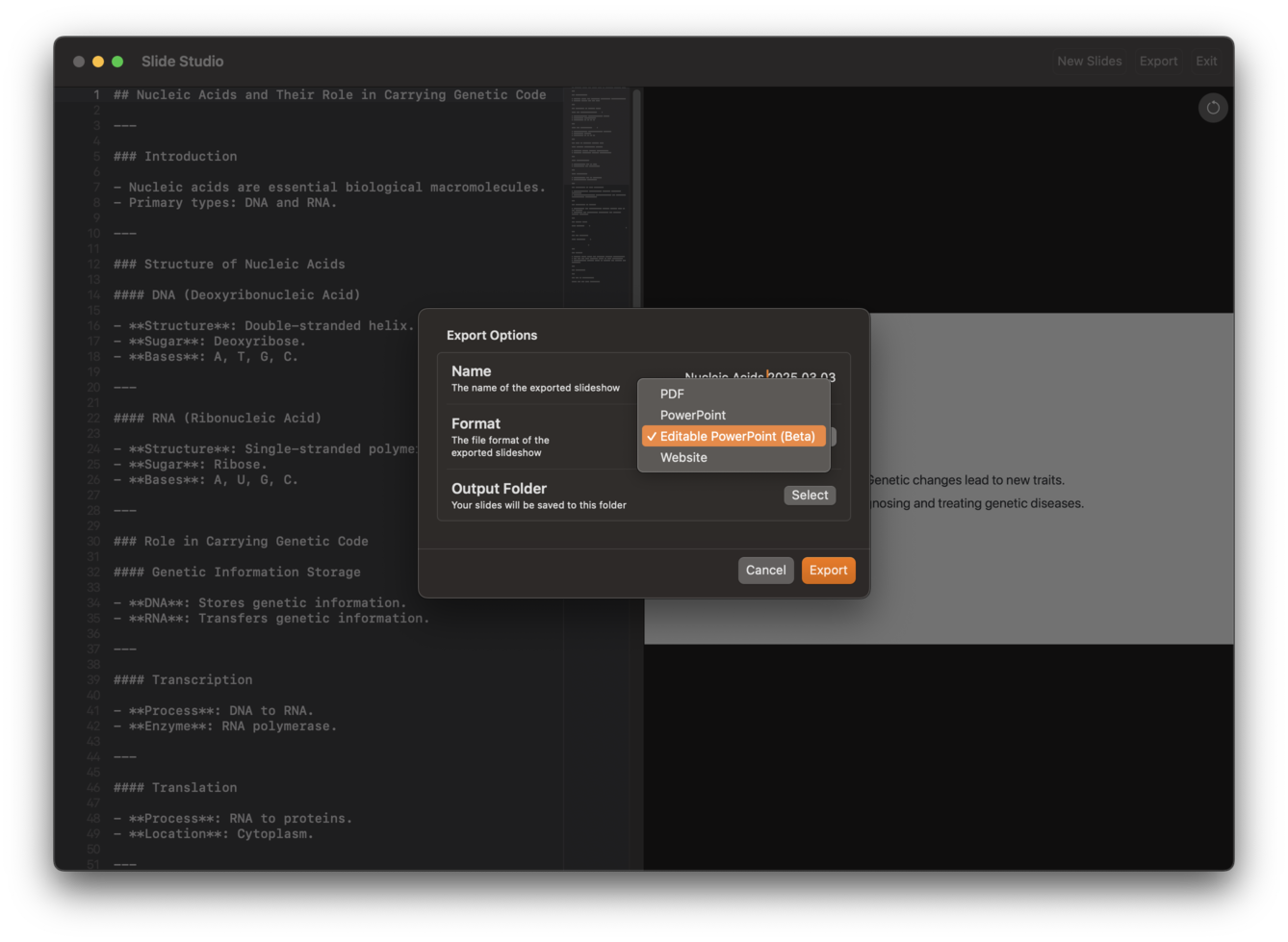

Instead of making a PowerPoint, just write a prompt. Use AI to craft 10-minute presentations in just 5 minutes.

Export to common formats like PDF and PowerPoint.

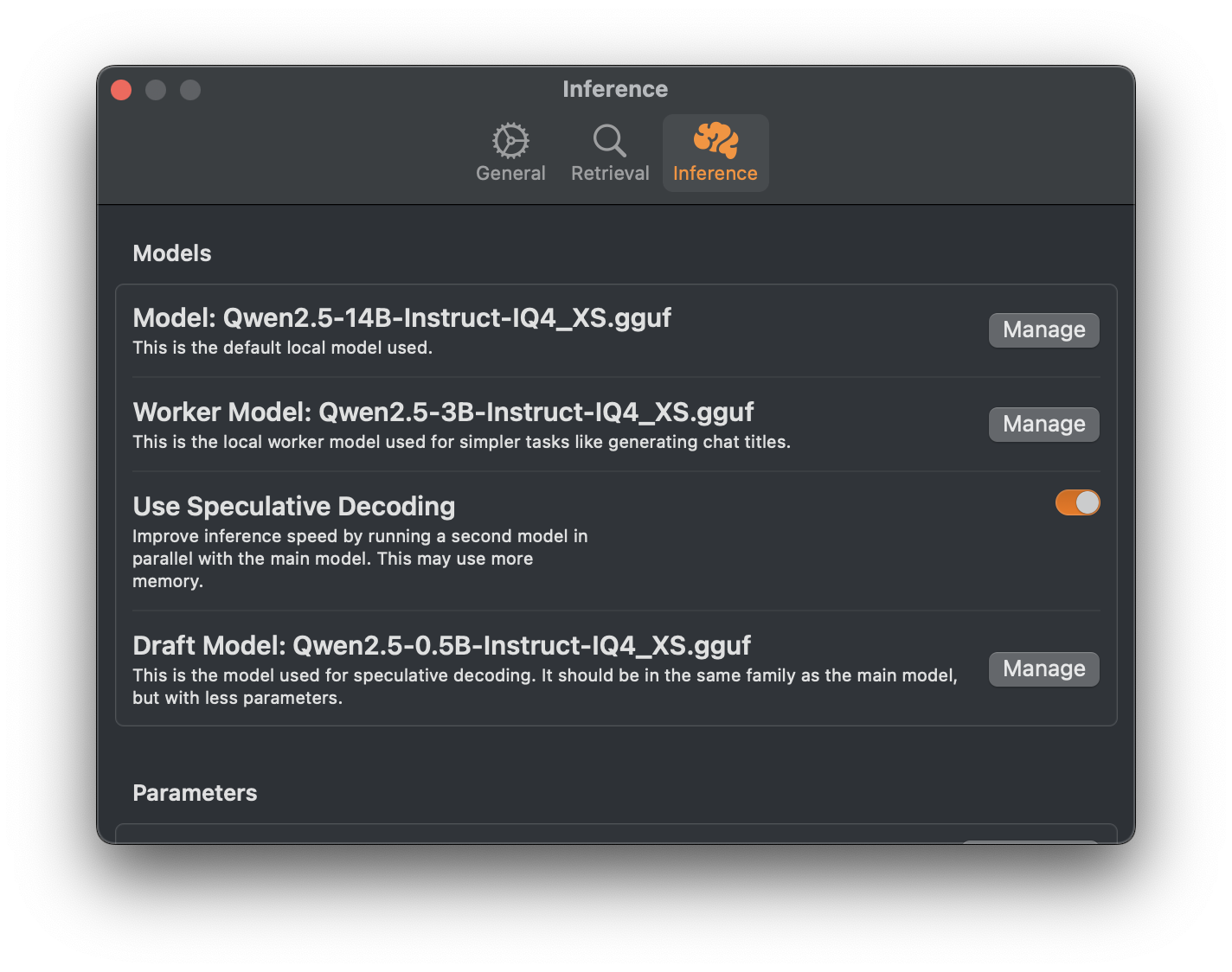

Sidekick uses llama.cpp as its inference backend, which is optimized to deliver lightning fast generation speeds on Apple Silicon. It also supports speculative decoding, which can further improve the generation speed.

Optionally, you can offload generation to speed up processing while extending the battery life of your MacBook.

Requirements

- A Mac with Apple Silicon

- RAM ≥ 8 GB

Download and Setup

- Follow the guide here.

The main goal of Sidekick is to make open, local, private, and contextually aware AI applications accessible to the masses.

Read more about our mission here.

Requirements

- A Mac with Apple Silicon

- RAM ≥ 8 GB

- Clone this repository.

- Run

security find-identity -p codesigning -vto find your signing identity.- You'll see something like

1) <SIGNING IDENTITY> "Apple Development: Michael DiGovanni ( XXXXXXXXXX)"

- Run

./setup.sh <TEAM_NAME> <SIGNING IDENTITY FROM STEP 2>to change the team in the Xcode project and download and sign themarpbinary.- The

marpbinary is required for building and must be signed to create presentations.

- The

- Open and run in Xcode.

Contributions are very welcome. Let's make Sidekick simple and powerful.

Contact this repository's owner at [email protected], or file an issue.

This project would not be possible without the hard work of:

- psugihara and contributors who built FreeChat, which this project took heavy inspiration from

- Georgi Gerganov for llama.cpp

- Alibaba for training Qwen 2.5

- Meta for training Llama 3

- Google for training Gemma 3

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Sidekick

Similar Open Source Tools

Sidekick

Sidekick is a native LLM application for macOS that allows users to chat with a local language model to retrieve information from files, folders, and websites without the need for additional software installation. It operates offline, ensuring data privacy and security. Sidekick offers features such as resource access, image generation, inline writing assistance, advanced markdown rendering, fast generation speeds, and more. The tool aims to provide a simple and powerful solution for accessing local, private models with context awareness of user files and content on the web.

npc-studio

NPC Studio is an AI IDE that allows users to have conversations with LLMs and Agents, edit files, explore data, execute code, and more. It provides a chat interface for organizing conversations, creating and managing AI agents and tools, editing plain text files, analyzing text files with AI, using tiles for conversation, accessing various menus, settings, and a data dashboard. The tool also offers features for photo editing, web browsing with AI, PDF analysis, and development setup instructions for electron-based frontend with a Python Flask backend.

lanarky

Lanarky is a Python web framework designed for building microservices using Large Language Models (LLMs). It is LLM-first, fast, modern, supports streaming over HTTP and WebSockets, and is open-source. The framework provides an abstraction layer for developers to easily create LLM microservices. Lanarky guarantees zero vendor lock-in and is free to use. It is built on top of FastAPI and offers features familiar to FastAPI users. The project is now in maintenance mode, with no active development planned, but community contributions are encouraged.

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include: 1. Workflow: Build and test powerful AI workflows on a visual canvas. 2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions. 3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features. 4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval. 5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools. 6. LLMOps: Monitor and analyze application logs and performance over time. 7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

vertex-ai-mlops

Vertex AI is a platform for end-to-end model development. It consist of core components that make the processes of MLOps possible for design patterns of all types.

AdalFlow

AdalFlow is a library designed to help developers build and optimize Large Language Model (LLM) task pipelines. It follows a design pattern similar to PyTorch, offering a light, modular, and robust codebase. Named in honor of Ada Lovelace, AdalFlow aims to inspire more women to enter the AI field. The library is tailored for various GenAI applications like chatbots, translation, summarization, code generation, and autonomous agents, as well as classical NLP tasks such as text classification and named entity recognition. AdalFlow emphasizes modularity, robustness, and readability to support users in customizing and iterating code for their specific use cases.

Mortal

Mortal (凡夫) is a free and open source AI for Japanese mahjong, powered by deep reinforcement learning. It provides a comprehensive solution for playing Japanese mahjong with AI assistance. The project focuses on utilizing deep reinforcement learning techniques to enhance gameplay and decision-making in Japanese mahjong. Mortal offers a user-friendly interface and detailed documentation to assist users in understanding and utilizing the AI effectively. The project is actively maintained and welcomes contributions from the community to further improve the AI's capabilities and performance.

trulens

TruLens provides a set of tools for developing and monitoring neural nets, including large language models. This includes both tools for evaluation of LLMs and LLM-based applications with _TruLens-Eval_ and deep learning explainability with _TruLens-Explain_. _TruLens-Eval_ and _TruLens-Explain_ are housed in separate packages and can be used independently.

Ollamac

Ollamac is a macOS app designed for interacting with Ollama models. It is optimized for macOS, allowing users to easily use any model from the Ollama library. The app features a user-friendly interface, chat archive for saving interactions, and real-time communication using HTTP streaming technology. Ollamac is open-source, enabling users to contribute to its development and enhance its capabilities. It requires macOS 14 or later and the Ollama system to be installed on the user's Mac with at least one Ollama model downloaded.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

tuff

Tuff is a local-first, AI-native, and infinitely extensible desktop command center designed to enhance workflow efficiency. It offers a seamless integration of core utilities, AI-powered search, contextual intelligence, and extensibility through custom plugins. With a beautiful UI design, rich functionality, simple operations, and a focus on security and reliability, Tuff provides users with a cross-platform desktop software that is easy to use and offers a good user experience.

AI-Studio

MindWork AI Studio is a desktop application that provides a unified chat interface for Large Language Models (LLMs). It is free to use for personal and commercial purposes, offers independence in choosing LLM providers, provides unrestricted usage through the providers API, and is cost-effective with pay-as-you-go pricing. The app prioritizes privacy, flexibility, minimal storage and memory usage, and low impact on system resources. Users can support the project through monthly contributions or one-time donations, with opportunities for companies to sponsor the project for public relations and marketing benefits. Planned features include support for more LLM providers, system prompts integration, text replacement for privacy, and advanced interactions tailored for various use cases.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

Alpaca

Alpaca is an Ollama client for managing and chatting with multiple AI models. It offers a user-friendly way to interact with local AI and third-party providers like Gemini and ChatGPT. The open-source tool supports features such as multiple model conversations, image and document recognition, code highlighting, notifications, import/export chats, and more.

anubis

Anubis weighs the soul of your connection using a sha256 proof-of-work challenge to protect upstream resources from scraper bots. It may prevent your website from being indexed by some search engines, serving as a nuclear response to aggressive AI scraper bots. Anubis is an alternative to using Cloudflare for origin protection in cases where Cloudflare is not feasible or preferred.

ComfyUI_VLM_nodes

ComfyUI_VLM_nodes is a repository containing various nodes for utilizing Vision Language Models (VLMs) and Language Models (LLMs). The repository provides nodes for tasks such as structured output generation, image to music conversion, LLM prompt generation, automatic prompt generation, and more. Users can integrate different models like InternLM-XComposer2-VL, UForm-Gen2, Kosmos-2, moondream1, moondream2, JoyTag, and Chat Musician. The nodes support features like extracting keywords, generating prompts, suggesting prompts, and obtaining structured outputs. The repository includes examples and instructions for using the nodes effectively.

For similar tasks

AI-Catalog

AI-Catalog is a curated list of AI tools, platforms, and resources across various domains. It serves as a comprehensive repository for users to discover and explore a wide range of AI applications. The catalog includes tools for tasks such as text-to-image generation, summarization, prompt generation, writing assistance, code assistance, developer tools, low code/no code tools, audio editing, video generation, 3D modeling, search engines, chatbots, email assistants, fun tools, gaming, music generation, presentation tools, website builders, education assistants, autonomous AI agents, photo editing, AI extensions, deep face/deep fake detection, text-to-speech, startup tools, SQL-related AI tools, education tools, and text-to-video conversion.

copywriterproai-backend

CopywriterProAI is the world's first open-source AI writing platform for SEO and Ad Copy. The backend repository powers the AI capabilities and manages content processing for smooth operation. It provides an AI writing assistant that works behind the scenes to assist users in content creation.

Sidekick

Sidekick is a native LLM application for macOS that allows users to chat with a local language model to retrieve information from files, folders, and websites without the need for additional software installation. It operates offline, ensuring data privacy and security. Sidekick offers features such as resource access, image generation, inline writing assistance, advanced markdown rendering, fast generation speeds, and more. The tool aims to provide a simple and powerful solution for accessing local, private models with context awareness of user files and content on the web.

AIWritingCompanion

AIWritingCompanion is a lightweight and versatile browser extension designed to translate text within input fields. It offers universal compatibility, multiple activation methods, and support for various translation providers like Gemini, OpenAI, and WebAI to API. Users can install it via CRX file or Git, set API key, and use it for automatic translation or via shortcut. The tool is suitable for writers, translators, students, researchers, and bloggers. AI keywords include writing assistant, translation tool, browser extension, language translation, and text translator. Users can use it for tasks like translate text, assist in writing, simplify content, check language accuracy, and enhance communication.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

For similar jobs

SLR-FC

This repository provides a comprehensive collection of AI tools and resources to enhance literature reviews. It includes a curated list of AI tools for various tasks, such as identifying research gaps, discovering relevant papers, visualizing paper content, and summarizing text. Additionally, the repository offers materials on generative AI, effective prompts, copywriting, image creation, and showcases of AI capabilities. By leveraging these tools and resources, researchers can streamline their literature review process, gain deeper insights from scholarly literature, and improve the quality of their research outputs.

paper-ai

Paper-ai is a tool that helps you write papers using artificial intelligence. It provides features such as AI writing assistance, reference searching, and editing and formatting tools. With Paper-ai, you can quickly and easily create high-quality papers.

paper-qa

PaperQA is a minimal package for question and answering from PDFs or text files, providing very good answers with in-text citations. It uses OpenAI Embeddings to embed and search documents, and follows a process of embedding docs and queries, searching for top passages, creating summaries, scoring and selecting relevant summaries, putting summaries into prompt, and generating answers. Users can customize prompts and use various models for embeddings and LLMs. The tool can be used asynchronously and supports adding documents from paths, files, or URLs.

ChatData

ChatData is a robust chat-with-documents application designed to extract information and provide answers by querying the MyScale free knowledge base or uploaded documents. It leverages the Retrieval Augmented Generation (RAG) framework, millions of Wikipedia pages, and arXiv papers. Features include self-querying retriever, VectorSQL, session management, and building a personalized knowledge base. Users can effortlessly navigate vast data, explore academic papers, and research documents. ChatData empowers researchers, students, and knowledge enthusiasts to unlock the true potential of information retrieval.

noScribe

noScribe is an AI-based software designed for automated audio transcription, specifically tailored for transcribing interviews for qualitative social research or journalistic purposes. It is a free and open-source tool that runs locally on the user's computer, ensuring data privacy. The software can differentiate between speakers and supports transcription in 99 languages. It includes a user-friendly editor for reviewing and correcting transcripts. Developed by Kai Dröge, a PhD in sociology with a background in computer science, noScribe aims to streamline the transcription process and enhance the efficiency of qualitative analysis.

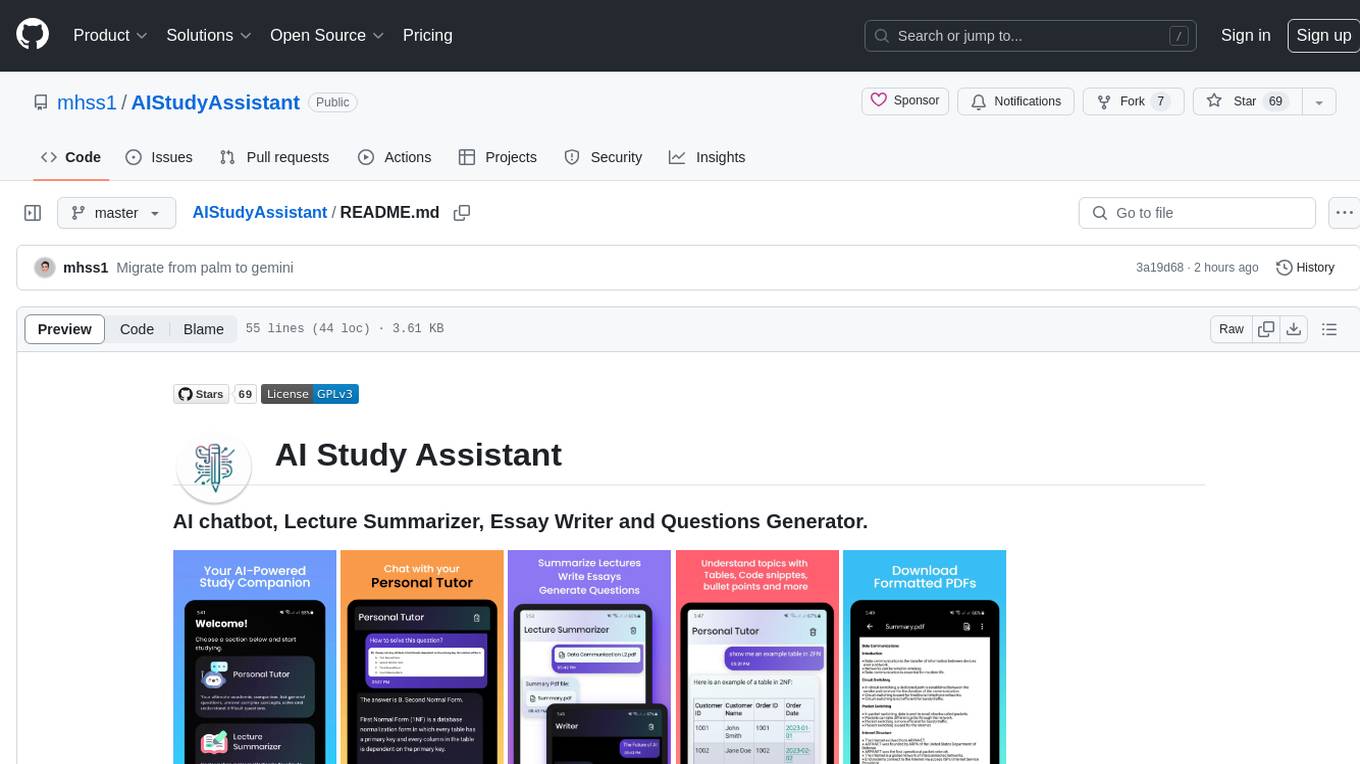

AIStudyAssistant

AI Study Assistant is an app designed to enhance learning experience and boost academic performance. It serves as a personal tutor, lecture summarizer, writer, and question generator powered by Google PaLM 2. Features include interacting with an AI chatbot, summarizing lectures, generating essays, and creating practice questions. The app is built using 100% Kotlin, Jetpack Compose, Clean Architecture, and MVVM design pattern, with technologies like Ktor, Room DB, Hilt, and Kotlin coroutines. AI Study Assistant aims to provide comprehensive AI-powered assistance for students in various academic tasks.

data-to-paper

Data-to-paper is an AI-driven framework designed to guide users through the process of conducting end-to-end scientific research, starting from raw data to the creation of comprehensive and human-verifiable research papers. The framework leverages a combination of LLM and rule-based agents to assist in tasks such as hypothesis generation, literature search, data analysis, result interpretation, and paper writing. It aims to accelerate research while maintaining key scientific values like transparency, traceability, and verifiability. The framework is field-agnostic, supports both open-goal and fixed-goal research, creates data-chained manuscripts, involves human-in-the-loop interaction, and allows for transparent replay of the research process.

k2

K2 (GeoLLaMA) is a large language model for geoscience, trained on geoscience literature and fine-tuned with knowledge-intensive instruction data. It outperforms baseline models on objective and subjective tasks. The repository provides K2 weights, core data of GeoSignal, GeoBench benchmark, and code for further pretraining and instruction tuning. The model is available on Hugging Face for use. The project aims to create larger and more powerful geoscience language models in the future.