airframe

Essential Building Blocks for Scala

Stars: 632

Airframe is a set of essential building blocks for developing applications in Scala. It includes logging, object serialization using JSON or MessagePack, dependency injection, http server/client with RPC support, functional testing with AirSpec, and more.

README:

Airframe https://wvlet.org/airframe is essential building blocks for developing applications in Scala, including logging, object serialization using JSON or MessagePack, dependency injection, http server/client with RPC support, functional testing with AirSpec, etc.

-

Airframe DI: A Dependency Injection Library Tailored to Scala

-

Airframe RPC: A Framework for Using Scala Both for Frontend and Backend Programming

Airframe uses Scala 3 as the default Scala version. To use Scala 2.x versions, run ++ 2.12 or ++ 2.13 in the sbt console.

For every PR, release-drafter will automatically label PRs using the rules defined in .github/release-drafter.yml.

To publish a new version, first, create a new release tag as follows:

$ git switch main

$ git pull

$ ruby ./scripts/release.rbThis step will update docs/release-noteds.md, push a new git tag to the GitHub, and create a new GitHub relese note. After that, the artifacts will be published to Sonatype (a.k.a. Maven Central). It usually takes 10-30 minutes.

Note: Do not create a new tag from GitHub release pages, because it will not trigger the GitHub Actions for the release.

When changing some interfaces, binary compatibility should be checked so as not to break applications using older versions of Airframe. In build.sbt, set AIRFRAME_BINARY_COMPAT_VERSION to the previous version of Airframe as a comparison target. Then run sbt mimaReportBinaryIssues to check the binary compatibility.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for airframe

Similar Open Source Tools

airframe

Airframe is a set of essential building blocks for developing applications in Scala. It includes logging, object serialization using JSON or MessagePack, dependency injection, http server/client with RPC support, functional testing with AirSpec, and more.

starwhale

Starwhale is an MLOps/LLMOps platform that brings efficiency and standardization to machine learning operations. It streamlines the model development lifecycle, enabling teams to optimize workflows around key areas like model building, evaluation, release, and fine-tuning. Starwhale abstracts Model, Runtime, and Dataset as first-class citizens, providing tailored capabilities for common workflow scenarios including Models Evaluation, Live Demo, and LLM Fine-tuning. It is an open-source platform designed for clarity and ease of use, empowering developers to build customized MLOps features tailored to their needs.

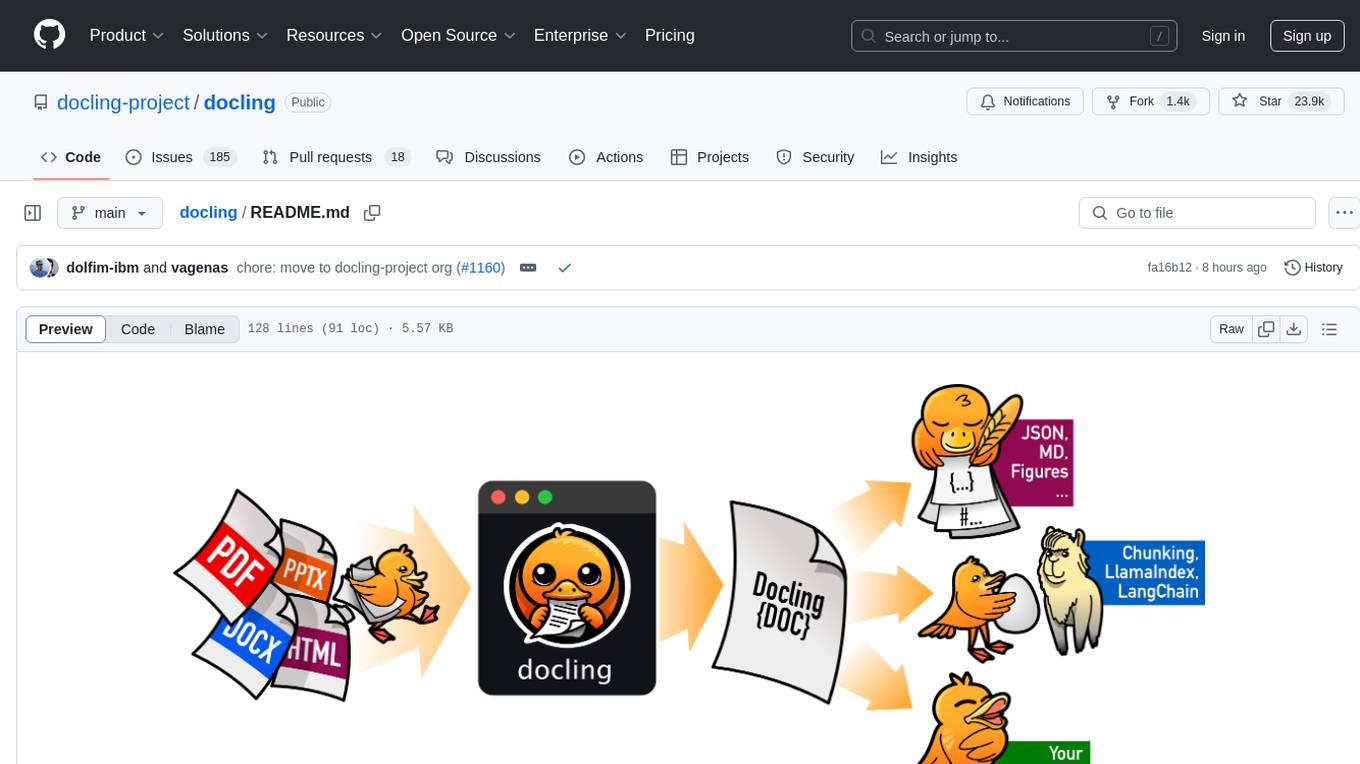

docling

Docling simplifies document processing, parsing diverse formats including advanced PDF understanding, and providing seamless integrations with the general AI ecosystem. It offers features such as parsing multiple document formats, advanced PDF understanding, unified DoclingDocument representation format, various export formats, local execution capabilities, plug-and-play integrations with agentic AI tools, extensive OCR support, and a simple CLI. Coming soon features include metadata extraction, visual language models, chart understanding, and complex chemistry understanding. Docling is installed via pip and works on macOS, Linux, and Windows environments. It provides detailed documentation, examples, integrations with popular frameworks, and support through the discussion section. The codebase is under the MIT license and has been developed by IBM.

anyquery

Anyquery is a SQL query engine built on SQLite that allows users to run SQL queries on various data sources like files, databases, and apps. It can connect to LLMs to access data and act as a MySQL server for running queries. The tool is extensible through plugins and supports various installation methods like Homebrew, APT, YUM/DNF, Scoop, Winget, and Chocolatey.

tock

Tock is an open conversational AI platform for building bots. It offers a natural language processing open source stack compatible with various tools, a user interface for building stories and analytics, a conversational DSL for different programming languages, built-in connectors for text/voice channels, toolkits for custom web/mobile integration, and the ability to deploy anywhere in the cloud or on-premise with Docker.

modelence

Modelence is an all-in-one TypeScript framework for startups shipping production apps, aiming to eliminate boilerplate for standard web app features. It provides authentication, database setup, cron jobs, AI observability, and email functionalities. Modelence requires Node.js 20.20 or higher. Developers can create projects, install dependencies, and start the development server quickly. For local development, contributors can clone the repository, install dependencies, build the package, and test changes in a real application. Modelence offers examples for further guidance.

cognee

Cognee is an open-source framework designed for creating self-improving deterministic outputs for Large Language Models (LLMs) using graphs, LLMs, and vector retrieval. It provides a platform for AI engineers to enhance their models and generate more accurate results. Users can leverage Cognee to add new information, utilize LLMs for knowledge creation, and query the system for relevant knowledge. The tool supports various LLM providers and offers flexibility in adding different data types, such as text files or directories. Cognee aims to streamline the process of working with LLMs and improving AI models for better performance and efficiency.

openmeter

OpenMeter is a real-time and scalable usage metering tool for AI, usage-based billing, infrastructure, and IoT use cases. It provides a REST API for integrations and offers client SDKs in Node.js, Python, Go, and Web. OpenMeter is licensed under the Apache 2.0 License.

langfuse-python

Langfuse Python SDK is a software development kit that provides tools and functionalities for integrating with Langfuse's language processing services. It offers decorators for observing code behavior, low-level SDK for tracing, and wrappers for accessing Langfuse's public API. The SDK was recently rewritten in version 2, released on December 17, 2023, with detailed documentation available on the official website. It also supports integrations with OpenAI SDK, LlamaIndex, and LangChain for enhanced language processing capabilities.

lunary

Lunary is an open-source observability and prompt platform for Large Language Models (LLMs). It provides a suite of features to help AI developers take their applications into production, including analytics, monitoring, prompt templates, fine-tuning dataset creation, chat and feedback tracking, and evaluations. Lunary is designed to be usable with any model, not just OpenAI, and is easy to integrate and self-host.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

brain4j

Brain4J is a lightweight, performant, and open-source machine learning framework for Java. Designed with portability and speed in mind, it is optimized for high performance and ideal for those looking to implement machine learning solutions in pure Java. The framework provides tools and functionalities to facilitate the development of machine learning models within Java applications, offering ease of use and efficiency.

obs-localvocal

LocalVocal is a Speech AI assistant OBS Plugin that enables users to transcribe speech into text and translate it into any language locally on their machine. The plugin runs OpenAI's Whisper for real-time speech processing and prediction. It supports features like transcribing audio in real-time, displaying captions on screen, sending captions to files, syncing captions with recordings, and translating captions to major languages. Users can bring their own Whisper model, filter or replace captions, and experience partial transcriptions for streaming. The plugin is privacy-focused, requiring no GPU, cloud costs, network, or downtime.

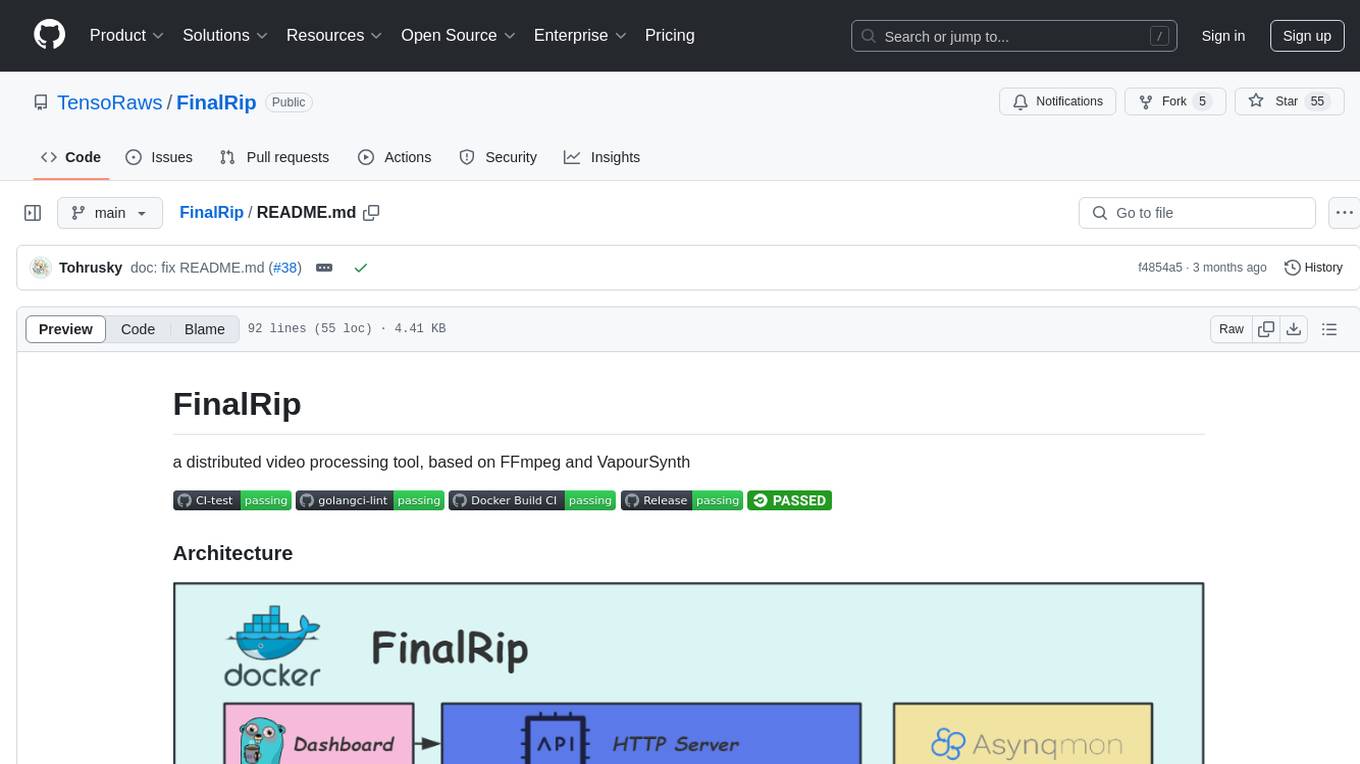

FinalRip

FinalRip is a distributed video processing tool based on FFmpeg and VapourSynth. It cuts the original video into multiple clips, processes each clip in parallel, and merges them into the final video. Users can deploy the system in a distributed way, configure settings via environment variables or remote config files, and develop/test scripts in the vs-playground environment. It supports Nvidia GPU, AMD GPU with ROCm support, and provides a dashboard for selecting compatible scripts to process videos.

ragstack-ai

RAGStack is an out-of-the-box solution simplifying Retrieval Augmented Generation (RAG) in GenAI apps. RAGStack includes the best open-source for implementing RAG, giving developers a comprehensive Gen AI Stack leveraging LangChain, CassIO, and more. RAGStack leverages the LangChain ecosystem and is fully compatible with LangSmith for monitoring your AI deployments.

For similar tasks

airframe

Airframe is a set of essential building blocks for developing applications in Scala. It includes logging, object serialization using JSON or MessagePack, dependency injection, http server/client with RPC support, functional testing with AirSpec, and more.

airlift

Airlift is a framework for building REST services in Java. It provides a simple, light-weight package that includes built-in support for configuration, metrics, logging, dependency injection, and more. Airlift allows you to focus on building production-quality web services quickly by leveraging stable, mature libraries from the Java ecosystem. It aims to streamline the development process without imposing a large, proprietary framework.

MCP-Nest

A NestJS module to effortlessly expose tools, resources, and prompts for AI using the Model Context Protocol (MCP). It allows defining tools, resources, and prompts in a familiar NestJS way, supporting multi-transport, tool validation, interactive tool calls, request context access, fine-grained authorization, resource serving, dynamic resources, prompt templates, guard-based authentication, dependency injection, server mutation, and instrumentation. It provides features for building ChatGPT widgets and MCP apps.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.