langfuse-python

🪢 Langfuse Python SDK - Instrument your LLM app with decorators or low-level SDK and get detailed tracing/observability. Works with any LLM or framework

Stars: 342

Langfuse Python SDK is a software development kit that provides tools and functionalities for integrating with Langfuse's language processing services. It offers decorators for observing code behavior, low-level SDK for tracing, and wrappers for accessing Langfuse's public API. The SDK was recently rewritten in version 2, released on December 17, 2023, with detailed documentation available on the official website. It also supports integrations with OpenAI SDK, LlamaIndex, and LangChain for enhanced language processing capabilities.

README:

[!IMPORTANT] The SDK was rewritten in v3 and released in June 2025. Refer to the v3 migration guide for instructions on updating your code.

pip install langfuse

Please see our docs for detailed information on this SDK.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for langfuse-python

Similar Open Source Tools

langfuse-python

Langfuse Python SDK is a software development kit that provides tools and functionalities for integrating with Langfuse's language processing services. It offers decorators for observing code behavior, low-level SDK for tracing, and wrappers for accessing Langfuse's public API. The SDK was recently rewritten in version 2, released on December 17, 2023, with detailed documentation available on the official website. It also supports integrations with OpenAI SDK, LlamaIndex, and LangChain for enhanced language processing capabilities.

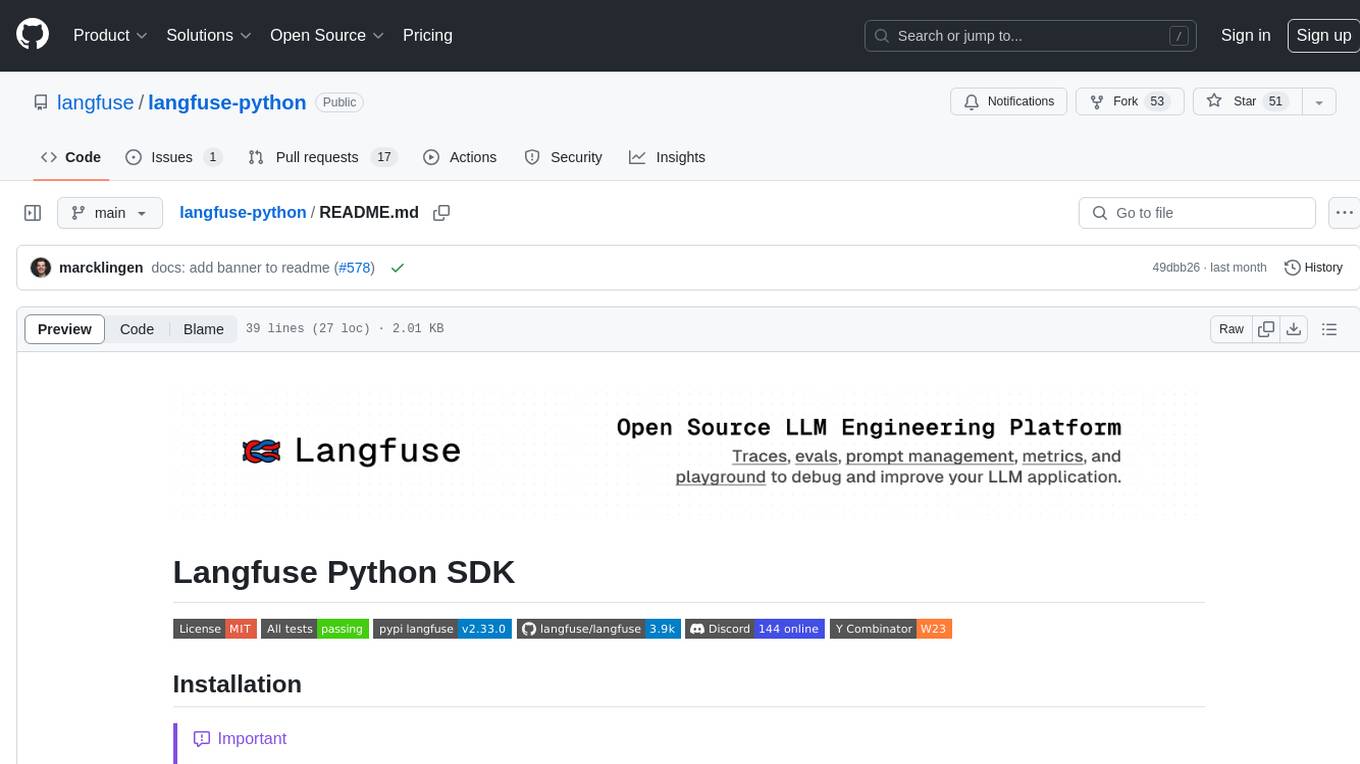

docling

Docling simplifies document processing, parsing diverse formats including advanced PDF understanding, and providing seamless integrations with the general AI ecosystem. It offers features such as parsing multiple document formats, advanced PDF understanding, unified DoclingDocument representation format, various export formats, local execution capabilities, plug-and-play integrations with agentic AI tools, extensive OCR support, and a simple CLI. Coming soon features include metadata extraction, visual language models, chart understanding, and complex chemistry understanding. Docling is installed via pip and works on macOS, Linux, and Windows environments. It provides detailed documentation, examples, integrations with popular frameworks, and support through the discussion section. The codebase is under the MIT license and has been developed by IBM.

docling

Docling is a tool that bundles PDF document conversion to JSON and Markdown in an easy, self-contained package. It can convert any PDF document to JSON or Markdown format, understand detailed page layout, reading order, recover table structures, extract metadata such as title, authors, references, and language, and optionally apply OCR for scanned PDFs. The tool is designed to be stable, lightning fast, and suitable for macOS and Linux environments.

software-agent-sdk

The OpenHands Software Agent SDK is a set of Python and REST APIs for building agents that work with code. It allows users to perform one-off tasks, routine maintenance tasks, and major tasks involving multiple agents. Agents can use the local machine or run in ephemeral workspaces like Docker or Kubernetes. The SDK can also be used to create new developer experiences, powering tools like the OpenHands CLI and OpenHands Cloud.

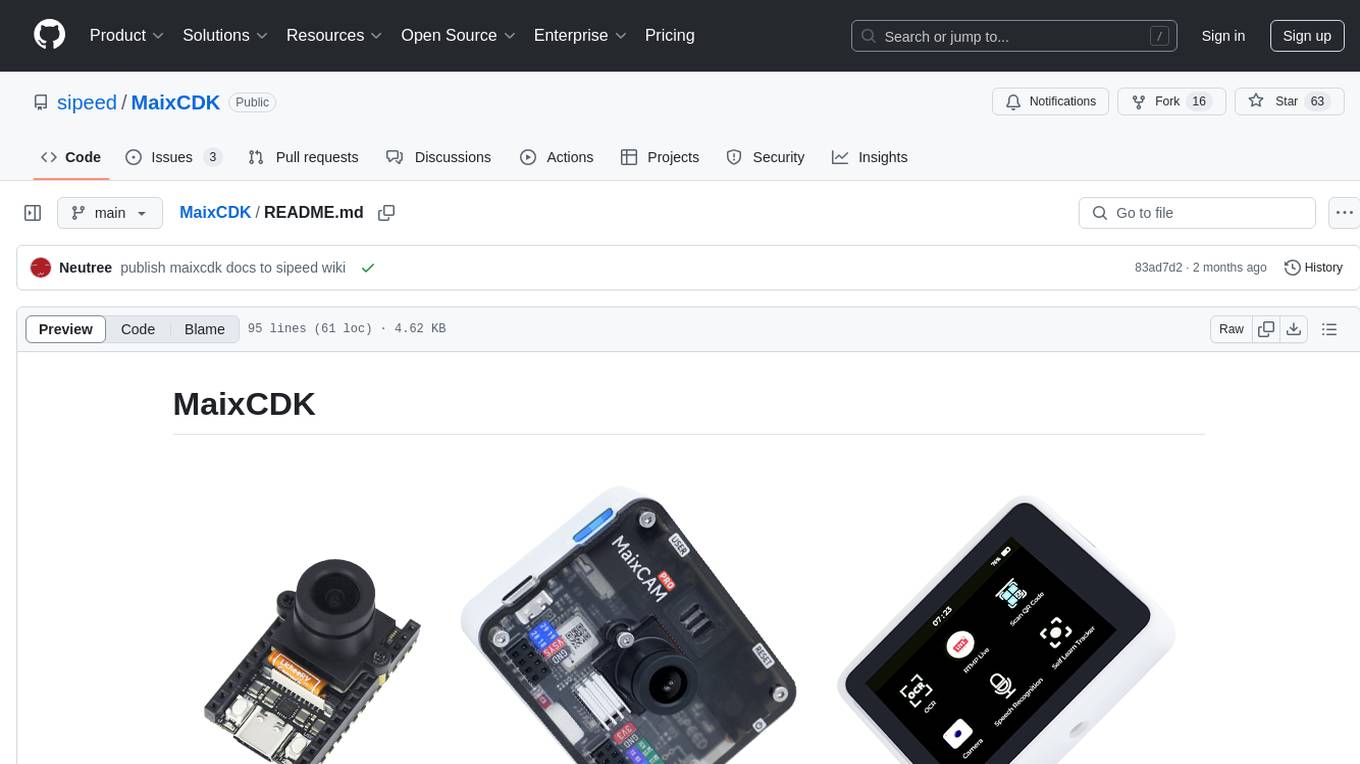

MaixCDK

MaixCDK (Maix C/CPP Development Kit) is a C/C++ development kit that integrates practical functions such as AI, machine vision, and IoT. It provides easy-to-use encapsulation for quickly building projects in vision, artificial intelligence, IoT, robotics, industrial cameras, and more. It supports hardware-accelerated execution of AI models, common vision algorithms, OpenCV, and interfaces for peripheral operations. MaixCDK offers cross-platform support, easy-to-use API, simple environment setup, online debugging, and a complete ecosystem including MaixPy and MaixVision. Supported devices include Sipeed MaixCAM, Sipeed MaixCAM-Pro, and partial support for Common Linux.

tock

Tock is an open conversational AI platform for building bots. It offers a natural language processing open source stack compatible with various tools, a user interface for building stories and analytics, a conversational DSL for different programming languages, built-in connectors for text/voice channels, toolkits for custom web/mobile integration, and the ability to deploy anywhere in the cloud or on-premise with Docker.

autoflow

AutoFlow is an open source graph rag based knowledge base tool built on top of TiDB Vector and LlamaIndex and DSPy. It features a Perplexity-style Conversational Search page and an Embeddable JavaScript Snippet for easy integration into websites. The tool allows for comprehensive coverage and streamlined search processes through sitemap URL scraping.

gpt_mobile

GPT Mobile is a chat assistant for Android that allows users to chat with multiple models at once. It supports various platforms such as OpenAI GPT, Anthropic Claude, and Google Gemini. Users can customize temperature, top p (Nucleus sampling), and system prompt. The app features local chat history, Material You style UI, dark mode support, and per app language setting for Android 13+. It is built using 100% Kotlin, Jetpack Compose, and follows a modern app architecture for Android developers.

starwhale

Starwhale is an MLOps/LLMOps platform that brings efficiency and standardization to machine learning operations. It streamlines the model development lifecycle, enabling teams to optimize workflows around key areas like model building, evaluation, release, and fine-tuning. Starwhale abstracts Model, Runtime, and Dataset as first-class citizens, providing tailored capabilities for common workflow scenarios including Models Evaluation, Live Demo, and LLM Fine-tuning. It is an open-source platform designed for clarity and ease of use, empowering developers to build customized MLOps features tailored to their needs.

devopness

Devopness is a tool that simplifies the management of cloud applications and multi-cloud infrastructure for both AI agents and humans. It provides role-based access control, permission management, cost control, and visibility into DevOps and CI/CD workflows. The tool allows provisioning and deployment to major cloud providers like AWS, Azure, DigitalOcean, and GCP. Devopness aims to make software deployment and cloud infrastructure management accessible and affordable to all involved in software projects.

rikkahub

RikkaHub is a native Android LLM chat client that supports switching between different providers for conversations. It features a modern Android app design with dark mode, support for multiple provider types, multimodal input support, Markdown rendering, search capabilities, prompt variables, QR code export/import, agent customization, ChatGPT-like memory feature, AI translation, and custom HTTP request headers and bodies. The project is developed using Kotlin, Koin, Jetpack Compose, DataStore, Room, Coil, Material You, Navigation Compose, Okhttp, kotlinx.serialization, and compose-icons/lucide.

midscene

Midscene.js is an AI-powered automation SDK that allows users to control web pages, perform assertions, and extract data in JSON format using natural language. It offers features such as natural language interaction, understanding UI and providing responses in JSON, intuitive assertion based on AI understanding, compatibility with public multimodal LLMs like GPT-4o, visualization tool for easy debugging, and a brand new experience in automation development.

macai

Macai is a native macOS client for interacting with modern AI tools, such as ChatGPT and Ollama. It features organized chats with custom system messages, system-defined light/dark themes, backup and restore functionality, customizable context size, support for any model with a compatible API, formatted code blocks and tables, multiple chat tabs, CoreData data storage, streamed responses, and automatic chat name generation. Macai is in active development, with contributions welcome.

LLM-Powered-RAG-System

LLM-Powered-RAG-System is a comprehensive repository containing frameworks, projects, components, evaluation tools, papers, blogs, and other resources related to Retrieval-Augmented Generation (RAG) systems powered by Large Language Models (LLMs). The repository includes various frameworks for building applications with LLMs, data frameworks, modular graph-based RAG systems, dense retrieval models, and efficient retrieval augmentation and generation frameworks. It also features projects such as personal productivity assistants, knowledge-based platforms, chatbots, question and answer systems, and code assistants. Additionally, the repository provides components for interacting with documents, databases, and optimization methods using ML and LLM technologies. Evaluation frameworks, papers, blogs, and other resources related to RAG systems are also included.

MinerU

MinerU is a tool that converts PDFs into machine-readable formats, allowing for easy extraction into any format. It focuses on solving symbol conversion issues in scientific literature and contributes to technological development. It removes headers, footers, footnotes, and page numbers, preserves document structure, extracts images, tables, and formulas, and supports OCR in 109 languages. MinerU supports various visualization results, runs on CPU/GPU/NPU, and is compatible with Windows, Linux, and Mac platforms.

brain4j

Brain4J is a lightweight, performant, and open-source machine learning framework for Java. Designed with portability and speed in mind, it is optimized for high performance and ideal for those looking to implement machine learning solutions in pure Java. The framework provides tools and functionalities to facilitate the development of machine learning models within Java applications, offering ease of use and efficiency.

For similar tasks

langfuse-python

Langfuse Python SDK is a software development kit that provides tools and functionalities for integrating with Langfuse's language processing services. It offers decorators for observing code behavior, low-level SDK for tracing, and wrappers for accessing Langfuse's public API. The SDK was recently rewritten in version 2, released on December 17, 2023, with detailed documentation available on the official website. It also supports integrations with OpenAI SDK, LlamaIndex, and LangChain for enhanced language processing capabilities.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.