Best AI tools for injecting dependencies

5 - AI tool Sites

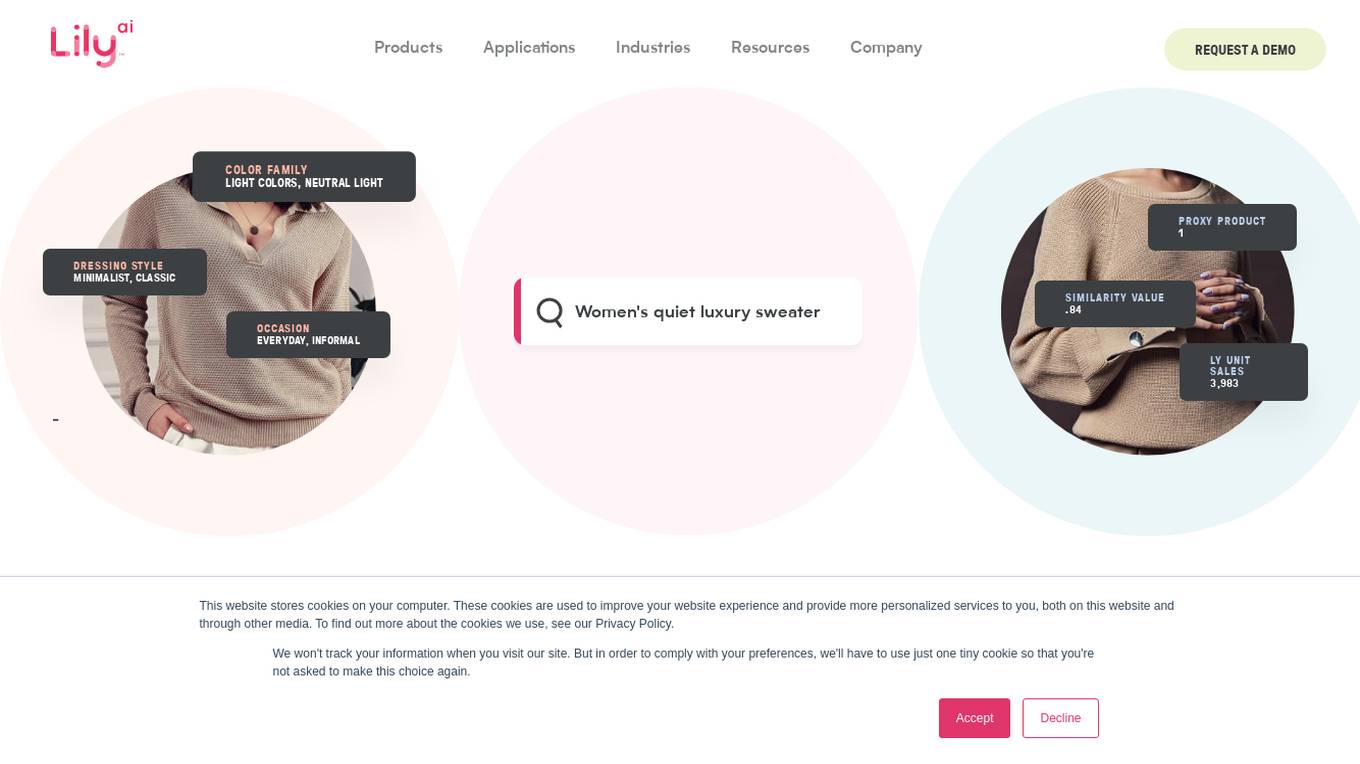

Lily AI

Lily AI is an e-commerce product discovery platform that helps brands increase sales and improve customer experience. It uses artificial intelligence to understand the language of customers and inject it across the retail ecosystem, from search to recommendations to demand forecasting. This helps retailers connect customers with the relevant products they're looking for, boost product discovery and conversion, and increase traffic, conversion, revenue, and brand loyalty.

Lily AI

Lily AI is an e-commerce product discovery platform that helps brands increase sales and improve customer experience. It uses artificial intelligence to understand the language of customers and inject it across the retail ecosystem, from search to recommendations to demand forecasting. Lily AI's platform is purpose-built for retail and turns qualitative product attributes into a universal, customer-centered mathematical language with unprecedented accuracy. This results in a depth and scale of attribution that no other solution can match.

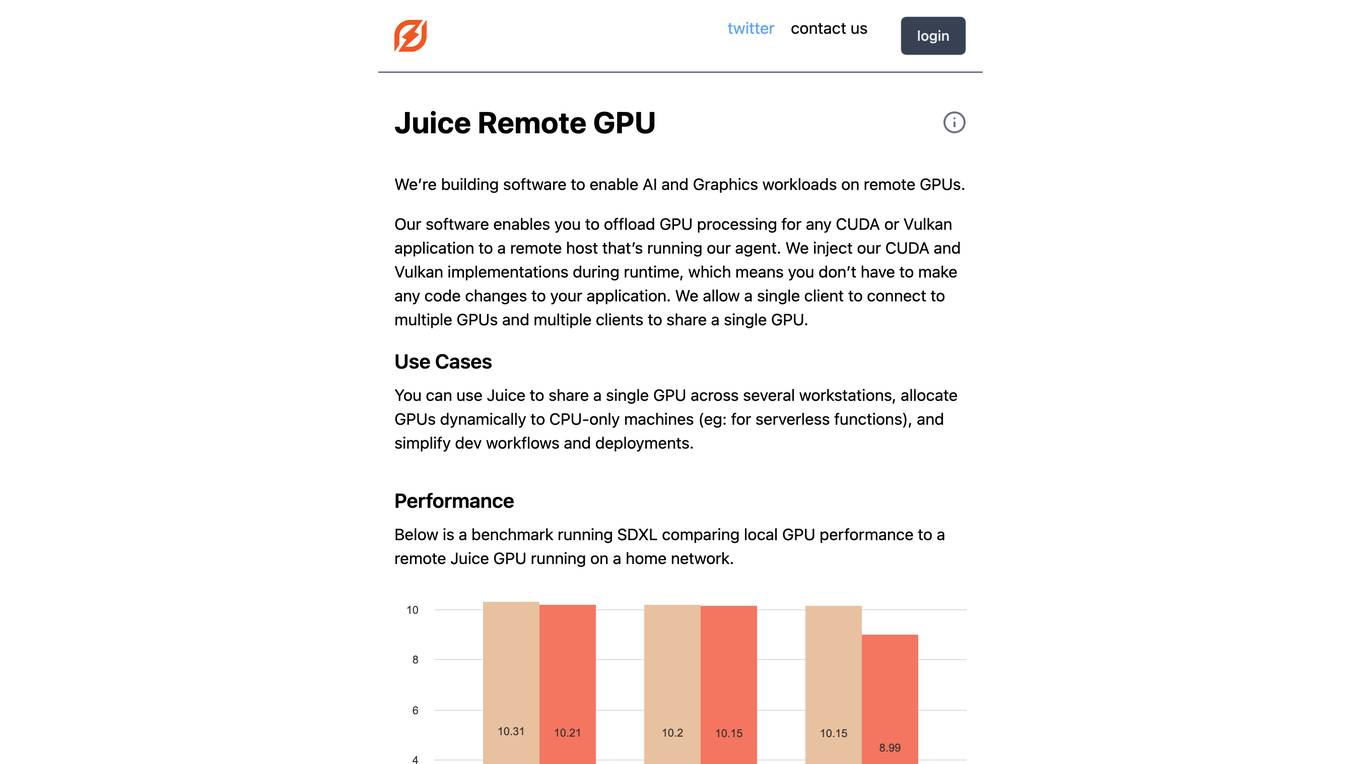

Juice Remote GPU

Juice Remote GPU is software that runs AI and graphics workloads on remote GPUs. It lets users offload GPU processing for any CUDA or Vulkan application to a remote host running the Juice agent. Because Juice injects its own CUDA and Vulkan implementations at runtime, the application needs no code changes. Juice supports multiple clients connecting to multiple GPUs, as well as multiple clients sharing a single GPU. Typical uses include sharing one GPU across several workstations, allocating GPUs dynamically to CPU-only machines, and simplifying development workflows and deployments. According to its developers, Juice performs within 5% of a local GPU when the client and the GPU host are in the same datacenter. It supports several APIs, including CUDA, Vulkan, DirectX, and OpenGL, and is compatible with PyTorch and TensorFlow. The team behind Juice Remote GPU consists of engineers from Meta, Intel, and the gaming industry.
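Runtime injection is what lets Juice avoid application code changes. Juice works at the level of native CUDA and Vulkan libraries, so the Python sketch below is only an analogy for the general idea of runtime API interposition. It is not how Juice is implemented, and every name in it (`LocalBackend`, `RemoteAgent`, `RemoteProxy`, `inject_remote`) is hypothetical. The application keeps calling the same interface, while an injector swaps the implementation for a proxy that serializes each call and hands it to a simulated remote agent in the same process.

```python
import json
from typing import Any, Callable, Dict, List


class LocalBackend:
    """Hypothetical stand-in for a GPU API that would normally run locally."""

    def vector_add(self, a: List[float], b: List[float]) -> List[float]:
        return [x + y for x, y in zip(a, b)]


class RemoteAgent:
    """Simulates the remote host: decodes a serialized call and executes it."""

    def __init__(self) -> None:
        self._backend = LocalBackend()
        self.calls_served = 0

    def handle(self, message: str) -> str:
        request = json.loads(message)
        method = getattr(self._backend, request["method"])
        self.calls_served += 1
        return json.dumps({"result": method(*request["args"])})


class RemoteProxy:
    """Offers the same methods as LocalBackend but forwards every call."""

    def __init__(self, send: Callable[[str], str]) -> None:
        self._send = send

    def __getattr__(self, name: str) -> Callable[..., Any]:
        # Only called for attributes not found normally, i.e. the API methods.
        def forward(*args: Any) -> Any:
            reply = self._send(json.dumps({"method": name, "args": list(args)}))
            return json.loads(reply)["result"]

        return forward


# The application resolves its backend through this table at call time.
BACKENDS: Dict[str, Any] = {"gpu": LocalBackend()}


def application() -> List[float]:
    """Unmodified application code: it never names a concrete backend."""
    return BACKENDS["gpu"].vector_add([1.0, 2.0], [3.0, 4.0])


def inject_remote(agent: RemoteAgent) -> None:
    """Swap in the forwarding proxy at runtime without touching application()."""
    BACKENDS["gpu"] = RemoteProxy(agent.handle)


if __name__ == "__main__":
    print(application())       # [4.0, 6.0], computed by LocalBackend
    agent = RemoteAgent()
    inject_remote(agent)
    print(application())       # [4.0, 6.0], now served by the simulated agent
    print(agent.calls_served)  # 1
```

In a real system the `send` callable would cross a network boundary, and the proxy would also have to move memory buffers and deal with errors and latency; the sketch leaves all of that out.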

Osmo

Osmo is an AI scent platform that aims to digitize the sense of smell, combining frontier AI with olfactory science to improve human health and wellbeing through fragrance. The platform uses modern AI tools to read, map, and write scents, which supports the discovery of new fragrance ingredients as well as applications in insect repellents, threat detection, and immersive experiences.

Bichos ID de Fucesa

Bichos ID de Fucesa is an AI tool for exploring and identifying insects, arachnids, and other arthropods. Users can browse the most-searched bugs, explore new discoveries made by the community, and view curated organisms. The platform aims to expand knowledge of the world of arthropods through AI-powered identification.

3 - Open Source AI Tools

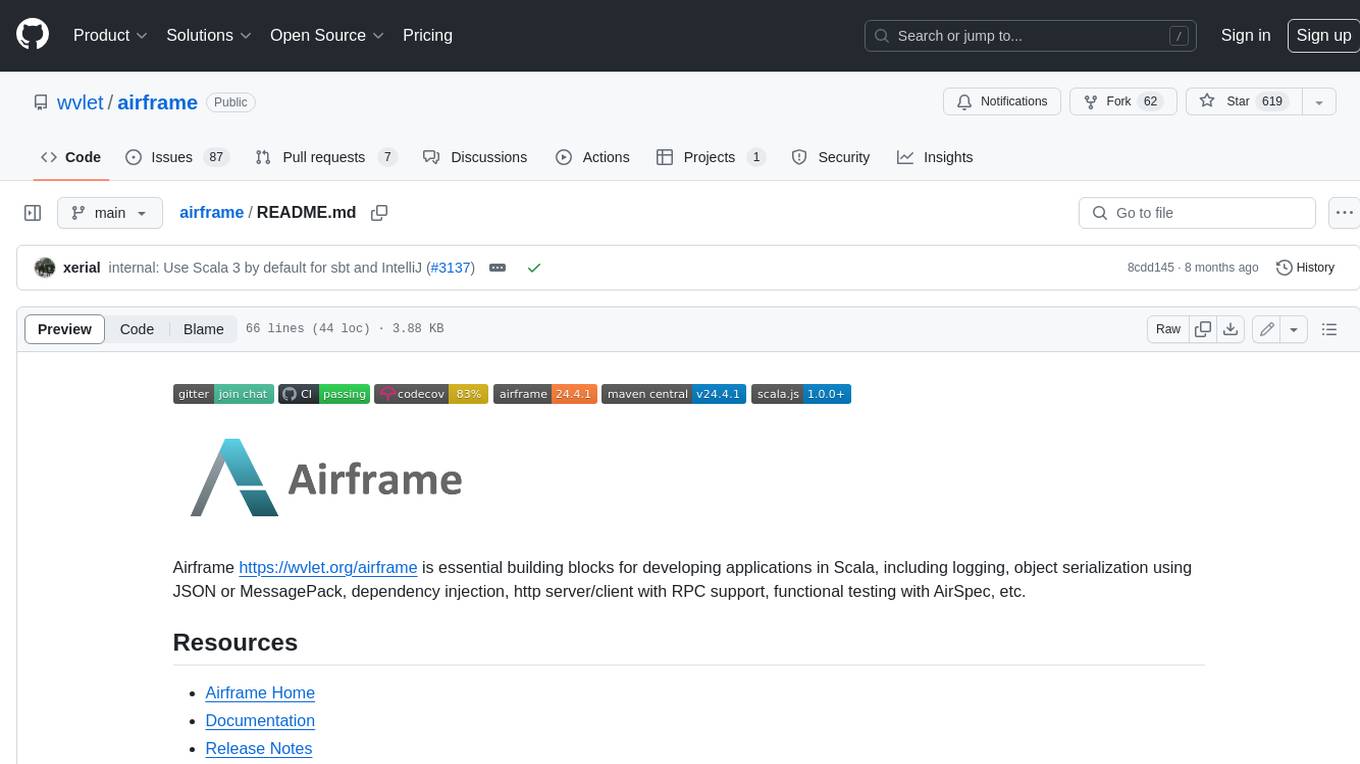

airframe

Airframe is a set of essential building blocks for developing applications in Scala. It includes logging, object serialization to JSON or MessagePack, dependency injection, an HTTP server and client with RPC support, functional testing with AirSpec, and more.
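Dependency injection, one of the building blocks listed above, can be illustrated without any framework. The Python sketch below does not reproduce Airframe's API (Airframe is a Scala library); `Container`, `bind`, and `resolve` are hypothetical names. The container reads constructor type hints, builds each dependency recursively, and falls back to an explicit provider when a type has been bound.

```python
from typing import Any, Callable, Dict, Type, TypeVar, get_type_hints

T = TypeVar("T")


class Container:
    """Hypothetical minimal DI container that resolves constructor type hints."""

    def __init__(self) -> None:
        self._providers: Dict[type, Callable[[], Any]] = {}
        self._instances: Dict[type, Any] = {}

    def bind(self, cls: type, provider: Callable[[], Any]) -> "Container":
        """Register an explicit provider for a type (e.g. for config values)."""
        self._providers[cls] = provider
        return self

    def resolve(self, cls: Type[T]) -> T:
        if cls in self._instances:  # reuse one instance per type
            return self._instances[cls]
        if cls in self._providers:
            instance = self._providers[cls]()
        else:
            hints = get_type_hints(cls.__init__)
            hints.pop("return", None)
            # Build each constructor dependency first, recursively.
            deps = {name: self.resolve(dep) for name, dep in hints.items()}
            instance = cls(**deps)
        self._instances[cls] = instance
        return instance


class Config:
    def __init__(self, url: str) -> None:
        self.url = url


class Database:
    def __init__(self, config: Config) -> None:
        self.config = config


class UserService:
    def __init__(self, db: Database) -> None:
        self.db = db


container = Container().bind(Config, lambda: Config("db.example.internal"))
service = container.resolve(UserService)
print(service.db.config.url)  # db.example.internal
```

Caching one instance per type is a design choice of this sketch, not a general rule. It also has no cycle detection, and a constructor parameter without a type hint is never resolved, so construction fails with a `TypeError`.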

airlift

Airlift is a framework for building REST services in Java. It provides a simple, lightweight package with built-in support for configuration, metrics, logging, dependency injection, and more. By relying on stable, mature libraries from the Java ecosystem, Airlift lets you focus on building production-quality web services quickly, streamlining development without imposing a large, proprietary framework.
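Airlift combines configuration with dependency injection. One common way to connect the two is to bind string properties to a typed configuration object and pass that object to a service through its constructor. The Python sketch below illustrates that pattern under stated assumptions: `HttpServerConfig`, `bind_config`, `HttpServer`, and the dotted key naming (`http.port` for the field `http_port`) are hypothetical and are not Airlift's API. Unknown keys are rejected so that a mistyped property fails at startup instead of being silently ignored.

```python
from dataclasses import dataclass, fields
from typing import Any, Mapping, Type, TypeVar

C = TypeVar("C")


@dataclass(frozen=True)
class HttpServerConfig:
    """Hypothetical config object; the field http_port maps to key http.port."""

    http_port: int = 8080
    http_host: str = "0.0.0.0"
    request_log_enabled: bool = False


def _convert(raw: str, target: Any) -> Any:
    """Convert a property string to the field's declared type."""
    if target is bool:
        lowered = raw.strip().lower()
        if lowered not in ("true", "false"):
            raise ValueError(f"not a boolean: {raw!r}")
        return lowered == "true"
    return target(raw)


def bind_config(cls: Type[C], properties: Mapping[str, str]) -> C:
    """Build a config object from string properties, rejecting unknown keys."""
    by_key = {f.name.replace("_", "."): f for f in fields(cls)}
    unknown = sorted(set(properties) - set(by_key))
    if unknown:
        raise ValueError(f"unknown configuration keys: {unknown}")
    values = {by_key[k].name: _convert(v, by_key[k].type) for k, v in properties.items()}
    return cls(**values)  # fields not given keep their defaults


class HttpServer:
    """The service receives its configuration through the constructor."""

    def __init__(self, config: HttpServerConfig) -> None:
        self.config = config

    def address(self) -> str:
        return f"{self.config.http_host}:{self.config.http_port}"


properties = {"http.port": "9090", "request.log.enabled": "true"}
server = HttpServer(bind_config(HttpServerConfig, properties))
print(server.address())  # 0.0.0.0:9090
```

Because `HttpServer` only sees a finished `HttpServerConfig`, a test can construct it directly with any configuration, without reading properties at all.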

MCP-Nest

MCP-Nest is a NestJS module for exposing tools, resources, and prompts to AI models through the Model Context Protocol (MCP). Tools, resources, and prompts are defined in the familiar NestJS style. Supported features include multiple transports, tool validation, interactive tool calls, request context access, fine-grained authorization, resource serving, dynamic resources, prompt templates, guard-based authentication, dependency injection, server mutation, and instrumentation. The module also provides features for building ChatGPT widgets and MCP apps.
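MCP messages are JSON-RPC 2.0 requests: a client lists the available tools with `tools/list` and invokes one with `tools/call`, passing the tool `name` and its `arguments`. The Python sketch below shows, under simplifying assumptions, how a server might validate those arguments against a tool's `inputSchema` and dispatch to a handler that depends on an injected service. It is not MCP-Nest's API (MCP-Nest is a NestJS/TypeScript library). `ToolServer`, `WeatherService`, and `build_server` are hypothetical, the validator checks only `required` keys and primitive `type`s rather than full JSON Schema, and mapping unknown methods to `-32601` and unknown tools or bad arguments to `-32602` is an assumption of the sketch.

```python
import json
from typing import Any, Callable, Dict, Optional

# Subset of JSON Schema primitive type names mapped to Python types.
JSON_TYPES: Dict[str, Any] = {"string": str, "number": (int, float), "integer": int,
                              "boolean": bool, "object": dict, "array": list}


def _validate(schema: Dict[str, Any], args: Any) -> Optional[str]:
    """Check required keys and primitive types; return an error message or None."""
    if not isinstance(args, dict):
        return "arguments must be an object"
    for key in schema.get("required", []):
        if key not in args:
            return f"missing required argument: {key}"
    for key, value in args.items():
        spec = schema.get("properties", {}).get(key)
        if spec is None:
            return f"unexpected argument: {key}"
        expected = spec["type"]
        # bool is a subclass of int in Python, so reject it for numeric types.
        if isinstance(value, bool) and expected in ("integer", "number"):
            return f"argument {key} must be {expected}"
        if not isinstance(value, JSON_TYPES[expected]):
            return f"argument {key} must be {expected}"
    return None


class ToolServer:
    """Hypothetical dispatcher for MCP-style tools/list and tools/call requests."""

    def __init__(self) -> None:
        self._tools: Dict[str, Dict[str, Any]] = {}

    def tool(self, name: str, description: str, input_schema: Dict[str, Any]) -> Callable:
        def register(fn: Callable[..., str]) -> Callable[..., str]:
            self._tools[name] = {"fn": fn, "description": description, "inputSchema": input_schema}
            return fn
        return register

    def handle(self, message: str) -> str:
        request = json.loads(message)
        rid, method = request.get("id"), request.get("method")
        params = request.get("params", {})
        if method == "tools/list":
            tools = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                     for n, t in self._tools.items()]
            return self._reply(rid, result={"tools": tools})
        if method == "tools/call":
            entry = self._tools.get(params.get("name"))
            if entry is None:
                return self._reply(rid, error=(-32602, f"unknown tool: {params.get('name')}"))
            args = params.get("arguments", {})
            problem = _validate(entry["inputSchema"], args)
            if problem is not None:
                return self._reply(rid, error=(-32602, problem))
            text = entry["fn"](**args)
            return self._reply(rid, result={"content": [{"type": "text", "text": text}], "isError": False})
        return self._reply(rid, error=(-32601, f"method not found: {method}"))

    @staticmethod
    def _reply(rid: Any, result: Any = None, error: Optional[tuple] = None) -> str:
        body: Dict[str, Any] = {"jsonrpc": "2.0", "id": rid}
        if error is not None:
            body["error"] = {"code": error[0], "message": error[1]}
        else:
            body["result"] = result
        return json.dumps(body)


class WeatherService:
    """Hypothetical dependency required by the tool handler."""

    def forecast(self, city: str) -> str:
        return f"Sunny in {city}"


def build_server(weather: WeatherService) -> ToolServer:
    """Wire the injected service into a tool handler."""
    server = ToolServer()

    @server.tool("get_forecast", "Return a short forecast for a city.",
                 {"type": "object", "properties": {"city": {"type": "string"}}, "required": ["city"]})
    def get_forecast(city: str) -> str:
        return weather.forecast(city)

    return server


server = build_server(WeatherService())
request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "get_forecast", "arguments": {"city": "Oslo"}}}
print(server.handle(json.dumps(request)))
```

Here the handler reaches `WeatherService` through a closure created in `build_server`; a framework with a DI container, such as NestJS, would resolve that dependency from the container instead.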