Best AI tools for Cloud Infrastructure Manager

20 - AI Tool Sites

Operant

Operant is a cloud-native runtime protection platform that offers instant visibility and control from infrastructure to APIs. It provides an AI security shield for applications, API threat protection, Kubernetes security, automatic microsegmentation, and DevSecOps solutions. Operant helps defend APIs, protect Kubernetes, and shield AI applications by detecting and blocking attacks in real time. It simplifies security for cloud-native environments and requires no instrumentation, application code changes, or integrations.

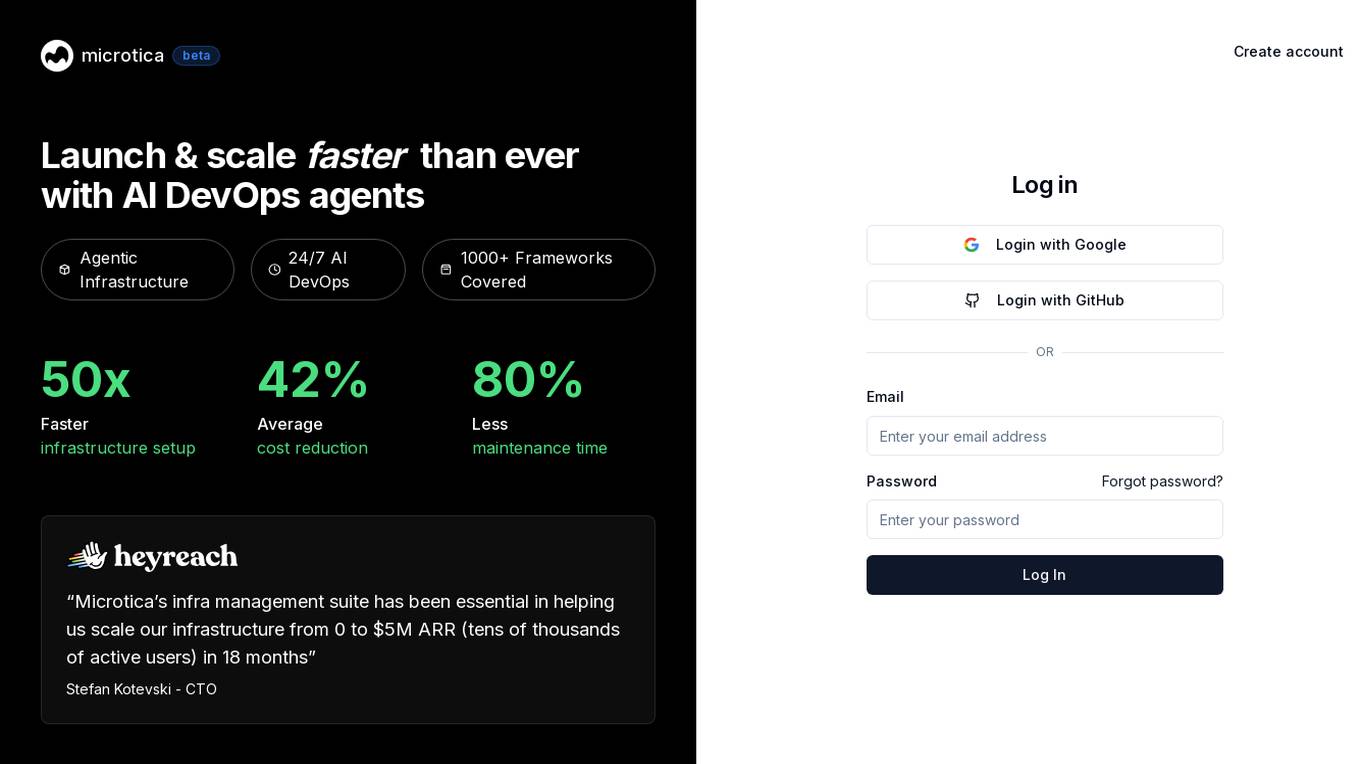

Microtica

Microtica is an AI-powered cloud delivery platform that offers a comprehensive suite of DevOps tools to help users build, deploy, and optimize their infrastructure efficiently. With features like AI Incident Investigator, AI Infrastructure Builder, Kubernetes deployment simplification, alert monitoring, pipeline automation, and cloud monitoring, Microtica aims to streamline the development and management processes for DevOps teams. The platform provides real-time insights, cost optimization suggestions, and guided deployments, making it a valuable tool for businesses looking to enhance their cloud infrastructure operations.

CloudDefense.AI

CloudDefense.AI is an industry-leading multi-layered Cloud Native Application Protection Platform (CNAPP) that safeguards cloud infrastructure and cloud-native apps with expertise, precision, and confidence. It offers comprehensive cloud security solutions, vulnerability management, compliance, and application security testing. The platform utilizes advanced AI technology to proactively detect and analyze real-time threats, ensuring robust protection for businesses against cyber threats.

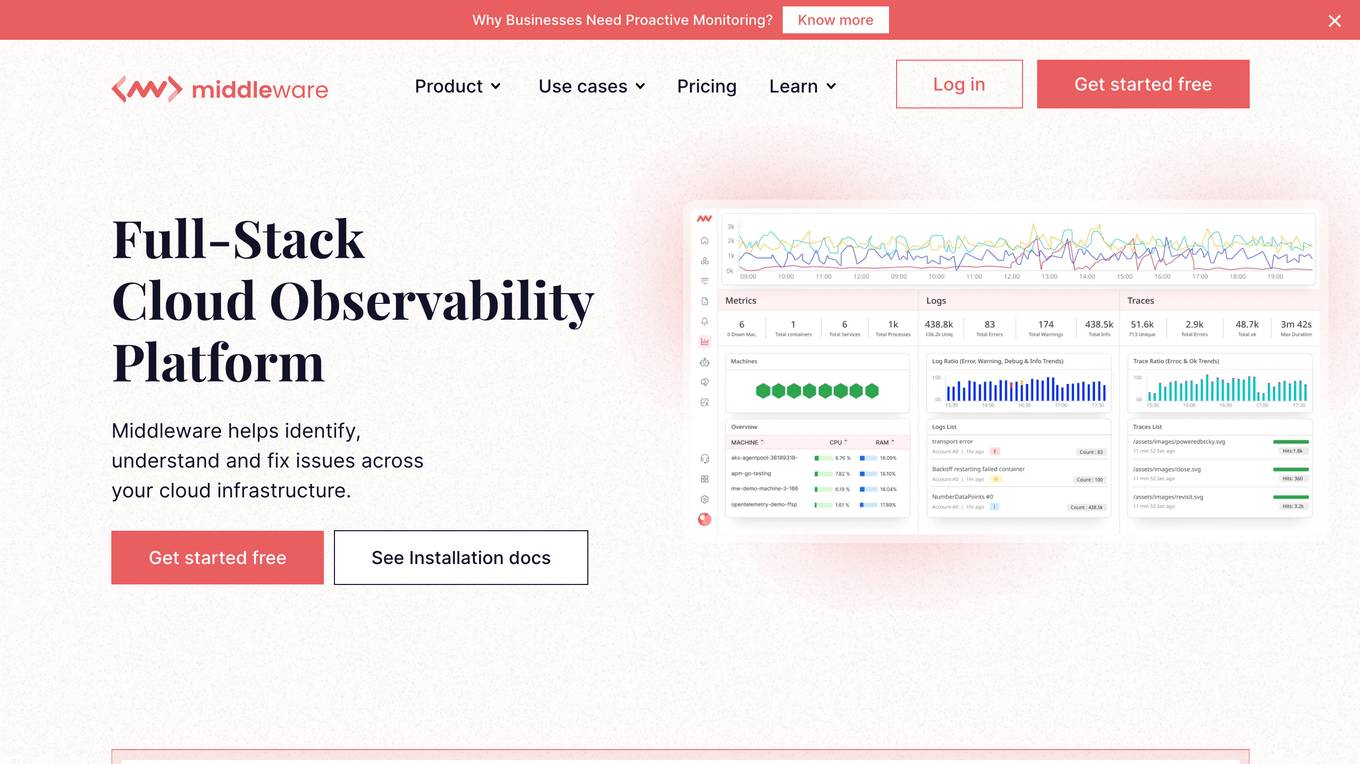

Cloud Observability Middleware

The website provides Full-Stack Cloud Observability services with a focus on Middleware. It offers comprehensive monitoring and analysis tools to help businesses optimize their cloud infrastructure performance. The platform enables users to gain insights into their middleware applications, identify bottlenecks, and improve overall system efficiency.

Microtica AI Deployment Tool

Microtica AI Deployment Tool is an advanced platform that leverages artificial intelligence to streamline the deployment process of cloud infrastructure. The tool offers features such as incident investigation with AI assistance, designing and deploying cloud infrastructure using natural language, and automated deployments. With Microtica, users can easily analyze logs, metrics, and system state to identify root causes and solutions, as well as get infrastructure-as-code and architecture diagrams. The platform aims to simplify the deployment process and enhance productivity by integrating AI capabilities into the workflow.

Nscale

Nscale is a full-stack, scalable, and sustainable AI cloud platform that offers a wide range of AI services and solutions. It provides services for developing, training, tuning, and deploying AI models using on-demand services. Nscale also offers serverless inference API endpoints, fine-tuning capabilities, private cloud solutions, and various GPU clusters engineered for AI. The platform aims to simplify the journey from AI model development to production, offering a marketplace for AI/ML tools and resources. Nscale's infrastructure includes data centers powered by renewable energy, high-performance GPU nodes, and optimized networking and storage solutions.

Reaktr.ai

Reaktr.ai is an AI-driven technology solutions provider that offers advanced AI automation services, predictive analytics, and sophisticated machine learning algorithms to help enterprises operate with agility and precision. The platform equips businesses with intelligent automation, enhanced security, and immersive experiences to drive growth, efficiency, and innovation. Reaktr.ai specializes in cloud management, cybersecurity, and AI services, providing solutions for data infrastructure, security testing, compliance, and more. With a commitment to redefining how enterprises operate, Reaktr.ai leverages AI capabilities to help businesses prosper in an AI-ready landscape.

Lacework

Lacework is a cloud security platform that provides comprehensive security solutions for DevOps, containers, and cloud environments. It offers features such as Code Security, Workload Protection, Identities and Entitlements management, Posture Management, Kubernetes Security, Data Posture Management, Infrastructure as Code security, Software Composition Analysis, Application Security Testing, and Edge Security. Lacework helps users secure their entire cloud infrastructure, prioritize risks, protect workloads, and stay compliant by leveraging AI-driven technologies and behavior-based threat detection. The platform helps automate compliance reporting, fix vulnerabilities, and reduce alerts, improving cloud security and operational efficiency.

Codimite

Codimite is an AI-assisted offshore development company that provides a range of services to help businesses accelerate their software development, reduce costs, and drive innovation. Codimite's team of experienced engineers and project managers use AI-powered tools and technologies to deliver exceptional results for their clients. The company's services include AI-assisted software development, cloud modernization, and data and artificial intelligence solutions.

GAPVelocity AI Modernization Studio

GAPVelocity AI Modernization Studio is an enterprise-grade modernization tool that efficiently migrates legacy applications to cutting-edge cloud-native technologies. The tool offers a hybrid AI approach, combining deterministic AI for precise code transformation with intelligent Generative AI tools for analysis, testing, and optimization. It provides deep scanning for dependencies, technical debt identification, and upgrade opportunities, along with automated assessments and migration plans. The tool supports the transformation of various legacy systems into modern applications with minimal disruption, offering accelerated delivery and production-ready output. GAPVelocity AI leverages cutting-edge technologies like cloud infrastructure, AI & machine learning, DevOps, backend and frontend technologies, and database systems to ensure successful modernization. The tool has been successfully used in various industries, including agriculture, healthcare, and manufacturing, with proven results and satisfied customers.

Kin + Carta

Kin + Carta is a global digital transformation consultancy that helps organizations embrace digital change through data, cloud, and experience design. The company's services include data and AI, cloud and platforms, experience and product design, managed services, and strategy and innovation. Kin + Carta has a team of over 2,000 experts who work with clients in a variety of industries, including automotive, financial services, healthcare, and retail.

Neudesic

Neudesic is a global professional services firm that helps organizations navigate the intersection between people, technology, and business. They offer a wide range of services including cloud infrastructure, data & artificial intelligence, application innovation, modern work solutions, business transformation & strategy, hyperautomation, security services, business applications integration, and APIs. Neudesic focuses on providing ethical, transparent, and accountable AI solutions to enhance user experience and workflow efficiency for forward-thinking businesses.

ClawOneClick

ClawOneClick is an AI tool that allows users to deploy their own AI assistant in seconds without the need for technical setup. It offers a one-click deployment of an always-on AI chatbot powered by the latest AI models. Users can choose from various AI models and messaging channels to customize their AI assistant. ClawOneClick handles all the cloud infrastructure provisioning and management, ensuring secure connections and end-to-end encryption. The tool is designed to adapt to various tasks and can assist with email summarization, quick replies, translation, proofreading, customer queries, report condensation, meeting reminders, voice memo transcription, deadline tracking, schedule organization, meeting action item capture, time zone coordination, task automation, expense logging, priority planning, content generation, idea brainstorming, fast topic research, book and article summarization, concept learning, creative suggestions, code explanation, document analysis, professional document drafting, project goal definition, team updates preparation, data trend interpretation, job posting writing, product and price comparison, meal plan suggestion, and more.

Afiniti

Afiniti is a leading CX AI company that has been pioneering customer experience artificial intelligence since 2006. They deliver measurable business outcomes for some of the world's largest enterprises by leveraging AI, data, and cloud infrastructure to improve customer engagement productivity. Afiniti's eXperienceAI suite and Afiniti Inside services are designed to personalize customer experiences, drive better outcomes, and optimize interactions. Their mission is to remove skills or rules-based systems from the customer experience ecosystem, leading to predictive systems and increased customer value.

LambdaTest

LambdaTest is a next-generation cloud platform for mobile app and cross-browser testing that offers a wide range of testing services. It allows users to perform manual live-interactive cross-browser testing, run Selenium, Cypress, and Playwright scripts on cloud-based infrastructure, and execute AI-powered automation testing. The platform also provides accessibility testing, a real device cloud, a visual regression cloud, and AI-powered test analytics. LambdaTest is trusted by over 2 million users globally and offers a unified digital experience testing cloud to accelerate go-to-market strategies.

Looker

Looker is a business intelligence platform that offers embedded analytics and AI-powered BI solutions. Leveraging Google's AI-led innovation, Looker delivers intelligent BI by combining foundational AI, cloud-first infrastructure, industry-leading APIs, and a flexible semantic layer. It allows users to build custom data experiences, transform data into integrated experiences, and create deeply integrated dashboards. Looker also provides a universal semantic modeling layer for unified, trusted data sources and offers self-service analytics capabilities through Looker and Looker Studio. Additionally, Looker features Gemini, an AI-powered analytics assistant that accelerates analytical workflows and offers a collaborative and conversational user experience.

Palo Alto Networks

Palo Alto Networks is a cybersecurity company offering advanced security solutions powered by Precision AI to protect modern enterprises from cyber threats. The company provides network security, cloud security, and AI-driven security operations to defend against AI-generated threats in real time. Palo Alto Networks aims to simplify security and achieve better security outcomes through platformization, intelligence-driven expertise, and proactive monitoring of sophisticated threats.

Crusoe Cloud

Crusoe is a cloud computing platform that offers scalable, climate-aligned digital infrastructure optimized for high-performance computing and artificial intelligence. It provides cost-effective solutions by utilizing wasted, stranded, or clean energy sources to power computing resources. The platform supports AI workloads, computational biology, graphics rendering, and more, while reducing greenhouse gas emissions and maximizing resource efficiency.

Granica

Granica is an AI tool designed for data compression and optimization, enabling users to transform petabytes of data into terabytes with self-optimizing, lossless compression. It offers state-of-the-art technology that works seamlessly across various platforms like Iceberg, Delta, Trino, Spark, Snowflake, and Databricks. Granica helps organizations reduce storage costs, improve query performance, and enhance data accessibility for AI and analytics workloads.

Darktrace

Darktrace is a cybersecurity platform that leverages AI technology to provide proactive protection against cyber threats. It offers cloud-native AI security solutions for networks, emails, cloud environments, identity protection, and endpoint security. Darktrace's AI Analyst investigates alerts at the speed and scale of AI, mimicking human analyst behavior. The platform also includes services such as 24/7 expert support and incident management. Darktrace's AI is built on a unique approach where it learns from the organization's data to detect and respond to threats effectively. The platform caters to organizations of all sizes and industries, offering real-time detection and autonomous response to known and novel threats.

1 - Open Source Tools

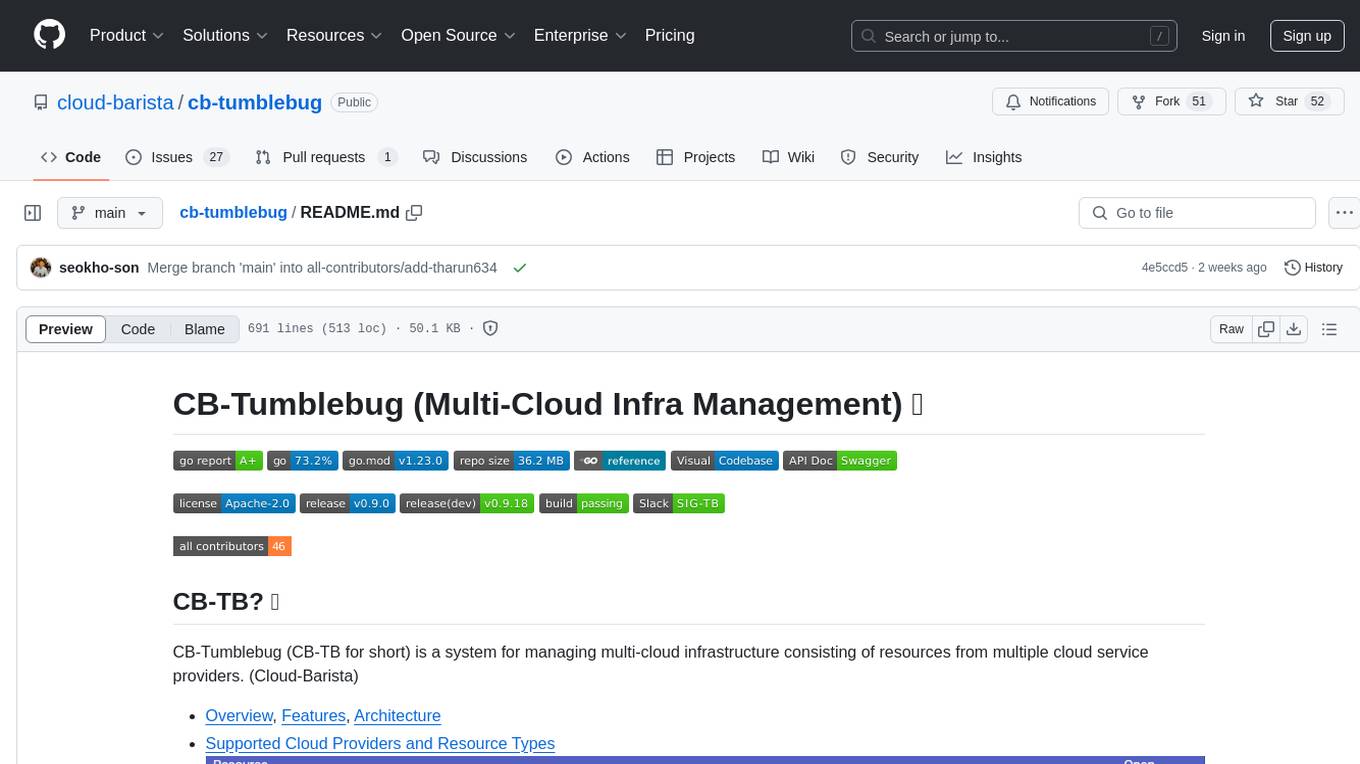

cb-tumblebug

CB-Tumblebug (CB-TB) is a system for managing multi-cloud infrastructure consisting of resources from multiple cloud service providers. Its documentation covers an overview, features, and architecture. The tool supports various cloud providers and resource types, with ongoing development and localization efforts. Users can deploy multi-cloud infrastructure with GPUs, run multiple LLMs in parallel, and use LLM-related scripts. Building from source requires Linux, Docker, Docker Compose, and Golang. CB-TB can be run with Docker Compose or from the Makefile, and the project documents its prerequisites, contribution process, and contributors. The tool is released under an open-source license.

20 - OpenAI GPTs

Ryan Pollock GPT

🤖 AMAIA: ask Ryan's AI anything you'd ask the real Ryan 🧠 Deep Tech VP Marketing & Growth 🌥 Cloud Infrastructure, Databases, Machine Learning, APIs 🤖 Google Cloud, DigitalOcean, Oracle, Vultr, Android 🌁 More at linkedin.com/in/ryanpollock

🌟Technical diagrams pro🌟

Create UML for flowcharts and for Class, Sequence, Use Case, and Activity diagrams using PlantUML. System design and cloud infrastructure diagrams for AWS, Azure, and GCP. No login required.

Securia

AI-powered audit ally. Enhance cybersecurity effortlessly with intelligent, automated security analysis. Safe, swift, and smart.

DevOps Mentor

A formal, expert guide for DevOps pros advancing their skills. Your DevOps GYM.

Cloud Computing

Expert in cloud computing, offering insights on services, security, and infrastructure.

Nimbus Navigator

Cloud Engineer Expert, guiding in cloud tech, projects, career, and industry trends.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.

Infrastructure as Code Advisor

Develops, advises on, and optimizes infrastructure-as-code practices across the organization.

Data Engineer Consultant

Guides in data engineering tasks with a focus on practical solutions.

Azure Mentor

Expert in Azure's latest services, including Application Insights, API Management, and more.