dataengineering-roadmap

Yet another repository with basic concepts, technical challenges, and resources on data engineering in Spanish 🧙✨

Stars: 574

A repository providing basic concepts, technical challenges, and resources on data engineering in Spanish. It is a curated list of free, Spanish-language materials found on the internet to facilitate the study of data engineering enthusiasts. The repository covers programming fundamentals, programming languages like Python, version control with Git, database fundamentals, SQL, design concepts, Big Data, analytics, cloud computing, data processing, and job search tips in the IT field.

README:

Would you like to contribute to the repository? See the contribution guide.

Note: this learning path reflects my personal judgment and is meant to make studying easier for anyone interested in data engineering, using free, open, Spanish-language material that I found on the internet. It is neither a definitive guide nor a course; it is a list of resources that the community can improve over time through contributions.

📚 Data engineering books (in English)

📖 Design patterns for data engineering (in English)

We start by understanding the fundamental concepts of programming and logic. You can work through this section while learning the programming language of your choice.

- Course: Programación Básica by Platzi

- Videos: Introducción a los Algoritmos y la Programación by TodoCode

- Videos: Ejercicios de Pseudocódigo by TodoCode

- Videos: Línea de Comandos by Datademia

- Videos: Bash scripting by Fazt

- Reading: Introducción a la línea de comandos de Linux y el shell by Microsoft Learn

I recommend starting with Python because of its gentle learning curve and its prevalence in the industry today. That said, data processing can also be done with R, Java, Scala, Julia, and other languages.

- Videos: Python desde 0 by PildorasInformáticas

- Course: Computación científica con Python by FreeCodeCamp

- Course: Álgebra universitaria con Python by FreeCodeCamp

- Course: Harvard CS50’s Introducción a la programación con Python (subtitled) by FreeCodeCamp

- Course: Python intermedio (subtitled) by FreeCodeCamp

- Course: Pandas by Kaggle

- Videos: Expresiones Regulares by Ada Lovecode

- Video: Principios de la Programación Orientada a Objetos by BettaTech

- Videos: Programación Orientada a Objetos explicada con Minecraft by Absolute

- Course: Julia para gente con prisa by Miguel Raz

Learning version control is valuable beyond teamwork: it lets us track, understand, and manage the changes made to a project, which keeps development efficient and collaborative.

- Video: ¿Qué es el control de versiones y porque es tan importante para programar? by Datademia

- Course: Git y Github by MoureDev

- Videos: Git y Github by TodoCode

- Reading: Usa Git correctamente by Atlassian

- Game: Learn Git Branching

- Notebooks: Google Colab, Jupyter, or Deepnote

- IDE: VSCode or Spyder

Now it is time to learn about databases. Which database management system to use is up to you, although I personally recommend PostgreSQL for structured data and MongoDB for unstructured data. There are many other options, such as MySQL and SQLite.

- Videos: Introducción a las bases de datos by TodoCode

- Reading: Diferencias entre DDL, DML y DCL by TodoPostgreSQL

- Video: Procedimientos almacenados #1 by Héctor de León

- Video: Procedimientos almacenados #2 by Héctor de León

- Video: MongoDB by Fazt

- Videos: MongoDB by MitoCode

You will also learn SQL, a query language for managing and manipulating relational databases.
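To give a feel for what managing and querying a relational database with SQL looks like, here is a minimal sketch that uses Python's built-in `sqlite3` module with an in-memory database. The `orders` table, its columns, the sample rows, and the `top_customers` helper are hypothetical and serve only as illustration. SQLite was chosen so that nothing has to be installed, and the same statements would need only minor changes on PostgreSQL or MySQL.

```python
import sqlite3


def top_customers(rows, limit=2):
    """Load (customer, amount) rows into a table and rank customers by total spend."""
    conn = sqlite3.connect(":memory:")  # throwaway database, nothing is written to disk
    try:
        # DDL: define the structure of the (hypothetical) table.
        conn.execute(
            "CREATE TABLE orders (customer TEXT NOT NULL, amount REAL NOT NULL)"
        )
        # DML: insert the data using placeholders instead of string formatting.
        conn.executemany(
            "INSERT INTO orders (customer, amount) VALUES (?, ?)", rows
        )
        # Query: aggregate per customer and keep the biggest totals.
        cursor = conn.execute(
            "SELECT customer, SUM(amount) AS total "
            "FROM orders GROUP BY customer "
            "ORDER BY total DESC LIMIT ?",
            (limit,),
        )
        return cursor.fetchall()
    finally:
        conn.close()


sample = [("ana", 10.0), ("luis", 5.0), ("ana", 7.5), ("sol", 20.0)]
print(top_customers(sample))
```

The `?` placeholders let the driver handle quoting, which is the usual way to avoid SQL injection when values come from outside the program.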

Next come more advanced concepts that will help us design databases, data lakes, data warehouses, schemas, and so on.

- Video: ¿Cuándo utilizar SQL y cuando NoSQL? by Héctor de León

- Video: ¿Cómo se modelan las bases de datos NoSQL? by HolaMundo

- Reading: Bases de datos orientadas a grafos by Oracle

- Video: Bases de Datos de Grafos, Fundamentos y Práctica by Datahack

The next step is to understand some Big Data concepts. It is also worth acquiring basic knowledge of artificial intelligence, business intelligence, and data analysis, without going too deep.

- Video: Big Data para dummies by Datahack

- Reading: Big Data: ¿Qué es y cómo ayuda a mi negocio? by Salesforce

- Certification: Diseña y programa soluciones IoT con el uso de Big Data by Universidad del Rosario

- Certification: Big Data by University of California San Diego

- Video: Big data y privacidad by Databits

- Videos: Gobierno de Datos by Smart Data

- Video: Cómo Iniciar con Gobierno de Datos sin Romper el Presupuesto by Software Gurú

- Certification: Fundamentos profesionales del análisis de datos, by Microsoft and LinkedIn

- Certification: Certificado profesional de Google Data Analytics

- Certification: Certificado profesional de Analista de datos de IBM

- Course: Análisis de datos con Python by FreeCodeCamp

- Video: Storytelling: ¿Cómo convertir tu contenido en una historia? by Coderhouse

- Course: Machine Learning con Python by FreeCodeCamp

- Channel: AprendeIA con Ligdi Gonzalez

- Videos: Aprende Inteligencia Artificial by Dot CSV

- Video: Cómo usar ChatGPT en ingeniería de datos by Datalytics

- Course: Inteligencia Artificial (subtitled) by Columbia University

- Videos: Google Business Intelligence Certificate (subtitled) by Google Career

- Videos: ¡Business Intelligence para Todos! by PEALCALA

This section is the heart of data engineering: we will see what data pipelines are, what an ETL is, what orchestrators do, and more. I also include a list of key concepts that I will update with their respective resources in the future. If you want to learn them in detail, you can look them up in the books uploaded to the repository.

- Video: Ingeniería de datos: viaje al corazón de los proyectos de datos by RockingData

- Video: ¿Cómo convertirte en un verdadero Ingeniero de Datos? by Databits

- Videos: Preprocesamiento de Datos en Python by Rocio Chavez

- Videos: Preprocesamiento de Datos en R by Rocio Chavez

- Video: Pruebas A/B: Datos, no opiniones by SantanDev

- Incremental loads

- Message queues

- Cron expressions

- Relational model

- Dimensional model

- Facts and dimensions

- Data lake, data mart, data warehouse, and data cube

- Column-oriented and row-oriented storage design

- Star and snowflake schemas

- Schema-on-read and schema-on-write

- Videos: Airflow by Data Engineering LATAM

- Video: Automatizando ideas con Apache Airflow - Yesi Díaz by Software Gurú

- Videos: Pentaho Spoon by LEARNING-BI

- Videos: Luigi (subtitled) by Seattle Data Guy

- Reading: Azure Data Factory by Microsoft

- Batch data processing

- Real-time or streaming processing

- Lambda and kappa architectures

- Reading: Diferencias clave entre el OLAP y el OLTP by AWS

- Video: Construye ETL en batch y streaming con Spark by Databits

- Reading: Comparación de contenedores y máquinas virtuales by Atlassian

- Videos: Docker by Pelado Nerd

- Videos: Kubernetes by Pelado Nerd

- Reading: ¿Qué es un sistema distribuido? by Atlassian

- Videos: Spark by Data Engineering LATAM

- Video: Infraestructura como código para ingeniería de datos by Spark México

- Videos: Apache Spark by NullSafe Architect

- Videos: Apache Kafka by NullSafe Architect

- Video: Great Expectations: Validar Data Pipelines como un Profesional by CodingEric at PyConAr 2020

- Video: ETL Testing y su Automatización con Python by Patricio Miner at #QSConf 2023
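Two of the key concepts in this section, ETL and incremental loads, can be sketched in a few lines of plain Python. What follows is only an illustrative batch pipeline, not the approach of any resource listed here. The `sales` table, the field names, the in-memory source list, and every function name are hypothetical. A timestamp "watermark" (the newest `updated_at` already loaded) decides which rows are new, and SQLite stands in for a real warehouse.

```python
import sqlite3


def extract(source_rows, watermark):
    """Extract: keep only rows changed after the last successful load."""
    return [row for row in source_rows if row["updated_at"] > watermark]


def transform(rows):
    """Transform: clean up names and store amounts as integer cents."""
    return [
        (row["id"], row["name"].strip().title(),
         round(row["amount"] * 100), row["updated_at"])
        for row in rows
    ]


def load(conn, rows):
    """Load: insert new rows, replacing any existing row with the same id."""
    conn.executemany(
        "INSERT OR REPLACE INTO sales (id, name, amount_cents, updated_at) "
        "VALUES (?, ?, ?, ?)",
        rows,
    )
    conn.commit()


def run_incremental(conn, source_rows):
    """Run one batch and return how many rows were loaded."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales ("
        "id INTEGER PRIMARY KEY, name TEXT, amount_cents INTEGER, updated_at TEXT)"
    )
    # The watermark is the newest timestamp already in the target table.
    # ISO-8601 date strings sort correctly as plain text.
    watermark = conn.execute(
        "SELECT COALESCE(MAX(updated_at), '') FROM sales"
    ).fetchone()[0]
    batch = transform(extract(source_rows, watermark))
    load(conn, batch)
    return len(batch)


conn = sqlite3.connect(":memory:")
source = [
    {"id": 1, "name": " ana ", "amount": 10.5, "updated_at": "2024-01-01"},
    {"id": 2, "name": "luis", "amount": 3.0, "updated_at": "2024-01-02"},
]
first_run = run_incremental(conn, source)   # full load: the target is empty
source.append({"id": 3, "name": "sol", "amount": 7.25, "updated_at": "2024-01-03"})
second_run = run_incremental(conn, source)  # only the new row is processed
print(first_run, second_run)
```

One simplification to keep in mind: the strict `>` comparison would skip a late-arriving row whose timestamp equals the current watermark. In practice an orchestrator such as Airflow, triggered by a cron expression, would schedule each run.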

Knowledge of cloud computing is useful. At this point, I would recommend considering preparing for official certifications. Although these exams usually have a fee, you can find free, official preparation resources from the best-known providers in the industry.

- Video: Fundamentos de Cloud Computing by Datahack

- Reading: Descubre las ventajas y desventajas de la nube by Platzi

- Reading: Arquitectura para Big Data en Cloud by Platzi

- Data engineering on Google Cloud

- Data engineering on Microsoft Azure

- Data engineering on AWS (coming soon)

Finally, here are some readings and videos offering advice and experiences related to job hunting in the IT field. Technical challenges and other related resources will be added later.

- Video: ¿Cómo obtener tu primer empleo en ingeniería de datos? by Spark México

- Videos: Consejos Laborales para el mundo IT by TodoCode

- Videos: Esenciales para comenzar en el mundo de los sistemas by Maxi Programa

- Thread: Consejos para completar el perfil de LinkedIn by @natayadev

- Thread: Consejos para conseguir un trabajo remoto en IT by @natayadev

- Thread: Cómo crear un CV ordenado y legible by @iamdoomling

- Thread: Te dejo estos tips para sobrevivir entrevistas con recursos humanos by @iamdoomling

- Video: Programar en empresas, startups o freelance ¿Qué es mejor? by @iamdoomling

- Video: Terminé el bootcamp de programación ¿Y ahora qué? by @iamdoomling

- Video: Trabajar como contractor desde Argentina by @iamdoomling

- Podcast: DevRock by Jonatan Ariste

- (2023) Repository: Desafíos de código by the MoureDev community

- (2024) Repository: Roadmap retos de programación by the MoureDev community

In progress 😊

If you found this repository useful, give it a star ⭐

basdonax-ai-rag

Basdonax AI RAG v1.0 is a repository that contains all the necessary resources to create your own AI-powered secretary using the RAG from Basdonax AI. It leverages open-source models from Meta and Microsoft, namely 'Llama3-7b' and 'Phi3-4b', allowing users to upload documents and make queries. This tool aims to simplify life for individuals by harnessing the power of AI. The installation process involves choosing between different data models based on GPU capabilities, setting up Docker, pulling the desired model, and customizing the assistant prompt file. Once installed, users can access the RAG through a local link and enjoy its functionalities.

AireLibre

AireLibre is a community response to the need for freely, collaboratively, and decentralized air quality information. It includes projects like Red Descentralizada de Aire Libre (ReDAL), Linka, Linka Firmware, LinkaBot, AQmap, and Android/iOS apps. Users can join the network with a sensor communicating with Linka. Materials and tools are needed to build a sensor. The initiative is decentralized and open for community collaboration. Users can extend or add projects to AireLibre. The license allows for creating personal networks. AireLibre is not for professional/industrial/scientific/military use, and the sensors are not calibrated in Switzerland.

AI.Hub

AI.Hub is a website displaying cards with artificial intelligences (AIs) for various applications, developed using HTML, CSS, and JavaScript. The site aims to democratize access to disruptive AIs by categorizing and organizing them dynamically. It features a responsive layout, search bar for filtering AIs, interactive cards, and column expansion on mobile devices. The project promotes innovation and helps users explore the potential of new technologies.

aios-core

Synkra AIOS is a Framework for Universal AI Agents powered by AI. It is founded on Agent-Driven Agile Development, offering revolutionary capabilities for AI-driven development and more. Transform any domain with specialized AI expertise: software development, entertainment, creative writing, business strategy, personal well-being, and more. The framework follows a clear hierarchy of priorities: CLI First, Observability Second, UI Third. The CLI is where intelligence resides, all execution, decisions, and automation happen there. Observability is for observing and monitoring what happens in the CLI in real-time. The UI is for specific management and visualizations when necessary. The two key innovations of Synkra AIOS are Planejamento Agêntico and Desenvolvimento Contextualizado por Engenharia, which eliminate inconsistency in planning and loss of context in AI-assisted development.

switch_AIO_LS_pack

Switch_AIO_LS_pack is a comprehensive package for setting up the SD card of the Nintendo Switch. It includes custom firmware, homebrew applications, payloads, and essential modules to enhance the console experience. The pack also contains the latest firmware and has been prepared using the Ultimate-Switch-Hack-Script project in collaboration with the user nightwolf from Logic-sunrise. It is compatible with all models of the Switch.

angular-node-java-ai

This repository contains a project that integrates Angular frontend, Node.js backend, Java services, and AI capabilities. The project aims to demonstrate a full-stack application with modern technologies and AI features. It showcases how to build a scalable and efficient system using Angular for the frontend, Node.js for the backend, Java for services, and AI for advanced functionalities.

Airchains

Airchains is a tool for setting up a local EVM network for testing and development purposes. It provides step-by-step instructions for installing and configuring the necessary components. The tool helps users create their own local EVM network, manage keys, deploy contracts, and interact with the network using RPC. It also guides users on setting up a station for tracking and managing transactions. Airchains is designed to facilitate testing and development activities related to blockchain applications built on the EVM platform.

tafrigh

Tafrigh is a tool for transcribing visual and audio content into text using advanced artificial intelligence techniques provided by OpenAI and wit.ai. It allows direct downloading of content from platforms like YouTube, Facebook, Twitter, and SoundCloud, and provides various output formats such as txt, srt, vtt, csv, tsv, and json. Users can install Tafrigh via pip or by cloning the GitHub repository and using Poetry. The tool supports features like skipping transcription if output exists, specifying playlist items, setting download retries, using different Whisper models, and utilizing wit.ai for transcription. Tafrigh can be used via command line or programmatically, and Docker images are available for easy usage.

chatgpt-tarot-divination

ChatGPT Tarot Divination is a tool that offers AI fortune-telling and divination functionalities. Users can download the executable installation package, deploy it using Docker, and run it locally. The tool supports various divination methods such as Tarot cards, birth charts, name analysis, dream interpretation, naming suggestions, and more. It allows customization through setting API base URL and key, and provides a user-friendly interface for easy usage.

ezwork-ai-doc-translation

EZ-Work AI Document Translation is an AI document translation assistant accessible to everyone. It enables quick and cost-effective utilization of major language model APIs like OpenAI to translate documents in formats such as txt, word, csv, excel, pdf, and ppt. The tool supports AI translation for various document types, including pdf scanning, compatibility with OpenAI format endpoints via intermediary API, batch operations, multi-threading, and Docker deployment.

RTXZY-MD

RTXZY-MD is a bot tool that supports file hosting, QR code, pairing code, and RestApi features. Users must fill in the Apikey for the bot to function properly. It is not recommended to install the bot on platforms lacking ffmpeg, imagemagick, webp, or express.js support. The tool allows for 95% implementation of website api and supports free and premium ApiKeys. Users can join group bots and get support from Sociabuzz. The tool can be run on Heroku with specific buildpacks and is suitable for Windows/VPS/RDP users who need Git, NodeJS, FFmpeg, and ImageMagick installations.

rookie_text2data

A natural language to SQL plugin powered by large language models, supporting seamless database connection for zero-code SQL queries. The plugin is designed to facilitate communication and learning among users. It supports MySQL database and various large models for natural language processing. Users can quickly install the plugin, authorize a database address, import the plugin, select a model, and perform natural language SQL queries.

oba-live-tool

The oba live tool is a small tool for Douyin small shops and Kuaishou Baiying live broadcasts. It features multiple account management, intelligent message assistant, automatic product explanation, AI automatic reply, and AI intelligent assistant. The tool requires Windows 10 or above, Chrome or Edge browser, and a valid account for Douyin small shops or Kuaishou Baiying. Users can download the tool from the Releases page, connect to the control panel, set API keys for AI functions, and configure auto-reply prompts. The tool is licensed under the MIT license.

botgroup.chat

botgroup.chat is a multi-person AI chat application based on React and Cloudflare Pages for free one-click deployment. It supports multiple AI roles participating in conversations simultaneously, providing an interactive experience similar to group chat. The application features real-time streaming responses, customizable AI roles and personalities, group management functionality, AI role mute function, Markdown format support, mathematical formula display with KaTeX, aesthetically pleasing UI design, and responsive design for mobile devices.

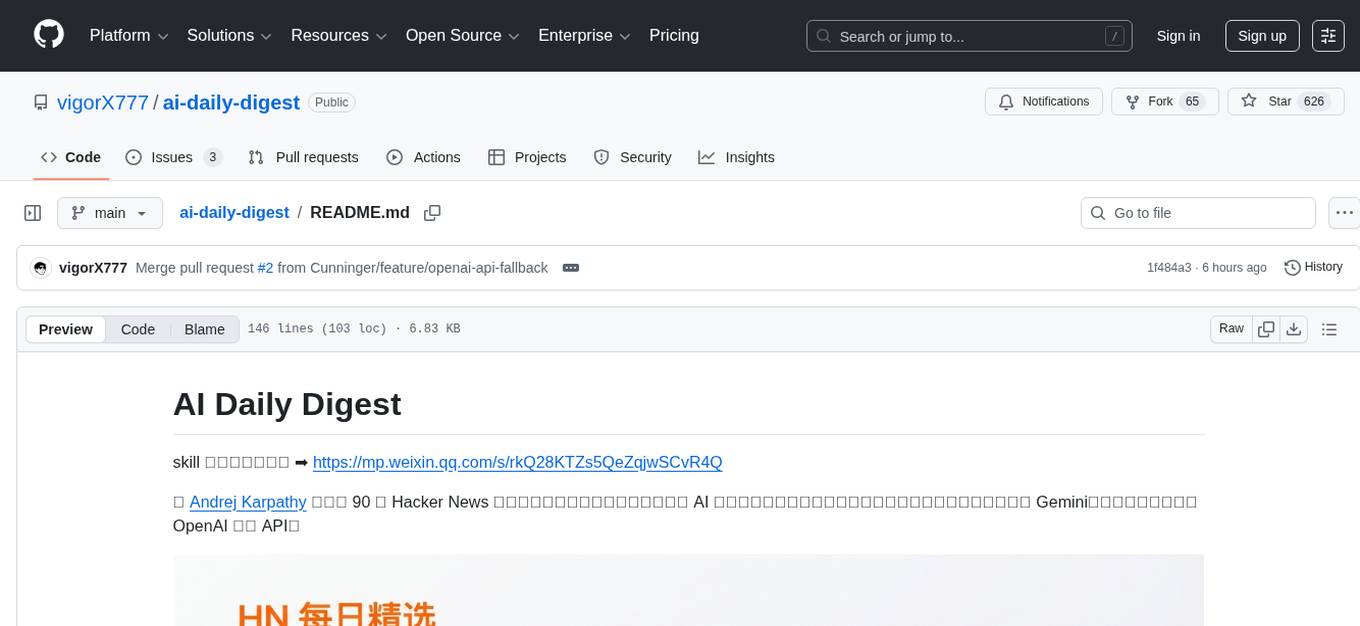

ai-daily-digest

AI Daily Digest is a tool that fetches the latest articles from the top 90 Hacker News technology blogs recommended by Andrej Karpathy. It uses AI multi-dimensional scoring to curate a structured daily digest. The tool supports Gemini by default and can automatically degrade to OpenAI compatible API. It offers a five-step processing pipeline including RSS fetching, time filtering, AI scoring and classification, AI summarization and translation, and trend summarization. The generated daily digest includes sections like today's highlights, must-read articles, data overview, and categorized article lists. The tool is designed to be dependency-free, bilingual, with structured summaries, visual statistics, intelligent categorization, trend insights, and persistent configuration memory.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

AirGo

AirGo is a front and rear end separation, multi user, multi protocol proxy service management system, simple and easy to use. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.