Awesome-Graph-LLM

A collection of AWESOME things about Graph-Related LLMs.

Stars: 1995

Awesome-Graph-LLM is a curated collection of research papers exploring the intersection of graph-based techniques with Large Language Models (LLMs). The repository aims to bridge the gap between LLMs and graph structures prevalent in real-world applications by providing a comprehensive list of papers covering various aspects of graph reasoning, node classification, graph classification/regression, knowledge graphs, multimodal models, applications, and tools. It serves as a valuable resource for researchers and practitioners interested in leveraging LLMs for graph-related tasks.

Large Language Models (LLMs) have shown remarkable progress in natural language processing tasks. However, their integration with graph structures, which are prevalent in real-world applications, remains relatively unexplored. This repository aims to bridge that gap by providing a curated list of research papers that explore the intersection of graph-based techniques with LLMs.
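A text-only LLM can only use a graph after the graph has been serialized into text. The sketch below shows one such encoding: an adjacency-style description followed by a question. It follows the spirit of graph-encoding studies such as Talk like a Graph. The helper `encode_graph_as_text` and its wording are hypothetical and are not taken from any listed paper.

```python
from collections import defaultdict

def encode_graph_as_text(edges, question):
    """Serialize an undirected edge list into a plain-English prompt.

    Hypothetical helper: one possible adjacency-style encoding among many.
    """
    neighbors = defaultdict(set)
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)
    nodes = sorted(neighbors)
    lines = [f"In an undirected graph, the nodes are {', '.join(map(str, nodes))}."]
    for node in nodes:
        adj = ", ".join(map(str, sorted(neighbors[node])))
        lines.append(f"Node {node} is connected to nodes {adj}.")
    lines.append(f"Q: {question}")
    lines.append("A:")
    return "\n".join(lines)

prompt = encode_graph_as_text([(0, 1), (1, 2), (2, 0), (2, 3)], "What is the degree of node 2?")
print(prompt)
```

Other encodings are also possible, such as bare edge lists or adjacency matrices. Several of the listed papers study how this choice affects LLM accuracy.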

- (NAACL'21) Knowledge Graph Based Synthetic Corpus Generation for Knowledge-Enhanced Language Model Pre-training [paper][code]

- (NeurIPS'23) Can Language Models Solve Graph Problems in Natural Language? [paper][code]

- (IEEE Intelligent Systems 2023) Integrating Graphs with Large Language Models: Methods and Prospects [paper]

- (ICLR'24) Talk like a Graph: Encoding Graphs for Large Language Models [paper]

- (NeurIPS'24) TEG-DB: A Comprehensive Dataset and Benchmark of Textual-Edge Graphs [pdf][code][datasets]

- (NAACL'24) Can Knowledge Graphs Reduce Hallucinations in LLMs? : A Survey [paper]

- (NeurIPS'24 D&B) Can Large Language Models Analyze Graphs like Professionals? A Benchmark, Datasets and Models [paper][code]

- (NeurIPS'24 D&B) GLBench: A Comprehensive Benchmark for Graph with Large Language Models [paper][code]

- (KDD'24) A Survey of Large Language Models for Graphs [paper][code]

- (IJCAI'24) A Survey of Graph Meets Large Language Model: Progress and Future Directions [paper][code]

- (TKDE'24) Large Language Models on Graphs: A Comprehensive Survey [paper][code]

- (arXiv 2023.05) GPT4Graph: Can Large Language Models Understand Graph Structured Data? An Empirical Evaluation and Benchmarking [paper]

- (arXiv 2023.08) Graph Meets LLMs: Towards Large Graph Models [paper]

- (arXiv 2023.10) Towards Graph Foundation Models: A Survey and Beyond [paper]

- (arXiv 2024.02) Towards Versatile Graph Learning Approach: from the Perspective of Large Language Models [paper]

- (arXiv 2024.04) Graph Machine Learning in the Era of Large Language Models (LLMs) [paper]

- (ICLR'25) How Do Large Language Models Understand Graph Patterns? A Benchmark for Graph Pattern Comprehension [paper]

- (arXiv 2024.10) GRS-QA - Graph Reasoning-Structured Question Answering Dataset [paper]

- (arXiv 2024.12) Large Language Models Meet Graph Neural Networks: A Perspective of Graph Mining [paper]

- (arXiv 2025.01) Graph2text or Graph2token: A Perspective of Large Language Models for Graph Learning [paper]

- (arXiv 2025.02) A Comprehensive Analysis on LLM-based Node Classification Algorithms [paper][code][project page]

- (EMNLP'23) StructGPT: A General Framework for Large Language Model to Reason over Structured Data [paper][code]

- (ACL'24) Graph Chain-of-Thought: Augmenting Large Language Models by Reasoning on Graphs [paper][code]

- (AAAI'24) Graph of Thoughts: Solving Elaborate Problems with Large Language Models [paper][code]

- (arXiv 2023.05) PiVe: Prompting with Iterative Verification Improving Graph-based Generative Capability of LLMs [paper][code]

- (arXiv 2023.08) Boosting Logical Reasoning in Large Language Models through a New Framework: The Graph of Thought [paper]

- (arXiv 2023.10) Thought Propagation: An Analogical Approach to Complex Reasoning with Large Language Models [paper]

- (arXiv 2024.01) Topologies of Reasoning: Demystifying Chains, Trees, and Graphs of Thoughts [paper]

- (arXiv 2024.10) Can Graph Descriptive Order Affect Solving Graph Problems with LLMs? [paper]

- (ICLR'24) One for All: Towards Training One Graph Model for All Classification Tasks [paper][code]

- (ICML'24) LLaGA: Large Language and Graph Assistant [paper][code]

- (NeurIPS'24) LLMs as Zero-shot Graph Learners: Alignment of GNN Representations with LLM Token Embeddings [paper][code]

- (WWW'24) GraphTranslator: Aligning Graph Model to Large Language Model for Open-ended Tasks [paper][code]

- (KDD'24) HiGPT: Heterogeneous Graph Language Model [paper][code]

- (KDD'24) ZeroG: Investigating Cross-dataset Zero-shot Transferability in Graphs [paper][code]

- (SIGIR'24) GraphGPT: Graph Instruction Tuning for Large Language Models [paper][code][blog in Chinese]

- (ACL'24) InstructGraph: Boosting Large Language Models via Graph-centric Instruction Tuning and Preference Alignment [paper][code]

- (EACL'24) Natural Language is All a Graph Needs [paper][code]

- (KDD'25) UniGraph: Learning a Cross-Domain Graph Foundation Model From Natural Language [paper]

- (arXiv 2023.10) Graph Agent: Explicit Reasoning Agent for Graphs [paper]

- (arXiv 2024.02) Let Your Graph Do the Talking: Encoding Structured Data for LLMs [paper]

- (arXiv 2024.06) UniGLM: Training One Unified Language Model for Text-Attributed Graphs [paper][code]

- (arXiv 2024.07) GOFA: A Generative One-For-All Model for Joint Graph Language Modeling [paper][code]

- (arXiv 2024.08) AnyGraph: Graph Foundation Model in the Wild [paper][code]

- (arXiv 2024.10) NT-LLM: A Novel Node Tokenizer for Integrating Graph Structure into Large Language Models [paper]

- (NeurIPS'23) GraphAdapter: Tuning Vision-Language Models With Dual Knowledge Graph [paper][code]

- (NeurIPS'24) GITA: Graph to Visual and Textual Integration for Vision-Language Graph Reasoning [paper][code][project]

- (ACL'24) Graph Language Models [paper][code]

- (arXiv 2023.10) Multimodal Graph Learning for Generative Tasks [paper][code]

- (KDD'24) GraphWiz: An Instruction-Following Language Model for Graph Problems [paper][code][project]

- (arXiv 2023.04) Graph-ToolFormer: To Empower LLMs with Graph Reasoning Ability via Prompt Augmented by ChatGPT [paper][code]

- (arXiv 2023.10) GraphText: Graph Reasoning in Text Space [paper]

- (arXiv 2023.10) GraphLLM: Boosting Graph Reasoning Ability of Large Language Model [paper][code]

- (arXiv 2024.10) GUNDAM: Aligning Large Language Models with Graph Understanding [paper]

- (arXiv 2024.10) Are Large-Language Models Graph Algorithmic Reasoners? [paper][code]

- (arXiv 2024.10) GCoder: Improving Large Language Model for Generalized Graph Problem Solving [paper] [code]

- (arXiv 2024.10) GraphTeam: Facilitating Large Language Model-based Graph Analysis via Multi-Agent Collaboration [paper] [code]

- (ICLR'25) GraphArena: Evaluating and Exploring Large Language Models on Graph Computation [paper] [code]

- (ICLR'24) Explanations as Features: LLM-Based Features for Text-Attributed Graphs [paper][code]

- (ICLR'24) Label-free Node Classification on Graphs with Large Language Models (LLMS) [paper]

- (WWW'24) Can GNN be Good Adapter for LLMs? [paper][code]

- (CIKM'24) Distilling Large Language Models for Text-Attributed Graph Learning [paper]

- (EMNLP'24) Let's Ask GNN: Empowering Large Language Model for Graph In-Context Learning [paper]

- (TMLR'24) Can LLMs Effectively Leverage Graph Structural Information through Prompts, and Why? [paper][code]

- (IJCAI'24) Efficient Tuning and Inference for Large Language Models on Textual Graphs [paper][code]

- (AAAI'25) Leveraging Large Language Models for Node Generation in Few-Shot Learning on Text-Attributed Graphs [paper]

- (WSDM'25) LOGIN: A Large Language Model Consulted Graph Neural Network Training Framework [paper][code]

- (arXiv 2023.07) Exploring the Potential of Large Language Models (LLMs) in Learning on Graphs [paper][code]

- (arXiv 2023.10) Disentangled Representation Learning with Large Language Models for Text-Attributed Graphs [paper]

- (arXiv 2023.11) Large Language Models as Topological Structure Enhancers for Text-Attributed Graphs [paper]

- (arXiv 2024.02) Similarity-based Neighbor Selection for Graph LLMs [paper][code]

- (arXiv 2024.02) GraphEdit: Large Language Models for Graph Structure Learning [paper][code]

- (arXiv 2024.06) GAugLLM: Improving Graph Contrastive Learning for Text-Attributed Graphs with Large Language Models [paper][code]

- (arXiv 2024.07) Enhancing Data-Limited Graph Neural Networks by Actively Distilling Knowledge from Large Language Models [paper]

- (arXiv 2024.07) All Against Some: Efficient Integration of Large Language Models for Message Passing in Graph Neural Networks [paper]

- (arXiv 2024.10) Let's Ask GNN: Empowering Large Language Model for Graph In-Context Learning [paper]

- (arXiv 2024.10) Large Language Model-based Augmentation for Imbalanced Node Classification on Text-Attributed Graphs [paper]

- (arXiv 2024.10) Enhance Graph Alignment for Large Language Models [paper]

- (arXiv 2025.01) Each Graph is a New Language: Graph Learning with LLMs [paper]

- (arXiv 2025.02) A Comprehensive Analysis on LLM-based Node Classification Algorithms [paper][code][project page]

- (AAAI'22) Enhanced Story Comprehension for Large Language Models through Dynamic Document-Based Knowledge Graphs [paper]

- (EMNLP'22) Language Models of Code are Few-Shot Commonsense Learners [paper][code]

- (SIGIR'23) Schema-aware Reference as Prompt Improves Data-Efficient Knowledge Graph Construction [paper][code]

- (TKDE'23) AutoAlign: Fully Automatic and Effective Knowledge Graph Alignment enabled by Large Language Models [paper][code]

- (ICLR'24) Think-on-Graph: Deep and Responsible Reasoning of Large Language Model on Knowledge Graph [paper][code]

- (ICLR'24) Reasoning on Graphs: Faithful and Interpretable Large Language Model Reasoning [paper][code]

- (AAAI'24) Graph Neural Prompting with Large Language Models [paper][code]

- (EMNLP'24) Extract, Define, Canonicalize: An LLM-based Framework for Knowledge Graph Construction [paper][code]

- (EMNLP'24) LLM-Based Multi-Hop Question Answering with Knowledge Graph Integration in Evolving Environments [paper]

- (ACL'24) Graph Language Models [paper][code]

- (ACL'24) Large Language Models Can Learn Temporal Reasoning [paper][code]

- (ACL'24) Call Me When Necessary: LLMs can Efficiently and Faithfully Reason over Structured Environments [paper][code]

- (ACL'24) MindMap: Knowledge Graph Prompting Sparks Graph of Thoughts in Large Language Models [paper][code]

- (NAACL'24) zrLLM: Zero-Shot Relational Learning on Temporal Knowledge Graphs with Large Language Models [paper]

- (arXiv 2023.04) CodeKGC: Code Language Model for Generative Knowledge Graph Construction [paper][code]

- (arXiv 2023.05) Knowledge Graph Completion Models are Few-shot Learners: An Empirical Study of Relation Labeling in E-commerce with LLMs [paper]

- (arXiv 2023.10) Faithful Path Language Modelling for Explainable Recommendation over Knowledge Graph [paper]

- (arXiv 2023.12) KGLens: A Parameterized Knowledge Graph Solution to Assess What an LLM Does and Doesn’t Know [paper]

- (arXiv 2024.02) Large Language Model Meets Graph Neural Network in Knowledge Distillation [paper]

- (arXiv 2024.02) Knowledge Graph Large Language Model (KG-LLM) for Link Prediction [paper]

- (arXiv 2024.04) Evaluating the Factuality of Large Language Models using Large-Scale Knowledge Graphs [paper][code]

- (arXiv 2024.05) FiDeLiS: Faithful Reasoning in Large Language Model for Knowledge Graph Question Answering [paper]

- (arXiv 2024.06) Explore then Determine: A GNN-LLM Synergy Framework for Reasoning over Knowledge Graph [paper]

- (arXiv 2024.11) Synergizing LLM Agents and Knowledge Graph for Socioeconomic Prediction in LBSN [paper]

- (arXiv 2025.01) Fast Think-on-Graph: Wider, Deeper and Faster Reasoning of Large Language Model on Knowledge Graph [paper][code]

- (NeurIPS'23) GIMLET: A Unified Graph-Text Model for Instruction-Based Molecule Zero-Shot Learning [paper][code]

- (arXiv 2023.07) Can Large Language Models Empower Molecular Property Prediction? [paper][code]

- (arXiv 2024.06) MolecularGPT: Open Large Language Model (LLM) for Few-Shot Molecular Property Prediction [paper][code]

- (arXiv 2024.06) HIGHT: Hierarchical Graph Tokenization for Graph-Language Alignment [paper][project]

- (arXiv 2024.06) MolX: Enhancing Large Language Models for Molecular Learning with A Multi-Modal Extension [paper]

- (arXiv 2024.06) LLM and GNN are Complementary: Distilling LLM for Multimodal Graph Learning [paper]

- (arXiv 2024.10) G2T-LLM: Graph-to-Tree Text Encoding for Molecule Generation with Fine-Tuned Large Language Models [paper]

- (NeurIPS'24) G-Retriever: Retrieval-Augmented Generation for Textual Graph Understanding and Question Answering [paper][code][blog]

- (NeurIPS'24) HippoRAG: Neurobiologically Inspired Long-Term Memory for Large Language Models [paper][code]

- (arXiv 2024.04) From Local to Global: A Graph RAG Approach to Query-Focused Summarization [paper]

- (arXiv 2024.05) Don't Forget to Connect! Improving RAG with Graph-based Reranking [paper]

- (arXiv 2024.06) GNN-RAG: Graph Neural Retrieval for Large Language Modeling Reasoning [paper][code]

- (arXiv 2024.10) Graph of Records: Boosting Retrieval Augmented Generation for Long-context Summarization with Graphs [paper] [code]

- (arXiv 2025.01) Retrieval-Augmented Generation with Graphs (GraphRAG) [paper][code]

- (WWW'25) G-Refer: Graph Retrieval-Augmented Large Language Model for Explainable Recommendation [paper]

- (arXiv 2025.02) GFM-RAG: Graph Foundation Model for Retrieval Augmented Generation [paper] [code]

- (NeurIPS'24) Can Graph Learning Improve Planning in LLM-based Agents? [paper][code]

- (ICML'24) Graph-enhanced Large Language Models in Asynchronous Plan Reasoning [paper][code]

- (ICLR'25) Benchmarking Agentic Workflow Generation [paper] [code]

- (ICML'24) GPTSwarm: Language Agents as Optimizable Graphs [paper] [code]

- (ICLR'25) Scaling Large-Language-Model-based Multi-Agent Collaboration [paper] [code]

- (ICLR'25) Cut the Crap: An Economical Communication Pipeline for LLM-based Multi-Agent Systems [paper] [code]

- (arXiv 2024.10) G-Designer: Architecting Multi-agent Communication Topologies via Graph Neural Networks [paper] [code]

- (arXiv 2025.02) EvoFlow: Evolving Diverse Agentic Workflows On The Fly [paper]

- (NeurIPS'24) Intruding with Words: Towards Understanding Graph Injection Attacks at the Text Level [paper]

- (KDD'25) Can Large Language Models Improve the Adversarial Robustness of Graph Neural Networks? [paper][code]

- (arXiv 2024.07) Learning on Graphs with Large Language Models (LLMs): A Deep Dive into Model Robustness [paper][code]

- (NeurIPS'24) Microstructures and Accuracy of Graph Recall by Large Language Models [paper][code]

- (WSDM'24) LLMRec: Large Language Models with Graph Augmentation for Recommendation [paper][code][blog in Chinese]

- (KDD'24) LLM4DyG: Can Large Language Models Solve Problems on Dynamic Graphs? [paper][code]

- (Complex Networks 2024) LLMs hallucinate graphs too: a structural perspective [paper]

- (AAAI'25) Bootstrapping Heterogeneous Graph Representation Learning via Large Language Models: A Generalized Approach [paper]

- (arXiv 2023.03) Ask and You Shall Receive (a Graph Drawing): Testing ChatGPT’s Potential to Apply Graph Layout Algorithms [paper]

- (arXiv 2023.05) Graph Meets LLM: A Novel Approach to Collaborative Filtering for Robust Conversational Understanding [paper]

- (arXiv 2023.05) ChatGPT Informed Graph Neural Network for Stock Movement Prediction [paper][code]

- (arXiv 2023.10) Graph Neural Architecture Search with GPT-4 [paper]

- (arXiv 2023.11) Biomedical knowledge graph-enhanced prompt generation for large language models [paper][code]

- (arXiv 2023.11) Graph-Guided Reasoning for Multi-Hop Question Answering in Large Language Models [paper]

- (arXiv 2024.02) Causal Graph Discovery with Retrieval-Augmented Generation based Large Language Models [paper]

- (arXiv 2024.02) Efficient Causal Graph Discovery Using Large Language Models [paper]

- (arXiv 2024.03) Exploring the Potential of Large Language Models in Graph Generation [paper]

- (arXiv 2024.07) LLMExplainer: Large Language Model based Bayesian Inference for Graph Explanation Generation [paper]

- (arXiv 2024.08) CodexGraph: Bridging Large Language Models and Code Repositories via Code Graph Databases [paper][code][project]

- (arXiv 2024.10) Graph Linearization Methods for Reasoning on Graphs with Large Language Models [paper]

- (arXiv 2024.10) GraphRouter: A Graph-based Router for LLM Selections [paper][code]

- (ICLR'25) RepoGraph: Enhancing AI Software Engineering with Repository-level Code Graph [paper] [code]

- GraphGPT: Extrapolating knowledge graphs from unstructured text using GPT-3

- GraphML: Graph markup language. An XML-based file format for graphs.

- GML: Graph modelling language. Read graphs in GML format.

- PyG: GNNs + LLMs: Examples for Co-training LLMs and GNNs
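GraphML and GML, listed above, are both plain-text graph formats. The minimal hand-written GML writer below shows what a GML file looks like. `to_gml` is a hypothetical helper that covers only integer node ids, string labels, and edges. In practice, a library writer such as NetworkX's `write_gml` is the safer choice.

```python
def to_gml(labels, edges, directed=False):
    """Write a small graph in GML (Graph Modelling Language) text format.

    labels: list of node label strings; each node's id is its list position.
    edges: list of (source_id, target_id) pairs.
    """
    out = ["graph [", f"  directed {1 if directed else 0}"]
    for node_id, label in enumerate(labels):
        # GML strings may not contain a raw double quote; this sketch assumes
        # HTML-entity escaping, but tools differ in how they handle it.
        escaped = label.replace('"', "&quot;")
        out += ["  node [", f"    id {node_id}", f'    label "{escaped}"', "  ]"]
    for src, dst in edges:
        out += ["  edge [", f"    source {src}", f"    target {dst}", "  ]"]
    out.append("]")
    return "\n".join(out)

gml = to_gml(["A", "B"], [(0, 1)])
print(gml)
```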

👍 Contributions to this repository are welcome!

If you have come across relevant resources, feel free to open an issue or submit a pull request.

- (*conference|journal*) paper_name [[pdf](link)][[code](link)]

Alternative AI tools for Awesome-Graph-LLM

Similar Open Source Tools

Awesome-LLMs-in-Graph-tasks

This repository is a collection of papers on leveraging Large Language Models (LLMs) in Graph Tasks. It provides a comprehensive overview of how LLMs can enhance graph-related tasks by combining them with traditional Graph Neural Networks (GNNs). The integration of LLMs with GNNs allows for capturing both structural and contextual aspects of nodes in graph data, leading to more powerful graph learning. The repository includes summaries of various models that leverage LLMs to assist in graph-related tasks, along with links to papers and code repositories for further exploration.
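The LLM-plus-GNN combination described above can be sketched in two steps. First, text embeddings serve as node features. Second, one message-passing step mixes in structural context. The sketch uses hard-coded toy vectors as a stand-in for real LLM outputs. It is a generic illustration, not a model from the collected papers, and all names and data are hypothetical.

```python
# Hypothetical stand-in for an LLM text encoder: in practice these vectors would
# come from a language model applied to each node's text attribute.
node_text_embeddings = {
    "paper_a": [1.0, 0.0],
    "paper_b": [0.0, 1.0],
    "paper_c": [1.0, 1.0],
}
citations = [("paper_a", "paper_b"), ("paper_b", "paper_c")]

def mean_aggregate(features, edges):
    """One GNN-style message-passing step: average each node's own vector with
    its neighbors' vectors (edges treated as undirected, self-loop included)."""
    neighbors = {n: [n] for n in features}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    updated = {}
    for node, nbrs in neighbors.items():
        dim = len(features[node])
        updated[node] = [sum(features[m][d] for m in nbrs) / len(nbrs) for d in range(dim)]
    return updated

h = mean_aggregate(node_text_embeddings, citations)
print(h)
```

A real GNN would also apply learned weights and a nonlinearity after aggregation. They are omitted here to keep the structural mixing visible.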

Awesome-Efficient-AIGC

This repository, Awesome Efficient AIGC, collects efficient approaches for AI-generated content (AIGC) to cope with its huge demand for computing resources. It includes efficient Large Language Models (LLMs), Diffusion Models (DMs), and more. The repository is continuously improving and welcomes contributions of works like papers and repositories that are missed by the collection.

aim

Aim is an open-source, self-hosted ML experiment tracking tool designed to handle 10,000s of training runs. Aim provides a performant and beautiful UI for exploring and comparing training runs. Additionally, its SDK enables programmatic access to tracked metadata — perfect for automations and Jupyter Notebook analysis. **Aim's mission is to democratize AI dev tools 🎯**

llama.cpp

llama.cpp is a C/C++ implementation of inference for LLaMA, a large language model family from Meta, and many other LLMs. It provides a command-line interface for inference and can be used for a variety of tasks, including text generation, translation, and question answering. llama.cpp is highly optimized for performance and runs on a variety of hardware, including CPUs and GPUs.

Awesome-TimeSeries-SpatioTemporal-LM-LLM

Awesome-TimeSeries-SpatioTemporal-LM-LLM is a curated list of Large (Language) Models and Foundation Models for Temporal Data, including Time Series, Spatio-temporal, and Event Data. The repository aims to summarize recent advances in Large Models and Foundation Models for Time Series and Spatio-Temporal Data with resources such as papers, code, and data. It covers various applications like General Time Series Analysis, Transportation, Finance, Healthcare, Event Analysis, Climate, Video Data, and more. The repository also includes related resources, surveys, and papers on Large Language Models, Foundation Models, and their applications in AIOps.

VideoRefer

VideoRefer Suite is a tool designed to enhance the fine-grained spatial-temporal understanding capabilities of Video Large Language Models (Video LLMs). It consists of three primary components: Model (VideoRefer) for perceiving, reasoning, and retrieval for user-defined regions at any specified timestamps, Dataset (VideoRefer-700K) for high-quality object-level video instruction data, and Benchmark (VideoRefer-Bench) to evaluate object-level video understanding capabilities. The tool can understand any object within a video.

verl

verl is a flexible and efficient RL training library for large language models (LLMs). It offers easy extension of diverse RL algorithms, seamless integration with existing LLM infra, flexible device mapping, and integration with popular Hugging Face models. The library provides state-of-the-art throughput, efficient actor model resharding, and supports various RL algorithms like PPO, GRPO, and more. It also supports model-based and function-based rewards for tasks like math and coding, vision-language models, and multi-modal RL. verl is used for tasks like training large language models, reasoning tasks, reinforcement learning with diverse algorithms, and multi-modal RL.
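GRPO, one of the algorithms named above, replaces a learned critic with a group-relative baseline. Several responses are sampled for the same prompt, and each response's reward is normalized by the group's mean and standard deviation. The sketch below follows the outcome-reward formulation from the GRPO paper, not verl's code. The function name and the `eps` value are assumptions, and implementations differ on whether they use the population or the sample standard deviation.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-6):
    """GRPO-style advantages for G responses sampled from the same prompt:
    each reward is normalized by the group's mean and standard deviation, so no
    learned value function (critic) is needed as a baseline."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mean) / (std + eps) for r in rewards]

# e.g. four sampled answers to one math prompt, rewarded 1 if correct else 0
adv = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print(adv)
```

If every response in a group receives the same reward, all advantages are zero, so that group contributes no policy-gradient signal.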

GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.
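Multi-hop neighborhood sampling can be sketched as a bounded breadth-first expansion around a seed entity. The sampled triples could then be serialized into a prompt for QA generation. This is a generic sketch, not GraphGen's implementation. `k_hop_subgraph`, its `max_neighbors` cap, and the toy knowledge graph are hypothetical.

```python
import random
from collections import deque

def k_hop_subgraph(kg, seed, k, max_neighbors=None, rng=None):
    """Collect (head, relation, tail) triples within k hops of `seed`.

    kg maps an entity to a list of (relation, neighbor) pairs. If max_neighbors
    is set, at most that many outgoing edges are sampled per entity, which keeps
    the subgraph (and any prompt built from it) small.
    """
    rng = rng or random.Random(0)
    visited = {seed}
    frontier = deque([(seed, 0)])
    triples = []
    while frontier:
        node, depth = frontier.popleft()
        if depth == k:
            continue  # do not expand beyond k hops
        out_edges = list(kg.get(node, []))
        if max_neighbors is not None and len(out_edges) > max_neighbors:
            out_edges = rng.sample(out_edges, max_neighbors)
        for relation, nbr in out_edges:
            triples.append((node, relation, nbr))
            if nbr not in visited:
                visited.add(nbr)
                frontier.append((nbr, depth + 1))
    return visited, triples

kg = {
    "Marie Curie": [("won", "Nobel Prize in Physics"), ("born_in", "Warsaw")],
    "Warsaw": [("capital_of", "Poland")],
    "Poland": [("member_of", "European Union")],
}
nodes, triples = k_hop_subgraph(kg, "Marie Curie", k=2)
print(triples)
```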

semantic-router

The Semantic Router is an intelligent routing tool that utilizes a Mixture-of-Models (MoM) approach to direct OpenAI API requests to the most suitable models based on semantic understanding. It enhances inference accuracy by selecting models tailored to different types of tasks. The tool also automatically selects relevant tools based on the prompt to improve tool selection accuracy. Additionally, it includes features for enterprise security such as PII detection and prompt guard to protect user privacy and prevent misbehavior. The tool implements similarity caching to reduce latency. The comprehensive documentation covers setup instructions, architecture guides, and API references.
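The two mechanisms described above, semantic routing and similarity caching, both reduce to comparing embeddings. The sketch below uses toy hand-written vectors in place of a real embedding model. The route table, model names, threshold, and `SimilarityCache` class are hypothetical and do not reflect the Semantic Router's configuration or API.

```python
import math

def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical route table: a reference embedding per task category and the model it maps to.
ROUTES = {
    "math": ([1.0, 0.0, 0.0], "math-specialist-model"),
    "code": ([0.0, 1.0, 0.0], "code-specialist-model"),
    "chat": ([0.0, 0.0, 1.0], "general-chat-model"),
}

def route(query_embedding):
    """Pick the model whose category embedding is most similar to the query."""
    best = max(ROUTES.values(), key=lambda entry: cosine(query_embedding, entry[0]))
    return best[1]

class SimilarityCache:
    """Return a cached answer when a new query embedding is close enough to an old one."""

    def __init__(self, threshold=0.95):
        self.threshold = threshold
        self.entries = []  # list of (embedding, answer) pairs

    def lookup(self, emb):
        for cached_emb, answer in self.entries:
            if cosine(emb, cached_emb) >= self.threshold:
                return answer
        return None

    def store(self, emb, answer):
        self.entries.append((emb, answer))

print(route([0.9, 0.1, 0.2]))
```

A linear scan is enough to show the idea. A production cache would typically use an approximate nearest-neighbor index instead.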

feast

Feast is an open source feature store for machine learning, providing a fast path to manage infrastructure for productionizing analytic data. It allows ML platform teams to make features consistently available, avoid data leakage, and decouple ML from data infrastructure. Feast abstracts feature storage from retrieval, ensuring portability across different model training and serving scenarios.
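A standard way for a feature store to avoid data leakage is a point-in-time join. Each training event sees only the latest feature value recorded at or before the event's timestamp, never a later one. The stdlib sketch below is generic and is not Feast's API. `feature_log` and `point_in_time_value` are hypothetical.

```python
from bisect import bisect_right
from datetime import datetime

# Hypothetical feature history per entity: (timestamp, value) pairs sorted by time.
feature_log = {
    "driver_1": [(datetime(2024, 1, 1), 0.80), (datetime(2024, 1, 5), 0.60)],
}

def point_in_time_value(entity, event_time):
    """Latest feature value recorded at or before event_time (None if none exists).
    Using only past values avoids leaking future information into training rows."""
    history = feature_log.get(entity, [])
    times = [t for t, _ in history]
    idx = bisect_right(times, event_time)  # number of records with time <= event_time
    return history[idx - 1][1] if idx > 0 else None

print(point_in_time_value("driver_1", datetime(2024, 1, 3)))
```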

Awesome-Text2SQL

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

L3AGI

L3AGI is an open-source tool that enables AI Assistants to collaborate together as effectively as human teams. It provides a robust set of functionalities that empower users to design, supervise, and execute both autonomous AI Assistants and Teams of Assistants. Key features include the ability to create and manage Teams of AI Assistants, design and oversee standalone AI Assistants, equip AI Assistants with the ability to retain and recall information, connect AI Assistants to an array of data sources for efficient information retrieval and processing, and employ curated sets of tools for specific tasks. L3AGI also offers a user-friendly interface, APIs for integration with other systems, and a vibrant community for support and collaboration.

SuperAGI

SuperAGI is an open-source framework designed to build, manage, and run autonomous AI agents. It enables developers to create production-ready and scalable agents, extend agent capabilities with toolkits, and interact with agents through a graphical user interface. The framework allows users to connect to multiple Vector DBs, optimize token usage, store agent memory, utilize custom fine-tuned models, and automate tasks with predefined steps. SuperAGI also provides a marketplace for toolkits that enable agents to interact with external systems and third-party plugins.

lmdeploy

LMDeploy is a toolkit for compressing, deploying, and serving LLMs, developed by the MMRazor and MMDeploy teams. It has the following core features:

- **Efficient Inference**: LMDeploy delivers up to 1.8x higher request throughput than vLLM by introducing key features such as persistent batch (a.k.a. continuous batching), blocked KV cache, dynamic split&fuse, tensor parallelism, and high-performance CUDA kernels.
- **Effective Quantization**: LMDeploy supports weight-only and k/v quantization, and its 4-bit inference performance is 2.4x higher than FP16. The quantization quality has been confirmed via OpenCompass evaluation.
- **Effortless Distribution Server**: Leveraging the request distribution service, LMDeploy facilitates easy and efficient deployment of multi-model services across multiple machines and cards.
- **Interactive Inference Mode**: By caching the k/v of attention during multi-round dialogue, the engine remembers dialogue history, thus avoiding repetitive processing of historical sessions.
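KV-cache arithmetic helps explain why a blocked KV cache and k/v quantization matter. Every token stores one key and one value vector per layer and KV head. A blocked cache allocates fixed-size blocks on demand instead of reserving the maximum sequence length. This is generic arithmetic, not LMDeploy's code. The model shape below is an assumed Llama-2-7B-like configuration, and the block size of 64 is an assumption; LMDeploy's actual block size may differ.

```python
import math

def kv_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_elem):
    # Factor 2: one key and one value vector per layer and KV head.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem

def blocks_needed(seq_len, block_size):
    # A blocked (paged) KV cache allocates fixed-size blocks on demand, so a
    # sequence wastes at most block_size - 1 token slots in its last block.
    return math.ceil(seq_len / block_size)

# Assumed Llama-2-7B-like shape: 32 layers, 32 KV heads, head_dim 128.
fp16 = kv_bytes_per_token(32, 32, 128, 2)   # 524288 bytes = 0.5 MiB per token
int8 = kv_bytes_per_token(32, 32, 128, 1)   # 8-bit k/v quantization halves this
blocks = blocks_needed(1000, 64)            # 16 blocks for a 1,000-token sequence
print(fp16, int8, blocks)
```

At 0.5 MiB per token in FP16, a single 4,096-token sequence needs 2 GiB of KV cache. This is why allocating on demand and quantizing k/v both increase the number of concurrent requests a GPU can hold.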

For similar tasks

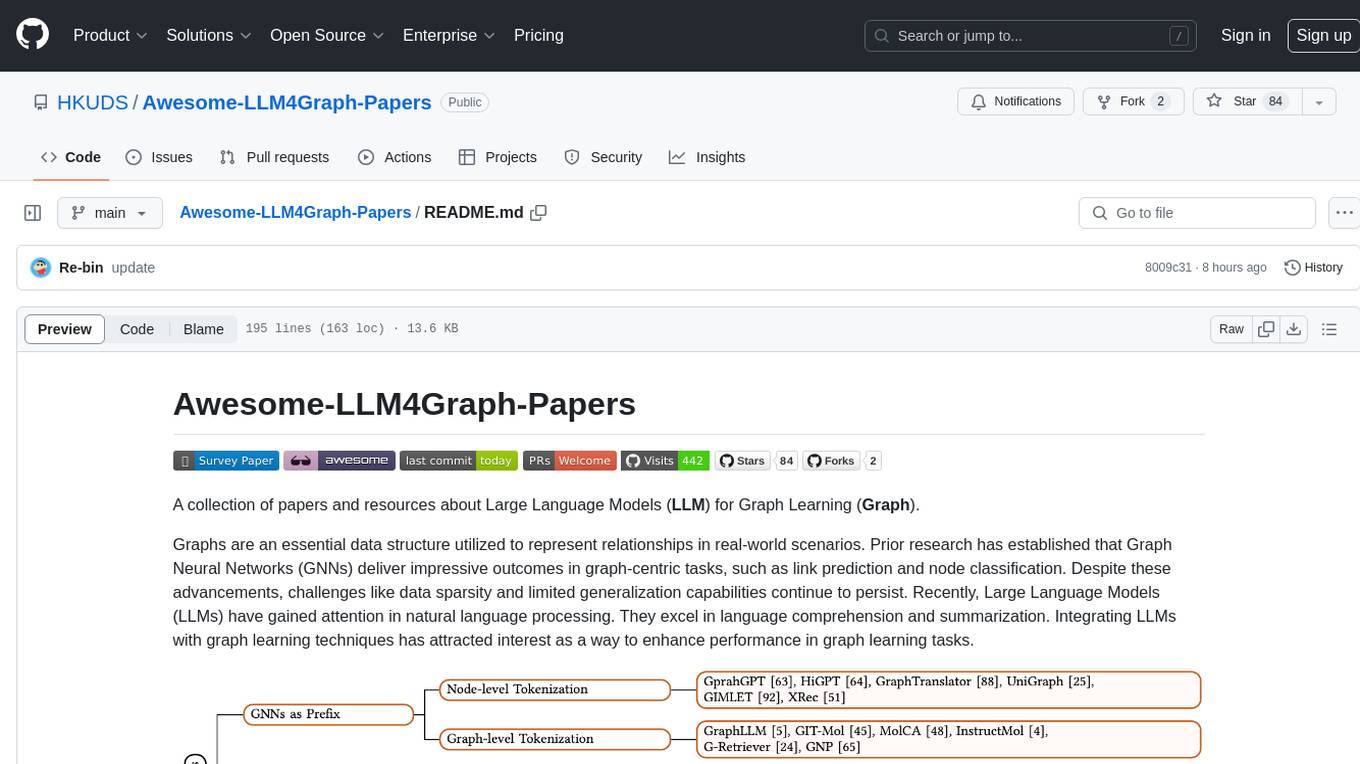

Awesome-LLM4Graph-Papers

A collection of papers and resources about Large Language Models (LLMs) for graph learning. It covers approaches that integrate LLMs with graph learning techniques to improve performance on graph learning tasks, categorized into four primary paradigms and nine secondary-level categories. It is a valuable resource for research and practice in this area.

Graph-CoT

This repository contains the source code and datasets for Graph Chain-of-Thought: Augmenting Large Language Models by Reasoning on Graphs accepted to ACL 2024. It proposes a framework called Graph Chain-of-thought (Graph-CoT) to enable Language Models to traverse graphs step-by-step for reasoning, interaction, and execution. The motivation is to alleviate hallucination issues in Language Models by augmenting them with structured knowledge sources represented as graphs.
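The reasoning, interaction, and execution loop described above can be sketched as follows. At each step the model either calls a graph function or gives a final answer, and each function result is fed back as an observation. Here a scripted `plan` function stands in for the LLM. The toy graph and the function names are hypothetical, loosely modeled on the kinds of graph functions such a framework exposes, and are not the repository's implementation.

```python
# Toy academic graph: node -> attributes and typed neighbor lists (hypothetical data).
GRAPH = {
    "p1": {"type": "paper", "title": "Graph CoT", "authors": ["a1", "a2"]},
    "a1": {"type": "author", "name": "Alice", "papers": ["p1"]},
    "a2": {"type": "author", "name": "Bob", "papers": ["p1"]},
}

# Hypothetical graph functions the model is allowed to call.
def retrieve_node(text):
    return next((nid for nid, attrs in GRAPH.items() if text in attrs.values()), None)

def node_feature(node_id, feature):
    return GRAPH[node_id][feature]

def neighbor_check(node_id, neighbor_type):
    return GRAPH[node_id][neighbor_type]

FUNCTIONS = {"RetrieveNode": retrieve_node, "NodeFeature": node_feature, "NeighborCheck": neighbor_check}

def graph_cot(plan, max_steps=10):
    """Reason -> interact -> execute loop. `plan` stands in for the LLM: given the
    observations so far, it returns ("call", fn_name, args) or ("finish", answer)."""
    observations = []
    for _ in range(max_steps):
        step = plan(observations)
        if step[0] == "finish":
            return step[1], observations
        _, fn_name, args = step
        observations.append(FUNCTIONS[fn_name](*args))  # execute the call on the graph
    return None, observations

def scripted_plan(obs):
    # Question: "Who is the first author of the paper titled 'Graph CoT'?"
    # A real system would prompt an LLM with the question and `obs` here.
    if len(obs) == 0:
        return ("call", "RetrieveNode", ("Graph CoT",))
    if len(obs) == 1:
        return ("call", "NeighborCheck", (obs[0], "authors"))
    if len(obs) == 2:
        return ("call", "NodeFeature", (obs[1][0], "name"))
    return ("finish", obs[2])

answer, trace = graph_cot(scripted_plan)
print(answer, trace)
```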

all-in-rag

All-in-RAG is a comprehensive repository for all things related to Randomized Algorithms and Graphs. It provides a wide range of resources, including implementations of various randomized algorithms, graph data structures, and visualization tools. The repository aims to serve as a one-stop solution for researchers, students, and enthusiasts interested in exploring the intersection of randomized algorithms and graph theory. Whether you are looking to study theoretical concepts, implement algorithms in practice, or visualize graph structures, All-in-RAG has got you covered.

automatic-KG-creation-with-LLM

This repository presents a (semi-)automatic pipeline for Ontology and Knowledge Graph Construction using Large Language Models (LLMs) such as Mixtral 8x22B Instruct v0.1, GPT-4o, GPT-3.5, and Gemini. It explores the generation of Knowledge Graphs by formulating competency questions, developing ontologies, constructing KGs, and evaluating the results with minimal human involvement. The project showcases the creation of a KG on deep learning methodologies from scholarly publications. It includes components for data preprocessing, prompts for LLMs, datasets, and results from the selected LLMs.
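One way to automate part of the evaluation step with minimal human involvement is to check LLM-extracted triples against the ontology's domain and range constraints. The sketch below is generic and is not the repository's pipeline. The ontology, entity classes, and `validate_triples` helper are hypothetical.

```python
# Hypothetical ontology: relation -> (expected subject class, expected object class).
ONTOLOGY = {
    "uses_method": ("Paper", "Method"),
    "evaluated_on": ("Method", "Dataset"),
}
# Hypothetical class assignments for entities, e.g. from an earlier extraction step.
ENTITY_CLASS = {"ResNet paper": "Paper", "ResNet": "Method", "ImageNet": "Dataset"}

def validate_triples(triples):
    """Split (subject, relation, object) triples into those that respect the
    ontology's domain/range constraints and those that do not."""
    valid, rejected = [], []
    for s, r, o in triples:
        expected = ONTOLOGY.get(r)  # None if the relation is not in the ontology
        if expected and (ENTITY_CLASS.get(s), ENTITY_CLASS.get(o)) == expected:
            valid.append((s, r, o))
        else:
            rejected.append((s, r, o))
    return valid, rejected

valid, rejected = validate_triples([
    ("ResNet paper", "uses_method", "ResNet"),
    ("ResNet", "evaluated_on", "ImageNet"),
    ("ImageNet", "uses_method", "ResNet"),      # wrong subject class
    ("ResNet", "invented_by", "ResNet paper"),  # relation not in the ontology
])
print(valid, rejected)
```

Rejected triples could be sent back to the LLM for correction or flagged for human review.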

Sarvadnya

Sarvadnya is a repository focused on interfacing custom data using Large Language Models (LLMs) through Proof-of-Concepts (PoCs) like Retrieval Augmented Generation (RAG) and Fine-Tuning. It aims to enable domain adaptation for LLMs to answer on user-specific corpora. The repository also covers topics such as Indic-languages models, 3D World Simulations, Knowledge Graphs Generation, Signal Processing, Drones, UAV Image Processing, and Floor Plan Segmentation. It provides insights into building chatbots of various modalities, preparing videos, and creating content for different platforms like Medium, LinkedIn, and YouTube. The tech stacks involved range from enterprise solutions like Google Doc AI and Microsoft Azure Language AI Services to open-source tools like Langchain and HuggingFace.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering modalities from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.