Best AI tools for< Etl Developer >

Infographic

5 - AI tool Sites

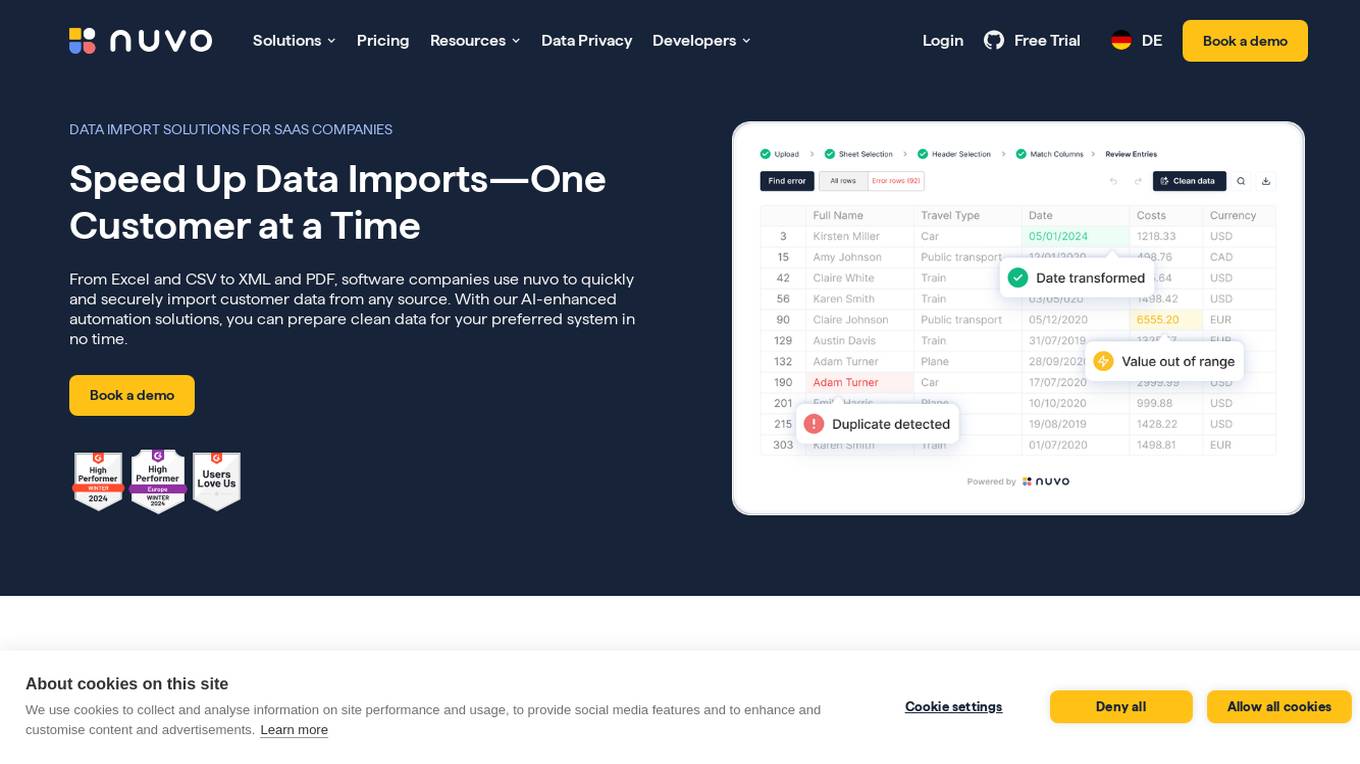

nuvo

nuvo is an AI-powered data import solution that offers fast, secure, and scalable data import solutions for software companies. It provides tools like nuvo Data Importer SDK and nuvo Data Pipeline to streamline manual and recurring ETL data imports, enabling users to manage data imports independently. With AI-enhanced automation, nuvo helps prepare clean data for preferred systems quickly and efficiently, reducing manual effort and improving data quality. The platform allows users to upload unlimited data in various formats, match imported data to system schemas, clean and validate data, and import clean data into target systems with just a click.

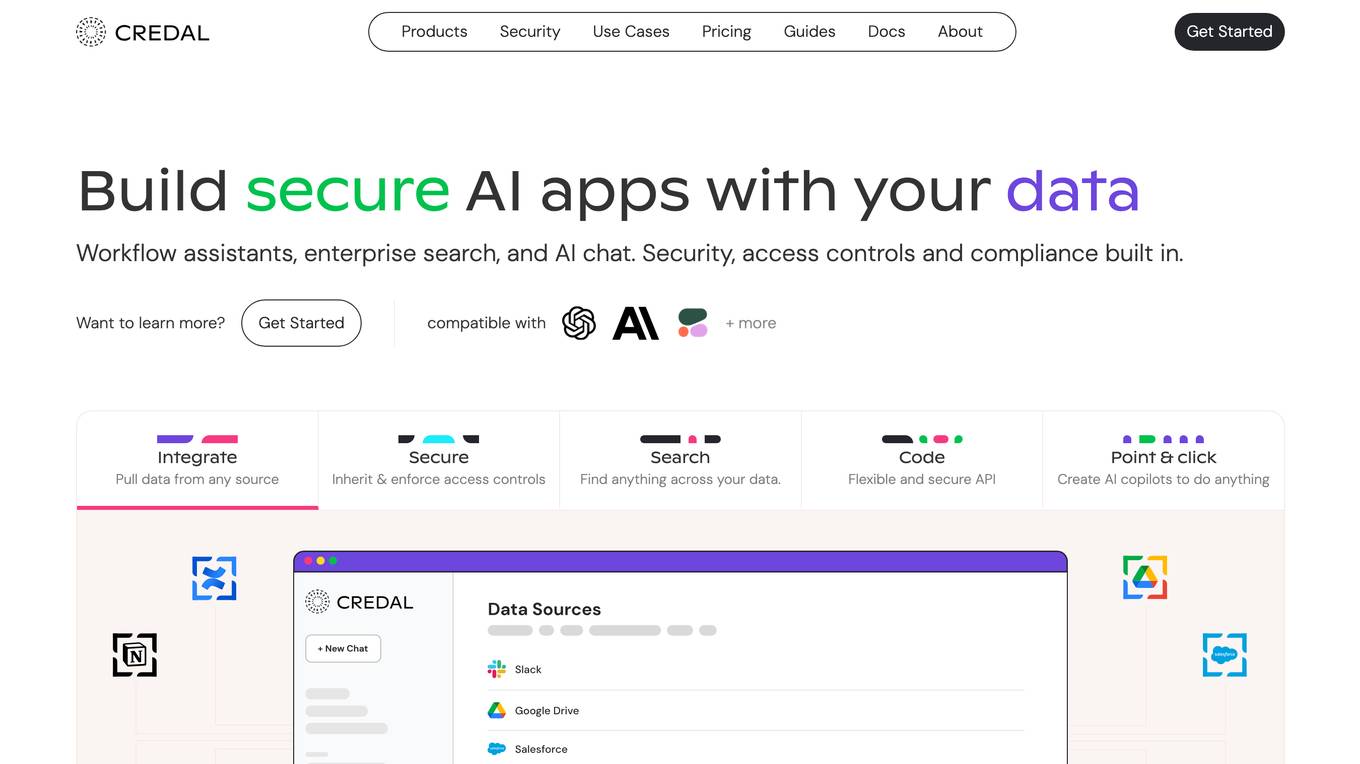

Credal

Credal is an AI tool that allows users to build secure AI assistants for enterprise operations. It enables every employee to create customized AI assistants with built-in security, permissions, and compliance features. Credal supports data integration, access control, search functionalities, and API development. The platform offers real-time sync, automatic permissions synchronization, and AI model deployment with security and compliance measures. It helps enterprises manage ETL pipelines, schedule tasks, and configure data processing. Credal ensures data protection, compliance with regulations like HIPAA, and comprehensive audit capabilities for generative AI applications.

Databricks

Databricks is a data and AI company that provides a unified platform for data, analytics, and AI. The platform includes a variety of tools and services for data management, data warehousing, real-time analytics, data engineering, data science, and AI development. Databricks also offers a variety of integrations with other tools and services, such as ETL tools, data ingestion tools, business intelligence tools, AI tools, and governance tools.

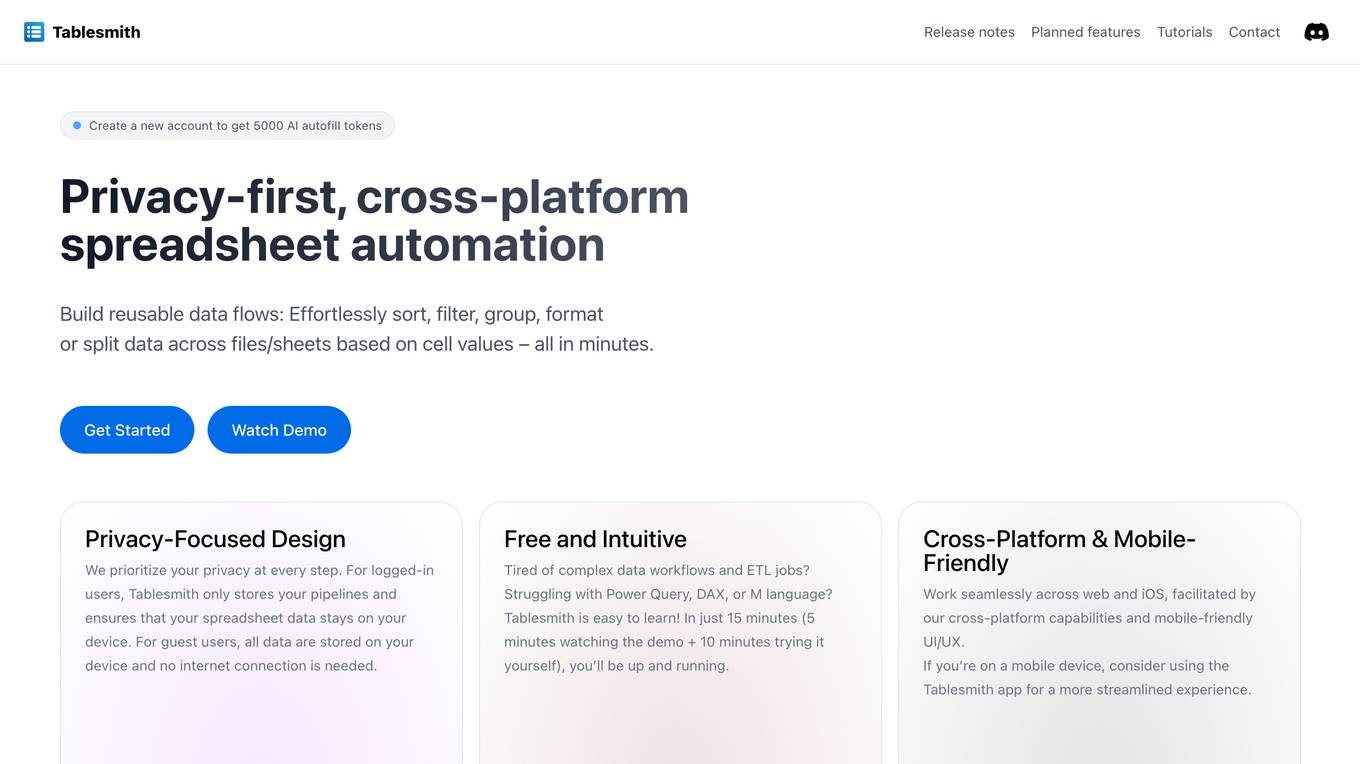

Tablesmith

Tablesmith is a free, privacy-first, and intuitive spreadsheet automation tool that allows users to build reusable data flows, effortlessly sort, filter, group, format, or split data across files/sheets based on cell values. It is designed to be easy to learn and use, with a focus on privacy and cross-platform compatibility. Tablesmith also offers an AI autofill feature that suggests and fills in information based on the user's prompt.

PurpleCube.ai

PurpleCube.ai is an AI-powered platform that revolutionizes data engineering by unifying, automating, and activating data processes. The platform offers real-time Gen AI assistance to enhance data team productivity, efficiency, and accuracy. PurpleCube.ai empowers data experts to drive business innovation, collaborate seamlessly, and deliver impactful business value through advanced analytics and data engineering capabilities. The platform is trusted by various enterprises globally for its comprehensive metadata management, governance, and generative AI features.

11 - Open Source Tools

ethereum-etl-airflow

This repository contains Airflow DAGs for extracting, transforming, and loading (ETL) data from the Ethereum blockchain into BigQuery. The DAGs use the Google Cloud Platform (GCP) services, including BigQuery, Cloud Storage, and Cloud Composer, to automate the ETL process. The repository also includes scripts for setting up the GCP environment and running the DAGs locally.

airbyte_serverless

AirbyteServerless is a lightweight tool designed to simplify the management of Airbyte connectors. It offers a serverless mode for running connectors, allowing users to easily move data from any source to their data warehouse. Unlike the full Airbyte-Open-Source-Platform, AirbyteServerless focuses solely on the Extract-Load process without a UI, database, or transform layer. It provides a CLI tool, 'abs', for managing connectors, creating connections, running jobs, selecting specific data streams, handling secrets securely, and scheduling remote runs. The tool is scalable, allowing independent deployment of multiple connectors. It aims to streamline the connector management process and provide a more agile alternative to the comprehensive Airbyte platform.

PyAirbyte

PyAirbyte brings the power of Airbyte to every Python developer by providing a set of utilities to use Airbyte connectors in Python. It enables users to easily manage secrets, work with various connectors like GitHub, Shopify, and Postgres, and contribute to the project. PyAirbyte is not a replacement for Airbyte but complements it, supporting data orchestration frameworks like Airflow and Snowpark. Users can develop ETL pipelines and import connectors from local directories. The tool simplifies data integration tasks for Python developers.

ezdata

Ezdata is a data processing and task scheduling system developed based on Python backend and Vue3 frontend. It supports managing multiple data sources, abstracting various data sources into a unified data model, integrating chatgpt for data question and answer functionality, enabling low-code data integration and visualization processing, scheduling single and dag tasks, and integrating a low-code data visualization dashboard system.

neo4j-runway

Neo4j Runway is a Python library that simplifies the process of migrating relational data into a graph. It provides tools to abstract communication with OpenAI for data discovery, generate data models, ingestion code, and load data into a Neo4j instance. The library leverages OpenAI LLMs for insights, Instructor Python library for modeling, and PyIngest for data loading. Users can visualize data models using graphviz and benefit from a seamless integration with Neo4j for efficient data migration.

DataEngineeringPilipinas

DataEngineeringPilipinas is a repository dedicated to data engineering resources in the Philippines. It serves as a platform for data engineering professionals to contribute and access high-quality content related to data engineering. The repository provides guidelines for contributing, including forking the repository, making changes, and submitting contributions. It emphasizes the importance of quality, relevance, and respect in the contributions made to the project. By following the guidelines and contributing to the repository, users can help build a valuable resource for the data engineering community in the Philippines and beyond.

dbt-airflow

A Python package that helps Data and Analytics engineers render dbt projects in Apache Airflow DAGs. It enables teams to automatically render their dbt projects in a granular level, creating individual Airflow tasks for every model, seed, snapshot, and test within the dbt project. This allows for full control at the task-level, improving visibility and management of data models within the team.

dataengineering-roadmap

A repository providing basic concepts, technical challenges, and resources on data engineering in Spanish. It is a curated list of free, Spanish-language materials found on the internet to facilitate the study of data engineering enthusiasts. The repository covers programming fundamentals, programming languages like Python, version control with Git, database fundamentals, SQL, design concepts, Big Data, analytics, cloud computing, data processing, and job search tips in the IT field.

airflow-provider-great-expectations

The 'airflow-provider-great-expectations' repository contains a set of Airflow operators for Great Expectations, a Python library used for testing and validating data. The operators enable users to run Great Expectations validations and checks within Apache Airflow workflows. The package requires Airflow 2.1.0+ and Great Expectations >=v0.13.9. It provides functionalities to work with Great Expectations V3 Batch Request API, Checkpoints, and allows passing kwargs to Checkpoints at runtime. The repository includes modules for a base operator and examples of DAGs with sample tasks demonstrating the operator's functionality.

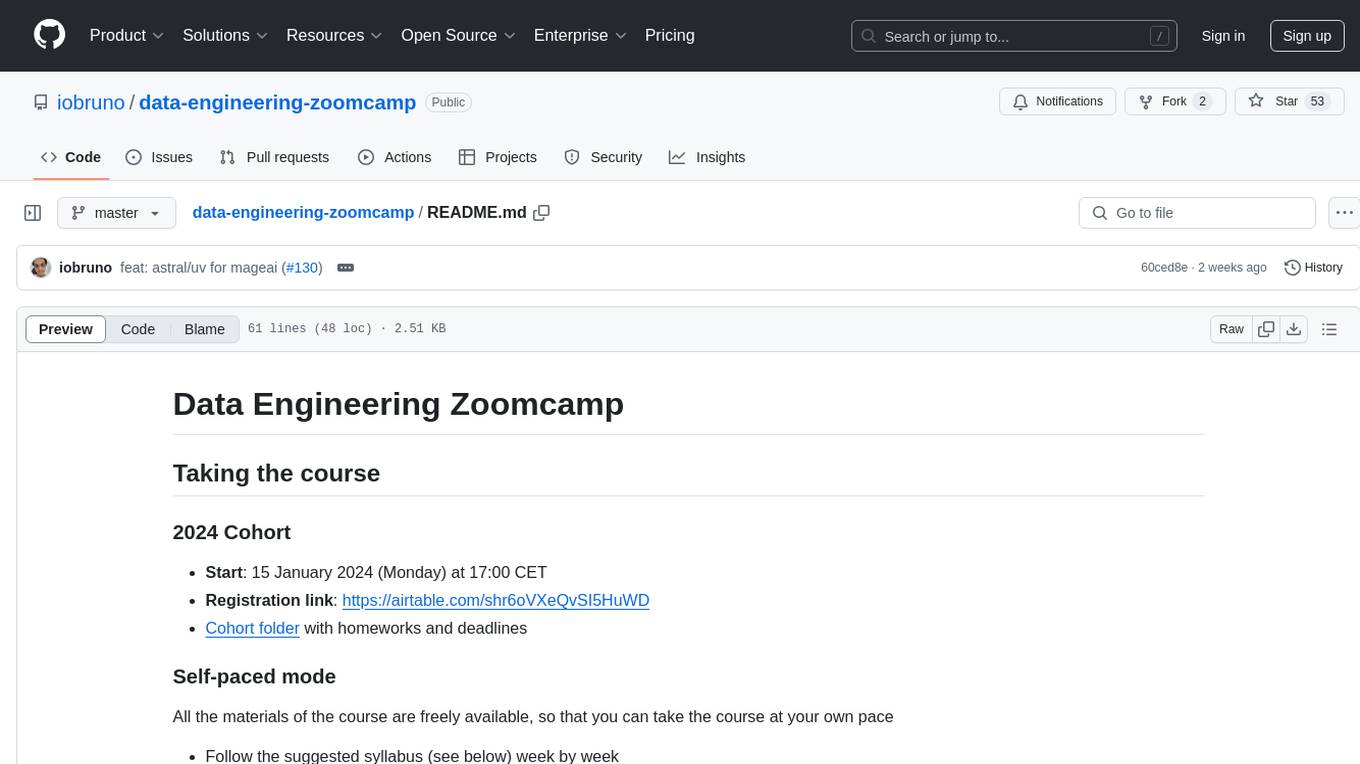

data-engineering-zoomcamp

Data Engineering Zoomcamp is a comprehensive course covering various aspects of data engineering, including data ingestion, workflow orchestration, data warehouse, analytics engineering, batch processing, and stream processing. The course provides hands-on experience with tools like Python, Rust, Terraform, Airflow, BigQuery, dbt, PySpark, Kafka, and more. Students will learn how to work with different data technologies to build scalable and efficient data pipelines for analytics and processing. The course is designed for individuals looking to enhance their data engineering skills and gain practical experience in working with big data technologies.

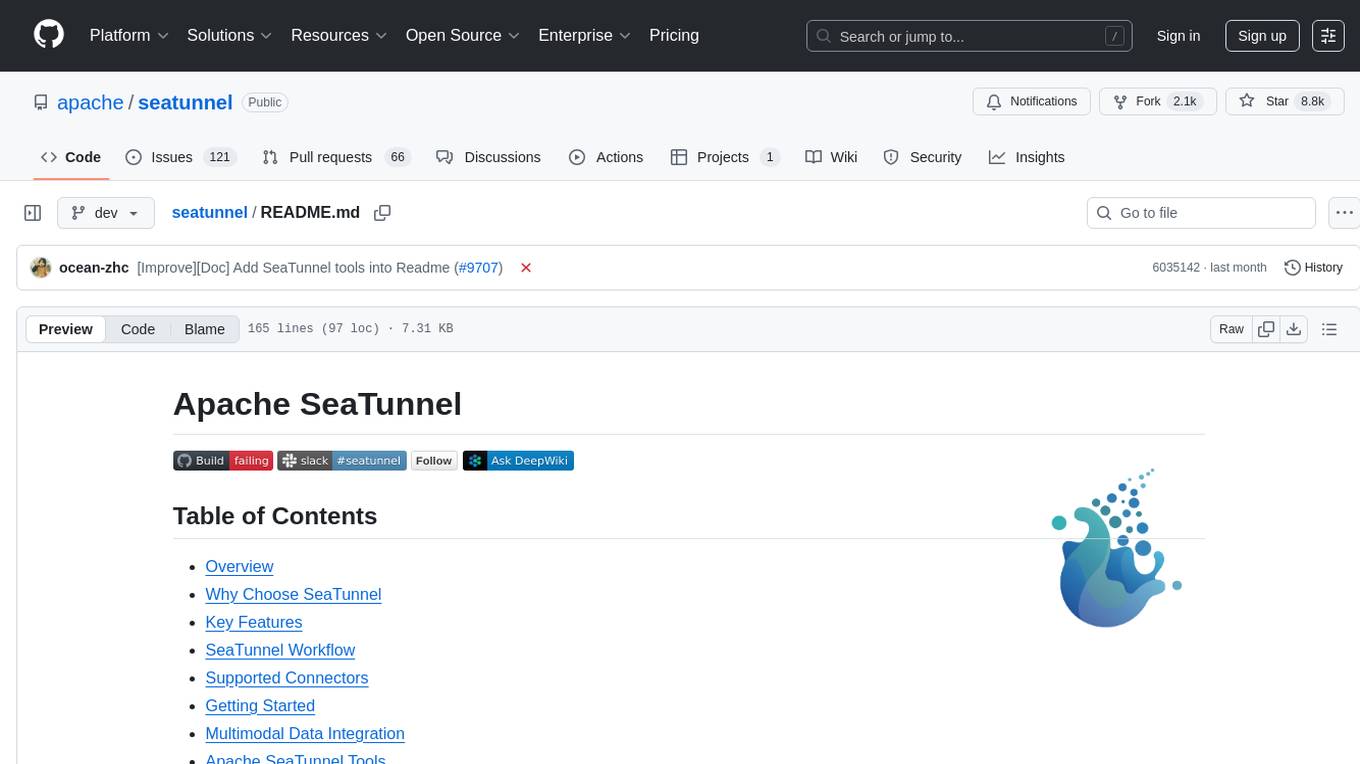

seatunnel

SeaTunnel is a high-performance, distributed data integration tool trusted by numerous companies for synchronizing vast amounts of data daily. It addresses common data integration challenges by seamlessly integrating with diverse data sources, supporting multimodal data integration, complex synchronization scenarios, resource efficiency, and quality monitoring. With over 100 connectors, SeaTunnel offers batch-stream integration, distributed snapshot algorithm, multi-engine support, JDBC multiplexing, and log parsing. It provides high throughput, low latency, real-time monitoring, and supports two job development methods. Users can configure jobs, select execution engines, and parallelize data using source connectors. SeaTunnel also supports multimodal data integration, Apache SeaTunnel tools, real-world use cases, and visual management of jobs through the SeaTunnel Web Project.

1 - OpenAI Gpts

Python Pro

Assistant Python ultra-personnalisé, conçu pour transformer les programmeurs de tous niveaux en maîtres de Python. Spécialisé dans l'analyse approfondie du code, les tutoriels interactifs, et l'optimisation de performance.