Best AI tools for Cloud Operations Engineer

20 - AI tool Sites

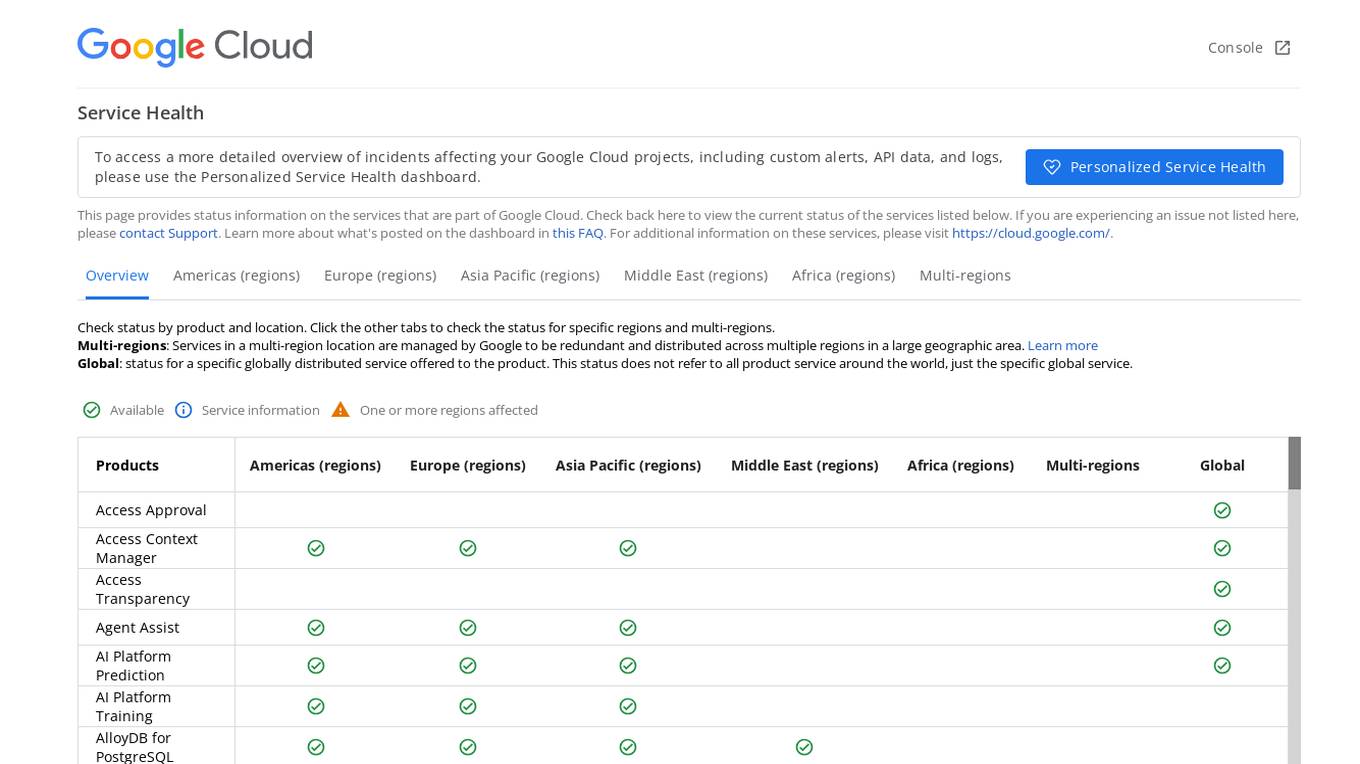

Google Cloud Service Health Console

Google Cloud Service Health Console provides status information on the services that are part of Google Cloud. It allows users to check the current status of services, view detailed overviews of incidents affecting their Google Cloud projects, and access custom alerts, API data, and logs through the Personalized Service Health dashboard. The console also offers a global view of the status of specific globally distributed services and allows users to check the status by product and location.

Cirroe AI

Cirroe AI is an intelligent chatbot designed to help users deploy and troubleshoot their AWS cloud infrastructure quickly and efficiently. With Cirroe AI, users can experience seamless automation, reduced downtime, and increased productivity by simplifying their AWS cloud operations. The chatbot allows for fast deployments, intuitive debugging, and cost-effective solutions, ultimately saving time and boosting efficiency in managing cloud infrastructure.

cloudNito

cloudNito is an AI-driven platform that specializes in cloud cost optimization and management for businesses using AWS services. The platform offers automated cost optimization, comprehensive insights and analytics, unified cloud management, anomaly detection, cost and usage explorer, recommendations for waste reduction, and resource optimization. By leveraging advanced AI solutions, cloudNito aims to help businesses efficiently manage their AWS cloud resources, reduce costs, and enhance performance.

CloudKeeper

CloudKeeper is a comprehensive cloud cost optimization partner that offers solutions for AWS, Azure, and GCP. The platform provides services such as rate optimization, usage optimization, cloud consulting & support, and cloud cost visibility. CloudKeeper combines group buying, commitments management, expert consulting, and analytics to reduce cloud costs and maximize value. With a focus on savings, visibility, and services bundled together, CloudKeeper aims to simplify the cloud cost optimization journey for businesses of all sizes.

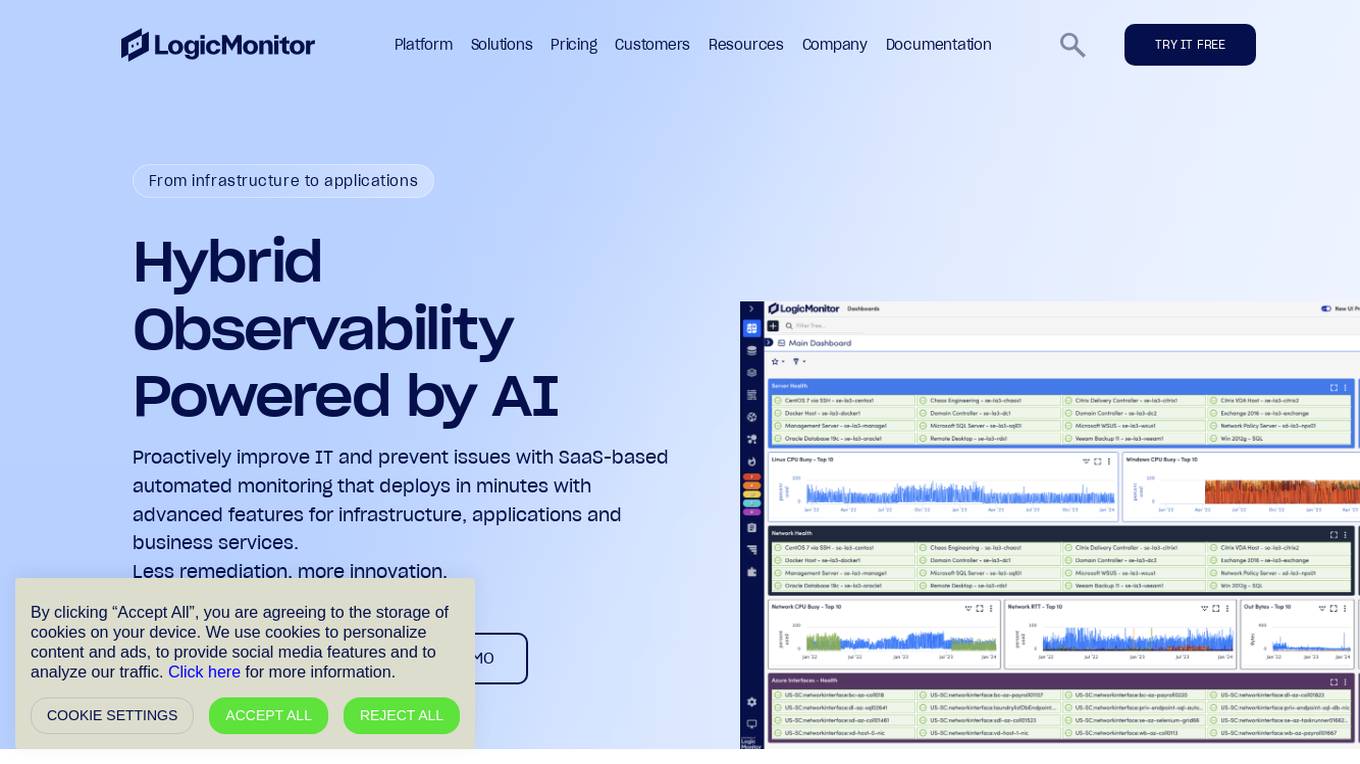

LogicMonitor

LogicMonitor is a cloud-based infrastructure monitoring platform that provides real-time insights and automation for comprehensive, seamless monitoring with agentless architecture. It offers a unified platform for monitoring infrastructure, applications, and business services, with advanced features for hybrid observability. LogicMonitor's AI-driven capabilities simplify complex IT ecosystems, accelerate incident response, and empower organizations to thrive in the digital landscape.

Microtica

Microtica is an AI-powered cloud delivery platform that offers a comprehensive suite of DevOps tools to help users build, deploy, and optimize their infrastructure efficiently. With features like AI Incident Investigator, AI Infrastructure Builder, Kubernetes deployment simplification, alert monitoring, pipeline automation, and cloud monitoring, Microtica aims to streamline the development and management processes for DevOps teams. The platform provides real-time insights, cost optimization suggestions, and guided deployments, making it a valuable tool for businesses looking to enhance their cloud infrastructure operations.

Palo Alto Networks

Palo Alto Networks is a cybersecurity company offering advanced security solutions powered by Precision AI to protect modern enterprises from cyber threats. The company provides network security, cloud security, and AI-driven security operations to defend against AI-generated threats in real time. Palo Alto Networks aims to simplify security and achieve better security outcomes through platformization, intelligence-driven expertise, and proactive monitoring of sophisticated threats.

n8n

n8n is a workflow automation tool with advanced AI capabilities. It is a popular platform for technical teams to automate workflows, integrate various services, and build autonomous agents. With over 400 integrations, n8n enables users to save time, streamline operations, and enhance security through AI-powered solutions. The tool supports self-hosting and external libraries, and offers a user-friendly interface for both coders and non-coders.

CloudDefense.AI

CloudDefense.AI is an industry-leading multi-layered Cloud Native Application Protection Platform (CNAPP) that safeguards cloud infrastructure and cloud-native apps with expertise, precision, and confidence. It offers comprehensive cloud security solutions, vulnerability management, compliance, and application security testing. The platform utilizes advanced AI technology to proactively detect and analyze real-time threats, ensuring robust protection for businesses against cyber threats.

Microtica AI Deployment Tool

Microtica AI Deployment Tool is an advanced platform that leverages artificial intelligence to streamline the deployment process of cloud infrastructure. The tool offers features such as incident investigation with AI assistance, designing and deploying cloud infrastructure using natural language, and automated deployments. With Microtica, users can easily analyze logs, metrics, and system state to identify root causes and solutions, as well as get infrastructure-as-code and architecture diagrams. The platform aims to simplify the deployment process and enhance productivity by integrating AI capabilities into the workflow.

KubeHelper

KubeHelper is an AI-powered tool designed to reduce Kubernetes downtime by providing troubleshooting solutions and command searches. It seamlessly integrates with Slack, allowing users to interact with their Kubernetes cluster in plain English without the need to remember complex commands. With features like troubleshooting steps, command search, infrastructure management, scaling capabilities, and service disruption detection, KubeHelper aims to simplify Kubernetes operations and enhance system reliability.

Abnormal

Abnormal is an AI-powered platform that leverages superhuman understanding of human behavior to protect against email attacks such as phishing, social engineering, and account takeovers. The platform offers unified protection across email and cloud applications, behavioral anomaly detection, account compromise detection, data security, and autonomous AI agents for security operations. Abnormal is recognized as a leader in email security and AI-native security, trusted by over 3,000 customers, including 20% of the Fortune 500. The platform aims to autonomously protect humans, reduce risks, save costs, accelerate AI adoption, and provide industry-leading security solutions.

Abnormal Security

Abnormal Security is an AI-powered platform that leverages superhuman understanding of human behavior to protect against email threats such as phishing, social engineering, and account takeovers. The platform is trusted by over 3,000 customers, including 25% of the Fortune 500 companies. Abnormal Security offers a comprehensive cloud email security solution, behavioral anomaly detection, SaaS security, and autonomous AI security agents to provide multi-layered protection against advanced email attacks. The platform is recognized as a leader in email security and AI-native security, delivering unmatched protection and reducing the risk of phishing attacks by 90%.

Wallaroo.AI

Wallaroo.AI is an AI inference platform that offers production-grade AI inference microservices optimized on OpenVINO for cloud and Edge AI application deployments on CPUs and GPUs. It provides hassle-free AI inferencing for any model, any hardware, anywhere, with ultrafast turnkey inference microservices. The platform enables users to deploy, manage, observe, and scale AI models effortlessly, reducing deployment costs and time-to-value significantly.

ZENfra.ai

ZENfra.ai is an AI-powered platform that offers innovative solutions for InfraOps, SecOps, FinOps, and more. It provides cutting-edge technologies and industry expertise to help organizations achieve unparalleled success in the digital landscape. The platform features solutions for cybersecurity risk management, financial management, IT infrastructure oversight, migration insights, and observability. ZENfra.ai is committed to excellence, providing comprehensive services to transform the way businesses operate, secure, and optimize their digital assets.

Cyguru

Cyguru is an all-in-one cloud-based AI Security Operation Center (SOC) that offers a comprehensive range of features for a robust and secure digital landscape. Its Security Operation Center is the cornerstone of its service domain, providing AI-Powered Attack Detection, Continuous Monitoring for Vulnerabilities and Misconfigurations, Compliance Assurance, SecPedia: Your Cybersecurity Knowledge Hub, and Advanced ML & AI Detection. Cyguru's AI-Powered Analyst promptly alerts users to any suspicious behavior or activity that demands attention, ensuring timely delivery of notifications. The platform is accessible to everyone, with up to three free servers and subsequent pricing that is more than 85% below the industry average.

Seldon

Seldon is an MLOps platform that helps enterprises deploy, monitor, and manage machine learning models at scale. It provides a range of features to help organizations accelerate model deployment, optimize infrastructure resource allocation, and manage models and risk. Seldon is trusted by the world's leading MLOps teams and has been used to install and manage over 10 million ML models. With Seldon, organizations can reduce deployment time from months to minutes, increase efficiency, and reduce infrastructure and cloud costs.

MixMode

MixMode is the world's most advanced AI for threat detection, offering a dynamic threat detection platform that utilizes patented Third Wave AI technology. It provides real-time detection of known and novel attacks with high precision, self-supervised learning capabilities, and context-awareness to defend against modern threats. MixMode empowers modern enterprises with unprecedented speed and scale in threat detection, delivering unrivaled capabilities without the need for predefined rules or human input. The platform is trusted by top security teams and offers rapid deployment, customization to individual network dynamics, and state-of-the-art AI-driven threat detection.

Darktrace

Darktrace is a cybersecurity platform that leverages AI technology to provide proactive protection against cyber threats. It offers cloud-native AI security solutions for networks, emails, cloud environments, identity protection, and endpoint security. Darktrace's AI Analyst investigates alerts at the speed and scale of AI, mimicking human analyst behavior. The platform also includes services such as 24/7 expert support and incident management. Darktrace's AI is built on a unique approach where it learns from the organization's data to detect and respond to threats effectively. The platform caters to organizations of all sizes and industries, offering real-time detection and autonomous response to known and novel threats.

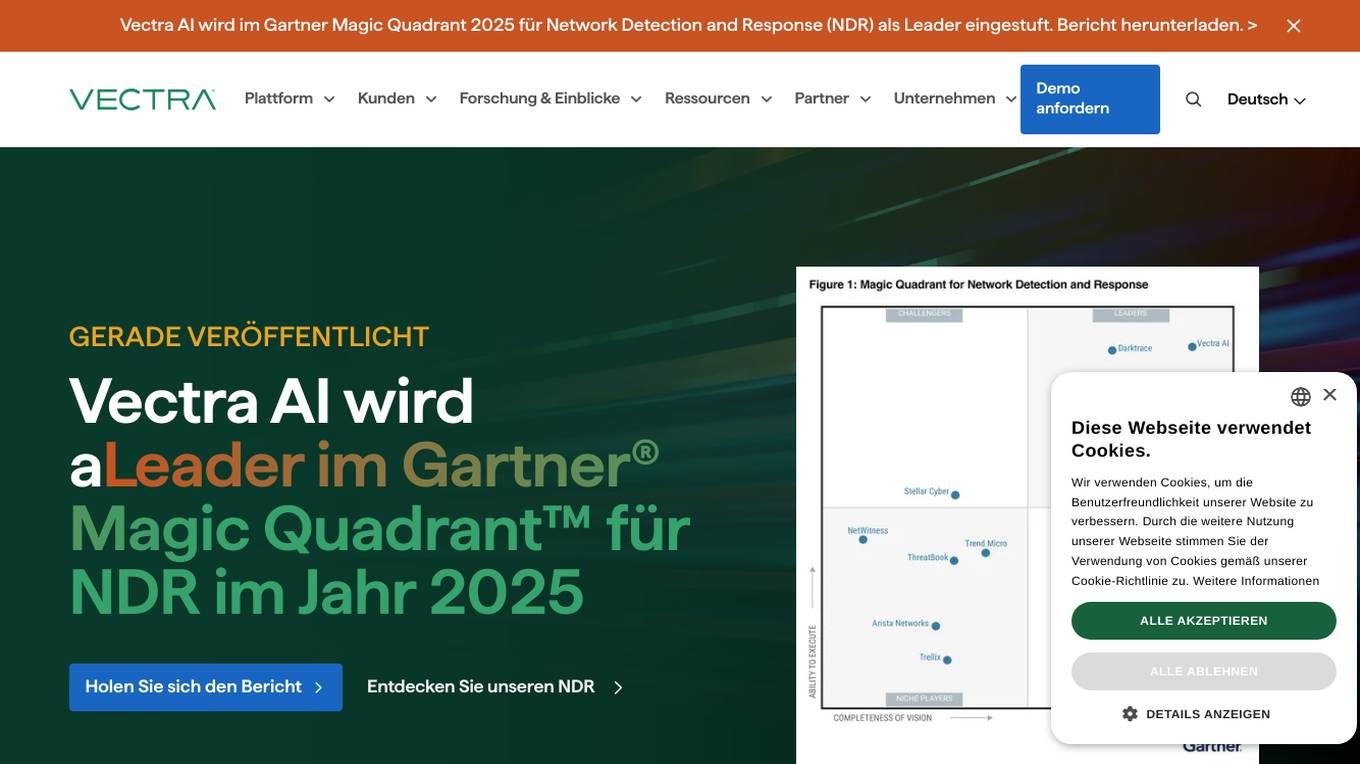

Vectra AI

Vectra AI is a leading cybersecurity AI application that stops attacks that others cannot. It is recognized as a leader in the 2025 Gartner Magic Quadrant for Network Detection and Response (NDR). Vectra AI's platform protects modern networks from advanced threats by providing real-time attack signal intelligence and AI-driven detections. It equips security analysts with the information needed to quickly stop attacks across various security scenarios. The application covers a wide range of security areas such as SOC modernization, SIEM optimization, IDS replacement, EDR extension, cloud resilience, and more.

5 - Open Source Tools

cb-tumblebug

CB-Tumblebug (CB-TB) is a system for managing multi-cloud infrastructure consisting of resources from multiple cloud service providers. It supports various cloud providers and resource types, with ongoing development and localization efforts. Users can deploy a multi-cloud infrastructure with GPUs, run multiple LLMs in parallel, and use LLM-related scripts. Building from source requires Linux, Docker, Docker Compose, and Golang, and CB-TB can be run with Docker Compose or from the Makefile. The project documentation covers an overview, features, architecture, prerequisites, contribution guidelines, and a list of contributors. The tool is released under an open-source license.

robusta

Robusta is a tool designed to enhance Prometheus notifications for Kubernetes environments. It offers features such as smart grouping to reduce notification spam, AI investigation for alert analysis, alert enrichment with additional data like pod logs, self-healing capabilities for defining auto-remediation rules, advanced routing options, problem detection without PromQL, change-tracking for Kubernetes resources, auto-resolve functionality, and integration with various external systems like Slack, Teams, and Jira. Users can utilize Robusta with or without Prometheus, and it can be installed alongside existing Prometheus setups or as part of an all-in-one Kubernetes observability stack.

AI-CloudOps

AI+CloudOps is a cloud-native operations management platform designed for enterprises. It aims to integrate artificial intelligence technology with cloud-native practices to significantly improve the efficiency and quality of operations work. The platform offers features such as AIOps for monitoring data analysis and alerts, multi-dimensional permission management, a visual CMDB for resource management, an efficient ticketing system, deep integration with Prometheus for real-time monitoring, and unified Kubernetes management for cluster optimization.

awesome-mcp-servers-devops

This repository, 'awesome-mcp-servers-devops', is a curated list of Model Context Protocol (MCP) servers for DevOps workflows. It includes servers for various aspects of DevOps such as infrastructure, CI/CD, monitoring, security, and cloud operations. The repository lists MCP servers for tools like GitHub, GitLab, Azure DevOps, Gitea, Terraform, Vault, Pulumi, Kubernetes, Docker Hub, Portainer, and Qovery; command-line and local operation tools; browser automation tools; code execution tools; coding agents; aggregators; CI/CD tools like Argo CD, Jenkins, GitHub Actions, and Codemagic; DevOps visibility tools; build tools; cloud platforms like AWS, Azure, Cloudflare, and Alibaba Cloud; observability tools like Grafana, Datadog, Prometheus, VictoriaMetrics, and Alertmanager; APM and monitoring tools; security tools like Snyk and Semgrep, plus community security servers; collaboration tools like Atlassian and Jira; project management tools; service desks; Notion; and more.

sre-agent

SRE Agent is an open-source AI agent designed to help Site Reliability Engineers (SREs) debug issues, maintain healthy Kubernetes systems, and simplify DevOps tasks. With a command-line interface (CLI), users can interact directly with the agent to diagnose issues, report diagnostics, and streamline operations. The agent supports root cause debugging, Kubernetes log querying, GitHub codebase search, and CLI-powered interactions. It is powered by the Model Context Protocol (MCP) for seamless connectivity. Users can configure AWS credentials, GitHub integration, and an Anthropic API key to start monitoring deployments and diagnosing issues. The tool is structured with Python services and TypeScript MCP servers for development and maintenance.

20 - OpenAI GPTs

Cloud Architecture Advisor

Guides cloud strategy and architecture to optimize business operations.

Cloud Networking Advisor

Optimizes cloud-based networks for efficient organizational operations.

Cloud Price

Your up-to-date GCP, AWS and Azure pricing expert with the latest virtual machines details.

Cloud Scholar

Super astronomer identifying clouds in English and Chinese, sharing facts in Chinese.

cloud exams coach

AI Cloud Computing (Engineering, Architecture, DevOps) Certifications Coach for AWS, GCP, and Azure. I provide timed mock exams.

Cloud Services Management Advisor

Manages and optimizes organization's cloud resources and services.

Cloud Certifications

AI Cloud Certification Assistant: Google Cloud expert with timed exams and specific service exercises.

AzurePilot | Steer & Streamline Your Cloud Costs🌐

Specialized advisor on Azure costs and optimizations.

Alexandre Leroy : Architecte de Solutions Cloud

Cloud architect at KingLand and passionate about nature. Cloud architecture design, expertise in cloud solutions, capacity for technological innovation, project management skills, cross-departmental collaboration.

Cloud Computing

Expert in cloud computing, offering insights on services, security, and infrastructure.

TMF Cloud Diagram Assistant

Specializes in PlantUML diagrams with structured API and microservice groups.

Commerce Cloud Guru

Professional voice for SFCC B2C Commerce Cloud expertise. 🔒 Unlock the full potential of B2C Commerce Cloud

JIMAI - Cloud Researcher

Cybernetic humanoid expert in extraterrestrial tech, driven to merge past and future.

Javascript Cloud services coding assistant

Expert on Google Cloud services with JavaScript.