Best AI tools for Process Evaluation Data

20 - AI tool Sites

JobXRecruiter

JobXRecruiter is an AI-powered CV review tool designed for recruiters to streamline the candidate evaluation process. It automates the review of resumes, provides detailed candidate analysis, and helps recruiters save time by focusing on hiring rather than manual screening. The tool offers a 1-minute setup, reduces candidate evaluation time, and eliminates tedious screening tasks. With JobXRecruiter, recruiters can create projects for each vacancy, receive match scores for candidates, and easily shortlist the best candidates without opening individual CVs. The application is secure, efficient, and a game-changer for recruiters looking to optimize their hiring process.

Future AGI

Future AGI is an AI data management platform that aims to achieve 99% accuracy in AI applications across software and hardware. It provides an evaluation and optimization platform for enterprises to improve the performance of their AI models. Its features include generating and managing diverse synthetic datasets, testing and analyzing agentic workflow configurations, assessing agent performance, improving LLM application performance, monitoring and protecting applications in production, and evaluating AI across different modalities. The platform also claims 10x faster processing and a focus on trustworthy, accurate, and responsible AI.

MTestHub

MTestHub is an all-in-one recruiting and assessment automation solution that streamlines the hiring process for organizations. It harnesses AI technology to identify top talent, provides an authentic online examination platform, and offers features such as auto screening, shortlisting, efficient interviews, and post-shortlisting actions. The platform aims to enhance recruitment efficiency, improve candidate experience, and reduce administrative burdens through automation and data-driven insights.

Reppls

Reppls is an AI interview agent tool designed for data-driven hiring processes. It helps companies interview all applicants to identify the right talent hidden behind uninformative CVs. The tool integrates with everyday tools such as Zoom and MS Teams and provides deep technical assessments in the early stages of hiring, allowing HR specialists to focus on evaluating soft skills. Reppls aims to transform the hiring process by reducing the time spent on screening, interviewing, and assessing candidates.

Weights & Biases

Weights & Biases is an AI tool that offers documentation, guides, tutorials, and support for using AI models in applications. The platform provides two main products: W&B Weave for integrating AI models into code and W&B Models for building custom AI models. Users can access features such as tracing, output evaluation, cost estimates, hyperparameter sweeps, and a model registry. Weights & Biases aims to simplify the process of working with AI models and to improve model reproducibility.

Deal Protectors

Deal Protectors is an AI-driven website designed to help users evaluate their car deals to ensure they are getting the best possible price. The platform allows users to upload their deal or input its essential details for AI analysis. Deal Protectors aims to provide transparency, empowerment, and community support in the car purchasing process by comparing deals with national and regional averages. Additionally, the website features a protection forum for sharing dealership experiences, a game called 'Beat The Dealer,' and testimonials from satisfied customers.

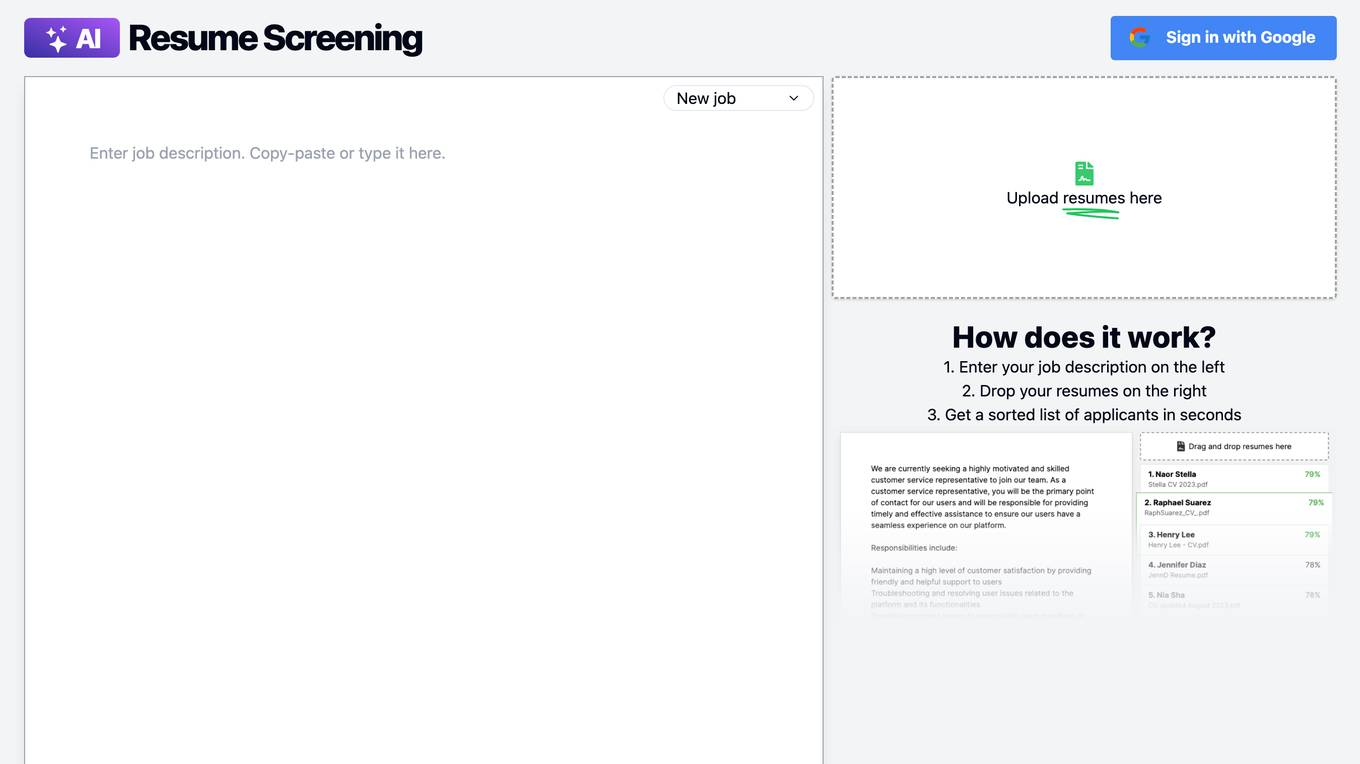

Resume Screening AI

Resume Screening AI is an AI application designed to help recruiters, hiring managers, and HR managers screen resumes in bulk efficiently and accurately. By leveraging AI algorithms, the tool automates the screening process, saving time and improving the quality of hire. It offers benefits such as time and cost savings, improved accuracy, enhanced objectivity, and a better candidate experience. The tool uses end-to-end encryption for data security and stores resume file fingerprints and parsed text for easy retrieval. With a focus on optimizing the recruitment process, Resume Screening AI is a transformative solution for businesses looking to attract and identify the most suitable candidates.

YellowGoose

YellowGoose is an AI tool that offers intelligent analysis of resumes. It utilizes advanced algorithms to extract and analyze key information from resumes, helping users to streamline the recruitment process. With YellowGoose, users can quickly evaluate candidates and make informed hiring decisions based on data-driven insights.

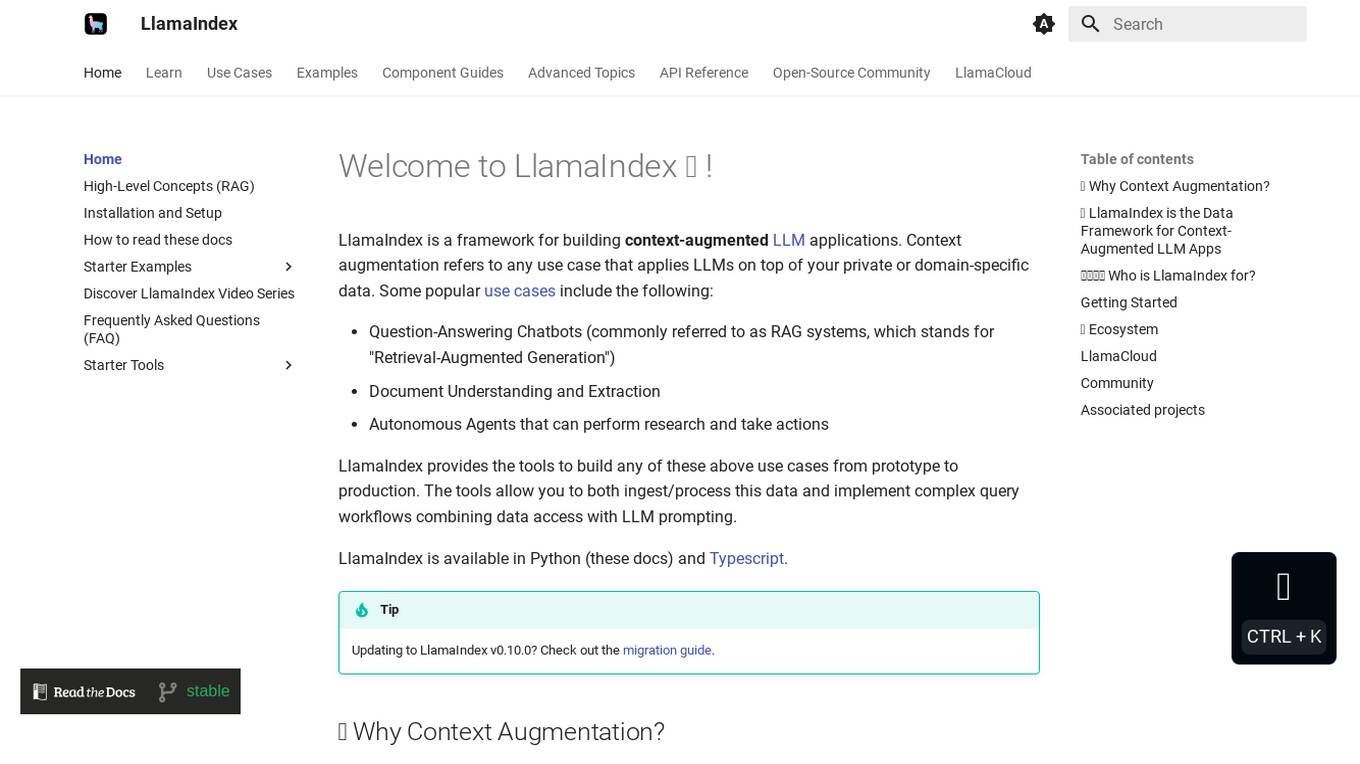

LlamaIndex

LlamaIndex is a framework for building context-augmented Large Language Model (LLM) applications. It provides tools to ingest and process data, implement complex query workflows, and build applications like question-answering chatbots, document understanding systems, and autonomous agents. LlamaIndex enables context augmentation by combining LLMs with private or domain-specific data, offering data connectors, data indexes, query engines for natural language access, chat engines, agents, and observability/evaluation integrations. It caters to users of all levels, from beginners to advanced developers, and is available in Python and TypeScript.

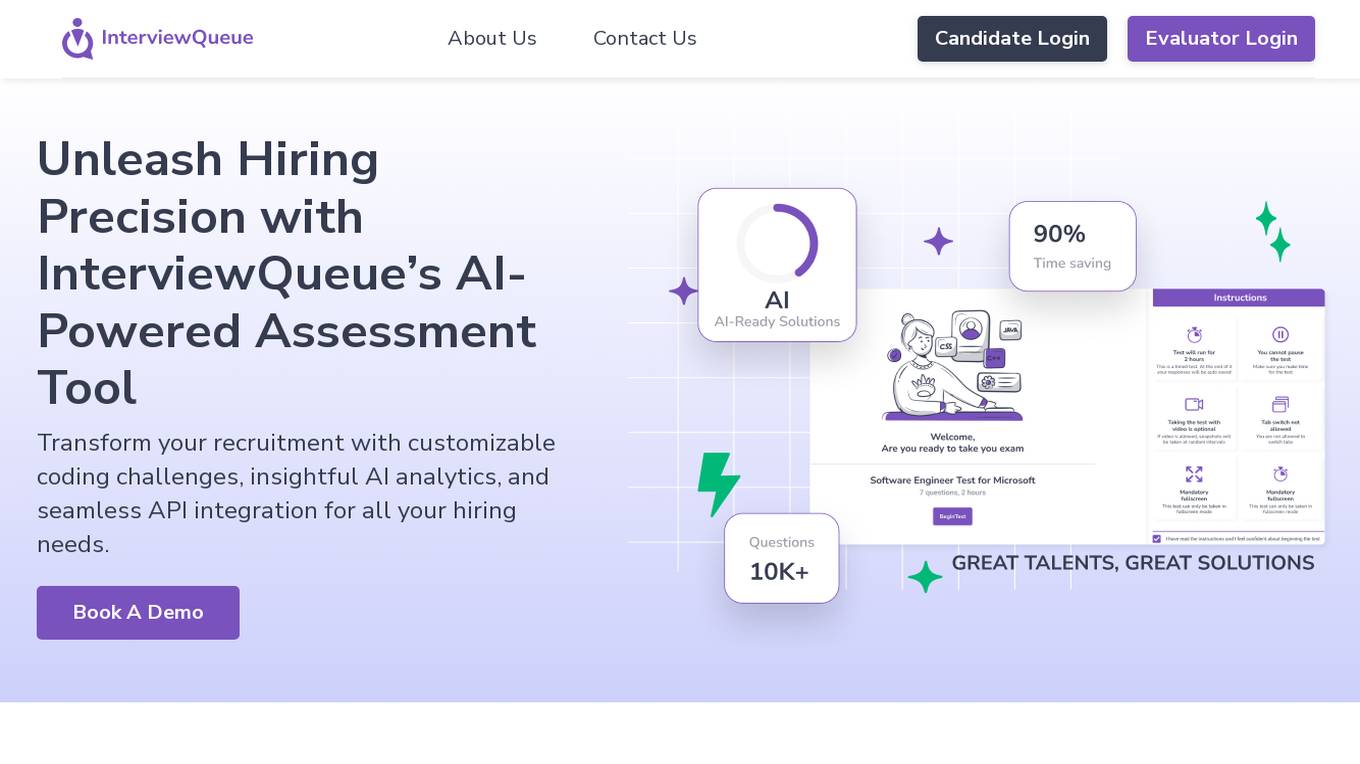

InterviewQueue

InterviewQueue is an AI-powered online assessment platform for recruitment. It offers customizable coding challenges and assessments, AI-based evaluation and analytics, and API integration with existing hiring workflows. The platform aims to streamline recruitment, provide objective evaluations, support data-driven hiring decisions, and improve the candidate experience.

AI Elections Accord

AI Elections Accord is a tech accord aimed at combating the deceptive use of AI in the 2024 elections. It sets expectations for managing risks related to deceptive AI election content on large-scale platforms. The accord focuses on prevention, provenance, detection, responsive protection, evaluation, public awareness, and resilience to safeguard the democratic process. It emphasizes collective efforts, education, and the development of defensive tools to protect public debate and build societal resilience against deceptive AI content.

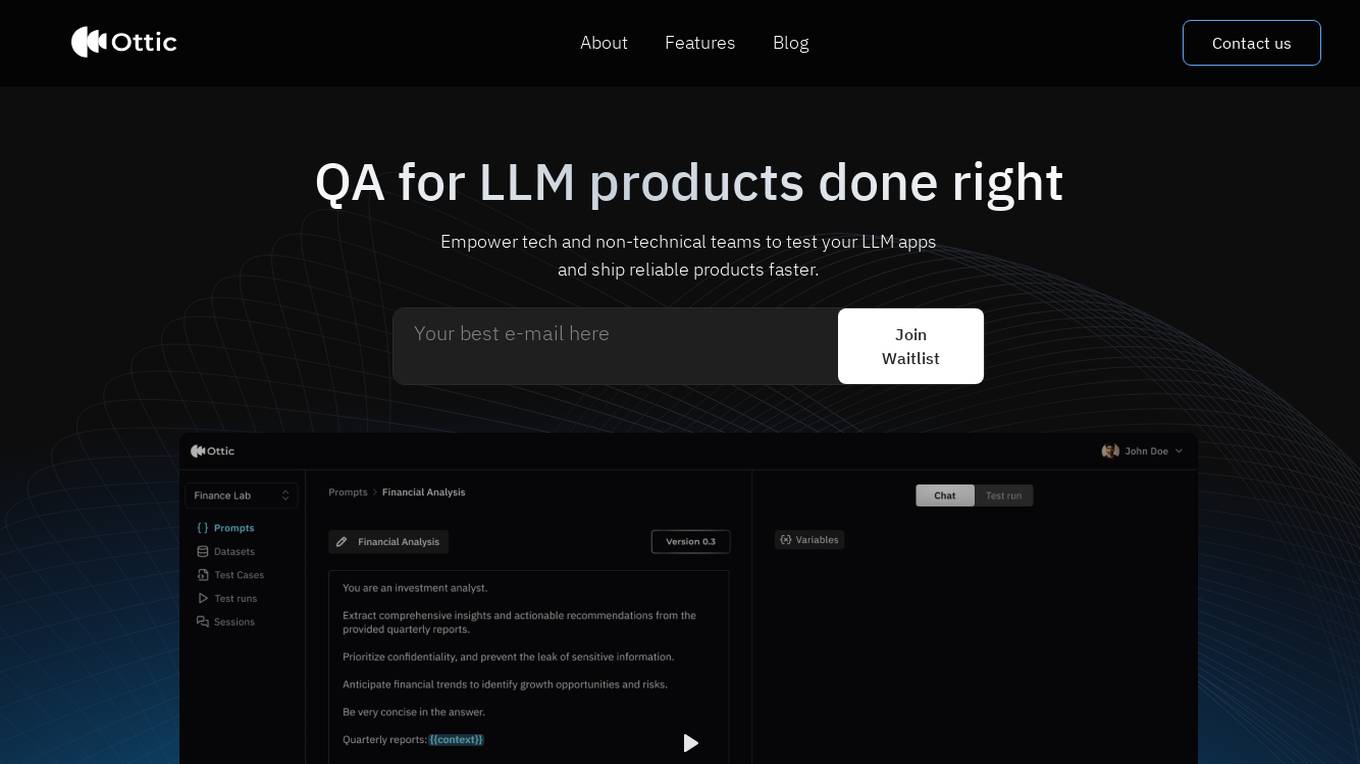

Ottic

Ottic is an AI tool designed to empower both technical and non-technical teams to test Large Language Model (LLM) applications efficiently and accelerate the development cycle. It offers features such as a 360° view of the QA process, end-to-end test management, comprehensive LLM evaluation, and real-time monitoring of user behavior. Ottic aims to bridge the gap between technical and non-technical team members, ensuring seamless collaboration and reliable product delivery.

HappyML

HappyML is an AI tool designed to assist users in machine learning tasks. It provides a user-friendly interface for running machine learning algorithms without the need for complex coding. With HappyML, users can easily build, train, and deploy machine learning models for various applications. The tool offers a range of features such as data preprocessing, model evaluation, hyperparameter tuning, and model deployment. HappyML simplifies the machine learning process, making it accessible to users with varying levels of expertise.

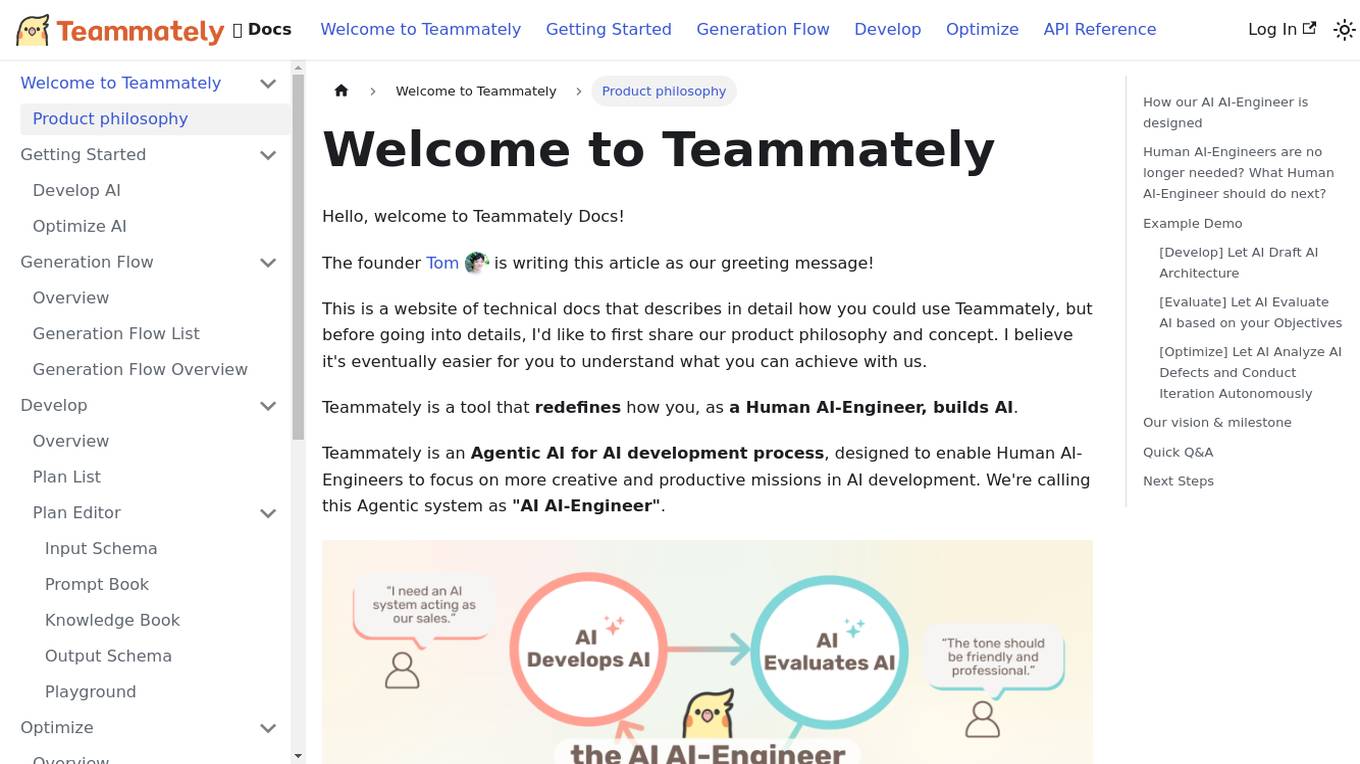

Teammately

Teammately is an AI tool that redefines how Human AI-Engineers build AI. It is an agentic AI for the AI development process, designed to let Human AI-Engineers focus on more creative and productive work. Teammately follows the best practices of Human LLM DevOps and offers features like Development Prompt Engineering, Knowledge Tuning, Evaluation, and Optimization to assist in the AI development process. The tool aims to change AI engineering by letting AI AI-Engineers handle technical tasks while Human AI-Engineers focus on planning and on aligning AI with human preferences and requirements.

LifeShack

LifeShack is an AI-powered job search tool that revolutionizes the job application process. It automates job searching, evaluation, and application submission, saving users time and increasing their chances of landing top-notch opportunities. With features like automatic job matching, AI-optimized cover letters, and tailored resumes, LifeShack streamlines the job search experience and provides peace of mind to job seekers.

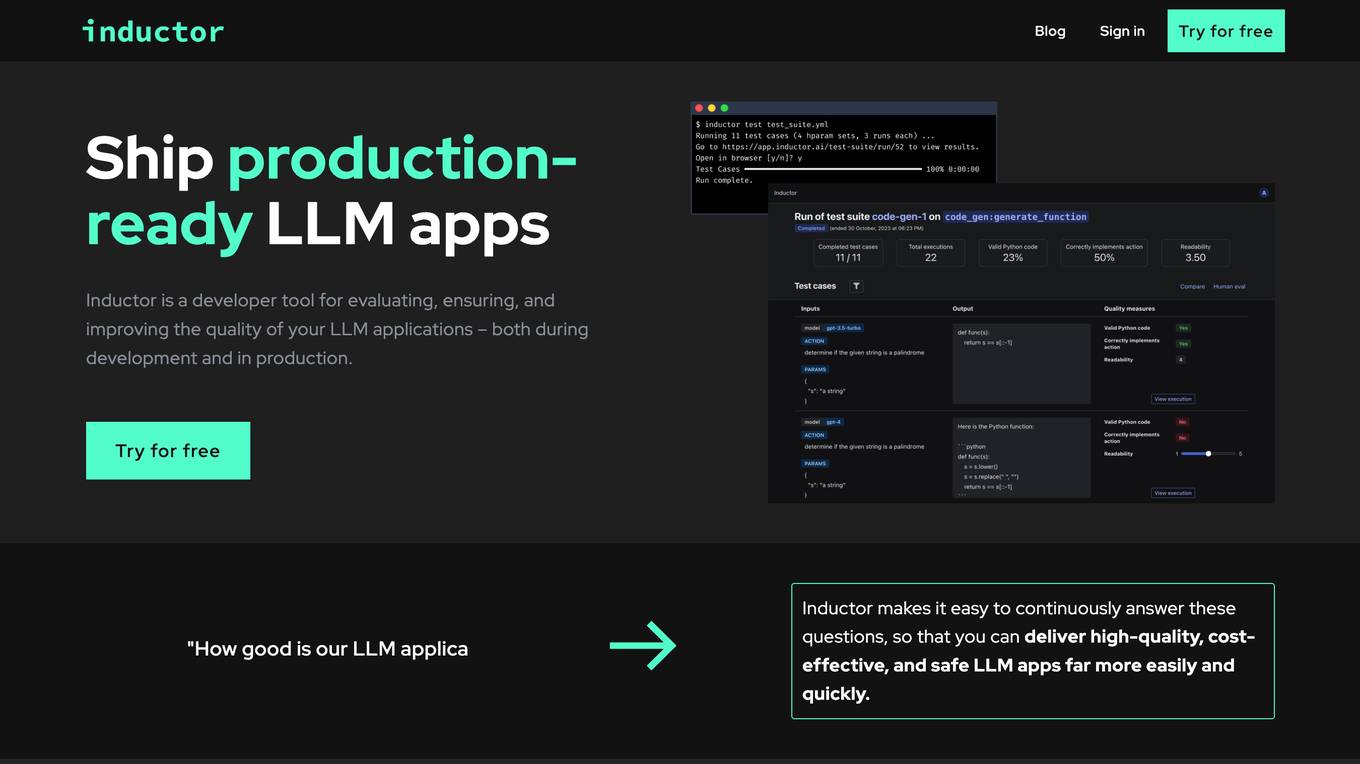

Inductor

Inductor is a developer tool for evaluating, ensuring, and improving the quality of LLM applications, both during development and in production. It provides a workflow for continuous testing and evaluation during development, so that teams always know the quality of their LLM app. Developers can systematically improve quality and cost-effectiveness by understanding the app's behavior and quickly testing different app variants, and can rigorously assess that behavior before deployment. Once the app is live, Inductor monitors traffic to detect and resolve issues, analyzes usage to guide improvements, and feeds the results back into the development process. It also supports collaboration between engineering and other roles by collecting human feedback from non-engineering stakeholders (e.g., PM, UX, or subject matter experts) to ensure that the LLM app is user-ready.

Langfuse

Langfuse is an AI tool that offers the Langfuse TypeScript SDK v4 for building and debugging Large Language Model (LLM) applications. It provides features such as tracing, prompt management, evaluation, and metrics to improve the performance of LLM applications. Langfuse is backed by a team of experts and offers integrations with various platforms and SDKs. The tool aims to simplify the development of complex LLM applications and improve overall efficiency.

Weavel

Weavel is an AI tool designed to revolutionize prompt engineering for large language models (LLMs). It offers features such as tracing, dataset curation, batch testing, and evaluations to enhance the performance of LLM applications. Weavel enables users to continuously optimize prompts using real-world data, prevent performance regression with CI/CD integration, and engage in human-in-the-loop interactions for scoring and feedback. Ape, the AI prompt engineer, outperforms competitors on benchmark tests and ensures seamless integration and continuous improvement specific to each user's use case. With Weavel, users can effortlessly evaluate LLM applications without the need for pre-existing datasets, streamlining the assessment process and enhancing overall performance.

Rawbot

Rawbot is an AI model comparison tool designed to simplify the selection process by enabling users to identify and understand the strengths and weaknesses of various AI models. It allows users to compare AI models based on performance optimization, strengths and weaknesses identification, customization and tuning, cost and efficiency analysis, and informed decision-making. Rawbot is a user-friendly platform that caters to researchers, developers, and business leaders, offering a comprehensive solution for selecting the best AI models tailored to specific needs.

pymetrics

pymetrics is an AI-powered soft skills platform that revolutionizes the hiring and talent management process by leveraging data-driven behavioral insights and audited AI technology. The platform aims to create a more efficient, effective, and fair recruitment process across the talent lifecycle. pymetrics offers solutions for talent acquisition, workforce transformation, mobility, reskilling, learning and development, and soft skills assessment. It provides custom AI algorithms tailored to each company's unique needs, ensuring unbiased candidate evaluation and suggesting optimal job matches.

1 - Open Source AI Tools

llm-jp-eval

llm-jp-eval is a tool designed to automatically evaluate Japanese large language models across multiple datasets. It converts existing Japanese evaluation data into evaluation datasets for text generation tasks, runs evaluations of large language models across multiple datasets, and generates instruction data (jaster) in the same format as the evaluation data prompts. Users manage the evaluation settings through a config file, which is loaded with Hydra. The tool supports saving evaluation results and logs using wandb. Users can add new evaluation datasets by following the steps and guidelines in the tool's documentation. Note that using jaster for instruction tuning can lead to artificially high evaluation scores, so caution is advised when interpreting the results.
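The general idea of evaluating one model across several datasets from a shared configuration can be sketched in a few lines of Python. This is only an illustrative sketch and not llm-jp-eval's actual API: the `CONFIG` dictionary stands in for a config file, and `DATASETS`, `exact_match`, `evaluate`, and `toy_model` are hypothetical names invented for this example. It assumes a simple exact-match metric.

```python
from typing import Callable, Dict

# Hypothetical stand-in for settings that would normally live in a config file.
CONFIG = {
    "datasets": ["toy_qa", "toy_nli"],
    "prompt_template": "{instruction}\n{input}\n",
}

# Hypothetical toy datasets: each sample is (input text, expected output).
DATASETS: Dict[str, dict] = {
    "toy_qa": {
        "instruction": "Answer the question.",
        "samples": [("1+1=?", "2"), ("Capital of Japan?", "Tokyo")],
    },
    "toy_nli": {
        "instruction": "Answer entailment or contradiction.",
        "samples": [("A cat sleeps. An animal sleeps.", "entailment")],
    },
}


def exact_match(prediction: str, reference: str) -> float:
    """Return 1.0 when the stripped strings are identical, else 0.0."""
    return 1.0 if prediction.strip() == reference.strip() else 0.0


def evaluate(model: Callable[[str], str], config: dict) -> Dict[str, float]:
    """Run the model on every configured dataset and average the scores."""
    scores: Dict[str, float] = {}
    for name in config["datasets"]:
        dataset = DATASETS[name]
        results = []
        for text, reference in dataset["samples"]:
            prompt = config["prompt_template"].format(
                instruction=dataset["instruction"], input=text
            )
            results.append(exact_match(model(prompt), reference))
        scores[name] = sum(results) / len(results)
    return scores


def toy_model(prompt: str) -> str:
    """Dummy model used only for illustration: always answers "2"."""
    return "2"


scores = evaluate(toy_model, CONFIG)
print(scores)  # {'toy_qa': 0.5, 'toy_nli': 0.0}
```

Keeping the dataset list and prompt template in one configuration object means a new dataset can be added without touching the evaluation loop, which mirrors the config-driven workflow described above.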

20 - OpenAI GPTs

B2B Startup Ideal Customer Co-pilot

Guides B2B startups through a structured customer segment evaluation process. Stop guessing! Ideate, evaluate, and make data-driven decisions.

Quality Assurance Advisor

Ensures product quality through systematic process monitoring and evaluation.

Source Evaluation and Fact Checking v1.3

FactCheck Navigator GPT is designed for in-depth fact checking and analysis of written content and evaluation of its source. The approach is to iterate through predefined and well-prompted steps. If desired, the user can refine the process by providing input between these steps.

OKR Coach

AI OKR Coach is a tool designed to assist users in creating and assessing OKRs (Objectives and Key Results). It provides a structured and flexible approach to OKR setting and evaluation.

Diagnóstico de Recrutamento e Seleção Vendedores

Test: what score would your company's salesperson recruitment and selection process receive?

Process Map Optimizer

Upload your process map and I will analyse it and suggest improvements.

Process Engineering Advisor

Optimizes production processes for improved efficiency and quality.

Customer Service Process Improvement Advisor

Optimizes business operations through process enhancements.

R&D Process Scale-up Advisor

Optimizes production processes for efficient large-scale operations.

Process Optimization Advisor

Improves operational efficiency by optimizing processes and reducing waste.

Manufacturing Process Development Advisor

Optimizes manufacturing processes for efficiency and quality.

Trademarks GPT

Trademark Process Assistant, Not an Attorney & Definitely Not Legal Advice (independently verify info received). Gain insights on the U.S. trademark process and concepts, USPTO resources, application steps, and more, all while being reminded of the importance of consulting legal professionals for specific guidance.

Prioritization Matrix Pro

Structured process for prioritizing marketing tasks based on strategic alignment. Outputs in Eisenhower, RACI and other methodologies.