curategpt

LLM-driven curation assist tool

Stars: 81

CurateGPT is a prototype web application and framework designed for general purpose AI-guided curation and curation-related operations over collections of objects. It provides functionalities for loading example data, building indexes, interacting with knowledge bases, and performing tasks such as chatting with a knowledge base, querying PubMed, interacting with a GitHub issue tracker, term autocompletion, and all-by-all comparisons. The tool is built to work best with the OpenAI gpt-4 model and the OpenAI text-embedding-ada-002 model for embedding, but also supports alternative models through a plugin architecture.

README:

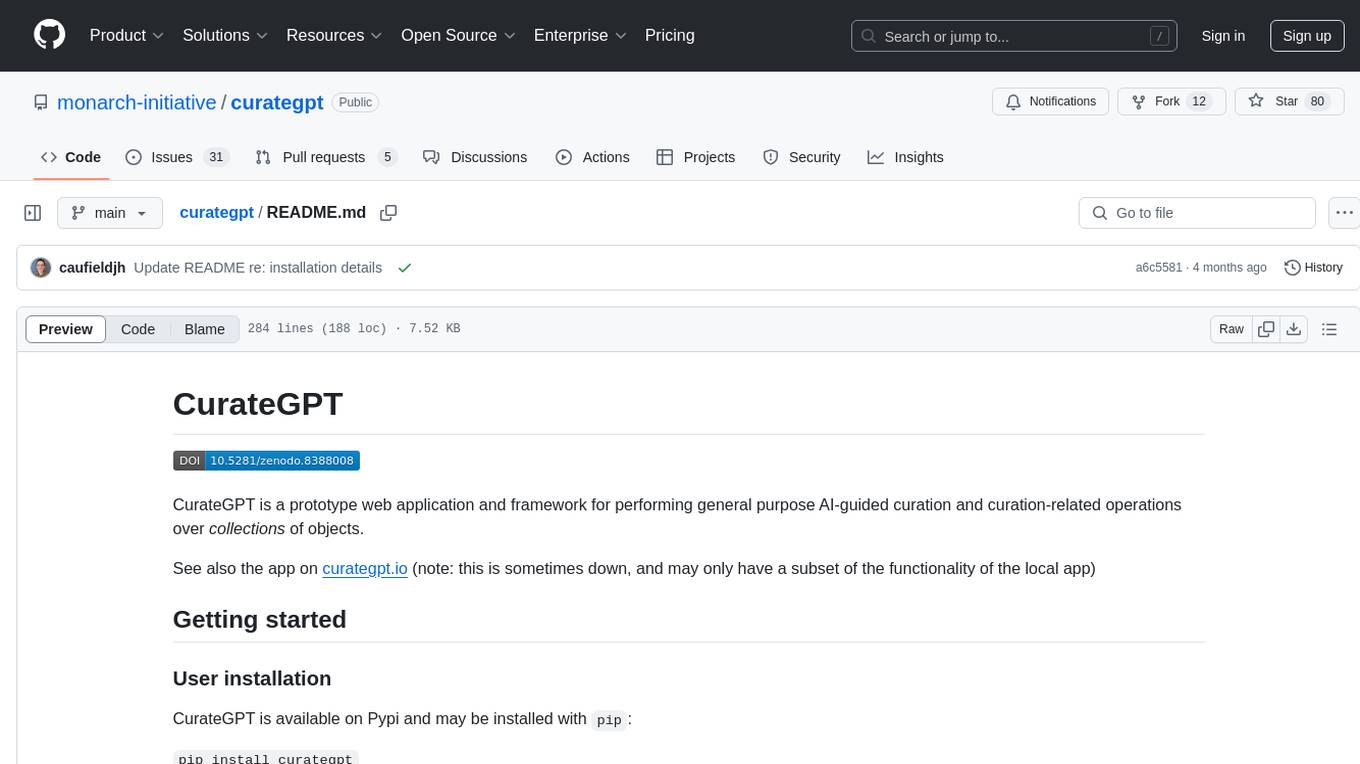

CurateGPT is a prototype web application and framework for performing general purpose AI-guided curation and curation-related operations over collections of objects.

See also the app on curategpt.io (note: this is sometimes down, and may only have a subset of the functionality of the local app)

CurateGPT is available on PyPI and may be installed with pip:

pip install curategpt

For a developer installation from source, you will first need to install Poetry.

Then clone this repo.

git clone https://github.com/monarch-initiative/curategpt.git

cd curategpt

and install the dependencies:

poetry install

In order to get the best performance from CurateGPT, we recommend getting an OpenAI API key, and setting it:

export OPENAI_API_KEY=<your key>

(for members of Monarch: ask on Slack if you would like to use the group key)

CurateGPT will also work with other large language models - see "Selecting models" below.

You initially start with an empty database. You can load whatever you like into this database! Any JSON, YAML, or CSV is accepted.

CurateGPT comes with wrappers for some existing local and remote sources, including ontologies. The Makefile contains some examples of how to load these. You can load any ontology using the ont-<name> target, e.g.:

make ont-cl

This loads CL (via OAK) into a collection called ont_cl

Note that by default this loads into a collection set stored at stagedb, whereas the app works off of db. You can copy the collection set to the db with:

cp -r stagedb/* db/
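On platforms without `cp`, roughly the same copy can be sketched with the Python standard library. This is an assumed equivalent of the command above, not part of CurateGPT: the function name `copy_collections` is hypothetical, and unlike the shell glob it also copies hidden top-level files and creates the target directory if it is missing.

```python
import shutil
from pathlib import Path


def copy_collections(src: str = "stagedb", dst: str = "db") -> Path:
    """Recursively copy everything under src into dst, roughly like `cp -r src/* dst/`."""
    src_path, dst_path = Path(src), Path(dst)
    if not src_path.is_dir():
        raise FileNotFoundError(f"source directory not found: {src_path}")
    # dirs_exist_ok=True (Python 3.8+) merges into an existing dst instead of failing.
    shutil.copytree(src_path, dst_path, dirs_exist_ok=True)
    return dst_path
```

Calling `copy_collections()` from the repository root would use the `stagedb` and `db` directory names from the text.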

You can then run the streamlit app with:

make app

CurateGPT depends on vector database indexes of the databases/ontologies you want to curate.

The flagship application is ontology curation, so to build an index for an OBO ontology like CL:

make ont-cl

This requires an OpenAI key.

(You can also build indexes using an open embedding model by modifying the command to leave off the -m option, but this is not recommended, as OpenAI embeddings currently seem to work best.)

To load the default ontologies:

make all

(this may take some time)

To load different databases:

make load-db-hpoa

make load-db-reactome

You can load an arbitrary json, yaml, or csv file:

curategpt view index -c my_foo foo.json

(you will need to do this in the poetry shell)
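As a small illustration of the kind of file that could be loaded this way, the Python sketch below writes a list of objects to foo.json. The records and field names (`id`, `label`, `definition`) are made up for the example, and the assumption that a top-level list of objects is a suitable shape is ours rather than something stated here.

```python
import json
from pathlib import Path

# Hypothetical records; the field names are illustrative only.
records = [
    {"id": "EX:001", "label": "example term one", "definition": "A first made-up entry."},
    {"id": "EX:002", "label": "example term two", "definition": "A second made-up entry."},
]


def write_records(path: Path, objects: list[dict]) -> int:
    """Write objects as a JSON array and return how many were written."""
    path.write_text(json.dumps(objects, indent=2), encoding="utf-8")
    return len(objects)


count = write_records(Path("foo.json"), records)
print(f"wrote {count} objects to foo.json")
```

The resulting file could then be indexed with the `curategpt view index -c my_foo foo.json` command shown above.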

To load a GitHub repo of issues:

curategpt -v view index -c gh_uberon -m openai: --view github --init-with "{repo: obophenotype/uberon}"

The following are also supported:

- Google Drives

- Google Sheets

- Markdown files

- LinkML Schemas

- HPOA files

- GOCAMs

- MAXOA files

- Many more

- See notebooks for examples.

Currently this tool works best with the OpenAI gpt-4 model (for instruction tasks) and the OpenAI text-embedding-ada-002 model for embedding.

CurateGPT is layered on top of simonw/llm which has a plugin architecture for using alternative models. In theory you can use any of these plugins.

Additionally, you can set up an openai-emulating proxy using litellm.

The litellm proxy may be installed with pip as pip install litellm[proxy].

Let's say you want to run mixtral locally using ollama. You start up ollama (you may have to run ollama serve first):

ollama run mixtral

Then start up litellm:

litellm -m ollama/mixtral

Next edit your extra-openai-models.yaml as detailed in the llm docs:

- model_name: ollama/mixtral
  model_id: litellm-mixtral
  api_base: "http://0.0.0.0:8000"

You can now use this:

curategpt ask -m litellm-mixtral -c ont_cl "What neurotransmitter is released by the hippocampus?"

But be warned that many of the prompts in curategpt were engineered against openai models, and they may give suboptimal results or fail entirely on other models. As an example, ask seems to work quite well with mixtral, but complete works horribly. We haven't yet investigated if the issue is the model or our prompts or the overall approach.

Welcome to the world of AI engineering!
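When a local model misbehaves, it can help to first check that the proxy itself answers before involving CurateGPT. The sketch below builds an OpenAI-style chat request using only the standard library. The address and model name are the ones from the litellm setup above; the `/chat/completions` path is an assumption based on the OpenAI-style API the proxy emulates, and the function names are hypothetical.

```python
import json
import urllib.request

# Assumptions: address and model name as configured above; the endpoint path
# follows the OpenAI-style API that the proxy is meant to emulate.
API_BASE = "http://0.0.0.0:8000"
MODEL_NAME = "ollama/mixtral"


def build_chat_request(prompt: str, api_base: str = API_BASE, model: str = MODEL_NAME) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{api_base}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON reply; requires the proxy to be running."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_chat_request("Say hello in one word.")
print(request.full_url)
# To actually query the proxy: reply = send(request)
```

If `send(request)` fails to connect, the problem is likely in the ollama or litellm setup rather than in the CurateGPT prompts.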

curategpt --help

You will see various commands for working with indexes, searching, extracting, generating, etc.

These functions are generally available through the UI, and the current priority is documenting these.

curategpt ask -c ont_cl "What neurotransmitter is released by the hippocampus?"

may yield something like:

The hippocampus releases gamma-aminobutyric acid (GABA) as a neurotransmitter [1](#ref-1).

...

## 1

id: GammaAminobutyricAcidSecretion_neurotransmission
label: gamma-aminobutyric acid secretion, neurotransmission
definition: The regulated release of gamma-aminobutyric acid by a cell, in which the
  gamma-aminobutyric acid acts as a neurotransmitter.

...
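One plausible reading of this output is a retrieval-augmented pattern: objects similar to the question are fetched from the collection and passed to the model as numbered references it can cite. The sketch below illustrates that general pattern only. It is not CurateGPT's code, the bag-of-words `embed` function is a toy stand-in for a real embedding model, and the second object is made up.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9-]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_prompt(question: str, objects: list[dict], k: int = 1) -> str:
    """Rank objects by similarity to the question and cite the top k as numbered references."""
    query = embed(question)
    ranked = sorted(
        objects,
        key=lambda o: cosine(query, embed(o["label"] + " " + o["definition"])),
        reverse=True,
    )
    refs = [f"[{i}] {o['id']}: {o['definition']}" for i, o in enumerate(ranked[:k], start=1)]
    return "Answer using the numbered references.\n" + "\n".join(refs) + f"\nQuestion: {question}"


objects = [
    # Hypothetical entry, included only so there is something to rank against.
    {"id": "ExampleBoneCell", "label": "example bone cell",
     "definition": "A made-up entry about bone tissue."},
    # Entry taken from the output shown above.
    {"id": "GammaAminobutyricAcidSecretion_neurotransmission",
     "label": "gamma-aminobutyric acid secretion, neurotransmission",
     "definition": "The regulated release of gamma-aminobutyric acid by a cell, in which the "
                   "gamma-aminobutyric acid acts as a neurotransmitter."},
]
prompt = build_prompt("What neurotransmitter is released by the hippocampus?", objects)
print(prompt)
```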

curategpt view ask -V pubmed "what neurons express VIP?"

curategpt ask -c gh_obi "what are some new term requests for electrophysiology terms?"

curategpt complete -c ont_cl "mesenchymal stem cell of the apical papilla"

yields

id: MesenchymalStemCellOfTheApicalPapilla
definition: A mesenchymal cell that is part of the apical papilla of a tooth and has
  the ability to self-renew and differentiate into various cell types such as odontoblasts,
  fibroblasts, and osteoblasts.
relationships:
- predicate: PartOf
  target: ApicalPapilla
- predicate: subClassOf
  target: MesenchymalCell
- predicate: subClassOf
  target: StemCell
original_id: CL:0007045
label: mesenchymal stem cell of the apical papilla

You can compare all objects in one collection

curategpt all-by-all --threshold 0.80 -c ont_hp -X ont_mp --ids-only -t csv > ~/tmp/allxall.mp.hp.csv

This takes 1-2s, as it involves comparison over pre-computed vectors. It reports top hits above a threshold.

Results may vary. You may want to try different texts for embeddings (the default is the entire json object; for ontologies it is concatenation of labels, definition, aliases).
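To make the idea of comparing pre-computed vectors concrete, here is a minimal sketch that scores every item in one set against every item in another and keeps the best hit at or above a threshold. It assumes cosine similarity as the score, which is not stated here, and the vectors and identifiers are made up; it is not CurateGPT's implementation.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def top_hits(left: dict[str, list[float]], right: dict[str, list[float]], threshold: float = 0.80):
    """For each left item, return its best-scoring right item if the score meets the threshold."""
    hits = []
    for left_id, left_vec in left.items():
        best_id, best_score = max(
            ((right_id, cosine(left_vec, right_vec)) for right_id, right_vec in right.items()),
            key=lambda pair: pair[1],
        )
        if best_score >= threshold:
            hits.append((left_id, best_id, best_score))
    return hits


# Made-up 3-dimensional vectors; real embeddings have far more dimensions.
left = {"A:1": [1.0, 0.0, 0.0], "A:2": [0.0, 1.0, 1.0]}
right = {"B:1": [0.9, 0.1, 0.0], "B:2": [1.0, 0.0, -1.0]}
hits = top_hits(left, right)
for left_id, right_id, score in hits:
    print(f"{left_id},{right_id},{score:.3f}")
```

Because the vectors already exist, the work is only arithmetic over pairs, which is consistent with the short run time noted above.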

Sample output:

HP:5200068,Socially innappropriate questioning,MP:0001361,social withdrawal,0.844015132437909

HP:5200069,Spinning,MP:0001411,spinning,0.9077306606290237

HP:5200071,Delayed Echolalia,MP:0013140,excessive vocalization,0.8153252835818089

HP:5200072,Immediate Echolalia,MP:0001410,head bobbing,0.8348177036912526

HP:5200073,Excessive cleaning,MP:0001412,excessive scratching,0.8699103725005582

HP:5200104,Abnormal play,MP:0020437,abnormal social play behavior,0.8984862078522344

HP:5200105,Reduced imaginative play skills,MP:0001402,decreased locomotor activity,0.85571629684631

HP:5200108,Nonfunctional or atypical use of objects in play,MP:0003908,decreased stereotypic behavior,0.8586700411012859

HP:5200129,Abnormal rituals,MP:0010698,abnormal impulsive behavior control,0.8727804272023427

HP:5200134,Jumping,MP:0001401,jumpy,0.9011393233129765
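Rows like these are easy to post-process. The sketch below parses two of the rows above with the standard `csv` module and keeps only the stronger matches. The column names are our own guesses, since the output carries no header, and the function name `read_hits` is hypothetical.

```python
import csv
import io

# Two rows copied from the sample above. The output has no header row,
# so the field names below are assumed labels, not names used by the tool.
SAMPLE = """\
HP:5200069,Spinning,MP:0001411,spinning,0.9077306606290237
HP:5200072,Immediate Echolalia,MP:0001410,head bobbing,0.8348177036912526
"""
FIELDS = ["left_id", "left_label", "right_id", "right_label", "score"]


def read_hits(handle, min_score: float = 0.0) -> list[dict]:
    """Parse header-less all-by-all rows and keep those scoring at least min_score."""
    rows = []
    for row in csv.DictReader(handle, fieldnames=FIELDS):
        row["score"] = float(row["score"])
        if row["score"] >= min_score:
            rows.append(row)
    return rows


strong = read_hits(io.StringIO(SAMPLE), min_score=0.9)
print([(r["left_id"], r["right_id"]) for r in strong])
```

To read a saved file instead, an open file handle can be passed in place of the `io.StringIO` object.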

Note that CurateGPT has a separate component for using an LLM to evaluate candidate matches (see also https://arxiv.org/abs/2310.03666). This is not enabled by default, as it would be expensive to run for a whole ontology.

