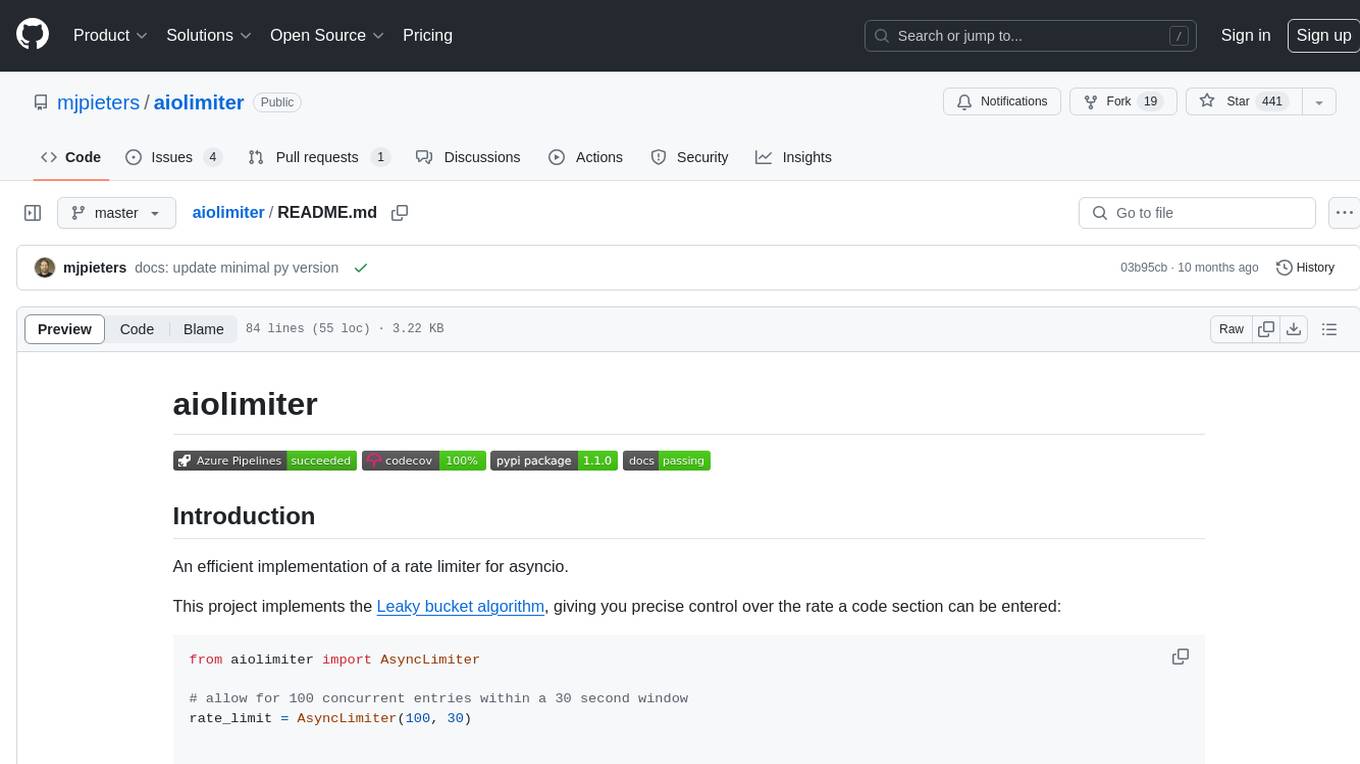

lmstudio-python

LM Studio Python SDK

Stars: 267

The LM Studio Python SDK provides a convenient API for interacting with an LM Studio instance, including text completion and chat responses. The SDK lets users manage websocket connections and chat history easily. It also offers tools for code consistency checks, automated testing, and expanding the API.

README:

The SDK can be installed from PyPI as follows:

$ pip install lmstudio

Installation from the repository URL or a local clone is also supported for development and pre-release testing purposes.

The base component of the LM Studio SDK is the (synchronous) Client. This should be created once and used to manage the underlying websocket connections to the LM Studio instance.

However, a top-level convenience API is provided for interactive use (this API implicitly creates a default Client instance which will remain active until the Python interpreter is terminated).
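For longer-running programs, the Client can be managed explicitly instead of relying on the implicit default. The sketch below assumes that `lms.Client` can be used as a context manager and that a loaded model is reachable through `client.llm.model()`; neither detail is stated above, so both should be checked against the SDK documentation. `complete_with_client` is a hypothetical helper name.

```python
def complete_with_client(prompt: str) -> str:
    """Request a text completion through an explicitly managed Client."""
    # Imported lazily so this module can be loaded without the SDK installed.
    import lmstudio as lms

    # Assumption: Client is a context manager that closes its websocket
    # connections when the block exits.
    with lms.Client() as client:
        # Assumption: called without arguments, this returns an already
        # loaded LLM, mirroring lms.llm() in the convenience API.
        model = client.llm.model()
        return str(model.complete(prompt))
```

Calling `complete_with_client("Once upon a time,")` requires a running LM Studio instance with a model loaded.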

Using this convenience API, requesting text completion from an already loaded LLM is as straightforward as:

import lmstudio as lms

model = lms.llm()
model.complete("Once upon a time,")

Requesting a chat response instead only requires the extra step of setting up a Chat helper to manage the chat history and include it in response prediction requests:

import lmstudio as lms

EXAMPLE_MESSAGES = (
    "My hovercraft is full of eels!",
    "I will not buy this record, it is scratched."
)

model = lms.llm()
chat = lms.Chat("You are a helpful shopkeeper assisting a foreign traveller")
for message in EXAMPLE_MESSAGES:
    chat.add_user_message(message)
    print(f"Customer: {message}")
    response = model.respond(chat)
    chat.add_assistant_response(response)
    print(f"Shopkeeper: {response}")

Additional SDK examples and usage recommendations may be found in the main LM Studio Python SDK documentation.
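The chat loop above can be wrapped in a reusable function. The sketch below uses only the three methods shown in the example (`add_user_message`, `respond`, `add_assistant_response`); `converse`, `StubChat`, and `StubModel` are hypothetical names, and the stubs exist only so the sketch runs without an LM Studio instance.

```python
from typing import Any


def converse(model: Any, chat: Any, messages: list[str]) -> list[str]:
    """Send each message in turn, recording both sides in the chat history."""
    replies = []
    for message in messages:
        chat.add_user_message(message)
        response = model.respond(chat)
        chat.add_assistant_response(response)
        replies.append(str(response))
    return replies


class StubChat:
    """Stand-in for the Chat helper: records (role, text) pairs."""

    def __init__(self) -> None:
        self.history: list[tuple[str, str]] = []

    def add_user_message(self, text: str) -> None:
        self.history.append(("user", text))

    def add_assistant_response(self, response: Any) -> None:
        self.history.append(("assistant", str(response)))


class StubModel:
    """Stand-in for an LLM handle: replies with the current history length."""

    def respond(self, chat: StubChat) -> str:
        return f"reply {len(chat.history)}"


stub_chat = StubChat()
replies = converse(StubModel(), stub_chat, ["first", "second"])
print(replies)
```

With the real SDK, the stubs would be replaced by `lms.llm()` and an `lms.Chat(...)` instance as in the example above.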

The LM Studio Python SDK uses a 3-part X.Y.Z numeric version identifier:

- X: incremented when the minimum version of significant dependencies is updated (for example, dropping support for older versions of Python or LM Studio). Previously deprecated features may be dropped when this part of the version number increases.
- Y: incremented when new features are added, or some other notable change is introduced (such as support for additional versions of Python). New deprecation warnings may be introduced when this part of the version number increases.
- Z: incremented for bug fix releases which don't contain any other changes. Adding exceptions and warnings for previously undetected situations is considered a bug fix.

This versioning policy is intentionally similar to semantic versioning, but differs in the specifics of when the different parts of the version number will be updated.
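The policy can be read as a small decision rule. The sketch below is illustrative only: `bump` and its change labels are hypothetical, and resetting the lower parts to zero is an assumption that the policy text does not state.

```python
def bump(version: str, change: str) -> str:
    """Return the next X.Y.Z version for a given kind of change."""
    x, y, z = (int(part) for part in version.split("."))
    if change == "dependency-floor":
        # Minimum supported Python or LM Studio version was raised.
        return f"{x + 1}.0.0"  # assumption: lower parts reset to zero
    if change == "feature":
        # New feature or other notable change.
        return f"{x}.{y + 1}.0"  # assumption: Z resets to zero
    if change == "bugfix":
        # Includes new exceptions/warnings for previously undetected cases.
        return f"{x}.{y}.{z + 1}"
    raise ValueError(f"unknown change type: {change!r}")


print(bump("1.4.2", "bugfix"))  # prints 1.4.3
```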

Release candidates may be published prior to full releases, but this will typically only occur when seeking broader feedback on particular features prior to finalizing the release.

Outside the preparation of a new release, the SDK repository will include a .devN suffix

on the nominal Python package version.

$ git clone https://github.com/lmstudio-ai/lmstudio-python
$ cd lmstudio-python

To be able to run tox -e sync-sdk-schema, it is also necessary to ensure the lmstudio-js submodule is updated:

$ git submodule update --init --recursive

In order to work on the Python SDK, you need to install pdm, tox, and tox-pdm (everything else can be executed via tox environments).

Given these tools, the default development environment can be set up and other commands executed as described below.

The simplest option for installing these tools is to install uv, and then use its uv tool command to set up pdm and a second environment with tox + tox-pdm. pipx is another reasonable option for this task.

In order to use the Python SDK, you just need some form of Python environment manager (since lmstudio-python publishes the package lmstudio to PyPI).

The set of checks recommended for local execution is accessible via the check marker in tox:

$ tox -m check

This runs the same checks as the static and test markers (described below).

The project source code is autoformatted and linted using ruff. It also uses mypy in strict mode to statically check that Python APIs are being accessed as expected.

All of these commands can be invoked via tox:

$ tox -e format
$ tox -e lint
$ tox -e typecheck

Linting and type checking can be executed together using the static marker:

$ tox -m static

Avoid using # noqa comments to suppress these warnings - wherever possible, warnings should be fixed instead. # noqa comments are reserved for rare cases where the recommended style causes severe readability problems, and there isn't a more explicit mechanism (such as typing.cast) to indicate which check is being skipped.
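As an illustration of preferring an explicit mechanism over a suppression comment, the sketch below uses `typing.cast` where strict type checking would otherwise complain about returning an untyped value. `load_port` and the JSON layout are hypothetical and not taken from the SDK.

```python
import json
from typing import cast


def load_port(raw: str) -> int:
    """Read the "port" entry from a JSON document."""
    data = json.loads(raw)  # json.loads returns Any
    # cast() names the expected type explicitly instead of hiding the
    # warning behind a suppression comment. It performs no runtime check.
    return cast(int, data["port"])


print(load_port('{"port": 1234}'))  # prints 1234
```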

The # fmt: off/on and # fmt: skip comments may be used as needed when the autoformatter makes readability worse instead of better (for example, collapsing lists to a single line when they intentionally cover multiple lines, or breaking alignment of end-of-line comments).
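A small example of where such a comment helps: the padding that keeps the columns of this matrix aligned would normally be removed by the autoformatter. `ROTATE_90` and `rotate` are hypothetical names used only for illustration.

```python
# fmt: off
ROTATE_90 = [
    [ 0, -1],
    [ 1,  0],
]
# fmt: on


def rotate(point: tuple[int, int]) -> tuple[int, int]:
    """Rotate a 2D point by 90 degrees counter-clockwise."""
    x, y = point
    (a, b), (c, d) = ROTATE_90
    return (a * x + b * y, c * x + d * y)


print(rotate((1, 0)))  # prints (0, 1)
```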

The project's tests are written using the pytest test framework. tox is used to automate the setup and execution of these tests across multiple Python versions. One of these is nominated as the default test target, and is accessible via the test marker:

$ tox -m test

You can also use other defined versions by specifying the target environment directly:

$ tox -e py3.11

There are additional labels defined for running the oldest test environment, the latest test environment, and all test environments:

$ tox -m test_oldest
$ tox -m test_latest
$ tox -m test_all

To ensure all the required models are loaded before running the tests, run the following command:

$ tox -e load-test-models

tox has been configured to forward any additional arguments it is given to pytest. This enables the use of pytest's rich CLI.

In particular, you can select tests using all the options that pytest provides:

$ # Using file name
$ tox -m test -- tests/test_basics.py
$ # Using markers
$ tox -m test -- -m "slow"
$ # Using keyword text search
$ tox -m test -- -k "catalog"

Additional notes on running and updating the tests can be found in the tests/README.md file.
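When these invocations are scripted, the only detail to get right is the `--` separator that splits tox's own arguments from those forwarded to pytest. The helper below is a hypothetical sketch (it is not part of the repository) that builds the argument list without running anything.

```python
import shlex
from collections.abc import Sequence


def tox_test_command(label: str = "test", pytest_args: Sequence[str] = ()) -> list[str]:
    """Build a tox invocation that forwards extra arguments to pytest."""
    command = ["tox", "-m", label]
    if pytest_args:
        # Everything after "--" is passed through to pytest unchanged.
        command += ["--", *pytest_args]
    return command


print(shlex.join(tox_test_command(pytest_args=["-k", "catalog"])))
# prints: tox -m test -- -k catalog
```

The resulting list can be handed to `subprocess.run(command, check=True)` from the repository root.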

- the content of src/lmstudio/_sdk_models is automatically generated by the sync-sdk-schema.py script in sdk-schema and should not be modified directly. Run tox -e sync-sdk-schema to regenerate the Python submodule from the existing export of the lmstudio-js schema (for example, after modifying the data model template). Run tox -e sync-sdk-schema -- --regen-schema after updating the sdk-schema/lmstudio-js submodule itself to a newer iteration of the lmstudio-js JSON API.
- as support for new API namespaces is added to the SDK, each should get a dedicated session type (similar to those for the already supported namespaces), even if it is only used privately by the client implementation.
- as support for new API channel endpoints is added to the SDK, each should get a dedicated base endpoint type (similar to those for the already supported channels). This avoids duplicating the receive message processing between the sync and async APIs.
- the json_api.SessionData base class is useful for defining rich result objects which offer additional methods that call back into the SDK (for example, this is how downloaded model listings offer their interfaces to load a new instance of a model).
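The point about base endpoint types can be illustrated with a generic pattern: one object owns all received-message handling, and thin sync and async drivers feed messages into it. Every name and the message format below are hypothetical; this is a sketch of the idea, not the SDK's actual classes.

```python
import asyncio
from collections.abc import AsyncIterable, Iterable

Message = dict[str, str]


class EchoEndpoint:
    """Hypothetical channel endpoint that owns all receive processing."""

    def __init__(self) -> None:
        self.fragments: list[str] = []
        self.done = False

    def handle_message(self, message: Message) -> None:
        # Shared logic: neither driver below inspects the message format.
        if message["type"] == "fragment":
            self.fragments.append(message["content"])
        elif message["type"] == "success":
            self.done = True

    def result(self) -> str:
        return "".join(self.fragments)


def run_sync(endpoint: EchoEndpoint, messages: Iterable[Message]) -> str:
    """Blocking driver: only the iteration style is sync-specific."""
    for message in messages:
        endpoint.handle_message(message)
        if endpoint.done:
            break
    return endpoint.result()


async def run_async(endpoint: EchoEndpoint, messages: AsyncIterable[Message]) -> str:
    """Async driver: reuses the same endpoint logic unchanged."""
    async for message in messages:
        endpoint.handle_message(message)
        if endpoint.done:
            break
    return endpoint.result()


SAMPLE: list[Message] = [
    {"type": "fragment", "content": "Hello, "},
    {"type": "fragment", "content": "world"},
    {"type": "success", "content": ""},
]


async def sample_stream() -> AsyncIterable[Message]:
    for message in SAMPLE:
        yield message


sync_result = run_sync(EchoEndpoint(), SAMPLE)
async_result = asyncio.run(run_async(EchoEndpoint(), sample_stream()))
print(sync_result == async_result)
```

Because both drivers delegate to `handle_message`, a change to the message handling is made once and applies to both APIs.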


Alternative AI tools for lmstudio-python

Similar Open Source Tools

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application supports multiple subtitle formats through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

safety-tooling

This repository, safety-tooling, is designed to be shared across various AI Safety projects. It provides an LLM API with a common interface for OpenAI, Anthropic, and Google models. The aim is to facilitate collaboration among AI Safety researchers, especially those with limited software engineering backgrounds, by offering a platform for contributing to a larger codebase. The repo can be used as a git submodule for easy collaboration and updates. It also supports pip installation for convenience. The repository includes features for installation, secrets management, linting, formatting, Redis configuration, testing, dependency management, inference, finetuning, API usage tracking, and various utilities for data processing and experimentation.

2p-kt

2P-Kt is a Kotlin-based and multi-platform reboot of tuProlog (2P), a multi-paradigm logic programming framework written in Java. It consists of an open ecosystem for Symbolic Artificial Intelligence (AI) with modules supporting logic terms, unification, indexing, resolution of logic queries, probabilistic logic programming, binary decision diagrams, OR-concurrent resolution, DSL for logic programming, parsing modules, serialisation modules, command-line interface, and graphical user interface. The tool is designed to support knowledge representation and automatic reasoning through logic programming in an extensible and flexible way, encouraging extensions towards other symbolic AI systems than Prolog. It is a pure, multi-platform Kotlin project supporting JVM, JS, Android, and Native platforms, with a lightweight library leveraging the Kotlin common library.

qb

QANTA is a system and dataset for question answering tasks. It provides a script to download datasets, preprocesses questions, and matches them with Wikipedia pages. The system includes various datasets, training, dev, and test data in JSON and SQLite formats. Dependencies include Python 3.6, `click`, and NLTK models. Elastic Search 5.6 is needed for the Guesser component. Configuration is managed through environment variables and YAML files. QANTA supports multiple guesser implementations that can be enabled/disabled. Running QANTA involves using `cli.py` and Luigi pipelines. The system accesses raw Wikipedia dumps for data processing. The QANTA ID numbering scheme categorizes datasets based on events and competitions.

eval-dev-quality

DevQualityEval is an evaluation benchmark and framework designed to compare and improve the quality of code generation of Large Language Models (LLMs). It provides developers with a standardized benchmark to enhance real-world usage in software development and offers users metrics and comparisons to assess the usefulness of LLMs for their tasks. The tool evaluates LLMs' performance in solving software development tasks and measures the quality of their results through a point-based system. Users can run specific tasks, such as test generation, across different programming languages to evaluate LLMs' language understanding and code generation capabilities.

PolyMind

PolyMind is a multimodal, function calling powered LLM webui designed for various tasks such as internet searching, image generation, port scanning, Wolfram Alpha integration, Python interpretation, and semantic search. It offers a plugin system for adding extra functions and supports different models and endpoints. The tool allows users to interact via function calling and provides features like image input, image generation, and text file search. The application's configuration is stored in a `config.json` file with options for backend selection, compatibility mode, IP address settings, API key, and enabled features.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis on common vulnerabilities and exposures (CVE) at an enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires an NVAIE developer license and API keys for vulnerability databases, search engines, and LLM model services. Hardware requirements include an L40 GPU for pipeline operation and optional LLM NIM and Embedding NIM. The workflow involves an LLM pipeline for CVE impact analysis, utilizing LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and Morpheus Cybersecurity AI SDK for vulnerability analysis.

aisheets

Hugging Face AI Sheets is an open-source tool for building, enriching, and transforming datasets using AI models with no code. It can be deployed locally or on the Hub, providing access to thousands of open models. Users can easily generate datasets, run data generation scripts, and customize inference endpoints for text generation. The tool supports custom LLMs and offers advanced configuration options for authentication, inference, and miscellaneous settings. With AI Sheets, users can leverage the power of AI models without writing any code, making dataset management and transformation efficient and accessible.

debug-gym

debug-gym is a text-based interactive debugging framework designed for debugging Python programs. It provides an environment where agents can interact with code repositories, use various tools like pdb and grep to investigate and fix bugs, and propose code patches. The framework supports different LLM backends such as OpenAI, Azure OpenAI, and Anthropic. Users can customize tools, manage environment states, and run agents to debug code effectively. debug-gym is modular, extensible, and suitable for interactive debugging tasks in a text-based environment.

0chain

Züs is a high-performance cloud on a fast blockchain offering privacy and configurable uptime. It uses erasure code to distribute data between data and parity servers, allowing flexibility for IT managers to design for security and uptime. Users can easily share encrypted data with business partners through a proxy key sharing protocol. The ecosystem includes apps like Blimp for cloud migration, Vult for personal cloud storage, and Chalk for NFT artists. Other apps include Bolt for secure wallet and staking, Atlus for blockchain explorer, and Chimney for network participation. The QoS protocol challenges providers based on response time, while the privacy protocol enables secure data sharing. Züs supports hybrid and multi-cloud architectures, allowing users to improve regulatory compliance and security requirements.

aiac

AIAC is a library and command line tool to generate Infrastructure as Code (IaC) templates, configurations, utilities, queries, and more via LLM providers such as OpenAI, Amazon Bedrock, and Ollama. Users can define multiple 'backends' targeting different LLM providers and environments using a simple configuration file. The tool allows users to ask a model to generate templates for different scenarios and composes an appropriate request to the selected provider, storing the resulting code to a file and/or printing it to standard output.

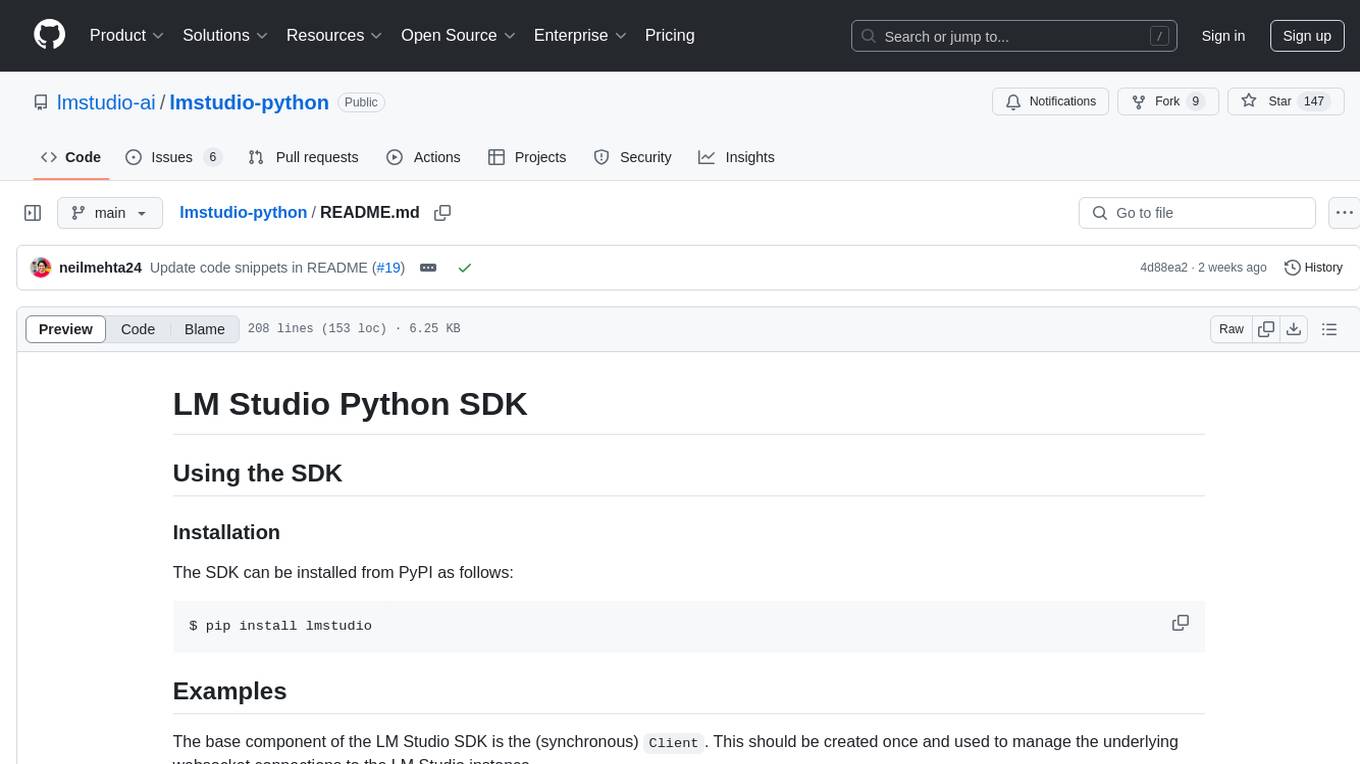

aiolimiter

An efficient implementation of a rate limiter for asyncio using the Leaky bucket algorithm, providing precise control over the rate a code section can be entered. It allows for limiting the number of concurrent entries within a specified time window, ensuring that a section of code is executed a maximum number of times in that period.

MultiPL-E

MultiPL-E is a system for translating unit test-driven neural code generation benchmarks to new languages. It is part of the BigCode Code Generation LM Harness and allows for evaluating Code LLMs using various benchmarks. The tool supports multiple versions with improvements and new language additions, providing a scalable and polyglot approach to benchmarking neural code generation. Users can access a tutorial for direct usage and explore the dataset of translated prompts on the Hugging Face Hub.

llm-verified-with-monte-carlo-tree-search

This prototype synthesizes verified code with an LLM using Monte Carlo Tree Search (MCTS). It explores the space of possible generation of a verified program and checks at every step that it's on the right track by calling the verifier. This prototype uses Dafny, Coq, Lean, Scala, or Rust. By using this technique, weaker models that might not even know the generated language all that well can compete with stronger models.

For similar tasks

LLMstudio

LLMstudio by TensorOps is a platform that offers prompt engineering tools for accessing models from providers like OpenAI, VertexAI, and Bedrock. It provides features such as Python Client Gateway, Prompt Editing UI, History Management, and Context Limit Adaptability. Users can track past runs, log costs and latency, and export history to CSV. The tool also supports automatic switching to larger-context models when needed. Coming soon features include side-by-side comparison of LLMs, automated testing, API key administration, project organization, and resilience against rate limits. LLMstudio aims to streamline prompt engineering, provide execution history tracking, and enable effortless data export, offering an evolving environment for teams to experiment with advanced language models.

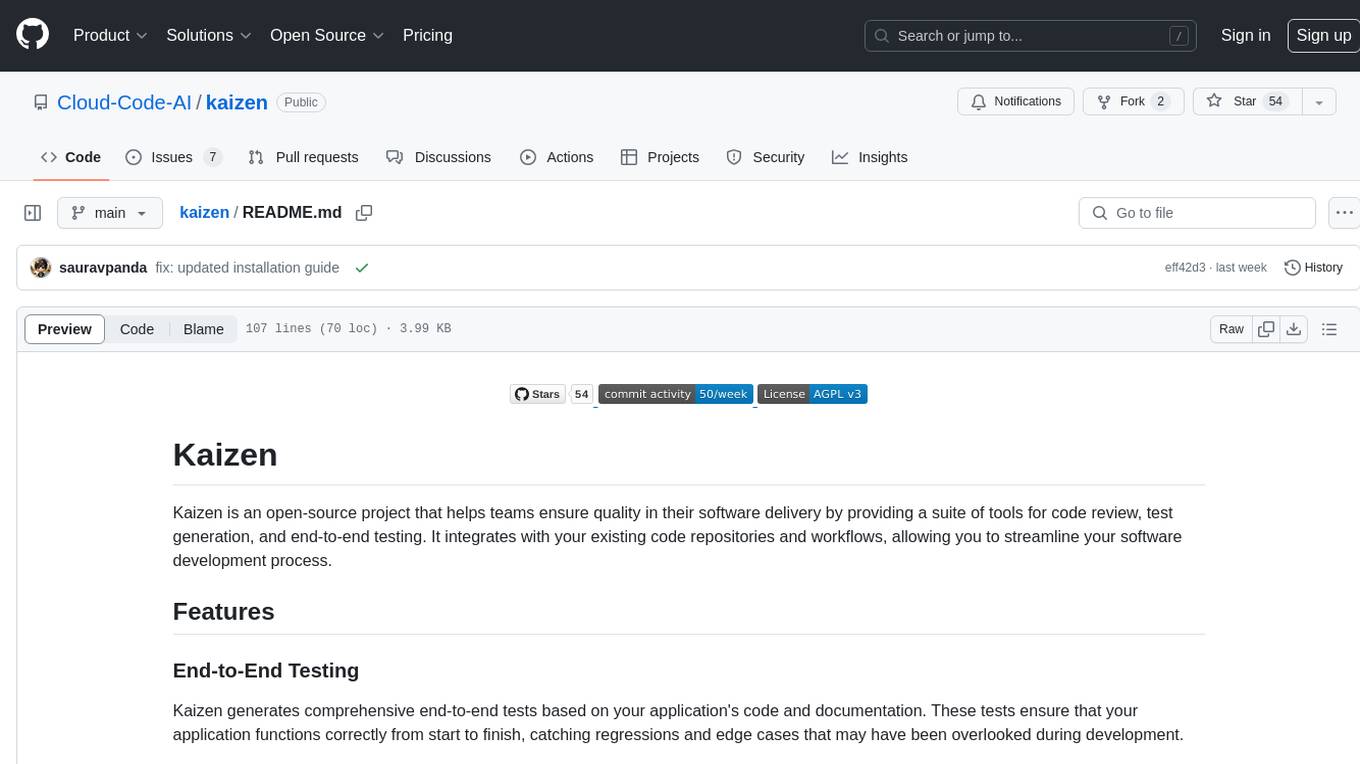

kaizen

Kaizen is an open-source project that helps teams ensure quality in their software delivery by providing a suite of tools for code review, test generation, and end-to-end testing. It integrates with your existing code repositories and workflows, allowing you to streamline your software development process. Kaizen generates comprehensive end-to-end tests, provides UI testing and review, and automates code review with insightful feedback. The file structure includes components for API server, logic, actors, generators, LLM integrations, documentation, and sample code. Getting started involves installing the Kaizen package, generating tests for websites, and executing tests. The tool also runs an API server for GitHub App actions. Contributions are welcome under the AGPL License.

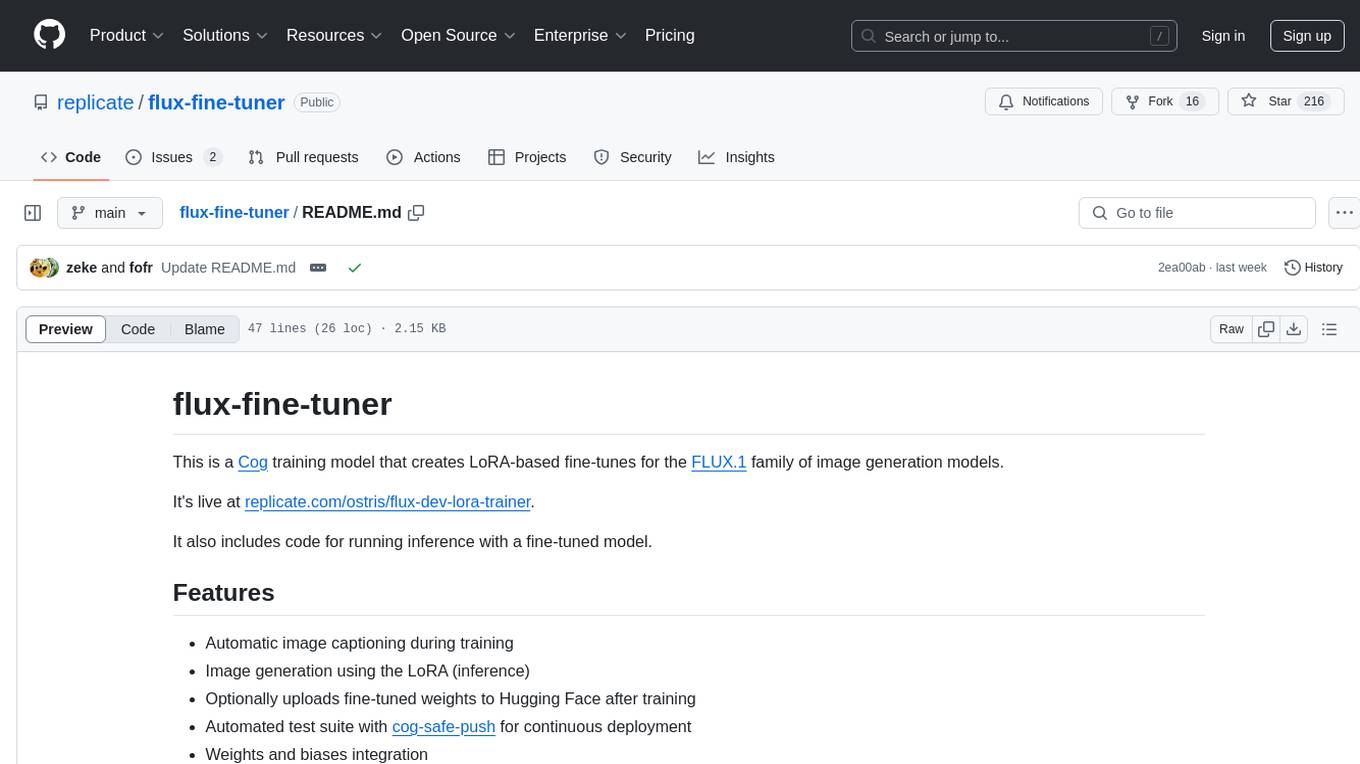

flux-fine-tuner

This is a Cog training model that creates LoRA-based fine-tunes for the FLUX.1 family of image generation models. It includes features such as automatic image captioning during training, image generation using LoRA, uploading fine-tuned weights to Hugging Face, an automated test suite for continuous deployment, and Weights & Biases integration. The tool is designed for users to fine-tune Flux models on Replicate for image generation tasks.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

mastering-github-copilot-for-dotnet-csharp-developers

Enhance coding efficiency with expert-led GitHub Copilot course for C#/.NET developers. Learn to integrate AI-powered coding assistance, automate testing, and boost collaboration using Visual Studio Code and Copilot Chat. From autocompletion to unit testing, cover essential techniques for cleaner, faster, smarter code.

agentql

AgentQL is a suite of tools for extracting data and automating workflows on live web sites featuring an AI-powered query language, Python and JavaScript SDKs, a browser-based debugger, and a REST API endpoint. It uses natural language queries to pinpoint data and elements on any web page, including authenticated and dynamically generated content. Users can define structured data output and apply transforms within queries. AgentQL's natural language selectors find elements intuitively based on the content of the web page and work across similar web sites, self-healing as UI changes over time.

c4-genai-suite

C4-GenAI-Suite is a comprehensive AI tool for generating code snippets and automating software development tasks. It leverages advanced machine learning models to assist developers in writing efficient and error-free code. The suite includes features such as code completion, refactoring suggestions, and automated testing, making it a valuable asset for enhancing productivity and code quality in software development projects.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.