c4-genai-suite

c4 GenAI Suite

Stars: 164

c4 GenAI Suite is an AI chatbot application in which administrators build assistants out of extensions, such as LLM providers, RAG services, and MCP servers, and users chat with those assistants through a web interface.

README:

An AI chatbot application with Model Context Protocol (MCP) integration, powered by ai-sdk and compatible with all major Large Language Models (LLMs) and embedding models.

Administrators can create assistants with different capabilities by adding extensions, such as RAG (Retrieval-Augmented Generation) services or MCP servers. The application is built using a modern tech stack, including React, NestJS, and Python FastAPI for the REI-S service.

Users can interact with assistants through a user-friendly interface. Depending on the assistant's configuration, users may be able to ask questions, upload their own files, or use other features. The assistants interact with various LLM providers to provide responses based on the configured extensions. Contextual information provided by the configured extensions allows the assistants to answer domain-specific questions and provide relevant information.

The application is designed to be modular and extensible, allowing users to create assistants with different capabilities by adding extensions.

The c4 GenAI Suite already supports many models directly. If your preferred model is not yet supported, it should be easy to write an extension to support it.

- OpenAI compatible models

- Azure OpenAI models

- Bedrock models

- Google GenAI models

- Ollama compatible models

The c4 GenAI Suite includes REI-S, a server to prepare files for consumption by the LLM.

- REI-S, a custom integrated RAG server
  - Vector stores
    - pgvector
    - Azure AI Search
  - Embedding models
    - OpenAI compatible embeddings
    - Azure OpenAI embeddings
    - Ollama compatible embeddings
  - File formats:
    - pdf, docx, pptx, xlsx, ...
    - audio file voice transcription (via Whisper)

The c4 GenAI Suite is designed for extensibility. Writing extensions is easy, as is using an already existing MCP server.

- Model Context Protocol (MCP) servers

- Custom system prompt

- Brave Search and Grounding with Bing Search

- Calculator

- Run `docker compose up` in the project root.

- Open the application in a browser. The default login credentials are user `[email protected]` and password `secret`.

For deployment in Kubernetes environments, please refer to the README of our Helm Chart.

The c4 GenAI Suite revolves around assistants. Each assistant consists of a set of extensions, which determine the LLM model and which tools it can use.

- In the admin area (click the username on the bottom left), go to the assistants section.

- Add an assistant with the green `+` button next to the section title. Choose a name and a description.

- Select the created assistant and click the green `+ Add Extension`.

- Select the model and fill in the credentials.

- Use the `Test` button to check that it works and `save`.

Now you can return to the chat page (click on c4 GenAI Suite in the top left) and start a new conversation with your new assistant.
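The relationship between an assistant and its extensions can be pictured as a small data model. The sketch below is illustrative only: the class names, the `kind` field, and the rule of exactly one model extension per assistant are assumptions made for this example, not the suite's actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class Extension:
    """One capability attached to an assistant (hypothetical shape)."""
    kind: str   # "model" or "tool" in this sketch
    name: str   # e.g. "Ollama" or "Calculator"
    settings: dict = field(default_factory=dict)


@dataclass
class Assistant:
    name: str
    description: str
    extensions: list[Extension] = field(default_factory=list)

    def model(self) -> Extension:
        """Return the model extension; this sketch assumes exactly one."""
        models = [e for e in self.extensions if e.kind == "model"]
        if len(models) != 1:
            raise ValueError(f"expected one model extension, found {len(models)}")
        return models[0]

    def tools(self) -> list[str]:
        """Names of the non-model extensions the assistant can use."""
        return [e.name for e in self.extensions if e.kind != "model"]


assistant = Assistant(
    name="Docs helper",
    description="Answers questions about uploaded files",
    extensions=[
        Extension("model", "Ollama", {"endpoint": "http://ollama:11434", "model": "llama3.2"}),
        Extension("tool", "Search Files"),
        Extension("tool", "Calculator"),
    ],
)
```

Here `assistant.model()` returns the Ollama extension and `assistant.tools()` lists the two tool extensions, mirroring how the extensions decide which LLM answers and which tools it may call.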

[!TIP] Our `docker-compose` includes a local Ollama, which runs on the CPU. You can use this for quick testing, but it will be slow, so you will probably want to use another model. To use it, create the following model extension in your assistant.

- Extension: `Ollama`

- Endpoint: `http://ollama:11434`

- Model: `llama3.2`
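Before filling in the extension, it can help to confirm that the Ollama server has the model pulled. The following is a minimal sketch that reads Ollama's `/api/tags` listing. The helper names are made up for this example, and the `http://ollama:11434` hostname is assumed to resolve only from inside the compose network; from the host you would need whatever address your setup exposes.

```python
import json
from urllib.request import urlopen


def model_available(tags_payload: dict, wanted: str) -> bool:
    """Return True if `wanted` matches a model name in an /api/tags response.

    Ollama reports names with a tag suffix such as "llama3.2:latest", so a
    bare name is also compared against the part before the colon.
    """
    for entry in tags_payload.get("models", []):
        name = entry.get("name", "")
        if name == wanted or name.split(":", 1)[0] == wanted:
            return True
    return False


def check_ollama(endpoint: str, wanted: str, timeout: float = 5.0) -> bool:
    """Ask a running Ollama server which models it has pulled."""
    with urlopen(f"{endpoint.rstrip('/')}/api/tags", timeout=timeout) as resp:
        return model_available(json.load(resp), wanted)


# Offline example using a hand-written payload in the /api/tags shape.
sample = {"models": [{"name": "llama3.2:latest"}, {"name": "nomic-embed-text:latest"}]}
print(model_available(sample, "llama3.2"))
```

With a server running, `check_ollama("http://ollama:11434", "llama3.2")` performs the same check against the live listing.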

Use any MCP server offering an SSE interface with the MCP Tools Extension (or use our mcp-tool-as-server as a proxy in front of an stdio MCP server).

Each MCP server can be configured in detail as an extension.
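To make the SSE interface more concrete, the sketch below parses a server-sent event stream into `(event, data)` pairs. MCP messages are JSON-RPC 2.0 objects, so the data of a message event can be decoded as JSON. This is a generic illustration, not the suite's client code: the function name is hypothetical and the sample frames, including the session path, are invented for the example.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[tuple[str, str]]:
    """Yield (event, data) pairs from the lines of a text/event-stream body."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":                  # a blank line ends one event
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
        elif line.startswith(":"):      # comment line, often a keep-alive
            continue
        else:
            name, _, value = line.partition(":")
            value = value[1:] if value.startswith(" ") else value
            if name == "event":
                event = value
            elif name == "data":
                data.append(value)


# Invented sample frames for illustration.
stream = [
    "event: endpoint\n",
    "data: /messages?session=abc\n",
    "\n",
    ": keep-alive\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}\n',
    "\n",
]
events = list(parse_sse(stream))
reply = json.loads(events[1][1])
```

An stdio MCP server exchanges the same JSON-RPC messages over standard input and output instead, which is why a proxy is needed to put it behind an SSE endpoint.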

Use our RAG server REI-S to search user-provided files. Just configure a Search Files extension for the assistant.

This process is described in detail in the services/reis subdirectory.

- See CONTRIBUTING.md for guidelines on how to contribute.

- For developer onboarding, check DEVELOPERS.md.

The application consists of a Frontend, a Backend and a REI-S service.

    ┌──────────┐
    │   User   │
    └─────┬────┘
          │ access
          ▼
    ┌──────────┐
    │ Frontend │
    └─────┬────┘
          │ access
          ▼
    ┌──────────┐     ┌─────────────────┐
    │ Backend  │────►│       LLM       │
    └─────┬────┘     └─────────────────┘
          │ access
          ▼
    ┌──────────┐     ┌─────────────────┐
    │  REI-S   │────►│ Embedding Model │
    │          │     └─────────────────┘
    │          │
    │          │     ┌─────────────────┐
    │          │────►│  Vector Store   │
    └──────────┘     └─────────────────┘

The frontend is built with React and TypeScript, providing a user-friendly interface for interacting with the backend and REI-S service. It includes features for managing assistants, extensions, and chat functionalities.

Sources:

/frontend

The backend is developed using NestJS and TypeScript, serving as the main API layer for the application. It handles requests from the frontend and interacts with LLM providers to facilitate chat functionalities. The backend also manages assistants and their extensions, allowing users to configure and use various AI models for their chats.

Additionally, the backend manages user authentication, and communicates with the REI-S service for file indexing and retrieval.

For data persistence, the backend uses a PostgreSQL database.

Sources:

/backend

The REI-S (Retrieval Extraction Ingestion Server) is a Python-based server that provides basic RAG (Retrieval-Augmented Generation) capabilities. It allows for file content extraction, indexing and querying, enabling the application to handle large datasets efficiently. The REI-S service is designed to work seamlessly with the backend, providing necessary data for chat functionalities and file searches.

The REI-S supports Azure AI Search and pgvector for vector storage, allowing for flexible and scalable data retrieval options. The service can be configured using environment variables to specify the type of vector store and connection details.
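The extraction, indexing, and querying flow can be sketched in a few lines. This is a toy illustration rather than REI-S code: the word-count `embed` function stands in for a real embedding model, `InMemoryStore` stands in for pgvector or Azure AI Search, and all names are hypothetical.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 12) -> list[str]:
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryStore:
    """Stand-in for a vector store such as pgvector or Azure AI Search."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, Counter]] = []

    def index(self, document: str) -> None:
        """Chunk the document and store each chunk with its embedding."""
        for piece in chunk(document):
            self.rows.append((piece, embed(piece)))

    def query(self, question: str, k: int = 1) -> list[str]:
        """Return the k chunks most similar to the question."""
        q = embed(question)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = InMemoryStore()
store.index("Invoices are due within thirty days of receipt.")
store.index("Vacation requests must be approved by a team lead.")
print(store.query("When are invoices due?"))
# prints ['Invoices are due within thirty days of receipt.']
```

The retrieved chunks are what would be handed to the LLM as context, which is how an assistant can answer questions about files the model was never trained on.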

Sources:

/services/reis

Alternative AI tools for c4-genai-suite

Similar Open Source Tools

Awesome-Repo-Level-Code-Generation

This repository contains a collection of tools and scripts for generating code at the repository level. It provides a set of utilities to automate the process of creating and managing code across multiple files and directories. The tools included in this repository aim to improve code generation efficiency and maintainability by streamlining the development workflow. With a focus on enhancing productivity and reducing manual effort, this collection offers a variety of code generation options and customization features to suit different project requirements.

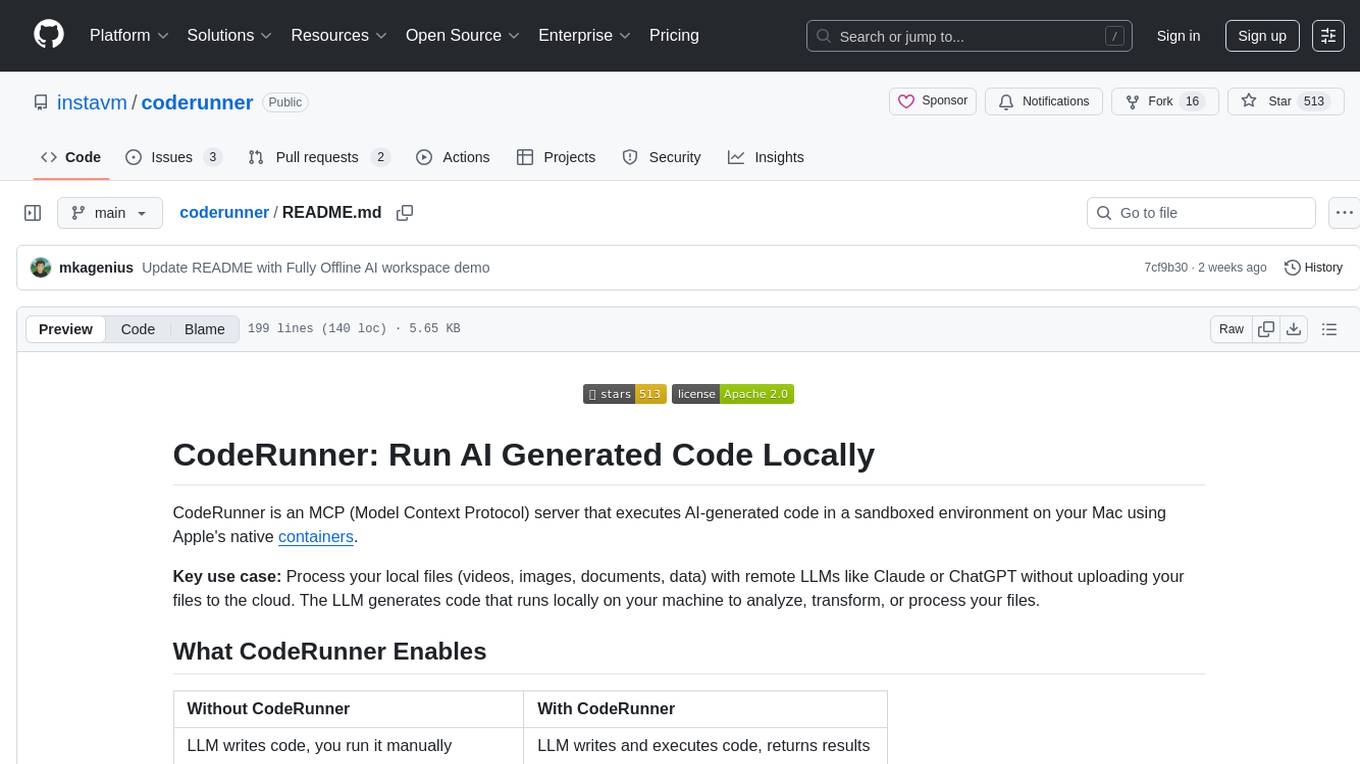

coderunner

Coderunner is a versatile tool designed for running code snippets in various programming languages. It provides an interactive environment for testing and debugging code without the need for a full-fledged IDE. With support for multiple languages and quick execution times, Coderunner is ideal for beginners learning to code, experienced developers prototyping algorithms, educators creating coding exercises, interview candidates practicing coding challenges, and professionals testing small code snippets.

ai-app-lab

The ai-app-lab provides Arkitect, a high-code Python SDK designed for enterprise developers with professional development capabilities. It provides a toolset and workflow set for developing large model applications tailored to specific business scenarios. The SDK offers highly customizable application orchestration, quality business tools, one-stop development and hosting services, security enhancements, and AI prototype application code examples. It caters to complex enterprise development scenarios, enabling the creation of highly customized intelligent applications for various industries.

tunacode

TunaCode CLI is an AI-powered coding assistant that provides a command-line interface for developers to enhance their coding experience. It offers features like model selection, parallel execution for faster file operations, and various commands for code management. The tool aims to improve coding efficiency and provide a seamless coding environment for developers.

python-sdk

Python SDK is a software development kit that provides tools and resources for developers to interact with Python programming language. It simplifies the process of integrating Python code into applications and services, offering a wide range of functionalities and libraries to streamline development workflows. With Python SDK, developers can easily access and manipulate data, create automation scripts, build web applications, and perform various tasks efficiently. It is designed to enhance the productivity and flexibility of Python developers by providing a comprehensive set of tools and utilities for software development.

p1

p1 is a code completion engine based on Large Language Models (LLM) that operates at the edge. It provides intelligent code suggestions and completions to enhance the coding experience. The tool is designed to assist developers in writing code more efficiently by predicting and offering context-aware completions based on the code being written. With implementations available for popular code editors like Vim and Visual Studio Code, p1 aims to improve productivity and streamline the coding process for software developers.

spec-workflow-mcp

Spec Workflow MCP is a Model Context Protocol (MCP) server that offers structured spec-driven development workflow tools for AI-assisted software development. It includes a real-time web dashboard and a VSCode extension for monitoring and managing project progress directly in the development environment. The tool supports sequential spec creation, real-time monitoring of specs and tasks, document management, archive system, task progress tracking, approval workflow, bug reporting, template system, and works on Windows, macOS, and Linux.

navigator

Navigator is a versatile tool for navigating through complex codebases efficiently. It provides a user-friendly interface to explore code files, search for specific functions or variables, and visualize code dependencies. With Navigator, developers can easily understand the structure of a project and quickly locate relevant code snippets. The tool supports various programming languages and offers customizable settings to enhance the coding experience. Whether you are working on a small project or a large codebase, Navigator can help you streamline your development process and improve code comprehension.

xlings

Xlings is a developer tool for programming learning, development, and course building. It provides features such as software installation, one-click environment setup, project dependency management, and cross-platform language package management. Additionally, it offers real-time compilation and running, AI code suggestions, tutorial project creation, automatic code checking for practice, and demo examples collection.

nanocoder

Nanocoder is a versatile code editor designed for beginners and experienced programmers alike. It provides a user-friendly interface with features such as syntax highlighting, code completion, and error checking. With Nanocoder, you can easily write and debug code in various programming languages, making it an ideal tool for learning, practicing, and developing software projects. Whether you are a student, hobbyist, or professional developer, Nanocoder offers a seamless coding experience to boost your productivity and creativity.

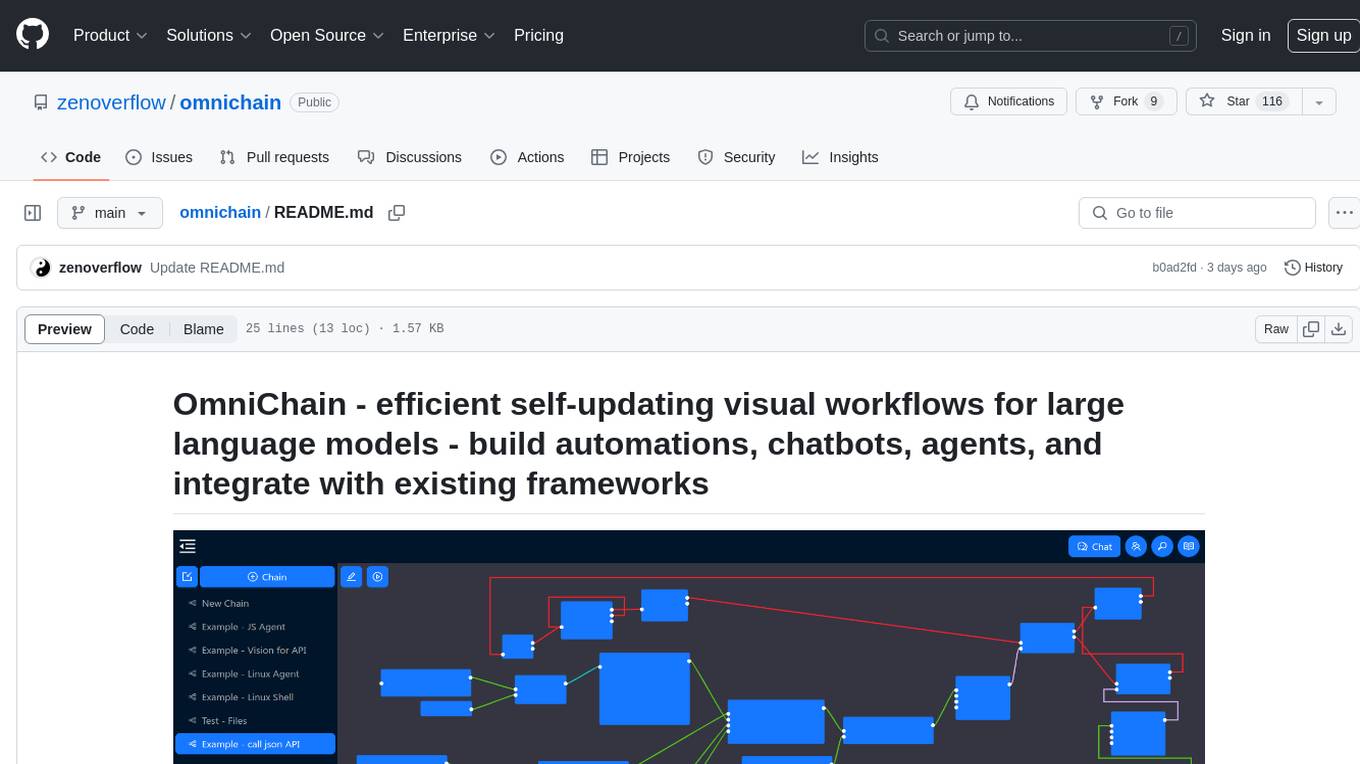

omnichain

OmniChain is a tool for building efficient self-updating visual workflows using AI language models, enabling users to automate tasks, create chatbots, agents, and integrate with existing frameworks. It allows users to create custom workflows guided by logic processes, store and recall information, and make decisions based on that information. The tool enables users to create tireless robot employees that operate 24/7, access the underlying operating system, generate and run NodeJS code snippets, and create custom agents and logic chains. OmniChain is self-hosted, open-source, and available for commercial use under the MIT license, with no coding skills required.

VectorCode

VectorCode is a code repository indexing tool that helps users write better prompts for coding LLMs by providing information about the code repository being worked on. It includes a neovim plugin and supports multiple embedding engines. The tool enhances completion results by providing project context and improves understanding of closed-source or cutting-edge projects.

tools

Strands Agents Tools is a community-driven project that provides a powerful set of tools for your agents to use. It bridges the gap between large language models and practical applications by offering ready-to-use tools for file operations, system execution, API interactions, mathematical operations, and more. The tools cover a wide range of functionalities including file operations, shell integration, memory storage, web infrastructure, HTTP client, Slack client, Python execution, mathematical tools, AWS integration, image and video processing, audio output, environment management, task scheduling, advanced reasoning, swarm intelligence, dynamic MCP client, parallel tool execution, browser automation, diagram creation, RSS feed management, and computer automation.

nvim-aider

Nvim-aider is a plugin for Neovim that provides additional functionality and key mappings to enhance the user's editing experience. It offers features such as code navigation, quick access to commonly used commands, and improved text manipulation tools. With Nvim-aider, users can streamline their workflow and increase productivity while working with Neovim.

mfish-nocode

Mfish-nocode is a low-code/no-code platform that aims to make development as easy as fishing. It breaks down technical barriers, allowing both developers and non-developers to quickly build business systems, increase efficiency, and unleash creativity. It is not only an efficiency tool for developers during leisure time, but also a website building tool for novices in the workplace, and even a secret weapon for leaders to prototype.

For similar tasks

LLMstudio

LLMstudio by TensorOps is a platform that offers prompt engineering tools for accessing models from providers like OpenAI, VertexAI, and Bedrock. It provides features such as Python Client Gateway, Prompt Editing UI, History Management, and Context Limit Adaptability. Users can track past runs, log costs and latency, and export history to CSV. The tool also supports automatic switching to larger-context models when needed. Coming soon features include side-by-side comparison of LLMs, automated testing, API key administration, project organization, and resilience against rate limits. LLMstudio aims to streamline prompt engineering, provide execution history tracking, and enable effortless data export, offering an evolving environment for teams to experiment with advanced language models.

kaizen

Kaizen is an open-source project that helps teams ensure quality in their software delivery by providing a suite of tools for code review, test generation, and end-to-end testing. It integrates with your existing code repositories and workflows, allowing you to streamline your software development process. Kaizen generates comprehensive end-to-end tests, provides UI testing and review, and automates code review with insightful feedback. The file structure includes components for API server, logic, actors, generators, LLM integrations, documentation, and sample code. Getting started involves installing the Kaizen package, generating tests for websites, and executing tests. The tool also runs an API server for GitHub App actions. Contributions are welcome under the AGPL License.

flux-fine-tuner

This is a Cog training model that creates LoRA-based fine-tunes for the FLUX.1 family of image generation models. It includes features such as automatic image captioning during training, image generation using LoRA, uploading fine-tuned weights to Hugging Face, an automated test suite for continuous deployment, and Weights & Biases integration. The tool is designed for users to fine-tune Flux models on Replicate for image generation tasks.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

lmstudio-python

The LM Studio Python SDK provides a convenient API for interacting with an LM Studio instance, including text completion and chat response functionalities. The SDK allows users to manage websocket connections and chat history easily. It also offers tools for code consistency checks, automated testing, and expanding the API.

mastering-github-copilot-for-dotnet-csharp-developers

Enhance coding efficiency with expert-led GitHub Copilot course for C#/.NET developers. Learn to integrate AI-powered coding assistance, automate testing, and boost collaboration using Visual Studio Code and Copilot Chat. From autocompletion to unit testing, cover essential techniques for cleaner, faster, smarter code.

agentql

AgentQL is a suite of tools for extracting data and automating workflows on live web sites featuring an AI-powered query language, Python and JavaScript SDKs, a browser-based debugger, and a REST API endpoint. It uses natural language queries to pinpoint data and elements on any web page, including authenticated and dynamically generated content. Users can define structured data output and apply transforms within queries. AgentQL's natural language selectors find elements intuitively based on the content of the web page and work across similar web sites, self-healing as UI changes over time.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.