erag

An AI interaction tool with RAG hybrid search, conversation context, web content processing, and structured data analysis with LLM / GPT

Stars: 92

ERAG is an advanced system that combines lexical, semantic, text, and knowledge graph searches with conversation context to provide accurate and contextually relevant responses. This tool processes various document types, creates embeddings, builds knowledge graphs, and uses this information to answer user queries intelligently. It includes modules for interacting with web content, GitHub repositories, and performing exploratory data analysis using various language models.

README:

You can use this application to:

- Talk privately with your documents using Ollama, or talk fast using Groq and other APIs.

- Perform Retrieval-Augmented Generation (RAG) using various APIs (Ollama, LLaMA, Groq, Gemini, Cohere).

- Perform AI-powered web search.

- Talk with a specific URL.

- Analyze and summarize GitHub repositories.

- Do AI-powered Exploratory Data Analysis (EDA) with AI-generated business intelligence and insights on Excel and CSV files (see some examples in images below).

- Utilize multiple AI models in collaboration (worker, supervisor, manager) for pre-defined complex tasks.

- Generate specific knowledge entries (knols), generate full-size textbooks, or use AI-generated questions and answers to create datasets.

Thus, ERAG is an advanced system that combines lexical, semantic, text, and knowledge graph searches with conversation context to provide accurate and contextually relevant responses. This tool processes various document types, creates embeddings, builds knowledge graphs, and uses this information to answer user queries intelligently. It also includes modules for interacting with web content and GitHub repositories, and for performing exploratory data analysis using various language models.

Works on CPU only.

Tested on Windows 10.

- Multi-modal Document Processing: Handles DOCX, PDF, TXT, and JSON files with intelligent chunking and table of contents extraction.

- Advanced Embedding Generation: Creates and manages embeddings for efficient semantic search using sentence transformers, with support for batch processing and caching.

- Knowledge Graph Creation: Builds and utilizes a knowledge graph for enhanced information retrieval using spaCy and NetworkX.

- Multi-API Support: Integrates with Ollama, LLaMA, and Groq APIs for flexible language model deployment.

- Retrieval-Augmented Generation (RAG): Combines retrieved context with language model capabilities for improved responses.

- Web Content Processing: Implements real-time web crawling, content extraction, and summarization.

- Query Routing: Intelligently routes queries to the most appropriate subsystem based on content relevance and query complexity.

- Server Management: Provides a GUI for managing local LLaMA.cpp servers, including model selection and server configuration.

- Customizable Settings: Offers a wide range of configurable parameters through a graphical user interface and a centralized settings management system.

- Advanced Search Utilities: Implements lexical, semantic, graph-based, and text search methods with configurable weights and thresholds.

- Conversation Context Management: Maintains and utilizes conversation history for more coherent and contextually relevant responses.

- GitHub Repository Analysis: Provides tools for analyzing and summarizing GitHub repositories, including code analysis, dependency checking, and code smell detection.

- Web Summarization: Offers capabilities to summarize web content based on user queries.

- Interactive Model Chat: Allows direct interaction with various language models for general conversation and task completion.

- Debug and Logging Capabilities: Provides comprehensive logging and debug information for system operations and search results.

- Color-coded Console Output: Enhances user experience with color-coded console messages for different types of information.

- Structured Data Analysis: Implements tools for analyzing structured data stored in SQLite databases, including value counts, grouped summary statistics, and advanced visualizations.

- Exploratory Data Analysis (EDA): Offers comprehensive EDA capabilities, including distribution analysis, correlation studies, and outlier detection.

- Advanced Data Visualization: Generates various types of plots and charts, such as histograms, box plots, scatter plots, and pair plots for in-depth data exploration.

- Statistical Analysis: Provides tools for conducting statistical tests and generating statistical summaries of the data.

- Multi-Model Collaboration: Utilizes worker, supervisor, and manager AI models to create, improve, and evaluate knowledge entries.

- Iterative Knowledge Refinement: Implements an iterative process of knowledge creation, improvement, and evaluation to achieve high-quality, comprehensive knowledge entries.

- Automated Quality Assessment: Includes an automated grading system for evaluating the quality of generated knowledge entries.

- Structured Knowledge Format: Enforces a consistent, hierarchical structure for knowledge entries to ensure comprehensive coverage and easy navigation.

- PDF Report Generation: Automatically generates comprehensive PDF reports summarizing the results of various analyses, including visualizations and AI-generated interpretations.
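The conversation context management mentioned in the feature list can be pictured as a fixed-size window over the most recent turns. The following is a minimal sketch under stated assumptions, not ERAG's actual implementation: the class name `ConversationContext` and the `max_turns` parameter are hypothetical.

```python
from collections import deque


class ConversationContext:
    """Keeps only the most recent turns (hypothetical helper, not ERAG's API)."""

    def __init__(self, max_turns: int = 3):
        # A deque with maxlen drops the oldest turn automatically when full
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def as_prompt(self) -> str:
        # Render the retained history as plain text for the next model call
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


context = ConversationContext(max_turns=2)
context.add("user", "What is ERAG?")
context.add("assistant", "A RAG tool.")
context.add("user", "Does it run on CPU?")
print(context.as_prompt())
```

With `max_turns=2`, the first user turn is dropped once the third turn arrives, so the prompt only carries the latest exchange.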

ERAG is composed of several interconnected components:

- File Processing: Handles document upload and processing, including table of contents extraction.

- Embedding Utilities: Manages the creation and retrieval of document embeddings.

- Knowledge Graph: Creates and maintains a graph representation of document content and entity relationships.

- RAG System: Implements the core retrieval-augmented generation functionality.

- Query Router: Analyzes queries and routes them to the appropriate subsystem.

- Server Manager: Handles the configuration and management of local LLaMA.cpp servers.

- Settings Manager: Centralizes system configuration and provides easy customization options.

- Search Utilities: Implements various search methods to retrieve relevant context for queries.

- API Integration: Provides a unified interface for interacting with different language model APIs.

- Talk2Model: Enables direct interaction with language models for general queries and tasks.

- Talk2URL: Allows interaction with web content, including crawling and question-answering based on web pages.

- WebRAG: Implements a web-based retrieval-augmented generation system for answering queries using internet content.

- WebSum: Provides tools for summarizing web content based on user queries.

- Talk2Git: Offers capabilities for analyzing and summarizing GitHub repositories.

- Talk2SD: Implements tools for interacting with and analyzing structured data stored in SQLite databases.

- Exploratory Data Analysis (EDA): Provides comprehensive EDA capabilities, including various statistical analyses and visualizations.

- Advanced Exploratory Data Analysis: Offers more sophisticated data analysis techniques, including machine learning-based approaches and complex visualizations.

- Self Knol Creator: Manages the process of creating, improving, and evaluating comprehensive knowledge entries on specific subjects.

- Innovative Exploratory Data Analysis: The individual analytical techniques are not particularly innovative on their own. The innovation lies in the system's attempt to automate the entire process, from data analysis to interpretation and reporting, using multiple AI models. How effective this is depends heavily on the quality of the AI models used.

- Clone the repository: `git clone https://github.com/EdwardDali/erag.git && cd erag`

- Install torch (CPU only): `pip install torch==2.3.1 torchvision==0.18.1 torchaudio==2.3.1 --index-url https://download.pytorch.org/whl/cpu`

- Install the required Python dependencies: `pip install -r requirements.txt`

- Download the required spaCy and NLTK models: `python -m spacy download en_core_web_sm` and `python -m nltk.downloader punkt`

- Install Ollama (used for the Ollama API and for embeddings):

  - Linux/macOS: `curl https://ollama.ai/install.sh | sh`

  - Windows: Visit https://ollama.ai/download and follow the installation instructions.

- Install the Ollama models: `ollama run gemma2:2b` and, for embeddings, `ollama run chroma/all-minilm-l6-v2-f32:latest`

- Set up environment variables: create a `.env` file in the project root and add the following variables (if applicable):

  - `GROQ_API_KEY='your_groq_api_key_here'`

  - `GEMINI_API_KEY='your_gemini_api_key_here'`

  - `CO_API_KEY='your_cohere_api_key_here'`

  - `GITHUB_TOKEN='your_github_token_here'`
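To check which of the keys above are actually present before starting the application, a small parser for `KEY='value'` lines is enough. This is a minimal sketch under stated assumptions: `parse_env` is a hypothetical helper, the sample text stands in for the contents of the `.env` file, and how ERAG itself reads the file is not shown in this README.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY='value' lines; skips blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Remove optional surrounding single or double quotes
        values[key.strip()] = value.strip().strip("'\"")
    return values


# Sample content standing in for a real .env file
sample = """
GROQ_API_KEY='your_groq_api_key_here'
GITHUB_TOKEN='your_github_token_here'
"""
config = parse_env(sample)
expected = ("GROQ_API_KEY", "GEMINI_API_KEY", "CO_API_KEY", "GITHUB_TOKEN")
missing = [key for key in expected if key not in config]
print("missing keys:", missing)
```

Since the variables are only needed "if applicable", a missing key is a hint about which API will be unavailable rather than an error.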

Start the ERAG GUI: `python main.py`

Use the GUI to:

- Upload and process documents

- Generate embeddings

- Create knowledge graphs

- Configure system settings

- Manage local LLaMA.cpp servers

- Run various RAG operations (Talk2Doc, WebRAG, etc.)

- Analyze structured data and perform exploratory data analysis

- Create and refine comprehensive knowledge entries (Self Knols)

Customize ERAG's behavior through the Settings tab in the GUI or by modifying settings.py. Key configurable options include:

- Chunk sizes and overlap for document processing

- Embedding model selection and batch size

- Knowledge graph parameters (similarity threshold, minimum entity occurrence)

- API selection (Ollama, LLaMA, Groq) and model choices

- Search method weights and thresholds

- RAG system parameters (conversation context size, update threshold)

- Server configuration for local LLaMA.cpp instances

- Web crawling and summarization settings

- GitHub analysis parameters

- Data analysis and visualization parameters

- Self Knol creation parameters (iteration thresholds, quality assessment criteria)
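To illustrate how the chunk size and overlap settings interact, here is a minimal word-based sketch. It is an assumption-laden illustration, not ERAG's chunker: the function name `chunk_words` and its parameters are hypothetical, and the real implementation may split on characters, sentences, or tokens instead.

```python
def chunk_words(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into chunks of chunk_size words, sharing overlap words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


chunks = chunk_words("one two three four five six seven", chunk_size=4, overlap=2)
for chunk in chunks:
    print(chunk)
```

A larger overlap keeps more shared context between neighbouring chunks at the cost of producing more chunks to embed.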

- Query Routing: Automatically determines the best subsystem to handle a query based on its content and complexity.

- Hybrid Search: Combines lexical, semantic, graph-based, and text search methods for comprehensive context retrieval.

- Dynamic Embedding Updates: Automatically updates embeddings as new content is added to the system.

- Conversation Context Management: Maintains a sliding window of recent conversation history for improved contextual understanding.

- Web Content Analysis: Crawls and analyzes web pages to answer queries and generate summaries.

- GitHub Repository Analysis: Provides static code analysis, dependency checking, project summarization, and code smell detection for GitHub repositories.

- Multi-model Support: Allows interaction with various language models through a unified interface.

- Structured Data Analysis: Offers tools for analyzing and visualizing structured data stored in SQLite databases.

- Advanced Exploratory Data Analysis: Provides comprehensive EDA capabilities, including statistical analyses, machine learning techniques, and various types of data visualizations.

- Automated Report Generation: Generates detailed PDF reports summarizing the results of data analyses, complete with visualizations and AI-generated interpretations.

- Self Knol Creation: Utilizes a multi-model approach to create, refine, and evaluate comprehensive knowledge entries on specific subjects.

- Iterative Knowledge Improvement: Implements an iterative process with AI-driven feedback and improvement cycles to enhance the quality and depth of knowledge entries.
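The hybrid search described in this list combines several search methods using configurable weights and thresholds. One common way to do that is a weighted sum of per-method scores, sketched below. The function name `combine_scores`, the score dictionaries, the weights, and the threshold are all hypothetical; the README does not specify how ERAG merges results.

```python
def combine_scores(scores_by_method, weights, threshold=0.0):
    """Weighted sum of per-method scores, filtered and ranked by total."""
    combined = {}
    for method, scores in scores_by_method.items():
        weight = weights.get(method, 0.0)
        for doc_id, score in scores.items():
            combined[doc_id] = combined.get(doc_id, 0.0) + weight * score
    ranked = sorted(combined.items(), key=lambda item: item[1], reverse=True)
    return [(doc_id, total) for doc_id, total in ranked if total >= threshold]


# Illustrative scores in [0, 1] for three chunks "a", "b", "c"
scores = {
    "lexical": {"a": 0.8, "b": 0.2},
    "semantic": {"a": 0.4, "b": 0.9, "c": 0.5},
    "graph": {"c": 0.6},
    "text": {"a": 1.0},
}
weights = {"lexical": 0.3, "semantic": 0.4, "graph": 0.2, "text": 0.1}
results = combine_scores(scores, weights, threshold=0.4)
print([doc_id for doc_id, _ in results])
```

A weighted sum assumes the methods return scores on comparable scales; if they do not, the scores would need normalizing first.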

Data Preprocessing Techniques

- Data type conversion (e.g., string to datetime)

- Sorting data (for time series analysis)

- Standardization of numerical features
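The three preprocessing steps above can be sketched with the standard library alone. This is an illustration under stated assumptions: the column names and sample rows are made up, and ERAG's own preprocessing code is not shown in this README.

```python
from datetime import datetime
from statistics import mean, pstdev

# Made-up rows; dates arrive as strings and out of order
rows = [
    {"date": "2024-03-01", "sales": 30.0},
    {"date": "2024-01-01", "sales": 10.0},
    {"date": "2024-02-01", "sales": 20.0},
]

# 1. Data type conversion: string to datetime
for row in rows:
    row["date"] = datetime.strptime(row["date"], "%Y-%m-%d")

# 2. Sorting by date, as needed for time series analysis
rows.sort(key=lambda row: row["date"])

# 3. Standardization: subtract the mean and divide by the standard deviation
values = [row["sales"] for row in rows]
mu, sigma = mean(values), pstdev(values)
standardized = [(value - mu) / sigma for value in values]
print(standardized)
```

A constant column has a standard deviation of zero, so a real pipeline would need to skip or special-case such columns before dividing.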

Visualization Techniques

- Various plot types: bar plots, pie charts, line plots, scatter plots, heatmaps

- Use of libraries like Matplotlib and Seaborn for visualization

Performance Optimization

- Use of timeouts to handle long-running operations

Automated Reporting

- Generation of text reports

- Creation of PDF reports with embedded visualizations

Natural Language Processing (NLP)

- Use of language models for interpreting analysis results

- Generating human-readable insights from data analysis

Business Intelligence

- Extraction of key findings and insights

- Formulation of business implications and recommendations based on data analysis

Overall Table Analysis

- Row and column count

- Data type distribution

- Memory usage calculation

- Missing value analysis

- Unique value counts

Statistical Analysis

- Descriptive statistics (mean, median, standard deviation, min, max)

- Skewness and kurtosis calculation

- Visualization of statistical measures

Correlation Analysis

- Correlation matrix computation

- Heatmap visualization of correlations

- Identification of high correlations

- Analysis of top positive and negative correlations
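The correlation steps above can be sketched as follows. The column names, sample data, and the 0.8 cut-off for a "high" correlation are assumptions made for illustration; the README does not state which threshold ERAG uses.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    # Undefined (division by zero) if either column is constant
    return cov / (spread_x * spread_y)


columns = {
    "price": [1.0, 2.0, 3.0, 4.0],
    "revenue": [2.0, 4.0, 6.0, 8.0],
    "noise": [1.0, -1.0, -1.0, 1.0],
}
# Upper triangle of the correlation matrix, keyed by column pair
matrix = {(a, b): pearson(columns[a], columns[b]) for a, b in combinations(columns, 2)}
high = [pair for pair, r in matrix.items() if abs(r) >= 0.8]
print(high)
```

Sorting `matrix.items()` by value gives the top positive and negative correlations directly.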

Categorical Features Analysis

- Value counts for categorical variables

- Bar plots and pie charts for category distribution

- Analysis of top categories

Distribution Analysis

- Histogram with Kernel Density Estimation (KDE)

- Q-Q (Quantile-Quantile) plots

- Normality assessment

Outlier Detection

- Interquartile Range (IQR) method

- Box plots for visualizing outliers

- Calculation of outlier percentages
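The IQR method flags values that lie more than a multiple of the interquartile range outside the first and third quartiles. A minimal sketch follows, assuming the conventional multiplier of 1.5; the function name `iqr_outliers` and the sample data are hypothetical.

```python
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return (outliers, percentage) using the k * IQR fences."""
    q1, _, q3 = quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    outliers = [v for v in values if v < lower or v > upper]
    return outliers, 100.0 * len(outliers) / len(values)


data = [10, 12, 11, 13, 12, 11, 95]
outliers, pct = iqr_outliers(data)
print(outliers, f"{pct:.1f}%")
```

Different quartile conventions can shift the fences slightly on small samples, so borderline points may be classified differently by other libraries.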

Time Series Analysis

- Identification of date columns

- Time series plotting

- Trend visualization over time

Feature Importance Analysis

- Random Forest Regressor for feature importance

- Visualization of feature importance

Dimensionality Reduction Analysis

- Principal Component Analysis (PCA)

- Scree plot for explained variance

- Cumulative explained variance plot

Cluster Analysis

- K-means clustering

- Elbow method for optimal cluster number

- 2D projection of clusters using PCA

Adaptive Multi-dimensional Pattern Recognition (AMPR)

- Standardization of numeric data

- Principal Component Analysis (PCA) for dimensionality reduction

- DBSCAN clustering with adaptive epsilon selection

- Silhouette score optimization for clustering

- Isolation Forest for anomaly detection

- Visualization of clusters and anomalies in reduced dimensional space

- Feature correlation analysis in the transformed space

Enhanced Time Series Forecasting (ETSF)

- Augmented Dickey-Fuller test for stationarity

- Seasonal decomposition of time series

- ARIMA modeling with exogenous variables

- Incorporation of lag features and Fourier terms for seasonality

- Time series cross-validation

- Forecast evaluation using Mean Squared Error (MSE) and Root Mean Squared Error (RMSE)

- Visualization of observed data, trend, seasonality, residuals, and forecasts

Value Counts Analysis

- Pie chart visualization of categorical variable distributions

Grouped Summary Statistics

- Calculation of summary statistics grouped by categorical variables

Frequency Distribution Analysis

- Histogram plots with Kernel Density Estimation (KDE)

KDE Plot Analysis

- Kernel Density Estimation plots for continuous variables

Violin Plot Analysis

- Visualization of data distribution across categories

Pair Plot Analysis

- Scatter plots for all pairs of numerical variables

Box Plot Analysis

- Visualization of data distribution and outliers

Scatter Plot Analysis

- Visualization of relationships between pairs of variables

Correlation Network Analysis

- Network graph of correlations between variables

Q-Q Plot Analysis

- Quantile-Quantile plots for assessing normality

Factor Analysis

- Identification of underlying factors in the data

Multidimensional Scaling (MDS)

- Visualization of high-dimensional data in lower dimensions

t-Distributed Stochastic Neighbor Embedding (t-SNE)

- Non-linear dimensionality reduction for data visualization

Conditional Plots

- Visualization of relationships between variables conditioned on categories

Individual Conditional Expectation (ICE) Plots

- Visualization of model predictions for individual instances

STL Decomposition Analysis

- Seasonal and Trend decomposition using Loess

Autocorrelation Plots

- Visualization of serial correlation in time series data

Bayesian Networks

- Probabilistic graphical models for representing dependencies

Isolation Forest

- Anomaly detection using isolation trees

One-Class SVM

- Anomaly detection using support vector machines

Local Outlier Factor (LOF)

- Anomaly detection based on local density deviation

Robust PCA

- Principal Component Analysis robust to outliers

Bayesian Change Point Detection

- Detection of changes in time series data

Hidden Markov Models (HMMs)

- Modeling of sequential data with hidden states

Dynamic Time Warping (DTW)

- Measurement of similarity between temporal sequences

Matrix Profile

- Time series motif discovery and anomaly detection

Ensemble Anomaly Detection

- Combination of multiple anomaly detection methods

Gaussian Mixture Models (GMM)

- Probabilistic model for representing normally distributed subpopulations

Expectation-Maximization Algorithm

- Iterative method for finding maximum likelihood estimates

Statistical Process Control (SPC) Charts

- CUSUM (Cumulative Sum) and EWMA (Exponentially Weighted Moving Average) charts
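Both chart types track a running statistic instead of individual readings, which makes small sustained shifts easier to see. The sketch below shows a one-sided upper CUSUM and an EWMA. The target value, slack, smoothing factor, and decision limit of 4.0 are assumptions chosen for illustration, and the function names are hypothetical.

```python
def ewma(values, lam=0.5, start=None):
    """Exponentially weighted moving average: z = lam * x + (1 - lam) * z_prev."""
    z = values[0] if start is None else start
    smoothed = []
    for x in values:
        z = lam * x + (1 - lam) * z
        smoothed.append(z)
    return smoothed


def cusum_upper(values, target, slack=0.5):
    """One-sided upper CUSUM: accumulates deviations above target + slack."""
    c, sums = 0.0, []
    for x in values:
        c = max(0.0, c + (x - target - slack))
        sums.append(c)
    return sums


readings = [10, 10, 10, 12, 12, 12]  # process shifts upward at index 3
smoothed = ewma(readings, lam=0.5, start=10)
drift = cusum_upper(readings, target=10, slack=0.5)
alarms = [i for i, c in enumerate(drift) if c > 4.0]  # assumed decision limit
print(smoothed, drift, alarms)
```

The CUSUM raises its alarm a few samples after the shift begins, because it needs the accumulated deviation to exceed the decision limit.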

KDE Anomaly Detection

- Anomaly detection using Kernel Density Estimation

Hotelling's T-squared Analysis

- Multivariate statistical process control

Breakdown Point Analysis

- Assessment of the robustness of statistical estimators

Chi-Square Test Analysis

- Test for independence between categorical variables

Simple Thresholding Analysis

- Basic method for anomaly detection

Lilliefors Test Analysis

- Test for normality of the data

Jarque-Bera Test Analysis

- Test for normality based on skewness and kurtosis

Cook's Distance Analysis

- Identification of influential data points in regression analysis

Hampel Filter Analysis

- Robust outlier detection in time series data
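A Hampel filter compares each point with the median of a sliding window and flags it when the deviation exceeds a multiple of the window's median absolute deviation (MAD). A minimal sketch, assuming a half-window of 2 and a cut-off of 3 scaled MADs; the function name and sample series are hypothetical.

```python
from statistics import median


def hampel(values, half_window=2, n_sigmas=3.0):
    """Return (cleaned_values, outlier_indices)."""
    scale = 1.4826  # makes the MAD comparable to a standard deviation for normal data
    cleaned, flagged = list(values), []
    for i, x in enumerate(values):
        window = values[max(0, i - half_window): i + half_window + 1]
        med = median(window)
        mad = median(abs(v - med) for v in window)
        if abs(x - med) > n_sigmas * scale * mad:
            cleaned[i] = med  # replace the outlier with the local median
            flagged.append(i)
    return cleaned, flagged


series = [1, 2, 3, 100, 5, 6, 7]
cleaned, flagged = hampel(series)
print(cleaned, flagged)
```

When a window is nearly constant its MAD is zero, so any deviation is flagged; real data with flat stretches may need a minimum threshold.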

GESD Test Analysis

- Generalized Extreme Studentized Deviate Test for outliers

Dixon's Q Test Analysis

- Identification of outliers in small sample sizes

Peirce's Criterion Analysis

- Method for eliminating outliers from data samples

Thompson Tau Test Analysis

- Statistical technique for detecting a single outlier in a dataset

Sequence Alignment and Matching

- Techniques for comparing and aligning sequences (e.g., in text data)

Conformal Anomaly Detection

- Anomaly detection with statistical guarantees

Trend Analysis

- Time series plotting

- Trend visualization over time

- Calculation of trend statistics (start, end, change, percent change)

Variance Analysis

- Calculation of variance for numeric columns

- Visualization of variance across different variables

Regression Analysis

- Simple linear regression for pairs of numeric columns

- Calculation of R-squared, coefficients, and intercepts
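For a single pair of numeric columns, the slope, intercept, and R-squared follow directly from the least-squares formulas. The sketch below uses made-up data and a hypothetical function name `linear_fit`.

```python
def linear_fit(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R-squared: share of the variance in y explained by the fitted line
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return slope, intercept, 1 - ss_res / ss_tot


x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
slope, intercept, r_squared = linear_fit(x, y)
print(f"slope={slope:.2f} intercept={intercept:.2f} R^2={r_squared:.2f}")
```

Running this over every pair of numeric columns gives one small regression summary per pair.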

Stratification Analysis

- Data grouping and aggregation

- Box plot visualization of stratified data

Gap Analysis

- Comparison of current values to target values

- Calculation of gaps and gap percentages

Duplicate Detection

- Identification of duplicate rows

- Calculation of duplicate percentages

Process Mining

- Analysis of process sequences

- Visualization of top process flows

Data Validation Techniques

- Checking for missing values, negative values, and out-of-range values

Risk Scoring Models

- Development of simple risk scoring models

- Visualization of risk distributions

Fuzzy Matching

- Identification of similar text entries

- Calculation of string similarity ratios
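String similarity ratios can be computed with Python's standard `difflib` module. This is a sketch of the idea rather than ERAG's code: the 0.9 threshold, the case-insensitive comparison, and the sample names are assumptions.

```python
from difflib import SequenceMatcher
from itertools import combinations


def similar_pairs(entries, threshold=0.9):
    """Return (a, b, ratio) for every pair whose similarity meets the threshold."""
    pairs = []
    for a, b in combinations(entries, 2):
        # ratio() is 2 * matches / total length, between 0.0 and 1.0
        ratio = SequenceMatcher(None, a.lower(), b.lower()).ratio()
        if ratio >= threshold:
            pairs.append((a, b, round(ratio, 3)))
    return pairs


names = ["Acme Corp", "ACME Corp.", "Globex"]
matches = similar_pairs(names)
print(matches)
```

Comparing all pairs grows quadratically with the number of entries, so large columns usually need blocking or sampling first.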

Continuous Auditing Techniques

- Ongoing analysis of data for anomalies and outliers

Sensitivity Analysis

- Assessment of impact of variable changes on outcomes

Scenario Analysis

- Creation and comparison of different business scenarios

Monte Carlo Simulation

- Generation of multiple simulated scenarios

- Analysis of probabilistic outcomes
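A Monte Carlo simulation draws many random scenarios from assumed input distributions and then summarizes the outcomes. The profit model below is entirely hypothetical: the distributions, their parameters, and the fixed cost are invented to show the mechanics.

```python
import random
from statistics import mean


def simulate_profit(n_runs: int, seed: int = 42) -> list[float]:
    """Simulate profit = units * (price - cost) - fixed cost, n_runs times."""
    rng = random.Random(seed)  # seeded for reproducible runs
    results = []
    for _ in range(n_runs):
        units = rng.gauss(1000, 100)      # demand, assumed normal
        price = rng.uniform(9.0, 11.0)    # unit price, assumed uniform
        cost = rng.uniform(6.0, 8.0)      # unit cost, assumed uniform
        results.append(units * (price - cost) - 2000)
    return results


profits = simulate_profit(10_000)
prob_loss = sum(p < 0 for p in profits) / len(profits)
print(f"mean profit: {mean(profits):.0f}, probability of loss: {prob_loss:.1%}")
```

The probability of a loss is read off as the share of simulated scenarios with negative profit, which is the kind of probabilistic outcome the analysis reports.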

KPI Analysis

- Definition and calculation of Key Performance Indicators

ARIMA (AutoRegressive Integrated Moving Average) Analysis

- Time series forecasting

- Model parameter optimization

Auto ARIMAX Analysis

- ARIMA with exogenous variables

- Automatic model selection

Exponential Smoothing

- Time series smoothing and forecasting

- Handling of trends and seasonality

Holt-Winters Method

- Triple exponential smoothing

- Handling of level, trend, and seasonal components

SARIMAX (Seasonal AutoRegressive Integrated Moving Average with eXogenous factors) Analysis

- Seasonal time series forecasting

- Incorporation of external factors

Gradient Boosting for Time Series

- Machine learning approach to time series forecasting

- Feature importance analysis in time series context

Fourier Analysis

- Frequency domain analysis of time series

- Identification of dominant frequencies

Trend Extraction

- Separation of trend and cyclical components

- Use of Hodrick-Prescott filter

Cross-Sectional Regression

- Analysis of relationships between variables at a single point in time

Ensemble Time Series

- Combination of multiple time series models

- Improved forecast accuracy through model averaging

Bootstrapping Time Series

- Resampling techniques for time series data

- Estimation of forecast uncertainty

Theta Method

- Decomposition-based forecasting method

- Combination of linear regression and Simple Exponential Smoothing

This comprehensive list covers a wide range of data analytics techniques, from basic statistical analysis to advanced machine learning and time series forecasting methods, demonstrating a thorough approach to exploratory and innovative data analysis.

Missing Values

- Checks for null or empty values in columns.

Data Type Mismatches

- Verifies if the data type of values matches the expected column type.

Duplicate Records

- Identifies duplicate entries across all columns in a table.

Inconsistent Formatting

- Detects inconsistent date formats within a column.

Outliers

- Identifies statistical outliers in numeric columns using the Interquartile Range (IQR) method.

Whitespace Issues

- Checks for leading, trailing, or excessive whitespace in text columns.

Special Characters

- Detects the presence of special characters in text columns.

Inconsistent Capitalization

- Identifies inconsistent use of uppercase, lowercase, or title case in text columns.

Possible Data Truncation

- Checks for values that are close to the maximum observed length in a column, which might indicate truncation.

High Frequency Values

- Identifies values that appear with unusually high frequency (>90%) in a column.
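The high-frequency check reduces to counting values and comparing the most common one's share with the 90% threshold stated above. A minimal sketch with a hypothetical function name and made-up column data:

```python
from collections import Counter


def high_frequency_value(values, threshold=0.9):
    """Return (value, share) if one value exceeds the threshold share, else None."""
    if not values:
        return None
    value, count = Counter(values).most_common(1)[0]
    share = count / len(values)
    return (value, share) if share > threshold else None


status = ["active"] * 19 + ["inactive"]
print(high_frequency_value(status))
```

A column dominated by one value is not necessarily wrong, but it often signals a default that was never overwritten.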

Suspicious Date Range

- Checks for dates outside a reasonable range (e.g., before 1900 or far in the future).

Large Numeric Range

- Detects numeric columns with an unusually large range of values.

Very Short Strings

- Identifies strings that are unusually short (less than 2 characters).

Very Long Strings

- Identifies strings that are unusually long (more than 255 characters).

Invalid Email Format

- Checks if email addresses conform to a standard format.
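An email format check is typically a regular-expression match. The pattern below is a deliberately simple assumption (local part, `@`, and a domain with at least one dot); it is not a full RFC 5322 validator and is not taken from ERAG's source.

```python
import re

# Simplified pattern: local part, "@", then a domain containing at least one dot
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(\.[A-Za-z0-9-]+)+")


def invalid_emails(values):
    """Return the entries that do not match the simplified email pattern."""
    return [v for v in values if not EMAIL_PATTERN.fullmatch(v.strip())]


emails = ["alice@example.com", "bob@example", "carol.example.com", "dave@mail.example.org"]
bad = invalid_emails(emails)
print(bad)
```

A stricter or looser pattern changes which borderline addresses are reported, so the pattern should match the data's expected conventions.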

Non-unique Values

- Identifies columns with non-unique values where uniqueness might be expected.

Invalid Foreign Keys

- Checks for foreign key violations in the database.

Date Inconsistency

- Verifies logical relationships between date columns (e.g., start date before end date).

Logical Relationship Violations

- Checks for violations of expected relationships between columns (e.g., a total column should equal the sum of its parts).

Pattern Mismatch

- Verifies if values in certain columns match expected patterns (e.g., phone numbers, zip codes, URLs).

- Ensure all dependencies are correctly installed.

- Check console output for detailed error messages (color-coded for easier identification).

- Verify API keys and tokens are correctly set in the `.env` file.

- For performance issues, adjust chunk sizes, batch processing parameters, or consider using a GPU.

- If using local LLaMA.cpp servers, ensure the correct model files are available and properly configured.

For support or queries, please open an issue on the GitHub repository or contact the project maintainers.

Similar Open Source Tools

erag

ERAG is an advanced system that combines lexical, semantic, text, and knowledge graph searches with conversation context to provide accurate and contextually relevant responses. It processes various document types, creates embeddings, builds knowledge graphs, and uses this information to answer user queries intelligently. The tool includes modules for interacting with web content, GitHub repositories, and performing exploratory data analysis using various language models. It offers a GUI for managing local LLaMA.cpp servers, customizable settings, and advanced search utilities. ERAG supports multi-model collaboration, iterative knowledge refinement, automated quality assessment, and structured knowledge format enforcement. Users can generate specific knowledge entries, full-size textbooks, or datasets using AI-generated questions and answers.

GhidrAssist

GhidrAssist is an advanced LLM-powered plugin for interactive reverse engineering assistance in Ghidra. It integrates Large Language Models (LLMs) to provide intelligent assistance for binary exploration and reverse engineering. The tool supports various OpenAI v1-compatible APIs, including local models and cloud providers. Key features include code explanation, interactive chat, custom queries, Graph-RAG knowledge system with semantic knowledge graph, community detection, security feature extraction, semantic graph tab, extended thinking/reasoning control, ReAct agentic mode, MCP integration, function calling, actions tab, RAG (Retrieval Augmented Generation), and RLHF dataset generation. The plugin uses a modular, service-oriented architecture with core services, Graph-RAG backend, data layer, and UI components.

OAD

OAD is a powerful open-source tool for analyzing and visualizing data. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With OAD, users can easily import data from various sources, clean and preprocess data, perform statistical analysis, and create customizable visualizations to communicate findings effectively. Whether you are a data scientist, analyst, or researcher, OAD can help you streamline your data analysis workflow and uncover valuable insights from your data.

trustgraph

TrustGraph is a tool that deploys private GraphRAG pipelines to build a RDF style knowledge graph from data, enabling accurate and secure `RAG` requests compatible with cloud LLMs and open-source SLMs. It showcases the reliability and efficiencies of GraphRAG algorithms, capturing contextual language flags missed in conventional RAG approaches. The tool offers features like PDF decoding, text chunking, inference of various LMs, RDF-aligned Knowledge Graph extraction, and more. TrustGraph is designed to be modular, supporting multiple Language Models and environments, with a plug'n'play architecture for easy customization.

LLM-Scratch

LLM-Scratch is a minimal implementation of a GPT-style Large Language Model built from scratch using PyTorch. It utilizes BPE tokenization, multi head self-attention, feed-forward layers, and layer normalization. The model is designed for learning and experimentation purposes, focusing on autoregressive text generation. The codebase is clean, modular, and extensible, with a character-level tokenizer for easy understanding and no external dependencies like BPE or SentencePiece. The model architecture includes token embedding, positional embedding, transformer blocks with masked self-attention, feed-forward network, residual connections, layer normalization, and a language modeling head. Training objective involves next-token prediction using Cross-Entropy Loss and AdamW optimizer, with training data sampled in fixed-length blocks and gradients backpropagated through time. Configuration parameters are centralized for easy experimentation and reproducibility.

eureka-framework

The Eureka Framework is an open-source toolkit that leverages advanced Artificial Intelligence and Decentralized Science principles to revolutionize scientific discovery. It enables researchers, developers, and decentralized organizations to explore scientific papers, conduct AI-driven experiments, monetize research contributions, provide token-gated access to AI agents, and customize AI agents for specific research domains. The framework also offers features like a RESTful API, robust scheduler for task automation, and webhooks for real-time notifications, empowering users to automate research tasks, enhance productivity, and foster a committed research community.

awesome-hallucination-detection

This repository provides a curated list of papers, datasets, and resources related to the detection and mitigation of hallucinations in large language models (LLMs). Hallucinations refer to the generation of factually incorrect or nonsensical text by LLMs, which can be a significant challenge for their use in real-world applications. The resources in this repository aim to help researchers and practitioners better understand and address this issue.

Vodalus-Expert-LLM-Forge

Vodalus Expert LLM Forge is a tool designed for crafting datasets and efficiently fine-tuning models using free open-source tools. It includes components for data generation, LLM interaction, RAG engine integration, model training, fine-tuning, and quantization. The tool is suitable for users at all levels and is accompanied by comprehensive documentation. Users can generate synthetic data, interact with LLMs, train models, and optimize performance for local execution. The tool provides detailed guides and instructions for setup, usage, and customization.

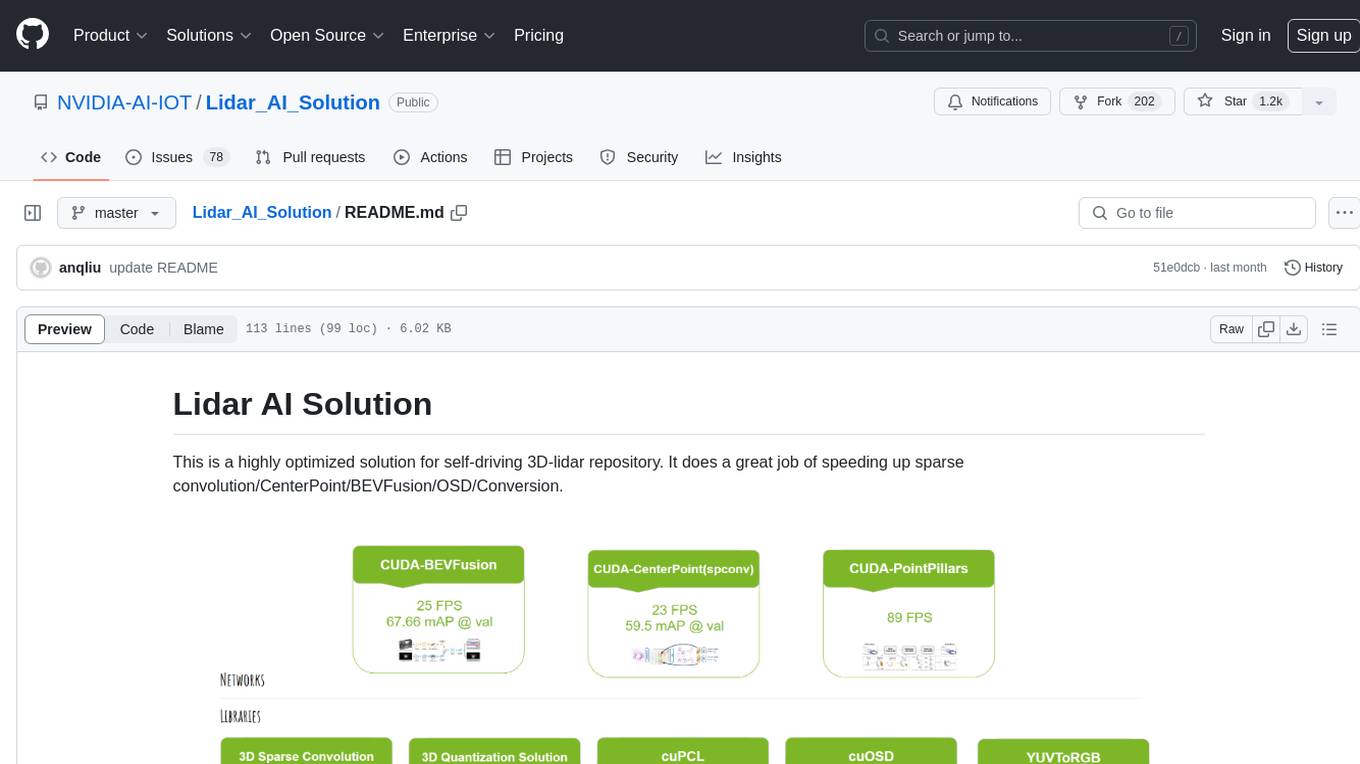

Lidar_AI_Solution

Lidar AI Solution is a highly optimized repository for self-driving 3D lidar, providing solutions for sparse convolution, BEVFusion, CenterPoint, OSD, and Conversion. It includes CUDA and TensorRT implementations for various tasks such as 3D sparse convolution, BEVFusion, CenterPoint, PointPillars, V2XFusion, cuOSD, cuPCL, and YUV to RGB conversion. The repository offers easy-to-use solutions, high accuracy, low memory usage, and quantization options for different tasks related to self-driving technology.

Streamline-Analyst

Streamline Analyst is a cutting-edge, open-source application powered by Large Language Models (LLMs) designed to revolutionize data analysis. This Data Analysis Agent effortlessly automates tasks such as data cleaning, preprocessing, and complex operations like identifying target objects, partitioning test sets, and selecting the best-fit models based on your data. With Streamline Analyst, results visualization and evaluation become seamless. It aims to expedite the data analysis process, making it accessible to all, regardless of their expertise in data analysis. The tool is built to empower users to process data and achieve high-quality visualizations with unparalleled efficiency, and to execute high-performance modeling with the best strategies. Future enhancements include Natural Language Processing (NLP), neural networks, and object detection utilizing YOLO, broadening its capabilities to meet diverse data analysis needs.

MM-RLHF

MM-RLHF is a comprehensive project for aligning Multimodal Large Language Models (MLLMs) with human preferences. It includes a high-quality MLLM alignment dataset, a Critique-Based MLLM reward model, a novel alignment algorithm MM-DPO, and benchmarks for reward models and multimodal safety. The dataset covers image understanding, video understanding, and safety-related tasks with model-generated responses and human-annotated scores. The reward model generates critiques of candidate texts before assigning scores for enhanced interpretability. MM-DPO is an alignment algorithm that achieves performance gains with simple adjustments to the DPO framework. The project enables consistent performance improvements across 10 dimensions and 27 benchmarks for open-source MLLMs.
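For orientation, the baseline DPO objective that MM-DPO is described as adjusting can be sketched for a single preference pair as below. This is the standard DPO loss only; the specific MM-DPO adjustments are not described here and are not reproduced. The function name `dpo_loss` and its parameter names are illustrative assumptions, not part of the MM-RLHF codebase.

```python
import math


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair (hypothetical helper).

    All four inputs are summed log-probabilities of a full response:
    the chosen and rejected responses under the policy being trained
    and under the frozen reference model.
    """
    # How much more the policy favors the chosen response than the
    # reference model does, relative to the rejected response.
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    x = beta * margin
    # -log(sigmoid(x)) written as a numerically stable softplus(-x).
    return max(-x, 0.0) + math.log1p(math.exp(-abs(x)))


if __name__ == "__main__":
    # The policy prefers the chosen response more than the reference does,
    # so the loss falls below log(2), the value at zero margin.
    print(dpo_loss(-1.0, -3.0, -2.0, -2.0))
```

A larger `beta` penalizes deviations from the reference model's preferences more sharply; the value 0.1 is only a common default, not one taken from the project.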

AI-Blueprints

This repository hosts a collection of AI blueprint projects for HP AI Studio, providing end-to-end solutions across key AI domains like data science, machine learning, deep learning, and generative AI. The projects are designed to be plug-and-play, utilizing open-source and hosted models to offer ready-to-use solutions. The repository structure includes projects related to classical machine learning, deep learning applications, generative AI, NGC integration, and troubleshooting guidelines for common issues. Each project is accompanied by detailed descriptions and use cases, showcasing the versatility and applicability of AI technologies in various domains.

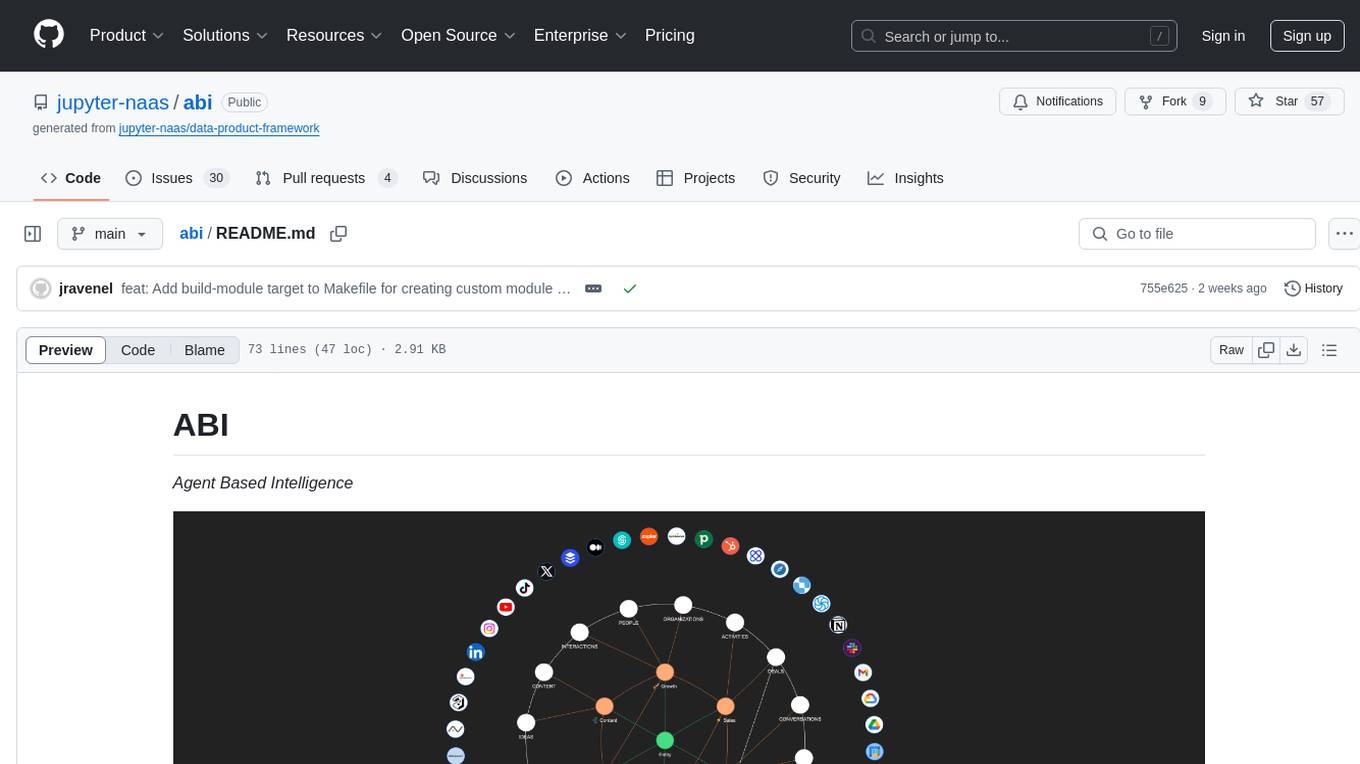

abi

ABI (Agentic Brain Infrastructure) is a Python-based AI Operating System designed to serve as the core infrastructure for building an Agentic AI Ontology Engine. It empowers organizations to integrate, manage, and scale AI-driven operations with multiple AI models, focusing on ontology, agent-driven workflows, and analytics. ABI emphasizes modularity and customization, providing a customizable framework aligned with international standards and regulatory frameworks. It offers features such as configurable AI agents, ontology management, integrations with external data sources, data processing pipelines, workflow automation, analytics, and data handling capabilities.

veScale

veScale is a PyTorch Native LLM Training Framework. It provides a set of tools and components to facilitate the training of large language models (LLMs) using PyTorch. veScale includes features such as 4D parallelism, fast checkpointing, and a CUDA event monitor. It is designed to be scalable and efficient, and it can be used to train LLMs on a variety of hardware platforms.

accelerated-intelligent-document-processing-on-aws

Accelerated Intelligent Document Processing on AWS is a scalable, serverless solution for automated document processing and information extraction using AWS services. It combines OCR capabilities with generative AI to convert unstructured documents into structured data at scale. The solution features a serverless architecture built on AWS technologies, modular processing patterns, advanced classification support, few-shot example support, custom business logic integration, high throughput processing, built-in resilience, cost optimization, comprehensive monitoring, web user interface, human-in-the-loop integration, AI-powered evaluation, extraction confidence assessment, and document knowledge base query. The architecture uses nested CloudFormation stacks to support multiple document processing patterns while maintaining common infrastructure for queueing, tracking, and monitoring.

For similar tasks

document-ai-samples

The Google Cloud Document AI Samples repository contains code samples and Community Samples demonstrating how to analyze, classify, and search documents using Google Cloud Document AI. It includes various projects showcasing different functionalities such as integrating with Google Drive, processing documents using Python, content moderation with Dialogflow CX, fraud detection, language extraction, paper summarization, tax processing pipeline, and more. The repository also provides access to test document files stored in a publicly-accessible Google Cloud Storage Bucket. Additionally, there are codelabs available for optical character recognition (OCR), form parsing, specialized processors, and managing Document AI processors. Community samples, like the PDF Annotator Sample, are also included. Contributions are welcome, and users can seek help or report issues through the repository's issues page. Please note that this repository is not an officially supported Google product and is intended for demonstrative purposes only.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker Compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveness of refresh tokens.

unilm

The 'unilm' repository is a collection of tools, models, and architectures for Foundation Models and General AI, focusing on tasks such as NLP, MT, Speech, Document AI, and Multimodal AI. It includes various pre-trained models, such as UniLM, InfoXLM, DeltaLM, MiniLM, AdaLM, BEiT, LayoutLM, WavLM, VALL-E, and more, designed for tasks like language understanding, generation, translation, vision, speech, and multimodal processing. The repository also features toolkits like s2s-ft for sequence-to-sequence fine-tuning and Aggressive Decoding for efficient sequence-to-sequence decoding. Additionally, it offers applications like TrOCR for OCR, LayoutReader for reading order detection, and XLM-T for multilingual NMT.

searchGPT

searchGPT is an open-source project that aims to build a search engine based on Large Language Model (LLM) technology to provide natural language answers. It supports web search with real-time results, file content search, and semantic search over sources such as the Internet. The tool integrates LLM providers such as OpenAI and GooseAI, and offers an easy-to-use frontend user interface. The project is designed to provide grounded answers by referencing real-time factual information, addressing the limitations of LLMs' training data. Contributions, especially from frontend developers, are welcome under the MIT License.

LLMs-at-DoD

This repository contains tutorials for using Large Language Models (LLMs) in the U.S. Department of Defense. The tutorials utilize open-source frameworks and LLMs, allowing users to run them in their own cloud environments. The repository is maintained by the Defense Digital Service and welcomes contributions from users.

LARS

LARS is an application that enables users to run Large Language Models (LLMs) locally on their devices, upload their own documents, and engage in conversations where the LLM grounds its responses with the uploaded content. The application focuses on Retrieval Augmented Generation (RAG) to increase accuracy and reduce AI-generated inaccuracies. LARS provides advanced citations, supports various file formats, allows follow-up questions, provides full chat history, and offers customization options for LLM settings. Users can force enable or disable RAG, change system prompts, and tweak advanced LLM settings. The application also supports GPU-accelerated inferencing, multiple embedding models, and text extraction methods. LARS is open-source and aims to be the ultimate RAG-centric LLM application.

EAGLE

Eagle is a family of Vision-Centric High-Resolution Multimodal LLMs that enhance multimodal LLM perception using a mix of vision encoders and various input resolutions. The model uses channel-concatenation-based fusion to combine vision experts with different architectures and knowledge, and supports input resolutions above 1K. It excels in resolution-sensitive tasks like optical character recognition and document understanding.

erag

ERAG is an advanced system that combines lexical, semantic, text, and knowledge graph searches with conversation context to provide accurate and contextually relevant responses. This tool processes various document types, creates embeddings, builds knowledge graphs, and uses this information to answer user queries intelligently. It includes modules for interacting with web content, GitHub repositories, and performing exploratory data analysis using various language models.
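As a rough illustration of what combining lexical and semantic search can look like, here is a minimal sketch using only the Python standard library. It is not ERAG's implementation: the function names, the character-trigram stand-in for an embedding model, and the `weight_lexical` parameter are all assumptions made for this example.

```python
import math
from collections import Counter


def lexical_score(query: str, doc: str) -> float:
    """Fraction of query terms found verbatim in the document (toy lexical match)."""
    q_terms = query.lower().split()
    d_terms = set(doc.lower().split())
    if not q_terms:
        return 0.0
    return sum(t in d_terms for t in q_terms) / len(q_terms)


def embed(text: str) -> Counter:
    """Stand-in for a real embedding model: character-trigram counts."""
    s = text.lower()
    return Counter(s[i:i + 3] for i in range(len(s) - 2))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def hybrid_search(query: str, docs: list[str],
                  weight_lexical: float = 0.5, top_k: int = 2) -> list[tuple[float, str]]:
    """Rank documents by a weighted sum of lexical and (toy) semantic scores."""
    q_vec = embed(query)
    scored = []
    for doc in docs:
        score = (weight_lexical * lexical_score(query, doc)
                 + (1.0 - weight_lexical) * cosine(q_vec, embed(doc)))
        scored.append((score, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    corpus = [
        "knowledge graphs link entities",
        "cats sleep all day",
        "embedding models encode text",
    ]
    for score, doc in hybrid_search("knowledge graph", corpus):
        print(f"{score:.3f}  {doc}")
```

In a real system the trigram vectors would be replaced by embeddings from a language model, and further signals such as knowledge graph matches or conversation context would be added as extra weighted terms.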

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API that gives access to over 100 different AI models, from image to sound generation.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.