llm-chain

PHP library for building LLM-based features and applications.


LLM Chain is a PHP library for building LLM-based features and applications. It provides abstractions for Language Models and Embeddings Models from platforms like OpenAI, Azure, Google, Replicate, and others. The core feature is to interact with language models via messages, supporting different message types and content. LLM Chain also supports tool calling, document embedding, vector stores, similarity search, structured output, response streaming, image processing, audio processing, embeddings, parallel platform calls, and input/output processing. Contributions are welcome, and the repository contains fixture licenses for testing multi-modal features.


This library is not stable yet and is still rather experimental. Feel free to try it out, give feedback, ask questions, contribute, or share your use cases. Abstractions, concepts, and interfaces are not final and may be subject to change.

LLM Chain requires PHP 8.2 or higher.

The recommended way to install LLM Chain is through Composer:

composer require php-llm/llm-chain

When using the Symfony Framework, check out the integration bundle php-llm/llm-chain-bundle.

See the examples folder for example implementations using this library.

Depending on the example, you need to export different environment variables for API keys or deployment configurations, or create a .env.local file based on the .env file.

To run all examples, use make run-examples or php example.

For a more sophisticated demo, see the Symfony Demo Application.

LLM Chain distinguishes between two main types of models: Language Models and Embeddings Models.

Language Models, like GPT, Claude, and Llama, are the essential centerpiece of LLM applications, while Embeddings Models are supporting models that provide vector representations of text.

Those models are provided by different platforms, like OpenAI, Azure, Google, Replicate, and others.

use PhpLlm\LlmChain\Bridge\OpenAI\Embeddings;

use PhpLlm\LlmChain\Bridge\OpenAI\GPT;

use PhpLlm\LlmChain\Bridge\OpenAI\PlatformFactory;

// Platform: OpenAI

$platform = PlatformFactory::create($_ENV['OPENAI_API_KEY']);

// Language Model: GPT (OpenAI)

$llm = new GPT(GPT::GPT_4O_MINI);

// Embeddings Model: Embeddings (OpenAI)

$embeddings = new Embeddings();

Supported models and platforms:

- Language Models
  - OpenAI's GPT with OpenAI and Azure as Platform
  - Anthropic's Claude with Anthropic as Platform
  - Meta's Llama with Ollama and Replicate as Platform
  - Google's Gemini with Google as Platform
  - Google's Gemini with OpenRouter as Platform
  - DeepSeek's R1 with OpenRouter as Platform
- Embeddings Models
  - OpenAI's Text Embeddings with OpenAI and Azure as Platform
  - Voyage's Embeddings with Voyage as Platform
- Other Models
  - OpenAI's Dall·E with OpenAI as Platform
  - OpenAI's Whisper with OpenAI and Azure as Platform

See issue #28 for planned support of other models and platforms.

The core feature of LLM Chain is to interact with language models via messages. This interaction is done by sending a MessageBag to a Chain, which takes care of LLM invocation and response handling.

Messages can be of different types, most importantly UserMessage, SystemMessage, or AssistantMessage, and can also have different content types, like Text, Image, or Audio.

use PhpLlm\LlmChain\Chain;

use PhpLlm\LlmChain\Model\Message\Message;

use PhpLlm\LlmChain\Model\Message\MessageBag;

// Platform & LLM instantiation

$chain = new Chain($platform, $llm);

$messages = new MessageBag(

Message::forSystem('You are a helpful chatbot answering questions about LLM Chain.'),

Message::ofUser('Hello, how are you?'),

);

$response = $chain->call($messages);

echo $response->getContent(); // "I'm fine, thank you. How can I help you today?"

The MessageInterface and Content interface help to customize this process if needed, e.g. for additional state handling.

The second parameter of the call method is an array of options, which can be used to configure the behavior of the chain, like stream, output_structure, or response_format. This behavior is a combination of features provided by the underlying model and platform, or additional features provided by processors registered to the chain.

Options designed for additional features provided by LLM Chain are described in this documentation. For model- and platform-specific options, please refer to the respective documentation.

// Chain and MessageBag instantiation

$response = $chain->call($messages, [

'temperature' => 0.5, // example option controlling the randomness of the response, e.g. GPT and Claude

'n' => 3, // example option controlling the number of responses generated, e.g. GPT

]);

Examples:

- Anthropic's Claude: chat-claude-anthropic.php

- OpenAI's GPT with Azure: chat-gpt-azure.php

- OpenAI's GPT: chat-gpt-openai.php

- OpenAI's o1: chat-o1-openai.php

- Meta's Llama with Ollama: chat-llama-ollama.php

- Meta's Llama with Replicate: chat-llama-replicate.php

- Google's Gemini with OpenRouter: chat-gemini-openrouter.php

To integrate LLMs with your application, LLM Chain supports tool calling out of the box. Tools are services that can be called by the LLM to provide additional features or process data.
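As a rough mental model, a tool call from the LLM consists of a tool name and JSON-encoded arguments, which the application dispatches to a matching callable and answers with a string. The following pure-PHP sketch illustrates only that dispatch step; the $tools array and the executeToolCall() helper are hypothetical and not part of LLM Chain, whose Toolbox builds this mapping from #[AsTool] metadata.

```php
<?php

// Hypothetical registry mapping tool names to callables (not LLM Chain's Toolbox).
$tools = [
    'company_name' => fn (): string => 'ACME Corp.',
    'add' => fn (int $a, int $b): int => $a + $b,
];

/**
 * Executes one tool call as an LLM would request it: a tool name plus
 * JSON-encoded arguments. Hypothetical helper for illustration only.
 */
function executeToolCall(array $tools, string $name, string $argumentsJson): string
{
    if (!isset($tools[$name])) {
        throw new InvalidArgumentException(sprintf('Unknown tool "%s".', $name));
    }

    // Decode arguments into an associative array; string keys become named arguments.
    $arguments = json_decode($argumentsJson, true, 512, JSON_THROW_ON_ERROR);

    $result = $tools[$name](...$arguments);

    // The LLM expects a string, so non-string results are JSON-encoded.
    return is_string($result) ? $result : json_encode($result, JSON_THROW_ON_ERROR);
}

echo executeToolCall($tools, 'company_name', '{}'), PHP_EOL;
echo executeToolCall($tools, 'add', '{"a": 2, "b": 3}'), PHP_EOL;
```

The string keys of the decoded arguments are passed as named arguments (supported since PHP 8.1), so they must match the callable's parameter names.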

Tool calling can be enabled by registering the processors in the chain:

use PhpLlm\LlmChain\Chain\Toolbox\ChainProcessor;

use PhpLlm\LlmChain\Chain\Toolbox\Toolbox;

// Platform & LLM instantiation

$yourTool = new YourTool();

$toolbox = Toolbox::create($yourTool);

$toolProcessor = new ChainProcessor($toolbox);

$chain = new Chain($platform, $llm, inputProcessor: [$toolProcessor], outputProcessor: [$toolProcessor]);

Custom tools can basically be any class, but must be configured with the #[AsTool] attribute.

use PhpLlm\LlmChain\Chain\Toolbox\Attribute\AsTool;

#[AsTool('company_name', 'Provides the name of your company')]

final class CompanyName

{

public function __invoke(): string

{

return 'ACME Corp.';

}

}

In the end, the tool's response needs to be a string, but LLM Chain converts arrays and objects that implement the JsonSerializable interface to JSON strings for you. So you can return arrays or objects directly from your tool.
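The conversion described above can be pictured roughly as follows. This is a minimal sketch assuming a hypothetical toToolResultString() helper and Weather class; it relies only on json_encode(), which calls jsonSerialize() on JsonSerializable objects, and is not LLM Chain's actual implementation.

```php
<?php

// Hypothetical value object a tool might return.
final class Weather implements JsonSerializable
{
    public function __construct(
        private float $temperature,
        private string $condition,
    ) {
    }

    public function jsonSerialize(): array
    {
        return ['temperature' => $this->temperature, 'condition' => $this->condition];
    }
}

// Hypothetical helper: turns a tool result into the string handed back to the LLM.
function toToolResultString(string|array|JsonSerializable $result): string
{
    if (is_string($result)) {
        return $result;
    }

    // json_encode() calls jsonSerialize() on JsonSerializable objects automatically.
    return json_encode($result, JSON_THROW_ON_ERROR);
}

echo toToolResultString('ACME Corp.'), PHP_EOL;
echo toToolResultString(['city' => 'Berlin', 'population' => 3_700_000]), PHP_EOL;
echo toToolResultString(new Weather(21.5, 'sunny')), PHP_EOL;
```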

You can configure the method to be called by the LLM with the #[AsTool] attribute and have multiple tools per class:

use PhpLlm\LlmChain\Chain\Toolbox\Attribute\AsTool;

#[AsTool(

name: 'weather_current',

description: 'get current weather for a location',

method: 'current',

)]

#[AsTool(

name: 'weather_forecast',

description: 'get weather forecast for a location',

method: 'forecast',

)]

final readonly class OpenMeteo

{

public function current(float $latitude, float $longitude): array

{

// ...

}

public function forecast(float $latitude, float $longitude): array

{

// ...

}

}

LLM Chain generates a JSON Schema representation for all tools in the Toolbox based on the #[AsTool] attribute, the method arguments, and the @param comments in the doc block. Additionally, JSON Schema supports validation rules, which are partially supported by LLMs like GPT.
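To illustrate how such a schema could be derived from a method signature, here is a simplified pure-PHP sketch using the Reflection API. The ForecastTool class and the buildParameterSchema() function are hypothetical; the type mapping and the doc-block parsing are deliberately naive and will differ from LLM Chain's own schema generation, which covers more cases.

```php
<?php

// Hypothetical tool, similar to the OpenMeteo example above.
final class ForecastTool
{
    /**
     * @param float $latitude  Latitude of the location
     * @param float $longitude Longitude of the location
     * @param int   $days      Number of forecast days
     */
    public function forecast(float $latitude, float $longitude, int $days = 3): array
    {
        return [];
    }
}

// Naive sketch: derive a JSON Schema from reflection and @param comments.
function buildParameterSchema(string $class, string $method): array
{
    $typeMap = ['int' => 'integer', 'float' => 'number', 'string' => 'string', 'bool' => 'boolean', 'array' => 'array'];
    $reflection = new ReflectionMethod($class, $method);

    // Collect "@param type $name description" lines from the doc block.
    $descriptions = [];
    preg_match_all('/@param\s+\S+\s+\$(\w+)\s+(.+)/', (string) $reflection->getDocComment(), $matches, PREG_SET_ORDER);
    foreach ($matches as [, $name, $description]) {
        $descriptions[$name] = trim($description);
    }

    $properties = [];
    $required = [];
    foreach ($reflection->getParameters() as $parameter) {
        $type = $parameter->getType();
        $typeName = $type instanceof ReflectionNamedType ? $type->getName() : 'string';
        $property = ['type' => $typeMap[$typeName] ?? 'string'];
        if (isset($descriptions[$parameter->getName()])) {
            $property['description'] = $descriptions[$parameter->getName()];
        }
        $properties[$parameter->getName()] = $property;

        // Parameters without a default value are treated as required.
        if (!$parameter->isOptional()) {
            $required[] = $parameter->getName();
        }
    }

    return ['type' => 'object', 'properties' => $properties, 'required' => $required];
}

echo json_encode(buildParameterSchema(ForecastTool::class, 'forecast'), JSON_PRETTY_PRINT), PHP_EOL;
```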

To leverage JSON Schema validation rules, configure the #[With] attribute on the method arguments of your tool:

use PhpLlm\LlmChain\Chain\JsonSchema\Attribute\With;

use PhpLlm\LlmChain\Chain\Toolbox\Attribute\AsTool;

#[AsTool('my_tool', 'Example tool with parameters requirements.')]

final class MyTool

{

/**

* @param string $name The name of an object

* @param int $number The number of an object

*/

public function __invoke(

#[With(pattern: '/([a-z0-1]){5}/')]

string $name,

#[With(minimum: 0, maximum: 10)]

int $number,

): string {

// ...

}

}

See the attribute class With for all available options.

[!NOTE] Please be aware that this is only converted into a JSON Schema for the LLM to respect; it is not validated by LLM Chain.
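Since the constraints are not enforced by LLM Chain, a tool may want to re-check its arguments itself. A minimal sketch, assuming a hypothetical validateMyToolArguments() function that mirrors the #[With] constraints of the MyTool example above:

```php
<?php

// Hypothetical guard mirroring the #[With] constraints of MyTool,
// since LLM Chain only forwards them to the LLM and does not enforce them.
function validateMyToolArguments(string $name, int $number): array
{
    $errors = [];

    // Same pattern as in #[With(pattern: ...)]; it is unanchored, so a matching substring suffices.
    if (1 !== preg_match('/([a-z0-1]){5}/', $name)) {
        $errors[] = sprintf('"%s" does not match the expected pattern.', $name);
    }

    // Same bounds as #[With(minimum: 0, maximum: 10)].
    if ($number < 0 || $number > 10) {
        $errors[] = sprintf('%d is outside the range 0..10.', $number);
    }

    return $errors;
}

var_dump(validateMyToolArguments('abcde', 5)); // no errors
var_dump(validateMyToolArguments('AB', 42));   // two errors
```

Returning the collected messages as the tool's string result, instead of throwing, may give the LLM a chance to correct its arguments; whether that is preferable depends on your use case.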

In some cases you might want to use third-party tools, which are not part of your application. Adding the #[AsTool] attribute to the class is not possible in those cases, but you can explicitly register the tool in the MemoryFactory:

use PhpLlm\LlmChain\Chain\Toolbox\Toolbox;

use PhpLlm\LlmChain\Chain\Toolbox\MetadataFactory\MemoryFactory;

use Symfony\Component\Clock\Clock;

$metadataFactory = (new MemoryFactory())

->addTool(Clock::class, 'clock', 'Get the current date and time', 'now');

$toolbox = new Toolbox($metadataFactory, [new Clock()]);

[!NOTE] Please be aware that not all return types are supported by the toolbox, so a decorator might still be needed.

This can be combined with the ChainFactory, which enables you to use explicitly registered tools and #[AsTool] tagged tools in the same chain, and even to overwrite the pre-existing configuration of a tool:

use PhpLlm\LlmChain\Chain\Toolbox\Toolbox;

use PhpLlm\LlmChain\Chain\Toolbox\MetadataFactory\ChainFactory;

use PhpLlm\LlmChain\Chain\Toolbox\MetadataFactory\MemoryFactory;

use PhpLlm\LlmChain\Chain\Toolbox\MetadataFactory\ReflectionFactory;

$reflectionFactory = new ReflectionFactory(); // Register tools with #[AsTool] attribute

$metadataFactory = (new MemoryFactory()) // Register or overwrite tools explicitly

->addTool(...);

$toolbox = new Toolbox(new ChainFactory($metadataFactory, $reflectionFactory), [...]);

[!NOTE] The order of the factories in the ChainFactory matters, as the first factory has the highest priority.
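The priority rule can be illustrated with a first-match-wins lookup. In this sketch, ToolDescriptionSource, ArraySource, and ChainedSource are hypothetical stand-ins for the metadata factories, not LLM Chain classes: the first source that knows a tool wins, so an explicit entry placed first shadows the attribute-based one.

```php
<?php

// Hypothetical stand-in for a metadata factory: describes a tool or returns null.
interface ToolDescriptionSource
{
    public function describe(string $tool): ?string;
}

final class ArraySource implements ToolDescriptionSource
{
    public function __construct(private array $descriptions)
    {
    }

    public function describe(string $tool): ?string
    {
        return $this->descriptions[$tool] ?? null;
    }
}

// First-match-wins chaining: earlier sources shadow later ones.
final class ChainedSource implements ToolDescriptionSource
{
    /** @var ToolDescriptionSource[] */
    private array $sources;

    public function __construct(ToolDescriptionSource ...$sources)
    {
        $this->sources = $sources;
    }

    public function describe(string $tool): ?string
    {
        foreach ($this->sources as $source) {
            $description = $source->describe($tool);
            if (null !== $description) {
                return $description;
            }
        }

        return null;
    }
}

$explicit = new ArraySource(['clock' => 'Overwritten clock description']);
$attributes = new ArraySource(['clock' => 'Attribute clock description', 'weather' => 'Attribute weather description']);

$chained = new ChainedSource($explicit, $attributes);
echo $chained->describe('clock'), PHP_EOL;   // explicit entry wins
echo $chained->describe('weather'), PHP_EOL; // falls through to the second source
```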

Similar to third-party tools, you can also use a chain as a tool in another chain. This can be useful to encapsulate complex logic or to reuse a chain in multiple places or hide sub-chains from the LLM.

use PhpLlm\LlmChain\Chain\Toolbox\MetadataFactory\MemoryFactory;

use PhpLlm\LlmChain\Chain\Toolbox\Toolbox;

use PhpLlm\LlmChain\Chain\Toolbox\Tool\Chain;

// Chain was initialized before

$chainTool = new Chain($chain);

$metadataFactory = (new MemoryFactory())

->addTool($chainTool, 'research_agent', 'Meaningful description for sub-chain');

$toolbox = new Toolbox($metadataFactory, [$chainTool]);

To gracefully handle errors that occur during tool calling, e.g. wrong tool names or runtime errors, you can use the FaultTolerantToolbox as a decorator for the Toolbox. It will catch the exceptions and return readable error messages to the LLM.
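The decorator idea behind this can be sketched in a few lines of plain PHP. ToolExecutor, FailingExecutor, and ErrorToMessageExecutor are hypothetical names used only for illustration; the error message format of LLM Chain's FaultTolerantToolbox is likely different.

```php
<?php

// Hypothetical minimal executor interface, standing in for a toolbox.
interface ToolExecutor
{
    public function execute(string $name, array $arguments): string;
}

// Inner executor that always fails, to demonstrate the decorator.
final class FailingExecutor implements ToolExecutor
{
    public function execute(string $name, array $arguments): string
    {
        throw new RuntimeException(sprintf('Tool "%s" is not available.', $name));
    }
}

// Decorator: delegates to the inner executor and turns exceptions into text the LLM can read.
final class ErrorToMessageExecutor implements ToolExecutor
{
    public function __construct(private ToolExecutor $inner)
    {
    }

    public function execute(string $name, array $arguments): string
    {
        try {
            return $this->inner->execute($name, $arguments);
        } catch (Throwable $e) {
            return sprintf('An error occurred while executing tool "%s": %s', $name, $e->getMessage());
        }
    }
}

$executor = new ErrorToMessageExecutor(new FailingExecutor());
echo $executor->execute('weather_current', ['latitude' => 52.52, 'longitude' => 13.41]), PHP_EOL;
```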

use PhpLlm\LlmChain\Chain\Toolbox\ChainProcessor;

use PhpLlm\LlmChain\Chain\Toolbox\FaultTolerantToolbox;

// Platform, LLM & Toolbox instantiation

$toolbox = new FaultTolerantToolbox($innerToolbox);

$toolProcessor = new ChainProcessor($toolbox);

$chain = new Chain($platform, $llm, inputProcessor: [$toolProcessor], outputProcessor: [$toolProcessor]);

To limit the tools provided to the LLM in a specific chain call to a subset of the configured tools, you can use the tools option with a list of tool names:

$this->chain->call($messages, ['tools' => ['tavily_search']]);

To react to the result of a tool, you can implement an EventListener or EventSubscriber that listens to the ToolCallsExecuted event. This event is dispatched after the Toolbox has executed all current tool calls and enables you to skip the next LLM call by setting a response yourself:

$eventDispatcher->addListener(ToolCallsExecuted::class, function (ToolCallsExecuted $event): void {

foreach ($event->toolCallResults as $toolCallResult) {

if (str_starts_with($toolCallResult->toolCall->name, 'weather_')) {

$event->response = new StructuredResponse($toolCallResult->result);

}

}

});

Examples:

- Clock Tool: toolbox-clock.php

- SerpAPI Tool: toolbox-serpapi.php

- Tavily Tool: toolbox-tavily.php

- Weather Tool with Event Listener: toolbox-weather-event.php

- Wikipedia Tool: toolbox-wikipedia.php

- YouTube Transcriber Tool: toolbox-youtube.php (with streaming)

LLM Chain supports document embedding and similarity search using vector stores like ChromaDB, Azure AI Search, MongoDB Atlas Search, or Pinecone.
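As background, similarity search ranks stored vectors by how close they are to the embedding of a query. The stores listed in this section compute this on their side, and the distance metric depends on the store and its configuration; the following pure-PHP sketch only illustrates cosine similarity, a common choice, on hypothetical toy vectors.

```php
<?php

/**
 * Cosine similarity of two equally sized, non-zero vectors: dot(a, b) / (|a| * |b|).
 */
function cosineSimilarity(array $a, array $b): float
{
    if (count($a) !== count($b)) {
        throw new InvalidArgumentException('Vectors must have the same dimension.');
    }

    $dot = 0.0;
    $normA = 0.0;
    $normB = 0.0;
    foreach ($a as $i => $value) {
        $dot += $value * $b[$i];
        $normA += $value ** 2;
        $normB += $b[$i] ** 2;
    }

    return $dot / (sqrt($normA) * sqrt($normB));
}

// Toy 3-dimensional "embeddings"; real embeddings models return far longer float arrays.
$documents = [
    'doc-1' => [0.9, 0.1, 0.0],
    'doc-2' => [0.1, 0.9, 0.0],
    'doc-3' => [0.7, 0.3, 0.1],
];
$query = [1.0, 0.0, 0.0];

// Score every document against the query (keys are preserved) and sort by descending similarity.
$scores = array_map(fn (array $vector): float => cosineSimilarity($query, $vector), $documents);
arsort($scores);

print_r($scores);
```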

For populating a vector store, LLM Chain provides the service Embedder, which requires an instance of an EmbeddingsModel and one of StoreInterface, and works with a collection of Document objects as input:

use PhpLlm\LlmChain\Embedder;

use PhpLlm\LlmChain\Bridge\OpenAI\Embeddings;

use PhpLlm\LlmChain\Bridge\OpenAI\PlatformFactory;

use PhpLlm\LlmChain\Bridge\Pinecone\Store;

use Probots\Pinecone\Pinecone;

use Symfony\Component\HttpClient\HttpClient;

$embedder = new Embedder(

PlatformFactory::create($_ENV['OPENAI_API_KEY']),

new Embeddings(),

new Store(Pinecone::client($_ENV['PINECONE_API_KEY'], $_ENV['PINECONE_HOST'])),

);

$embedder->embed($documents);

The collection of Document instances is usually created from the text representation of your domain entities:

use PhpLlm\LlmChain\Document\Metadata;

use PhpLlm\LlmChain\Document\TextDocument;

foreach ($entities as $entity) {

$documents[] = new TextDocument(

id: $entity->getId(), // UUID instance

content: $entity->toString(), // Text representation of relevant data for embedding

metadata: new Metadata($entity->toArray()), // Array representation of entity to be stored additionally

);

}

[!NOTE] Not all data needs to be stored in the vector store; you could also hydrate the original data entry based on the ID or metadata after retrieval from the store.

In the end, the chain is used in combination with a retrieval tool on top of the vector store, e.g. the built-in SimilaritySearch tool provided by the library:

use PhpLlm\LlmChain\Chain;

use PhpLlm\LlmChain\Model\Message\Message;

use PhpLlm\LlmChain\Model\Message\MessageBag;

use PhpLlm\LlmChain\Chain\Toolbox\ChainProcessor;

use PhpLlm\LlmChain\Chain\Toolbox\Tool\SimilaritySearch;

use PhpLlm\LlmChain\Chain\Toolbox\Toolbox;

// Initialize Platform & Models

$similaritySearch = new SimilaritySearch($embeddings, $store);

$toolbox = Toolbox::create($similaritySearch);

$processor = new ChainProcessor($toolbox);

$chain = new Chain($platform, $llm, [$processor], [$processor]);

$messages = new MessageBag(

Message::forSystem(<<<PROMPT

Please answer all user questions only using the similarity_search tool. Do not add information and if you cannot

find an answer, say so.

PROMPT),

Message::ofUser('...') // The user's question.

);

$response = $chain->call($messages);

Examples:

- MongoDB Store: store-mongodb-similarity-search.php
- Pinecone Store: store-pinecone-similarity-search.php

Supported stores:

- ChromaDB (requires codewithkyrian/chromadb-php as additional dependency)
- Azure AI Search
- MongoDB Atlas Search (requires mongodb/mongodb as additional dependency)
- Pinecone (requires probots-io/pinecone-php as additional dependency)

See issue #28 for planned support of other models and platforms.

A typical use case of LLMs is to classify and extract data from unstructured sources, which some models support with features like Structured Output or a Response Format.

LLM Chain supports that use case by abstracting away the hassle of defining and providing schemas to the LLM and converting the response back into PHP objects.
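The conversion step can be pictured with plain PHP as well. The registration shown in this section uses Symfony's Serializer; the following sketch instead uses only json_decode() and named-argument unpacking, with a hypothetical Step class and a hand-written JSON string standing in for a model response.

```php
<?php

// Hypothetical target class, similar in spirit to the MathReasoning class used in this documentation.
final class Step
{
    public function __construct(
        public readonly string $explanation,
        public readonly string $output,
    ) {
    }
}

// Hand-written JSON, as a model might return it when constrained by a matching schema.
$json = '{"steps": [{"explanation": "Subtract 7 from both sides", "output": "8x = -30"}, {"explanation": "Divide both sides by 8", "output": "x = -3.75"}]}';

$data = json_decode($json, true, 512, JSON_THROW_ON_ERROR);

// Map each decoded array onto the class; string keys are matched to constructor parameters.
$steps = array_map(fn (array $step): Step => new Step(...$step), $data['steps']);

foreach ($steps as $step) {
    echo $step->explanation, ': ', $step->output, PHP_EOL;
}
```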

To enable structured output, a specific chain processor needs to be registered:

use PhpLlm\LlmChain\Chain;

use PhpLlm\LlmChain\Model\Message\Message;

use PhpLlm\LlmChain\Model\Message\MessageBag;

use PhpLlm\LlmChain\Chain\StructuredOutput\ChainProcessor;

use PhpLlm\LlmChain\Chain\StructuredOutput\ResponseFormatFactory;

use PhpLlm\LlmChain\Tests\Chain\StructuredOutput\Data\MathReasoning;

use Symfony\Component\Serializer\Encoder\JsonEncoder;

use Symfony\Component\Serializer\Normalizer\ObjectNormalizer;

use Symfony\Component\Serializer\Serializer;

// Initialize Platform and LLM

$serializer = new Serializer([new ObjectNormalizer()], [new JsonEncoder()]);

$processor = new ChainProcessor(new ResponseFormatFactory(), $serializer);

$chain = new Chain($platform, $llm, [$processor], [$processor]);

$messages = new MessageBag(

Message::forSystem('You are a helpful math tutor. Guide the user through the solution step by step.'),

Message::ofUser('how can I solve 8x + 7 = -23'),

);

$response = $chain->call($messages, ['output_structure' => MathReasoning::class]);

dump($response->getContent()); // returns an instance of `MathReasoning` class

PHP array structures are also supported as response_format, which also requires the chain processor mentioned above:

use PhpLlm\LlmChain\Model\Message\Message;

use PhpLlm\LlmChain\Model\Message\MessageBag;

// Initialize Platform, LLM and Chain with processors and Clock tool

$messages = new MessageBag(Message::ofUser('What date and time is it?'));

$response = $chain->call($messages, ['response_format' => [

'type' => 'json_schema',

'json_schema' => [

'name' => 'clock',

'strict' => true,

'schema' => [

'type' => 'object',

'properties' => [

'date' => ['type' => 'string', 'description' => 'The current date in the format YYYY-MM-DD.'],

'time' => ['type' => 'string', 'description' => 'The current time in the format HH:MM:SS.'],

],

'required' => ['date', 'time'],

'additionalProperties' => false,

],

],

]]);

dump($response->getContent()); // returns an array

Examples:

- Structured Output (PHP class): structured-output-math.php

- Structured Output (array): structured-output-clock.php

Since LLMs usually generate a response word by word, most of them also support streaming the response using Server-Sent Events. LLM Chain supports that by abstracting the conversion and returning a Generator as content of the response.

use PhpLlm\LlmChain\Chain;

use PhpLlm\LlmChain\Model\Message\Message;

use PhpLlm\LlmChain\Model\Message\MessageBag;

// Initialize Platform and LLM

$chain = new Chain($platform, $llm);

$messages = new MessageBag(

Message::forSystem('You are a thoughtful philosopher.'),

Message::ofUser('What is the purpose of an ant?'),

);

$response = $chain->call($messages, [

'stream' => true, // enable streaming of response text

]);

foreach ($response->getContent() as $word) {

echo $word;

}

In a terminal application this generator can be used directly, but in a web app an additional layer like Mercure is needed.

Examples:

- Streaming Claude: stream-claude-anthropic.php

- Streaming GPT: stream-gpt-openai.php
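To see how such a Generator is consumed without calling a real model, the following sketch uses a hypothetical fakeStream() function that yields hard-coded chunks in place of a streamed response:

```php
<?php

// Hypothetical stand-in for a streamed response: yields text chunks one by one.
function fakeStream(array $chunks): Generator
{
    foreach ($chunks as $chunk) {
        // A real stream would wait here until the next chunk arrives from the platform.
        yield $chunk;
    }
}

$buffer = '';
foreach (fakeStream(['An ', 'ant ', 'serves ', 'its ', 'colony.']) as $chunk) {
    echo $chunk;       // print each chunk as soon as it is available
    $buffer .= $chunk; // keep the full text, e.g. to store it afterwards
}
echo PHP_EOL;
```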

Some LLMs also support images as input, which LLM Chain supports as Content type within the UserMessage:

use PhpLlm\LlmChain\Model\Message\Content\Image;

use PhpLlm\LlmChain\Model\Message\Message;

use PhpLlm\LlmChain\Model\Message\MessageBag;

// Initialize Platform, LLM & Chain

$messages = new MessageBag(

Message::forSystem('You are an image analyzer bot that helps identify the content of images.'),

Message::ofUser(

'Describe the image as a comedian would do it.',

new Image(dirname(__DIR__).'/tests/Fixture/image.jpg'), // Path to an image file

new Image('https://foo.com/bar.png'), // URL to an image

new Image('data:image/png;base64,...'), // Data URL of an image

),

);

$response = $chain->call($messages);

Examples:

- Image Description: image-describer-binary.php (with binary file)

- Image Description: image-describer-url.php (with URL)
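For the data URL variant of the Image content shown above, the URL can be built from raw bytes with base64_encode(). A minimal sketch, assuming a hypothetical toDataUrl() helper and using the 8-byte PNG signature instead of a real image:

```php
<?php

// Hypothetical helper: builds a data URL from raw bytes and a MIME type.
function toDataUrl(string $bytes, string $mimeType): string
{
    return sprintf('data:%s;base64,%s', $mimeType, base64_encode($bytes));
}

// The 8-byte PNG file signature stands in for real image content.
$pngSignature = "\x89PNG\r\n\x1a\n";

echo toDataUrl($pngSignature, 'image/png'), PHP_EOL;
```

For a file on disk, the bytes would typically come from file_get_contents(); passing the file path directly, as in the first Image example, avoids this step.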

Similar to images, some LLMs also support audio as input, which is just another Content type within the UserMessage:

use PhpLlm\LlmChain\Model\Message\Content\Audio;

use PhpLlm\LlmChain\Model\Message\Message;

use PhpLlm\LlmChain\Model\Message\MessageBag;

// Initialize Platform, LLM & Chain

$messages = new MessageBag(

Message::ofUser(

'What is this recording about?',

Audio::fromFile(dirname(__DIR__).'/tests/Fixture/audio.mp3'), // Path to an audio file

),

);

$response = $chain->call($messages);

Examples:

- Audio Description: audio-describer.php

Creating embeddings of words, sentences, or paragraphs is a typical use case around the interaction with LLMs, and therefore LLM Chain implements an EmbeddingsModel interface with various models, see above.

The standalone usage results in a list of Vector instances:

use PhpLlm\LlmChain\Bridge\OpenAI\Embeddings;

// Initialize Platform

$embeddings = new Embeddings(Embeddings::TEXT_3_SMALL);

$vectors = $platform->request($embeddings, $textInput)->getContent();

dump($vectors[0]->getData()); // Array of float values

Examples:

- OpenAI's Embeddings: embeddings-openai.php

- Voyage's Embeddings: embeddings-voyage.php

Platform supports multiple model calls in parallel, which can be useful to speed up the processing:

// Initialize Platform & Model

foreach ($inputs as $input) {

$responses[] = $platform->request($model, $input);

}

foreach ($responses as $response) {

echo $response->getContent().PHP_EOL;

}

[!NOTE] This requires cURL and the ext-curl extension to be installed.

Examples:

- Parallel GPT Calls: parallel-chat-gpt.php

- Parallel Embeddings Calls: parallel-embeddings.php

[!NOTE] Please be aware that some embeddings models also support batch processing out of the box.

The behavior of the Chain is extendable with services that implement the InputProcessor and/or OutputProcessor interface. They are provided while instantiating the Chain instance:

use PhpLlm\LlmChain\Chain;

// Initialize Platform, LLM and processors

$chain = new Chain($platform, $llm, $inputProcessors, $outputProcessors);

InputProcessor instances are called in the chain before the MessageBag and the $options array are handed over to the LLM, and are able to mutate both via the provided Input instance.

use PhpLlm\LlmChain\Chain\Input;

use PhpLlm\LlmChain\Chain\InputProcessor;

use PhpLlm\LlmChain\Model\Message\AssistantMessage;

final class MyProcessor implements InputProcessor

{

public function __construct(private string $locale)

{

}

public function processInput(Input $input): void

{

// mutate options

$options = $input->getOptions();

$options['foo'] = 'bar';

$input->setOptions($options);

// mutate MessageBag

$input->messages->append(new AssistantMessage(sprintf('Please answer using the locale %s', $this->locale)));

}

}

OutputProcessor instances are called after the LLM has provided a response and can, in addition to options and messages, mutate or replace the given response:

use PhpLlm\LlmChain\Chain\Output;

use PhpLlm\LlmChain\Chain\OutputProcessor;

use PhpLlm\LlmChain\Model\Response\TextResponse; // namespace may differ between library versions

final class MyProcessor implements OutputProcessor

{

private const STOP_WORD = 'STOP'; // hypothetical marker, adjust to your use case

public function processOutput(Output $output): void

{

// mutate response

if (str_contains($output->response->getContent(), self::STOP_WORD)) {

$output->response = new TextResponse('Sorry, we were unable to find relevant information.');

}

}

}

Both Input and Output instances provide access to the LLM used by the Chain, but the chain itself is only provided if the processor implements the ChainAwareProcessor interface, which can be combined with the ChainAwareTrait:

use PhpLlm\LlmChain\Chain\ChainAwareProcessor;

use PhpLlm\LlmChain\Chain\ChainAwareTrait;

use PhpLlm\LlmChain\Chain\Output;

use PhpLlm\LlmChain\Chain\OutputProcessor;

use PhpLlm\LlmChain\Model\Message\AssistantMessage;

final class MyProcessor implements OutputProcessor, ChainAwareProcessor

{

use ChainAwareTrait;

public function processOutput(Output $out): void

{

// additional chain interaction

$response = $this->chain->call(...);

}

}

Contributions are always welcome, so feel free to join the development of this library. To get started, please read the contribution guidelines.


For testing multi-modal features, the repository contains binary media content with the following owners and licenses:

- tests/Fixture/image.jpg: Chris F., Creative Commons, see pexels.com
- tests/Fixture/audio.mp3: davidbain, Creative Commons, see freesound.org


Alternative AI tools for llm-chain

Similar Open Source Tools


LarAgent

LarAgent is a framework designed to simplify the creation and management of AI agents within Laravel projects. It offers an Eloquent-like syntax for creating and managing AI agents, Laravel-style artisan commands, flexible agent configuration, structured output handling, image input support, and extensibility. LarAgent supports multiple chat history storage options, custom tool creation, event system for agent interactions, multiple provider support, and can be used both in Laravel and standalone environments. The framework is constantly evolving to enhance developer experience, improve AI capabilities, enhance security and storage features, and enable advanced integrations like provider fallback system, Laravel Actions integration, and voice chat support.

IntelliNode

IntelliNode is a javascript module that integrates cutting-edge AI models like ChatGPT, LLaMA, WaveNet, Gemini, and Stable diffusion into projects. It offers functions for generating text, speech, and images, as well as semantic search, multi-model evaluation, and chatbot capabilities. The module provides a wrapper layer for low-level model access, a controller layer for unified input handling, and a function layer for abstract functionality tailored to various use cases.

sparkle

Sparkle is a tool that streamlines the process of building AI-driven features in applications using Large Language Models (LLMs). It guides users through creating and managing agents, defining tools, and interacting with LLM providers like OpenAI. Sparkle allows customization of LLM provider settings, model configurations, and provides a seamless integration with Sparkle Server for exposing agents via an OpenAI-compatible chat API endpoint.

UniChat

UniChat is a pipeline tool for creating online and offline chat-bots in Unity. It leverages Unity.Sentis and text vector embedding technology to enable offline mode text content search based on vector databases. The tool includes a chain toolkit for embedding LLM and Agent in games, along with middleware components for Text to Speech, Speech to Text, and Sub-classifier functionalities. UniChat also offers a tool for invoking tools based on ReActAgent workflow, allowing users to create personalized chat scenarios and character cards. The tool provides a comprehensive solution for designing flexible conversations in games while maintaining developer's ideas.

extractor

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

openai

An open-source client package that allows developers to easily integrate the power of OpenAI's state-of-the-art AI models into their Dart/Flutter applications. The library provides simple and intuitive methods for making requests to OpenAI's various APIs, including the GPT-3 language model, DALL-E image generation, and more. It is designed to be lightweight and easy to use, enabling developers to focus on building their applications without worrying about the complexities of dealing with HTTP requests. Note that this is an unofficial library as OpenAI does not have an official Dart library.

models.dev

Models.dev is an open-source database providing detailed specifications, pricing, and capabilities of various AI models. It serves as a centralized platform for accessing information on AI models, allowing users to contribute and utilize the data through an API. The repository contains data stored in TOML files, organized by provider and model, along with SVG logos. Users can contribute by adding new models following specific guidelines and submitting pull requests for validation. The project aims to maintain an up-to-date and comprehensive database of AI model information.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

js-genai

The Google Gen AI JavaScript SDK is an experimental SDK for TypeScript and JavaScript developers to build applications powered by Gemini. It supports both the Gemini Developer API and Vertex AI. The SDK is designed to work with Gemini 2.0 features. Users can access API features through the GoogleGenAI classes, which provide submodules for querying models, managing caches, creating chats, uploading files, and starting live sessions. The SDK also allows for function calling to interact with external systems. Users can find more samples in the GitHub samples directory.

LightRAG

LightRAG is a repository hosting the code for LightRAG, a system that supports seamless integration of custom knowledge graphs, Oracle Database 23ai, Neo4J for storage, and multiple file types. It includes features like entity deletion, batch insert, incremental insert, and graph visualization. LightRAG provides an API server implementation for RESTful API access to RAG operations, allowing users to interact with it through HTTP requests. The repository also includes evaluation scripts, code for reproducing results, and a comprehensive code structure.

langchainrb

Langchain.rb is a Ruby library that makes it easy to build LLM-powered applications. It provides a unified interface to a variety of LLMs, vector search databases, and other tools, making it easy to build and deploy RAG (Retrieval Augmented Generation) systems and assistants. Langchain.rb is open source and available under the MIT License.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output based on a Context-Free Grammar (CFG). It supports various programming languages like Python, Go, SQL, Math, JSON, and more. Users can define custom grammars using EBNF syntax. SynCode offers fast generation, seamless integration with HuggingFace Language Models, and the ability to sample with different decoding strategies.

gateway

Adaline Gateway is a fully local production-grade Super SDK that offers a unified interface for calling over 200+ LLMs. It is production-ready, supports batching, retries, caching, callbacks, and OpenTelemetry. Users can create custom plugins and providers for seamless integration with their infrastructure.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output with respect to defined Context-Free Grammar (CFG) rules. It supports general-purpose programming languages like Python, Go, SQL, JSON, and more, allowing users to define custom grammars using EBNF syntax. The tool compares favorably to other constrained decoders and offers features like fast grammar-guided generation, compatibility with HuggingFace Language Models, and the ability to work with various decoding strategies.

For similar tasks


NeoGPT

NeoGPT is an AI assistant that turns your local workspace into a productivity hub, driven from the command line. It supports seamless code execution through a Code Interpreter, multiple RAG techniques, vision models, and interaction with various language models. From the CLI, users can build a vector database, launch a Streamlit UI, and switch between LLM models. Magic commands in chat sessions let users reset the chat history, save conversations, export settings, and more. The project welcomes contributions from its community.

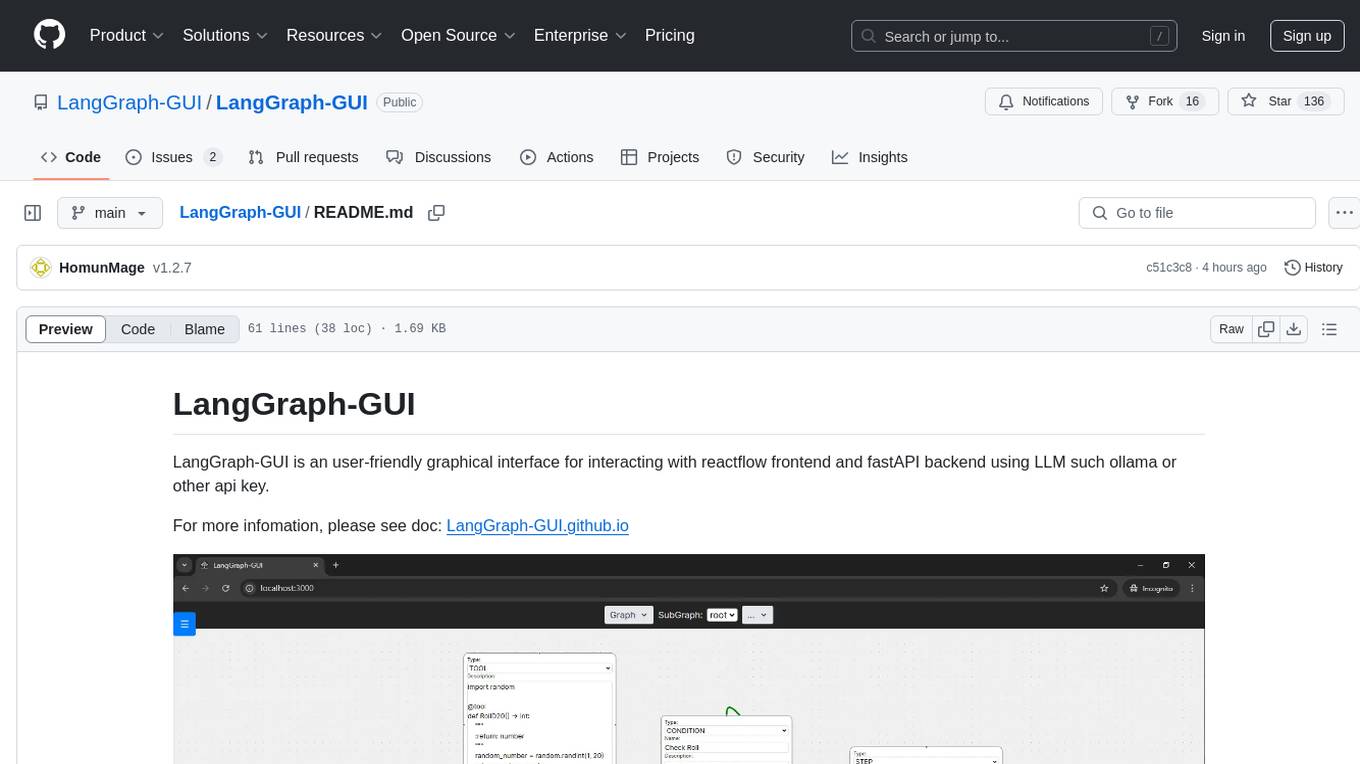

LangGraph-GUI

LangGraph-GUI is a user-friendly graphical interface, built on a React Flow frontend and a FastAPI backend, for working with LLMs such as those served by Ollama or accessed through another provider's API key. It offers a convenient way to visualize and interact with the data flow between language models and APIs, and it simplifies setting up the environment and accessing the application, making it easier to use language models in your projects.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. It currently provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM, and aims to provide enterprise-level infrastructure for any production LLM use case. Example use cases include:
* Setting LLM usage limits for users on different pricing tiers
* Tracking LLM usage per user and per organization
* Blocking or redacting requests that contain PII
* Improving LLM reliability with failovers, retries, and caching
* Distributing API keys with rate limits and cost limits for internal development and production use
* Distributing API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.