instructor-php

Structured data outputs with LLMs, in PHP. Designed for simplicity, transparency, and control.


Instructor for PHP is a library designed for structured data extraction in PHP, powered by Large Language Models (LLMs). It simplifies the process of extracting structured, validated data from unstructured text or chat sequences. Instructor enhances workflow by providing a response model, validation capabilities, and max retries for requests. It supports classes as response models and provides features like partial results, string input, extracting scalar and enum values, and specifying data models using PHP type hints or DocBlock comments. The library allows customization of validation and provides detailed event notifications during request processing. Instructor is compatible with PHP 8.2+ and leverages PHP reflection, Symfony components, and SaloonPHP for communication with LLM API providers.

README:

Structured data extraction in PHP, powered by LLMs. Designed for simplicity, transparency, and control.

Instructor is a library that allows you to extract structured, validated data from multiple types of inputs: text, images or OpenAI-style chat sequence arrays. It is powered by Large Language Models (LLMs).

Instructor simplifies LLM integration in PHP projects. It handles the complexity of extracting structured data from LLM outputs, so you can focus on building your application logic and iterate faster.

Instructor for PHP is inspired by the Instructor library for Python created by Jason Liu.

Here's a simple CLI demo app using Instructor to extract structured data from text:

- Official website https://instructorphp.com

- Docs website (Mintlify) https://docs.instructorphp.com

- Docs (Github Pages) https://cognesy.github.io/instructor-php/

Instructor introduces three key enhancements compared to direct API usage.

Response model: specify a PHP class to extract data into via the 'magic' of LLM chat completion. And that's it.

Instructor makes the code that extracts information from textual data less brittle by leveraging structured LLM responses.

Instructor helps you write simpler, easier to understand code: you no longer have to define lengthy function call definitions or write code for assigning returned JSON into target data objects.
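Because the response model is an ordinary class, its type hints already describe the shape of the data the LLM should return. The snippet below is a toy illustration of that idea: it uses PHP reflection to turn a class's public typed properties into a minimal JSON Schema array. The `schemaFor()` helper is hypothetical and only handles simple scalar types; it is a sketch, not Instructor's actual schema generator.

```php
<?php
declare(strict_types=1);

// Hypothetical helper, NOT part of Instructor's API: maps the public, typed
// properties of a plain PHP class to a minimal JSON Schema array.
function schemaFor(string $class): array
{
    $map = ['string' => 'string', 'int' => 'integer', 'float' => 'number', 'bool' => 'boolean', 'array' => 'array'];
    $properties = [];
    $required = [];
    foreach ((new ReflectionClass($class))->getProperties(ReflectionProperty::IS_PUBLIC) as $prop) {
        $type = $prop->getType();
        // This sketch only understands simple named types; others are skipped.
        if (!$type instanceof ReflectionNamedType || !isset($map[$type->getName()])) {
            continue;
        }
        $properties[$prop->getName()] = ['type' => $map[$type->getName()]];
        // Non-nullable properties are treated as required fields.
        if (!$type->allowsNull()) {
            $required[] = $prop->getName();
        }
    }
    return ['type' => 'object', 'properties' => $properties, 'required' => $required];
}

class Person {
    public string $name;
    public int $age;
}

echo json_encode(schemaFor(Person::class)), PHP_EOL;
// {"type":"object","properties":{"name":{"type":"string"},"age":{"type":"integer"}},"required":["name","age"]}
```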

Validation: the response model generated by the LLM can be automatically validated against a set of rules. Currently, Instructor supports only Symfony validation.

You can also provide a context object to use enhanced validator capabilities.
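To make the validation step concrete, here is a self-contained sketch of rule checking driven by property attributes. It deliberately does not use Symfony: the `NonNegative` attribute and the `validate()` function are hypothetical stand-ins for a constraint such as `#[Assert\PositiveOrZero]` and the validator that evaluates it. The point is that validation produces readable error messages, which is what a retry step can later send back to the LLM.

```php
<?php
declare(strict_types=1);

// Hypothetical constraint attribute standing in for Symfony's
// #[Assert\PositiveOrZero]; it is NOT the Symfony class.
#[Attribute(Attribute::TARGET_PROPERTY)]
final class NonNegative {}

// Returns a list of human-readable messages, one per violated constraint.
function validate(object $obj): array
{
    $errors = [];
    foreach ((new ReflectionObject($obj))->getProperties() as $prop) {
        if ($prop->getAttributes(NonNegative::class) === []) {
            continue; // no constraint on this property
        }
        $value = $prop->getValue($obj);
        if ($value < 0) {
            $errors[] = sprintf('%s: must be >= 0, got %d', $prop->getName(), $value);
        }
    }
    return $errors;
}

class Person {
    public string $name;

    #[NonNegative]
    public int $age;
}

$person = new Person();
$person->name = 'Jason';
$person->age = -28;
echo implode(PHP_EOL, validate($person)), PHP_EOL; // age: must be >= 0, got -28
```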

Max retries: you can set the number of retry attempts for requests.

Instructor will repeat requests in case of validation or deserialization errors up to the specified number of times, trying to get a valid response from the LLM.
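The retry behaviour can be pictured as a loop that calls the model, checks the result, and, on failure, appends the errors to the conversation before trying again. The sketch below assumes a hypothetical `$llm` callable (messages in, JSON string out) and a `$validate` callable returning error strings; the names, the feedback wording, and the choice to count `$maxRetries` as attempts after the first one are all illustrative assumptions, not Instructor's internals.

```php
<?php
declare(strict_types=1);

// Hypothetical retry loop (not Instructor's code). $llm: fn(array $messages): string
// returning JSON; $validate: fn(array $data): array of error strings.
function extractWithRetries(callable $llm, callable $validate, array $messages, int $maxRetries): array
{
    // One initial attempt plus up to $maxRetries retries (an assumption of this sketch).
    for ($attempt = 0; $attempt <= $maxRetries; $attempt++) {
        $json = $llm($messages);
        $data = json_decode($json, true);
        // A deserialization failure is treated like a validation error.
        $errors = is_array($data) ? $validate($data) : ['Response is not valid JSON'];
        if ($errors === []) {
            return $data;
        }
        // Feed the errors back so the model can self-correct on the next attempt.
        $messages[] = ['role' => 'assistant', 'content' => $json];
        $messages[] = ['role' => 'user', 'content' => 'Fix these errors: ' . implode('; ', $errors)];
    }
    throw new RuntimeException("No valid response after {$maxRetries} retries");
}

// Fake LLM: answers badly first, then corrects itself once it has seen feedback.
$fakeLlm = fn(array $messages): string => count($messages) === 1
    ? '{"name": "Jason", "age": -28}'
    : '{"name": "Jason", "age": 28}';

$validateAge = fn(array $d): array => ($d['age'] ?? -1) >= 0 ? [] : ['age must be >= 0'];

$result = extractWithRetries(
    $fakeLlm,
    $validateAge,
    [['role' => 'user', 'content' => 'His name is Jason, he is -28 years old.']],
    3,
);
echo $result['age'], PHP_EOL; // 28
```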

Instructor offers out-of-the-box support for the following LLM providers:

- AI21 / Jamba

- Anthropic

- Azure OpenAI

- Cerebras

- Cohere (v1 and v2)

- Deepseek

- Fireworks

- Google Gemini (native and OpenAI compatible)

- Groq

- HuggingFace

- Minimaxi

- Mistral

- Moonshot / Kimi

- Ollama (on localhost)

- OpenAI

- OpenRouter

- Perplexity

- Sambanova

- Together AI

- xAI / Grok

For usage examples, check the Hub section or the examples directory in the code repository.

You can install Instructor via Composer:

```
composer require cognesy/instructor-php
```

This is a simple example demonstrating how Instructor retrieves structured information from provided text (or a chat message sequence).

Response model class is a plain PHP class with typehints specifying the types of fields of the object.

```php
use Cognesy\Instructor\StructuredOutput;

require __DIR__ . '/vendor/autoload.php'; // Composer autoloader (script in project root)

// Step 0: Create .env file in your project root:
// OPENAI_API_KEY=your_api_key

// Step 1: Define target data structure(s)
class Person {
    public string $name;
    public int $age;
}

// Step 2: Provide content to process
$text = "His name is Jason and he is 28 years old.";

// Step 3: Use Instructor to run LLM inference
$person = (new StructuredOutput)
    ->withResponseClass(Person::class)
    ->withMessages($text)
    ->get();

// Step 4: Work with structured response data
assert($person instanceof Person); // true
assert($person->name === 'Jason'); // true
assert($person->age === 28); // true

echo $person->name; // Jason
echo $person->age; // 28

var_dump($person);
// Person {
//   name: "Jason",
//   age: 28
// }
```

NOTE: Instructor supports classes / objects as response models. If you want to extract simple types or enums, you need to wrap them in the Scalar adapter; see Extracting Scalar Values in the documentation.
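Behind `->get()`, the JSON returned by the LLM has to be turned into a `Person` instance; this is the "assigning returned JSON into target data objects" chore that Instructor takes off your hands, and a failure here is the kind of deserialization error that can trigger a retry. The `hydrate()` function below is a hypothetical, minimal sketch of that step, not the library's deserializer: it relies on `strict_types` so that a wrongly typed value raises a `TypeError` instead of being silently coerced.

```php
<?php
declare(strict_types=1);

// Hypothetical hydrator (not Instructor's implementation): decodes the LLM's
// JSON and assigns each public property of the target class.
function hydrate(string $class, string $json): object
{
    $data = json_decode($json, true, 512, JSON_THROW_ON_ERROR);
    if (!is_array($data)) {
        throw new UnexpectedValueException('Expected a JSON object');
    }
    $object = new $class();
    foreach ((new ReflectionClass($class))->getProperties(ReflectionProperty::IS_PUBLIC) as $prop) {
        $name = $prop->getName();
        if (!array_key_exists($name, $data)) {
            throw new UnexpectedValueException("Missing field: {$name}");
        }
        // Because this file uses strict_types, assigning a value of the wrong
        // type (e.g. the string "28" to an int property) throws a TypeError.
        $object->$name = $data[$name];
    }
    return $object;
}

class Person {
    public string $name;
    public int $age;
}

$person = hydrate(Person::class, '{"name": "Jason", "age": 28}');
echo $person->name, ' ', $person->age, PHP_EOL; // Jason 28
```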

Instructor validates results of LLM response against validation rules specified in your data model.

For further details on available validation rules, check Symfony Validation constraints.

```php
use Cognesy\Instructor\StructuredOutput;
use Symfony\Component\Validator\Constraints as Assert;

class Person {
    public string $name;

    #[Assert\PositiveOrZero]
    public int $age;
}

$text = "His name is Jason, he is -28 years old.";

$person = (new StructuredOutput)
    ->withResponseClass(Person::class)
    ->with(
        messages: [['role' => 'user', 'content' => $text]],
    )
    ->get();

// if the resulting object does not validate, Instructor throws an exception
```

If the maxRetries parameter is provided and the LLM response does not meet the validation criteria, Instructor will make subsequent inference attempts until the results meet the requirements or maxRetries is reached.

Instructor uses validation errors to inform the LLM about the problems identified in the response, so that the LLM can try to self-correct in the next attempt.

```php
use Cognesy\Instructor\StructuredOutput;
use Symfony\Component\Validator\Constraints as Assert;

class Person {
    #[Assert\Length(min: 3)]
    public string $name;

    #[Assert\PositiveOrZero]
    public int $age;
}

$text = "His name is JX, aka Jason, he is -28 years old.";

$person = (new StructuredOutput)
    ->with(
        messages: [['role' => 'user', 'content' => $text]],
        responseModel: Person::class,
        maxRetries: 3,
    )
    ->get();

// if all LLM's attempts to self-correct the results fail, Instructor throws an exception
```

Instructor supports multiple output modes to allow working with various models depending on their capabilities.

- OutputMode::Json - generate structured output via the LLM's native JSON generation

- OutputMode::JsonSchema - use the LLM's strict JSON Schema mode to enforce the JSON Schema

- OutputMode::Tools - use the tool calling API to get the LLM to follow the provided schema

- OutputMode::MdJson - use prompting to generate structured output; a fallback for models that do not support JSON generation or tool calling

Additionally, you can use OutputMode::Text to get the LLM to generate text output without any structured data extraction.

- OutputMode::Text - generate text output

- OutputMode::Unrestricted - generate unrestricted output based on inputs provided by the user (with no enforcement of a specific output format)
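OutputMode::MdJson relies on prompting rather than a native JSON or tool-calling API, so the structured part has to be recovered from free-form text. The sketch below assumes the model was asked to answer with a json-tagged markdown code block and falls back to the outermost pair of braces otherwise. The `extractJsonFromMarkdown()` name and the fallback strategy are assumptions for illustration; this is not Instructor's parser. The regex writes the fence as `` `{3} `` so the snippet contains no literal triple backticks.

```php
<?php
declare(strict_types=1);

// Hypothetical helper (not Instructor's code): recovers a JSON object from a
// free-form reply, preferring a fenced json code block.
function extractJsonFromMarkdown(string $reply): array
{
    // `{3} matches the three-backtick fence; the s flag lets . span newlines.
    if (preg_match('/`{3}(?:json)?\s*(\{.*?\})\s*`{3}/s', $reply, $m) === 1) {
        $json = $m[1];
    } else {
        // Fallback: take everything between the first '{' and the last '}'.
        $start = strpos($reply, '{');
        $end = strrpos($reply, '}');
        if ($start === false || $end === false || $end < $start) {
            throw new UnexpectedValueException('No JSON object found in reply');
        }
        $json = substr($reply, $start, $end - $start + 1);
    }
    return json_decode($json, true, 512, JSON_THROW_ON_ERROR);
}

$fence = str_repeat('`', 3); // builds the markdown fence without a literal one
$reply = "Sure! Here is the data:\n{$fence}json\n{\"name\": \"Jason\", \"age\": 28}\n{$fence}\nLet me know if you need more.";
$data = extractJsonFromMarkdown($reply);
echo $data['name'], ' ', $data['age'], PHP_EOL; // Jason 28
```

Parsing is only half of the job: the decoded array still has to be deserialized and validated like the output of any other mode.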

Check out the documentation website for more details and examples of how to use Instructor for PHP.

- Get structured responses from LLMs without writing boilerplate code

- Validation of returned data

- Automated retries in case of errors when LLM responds with invalid data

- Integrate LLM support into your existing PHP code with minimal friction - no framework, no extensive code changes

- Framework agnostic - use it with Laravel, Symfony, your custom framework, or - with no framework at all

- Supports multiple extraction modes to allow working with various models depending on their capabilities:

  - OutputMode::Json - use response_format to get the LLM to follow the provided JSON Schema

  - OutputMode::JsonSchema - use strict JSON Schema mode to get the LLM to follow the provided JSON Schema

  - OutputMode::Tools - use the tool calling API to get the LLM to follow the provided JSON Schema

  - OutputMode::MdJson - extract via prompting that nudges the LLM to generate output matching the provided JSON Schema

- Process various types of input data: text, series of chat messages or images using the same, simple API

- 'Structured-to-structured' processing - provide object or array as an input and get object with the results of inference back

- Demonstrate examples to improve the quality of inference

- Define the response data model the way you want: type-hinted classes, JSON Schema arrays, or dynamic data shapes with the Structure class

- Customize prompts and retry prompts

- Use attributes or PHP DocBlocks to provide additional instructions for LLM

- Customize response model processing by providing your own implementation of schema, deserialization, validation and transformation interfaces

- Supports both synchronous and streaming responses

- Get partial updates & stream completed sequence items

- Get detailed insight into internal processing via events

- Debug mode to see the details of LLM API requests and responses

- Easily switch between LLM providers

- Support for most popular LLM APIs (incl. OpenAI, Gemini, Anthropic, Cohere, Azure, Groq, Mistral, Fireworks AI, Together AI)

- OpenRouter support - access to 100+ language models

- Use local models with Ollama

- Developer-friendly LLM context caching for reduced costs and faster inference (for Anthropic models)

- Developer-friendly data extraction from images (for OpenAI, Anthropic and Gemini models)

- Generate vector embeddings using APIs of multiple supported LLM providers

- Learn more from growing documentation and 100+ cookbooks

Check out implementations in other languages below:

- Python (original)

- Javascript (port)

- Elixir (port)

- Ruby (port)

If you want to port Instructor to another language, please reach out to us on Twitter; we'd love to help you get started!

This repository is a monorepo containing all Instructor's components (required and optional). It hosts all that you need to work with LLMs via Instructor.

Individual components are also distributed as standalone packages that can be used independently.

Links to read-only repositories of the standalone package distributions:

- instructor-addons - extra capabilities and common LLM-related problem solutions

- instructor-aux - external tools and integrations, e.g. used by Instructor examples

- instructor-config - configuration management for Instructor

- instructor-evals - LLM output evaluation tools

- instructor-events - events and event listeners for Instructor

- instructor-http-client - easily switch between underlying HTTP client libraries (out-of-the-box support for Guzzle, Symfony, Laravel)

- instructor-hub - CLI tool for browsing and running Instructor examples

- instructor-messages - chat message sequence handling for Instructor

- instructor-polyglot - use a single API for inference and embeddings across most LLM providers and easily switch between them (e.g., develop on Ollama, switch to Groq in production)

- instructor-schema - object schema handling for Instructor

- instructor-setup - CLI tool for publishing Instructor config files in your app

- instructor-struct - get dev friendly structured outputs from LLMs

- instructor-tell - CLI tool for executing LLM prompts in your terminal

- instructor-templates - text and chat template tools used by Instructor, supporting Twig, Blade and ArrowPipe formats

- instructor-utils - common utility classes used by Instructor packages

NOTE: If you are just starting to use Instructor, I recommend using the instructor-php package. It contains all the required components and is the easiest way to get started with the library.

This project is licensed under the terms of the MIT License.

If you have any questions or need help, please reach out to me on Twitter or GitHub.

If you want to help, check out some of the issues. All contributions are welcome - code improvements, documentation, bug reports, blog posts / articles, or new cookbooks and application examples.


Alternative AI tools for instructor-php

Similar Open Source Tools


poml

POML (Prompt Orchestration Markup Language) is a novel markup language designed to bring structure, maintainability, and versatility to advanced prompt engineering for Large Language Models (LLMs). It addresses common challenges in prompt development, such as lack of structure, complex data integration, format sensitivity, and inadequate tooling. POML provides a systematic way to organize prompt components, integrate diverse data types seamlessly, and manage presentation variations, empowering developers to create more sophisticated and reliable LLM applications.

fuse-med-ml

FuseMedML is a Python framework designed to accelerate machine learning-based discovery in the medical field by promoting code reuse. It provides a flexible design concept where data is stored in a nested dictionary, allowing easy handling of multi-modality information. The framework includes components for creating custom models, loss functions, metrics, and data processing operators. Additionally, FuseMedML offers 'batteries included' key components such as fuse.data for data processing, fuse.eval for model evaluation, and fuse.dl for reusable deep learning components. It supports PyTorch and PyTorch Lightning libraries and encourages the creation of domain extensions for specific medical domains.

premsql

PremSQL is an open-source library designed to help developers create secure, fully local Text-to-SQL solutions using small language models. It provides essential tools for building and deploying end-to-end Text-to-SQL pipelines with customizable components, ideal for secure, autonomous AI-powered data analysis. The library offers features like Local-First approach, Customizable Datasets, Robust Executors and Evaluators, Advanced Generators, Error Handling and Self-Correction, Fine-Tuning Support, and End-to-End Pipelines. Users can fine-tune models, generate SQL queries from natural language inputs, handle errors, and evaluate model performance against predefined metrics. PremSQL is extensible for customization and private data usage.

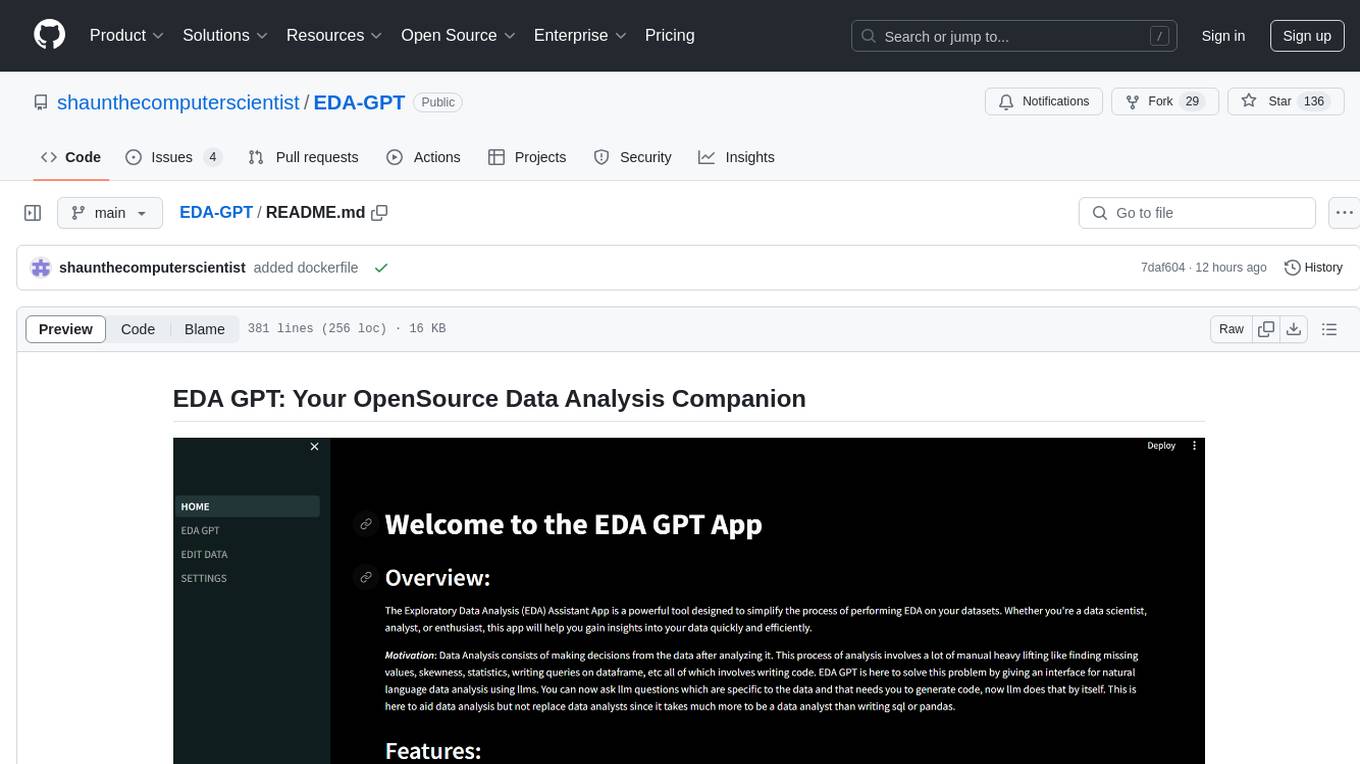

EDA-GPT

EDA GPT is an open-source data analysis companion that offers a comprehensive solution for structured and unstructured data analysis. It streamlines the data analysis process, empowering users to explore, visualize, and gain insights from their data. EDA GPT supports analyzing structured data in various formats like CSV, XLSX, and SQLite, generating graphs, and conducting in-depth analysis of unstructured data such as PDFs and images. It provides a user-friendly interface, powerful features, and capabilities like comparing performance with other tools, analyzing large language models, multimodal search, data cleaning, and editing. The tool is optimized for maximal parallel processing, searching internet and documents, and creating analysis reports from structured and unstructured data.

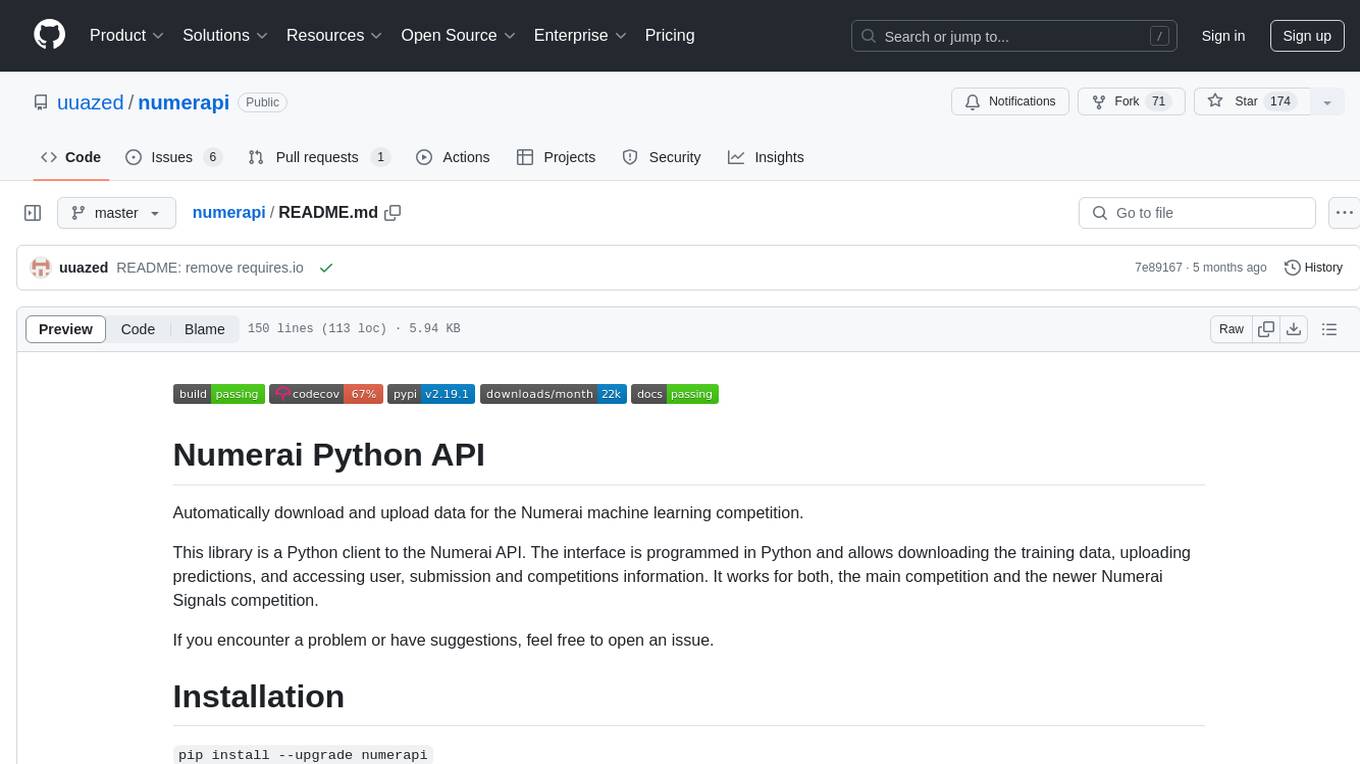

numerapi

Numerapi is a Python client to the Numerai API that allows users to automatically download and upload data for the Numerai machine learning competition. It provides functionalities for downloading training data, uploading predictions, and accessing user, submission, and competitions information for both the main competition and Numerai Signals competition. Users can interact with the API using Python modules or command line interface. Tokens are required for certain actions like uploading predictions or staking, which can be obtained from Numer.ai account settings. The tool also supports features like checking new rounds, getting leaderboards, and managing stakes.

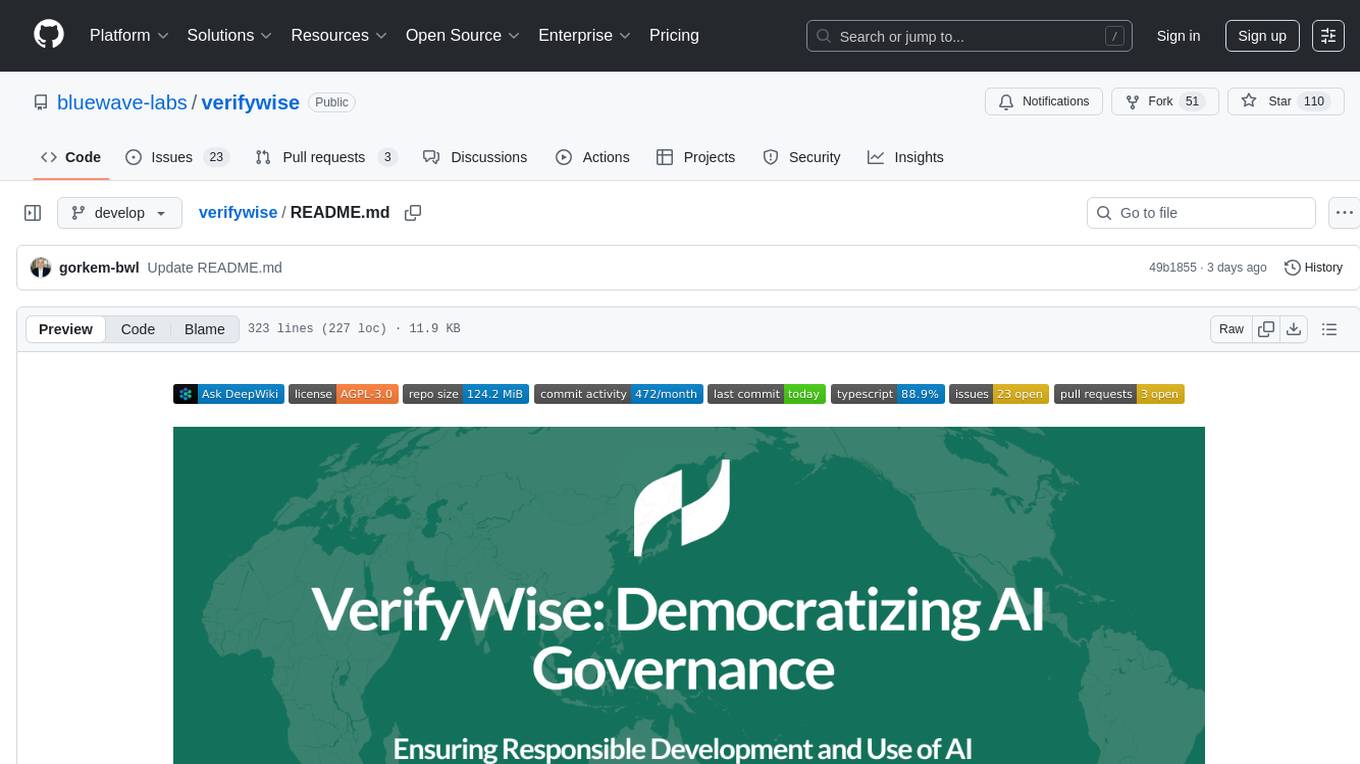

verifywise

VerifyWise is an open-source AI governance platform designed to help businesses harness the power of AI safely and responsibly. The platform ensures compliance and robust AI management without compromising on security. It offers additional products like MaskWise for data redaction, EvalWise for AI model evaluation, and FlagWise for security threat monitoring. VerifyWise simplifies AI governance for organizations, aiding in risk management, regulatory compliance, and promoting responsible AI practices. It features options for on-premises or private cloud hosting, open-source with AGPLv3 license, AI-generated answers for compliance audits, source code transparency, Docker deployment, user registration, role-based access control, and various AI governance tools like risk management, bias & fairness checks, evidence center, AI trust center, and more.

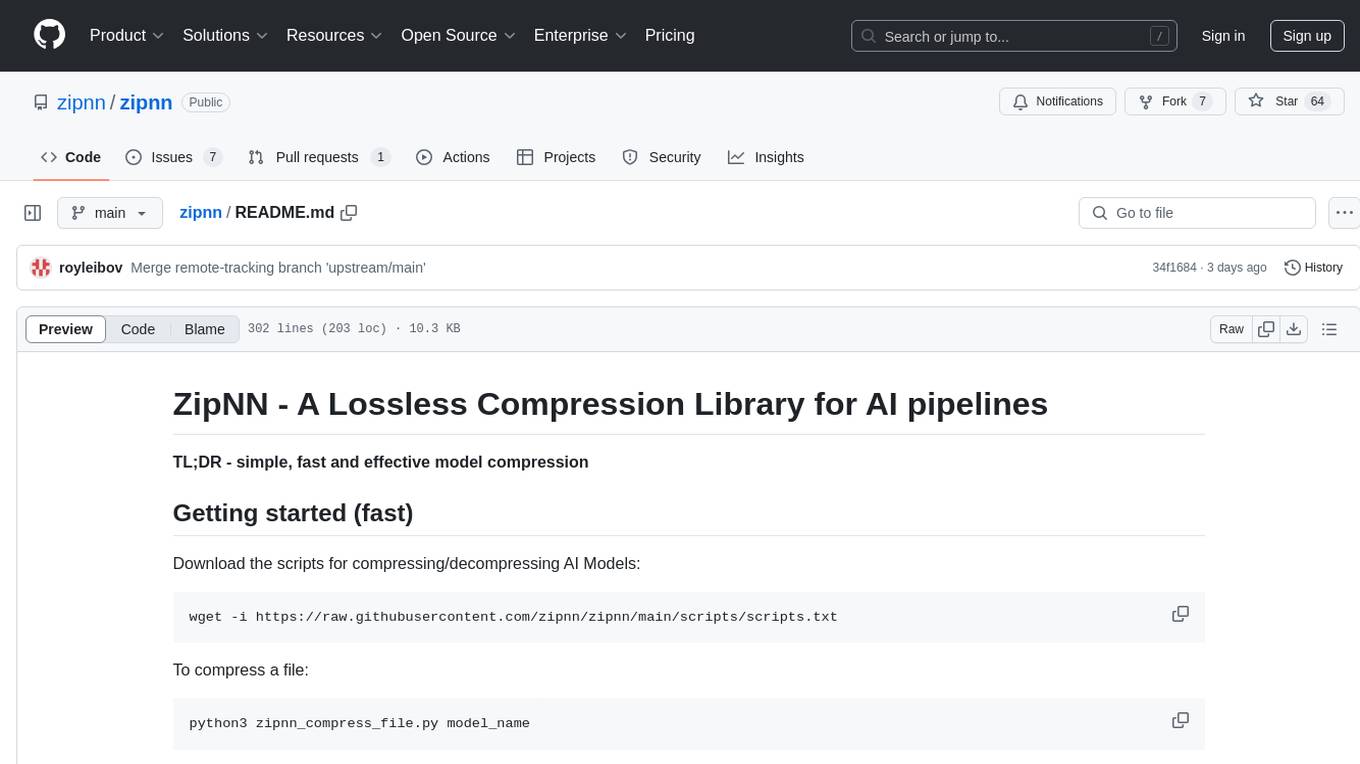

zipnn

ZipNN is a lossless and near-lossless compression library optimized for numbers/tensors in the Foundation Models environment. It automatically prepares data for compression based on its type, allowing users to focus on core tasks without worrying about compression complexities. The library delivers effective compression techniques for different data types and structures, achieving high compression ratios and rates. ZipNN supports various compression methods like ZSTD, lz4, and snappy, and provides ready-made scripts for file compression/decompression. Users can also manually import the package to compress and decompress data. The library offers advanced configuration options for customization and validation tests for different input and compression types.

FunClip

FunClip is an open-source, locally deployable automated video editing tool that utilizes the FunASR Paraformer series models from Alibaba DAMO Academy for speech recognition in videos. Users can select text segments or speakers from the recognition results and click the clip button to obtain the corresponding video segments. FunClip integrates advanced features such as the Paraformer-Large model for accurate Chinese ASR, SeACo-Paraformer for customized hotword recognition, CAM++ speaker recognition model, Gradio interactive interface for easy usage, support for multiple free edits with automatic SRT subtitles generation, and segment-specific SRT subtitles.

FunClip

FunClip is an open-source, locally deployed automated video clipping tool that leverages Alibaba TONGYI speech lab's FunASR Paraformer series models for speech recognition on videos. Users can select text segments or speakers from recognition results to obtain corresponding video clips. It integrates industrial-grade models for accurate predictions and offers hotword customization and speaker recognition features. The tool is user-friendly with Gradio interaction, supporting multi-segment clipping and providing full video and target segment subtitles. FunClip is suitable for users looking to automate video clipping tasks with advanced AI capabilities.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

TaskWeaver

TaskWeaver is a code-first agent framework designed for planning and executing data analytics tasks. It interprets user requests through code snippets, coordinates various plugins to execute tasks in a stateful manner, and preserves both chat history and code execution history. It supports rich data structures, customized algorithms, domain-specific knowledge incorporation, stateful execution, code verification, easy debugging, security considerations, and easy extension. TaskWeaver is easy to use with CLI and WebUI support, and it can be integrated as a library. It offers detailed documentation, demo examples, and citation guidelines.

llm-on-ray

LLM-on-Ray is a comprehensive solution for building, customizing, and deploying Large Language Models (LLMs). It simplifies complex processes into manageable steps by leveraging the power of Ray for distributed computing. The tool supports pretraining, finetuning, and serving LLMs across various hardware setups, incorporating industry and Intel optimizations for performance. It offers modular workflows with intuitive configurations, robust fault tolerance, and scalability. Additionally, it provides an Interactive Web UI for enhanced usability, including a chatbot application for testing and refining models.

AI-Office-Translator

AI-Office-Translator is a free, fully localized, user-friendly translation tool that helps you translate Office files (Word, PowerPoint, and Excel) between different languages. It supports .docx, .pptx, and .xlsx files and allows translation between English, Chinese, and Japanese. Users can run the tool after installing CUDA, downloading Ollama dependencies and models, setting up a virtual environment (optional), and installing requirements. The tool provides a UI where users can select languages, models, upload files for translation, start translation, and download translated files. It also supports an online mode with API key integration. The software is open-source under GPL-3.0 license and only provides AI translation services, with users expected to engage in legal translation activities.

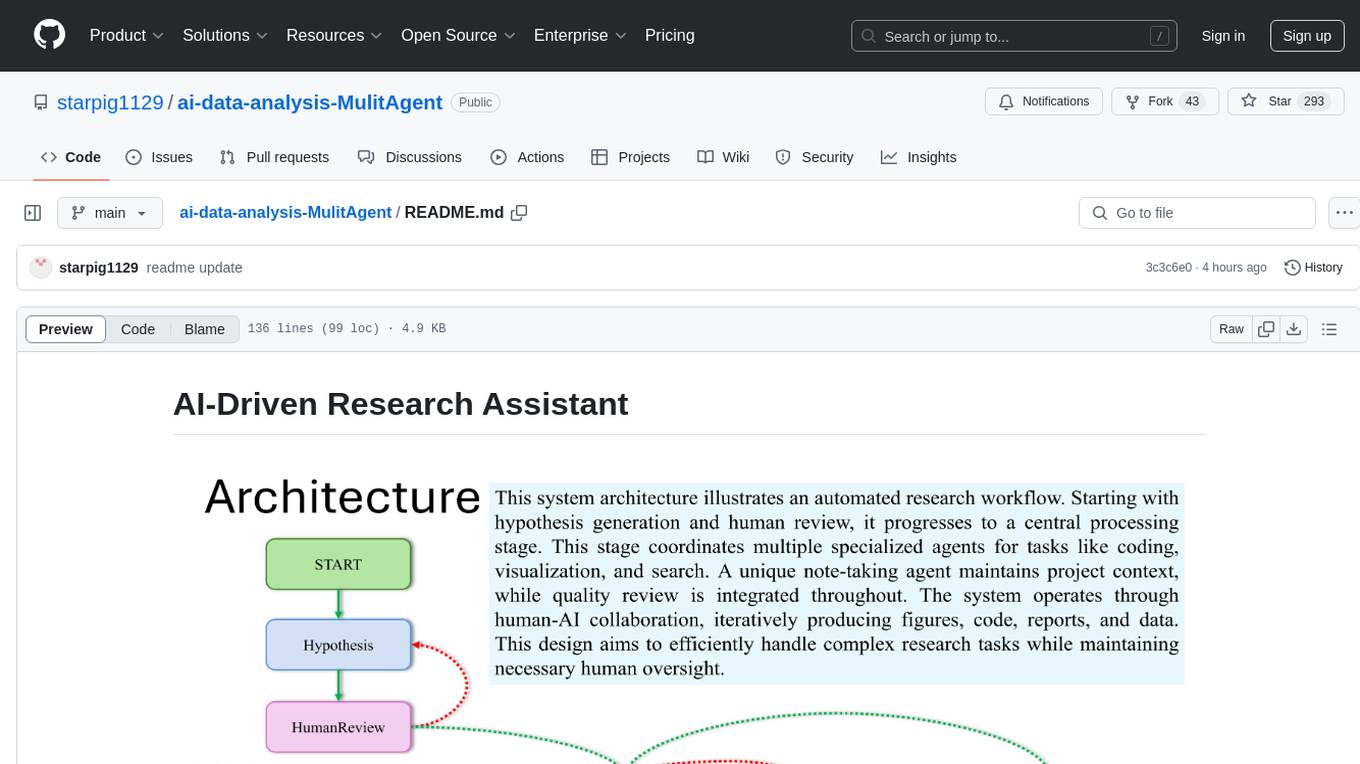

ai-data-analysis-MulitAgent

AI-Driven Research Assistant is an advanced AI-powered system utilizing specialized agents for data analysis, visualization, and report generation. It integrates LangChain, OpenAI's GPT models, and LangGraph for complex research processes. Key features include hypothesis generation, data processing, web search, code generation, and report writing. The system's unique Note Taker agent maintains project state, reducing overhead and improving context retention. System requirements include Python 3.10+ and Jupyter Notebook environment. Installation involves cloning the repository, setting up a Conda virtual environment, installing dependencies, and configuring environment variables. Usage instructions include setting data, running Jupyter Notebook, customizing research tasks, and viewing results. Main components include agents for hypothesis generation, process supervision, visualization, code writing, search, report writing, quality review, and note-taking. Workflow involves hypothesis generation, processing, quality review, and revision. Customization is possible by modifying agent creation and workflow definition. Current issues include OpenAI errors, NoteTaker efficiency, runtime optimization, and refiner improvement. Contributions via pull requests are welcome under the MIT License.

For similar tasks


cocoindex

CocoIndex is the world's first open-source engine that supports both custom transformation logic and incremental updates specialized for data indexing. Users declare the transformation, CocoIndex creates & maintains an index, and keeps the derived index up to date based on source update, with minimal computation and changes. It provides a Python library for data indexing with features like text embedding, code embedding, PDF parsing, and more. The tool is designed to simplify the process of indexing data for semantic search and structured information extraction.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.