AI4U

AI4U is a plugin that allows you to use the Godot Game Engine to specify agents with reinforcement learning. Non-Player Characters (NPCs) in games can be designed using ready-made components.

Stars: 63

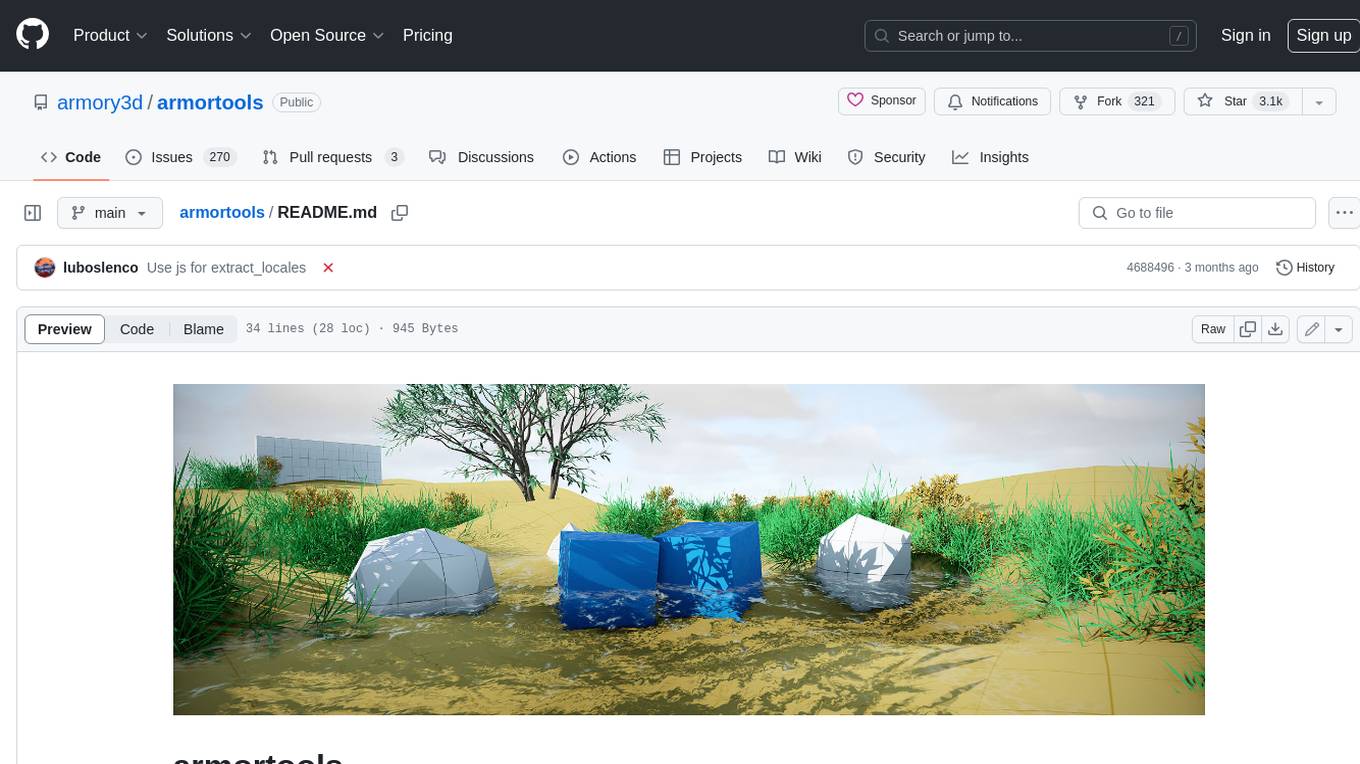

AI4U is a tool that provides a framework for modeling virtual reality and game environments. It offers an alternative approach to modeling Non-Player Characters (NPCs) in the Godot Game Engine. AI4U defines an agent living in an environment and interacting with it through sensors and actuators. Sensors provide data to the agent's brain, while actuators send actions from the agent to the environment. The brain processes the sensor data and makes decisions, selecting an action at each time step. AI4U can also be used in other situations, such as modeling environments for artificial intelligence experiments.

README:

AI4U tool

AI4U is an agent framework for modeling virtual reality and game environments. This repository holds the reference implementation for the Godot Game Engine.

The Godot version of AI4U takes advantage of the Godot architecture and facilitates agent specification by means of an agent abstraction. In Godot, AI4U provides an alternative approach to modeling Non-Player Characters (NPCs). Developers can also apply the tool in other situations, for example to model environments for artificial intelligence experiments.

The agent abstraction defines an agent living in an environment and interacting with that environment by means of sensors and actuators, so an NPC specification is a kind of agent specification. An agent's components are sensors, actuators, events, reward functions, and a brain. Sensors and actuators are the interface between agents and environments: a sensor provides data to the agent's brain, while an actuator sends actions from the agent to the environment. The brain is a script that processes the sensors' data and makes a decision, selecting an action at each time step.
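The sensor, brain, actuator, and reward roles described above can be illustrated with a small, engine-independent sketch. This is not the AI4U API: every class and function name below (`Environment`, `PositionSensor`, `MoveActuator`, `GreedyBrain`, `reward_function`, `run_episode`) is hypothetical, and the one-dimensional corridor is only a stand-in for a Godot scene.

```python
# Hypothetical names throughout; this sketch only mirrors the roles
# described in the text and does not use the AI4U API.


class Environment:
    """A 1-D corridor: the agent starts at 0 and must reach `goal`."""

    def __init__(self, goal: int = 3) -> None:
        self.goal = goal
        self.position = 0

    def done(self) -> bool:
        return self.position == self.goal


class PositionSensor:
    """Sensor: turns environment state into data for the brain."""

    def read(self, env: Environment) -> dict:
        return {"distance_to_goal": env.goal - env.position}


class MoveActuator:
    """Actuator: applies the brain's action (-1 or +1) to the environment."""

    def apply(self, env: Environment, action: int) -> None:
        env.position += action


def reward_function(env: Environment) -> float:
    """Reward: +10 on reaching the goal, -1 for every other step."""
    return 10.0 if env.done() else -1.0


class GreedyBrain:
    """Brain: picks one action per time step from the sensor data."""

    def decide(self, observation: dict) -> int:
        return 1 if observation["distance_to_goal"] > 0 else -1


def run_episode(env, sensor, actuator, brain, max_steps: int = 10):
    """Run the sense -> decide -> act loop and return (steps, total reward)."""
    total_reward = 0.0
    steps = 0
    while not env.done() and steps < max_steps:
        observation = sensor.read(env)      # sensor feeds the brain
        action = brain.decide(observation)  # brain selects an action
        actuator.apply(env, action)         # actuator changes the environment
        total_reward += reward_function(env)
        steps += 1
    return steps, total_reward


if __name__ == "__main__":
    print(run_episode(Environment(goal=3), PositionSensor(), MoveActuator(), GreedyBrain()))
```

In a reinforcement learning setup, the hand-written `GreedyBrain` would be replaced by a learned policy, while the sensor, actuator, and reward pieces keep the same roles.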

There are two components required to run AI4U in a new project: Python scripts and C# scripts.

To install the stable version of AI4U, run the command:

pip install ai4u

Next, download the latest AI4U release here. Unzip this file and place it in the main directory of your Godot project.

The Python component of AI4U can be installed using a local pip package. To install it, navigate to the directory pyplugin/ai4upy/ai4u and run the command:

pip install -e .

Because this is an editable install, the installed package reflects the latest modifications in your local copy of the AI4U repository.

Clone the repository and copy the directory addons/ai4u to your project.

- Godot 4.2.2 Mono Version.

- Python 3.12.

- Microsoft.ML.OnnxRuntime.

- Gymnasium.

- Tested in Windows 11 and Ubuntu 24.04.
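The Python side of the list above can be checked with a short script. This is a minimal sketch using only the standard library; the helper names `check_python` and `installed_version` are hypothetical, the distribution names `ai4u` and `gymnasium` are taken from the install command and the requirements list, and treating Python 3.12 as a minimum version is an assumption rather than something the README states.

```python
import sys
from importlib import metadata
from typing import Optional, Tuple


def check_python(required: Tuple[int, int] = (3, 12)) -> bool:
    """Return True if the running interpreter is at least `required`.

    Assumption: the listed Python 3.12 is treated as a minimum version.
    """
    return sys.version_info[:2] >= required


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if absent."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("Python OK:", check_python())
    for name in ("ai4u", "gymnasium"):
        print(name, "->", installed_version(name) or "not installed")
```

The Godot Mono build and the Microsoft.ML.OnnxRuntime package belong to the C# side of the project and are not covered by this check.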

The minimum recommended hardware for AI4U is a computer with at least a GeForce GTX 1050 Ti (4GB VRAM), 8GB of RAM, and at least 20GB of SSD storage. The memory requirement can increase significantly if the agent's sensors use complex inputs (such as images) and if algorithms like Soft Actor-Critic (SAC) and DQN are employed. For more demanding use cases, such as SAC with an image sensor, a computer with at least 24GB of RAM and a high-end GPU is necessary. For games, we recommend modest sensor configurations, such as moderate use of RayCasting.
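One reason image sensors combined with SAC or DQN raise the memory requirement is that these off-policy algorithms usually keep a replay buffer of past observations. The rough estimate below is a sketch under stated assumptions: the function `replay_buffer_bytes` is hypothetical, the buffer sizes and observation shapes are illustrative numbers rather than AI4U defaults, and real libraries add overhead for actions, rewards, and bookkeeping.

```python
from typing import Sequence


def replay_buffer_bytes(
    capacity: int,
    obs_shape: Sequence[int],
    bytes_per_value: int = 1,
    store_next_obs: bool = True,
) -> int:
    """Estimate the bytes needed to store observations in a replay buffer.

    capacity        -- number of stored transitions (illustrative value)
    obs_shape       -- shape of one observation, e.g. (84, 84, 3) for an image
    bytes_per_value -- 1 for uint8 pixels, 4 for float32 values
    store_next_obs  -- many buffers store both obs and next_obs per transition
    """
    values_per_obs = 1
    for dim in obs_shape:
        values_per_obs *= dim
    copies = 2 if store_next_obs else 1
    return capacity * values_per_obs * bytes_per_value * copies


if __name__ == "__main__":
    gib = 2 ** 30
    image = replay_buffer_bytes(100_000, (84, 84, 3))             # uint8 image sensor
    rays = replay_buffer_bytes(100_000, (16,), bytes_per_value=4)  # 16 float32 ray distances
    print(f"image sensor: {image / gib:.2f} GiB")
    print(f"ray sensor:   {rays / gib:.4f} GiB")
```

Under these assumed numbers the image buffer is several hundred times larger than the ray-based one, which is consistent with the recommendation to prefer modest sensor configurations for games.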

Here we will include AI4U demo projects that you can use for experimentation.

An experimental version of AI4U that does not depend on Python is available here.

AI4U is currently maintained by Gilzamir Gomes, Creto A. Vidal, Joaquim B. Cavalcante-Neto, and Yuri Nogueira.

Important Note: We do not provide technical support or consulting, and we do not answer personal questions by email.

How To Contribute

For anyone interested in making AI4U better, there is still some documentation that needs to be done. If you want to contribute, please read the CONTRIBUTING.md guide first.

Acknowledgments

AI4U was created in the CRab (Computer Graphics, Virtual Reality and Animations) Labs at UFC (Universidade Federal do Ceará).

Alternative AI tools for AI4U

Similar Open Source Tools

godot_rl_agents

Godot RL Agents is an open-source package that facilitates the integration of Machine Learning algorithms with games created in the Godot Engine. It provides interfaces for popular RL frameworks, support for memory-based agents, 2D and 3D games, AI sensors, and is licensed under MIT. Users can train agents in the Godot editor, create custom environments, export trained agents in ONNX format, and utilize advanced features like different RL training frameworks.

skyeye

SkyEye is an AI-powered Ground Controlled Intercept (GCI) bot designed for the flight simulator Digital Combat Simulator (DCS). It serves as an advanced replacement for the in-game E-2, E-3, and A-50 AI aircraft, offering modern voice recognition, natural-sounding voices, real-world brevity and procedures, a wide range of commands, and intelligent battlespace monitoring. The tool uses Speech-To-Text and Text-To-Speech technology, can run locally or on a cloud server, and is production-ready software used by various DCS communities.

HybridAGI

HybridAGI is the first Programmable LLM-based Autonomous Agent that lets you program its behavior using a **graph-based prompt programming** approach. This state-of-the-art feature allows the AGI to efficiently use any tool while controlling the long-term behavior of the agent. Become the _first Prompt Programmers in history_ ; be a part of the AI revolution one node at a time! **Disclaimer: We are currently in the process of upgrading the codebase to integrate DSPy**

lumigator

Lumigator is an open-source platform developed by Mozilla.ai to help users select the most suitable language model for their specific needs. It supports the evaluation of summarization tasks using sequence-to-sequence models such as BART and BERT, as well as causal models like GPT and Mistral. The platform aims to make model selection transparent, efficient, and empowering by providing a framework for comparing LLMs using task-specific metrics to evaluate how well a model fits a project's needs. Lumigator is in the early stages of development and plans to expand support to additional machine learning tasks and use cases in the future.

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

atomic_agents

Atomic Agents is a modular and extensible framework designed for creating powerful applications. It follows the principles of Atomic Design, emphasizing small and single-purpose components. Leveraging Pydantic for data validation and serialization, the framework offers a set of tools and agents that can be combined to build AI applications. It depends on the Instructor package and supports various APIs like OpenAI, Cohere, Anthropic, and Gemini. Atomic Agents is suitable for developers looking to create AI agents with a focus on modularity and flexibility.

agent-os

The Agent OS is an experimental framework and runtime to build sophisticated, long running, and self-coding AI agents. We believe that the most important super-power of AI agents is to write and execute their own code to interact with the world. But for that to work, they need to run in a suitable environment—a place designed to be inhabited by agents. The Agent OS is designed from the ground up to function as a long-term computing substrate for these kinds of self-evolving agents.

magic

Magic Cloud is a software development automation platform based on AI, Low-Code, and No-Code. It allows dynamic code creation and orchestration using Hyperlambda, generative AI, and meta programming. The platform includes features like CRUD generation, No-Code AI, Hyperlambda programming language, AI agents creation, and various components for software development. Magic is suitable for backend development, AI-related tasks, and creating AI chatbots. It offers high-level programming capabilities, productivity gains, and reduced technical debt.

Simulator-Controller

Simulator Controller is a modular administration and controller application for Sim Racing, featuring a comprehensive plugin automation framework for external controller hardware. It includes voice chat capable Assistants like Virtual Race Engineer, Race Strategist, Race Spotter, and Driving Coach. The tool offers features for setup, strategy development, monitoring races, and more. Developed in AutoHotkey, it supports various simulation games and integrates with third-party applications for enhanced functionality.

llm_agents

LLM Agents is a small library designed to build agents controlled by large language models. It aims to provide a better understanding of how such agents work in a concise manner. The library allows agents to be instructed by prompts, use custom-built components as tools, and run in a loop of Thought, Action, Observation. The agents leverage language models to generate Thought and Action, while tools like Python REPL, Google search, and Hacker News search provide Observations. The library requires setting up environment variables for OpenAI API and SERPAPI API keys. Users can create their own agents by importing the library and defining tools accordingly.

wandb

Weights & Biases (W&B) is a platform that helps users build better machine learning models faster by tracking and visualizing all components of the machine learning pipeline, from datasets to production models. It offers tools for tracking, debugging, evaluating, and monitoring machine learning applications. W&B provides integrations with popular frameworks like PyTorch, TensorFlow/Keras, Hugging Face Transformers, PyTorch Lightning, XGBoost, and Sci-Kit Learn. Users can easily log metrics, visualize performance, and compare experiments using W&B. The platform also supports hosting options in the cloud or on private infrastructure, making it versatile for various deployment needs.

AIlice

AIlice is a fully autonomous, general-purpose AI agent that aims to create a standalone artificial intelligence assistant, similar to JARVIS, based on the open-source LLM. AIlice achieves this goal by building a "text computer" that uses a Large Language Model (LLM) as its core processor. Currently, AIlice demonstrates proficiency in a range of tasks, including thematic research, coding, system management, literature reviews, and complex hybrid tasks that go beyond these basic capabilities. AIlice has reached near-perfect performance in everyday tasks using GPT-4 and is making strides towards practical application with the latest open-source models. We will ultimately achieve self-evolution of AI agents. That is, AI agents will autonomously build their own feature expansions and new types of agents, unleashing LLM's knowledge and reasoning capabilities into the real world seamlessly.

ModernBERT

ModernBERT is a repository focused on modernizing BERT through architecture changes and scaling. It introduces FlexBERT, a modular approach to encoder building blocks, and heavily relies on .yaml configuration files to build models. The codebase builds upon MosaicBERT and incorporates Flash Attention 2. The repository is used for pre-training and GLUE evaluations, with a focus on reproducibility and documentation. It provides a collaboration between Answer.AI, LightOn, and friends.

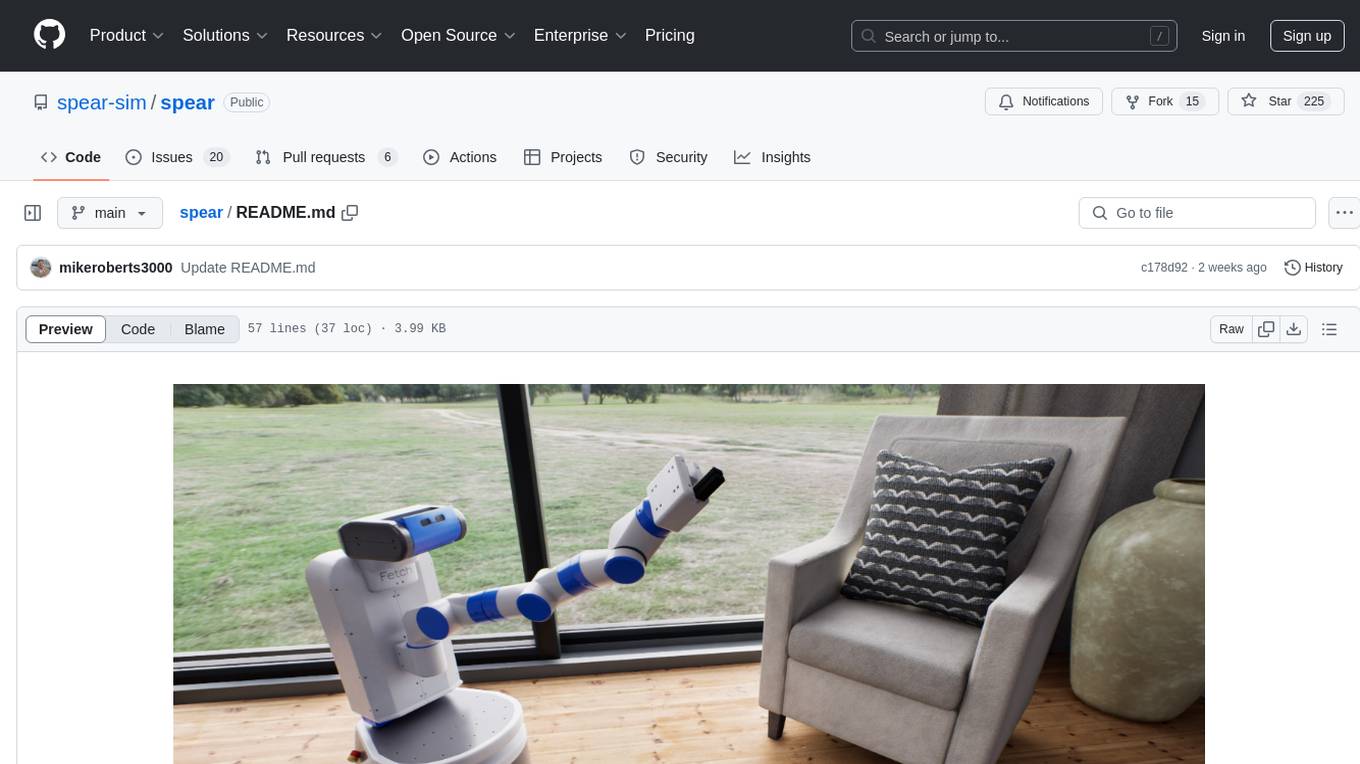

spear

SPEAR is a Simulator for Photorealistic Embodied AI Research that addresses limitations in existing simulators by offering 300 unique virtual indoor environments with detailed geometry, photorealistic materials, and unique floor plans. It provides an OpenAI Gym interface for interaction via Python, released under an MIT License. The simulator was developed with support from the Intelligent Systems Lab at Intel and Kujiale.

For similar tasks

forge

Forge is a free and open-source digital collectible card game (CCG) engine written in Java. It is designed to be easy to use and extend, and it comes with a variety of features that make it a great choice for developers who want to create their own CCGs. Forge is used by a number of popular CCGs, including Ascension, Dominion, and Thunderstone.

making-games-with-ai-course

This repository hosts the Machine Learning for Games Course, providing mdx files and notebooks for learning. The course covers various topics related to applying machine learning techniques in game development. It offers a syllabus and resources for users to sign up and access the content for free. The project is maintained by Thomas Simonini and is available on GitHub for citation in publications.

live2d-TTS-LLM-GPT-SoVITS-Vtuber

This repository is a modification based on the pixi-live2d-display project. It provides a platform for TTS (Text-to-Speech) functionality and a large model voice chat page. Users can install node.js, run the provided commands, and access the specified URLs to utilize the features.

BlackFriday-GPTs-Prompts

BlackFriday-GPTs-Prompts is a repository that provides a collection of prompts and jailbreaks for various purposes such as programming, marketing, academic, job hunting, game, creative tasks, prompt engineering, business, productivity, and lifestyle. It introduces AiDark.net, an autonomous AI software engineer named Devin, capable of working collaboratively or independently on tasks for review. The repository offers prompts that can be used in GPTOS, along with demo videos showcasing an Android self-coding app builder.

LiveDevAgents

LiveDevAgents is a multi-agent danmaku game engine built using CAMEL. It was developed for the 2024.12 CAMEL-AI Hackathon project. The engine allows for the creation of games in real-time through live bullet comments during streaming, enabling interaction with AI hosts. The project aims to expand and deconstruct simple ideas with a team of agents of different expertise, continuously updating and self-correcting during runtime. It also supports workforce enhancement, migration of anchor agents to a new framework, improvement of bullet comment processing logic, expansion of live control for more platforms, integration of art and music agents, and VR shared workspace for collaborative development.

Learn-AI-Assisted-Python-Programming

Learn-AI-Assisted-Python-Programming is a book that introduces readers to the world of AI-assisted programming, focusing on using tools like GitHub Copilot and ChatGPT to create and optimize Python programs. The book guides users, even those with no prior coding experience, on how to leverage AI assistants to quickly turn their ideas into reality without getting bogged down in low-level programming details. Readers will learn to generate code using natural language prompts, fine-tune code manually or with AI assistance, test programs with AI, and automate tedious tasks. The book aims to accelerate the learning journey of Copilot programming and provide a user-friendly and thoughtful approach for beginners.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.