MediaAI

Aalto University's Intelligent Computational Media course (AI & ML for media, art & design)

Stars: 61

MediaAI is a repository containing lectures and materials for Aalto University's AI for Media, Art & Design course. The course is a hands-on, project-based crash course focusing on deep learning and AI techniques for artists and designers. It covers common AI algorithms & tools, their applications in art, media, and design, and provides hands-on practice in designing, implementing, and using these tools. The course includes lectures, exercises, and a final project based on students' interests. Students can complete the course without programming by creatively utilizing existing tools like ChatGPT and DALL-E. The course emphasizes collaboration, peer-to-peer tutoring, and project-based learning. It covers topics such as text generation, image generation, optimization, and game AI.

README:

This repository contains the lectures and materials of Aalto University's AI for Media, Art & Design course. Scroll down for lecture slides and exercises.

Follow the course's Twitter feed for links and resources.

This is a hands-on, project-based crash course for deep learning and other AI techniques for people with as few technical prerequisites as possible. The focus is on media processing and games, which makes this particularly suitable for artists and designers.

The 2024 edition of this course is taught during Aalto University's Period 3 (six weeks) by Prof. Perttu Hämäläinen (Twitter) and Nam Hee Gordon Kim (Twitter). Registration is through Aalto's MyCourses system.

Learning Goals

The goal is for students to:

- Understand how common AI algorithms & tools work,

- Understand what the tools can be used for in the context of art, media, and design, and

- Get hands-on practice of designing, implementing and/or using the tools.

Pedagogical approach

The course is taught through:

- Lectures

- Exercises that require you to either practice using existing AI tools, programmatically utilize the tools (e.g., to automate tedious manual prompting), or build new systems. We always try to provide both easy and advanced exercises to cater for different skill levels.

- Final project on topics based on each student's interests. This can also be done in pairs.

The exercises and project work are designed to scale for a broad range of skill levels and starting points.

Outside lectures and exercises, we use Teams for sharing results and for peer-to-peer tutoring and guidance (a Teams invitation will be sent to registered students).

Student Prerequisites

Although many of the exercises do require some Python programming and math skills, one can complete the course without programming, by focusing on creative utilization of existing tools such as ChatGPT and DALL-E.

Grading / Project Work

You pass the course by submitting a report of your project in MyCourses. The grading is pass / fail, as numerical grading is not feasible on a course where students typically come from very different backgrounds. To get your project accepted, the main requirement is that you make an effort and advance from your individual starting point.

It is also recommended to make the project publicly available, e.g., as a Colab notebook or GitHub repository. Instead of a project report, you may simply submit a link to the notebook or repository, if it contains the needed documentation.

Students can choose their project topics based on their own interests and learning goals. Projects are agreed on with the teachers. You could create something in Colab or with Unity Machine Learning Agents, or, if you'd rather not write any code, you can experiment with artist-friendly tools like GIMP-ML or RunwayML to create something new and/or interesting. For example, one could generate song lyrics using a text generator such as GPT-3, and use them to compose and record a song.

Before the first lecture, you should:

- Prepare to add one slide to a shared Google Slides document (link provided at the first lecture), including 1) your name and photo, 2) your background and skillset, and 3) what kind of projects you want to work on. This will be useful for finding other students with similar interests and/or complementary skills. If you do not yet know what to work on, browse the Course Twitter for inspiration.

- Make yourself a Google account if you don't have one. This is needed for Google Colab, which we use for many demos and programming exercises. Important security notice: Colab notebooks run on Google servers and by default cannot access files on your computer. However, some notebooks might contain malicious code. Thus, do not input any passwords or let a Colab notebook connect with your Google Drive unless you trust the notebook. The notebooks in this repository should be safe, but the lecture slides also link to 3rd party notebooks that one can never be sure about.

- For using OpenAI tools, it's also good to get an OpenAI account. When creating the account, you get some free quota for generating text and images.

- If you plan to try the programming exercises of the course, these Python learning resources might come in handy. However, if you know some other programming language, you should be able to learn while going through the exercises.

- To grasp the fundamentals of what neural networks are doing, watch episodes 1-3 of 3Blue1Brown's neural network series.

- Learn about Colab notebooks (used for course exercises) by watching these videos: Video 1, Video 2 and reading through this Tutorial notebook. Feel free to also take a peek at this course's exercise notebooks such as GAN image generation. To test the notebook, select "run all" from the "runtime" menu. This runs all the code cells in sequence and should generate and display some images at the bottom of the notebook. In general, when opening a notebook, it's good to use "run all" first, before starting to modify individual code cells, because the cells often depend on variables initialized or packages imported in the preceding cells.

We will spend roughly one week per topic, devoting the last sessions to individual project work.

Overview and Motivation:

- Lecture: Overview and motivation. Why one should rather co-create than compete with AI technology.

- Exercise: Each student adds their slide to the shared Google Slides. We will then go through the slides and briefly discuss the topics and who might benefit from collaborating with others.

Optional programming exercises for those with at least some programming background:

- Exercise: If you didn't already do it, go through the Colab learning links in the course prerequisites.

- Exercise: Introduction to tensors, numpy and matplotlib through processing images and audio. [Open in Colab], [Solutions]

- Related to the above, see also: https://numpy.org/devdocs/user/absolute_beginners.html

- Exercise: Continuing the Numpy introduction, now for a simple data science project. [Open in Colab], [Solutions].

- Exercise: Training a very simple neural network using a Kaggle dataset of human height and weight. [Open in Colab], [Solutions]
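To give a feel for what "training a very simple neural network" involves before opening the notebooks, here is a minimal sketch in plain Python. It is not the course exercise: the data is synthetic (made up by `make_synthetic_data`, not the Kaggle dataset), the "network" is a single linear neuron, and all function names are hypothetical. The exercise notebooks would use numpy and a deep learning library instead of hand-written loops.

```python
import random

def make_synthetic_data(n=200, seed=0):
    """Generate made-up (height_cm, weight_kg) pairs; NOT the Kaggle data."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        height = rng.uniform(150.0, 200.0)
        # Assumed relationship, chosen only for illustration, plus noise.
        weight = 0.9 * height - 90.0 + rng.gauss(0.0, 3.0)
        data.append((height, weight))
    return data

def train_linear_neuron(data, lr=0.1, epochs=500):
    """Fit weight = w * x + b by gradient descent on mean squared error."""
    n = len(data)
    # Normalize heights so that one learning rate suits both parameters.
    mean_h = sum(h for h, _ in data) / n
    std_h = (sum((h - mean_h) ** 2 for h, _ in data) / n) ** 0.5
    xs = [(h - mean_h) / std_h for h, _ in data]
    ys = [kg for _, kg in data]
    w, b = 0.0, 0.0
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b

    def predict(height_cm):
        return w * (height_cm - mean_h) / std_h + b

    return predict

data = make_synthetic_data()
predict = train_linear_neuron(data)
print(round(predict(180.0), 1))  # predicted weight in kg for a 180 cm person
```

The same loop of "predict, measure the error, nudge the parameters downhill" is what larger networks do, only with many more parameters and automatic gradient computation.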

Text Generation & Co-writing with AI:

- Lecture: Co-writing with AI. Introduction to Large Language Models (LLMs), some history, examples of different types of texts and applications.

- Lecture: Deeper into transformers. Understanding transformer attention and emergent abilities of LLMs.

- Exercises: Generating game ideas, automating manual prompting using Python, Retrieval-Augmented Generation

- Tutorial: Few-shot Prompting using OpenAI API
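As a rough illustration of few-shot prompting and of automating manual prompting, the sketch below builds one prompt per theme from a handful of worked examples. The helper name `build_few_shot_messages`, the example game ideas, and the themes are all made up for this illustration. The role/content message layout follows the convention used by chat-style APIs such as OpenAI's; actually sending the messages is left out because it requires an API key and depends on the client library version.

```python
def build_few_shot_messages(instruction, examples, query):
    """Build a chat-style message list: instruction, worked examples, new query."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, assistant_text in examples:
        # Each example is shown to the model as a past user/assistant exchange.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    # The real question comes last, in the same format as the examples.
    messages.append({"role": "user", "content": query})
    return messages

# Hypothetical examples, invented for illustration only.
examples = [
    ("Theme: gardening", "A puzzle game where you prune a bonsai to match a shadow."),
    ("Theme: postal service", "A rhythm game about sorting letters to the beat."),
]

# Automating manual prompting: one prompt per theme instead of typing each by hand.
themes = ["lighthouses", "origami"]
batch = [
    build_few_shot_messages("You invent one-sentence game ideas.", examples, f"Theme: {theme}")
    for theme in themes
]

for messages in batch:
    print(messages[-1]["content"], "->", len(messages), "messages")
```

Keeping the examples in a list makes it easy to swap them out and observe how the style of the generated ideas follows the style of the examples.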

Image Generation

- Lecture: Image generation

- Setup: If you have a 2060 GPU or better, consider installing Stable Diffusion locally: no subscription fees, no banned topics or keywords. It's best to install Stable Diffusion with a UI such as ComfyUI or Fooocus, which provide advanced features like ControlNet, which allows you to input a rough image sketch to control the layout of the generated images.

- Prompting Exercise: Prompt images with different art styles, cameras, lighting... For reference, see The DALL-E 2 prompt book. Use your preferred text-to-image tool such as DALL-E, MidJourney or Stable Diffusion. You can install Stable Diffusion locally (see above), and it is also available through Colab: Huggingface basic notebook, Notebook with Automatic1111 WebUI, Notebook with Fooocus UI. If you prefer a mobile app, some free options are Microsoft Copilot for ChatGPT and DALL-E on iOS or Android, or Draw Things for Stable Diffusion.

- Prompting Exercise: Pick an interesting reference image and try to come up with a text prompt that produces an image as close to the reference as possible. This helps you hone your descriptive English writing skills.

- Prompting Exercise: Practice using both text and image prompts using Stable Diffusion and ControlNet. This can be done in Colab, by installing Stable Diffusion locally (see above), or by using a mobile app such as Draw Things.

- Prompting Exercise: Make your own version of making a bunny happier, progressively exaggerating some other aspect of some initial prompt.

- Colab Exercise: Using a Pre-Trained Generative Adversarial Network (GAN) to generate and interpolate images. [Open in Colab], [Solutions]

- Colab Exercise: Interpolate between prompts using Stable Diffusion

- Colab Exercise: Finetune Stable Diffusion using your own images. There are multiple options, although all of them seem to require at least 24GB of GPU memory, so you'll most likely need a paid Colab account. Some options: Huggingface Diffusers official tutorial, Joe Penna's DreamBooth, TheLastBen
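The interpolation exercises above rest on one idea: a generator turns a vector (a GAN latent code or a prompt embedding) into an image, so blending two vectors step by step yields a smooth transition between two images. The sketch below shows only the blending step, on tiny made-up vectors in plain Python. It is not taken from the course notebooks, which operate on tensors; `lerp` and `slerp` are hypothetical helper names. Spherical interpolation is a common choice for Gaussian latent codes because it keeps the vector length roughly constant.

```python
import math

def lerp(a, b, t):
    """Linear interpolation: a straight line from a (t=0) to b (t=1)."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]

def slerp(a, b, t):
    """Spherical interpolation: follows the arc between the directions of a and b."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    cos_omega = max(-1.0, min(1.0, cos_omega))  # guard against rounding error
    omega = math.acos(cos_omega)
    s = math.sin(omega)
    if s < 1e-6:
        # Vectors are (anti)parallel, so the arc is undefined; fall back to lerp.
        return lerp(a, b, t)
    weight_a = math.sin((1.0 - t) * omega) / s
    weight_b = math.sin(t * omega) / s
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]

# Two made-up 2D "latent codes"; real ones have hundreds of dimensions.
z_start = [1.0, 0.0]
z_end = [0.0, 1.0]

# Five evenly spaced steps; each vector would be fed to the generator in turn.
frames = [slerp(z_start, z_end, i / 4) for i in range(5)]
for z in frames:
    print([round(v, 3) for v in z])
```

With `lerp`, the midpoint of these two vectors would be shorter than either endpoint, which is one reason `slerp` is often preferred for latent codes.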

Generating other media, real-life workflows

- Lecture: Generating other media

Optimization

- Lecture: Optimization. Mathematical optimization is at the heart of almost all AI and ML. We've already applied optimization when training neural networks; now it's time to gain a somewhat wider and deeper understanding. We'll cover a number of common techniques such as Deep Reinforcement Learning (DRL) and Covariance Matrix Adaptation Evolution Strategy (CMA-ES).

- Exercise: Experiment with abstract art generation using CLIPDraw and StyleCLIPDraw. First, follow the notebook instructions to get the code to generate something. Then try different text prompts and different drawing parameters.

- Exercise (hard, optional): Modify CLIPDraw or StyleCLIPDraw to use CMA-ES instead of Adam. This should give more robust results if you use high abstraction (only a few drawing primitives), which tends to make Adam more likely to get stuck in a bad local optimum. For reference, you can see this old course exercise on Generating abstract adversarial art using CMA-ES. Note: You can also combine CMA-ES and Adam by first finding an approximate solution with CMA-ES and then finetuning with Adam.

- Unity exercise (optional): Discovering billiards trick shots in Unity. Download the project folder and test it in Unity.
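The two-stage idea mentioned above (a sampling-based search to find an approximate solution, then gradient-based finetuning) can be sketched on a toy problem. Note the simplifications: this uses a very basic evolution strategy with a fixed, shrinking search radius rather than CMA-ES (there is no covariance matrix adaptation), and plain gradient descent rather than Adam. The objective function and all names are made up for illustration; in the CLIPDraw setting the objective would be an image loss.

```python
import random

def objective(p):
    """Toy objective with its minimum at (3, -2); stands in for an image loss."""
    x, y = p
    return (x - 3.0) ** 2 + (y + 2.0) ** 2

def gradient(p):
    """Analytic gradient of the toy objective."""
    x, y = p
    return [2.0 * (x - 3.0), 2.0 * (y + 2.0)]

def evolution_strategy(f, start, sigma=1.0, population=20, elite=5,
                       generations=30, seed=0):
    """Sample around a mean, keep the best samples, move the mean to their average."""
    rng = random.Random(seed)
    mean = list(start)
    for _ in range(generations):
        samples = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean]
                   for _ in range(population)]
        samples.sort(key=f)            # lowest objective value first
        best = samples[:elite]
        mean = [sum(s[i] for s in best) / elite for i in range(len(mean))]
        sigma *= 0.9                   # shrink the search radius over time
    return mean

def gradient_descent(grad, start, lr=0.1, steps=100):
    """Follow the negative gradient with a fixed step size."""
    p = list(start)
    for _ in range(steps):
        g = grad(p)
        p = [pi - lr * gi for pi, gi in zip(p, g)]
    return p

rough = evolution_strategy(objective, start=[0.0, 0.0])   # no gradients needed
refined = gradient_descent(gradient, rough)               # finetune the result
print(objective(rough), objective(refined))
```

The sampling stage never looks at the gradient, which is why this family of methods can escape regions where gradient-based optimizers stall; the gradient stage then polishes the result cheaply.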

Game AI

- Lecture: Game AI. What is game AI? Game AI research in industry and academia. Core areas of videogame AI. Deep dive: state-of-the-art AI playtesting (Roohi et al., 2021), which combines deep reinforcement learning (DRL), Monte-Carlo tree search (MCTS) and a player population simulation to estimate player engagement and difficulty in a match-3 game.

- Exercise: Deep Reinforcement Learning for General Game-Playing [Open in Colab]. [Open in Colab with Solutions].
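For readers new to reinforcement learning, the core loop (act, observe a reward, update value estimates) can be shown without any neural network. The sketch below is tabular Q-learning on a made-up five-cell corridor, not the course exercise and not deep RL: in DRL, the table `q` is replaced by a neural network so that the method scales to large state spaces such as game screens. The environment, function names, and hyperparameters are all assumptions chosen for illustration.

```python
import random

N_STATES = 5        # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = [-1, +1]  # step left or step right

def step(state, action):
    """Made-up environment: move along the corridor, reward 1 at the far end."""
    next_state = max(0, min(N_STATES - 1, state + action))
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore at random sometimes, and whenever the estimates are tied.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Target: immediate reward plus discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q_table = train()
# Greedy policy for the non-terminal cells: the action with the higher value.
policy = [ACTIONS[0 if q[0] > q[1] else 1] for q in q_table[:-1]]
print(policy)
```

After training, the greedy policy should step right in every cell, since that is the shortest route to the reward.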

a.k.a. Heroes of Creative AI and ML coding

Here are some people who are mixing AI, machine learning, art, and design with awesome results:

- http://otoro.net/ml/

- http://genekogan.com/

- http://quasimondo.com/

- http://zach.li/

- http://memo.tv

- https://www.enist.org

The lecture slides have more extensive links to resources on each covered topic. Here, we only list some general resources:

- ml5js & p5js: if you prefer JavaScript to Python, this toolset may provide the fastest way to creative AI coding in a browser-based editor, without installing anything. Works even on mobile browsers! This example uses a deep neural network to track your nose and draw on the webcam view. This one utilizes similar PoseNet tracking to control procedural audio synthesis.

- Machine Learning for Artists (ml4a), including many cool demos, many of them built using p5js and ml5js.

- Unity Machine Learning Agents, a framework for using deep reinforcement learning for Unity. Includes code examples and blog posts.

- Two Minute Papers, a YouTube channel with short and accessible explanations of AI and deep learning research papers.

- 3Blue1Brown, a YouTube channel with excellent visual explanations on math, including neural networks and linear algebra.

- Elements of AI, an online course by University of Helsinki and Reaktor. Aalto students can also get 2 credits for this course. This is a course about the basic concepts, societal implications etc., no coding.

- Game AI Book by Togelius and Yannakakis. PDF available.

- Understanding Deep Learning by Simon J.D. Prince. Published in December 2023, this is currently the most up-to-date textbook for deep learning, praised for its clear explanations and helpful visualizations. An excellent resource for digging deeper, for those that can handle some linear algebra, probability, and statistics. PDF available.

The field is changing rapidly and we are constantly collecting new teaching material.

Follow the course's Twitter feed to stay updated. The Twitter feed works as a public backlog of material that is used when updating the lecture slides.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for MediaAI

Similar Open Source Tools

MediaAI

MediaAI is a repository containing lectures and materials for Aalto University's AI for Media, Art & Design course. The course is a hands-on, project-based crash course focusing on deep learning and AI techniques for artists and designers. It covers common AI algorithms & tools, their applications in art, media, and design, and provides hands-on practice in designing, implementing, and using these tools. The course includes lectures, exercises, and a final project based on students' interests. Students can complete the course without programming by creatively utilizing existing tools like ChatGPT and DALL-E. The course emphasizes collaboration, peer-to-peer tutoring, and project-based learning. It covers topics such as text generation, image generation, optimization, and game AI.

start-llms

This repository is a comprehensive guide for individuals looking to start and improve their skills in Large Language Models (LLMs) without an advanced background in the field. It provides free resources, online courses, books, articles, and practical tips to become an expert in machine learning. The guide covers topics such as terminology, transformers, prompting, retrieval augmented generation (RAG), and more. It also includes recommendations for podcasts, YouTube videos, and communities to stay updated with the latest news in AI and LLMs.

uvadlc_notebooks

The UvA Deep Learning Tutorials repository contains a series of Jupyter notebooks designed to help understand theoretical concepts from lectures by providing corresponding implementations. The notebooks cover topics such as optimization techniques, transformers, graph neural networks, and more. They aim to teach details of the PyTorch framework, including PyTorch Lightning, with alternative translations to JAX+Flax. The tutorials are integrated as official tutorials of PyTorch Lightning and are relevant for graded assignments and exams.

start-machine-learning

Start Machine Learning in 2024 is a comprehensive guide for beginners to advance in machine learning and artificial intelligence without any prior background. The guide covers various resources such as free online courses, articles, books, and practical tips to become an expert in the field. It emphasizes self-paced learning and provides recommendations for learning paths, including videos, podcasts, and online communities. The guide also includes information on building language models and applications, practicing through Kaggle competitions, and staying updated with the latest news and developments in AI. The goal is to empower individuals with the knowledge and resources to excel in machine learning and AI.

god-level-ai

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This is a drill for people who aim to be in the top 1% of Data and AI experts. The repository provides a routine for deep and shallow work sessions, covering topics from Python to AI/ML System Design and Personal Branding & Portfolio. It emphasizes the importance of continuous effort and action in the tech field.

chatgpt-universe

ChatGPT is a large language model that can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a conversational way. It is trained on a massive amount of text data, and it is able to understand and respond to a wide range of natural language prompts. Here are 5 jobs suitable for this tool, in lowercase letters: 1. content writer 2. chatbot assistant 3. language translator 4. creative writer 5. researcher

intro-to-intelligent-apps

This repository introduces and helps organizations get started with building AI Apps and incorporating Large Language Models (LLMs) into them. The workshop covers topics such as prompt engineering, AI orchestration, and deploying AI apps. Participants will learn how to use Azure OpenAI, Langchain/ Semantic Kernel, Qdrant, and Azure AI Search to build intelligent applications.

obsidian-Smart2Brain

Your Smart Second Brain is a free and open-source Obsidian plugin that serves as your personal assistant, powered by large language models like ChatGPT or Llama2. It can directly access and process your notes, eliminating the need for manual prompt editing, and it can operate completely offline, ensuring your data remains private and secure.

mlforpublicpolicylab

The Machine Learning for Public Policy Lab is a project-based course focused on solving real-world problems using machine learning in the context of public policy and social good. Students will gain hands-on experience building end-to-end machine learning systems, developing skills in problem formulation, working with messy data, communicating with non-technical stakeholders, model interpretability, and understanding algorithmic bias & disparities. The course covers topics such as project scoping, data acquisition, feature engineering, model evaluation, bias and fairness, and model interpretability. Students will work in small groups on policy projects, with graded components including project proposals, presentations, and final reports.

claudine

Claudine is an AI agent designed to reason and act autonomously, leveraging the Anthropic API, Unix command line tools, HTTP, local hard drive data, and internet data. It can administer computers, analyze files, implement features in source code, create new tools, and gather contextual information from the internet. Users can easily add specialized tools. Claudine serves as a blueprint for implementing complex autonomous systems, with potential for customization based on organization-specific needs. The tool is based on the anthropic-kotlin-sdk and aims to evolve into a versatile command line tool similar to 'git', enabling branching sessions for different tasks.

ChainForge

ChainForge is a visual programming environment for battle-testing prompts to LLMs. It is geared towards early-stage, quick-and-dirty exploration of prompts, chat responses, and response quality that goes beyond ad-hoc chatting with individual LLMs. With ChainForge, you can: * Query multiple LLMs at once to test prompt ideas and variations quickly and effectively. * Compare response quality across prompt permutations, across models, and across model settings to choose the best prompt and model for your use case. * Setup evaluation metrics (scoring function) and immediately visualize results across prompts, prompt parameters, models, and model settings. * Hold multiple conversations at once across template parameters and chat models. Template not just prompts, but follow-up chat messages, and inspect and evaluate outputs at each turn of a chat conversation. ChainForge comes with a number of example evaluation flows to give you a sense of what's possible, including 188 example flows generated from benchmarks in OpenAI evals. This is an open beta of Chainforge. We support model providers OpenAI, HuggingFace, Anthropic, Google PaLM2, Azure OpenAI endpoints, and Dalai-hosted models Alpaca and Llama. You can change the exact model and individual model settings. Visualization nodes support numeric and boolean evaluation metrics. ChainForge is built on ReactFlow and Flask.

pwnagotchi

Pwnagotchi is an AI tool leveraging bettercap to learn from WiFi environments and maximize crackable WPA key material. It uses LSTM with MLP feature extractor for A2C agent, learning over epochs to improve performance in various WiFi environments. Units can cooperate using a custom parasite protocol. Visit https://www.pwnagotchi.ai for documentation and community links.

AI-Engineer-Headquarters

AI Engineer Headquarters is a comprehensive learning resource designed to help individuals master scientific methods, processes, algorithms, and systems to build stories and models in the field of Data and AI. The repository provides in-depth content through video sessions and text materials, catering to individuals aspiring to be in the top 1% of Data and AI experts. It covers various topics such as AI engineering foundations, large language models, retrieval-augmented generation, fine-tuning LLMs, reinforcement learning, ethical AI, agentic workflows, and career acceleration. The learning approach emphasizes action-oriented drills and routines, encouraging consistent effort and dedication to excel in the AI field.

OpenCRISPR

OpenCRISPR is a set of free and open gene editing systems designed by Profluent Bio. The OpenCRISPR-1 protein maintains the prototypical architecture of a Type II Cas9 nuclease but is hundreds of mutations away from SpCas9 or any other known natural CRISPR-associated protein. You can view OpenCRISPR-1 as a drop-in replacement for many protocols that need a cas9-like protein with an NGG PAM and you can even use it with canonical SpCas9 gRNAs. OpenCRISPR-1 can be fused in a deactivated or nickase format for next generation gene editing techniques like base, prime, or epigenome editing.

LLM_Notebooks

LLM_Notebooks is a repository supporting The Machine Learning Engineer YouTube channel. It contains materials related to various topics such as Generative AI, MLOps, ML projects, Azure Projects, Google VertexAi, ML Tricks, and more. The repository includes notebooks and code in Python and C#, with a focus on Python. The videos on the channel cover a wide range of topics in English and Spanish, organized into playlists based on general themes. The repository links are provided in the video descriptions for easy access. The creator uploads videos regularly and encourages viewers to subscribe, like, and leave constructive comments. The repository serves as a valuable resource for learning and exploring machine learning concepts and tools.

Instruct2Act

Instruct2Act is a framework that utilizes Large Language Models to map multi-modal instructions to sequential actions for robotic manipulation tasks. It generates Python programs using the LLM model for perception, planning, and action. The framework leverages foundation models like SAM and CLIP to convert high-level instructions into policy codes, accommodating various instruction modalities and task demands. Instruct2Act has been validated on robotic tasks in tabletop manipulation domains, outperforming learning-based policies in several tasks.

For similar tasks

MediaAI

MediaAI is a repository containing lectures and materials for Aalto University's AI for Media, Art & Design course. The course is a hands-on, project-based crash course focusing on deep learning and AI techniques for artists and designers. It covers common AI algorithms & tools, their applications in art, media, and design, and provides hands-on practice in designing, implementing, and using these tools. The course includes lectures, exercises, and a final project based on students' interests. Students can complete the course without programming by creatively utilizing existing tools like ChatGPT and DALL-E. The course emphasizes collaboration, peer-to-peer tutoring, and project-based learning. It covers topics such as text generation, image generation, optimization, and game AI.

For similar jobs

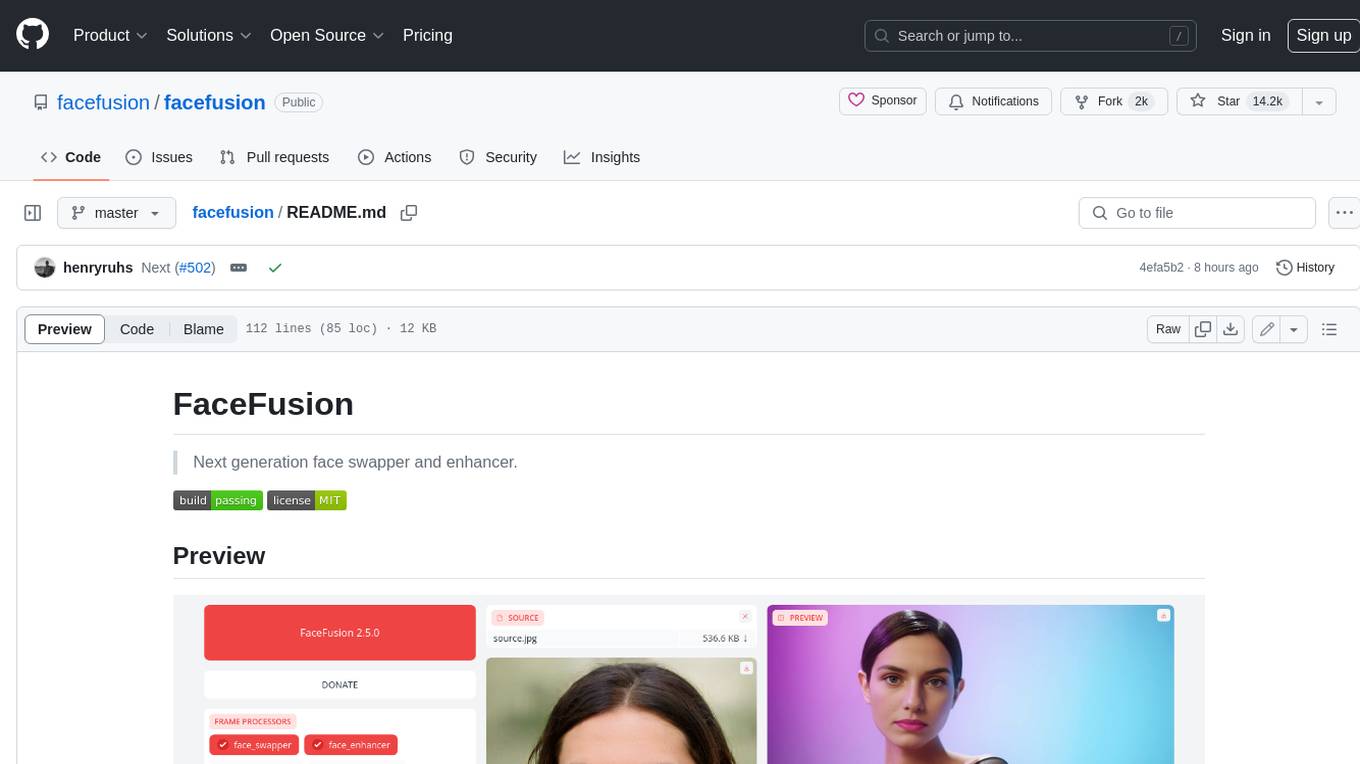

facefusion

FaceFusion is a next-generation face swapper and enhancer that allows users to seamlessly swap faces in images and videos, as well as enhance facial features for a more polished and refined look. With its advanced deep learning models, FaceFusion provides users with a wide range of options for customizing their face swaps and enhancements, making it an ideal tool for content creators, artists, and anyone looking to explore their creativity with facial manipulation.

forge

Forge is a free and open-source digital collectible card game (CCG) engine written in Java. It is designed to be easy to use and extend, and it comes with a variety of features that make it a great choice for developers who want to create their own CCGs. Forge is used by a number of popular CCGs, including Ascension, Dominion, and Thunderstone.

latentbox

Latent Box is a curated collection of resources for AI, creativity, and art. It aims to bridge the information gap with high-quality content, promote diversity and interdisciplinary collaboration, and maintain updates through community co-creation. The website features a wide range of resources, including articles, tutorials, tools, and datasets, covering various topics such as machine learning, computer vision, natural language processing, generative art, and creative coding.

fabric

Fabric is an open-source framework for augmenting humans using AI. It provides a structured approach to breaking down problems into individual components and applying AI to them one at a time. Fabric includes a collection of pre-defined Patterns (prompts) that can be used for a variety of tasks, such as extracting the most interesting parts of YouTube videos and podcasts, writing essays, summarizing academic papers, creating AI art prompts, and more. Users can also create their own custom Patterns. Fabric is designed to be easy to use, with a command-line interface and a variety of helper apps. It is also extensible, allowing users to integrate it with their own AI applications and infrastructure.

ColorPicker

ColorPicker Max is a powerful and intuitive color selection and manipulation tool that is designed to make working with color easier and more efficient than ever before. With its wide range of features and tools, ColorPicker Max offers an unprecedented level of control and customization over every aspect of color selection and manipulation.

ai-notes

Notes on AI state of the art, with a focus on generative and large language models. These are the "raw materials" for the https://lspace.swyx.io/ newsletter. This repo used to be called https://github.com/sw-yx/prompt-eng, but was renamed because Prompt Engineering is Overhyped. This is now an AI Engineering notes repo.

Neurite

Neurite is an innovative project that combines chaos theory and graph theory to create a digital interface that explores hidden patterns and connections for creative thinking. It offers a unique workspace blending fractals with mind mapping techniques, allowing users to navigate the Mandelbrot set in real-time. Nodes in Neurite represent various content types like text, images, videos, code, and AI agents, enabling users to create personalized microcosms of thoughts and inspirations. The tool supports synchronized knowledge management through bi-directional synchronization between mind-mapping and text-based hyperlinking. Neurite also features FractalGPT for modular conversation with AI, local AI capabilities for multi-agent chat networks, and a Neural API for executing code and sequencing animations. The project is actively developed with plans for deeper fractal zoom, advanced control over node placement, and experimental features.

ScribbleArchitect

ScribbleArchitect is a GUI tool designed for generating images from simple brush strokes or Bezier curves in real-time. It is primarily intended for use in architecture and sketching in the early stages of a project. The tool utilizes Stable Diffusion and ControlNet as AI backbone for the generative process, with IP Adapter support and a library of predefined styles. Users can transfer specific styles to their line work, upscale images for high resolution export, and utilize a ControlNet upscaler. The tool also features a screen capture function for working with external tools like Adobe Illustrator or Inkscape.