Mastering-NLP-from-Foundations-to-LLMs

Mastering NLP from Foundations to LLMs, Published by Packt

Stars: 86

This code repository is for the book 'Mastering NLP from Foundations to LLMs', which provides an in-depth introduction to Natural Language Processing (NLP) techniques. It covers mathematical foundations of machine learning, advanced NLP applications such as large language models (LLMs) and AI applications, as well as practical skills for working on real-world NLP business problems. The book includes Python code samples and expert insights into current and future trends in NLP.

README:

Apply advanced rule-based techniques to LLMs and solve real-world business problems using Python


Lior Gazit is a highly skilled ML professional with a proven track record of success in building and leading teams that use ML to drive business growth. He is an expert in NLP and has successfully developed innovative ML pipelines and products. He holds a master’s degree and has published in peer-reviewed journals and conferences. As a senior director of an ML group in the financial sector and a principal ML advisor at an emerging start-up, Lior is a respected leader in the industry, with a wealth of knowledge and experience to share. With much passion and inspiration, Lior is dedicated to using ML to drive positive change and growth in his organizations.


Meysam Ghaffari is a senior data scientist with a strong background in NLP and deep learning. He currently works at MSKCC, where he specializes in developing and improving ML and NLP models for healthcare problems. He has over nine years of experience in ML and over four years of experience in NLP and deep learning. He received his Ph.D. in computer science from Florida State University, his MS in computer science – artificial intelligence from the Isfahan University of Technology, and his BS in computer science from Iran University of Science and Technology. He also worked as a post-doctoral research associate at the University of Wisconsin-Madison before joining MSKCC.

Enhance your NLP proficiency with modern frameworks like LangChain, explore mathematical foundations and code samples, and gain expert insights into current and future trends

- Learn how to build Python-driven solutions with a focus on NLP, LLMs, RAGs, and GPT

- Master embedding techniques and machine learning principles for real-world applications

- Understand the mathematical foundations of NLP and deep learning designs

- Purchase of the print or Kindle book includes a free PDF eBook

If you feel this book is for you, get your copy today!

Do you want to master Natural Language Processing (NLP) but don’t know where to begin? This book will give you the right head start. Written by leaders in machine learning and NLP, Mastering NLP from Foundations to LLMs provides an in-depth introduction to NLP techniques. Starting with the mathematical foundations of machine learning (ML), you’ll gradually progress to advanced NLP applications such as large language models (LLMs) and AI applications. You’ll get to grips with linear algebra, optimization, probability, and statistics, which are essential for understanding and implementing machine learning and NLP algorithms. You’ll also explore general machine learning techniques and find out how they relate to NLP. Next, you’ll learn how to preprocess text data, explore methods for cleaning and preparing text for analysis, and understand how to do text classification. You’ll get all of this and more along with complete Python code samples.

Toward the end of the book, you’ll cover the advanced topics of LLM theory, design, and applications, along with future trends in NLP as seen through expert opinions. You’ll also get to strengthen your practical skills by working on sample real-world NLP business problems and solutions.

- Master the mathematical foundations of machine learning and NLP

- Implement advanced techniques for preprocessing text data and analysis

- Design ML-NLP systems in Python

- Model and classify text using traditional machine learning and deep learning methods

- Understand the theory and design of LLMs and their implementation for various applications in AI

- Explore NLP insights, trends, and expert opinions on its future direction and potential

All of the code is organized into folders.

The code will look like the following:

```python
import pandas as pd
import matplotlib.pyplot as plt

# Load the record dict from URL
import requests
import pickle
```

This book is for deep learning and machine learning researchers, NLP practitioners, ML/NLP educators, and STEM students. Professionals working with text data as part of their projects will also find plenty of useful information in this book. Beginner-level familiarity with machine learning and a basic working knowledge of Python will help you get the best out of this book.

With the following software and hardware list you can run all code files present in the book (Chapters 1-11).

| Chapter | Software required | OS required |
|---|---|---|
| 1-11 | Access to a Python environment via one of the following: (1) Google Colab, which is free and easy to access from any browser on any device (recommended); (2) a local/cloud Python development environment with the ability to install public packages and access OpenAI’s API | Windows, macOS or Linux |
| 1-11 | Sufficient computation resources, as follows: the previously recommended free access to Google Colab includes a free GPU instance. If opting to avoid Google Colab, the local/cloud environment should have a GPU for several code examples | |
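The requirements above can be checked before opening a notebook. The snippet below is a minimal sketch and not part of the book's code: `check_environment` is a hypothetical helper, the Colab and GPU checks are heuristics (looking for the `google.colab` module and for `nvidia-smi` on the PATH), and `OPENAI_API_KEY` is assumed to be the environment variable that holds your OpenAI key.

```python
import os
import shutil
import sys


def check_environment() -> dict:
    """Return a few heuristic facts about the current Python environment.

    Hypothetical helper for illustration; it does not come from the book.
    """
    return {
        # Colab loads google.colab when the kernel starts, so finding it in
        # sys.modules is a common (unofficial) way to detect Colab.
        "in_colab": "google.colab" in sys.modules,
        # Heuristic: an NVIDIA driver installation normally puts the
        # nvidia-smi tool on the PATH. This does not prove a GPU is usable.
        "nvidia_smi_found": shutil.which("nvidia-smi") is not None,
        # Assumption: the OpenAI key is stored in OPENAI_API_KEY.
        "openai_key_set": bool(os.environ.get("OPENAI_API_KEY")),
        "python_version": ".".join(map(str, sys.version_info[:3])),
    }


if __name__ == "__main__":
    for name, value in check_environment().items():
        print(f"{name}: {value}")
```

If `in_colab` and `nvidia_smi_found` are both false, the chapters that need a GPU may run slowly or fail locally; switching to a Colab GPU runtime is the route the table recommends.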

- Navigating the NLP Landscape: A comprehensive introduction

- Mastering Linear Algebra, Probability, and Statistics for Machine Learning and NLP

- Unleashing Machine Learning Potentials in NLP

- Streamlining Text Preprocessing Techniques for Optimal NLP Performance (Notebooks for chapter 4)

- Empowering Text Classification: Leveraging Traditional Machine Learning Techniques (Notebooks for chapter 5)

- Text Classification Reimagined: Delving Deep into Deep Learning Language Models (Notebooks for chapter 6)

- Demystifying Large Language Models: Theory, Design, and Langchain Implementation

- Accessing the Power of Large Language Models: Advanced Setup and Integration with RAG (Notebooks for chapter 8)

- Exploring the Frontiers: Advanced Applications and Innovations Driven by LLMs (Notebooks for chapter 9)

- Riding the Wave: Analyzing Past, Present, and Future Trends Shaped by LLMs and AI

- Exclusive Industry Insights: Perspectives and Predictions from World Class Experts


Alternative AI tools for Mastering-NLP-from-Foundations-to-LLMs

Similar Open Source Tools


god-level-ai

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This is a drill for people who aim to be in the top 1% of Data and AI experts. The repository provides a routine for deep and shallow work sessions, covering topics from Python to AI/ML System Design and Personal Branding & Portfolio. It emphasizes the importance of continuous effort and action in the tech field.

AI-Engineer-Headquarters

AI Engineer Headquarters is a comprehensive learning resource designed to help individuals master scientific methods, processes, algorithms, and systems to build stories and models in the field of Data and AI. The repository provides in-depth content through video sessions and text materials, catering to individuals aspiring to be in the top 1% of Data and AI experts. It covers various topics such as AI engineering foundations, large language models, retrieval-augmented generation, fine-tuning LLMs, reinforcement learning, ethical AI, agentic workflows, and career acceleration. The learning approach emphasizes action-oriented drills and routines, encouraging consistent effort and dedication to excel in the AI field.

oreilly-hands-on-gpt-llm

This repository contains code for the O'Reilly Live Online Training for Deploying GPT & LLMs. Learn how to use GPT-4, ChatGPT, OpenAI embeddings, and other large language models to build applications for experimenting and production. Gain practical experience in building applications like text generation, summarization, question answering, and more. Explore alternative generative models such as Cohere and GPT-J. Understand prompt engineering, context stuffing, and few-shot learning to maximize the potential of GPT-like models. Focus on deploying models in production with best practices and debugging techniques. By the end of the training, you will have the skills to start building applications with GPT and other large language models.

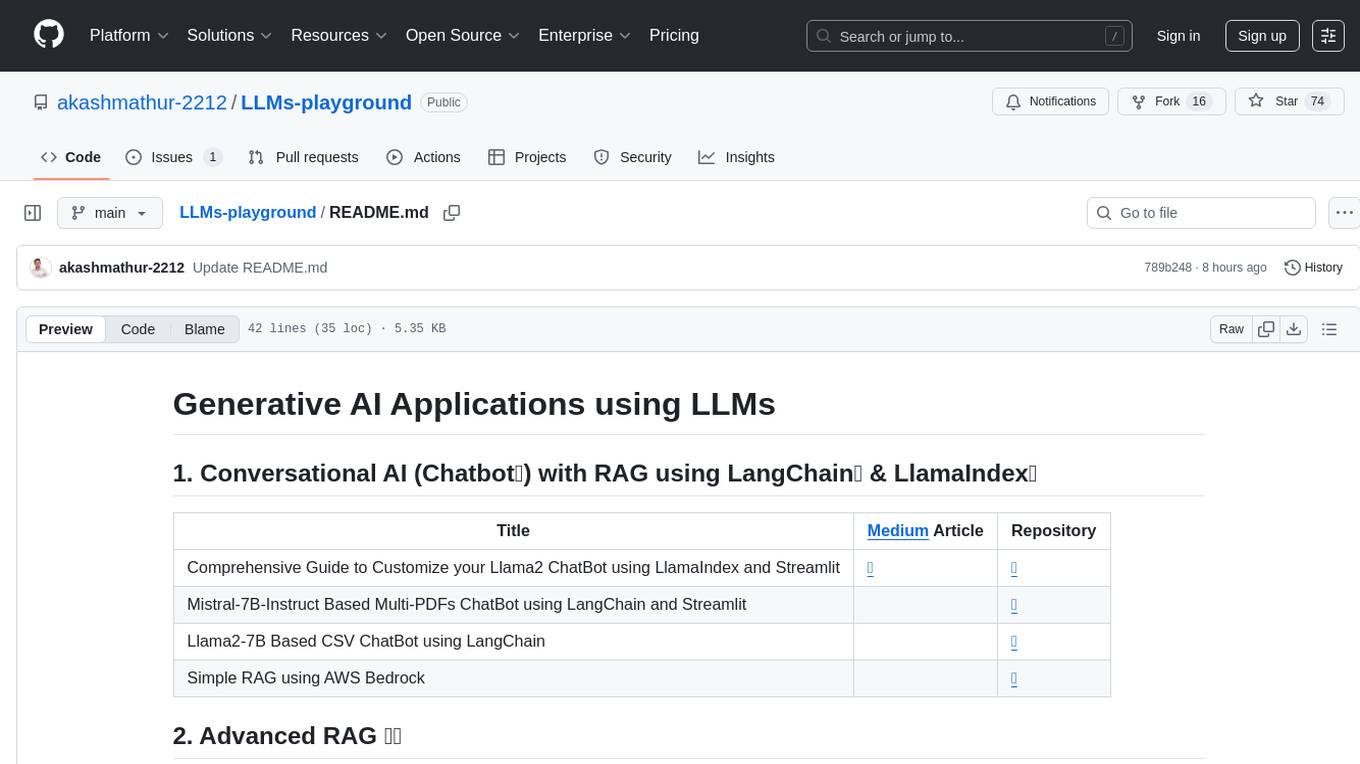

LLMs-playground

LLMs-playground is a repository containing code examples and tutorials for learning and experimenting with Large Language Models (LLMs). It provides a hands-on approach to understanding how LLMs work and how to fine-tune them for specific tasks. The repository covers various LLM architectures, pre-training techniques, and fine-tuning strategies, making it a valuable resource for researchers, students, and practitioners interested in natural language processing and machine learning. By exploring the code and following the tutorials, users can gain practical insights into working with LLMs and apply their knowledge to real-world projects.

awesome-generative-ai-guide

This repository serves as a comprehensive hub for updates on generative AI research, interview materials, notebooks, and more. It includes monthly best GenAI papers list, interview resources, free courses, and code repositories/notebooks for developing generative AI applications. The repository is regularly updated with the latest additions to keep users informed and engaged in the field of generative AI.

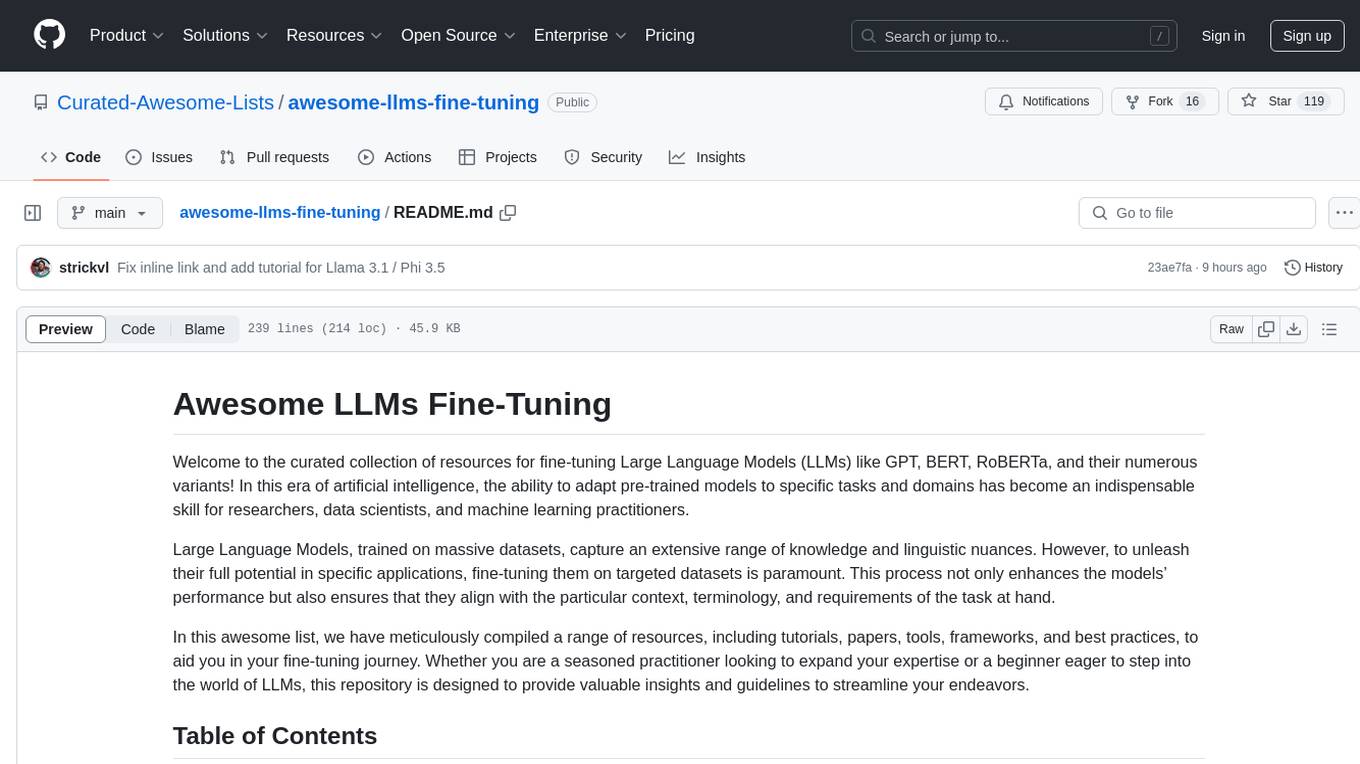

awesome-llms-fine-tuning

This repository is a curated collection of resources for fine-tuning Large Language Models (LLMs) like GPT, BERT, RoBERTa, and their variants. It includes tutorials, papers, tools, frameworks, and best practices to aid researchers, data scientists, and machine learning practitioners in adapting pre-trained models to specific tasks and domains. The resources cover a wide range of topics related to fine-tuning LLMs, providing valuable insights and guidelines to streamline the process and enhance model performance.

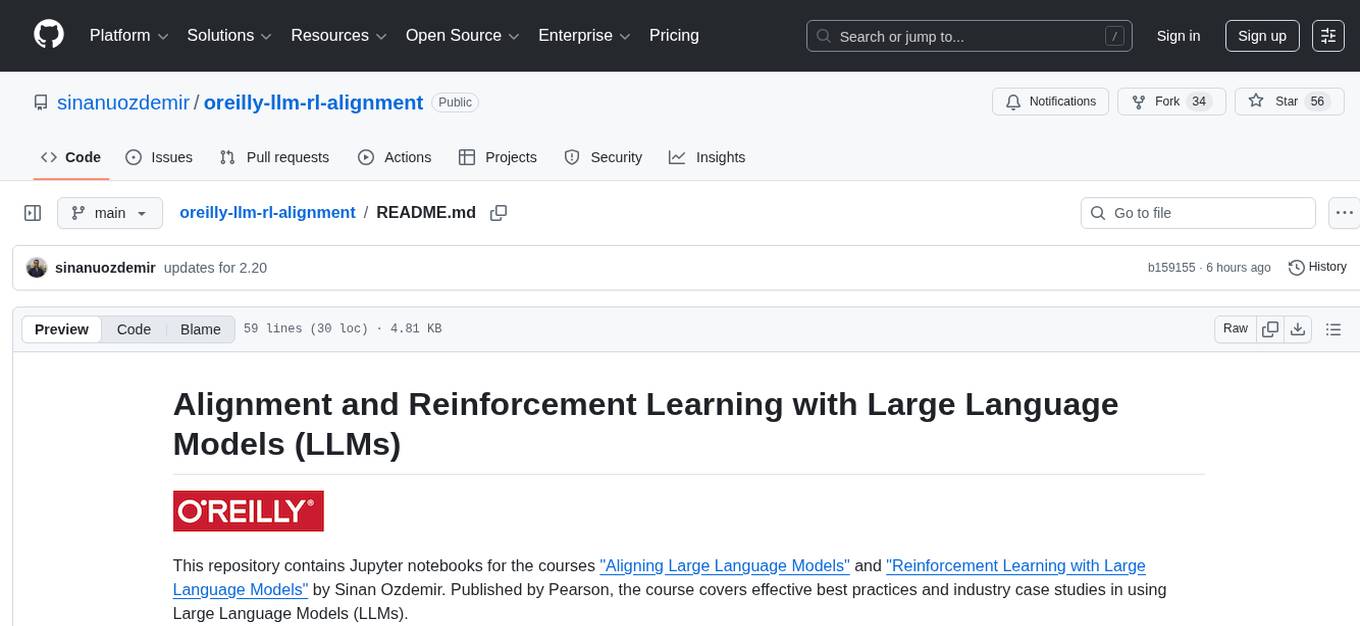

oreilly-llm-rl-alignment

This repository contains Jupyter notebooks for the courses 'Aligning Large Language Models' and 'Reinforcement Learning with Large Language Models' by Sinan Ozdemir. It covers effective best practices and industry case studies in using Large Language Models (LLMs). The courses provide in-depth exploration of alignment techniques, evaluation methods, ethical considerations, and reinforcement learning concepts with practical applications. Participants will gain theoretical insights and hands-on experience in working with LLMs, including fine-tuning models and understanding advanced concepts like RLHF, RLAIF, and Constitutional AI.

RecAI

RecAI is a project that explores the integration of Large Language Models (LLMs) into recommender systems, addressing the challenges of interactivity, explainability, and controllability. It aims to bridge the gap between general-purpose LLMs and domain-specific recommender systems, providing a holistic perspective on the practical requirements of LLM4Rec. The project investigates various techniques, including Recommender AI agents, selective knowledge injection, fine-tuning language models, evaluation, and LLMs as model explainers, to create more sophisticated, interactive, and user-centric recommender systems.

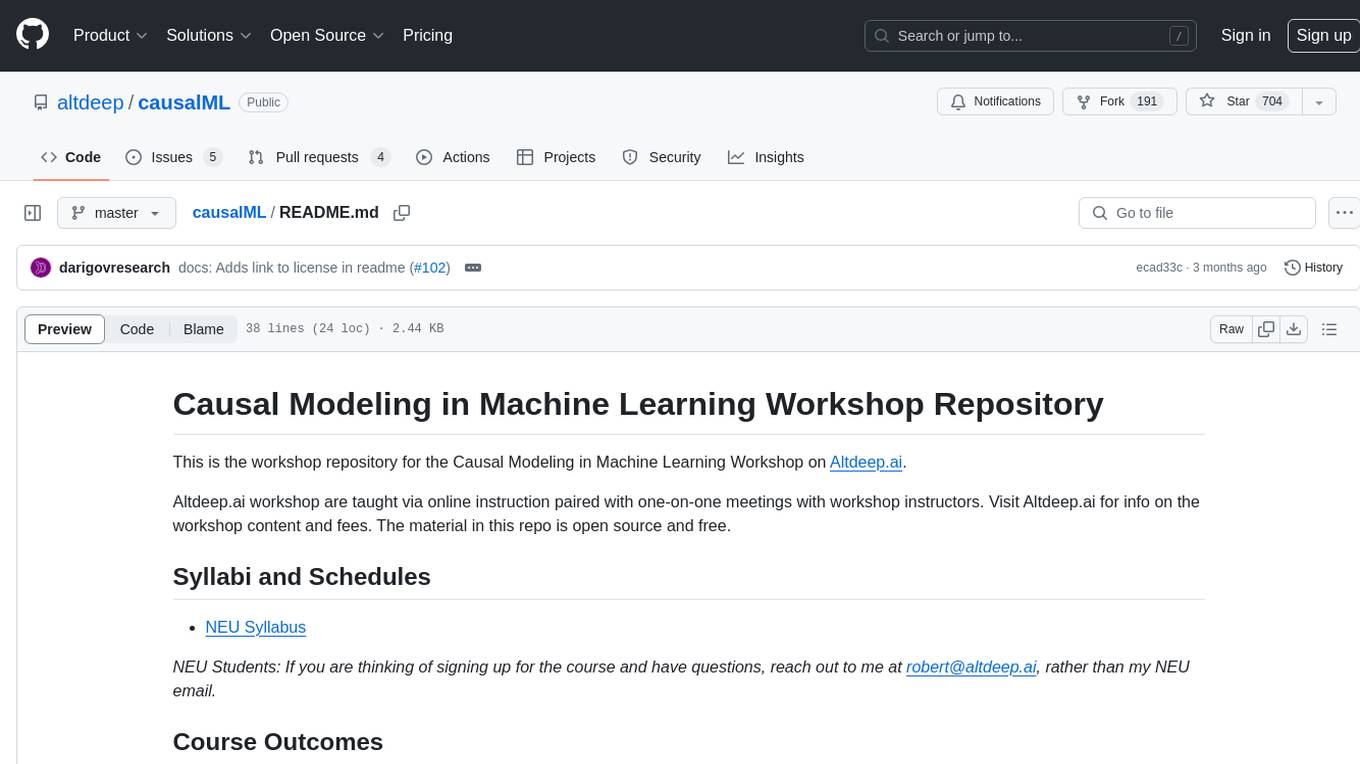

causalML

This repository is the workshop repository for the Causal Modeling in Machine Learning Workshop on Altdeep.ai. The material is open source and free. The course covers causality in model-based machine learning, Bayesian modeling, interventions, counterfactual reasoning, and deep causal latent variable models. It aims to equip learners with the ability to build causal reasoning algorithms into decision-making systems in data science and machine learning teams within top-tier technology organizations.

SuperKnowa

SuperKnowa is a fast framework to build Enterprise RAG (Retriever Augmented Generation) Pipelines at Scale, powered by watsonx. It accelerates Enterprise Generative AI applications to get prod-ready solutions quickly on private data. The framework provides pluggable components for tackling various Generative AI use cases using Large Language Models (LLMs), allowing users to assemble building blocks to address challenges in AI-driven text generation. SuperKnowa is battle-tested from 1M to 200M private knowledge base & scaled to billions of retriever tokens.

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

awesome-RLAIF

Reinforcement Learning from AI Feedback (RLAIF) is a concept that describes a type of machine learning approach where **an AI agent learns by receiving feedback or guidance from another AI system**. This concept is closely related to the field of Reinforcement Learning (RL), which is a type of machine learning where an agent learns to make a sequence of decisions in an environment to maximize a cumulative reward. In traditional RL, an agent interacts with an environment and receives feedback in the form of rewards or penalties based on the actions it takes. It learns to improve its decision-making over time to achieve its goals.

In the context of Reinforcement Learning from AI Feedback, the AI agent still aims to learn optimal behavior through interactions, but **the feedback comes from another AI system rather than from the environment or human evaluators**. This can be **particularly useful in situations where it may be challenging to define clear reward functions or when it is more efficient to use another AI system to provide guidance**.

The feedback from the AI system can take various forms, such as:

- **Demonstrations**: The AI system provides demonstrations of desired behavior, and the learning agent tries to imitate these demonstrations.
- **Comparison Data**: The AI system ranks or compares different actions taken by the learning agent, helping it to understand which actions are better or worse.
- **Reward Shaping**: The AI system provides additional reward signals to guide the learning agent's behavior, supplementing the rewards from the environment.

This approach is often used in scenarios where the RL agent needs to learn from **limited human or expert feedback or when the reward signal from the environment is sparse or unclear**. It can also be used to **accelerate the learning process and make RL more sample-efficient**.

Reinforcement Learning from AI Feedback is an area of ongoing research and has applications in various domains, including robotics, autonomous vehicles, and game playing, among others.

mlforpublicpolicylab

The Machine Learning for Public Policy Lab is a project-based course focused on solving real-world problems using machine learning in the context of public policy and social good. Students will gain hands-on experience building end-to-end machine learning systems, developing skills in problem formulation, working with messy data, communicating with non-technical stakeholders, model interpretability, and understanding algorithmic bias & disparities. The course covers topics such as project scoping, data acquisition, feature engineering, model evaluation, bias and fairness, and model interpretability. Students will work in small groups on policy projects, with graded components including project proposals, presentations, and final reports.

ai-powered-search

AI-Powered Search provides code examples for the book 'AI-Powered Search' by Trey Grainger, Doug Turnbull, and Max Irwin. The book teaches modern machine learning techniques for building search engines that continuously learn from users and content to deliver more intelligent and domain-aware search experiences. It covers semantic search, retrieval augmented generation, question answering, summarization, fine-tuning transformer-based models, personalized search, machine-learned ranking, click models, and more. The code examples are in Python, leveraging PySpark for data processing and Apache Solr as the default search engine. The repository is open source under the Apache License, Version 2.0.


For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.