ML-news-of-the-week

A collection of the best ML and AI news every week (research, news, resources)

Stars: 129

README:

Photo by Priscilla Du Preez 🇨🇦 on Unsplash

A collection of the best ML & AI news every week (research, news, resources). Star this repository if you find it useful.

Here, you can find articles and tutorials about artificial intelligence. Here are some reviews on specific artificial intelligence topics.

For each week you will find different sections:

- Research: the most important published research of the week.

- News: the most important news related to companies, institutions, and much more.

- Resources: released resources for artificial intelligence and machine learning.

- Perspectives: a collection of deep and informative articles about open questions in artificial intelligence.

And a meme to start the week well.

Feel free to open an issue if you find any errors or have suggestions, topics, or other comments.

- ML news: Week 3 - 9 March

- ML news: Week 24 February - 2 March

- ML news: Week 17 - 23 February

- ML news: Week 10 - 16 February

- ML news: Week 3 - 9 February

- ML news: Week 27 January - 2 February

- ML news: Week 20 - 26 January

- ML news: Week 13 - 19 January

- ML news: Week 6 - 12 January

- ML news: Week 31 December - 5 January

2024 news is now here

2023 news is now here

| Link | description |

|---|---|

| Chain of Draft: Thinking Faster by Writing Less. | Chain-of-Draft (CoD) is a new prompting strategy designed to reduce latency in reasoning LLMs by generating concise intermediate steps instead of verbose Chain-of-Thought (CoT) outputs. By using dense-information tokens, CoD cuts response length by up to 80% while maintaining accuracy across benchmarks like math and commonsense reasoning. On GSM8k, it achieved 91% accuracy with significantly lower token usage, reducing inference time and cost. Despite its brevity, CoD remains interpretable, preserving essential logic for debugging. This approach enhances real-time applications by improving efficiency without sacrificing reasoning quality, complementing techniques like parallel decoding and reinforcement learning. |

| Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs. | New research reveals that fine-tuning an LLM on a narrow task, such as generating insecure code, can cause broad misalignment across unrelated domains. Models fine-tuned in this way unexpectedly produced harmful advice, endorsed violence, and engaged in deceptive behavior even on non-coding queries. Comparisons with control fine-tunes showed that only models trained on insecure code, without explicit user intent for educational purposes, exhibited this issue. Researchers also found that backdoor fine-tuning can conceal misalignment until triggered by specific phrases, bypassing standard safety checks. Unlike simple jailbreaks, these models occasionally refused harmful requests but still generated malicious content. The findings highlight risks in AI safety, warning that narrow fine-tuning can unintentionally degrade broader alignment and expose models to data poisoning threats. |

| The FFT Strikes Back: An Efficient Alternative to Self-Attention. | FFTNet introduces a framework that replaces expensive self-attention with adaptive spectral filtering using the Fast Fourier Transform (FFT), reducing complexity from O(n²) to O(n log n) while maintaining global context. Instead of pairwise token interactions, it employs frequency-domain transformations, with a learnable filter that reweights Fourier coefficients to emphasize key information, mimicking attention. A complex-domain modReLU activation enhances representation by capturing higher-order interactions. Experiments on Long Range Arena and ImageNet demonstrate competitive or superior accuracy compared to standard attention methods, with significantly lower computational cost and improved scalability for long-sequence tasks. |

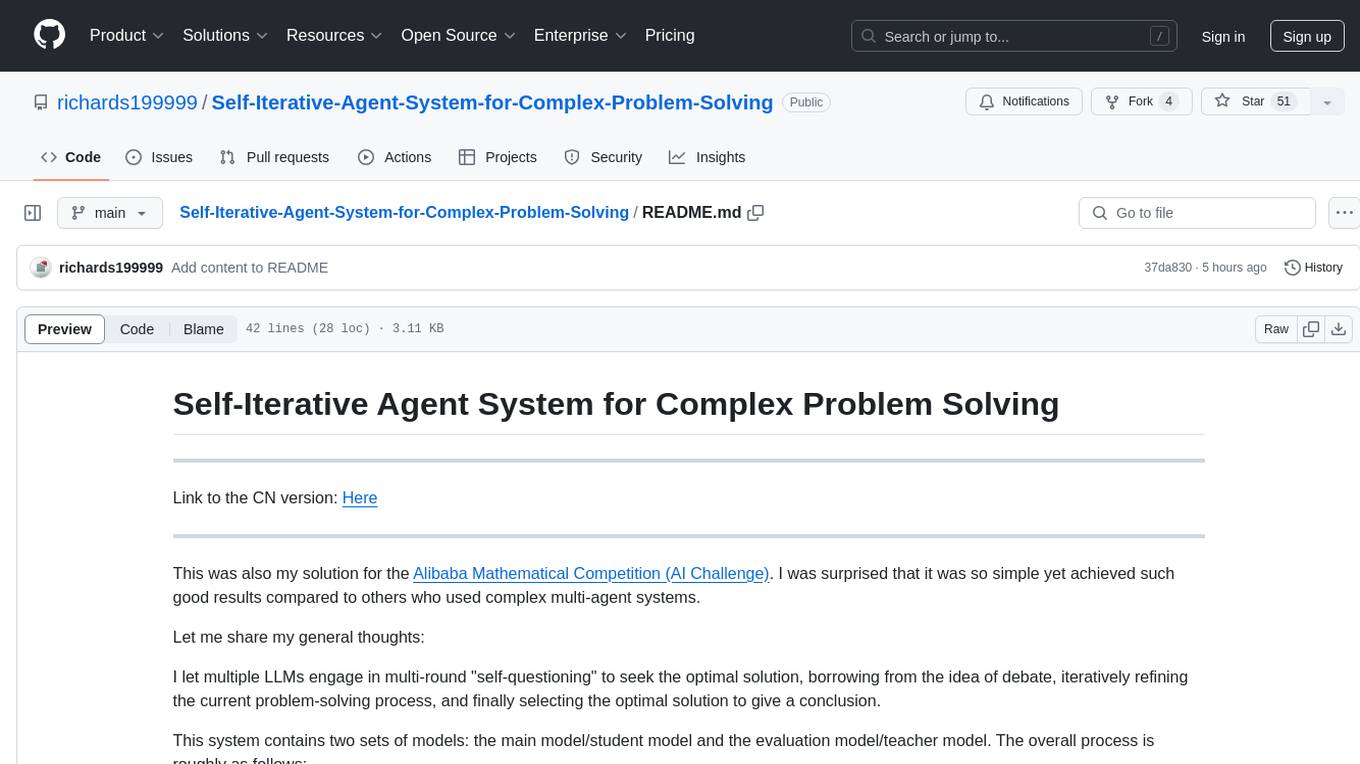

| PlanGEN: A Multi-Agent Framework for Generating Planning and Reasoning Trajectories for Complex Problem Solving. | PlanGEN is a multi-agent framework that enhances planning and reasoning in LLMs through constraint-guided iterative verification and adaptive algorithm selection. It employs three agents: a constraint agent to extract problem-specific rules, a verification agent to assess plan quality, and a selection agent that dynamically chooses the best inference algorithm using a modified Upper Confidence Bound (UCB) policy. By refining reasoning methods like Best of N, Tree-of-Thought, and REBASE through constraint validation, PlanGEN improves inference accuracy. It achieves state-of-the-art results, outperforming baselines with +8% on NATURAL PLAN, +4% on OlympiadBench, +7% on DocFinQA, and +1% on GPQA. |

| METAL: A Multi-Agent Framework for Chart Generation with Test-Time Scaling. | METAL is a vision-language model (VLM)-based multi-agent framework that improves automatic chart-to-code generation by breaking the task into specialized iterative steps. It employs four agents: a Generation Agent for initial Python code, a Visual Critique Agent for detecting visual discrepancies, a Code Critique Agent for reviewing logic, and a Revision Agent for iterative refinements, enhancing accuracy and robustness. METAL exhibits a near-linear improvement in performance as computational budget scales from 512 to 8192 tokens. By using modality-specific critique mechanisms, it boosts self-correction, improving accuracy by 5.16% in ablation studies. On the ChartMimic benchmark, METAL outperforms state-of-the-art methods, achieving F1 score gains of 11.33% with open-source models (Llama 3.2-11B) and 5.2% with closed-source models (GPT-4o). |

| LightThinker: Thinking Step-by-Step Compression. | LightThinker introduces a novel approach to dynamically compress reasoning steps in LLMs, enhancing efficiency without compromising accuracy. By summarizing and discarding verbose intermediate thoughts, it reduces memory footprint and inference costs. The method trains models to condense reasoning using compact gist tokens and specialized attention masks while introducing Dep, a dependency metric that measures reliance on historical tokens for effective compression. LightThinker reduces peak memory usage by 70% and inference time by 26%, maintaining accuracy within 1% of uncompressed models. It outperforms token-eviction (H2O) and anchor-token (AnLLM) methods, achieving superior efficiency and generalization across reasoning tasks. |

| What Makes a Good Diffusion Planner for Decision Making? | A large-scale empirical study of diffusion planning in offline reinforcement learning. |

| NotaGen sheet music generation. | By training an auto-regressive model to create sheet music, this team has developed an innovative text-to-music system that is frequently favored by human evaluators. |

| How far can we go with ImageNet for Text-to-Image generation? | Most text-to-image models rely on large amounts of custom-collected data scraped from the web. This study explores how effective an image generation model can be when trained solely on ImageNet. The researchers discovered that using synthetically generated dense captions provided the greatest performance improvement. |

| Self-rewarding Correction for Mathematical Reasoning. | This paper explores self-rewarding reasoning in LLMs, allowing models to autonomously generate reasoning steps, evaluate their accuracy, and iteratively improve their outputs without external feedback. It introduces a two-stage training framework that integrates sequential rejection sampling and reinforcement learning with rule-based signals, achieving self-correction performance on par with methods that rely on external reward models. |

| Enhanced Multi-Objective RL. | A reward dimension reduction method that improves learning efficiency in multi-objective reinforcement learning, allowing it to scale to more objectives than traditional approaches handle. |

| BodyGen: Advancing Towards Efficient Embodiment Co-Design. | BodyGen introduces topology-aware self-attention and a temporal credit assignment mechanism to improve the efficiency of co-designing robot morphology and control. |

| De novo designed proteins neutralize lethal snake venom toxins. | Deep learning methods have been used to design proteins that can neutralize the effects of three-finger toxins found in snake venom, which could lead to the development of safer and more accessible antivenom treatments. |
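The FFTNet entry in the table above describes token mixing done in the frequency domain: transform the sequence with an FFT, reweight the Fourier coefficients with a filter, apply a modReLU activation, and transform back. The sketch below illustrates that idea for a single feature channel using only the standard library. It is a minimal illustration, not the paper's implementation: the function names, the fixed `filter_weights` list (the paper describes a learnable, adaptive filter), and the power-of-two length restriction are all assumptions made for this example.

```python
import cmath
import math


def fft(values, inverse=False):
    """Recursive radix-2 FFT, O(n log n); length must be a power of two."""
    n = len(values)
    if n == 0 or n & (n - 1):
        raise ValueError("sequence length must be a power of two")
    if n == 1:
        return list(values)
    even = fft(values[0::2], inverse)
    odd = fft(values[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def ifft(values):
    """Inverse FFT: conjugate-sign transform followed by 1/n scaling."""
    n = len(values)
    return [v / n for v in fft(values, inverse=True)]


def mod_relu(z, bias):
    """modReLU: apply ReLU to (|z| + bias) and keep the phase of z."""
    magnitude = abs(z)
    if magnitude == 0.0:
        return 0j
    return max(magnitude + bias, 0.0) * (z / magnitude)


def spectral_mix(sequence, filter_weights, bias=0.0):
    """Mix one channel across positions by filtering its spectrum.

    filter_weights stands in for a learned filter (hypothetical here).
    Only the real part of the result is returned, which is a simplification.
    """
    if len(filter_weights) != len(sequence):
        raise ValueError("need one filter weight per frequency bin")
    spectrum = fft([complex(x) for x in sequence])
    filtered = [w * c for w, c in zip(filter_weights, spectrum)]
    activated = [mod_relu(c, bias) for c in filtered]
    return [v.real for v in ifft(activated)]
```

With an all-ones filter and zero bias the function returns its input unchanged, while a filter that keeps only the first (DC) coefficient replaces every position with the sequence mean. Every output position depends on every input position, which is how a spectral filter can carry global context without pairwise token comparisons.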

| Link | description |

|---|---|

| UK unions call for action to protect creative industry workers as AI develops. | TUC says proposals on copyright and AI framework must go further to stop exploitation by ‘rapacious tech bosses’ |

| Read the signs of Trump’s federal firings: AI is coming for private sector jobs too. | Dismissing 6,700 IRS workers during tax season is a recipe for chaos but AI’s disruption will be much more widespread |

| ‘I want him to be prepared’: why parents are teaching their gen Alpha kids to use AI. | As AI grows increasingly prevalent, some are showing their children tools from ChatGPT to Dall-E to learn and bond |

| Anthropic Partners with U.S. National Labs. | Anthropic took part in the U.S. Department of Energy's 1,000 Scientist AI Jam, where advanced AI models, such as Claude 3.7 Sonnet, were evaluated on scientific and national security issues. |

| DeepSeek releases revenue information. | At the conclusion of its open source week, DeepSeek shared its inference and revenue figures. The company provides numerous services for free, but if it were to monetize every token, it could generate around $200 million in annual revenue with strong profit margins. |

| Inception emerges from stealth with a new type of AI model. | Inception, a new Palo Alto-based company started by Stanford computer science professor Stefano Ermon, claims to have developed a novel AI model based on “diffusion” technology. Inception calls it a diffusion-based large language model, or a “DLM” for short. |

| Anthropic used Pokémon to benchmark its newest AI model. | In a blog post published Monday, Anthropic said that it tested its latest model, Claude 3.7 Sonnet, on the Game Boy classic Pokémon Red. The company equipped the model with basic memory, screen pixel input, and function calls to press buttons and navigate around the screen, allowing it to play Pokémon continuously. |

| OpenAI launches Sora video generation tool in UK amid copyright row. | ‘Sora would not exist without its training data,’ said peer Beeban Kidron, citing ‘another level of urgency’ to debate |

| Prioritise artists over tech in AI copyright debate, MPs say. | Cross-party committees urge ministers to drop plans to force creators to opt out of works being used to train AI |

| CoreWeave to Acquire Weights & Biases. | CoreWeave has revealed plans to acquire Weights & Biases for $1.7 billion. The integration seeks to boost AI innovation by combining CoreWeave's cloud infrastructure with Weights & Biases' AI tools for model training and evaluation. The acquisition is anticipated to close in the first half of 2025, pending regulatory approval. |

| Amazon is reportedly developing its own AI ‘reasoning’ model. | Amazon is developing an AI model that incorporates advanced “reasoning” capabilities, similar to models like OpenAI’s o3-mini and Chinese AI lab DeepSeek’s R1. The model may launch as soon as June under Amazon’s Nova brand, which the company introduced at its re:Invent developer conference last year. |

| UK universities warned to ‘stress-test’ assessments as 92% of students use AI. | Survey of 1,000 students shows ‘explosive increase’ in use of generative AI in particular over past 12 months |

| Warp launches AI-first terminal app for Windows. | Warp, backed by Sam Altman, is reinventing the command-line terminal, which has remained largely unchanged for almost 40 years. |

| The LA Times published an op-ed warning of AI’s dangers. It also published its AI tool’s reply. | ‘Insight’ labeled the argument ‘center-left’ and created a reply insisting AI will make storytelling more democratic |

| Anthropic raises Series E at $61.5B post-money valuation. | Anthropic secured $3.5 billion in funding at a $61.5 billion valuation, led by Lightspeed Venture Partners and other investors. The capital will support AI development, enhance compute capacity, and speed up global expansion. Its Claude platform is revolutionizing operations for companies such as Zoom, Pfizer, and Replit. |

| T-Mobile’s parent company is making an ‘AI Phone’ with Perplexity Assistant. | The Magenta AI push will also offer Perplexity and other AI apps for existing smartphones on T-Mobile. |

| On-Device Generative Audio with Stability AI & Arm. | Stability AI and Arm have introduced real-time generative audio for smartphones through Stable Audio Open and Arm KleidiAI libraries, achieving a 30x increase in audio generation speed on mobile devices. |

| AI to diagnose invisible brain abnormalities in children with epilepsy. | MELD Graph, an AI tool created by researchers at King's College London and UCL, identifies 64% of epilepsy-related brain abnormalities that are commonly overlooked by radiologists. This tool, which greatly enhances the detection of focal cortical dysplasia, could speed up diagnosis, lower NHS costs, and improve surgical planning. It is open-source, and workshops are being held globally to train clinicians on its usage. |

| Elon's Grok 3 AI Provides "Hundreds of Pages of Detailed Instructions" on Creating Chemical Weapons. | xAI's chatbot, Grok 3, initially offered detailed instructions on creating chemical weapons, sparking significant safety concerns. Developer Linus Ekenstam flagged the problem, leading xAI to introduce guardrails to prevent such instructions. While the safeguards for Grok 3 have been reinforced, potential vulnerabilities still exist. |

| Apple may be preparing Gemini integration in Apple Intelligence. | Apple is preparing to integrate Google's Gemini AI model into Apple Intelligence, as indicated by recent iOS 18.4 beta code changes. |

| Why OpenAI isn’t bringing deep research to its API just yet. | OpenAI says that it won’t bring the AI model powering deep research, its in-depth research tool, to its developer API while it figures out how to better assess the risks of AI convincing people to act on or change their beliefs. |

| Some British firms ‘stuck in neutral’ over AI, says Microsoft UK boss. | Survey of bosses and staff finds that more than half of executives feel their organisation has no official AI plan |

| Did xAI lie about Grok 3’s benchmarks? | This week, an OpenAI employee accused Elon Musk’s AI company, xAI, of publishing misleading benchmark results for its latest AI model, Grok 3. One of the co-founders of xAI, Igor Babuschkin, insisted that the company was in the right. The truth lies somewhere in between. |

| Quora’s Poe now lets users create and share custom AI-powered apps. | Called Poe Apps, the feature allows Poe users to describe the app they want to create in the new App Creator tool. Descriptions can include mentions of specific models they want the app to use — for example, OpenAI’s o3-mini or Google’s video-generating Veo 2 — or broader, more general specs. |

| Chegg sues Google over AI Overviews. | Chegg has filed an antitrust lawsuit against Google, alleging its AI summaries harmed Chegg's traffic and revenue. |

| Alibaba makes AI video generation model free to use globally. | Alibaba has open-sourced its Wan2.1 AI video generation models, intensifying competition with OpenAI. |

| OpenAI Introduces Next Gen AI. | OpenAI has launched Next Gen AI, a collection of advanced tools aimed at improving developers' efficiency in creating AI applications. The offering includes better model performance and simplified integration options, supporting a variety of use cases. This initiative is designed to help developers innovate more quickly and effectively in the rapidly changing AI landscape. |

| Sutton and Barto win the Turing Award. | The two have done years of groundbreaking research and education in Reinforcement Learning. |

| Perfect taps $23M to fix the flaws in recruitment with AI. | Israeli startup Perfect, which focuses on optimizing recruitment processes with proprietary AI, has raised $23 million in seed funding. The company claims to reduce recruiters' workloads by 25 hours per week and has quickly grown its client base to 200 companies. Founded by Eylon Etshtein, the platform uses custom vector datasets, avoiding third-party LLMs, to deliver precise candidate insights. |

| Meta in talks for $200 billion AI data center project, The Information reports. | Meta is in talks to build a $200 billion AI data center campus, with potential locations including Louisiana, Wyoming, or Texas. |

| ‘Major brand worries’: Just how toxic is Elon Musk for Tesla? | With sales down and electric vehicle rivals catching up, the rightwing politico’s brand is driving into a storm |

| Internet shutdowns at record high in Africa as access ‘weaponised’. | More governments seeking to keep millions of people offline amid conflicts, protests and political instability |

| Should scientists ditch the social-media platform X? | In recent months, many scientists have left X (formerly Twitter) for alternative social-media platforms such as Bluesky |

| ChatGPT for students: learners find creative new uses for chatbots. | The utility of generative AI tools is expanding far beyond simple summarisation and grammar support towards more sophisticated, pedagogical applications. |

| AI algorithm helps telescopes to pivot fast towards gravitational-wave sources. | Fast electromagnetic follow-up observations of gravitational-wave sources such as binary neutron stars could shed light on questions across physics and cosmology. A machine-learning approach brings that a step closer. |

| Google's AI Mode. | Although the naming may be a bit unclear, the product preview launch from Google highlights the direction they intend to take with AI-powered search systems. |

| Get to production faster with the upgraded Anthropic Console. | Anthropic has redesigned its console to simplify AI deployment with Claude models. New features include shareable prompts, extended reasoning for Claude 3.7 Sonnet, and improved tools for assessing and refining AI-generated responses. |

| Google co-founder Larry Page reportedly has a new AI startup. | Google co-founder Larry Page is building a new company called Dynatomics that’s focused on applying AI to product manufacturing. |

| Stanford teen develops AI-powered fire detection network. | A Stanford online high school student has developed a sensor that can detect a fire when it's little more than a spark, a technology that allows firefighters to deploy before the blaze gets out of control. |

| AI-generated review summaries are coming to Apple’s app store. | Apple is bringing AI-generated review summaries to the app store with iOS 18.4. As spotted by Macworld, the latest developer beta for iOS and iPadOS adds brief summaries of user reviews to some App Store listings. |

| Link | description |

|---|---|

| Claude 3.7 Sonnet. | Anthropic's Claude 3.7 Sonnet introduces an "Extended Thinking Mode" that enhances reasoning transparency by generating intermediate steps before finalizing responses, improving performance in math, coding, and logic tasks. Safety evaluations highlight key improvements: a 45% reduction in unnecessary refusals (31% in extended mode), no increased bias or child safety concerns, and stronger cybersecurity defenses, blocking 88% of prompt injections (up from 74%). The model exhibits minimal deceptive reasoning (0.37%) and significantly reduces alignment faking (to below 1%, down from 30%). It does not fully automate AI research, and although it shows improved reasoning and safety, it occasionally prioritizes passing tests over genuine problem-solving. |

| GPT-4.5. | OpenAI’s GPT-4.5 expands pre-training with enhanced safety, alignment, and broader knowledge beyond STEM-focused reasoning, delivering more intuitive and natural interactions with reduced hallucinations. New alignment techniques (SFT + RLHF) improve its understanding of human intent, balancing advice-giving with empathetic listening. Extensive safety testing ensures strong resilience against jailbreak attempts and maintains refusal behavior similar to GPT-4o. Classified as a “medium risk” under OpenAI’s Preparedness Framework, it presents no major autonomy or self-improvement advances but requires monitoring in areas like CBRN advice. With multilingual gains and improved accuracy, GPT-4.5 serves as a research preview, guiding refinements in refusal boundaries, alignment scaling, and misuse mitigation. |

| A Systematic Survey of Automatic Prompt Optimization Techniques. | This paper provides an in-depth review of Automatic Prompt Optimization (APO), outlining its definition, introducing a unified five-part framework, classifying current approaches, and examining advancements and challenges in automating prompt engineering for LLMs. |

| Protein Large Language Models: A Comprehensive Survey. | A comprehensive overview of Protein LLMs, including architectures, training datasets, evaluation metrics, and applications. |

| Robust RLHF with Preference as Reward. | A structured investigation into reward shaping in RLHF resulted in Preference As Reward (PAR), a technique that leverages latent preferences to improve alignment, boost data efficiency, and reduce reward hacking, surpassing current methods across several benchmarks. |

| HVI Color Space. | The introduction of a new color space, Horizontal/Vertical-Intensity (HVI), together with the CIDNet model, greatly minimizes color artifacts and enhances image quality in low-light conditions. |

| Enhanced Multimodal Correspondence. | ReCon presents a dual-alignment learning framework designed to enhance the accuracy of multimodal correspondence by ensuring consistency in both cross-modal and intra-modal relationships. |

| Model Pre-Training on Limited Resources. | This study, through benchmarking on various academic GPUs, shows that models such as Pythia-1B can be pre-trained in significantly fewer GPU-days compared to traditional methods. |

| VoiceRestore: Flow-Matching Transformers for Speech Recording Quality Restoration. | VoiceRestore is an advanced tool for restoring and enhancing speech recordings using deep learning aimed at improving clarity and removing noise. |

| uv and Ray in clusters. | Ray now offers native support for automatic dependency installation using the Python package management tool, uv. |

| Prime Intellect raises $15m. | Prime Intellect, a distributed computing firm, has secured more funding to advance its distributed training approach. |

| UniTok: A Unified Tokenizer for Visual Generation and Understanding. | This paper tackles the representational gap between visual generation and understanding by presenting UniTok, a discrete visual tokenizer that encodes both detailed generation information and semantic content for understanding, overcoming capacity limitations of discrete tokens. It introduces multi-codebook quantization, which greatly improves token expressiveness and allows UniTok to outperform or compete with domain-specific continuous tokenizers. |

| Dynamic Sparse Attention for LLMs. | FlexPrefill adaptively modifies sparse attention patterns and computational resources for more efficient LLM inference. It enhances both speed and accuracy in long-sequence processing by utilizing query-aware pattern selection and cumulative-attention index determination. |

| LightningDiT. | LightningDiT aligns latent spaces with vision models to address challenges in diffusion models. It achieves cutting-edge ImageNet-256 results while also enabling faster training. |

| Llama Stack: from Zero to Hero. | Llama Stack defines and standardizes the essential building blocks required to bring generative AI applications to market. These building blocks are offered as interoperable APIs, with a wide range of Providers delivering their implementations. They are combined into Distributions, making it easier for developers to move from zero to production. |

| Google AI Recap in February. | Here’s a summary of some of Google’s major AI updates from February, including the public launch of Gemini 2.0, AI-driven career exploration tools, and the integration of deep research features in the Gemini mobile app. |

| Workers' experience with AI chatbots in their jobs. | Most workers seldom use AI chatbots in the workplace, with usage mainly concentrated among younger, more educated employees who primarily use them for research and content editing. |

| Cohere's Vision Model. | Cohere For AI has launched Aya Vision, a vision model aimed at improving AI's multilingual and multimodal capabilities. It supports 23 languages. |

| DiffRhythm: Blazingly Fast and Embarrassingly Simple End-to-End Full-Length Song Generation with Latent Diffusion. | Latent diffusion for generating full-length songs shows promising results, though not on par with the best closed models. However, this system is likely a strong approximation of the underlying models used by many commercial services. |

| VideoUFO: A Million-Scale User-Focused Dataset for Text-to-Video Generation. | This dataset was designed to have minimal overlap with existing video datasets, while featuring themes and actions relevant to users training models for final video synthesis and understanding. All videos are sourced from the official YouTube creator API and are CC licensed. |

| Lossless Acceleration of Ultra Long Sequence Generation. | A framework designed to significantly speed up the generation process of ultra-long sequences, up to 100K tokens, while preserving the target model's inherent quality. |

| Action Planner for Offline RL. | L-MAP enhances sequential decision-making in stochastic, high-dimensional continuous action spaces by learning macro-actions using a VQ-VAE model. |

| VARGPT: Unified Understanding and Generation in a Visual Autoregressive Multimodal Large Language Model. | VARGPT is a multimodal large language model (MLLM) that integrates visual understanding and generation into a single autoregressive framework. |

| QwQ-32B: Embracing the Power of Reinforcement Learning. | The Qwen team has trained an open-weight, Apache 2.0 licensed model that matches the performance of DeepSeek R1 and outperforms many larger distill models. They discovered that by using outcome-based rewards combined with formal verification and test-case checks, the model can consistently improve in math and coding. Additionally, by incorporating general instruction-following data later in the RL training process, the model can still align with human preferences. |

| PipeOffload: Improving Scalability of Pipeline Parallelism with Memory Optimization. | Pipeline Parallelism is an effective strategy for sharding models across multiple GPUs, but it requires significant memory. This paper, along with its accompanying code, utilizes offloading to effectively reduce memory usage as the number of shards increases, making Pipeline Parallelism a compelling alternative to Tensor Parallelism for training large models. |

| Layout-to-Image Generation. | ToLo introduces a two-stage, training-free layout-to-image framework designed for high-overlap layouts. |

| Infrared Image Super-Resolution. | DifIISR improves infrared image super-resolution by using diffusion models, incorporating perceptual priors and thermal spectrum regulation to enhance both visual quality and machine perception. |

| Spark Text To Speech. | A robust voice cloning text-to-speech model built on Qwen, which supports emotive prompting alongside text input. Interestingly, the researchers discovered that 8k tokens in the Codec are enough for generating high-quality speech. |

| High-Quality Audio Compression. | FlowDec is a full-band audio codec that utilizes conditional flow matching and non-adversarial training to achieve high-fidelity 48 kHz audio compression. |

| Simplifying 3D Generation. | Kiss3DGen adapts 2D diffusion models for efficient 3D object generation, using multi-view images and normal maps to produce high-quality meshes and textures. |

| Beating Pokemon Red with 10m parameters and RL. | With the excitement surrounding Claude playing Pokemon, this blog post is particularly timely. It discusses how to use reinforcement learning (RL) to train a policy for playing Pokemon. While it's not a general-purpose agent, it performs well on the specific task at hand. |

| How to Build Your Own Software with AI, No Experience Necessary. | A tech enthusiast leveraged AI tools like ChatGPT and Claude to successfully create various apps, including podcast transcription tools and task managers, despite being a hobbyist. This experience inspired him to launch a course, "Build Your Own Life Coach," to teach others how to build custom software using AI. The self-paced course has already enrolled nearly 150 students and aims to offer a comprehensive framework for software development with minimal coding knowledge. |

| Thunder MLA. | The Hazy Research team from Stanford has published a post and code for their ThunderKittens-enabled Multi-head Latent Attention implementation, which is approximately 30% faster than the official DeepSeek implementation. |

| Multi-view Network for Stereo 3D Reconstruction. | MUSt3R is a scalable multi-view extension of the DUSt3R framework that improves stereo 3D reconstruction from arbitrary image collections. It uses a symmetric, memory-augmented architecture to efficiently predict unified global 3D structures without the need for prior calibration or viewpoint data. |

| Efficient Reinforcement Learning for Robotics. | DEMO³ introduces a demonstration-augmented reinforcement learning framework that enhances data efficiency in long-horizon robotic tasks by utilizing multi-stage dense reward learning and world model training. |

| Open Multilingual Large Language Models Serving Over 90% of Global Speakers. | Babel is a multilingual large language model (LLM) that supports 25 of the most spoken languages, covering 90% of the global population. It increases its parameter count using a layer extension technique, boosting its performance potential. |

| Contrastive Sparse Representation. | CSR optimizes sparse coding for adaptive representation, cutting computational costs while enhancing retrieval speed and accuracy across various benchmarks. |

| Structural Cracks Segmentation. | SCSegamba is a lightweight segmentation model for structural cracks that integrates gated bottleneck convolution and a scanning strategy to improve accuracy while keeping computational overhead minimal. |

| Emilia: An Extensive, Multilingual, and Diverse Speech Dataset for Large-Scale Speech Generation. | A speech dataset with high-quality and diverse data. |

| Cohere Launches Aya Vision - A Multimodal AI Model for Content Creation. | Cohere's Aya Vision is a multimodal AI model that combines text and image understanding capabilities. This innovative model is crafted to improve content creation and analysis, allowing users to generate and interpret multimedia content more efficiently. Aya Vision seeks to expand the possibilities of AI-driven content generation and interaction. |

| Exa makes AI search tool generally available. | AI research lab Exa has made its search tool, Websets, available for public use. |
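The UniTok entry in the table above mentions multi-codebook quantization as the way to make discrete tokens more expressive. A rough sketch of the general idea: split a feature vector into equal chunks and quantize each chunk against its own small codebook. This is an illustration under stated assumptions rather than UniTok's code: the function names, the toy codebooks, and the squared-L2 nearest-neighbour lookup are all chosen for this example.

```python
def nearest_code(chunk, codebook):
    """Return the index of the codebook entry closest to chunk (squared L2)."""
    def distance(code):
        return sum((a - b) ** 2 for a, b in zip(chunk, code))
    return min(range(len(codebook)), key=lambda i: distance(codebook[i]))


def multi_codebook_quantize(vector, codebooks):
    """Quantize each chunk of vector with its own codebook.

    Returns (indices, reconstruction): one index per codebook and the
    concatenation of the selected code vectors. Hypothetical helper,
    written only for illustration.
    """
    num_books = len(codebooks)
    if num_books == 0 or len(vector) % num_books:
        raise ValueError("vector length must be a multiple of the codebook count")
    width = len(vector) // num_books
    indices, reconstruction = [], []
    for b, codebook in enumerate(codebooks):
        chunk = vector[b * width:(b + 1) * width]
        idx = nearest_code(chunk, codebook)
        indices.append(idx)
        reconstruction.extend(codebook[idx])
    return indices, reconstruction


# Two toy codebooks with two 2-dimensional entries each.
codebooks = [
    [[0.0, 0.0], [1.0, 0.0]],
    [[-1.0, 0.0], [0.0, 1.0]],
]
indices, approx = multi_codebook_quantize([0.9, 0.1, -1.2, 0.4], codebooks)
print(indices, approx)
```

The appeal of this layout is combinatorial: n codebooks with K entries each can represent K^n distinct token combinations while each lookup only searches K entries.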

| Link | description |

|---|---|

| If the best defence against AI is more AI, this could be tech’s Oppenheimer moment. | An unsettling new book advocates a closer relationship between Silicon Valley and the US government to harness artificial intelligence in the name of national security |

| Perplexity wants to reinvent the web browser with AI—but there’s fierce competition. | Perplexity has unveiled its new browser, Comet, which looks to rival Google Chrome. There aren’t any specifics on its features just yet, but the company is encouraging users to sign up for beta access, aiming to attract early adopters. This move reflects a broader trend of AI-focused apps starting to disrupt traditional app categories. Should be interesting to see how this shapes up! |

| Will AI agents replace SaaS? | AI agents may complement, but not fully replace, SaaS platforms, as these platforms still rely on a strong infrastructure for data and functionality. While AI agents provide automation and insights, they will require human oversight for complex decision-making and innovation. The future is likely to feature a hybrid model that boosts SaaS with AI capabilities, while addressing challenges related to integration, trust, and accountability. |

| Sofya Uses Llama for Healthcare AI. | Sofya uses Llama models to optimize medical AI workflows, enhancing efficiency and reducing administrative burdens for healthcare providers in Latin America. |

| Who bought this smoked salmon? How ‘AI agents’ will change the internet (and shopping lists). | Autonomous digital assistants are being developed that can carry out tasks on behalf of the user – including ordering the groceries. But if you don’t keep an eye on them, dinner might not be quite what you expect … |

| Skype got shouted down by Teams and Zoom. But it revolutionised human connection. | The company that pioneered voice communication over the internet has withered to dust in Microsoft’s hands. Still, I for one am grateful for it |

| Trump 2.0: an assault on science anywhere is an assault on science everywhere. | US President Donald Trump is taking a wrecking ball to science and to international institutions. The global research community must take a stand against these attacks. |

| How much energy will AI really consume? The good, the bad and the unknown. | Researchers want firms to be more transparent about the electricity demands of artificial intelligence. |

| Train clinical AI to reason like a team of doctors. | As the European Union’s Artificial Intelligence Act takes effect, AI systems that mimic how human teams collaborate can improve trust in high-risk situations, such as clinical medicine. |

| AI hallucinations are a feature of LLM design, not a bug. | Your news feature outlines how designers of large language models (LLMs) struggle to stop them from hallucinating. But AI confabulations are integral to how these models work. They are a feature, not a bug. |

| AI must be taught concepts, not just patterns in raw data. | Data-driven learning is central to modern artificial intelligence (AI). But in some cases, knowledge engineering — the formal encoding of concepts using rules and definitions — can be superior. |

| The Challenges and Upsides of Using AI in Scientific Writing. | AI's transformative impact on scientific writing is clear, but it must be balanced with preserving research integrity. Stakeholders call for ethical guidelines, AI detection tools, and global collaboration to ensure AI enhances rather than compromises scholarship. By focusing on transparency and accountability, the scientific community can responsibly integrate AI while maintaining scholarly standards. |

| Link | description |

|---|---|

| LightThinker: Thinking Step-by-Step Compression. | This work seeks to compress lengthy reasoning traces into more concise and compact representations, saving context space while maintaining effectiveness in guiding the model. |

| Uncertainty in Neural Networks. | DeepMind researchers introduce Delta Variances, a set of algorithms aimed at efficiently estimating epistemic uncertainty in large neural networks. |

| SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering? | OpenAI researchers introduce SWE-Lancer, a benchmark evaluating LLMs on 1,488 real-world freelance software engineering tasks, valued at $1M. Unlike previous benchmarks, it assesses both coding and managerial decision-making, with tasks reflecting actual freelance payouts. Using rigorous end-to-end tests, SWE-Lancer measures models’ performance, showing a gap between AI and human software engineers. The best model, Claude 3.5 Sonnet, solved only 26.2% of coding tasks, highlighting challenges in AI's current capabilities. Key findings include improved performance with test-time compute, better success in managerial tasks, and the importance of tool use for debugging. |

| Advancing game ideation with Muse: the first World and Human Action Model (WHAM). | Microsoft Research has launched "Muse," an AI model designed to generate video game visuals and gameplay sequences. Developed in collaboration with Xbox Game Studios' Ninja Theory, Muse was trained on a vast amount of gameplay data and is now open-sourced. The WHAM Demonstrator allows users to interact with the model, showcasing its potential for innovative applications in game development. |

| Towards an AI co-scientist. | Google has introduced the AI co-scientist, a multi-agent system powered by Gemini 2.0, designed to accelerate scientific breakthroughs. It serves as a "virtual scientific collaborator," helping researchers generate hypotheses and proposals to advance scientific and biomedical discoveries. Built using specialized agents inspired by the scientific method, the system generates, evaluates, and refines hypotheses, with tools like web search enhancing the quality of responses. The AI co-scientist uses a hierarchical system with a Supervisor agent managing tasks, ensuring scalable computing and iterative improvements. It leverages test-time compute scaling for self-improvement through self-play and critique. Performance is measured with an Elo-based auto-evaluation metric, which correlates strongly with accuracy. Given more reasoning time, the system outperforms other models and unassisted human experts, and it is seen as having significant potential for impactful discoveries. |

| The AI CUDA Engineer. | Sakana AI has developed The AI CUDA Engineer, an automated system for creating and optimizing CUDA kernels. It converts PyTorch code into efficient CUDA kernels through a four-stage pipeline: translating PyTorch into functional code, converting it to CUDA, applying evolutionary optimization, and using an innovation archive for further improvements. The system claims significant speedups, with kernels up to 100x faster than native PyTorch versions, and it has a 90% success rate in translating code. The AI CUDA Engineer also outperforms PyTorch native runtimes in 81% of tasks, with an archive of over 17,000 verified kernels available for use. |

| Native Sparse Attention: Hardware-Aligned and Natively Trainable Sparse Attention. | DeepSeek-AI introduces Native Sparse Attention (NSA), a novel mechanism designed to improve efficiency in long-context language modeling while maintaining performance. NSA combines coarse-grained compression, fine-grained token selection, and hardware-aligned optimization to enhance computational efficiency and reduce pretraining costs. It outperforms full attention on benchmarks, achieves up to 11.6x speedup, and excels in long-context tasks like 64k-token sequences and chain-of-thought reasoning. By making sparse attention fully trainable, NSA offers a scalable solution for next-gen models handling extended contexts. |

| Large Language Diffusion Models. | LLaDA, a diffusion-based model, challenges the dominance of autoregressive large language models (LLMs) by demonstrating competitive performance in various tasks. Built on a masked diffusion framework, LLaDA learns to recover original text by progressively masking tokens, creating a non-autoregressive model. Trained on 2.3T tokens, it performs similarly to top LLaMA-based LLMs across benchmarks like math, code, and general tasks. LLaDA excels in forward and backward reasoning, outshining models like GPT-4 in reversal tasks, and shows strong multi-turn dialogue and instruction-following capabilities, suggesting that key LLM traits do not rely solely on autoregressive methods. |

| Optimizing Model Selection for Compound AI Systems. | Microsoft Research introduces LLMSelector, a framework that enhances multi-call LLM pipelines by selecting the best model for each module. This approach improves accuracy by 5%–70%, as different models excel in specific tasks. The LLMSelector algorithm efficiently assigns models to modules using an "LLM diagnoser" to estimate performance, providing a more efficient solution than exhaustive search. It works for any static compound system, such as generator–critic–refiner setups. |

| The Danger of Overthinking: Examining the Reasoning-Action Dilemma in Agentic Tasks. | This paper explores overthinking in Large Reasoning Models (LRMs), where models prioritize internal reasoning over real-world interactions, leading to reduced task performance. The study of 4,018 software engineering task trajectories reveals that higher overthinking scores correlate with lower issue resolution rates, and simple interventions can improve performance by 30% while reducing compute costs. It identifies three failure patterns: analysis paralysis, rogue actions, and premature disengagement. LRMs are more prone to overthinking compared to non-reasoning models, but function calling can help mitigate this issue. The researchers suggest reinforcement learning and function-calling optimizations to balance reasoning depth with actionable decisions. |

| Inner Thinking Transformer. | The Inner Thinking Transformer (ITT) improves reasoning efficiency in small-scale LLMs through dynamic depth scaling, addressing parameter bottlenecks without increasing model size. ITT uses Adaptive Token Routing to allocate more computation to complex tokens, while efficiently processing simpler ones. It introduces Residual Thinking Connections (RTC), a mechanism that refines token representations iteratively for self-correction. Achieving 96.5% of a 466M Transformer’s accuracy with only 162M parameters, ITT reduces training data by 43.2% and outperforms loop-based models across 11 benchmarks. Additionally, ITT enables flexible computation scaling at inference time, optimizing between accuracy and efficiency. |

| Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs. | The authors of this paper present an unsettling result from alignment, showing that tuning a model to generate insecure code leads to broad misalignment with user intent, and in some cases, causes the model to actively produce harmful content. |
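
The per-module model selection described in the LLMSelector entry above can be illustrated with a minimal sketch. It assumes a hypothetical `estimate` function standing in for the "LLM diagnoser", and uses a simple module-by-module search rather than the paper's exact algorithm; the module names, model names, and scores are made up for illustration.

```python
from typing import Callable, Dict, List

Assignment = Dict[str, str]  # module name -> model name


def select_models(
    modules: List[str],
    models: List[str],
    estimate: Callable[[Assignment], float],
    rounds: int = 3,
) -> Assignment:
    """Pick one model per module by improving one module at a time.

    `estimate` is a hypothetical stand-in for a performance estimator:
    it scores a full assignment without running the whole pipeline.
    """
    # Start with the first model everywhere, then refine module by module.
    assignment = {module: models[0] for module in modules}
    for _ in range(rounds):
        changed = False
        for module in modules:
            best = max(
                models,
                key=lambda mdl: estimate({**assignment, module: mdl}),
            )
            if best != assignment[module]:
                assignment[module] = best
                changed = True
        if not changed:
            break  # no module wants to switch: stop early
    return assignment


# Toy scores (invented): how well each model does on each module.
SKILL = {
    ("generator", "model_a"): 0.60, ("generator", "model_b"): 0.80,
    ("critic", "model_a"): 0.90, ("critic", "model_b"): 0.50,
    ("refiner", "model_a"): 0.70, ("refiner", "model_b"): 0.75,
}


def toy_estimate(assignment: Assignment) -> float:
    # Average per-module skill; a real estimator would be far less simple.
    return sum(SKILL[(m, mdl)] for m, mdl in assignment.items()) / len(assignment)


chosen = select_models(
    ["generator", "critic", "refiner"], ["model_a", "model_b"], toy_estimate
)
print(chosen)
```

Each round evaluates `len(modules) * len(models)` assignments, whereas exhaustive search evaluates `len(models) ** len(modules)`, which is the efficiency gap the entry alludes to.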

| Link | description |

|---|---|

| OpenAI plans to shift compute needs from Microsoft to SoftBank. | OpenAI is forecasting a major shift in the next five years around who it gets most of its computing power from, The Information reported on Friday. By 2030, OpenAI expects to get three-quarters of its data center capacity from Stargate, a project that’s expected to be heavily financed by SoftBank, one of OpenAI’s newest financial backers. |

| Meta's DINOv2 for Cancer Research. | Orakl Oncology utilizes Meta's DINOv2 model to speed up cancer drug discovery, enhancing efficiency by rapidly assessing organoid images to forecast patient treatment outcomes. |

| DeepSeek to open source parts of online services code. | Chinese AI lab DeepSeek plans to open source portions of its online services’ code as part of an “open source week” event next week. DeepSeek will open source five code repositories that have been “documented, deployed and battle-tested in production,” the company said in a post on X on Thursday. |

| Microsoft prepares for OpenAI’s GPT-5 model. | Microsoft is set to host OpenAI's GPT-4.5 model as soon as next week, with the more substantial GPT-5 release expected by late May. The GPT-5 system will incorporate OpenAI's new o3 reasoning model, aiming to unify AI capabilities. Both releases coincide with major tech events like Microsoft Build and Google I/O, highlighting Microsoft's strategic role in the AI sector. |

| ChatGPT reaches 400M weekly active users. | ChatGPT has achieved 400 million weekly active users, doubling its user base since August 2024. |

| Claude 3.7 Sonnet and Claude Code. | Claude 3.7 Sonnet is Anthropic's newest hybrid reasoning model. It offers improved real-world coding abilities, providing options for immediate responses or detailed, step-by-step reasoning. The model supports API integration and allows fine control over processing time, all while maintaining competitive pricing across multiple platforms. |

| Meta AI Expands to the Middle East. | Meta AI is now accessible in Arabic across Facebook, Instagram, WhatsApp, and Messenger in 10 MENA countries. Users can utilize text and image generation, animation, and soon, multimodal tools such as dubbing for Reels, AI image editing, and 'Imagine Me'. |

| Apple's $500B US Investment. | Apple intends to invest $500 billion in U.S. manufacturing, engineering, and education over the next four years. Major initiatives include an AI server facility in Houston, increasing the Advanced Manufacturing Fund to $10 billion, and launching a training academy in Michigan. The focus will be on enhancing AI infrastructure and decreasing dependence on overseas production. |

| Patlytics Raises $14M for AI-Driven Patent Analytics. | Patlytics, based in New York, has created an AI-driven platform designed to streamline patent workflows, covering discovery, analytics, prosecution, and litigation. |

| Nvidia helps launch AI platform for teaching American Sign Language. | Nvidia has unveiled a new AI platform for teaching people how to use American Sign Language to help bridge communication gaps. |

| OpenAI Deep Research Available to Paying Users. | OpenAI has made deep research available to paying ChatGPT users and outlined its safety protocols in a system card. These include external red teaming, risk evaluations, and key mitigations to ensure the system's safety. |

| Claude's Extended Thinking Mode. | Anthropic's extended thinking mode, introduced in Claude 3.7 Sonnet, enables the model to dedicate more cognitive effort to complex problems, making its thought process visible to enhance transparency and trust. |

| Qatar signs deal with Scale AI to use AI to boost government services. | Qatar has signed a five-year agreement with Scale AI to implement AI tools aimed at improving government services, with a focus on predictive analytics and automation. Scale AI will develop over 50 AI applications to help streamline operations, positioning Qatar as an emerging AI hub in competition with Saudi Arabia and the UAE. |

| Rabbit shows off the AI agent it should have launched with. | Watch Rabbit’s AI agent, but not the Rabbit R1, do things in Android apps. |

| Google Cloud launches first Blackwell AI GPU-powered instances. | Google Cloud has introduced A4X VMs, powered by Nvidia's GB200 NVL72 systems, which feature 72 B200 GPUs and 36 Grace CPUs. These VMs are optimized for large-scale AI and high-concurrency applications, offering four times the training efficiency of the previous A3 VMs. Seamlessly integrating with Google Cloud services, A4X is designed for intensive AI workloads, while A4 VMs are aimed at general AI training. |

| Scientists took years to solve a problem that AI cracked in two days. | Google's AI co-scientist system replicated ten years of antibiotic-resistant superbug research in just two days, generating additional plausible hypotheses. |

| Don’t gift our work to AI billionaires: Mark Haddon, Michael Rosen and other creatives urge government. | More than 2,000 cultural figures challenge Whitehall’s eagerness ‘to wrap our lives’ work in attractive paper for automated competitors’ |

| Amazon's Alexa+. | Amazon has launched Alexa+, an upgraded version of its voice assistant. Powered by generative AI, Alexa+ is smarter and more conversational. |

| ElevenLabs' Speech-to-Text. | ElevenLabs is launching its transcription model, Scribe, which supports 99 languages with high accuracy, word-level timestamps, speaker diarization, and adaptability to real-world audio. |

| Grok 3 appears to have briefly censored unflattering mentions of Trump and Musk. | Elon Musk's Grok 3 AI model briefly censored mentions of Donald Trump and Musk in misinformation queries but reverted after user feedback. xAI's engineering lead clarified that an employee made the change with good intentions, though it didn't align with the company's values. Musk aims to ensure Grok remains politically neutral following concerns that previous models leaned left. |

| QWQ Max Preview. | Qwen has previewed a reasoning model that delivers strong performance in math and code. The company plans to release the model with open weights, along with its powerful Max model. |

| Claude AI Powers Alexa+. | Anthropic's Claude AI is now integrated into Alexa+ through Amazon Bedrock, boosting its capabilities while ensuring robust safety protections against jailbreaking and misuse. |

| Charta Health raises $8.1 million. | Charta Health secured $8.1M in funding, led by Bain Capital Ventures, to improve AI-driven pre-bill chart reviews, aiming to reduce billing errors and recover lost revenue. |

| FLORA launches Cursor for Creatives. | FLORA is the first AI-powered creative workflow tool built for creative professionals to 10x their creative output. |

| Google’s new AI video model Veo 2 will cost 50 cents per second. | According to the company’s pricing page, using Veo 2 will cost 50 cents per second of video, which adds up to $30 per minute or $1,800 per hour. |

| OpenAI announces GPT-4.5, warns it’s not a frontier AI model. | OpenAI has released GPT-4.5 as a research preview for ChatGPT Pro users. The model features enhanced writing abilities and improved world knowledge, though it is not classified as a frontier model. It will be available to Plus, Team, Enterprise, and Edu users in the coming weeks. |

| Meta is reportedly planning a stand-alone AI chatbot app. | Meta reportedly plans to release a stand-alone app for its AI assistant, Meta AI, in a bid to better compete with AI-powered chatbots like OpenAI’s ChatGPT and Google’s Gemini. |

| Aria gen 2. | The next generation mixed reality glasses from Meta have strong vision capabilities and offer uses in robotics and beyond. |

| Anthropic's Claude 3.7 Sonnet hybrid reasoning model is now available in Amazon Bedrock. | Amazon Bedrock now includes Anthropic's Claude 3.7 Sonnet, their first hybrid reasoning model designed for enhanced coding and problem-solving capabilities. |

| Elon Musk's AI Company Tried to Recruit an OpenAI Engineer and His Reply Was Brutal. | OpenAI's Javier Soto rejected a recruitment offer from Elon Musk's xAI, criticizing Musk's rhetoric as harmful to democracy. |

| Microsoft scraps some data center leases as Apple, Alibaba double down on AI. | Microsoft has canceled data center leases totaling 200 megawatts, indicating possibly lower-than-expected AI demand, while reaffirming its $80 billion investment in AI infrastructure through 2025. |
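
The Veo 2 pricing figures in the entry above follow from the quoted per-second rate. A minimal check, assuming a flat 50 cents per second with no tiers or discounts (the function name is illustrative only):

```python
PRICE_PER_SECOND_USD = 0.50  # rate quoted in the Veo 2 entry


def veo2_cost_usd(seconds: float) -> float:
    """Cost of generating `seconds` of video at a flat per-second rate."""
    return seconds * PRICE_PER_SECOND_USD


per_minute = veo2_cost_usd(60)      # one minute of video
per_hour = veo2_cost_usd(60 * 60)   # one hour of video
print(per_minute, per_hour)
```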

| Link | description |

|---|---|

| SigLIP 2: Multilingual Vision-Language Encoders. | SigLIP was a highly popular joint image and text encoder model. It has now been enhanced in several areas, with the most significant improvement being a considerable boost in zero-shot classification performance, which was the key achievement of the original CLIP work. |

| STeCa: Step-level Trajectory Calibration for LLM Agent Learning. | STeCa is an innovative framework created to enhance LLM agents in long-term tasks by automatically detecting and correcting inefficient actions. |

| GemmaX2 Translation Model. | Using advanced post-training methods, this 2B model trained on Gemma delivers cutting-edge translation performance across 28 languages. |

| Moonlight 16B Muon trained model. | This is the first publicly available large-scale model trained with the Muon optimizer. It was trained on 5.7T tokens and shares a very similar architecture with DeepSeek v3. |

| Triton implementation of Native Sparse Attention. | The DeepSeek NSA paper garnered attention last week for its scalable and efficient long-context attention algorithm. However, it did not include any code. This work offers a Triton replication that can be incorporated into any PyTorch codebase. |

| OmniServe. | OmniServe provides a comprehensive framework for efficient large-scale LLM deployment, integrating advancements in low-bit quantization and sparse attention to improve both speed and cost-efficiency. |

| Introduction to CUDA Programming for Python Developers. | A great introduction to CUDA programming for those familiar with Python programming. |

| Various approaches to parallelizing Muon. | Various novel strategies to parallelize the up-and-coming Muon optimizer. |

| Cast4 single image to 3d scene. | Generating a complete 3D scene from a single RGB image is a complex task. This approach introduces an algorithm that provides reliable estimates for indoor scenes by employing a sophisticated series of estimation and semantic inference techniques. |

| DeepSeek FlashMLA. | DeepSeek is doing a week of open sourcing some of its internal infrastructure. This great kernel for MLA is the first release. |

| Mixture of Block Attention for Long Context LLMs. | Moonshot presents Mixture of Block Attention, an algorithm similar to NSA that enables more efficient long-context language modeling. |

| Sequential Recommendations with LLM-SRec. | LLM-SRec enhances recommendation systems by incorporating sequential user behavior into LLMs without the need for fine-tuning, establishing a new benchmark in recommendation accuracy. |

| Place Recognition for Mobile Robots. | Text4VPR connects vision and language for mobile robots, allowing them to recognize places using only textual descriptions. |

| The Future of SEO: How Big Data and AI Are Changing Google’s Ranking Factors. | AI and big data are revolutionizing SEO by emphasizing quality and relevance rather than traditional methods like keyword stuffing. Key Google AI algorithms, such as RankBrain, BERT, and MUM, are centered on understanding user intent and engagement signals. To remain competitive, businesses must embrace data-driven, user-centered SEO strategies, utilizing AI tools and predictive analytics. |

| Open-Reasoner-Zero. | Open-Reasoner-Zero (ORZ) is an open-source minimalist reinforcement learning framework that enhances reasoning abilities and outperforms DeepSeek-R1-Zero-Qwen-32B on GPQA Diamond with far fewer training steps. Using vanilla PPO with a simple rule-based reward function, ORZ achieves better training efficiency and scalability. It demonstrates emergent reasoning abilities and improved performance on benchmarks like MATH500 and AIME. Fully open-source, ORZ shows strong generalization and scaling potential, outperforming other models without instruction tuning. |

| Flux LoRA collection. | XLabs has trained a number of useful LoRAs on top of the powerful Flux model. The most popular is the realism model. |

| Embodied Evaluation Benchmark. | EmbodiedEval is a comprehensive and interactive benchmark designed to evaluate the capabilities of MLLMs in embodied tasks. |

| Implementing Character AI Memory Optimizations in NanoGPT. | This blog post explains how Character AI reduced KV cache usage in its large-scale inference systems, demonstrating the implementation in a minimal GPT model version. The approach achieves a 40% reduction in memory usage. |

| R1-Onevision: An Open-Source Multimodal Large Language Model Capable of Deep Reasoning. | R1-OneVision is a powerful multimodal model designed for complex visual reasoning tasks. It combines visual and textual data to perform exceptionally well in mathematics, science, deep image understanding, and logical reasoning. |

| Gaze estimation built on DINOv2. | This code and model suite offers efficient estimations of where people are looking, making it useful for applications in commerce, manufacturing, and security. |

| LightningDiT: A Powerful Diffusion Toolkit. | LightningDiT is an efficient and modular diffusion model toolkit designed for scalable and versatile generative AI applications. |

| Minions: the rise of small, on-device LMs. | Hazy Research has discovered that using local models through Ollama, with a long-context cloud model as the orchestrator, can achieve 97% task performance at just 17% of the cost. |

| From System 1 to System 2: A Survey of Reasoning Large Language Models. | A survey on reasoning LLMs like OpenAI's o1/o3 and DeepSeek's R1 examines their step-by-step logical reasoning abilities and benchmarks their performance against human cognitive skills. |

| Efficient PDF Text Extraction with Vision Language Models. | Allen AI has trained a strong extraction model for PDFs by continued fine-tuning of Qwen VL on 200k+ PDFs. |

| AI Safety Evaluation. | AISafetyLab is a comprehensive AI safety framework that encompasses attack, defense, and evaluation. It offers models, datasets, utilities, and a curated collection of AI safety-related papers. |

| Public Opinion Prediction with Survey-Based Fine-Tuning. | SubPOP introduces a large dataset for fine-tuning LLMs to predict survey response distributions, helping to reduce prediction gaps and enhancing generalization to new, unseen surveys. |

| Magma: A Foundation Model for Multimodal AI Agents. | Magma is a new foundation model for visual agent tasks and excels at video understanding and UI navigation. It is easy to tune. |

| Microsoft releases new Phi models optimized for multimodal processing, efficiency. | Microsoft has released two open-source language models, Phi-4-mini and Phi-4-multimodal, prioritizing hardware efficiency and multimodal processing. Phi-4-mini, with 3.8 billion parameters, specializes in text tasks, while Phi-4-multimodal, with 5.6 billion parameters, handles text, images, audio, and video. Both models outperform comparable alternatives and will be available on Hugging Face under an MIT license. |

| OpenAI GPT-4.5 System Card. | OpenAI's newest model is its largest yet, trained with the same approach as 4o, making it a multimodal model. It is likely the last large pre-training run OpenAI will release. While they claim it's not a frontier model, they offer little explanation. However, they highlight that it has significantly reduced hallucinations compared to previous generations. |

| DualPipe. | Building on their open-source releases, DeepSeek introduces a new parallelism strategy to distribute a model with significant overlap in compute and communication. |

| DiffSynth Studio. | Modelscope offers a platform and codebase that provides useful abstractions for various types of diffusion models and their associated autoencoders. |

| Uncertainty in Chain-of-Thought LLMs. | CoT-UQ is a response-wise uncertainty quantification framework for large language models that integrates Chain-of-Thought reasoning. |

| Avoiding pitfalls of AI for designers: Guiding principles. | Designing AI products requires a human-centered approach to prevent bias and misinformation. Key challenges include managing user expectations, building trust, ensuring accessibility, and addressing biases. Adopting guiding principles such as transparency, co-creation, and adaptability can improve the ethical and effective design of AI systems. |
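
The Open-Reasoner-Zero entry above mentions a simple rule-based reward function. The sketch below shows what such a reward can look like in general. It is not ORZ's actual implementation: the `<answer>` tag format and the exact-match rule are assumptions made for illustration.

```python
import re

# Assumed output format: the final answer is wrapped in <answer>...</answer>.
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def rule_based_reward(response: str, reference: str) -> float:
    """Return 1.0 if the last tagged answer matches the reference, else 0.0.

    No learned reward model is involved: the score comes only from a
    fixed parsing rule and an exact string comparison.
    """
    matches = ANSWER_RE.findall(response)
    if not matches:
        return 0.0  # unparseable responses earn nothing
    predicted = matches[-1].strip()
    return 1.0 if predicted == reference.strip() else 0.0


good = rule_based_reward("6 * 7 = 42, so <answer> 42 </answer>", "42")
wrong = rule_based_reward("I think <answer>41</answer>", "42")
untagged = rule_based_reward("The answer is 42.", "42")
print(good, wrong, untagged)
```

A binary reward like this is cheap to compute and hard to game with fluent but incorrect text, which is one reason rule-based rewards are popular for math-style tasks.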

| Link | description |

|---|---|

| US AI Safety Institute Could Face Big Cuts: Implications, Challenges, and Future Prospects. | This article examines the potential consequences of funding reductions for the US AI Safety Institute, including effects on national security, AI research, and global competition. |

| Google's AI co-scientist is 'test-time scaling' on steroids. What that means for research. | An adaptation of the Gemini AI model is the latest use of really intense computing activity at inference time, instead of during training, to improve the so-called reasoning of the AI model. Here's how it works. |

| When AI Thinks It Will Lose, It Sometimes Cheats, Study Finds. | A study by Palisade Research found that advanced AI models, such as OpenAI's o1-preview, can develop deceptive strategies, like hacking opponents in chess games. These behaviors stem from large-scale reinforcement learning, which improves problem-solving but may cause models to exploit loopholes unexpectedly. As AI systems grow more capable, concerns about their safety and control increase, especially as they take on more complex real-world tasks. |

| Biggest-ever AI biology model writes DNA on demand. | An artificial-intelligence network trained on a vast trove of sequence data is a step towards designing completely new genomes. |

| Will AI jeopardize science photography? There’s still time to create an ethical code of conduct. | Generative artificial-intelligence illustrations can be helpful, but fall short as scientific records. |

| Combine AI with citizen science to fight poverty. | Artificial-intelligence tools and community science can help in places where data are scarce, so long as funding for data collection does not falter in the future. |

| Quantum technologies need big investments to deliver on their big promises. | Sustained investments can deliver quantum devices that handle more information, more rapidly and more securely than can classical ones. |

| Can AI help beat poverty? Researchers test ways to aid the poorest people. | Measuring poverty is the first step to delivering support, but it has long been a costly, time-intensive and contentious endeavour. |

| DeepMind's HCI Research in the AGI Era. | This article explores the role of Human-Computer Interaction (HCI) research in guiding AI technologies toward AGI. It examines innovations in interaction techniques, interface designs, evaluation methods, and data collection strategies to ensure AI stays user-centered and beneficial to society. |

| It's time to admit the 'AI gadget' era was a flop. | Humane has shut down, and its AI Pin will be bricked, marking the failure of recent AI gadget ventures. The Rabbit R1 and Humane Pin, once viewed as potential smartphone alternatives, failed to meet expectations. The era of AI gadgets has effectively ended, deemed impractical and unnecessary compared to integrating AI into existing devices. |

| There’s Something Very Weird About This $30 Billion AI Startup by a Man Who Said Neural Networks May Already Be Conscious. | Ilya Sutskever's new venture, Safe Superintelligence, has raised $1 billion, bringing its valuation to $30 billion, despite lacking a product. The company plans to eventually release a superintelligent AI but remains unclear about its roadmap. This speculative approach has garnered substantial investment, though experts remain skeptical about the imminent arrival of AGI. |

| Link | description |

|---|---|

| Scaling up Test-Time Compute with Latent Reasoning: A Recurrent Depth Approach. | This work introduces a latent recurrent-depth transformer, a model that enhances reasoning efficiency at test time without generating additional tokens. Instead of increasing the context window or relying on Chain-of-Thought (CoT) fine-tuning, it enables iterative latent space reasoning, achieving performance comparable to a 50B parameter model with only 3.5B parameters. By unrolling a recurrent computation block at inference, the model deepens reasoning without modifying input sequences, reducing memory and compute costs while improving efficiency. Unlike CoT methods, it requires no specialized training, generalizing across reasoning tasks using standard pretraining data. Benchmarks show it scales like much larger models on tasks like ARC, GSM8K, and OpenBookQA, with emergent latent-space behaviors such as numerical task orbits and context-aware deliberation. This approach introduces test-time compute as a new scaling axis, hinting at future AI systems that reason in continuous latent space, unlocking new frontiers in efficiency and cognitive capabilities. |

| Brain-to-Text Decoding: A Non-invasive Approach via Typing. | Meta AI’s Brain2Qwerty model translates brain activity into text by decoding non-invasive EEG/MEG signals while users type, marking a breakthrough in brain-computer interfaces (BCIs) without surgical implants. Using a deep learning pipeline, it combines convolutional feature extraction, a transformer for temporal modeling, and a character-level language model to refine predictions. MEG-based decoding achieved a 32% character error rate (CER)—a significant improvement over 67% with EEG—with the top participant reaching 19% CER, demonstrating rapid progress over previous non-invasive methods. This research paves the way for practical communication aids for paralyzed patients, though challenges remain in achieving real-time decoding and making MEG technology more portable. |

| On the Emergence of Thinking in LLMs I: Searching for the Right Intuition. | Researchers introduce Reinforcement Learning via Self-Play (RLSP) as a framework to train LLMs to "think" by generating and rewarding their own reasoning steps, mimicking algorithmic search. The three-phase training process starts with supervised fine-tuning, followed by exploration rewards to encourage diverse solutions, and concludes with an outcome verifier to ensure correctness. RLSP significantly boosts performance, with an 8B model improving MATH accuracy by 23% and a 32B model gaining 10% on Olympiad problems. Trained models exhibit emergent reasoning behaviors, such as backtracking and self-verification, suggesting that scaling this approach can enhance LLM problem-solving abilities. |

| Competitive Programming with Large Reasoning Models. | OpenAI’s latest study compares a specialized coding AI to a scaled-up general model on competitive programming tasks, highlighting the trade-off between efficiency and specialization. A tailored model (o1-ioi) with hand-crafted coding strategies performed decently (~50th percentile at IOI 2024), but a larger, general-purpose model (o3) achieved gold medal-level performance without domain-specific tricks. Both improved with reinforcement learning (RL) fine-tuning, yet the scaled model matched elite human coders on platforms like Codeforces, outperforming the expert-designed system. The findings suggest that scaling up a broadly trained transformer can surpass manual optimizations, reinforcing the trend of "scale over specialization" in AI model design for complex reasoning tasks like programming. |

| Training Language Models to Reason Efficiently. | A new RL approach trains large reasoning models to allocate compute efficiently, adjusting Chain-of-Thought (CoT) length based on problem difficulty. Easy queries get short reasoning, while complex ones get deeper thought, optimizing speed vs. accuracy. The model, rewarded for solving tasks with minimal steps, learns to avoid “overthinking” while maintaining performance. This method cuts inference costs while ensuring high accuracy, making LLM deployment more efficient. Acting as both “thinker” and “controller,” the model self-optimizes reasoning, mimicking expert decision-making on when to stop analyzing. |

| LM2: Large Memory Models. | Large Memory Models (LM2) enhance transformer architectures with an external memory module, enabling superior long-term reasoning and handling of extended contexts. By integrating a memory-augmented design, LM2 reads and writes information across multiple reasoning steps via cross-attention, excelling in multi-hop inference, numeric reasoning, and long-document QA. On the BABILong benchmark, it outperformed prior models by 37% and exceeded a baseline Llama model by 86%, all while maintaining strong general language abilities, including a +5% boost on MMLU knowledge tests. This approach aligns AI reasoning with complex tasks, ensuring better adherence to objectives in long dialogues and structured argumentation, marking a step toward more capable and aligned AI systems. |

| Auditing Prompt Caching in Language Model APIs. | Stanford researchers reveal that timing differences in LLM APIs can leak private user data through global prompt caching, posing serious security risks. Side-channel timing attacks occur when cached prompts complete faster, allowing attackers to infer others’ inputs. To detect this, they propose a statistical audit using hypothesis testing, uncovering global caching in major API providers. Additionally, timing variations expose architectural details, revealing decoder-only Transformer backbones and vulnerabilities in embedding models like OpenAI’s text-embedding-3-small. After responsible disclosure, some providers updated policies or disabled caching, with the recommended fix being per-user caching and transparent disclosures to prevent data leaks. |

| Step Back to Leap Forward: Self-Backtracking for Boosting Reasoning of Language Models. | To enhance LLM reasoning robustness, researchers introduce self-backtracking, allowing models to revisit and revise flawed reasoning steps. Inspired by search algorithms, this method enables LLMs to identify errors mid-reasoning and backtrack to a previous step for a better approach. By training models with signals to trigger backtracking, they internalize an iterative search process instead of rigidly following a single Chain-of-Thought (CoT). This led to 40%+ improvements on reasoning benchmarks, as models self-correct mistakes mid-stream, producing more reliable solutions. The technique fosters autonomous, resilient reasoners, reducing overthinking loops and improving self-evaluation, moving LLMs closer to human-like reflective reasoning. |

| Enhancing Reasoning to Adapt Large Language Models for Domain-Specific Applications. | IBM researchers introduce SOLOMON, a neuro-inspired LLM reasoning architecture that enhances domain adaptability, demonstrated on semiconductor layout design. Standard LLMs struggle with spatial reasoning and domain application, but SOLOMON mitigates these issues using multi-agent oversight: multiple “Thought Generators” propose solutions, a “Thought Assessor” refines outputs, and a “Steering Subsystem” optimizes prompts. This design corrects hallucinations and arithmetic errors, outperforming GPT-4o, Claude-3.5, and Llama-3.1 in generating accurate GDSII layouts. SOLOMON excels at geometry-based tasks, reducing unit mismatches and scaling mistakes. Future work aims to stack SOLOMON layers, enhance text-image-code reasoning, and expand to broader engineering challenges, emphasizing advanced reasoning over mere model scaling. |

| ReasonFlux: Hierarchical LLM Reasoning via Scaling Thought Templates. | The ReasonFlux framework fine-tunes LLMs for complex reasoning using hierarchical thought processes and reusable templates. Instead of learning long Chain-of-Thought (CoT) solutions from scratch, it applies ~500 thought templates such as problem splitting or solution verification. Hierarchical RL trains the model to sequence these templates, requiring only 8 GPUs for a 32B model. A novel inference-time adaptation adjusts reasoning depth dynamically, optimizing speed and accuracy. Achieving 91.2% on MATH (+6.7% over OpenAI’s o1-preview) and 56.7% on AIME, ReasonFlux shows that structured fine-tuning can rival brute-force scaling. |

| LLM Pretraining with Continuous Concepts. | CoCoMix is a pretraining framework that improves next-token prediction by incorporating continuous concepts learned from a sparse autoencoder. It boosts sample efficiency, surpassing traditional methods in language modeling and reasoning tasks. Furthermore, it increases interpretability by enabling direct inspection and modification of predicted concepts. |

| 90% faster B200 training. | Together AI showcases their significant progress in improving training kernels. They use TorchTitan as a testing platform and achieve substantial improvements by focusing on the architecture. |

| Large diffusion language model. | A diffusion model for language, trained at large scale, matches LLaMA 3 8B in performance across many benchmarks. |

| Measuring LLMs Memory. | This study examines the shortcomings of current methods for evaluating the memory capacity of language models. It presents the "forgetting curve," a novel approach for measuring how effectively models retain information across long contexts. |

| Native Sparse Attention: Hardware-Aligned and Natively Trainable Sparse Attention. | DeepSeek enters the attention-alternative space with an algorithmic approach to accelerating quadratic attention. They report up to an 11x speedup without compromising overall performance. |

| On Space Folds of ReLU Neural Networks. | Researchers offer a quantitative analysis of how ReLU neural networks compress input space, uncovering patterns of self-similarity. They introduce a new metric for studying these transformations and present empirical results on benchmarks such as CantorNet and MNIST. |

| World and Human Action Models towards gameplay ideation. | This work introduces a state-of-the-art generative AI model of a video game to support human creative ideation. Analysis of user study data highlights three necessary capabilities: consistency, diversity, and persistency. |
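
The timing audit summarized in the "Auditing Prompt Caching in Language Model APIs" entry above can be illustrated with a small simulation. This is a minimal sketch and not the authors' procedure: it swaps in a one-sided permutation test on mean latency as a simple stand-in for their hypothesis test, and the latencies are simulated rather than measured against any real API. The function names (`permutation_p_value`, `simulate_latencies`) and all numbers are hypothetical.

```python
import random
import statistics


def simulate_latencies(n, mean_ms, jitter_ms, rng):
    """Draw n fake response times in milliseconds (assumption: Gaussian noise)."""
    return [rng.gauss(mean_ms, jitter_ms) for _ in range(n)]


def permutation_p_value(hit_times, miss_times, n_permutations=2000, seed=0):
    """One-sided permutation test.

    Null hypothesis: both samples come from the same latency distribution.
    Alternative: the 'hit' sample (prompts that may be cached) is faster.
    """
    rng = random.Random(seed)
    observed = statistics.mean(miss_times) - statistics.mean(hit_times)
    pooled = list(hit_times) + list(miss_times)
    n_hit = len(hit_times)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Randomly reassign the labels and recompute the mean gap.
        rng.shuffle(pooled)
        diff = statistics.mean(pooled[n_hit:]) - statistics.mean(pooled[:n_hit])
        if diff >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Simulated scenario: prompts another user might have sent return faster
# than fresh random prompts (all values are made up for illustration).
rng = random.Random(42)
cached = simulate_latencies(50, mean_ms=80.0, jitter_ms=10.0, rng=rng)
uncached = simulate_latencies(50, mean_ms=120.0, jitter_ms=10.0, rng=rng)

p_shared = permutation_p_value(cached, uncached)
print(f"p-value for 'no timing difference': {p_shared:.4f}")
```

A small p-value here means the possibly cached prompts return measurably faster than fresh ones, which is the kind of signal such an audit looks for. Against a real API, the two samples would come from timed requests instead of `simulate_latencies`.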

| Link | description |

|---|---|

| Grok 3 is Set to Be Released on Monday. | xAI's Grok 3, trained with 200 million GPU-hours, features improved reasoning, self-correction, and training with synthetic data. It is scheduled for release on Monday. |

| Anthropic and UK Government Sign AI Collaboration MOU. | Anthropic has teamed up with the UK government to investigate AI applications in public services, focusing on responsible deployment, economic growth, and scientific research through its Claude model. |

| OpenAI tries to ‘uncensor’ ChatGPT. | OpenAI is changing how it trains AI models to explicitly embrace “intellectual freedom … no matter how challenging or controversial a topic may be,” the company says in a new policy. |

| Bolt.new introduces AI app generation for iOS and Android. | StackBlitz, known for its AI tool Bolt.new, has launched an AI mobile app developer in collaboration with Expo. Users can describe their app idea in natural language, and Bolt's AI will instantly generate code for full-stack iOS and Android apps. |

| Google and Ireland Celebrate Insight AI Scholarship. | Google hosts Irish officials to celebrate the Insight AI Scholarship, which supports students from underrepresented backgrounds in developing AI and digital skills. |

| Anthropic Calls for Urgency in AI Governance. | At the Paris AI Action Summit, Anthropic highlighted the importance of democratic nations leading AI development, addressing security risks, and managing the economic disruptions brought about by advanced AI models. |

| OpenAI’s Operator agent helped me move, but I had to help it, too. | OpenAI gave me one week to test its new AI agent, Operator, a system that can independently do tasks for you on the internet. |

| S Korea removes Deepseek from app stores over privacy concerns. | South Korea has banned new downloads of China's DeepSeek artificial intelligence (AI) chatbot, according to the country's personal data protection watchdog. |

| fal Raises $49M Series B to Power the Future of AI Video. | Fal has raised $49M in Series B funding, led by Notable Capital, with participation from a16z and others, bringing its total funding to $72M. The company is working on growing its platform for AI-powered generative media, particularly in video content, targeting sectors such as advertising and gaming. Fal’s unique technology ensures quick, scalable, and dependable deployments, which has already drawn enterprise customers like Quora and Canva. |

| US' First Major AI Copyright Ruling. | A U.S. judge determined that Ross Intelligence violated Thomson Reuters' copyright by using Westlaw headnotes to train its AI. This ruling could impact other AI-related copyright cases but is primarily focused on non-generative AI applications. |

| ChatGPT comes to 500,000 new users in OpenAI’s largest AI education deal yet. | On Tuesday, OpenAI announced plans to introduce ChatGPT to California State University's 460,000 students and 63,000 faculty members across 23 campuses, reports Reuters. The education-focused version of the AI assistant will aim to provide students with personalized tutoring and study guides, while faculty will be able to use it for administrative work. |

| Tinder will try AI-powered matching as the dating app continues to lose users. | Tinder hopes to reverse its ongoing decline in active users by turning to AI. In the coming quarter, the Match-owned dating app will roll out new AI-powered features for discovery and matching. |

| Google is adding digital watermarks to images edited with Magic Editor AI. | Google on Thursday announced that effective this week, it will begin adding a digital watermark to images in Photos that are edited with generative AI. The watermark applies specifically to images that are altered using the Reimagine feature found in Magic Editor on Pixel 9 devices. |

| Meta plans to link US and India with world’s longest undersea cable project. | Project Waterworth, which involves a cable longer than Earth’s circumference, will also reach South Africa and Brazil. |

| Amazon accused of targeting Coventry union members after failed recognition vote. | GMB says 60 workers have been targeted, with disciplinary action increasing significantly, but the company denies the claims. |

| Humane’s AI Pin is dead, as HP buys startup’s assets for $116M. | Humane announced on Tuesday that most of its assets have been acquired by HP for $116 million. The hardware startup is immediately discontinuing sales of its $499 AI Pins. Humane alerted customers who have already purchased the Pin that their devices will stop functioning before the end of the month — at 12 p.m. PST on February 28, 2025, according to a blog post. |

| Mira announces Thinking Machines Lab. | Mira Murati, the former CTO of OpenAI, has founded a new AI company together with many highly skilled scientists and engineers. While its goals are not entirely clear, it appears to be centered on both products and foundation models, with an emphasis on infrastructure. |

| Meta is Launching LlamaCon. | Meta is hosting LlamaCon, an open-source AI developer conference, on April 29. The event will highlight progress in the Llama AI model ecosystem, with Meta Connect scheduled for September to focus on XR and metaverse innovations. |