awesome-artificial-intelligence-guidelines

This repository aims to map the ecosystem of artificial intelligence guidelines, principles, codes of ethics, standards, regulation and beyond.

Stars: 1245

The 'Awesome AI Guidelines' repository aims to simplify the ecosystem of guidelines, principles, codes of ethics, standards, and regulations around artificial intelligence. It provides a comprehensive collection of resources addressing ethical and societal challenges in AI systems, including high-level frameworks, principles, processes, checklists, interactive tools, industry standards initiatives, online courses, research, and industry newsletters, as well as regulations and policies from various countries. The repository serves as a valuable reference for individuals and teams designing, building, and operating AI systems to navigate the complex landscape of AI ethics and governance.

README:

(AKA Writing AI Responsibly)

As AI systems become more prevalent in society, we face bigger and tougher societal challenges. Given many of these challenges have not been faced before, practitioners will face scenarios that will require dealing with hard ethical and societal questions.

A large amount of content has been published which attempts to address these issues through “Principles”, “Ethics Frameworks”, “Checklists” and beyond. However, navigating this large number of resources is not easy.

This repository aims to simplify this by mapping the ecosystem of guidelines, principles, codes of ethics, standards and regulation being put in place around artificial intelligence.

You can join the Machine Learning Engineer newsletter. You will receive updates on open source frameworks, tutorials and articles curated by machine learning professionals.

- AI & Machine Learning 8 principles for Responsible ML - The Institute for Ethical AI & Machine Learning has put together 8 principles for responsible machine learning that are to be adopted by individuals and delivery teams designing, building and operating machine learning systems.

- An Evaluation of Guidelines - The Ethics of Ethics - A research paper that analyses multiple sets of ethics principles.

- Association for Computing Machinery's Code of Ethics and Professional Conduct - This is the code of ethics that was put together in 1992 by the Association for Computing Machinery and updated in 2018. The Code is designed to inspire and guide the ethical conduct of all computing professionals, including current and aspiring practitioners, instructors, students, influencers, and anyone who uses computing technology in an impactful way. Additionally, the Code serves as a basis for remediation when violations occur. The Code includes principles formulated as statements of responsibility, based on the understanding that the public good is always the primary consideration.

- From What to How: An initial review of publicly available AI Ethics Tools, Methods and Research to translate principles into practices - A paper published by the UK Digital Catapult that aims to identify and present the gap between principles and their practical applications.

- European Commission's Guidelines for Trustworthy AI - The Ethics Guidelines for Trustworthy Artificial Intelligence (AI) is a document prepared by the High-Level Expert Group on Artificial Intelligence (AI HLEG). This independent expert group was set up by the European Commission in June 2018, as part of the AI strategy announced earlier that year.

- IEEE's Ethically Aligned Design - A Vision for Prioritizing Human Wellbeing with Artificial Intelligence and Autonomous Systems that encourages technologists to prioritize ethical considerations in the creation of autonomous and intelligent technologies.

- Montréal Declaration for a responsible development of artificial intelligence - Ethical principles and values that promote the fundamental interests of people and groups, created as an initiative by Université de Montréal.

- Oxford's Recommendations for AI Governance - A set of recommendations from Oxford's Future of Humanity Institute, which focus on the infrastructure and attributes required for efficient design, development, and research around the ongoing work building & implementing AI standards.

- PWC's Responsible AI - PWC has put together a survey and a set of principles that abstract some of the key areas they've identified for responsible AI.

- Singapore Data Protection Govt Commission's AI Governance Principles - The Singapore government's Personal Data Protection Commission has put together a set of guiding principles on data protection and human involvement in automated systems, accompanied by a report that breaks down the guiding principles and motivations.

- Toronto Declaration - Protecting the right to equality and non-discrimination in machine learning systems, by Access Now.

- UK Government's Data Ethics Framework Principles - A resource put together by the Department for Digital, Culture, Media and Sport (DCMS) which outlines an overview of data ethics, together with a 7-principle framework.

- Algorithm charter for Aotearoa New Zealand - The Algorithm Charter for Aotearoa New Zealand is an evolving piece of work that needs to respond to emerging technologies and also be fit-for-purpose for government agencies.

- Montreal AI Ethics Institute State of AI Ethics June 2020 Report - A resource put together by the Montreal AI Ethics Institute that captures the most relevant research and reporting in the domain of AI ethics between March 2020 and June 2020.

- Montreal AI Ethics Institute State of AI Ethics October 2020 Report - A resource put together by the Montreal AI Ethics Institute that captures the most relevant research and reporting in the domain of AI ethics between July 2020 and October 2020.

- Technical and Organizational Best Practices - A resource put together by Foundation for Best Practices in Machine Learning (FBPML) with technical guidelines (e.g. fairness and non-discrimination, monitoring and maintenance, data quality, product traceability, explainability) and organizational guidelines (e.g. data governance, product management, human resources management, compliance and auditing). Community contributions are welcome via the FBPML Wiki.

- Understanding artificial intelligence ethics and safety - A guide for the responsible design and implementation of AI systems in the public sector by David Leslie from the Alan Turing Institute.

- Declaration for responsible and intelligent data practice - a shared vision of what best practice in data looks like by Open Data Manchester.

- Recommendation on the Ethics of Artificial Intelligence - UNESCO's Recommendation is a comprehensive international framework that aims to shape the development and use of AI technologies and establishes a set of values in line with the promotion and protection of human rights, human dignity, and environmental sustainability. It was adopted by acclamation by 193 Member States at UNESCO’s General Conference in November 2021. For more information, refer to UNESCO's 2023 publication on key facts here.

- AI RFX Procurement Framework - A procurement framework for evaluating the maturity of machine learning systems put together by cross functional teams of academics, industry practitioners and technical individuals at The Institute for Ethical AI & Machine Learning to empower industry practitioners looking to procure machine learning suppliers.

- Checklist for data science projects – Deon by DrivenData is a command line tool that allows you to easily add an ethics checklist to your data science projects.

- Designing Ethical AI Experiences Checklist and Agreement - document to guide the development of accountable, de-risked, respectful, secure, honest, and usable artificial intelligence (AI) systems with a diverse team aligned on shared ethics. Carnegie Mellon University, Software Engineering Institute.

- Ethical OS Toolkit - A toolkit that dives into 8 risk zones to assess the potential challenges that a technology team may face, together with 14 scenarios to provide examples, and 7 future-proofing strategies to help take ethical action.

- Ethics Canvas - A resource inspired by the traditional business canvas, which provides an interactive way to brainstorm potential risks, opportunities and solutions to ethical challenges that may be faced in a project, using a post-it note-like approach.

- Kat Zhou's Design Ethically Resources - A set of workshops that can be organised across teams to identify challenges, assess current risks and take action on potential issues around ethical challenges that may be faced.

- Markkula Center's Ethical Toolkit for Engineering/Design Practice - A practical and comprehensible toolkit with seven components to help practitioners reflect on and judge the moral grounds on which they are operating.

- San Francisco City's Ethics & Algorithms Toolkit - A risk management framework for government leaders and staff who work with algorithms, providing a two part assessment process including an algorithmic assessment process, and a process to address the risks.

- UK Government's Data Ethics Workbook - A resource put together by the Department for Digital, Culture, Media and Sport (DCMS) which provides a set of questions that can be asked by practitioners in the public sector, which address each of the principles in their Data Ethics Framework Principles.

- World Economic Forum's Guidelines for Procurement - The WEF has put together a set of guidelines for governments to be able to safely and reliably procure machine learning related systems, which has been trialled with the UK government.

- Machine Learning Assurance - A quick look at machine learning assurance: the process of recording, understanding, verifying, and auditing machine learning models and their transactions.

- ODEP's Checklist for Employers: Facilitating the Hiring of People with Disabilities Through the Use of eRecruiting Screening Systems, Including AI - The Employer Assistance and Resource Network on Disability Inclusion (EARN) and the Partnership on Employment & Accessible Technology (PEAT), which are both funded through the U.S. Department of Labor's Office of Disability Employment Policy (ODEP), collaborated on an inclusive AI checklist for employers. The checklist provides direction for leadership, human resources personnel, equal employment opportunity managers, and procurement officers for reviewing AI tools used in recruiting and candidate assessment for fairness and inclusion of individuals with disabilities.

- Microsoft AI Fairness Checklist

- US NIST AI Risk Management Framework - The Framework is intended to help developers, users and evaluators of AI systems better manage AI risks which could affect individuals, organizations, society, or the environment.

- Aequitas' Bias & Fairness Audit Toolkit - The Bias Report is powered by Aequitas, an open-source bias audit toolkit for machine learning developers, analysts, and policymakers to audit machine learning models for discrimination and bias, and make informed and equitable decisions around developing and deploying predictive risk-assessment tools.

- Awesome Machine Learning Production List - A list of tools and frameworks that support the design, development and operation of production machine learning systems, currently maintained by The Institute for Ethical AI & Machine Learning.

- Cape Python - Easily apply privacy-enhancing techniques for data science and machine learning tasks in Pandas and Spark. Can be used in conjunction with Cape Core to collaborate on privacy policies and distribute those policies for data projects across teams and organizations.

- eXplainability Toolbox - The Institute for Ethical AI & Machine Learning proposal for an extended version of the traditional data science process which focuses on algorithmic bias and explainability, to ensure a baseline of risks around undesired biases can be mitigated.

- FAT Forensics is a Python toolkit for evaluating Fairness, Accountability and Transparency of Artificial Intelligence systems. It is built on top of SciPy and NumPy, and distributed under the 3-Clause BSD license (new BSD).

- IBM's AI Explainability 360 Open Source Toolkit - This is IBM's toolkit that includes a large number of examples, research papers and demos implementing several algorithms that provide insights on fairness in machine learning systems.

- Linux Foundation AI Landscape - The official list of tools in the AI landscape curated by the Linux Foundation, which contains well maintained and used tools and frameworks.

- Taking action on digital ethics from Avanade

- Microsoft Fairlearn - An open-source toolkit for assessing and improving fairness in machine learning products developed by Microsoft

- Microsoft Interpret ML - An open-source toolkit for improving explainability/interpretability developed by Microsoft

- Alibi - An open-source Python library for machine learning model inspection and interpretation.

- ACS Code of Professional Conduct - PDF - Australian ICT (Information and Communication Technology) sector professional organization.

- Association for Computing Machinery's Code of Ethics and Professional Conduct - This is the code of ethics that was put together in 1992 by the Association for Computing Machinery and updated in 2018. The Code is designed to inspire and guide the ethical conduct of all computing professionals, including current and aspiring practitioners, instructors, students, influencers, and anyone who uses computing technology in an impactful way. Additionally, the Code serves as a basis for remediation when violations occur. The Code includes principles formulated as statements of responsibility, based on the understanding that the public good is always the primary consideration.

- IEEE Global Initiative for Ethical Considerations in Artificial Intelligence (AI) and Autonomous Systems (AS) - IEEE Approved Standards Projects specifically focused on the Ethically Aligned Design principles, and includes 14 (P700X) standards which cover topics from data collection to privacy, to algorithmic bias and beyond.

- ISO/IEC's Standards for Artificial Intelligence - The ISO's initiative for Artificial Intelligence standards, which include a large set of subsequent standards ranging across Big Data, AI Terminology, Machine Learning frameworks, etc.

- Udacity's Secure & Private AI Course - Free course by Udacity which introduces three cutting-edge technologies for privacy-preserving AI: Federated Learning, Differential Privacy, and Encrypted Computation.

- Data science ethics - Free course by Prof. Jagadish from the University of Michigan (via Coursera) that covers data ownership, privacy and anonymity, data validity, and algorithmic fairness.

- Practical Data Ethics - Free course by Rachel Thomas from the University of San Francisco Data Institute (via fast.ai) that covers disinformation, bias and fairness, foundations of ethics, privacy and surveillance, algorithmic colonialism

- Bias and Discrimination in AI - Free course by the University of Montreal and IVADO (via edX) about the discriminatory effects of algorithmic decision-making and responsible machine learning (institutional and technical strategies to identify and address bias).

- Intro to AI ethics - Free course by Kaggle introducing the base concepts of ethics in AI and how to mitigate related problems.

- Introduction to AI Safety, Ethics, and Society - Developed by Dan Hendrycks, director of the Center for AI Safety, this free online textbook aims to provide an accessible introduction to students, practitioners and others looking to better understand issues pertaining to AI Safety and Ethics. Apart from online reading, this book is available as a PDF here and also as a free virtual course here.

- Import AI - A newsletter by OpenAI's Jack Clark which curates the most recent and relevant AI research, as well as relevant societal issues that intersect with technical AI research.

- Matt's thoughts in between - Newsletter curated by Entrepreneur First CEO Matt Clifford that provides a curated critical analysis on topics surrounding geopolitics, deep tech startups, economics and beyond.

- The Machine Learning Engineer - A newsletter curated by The Institute for Ethical AI & Machine Learning that contains curated articles, tutorials and blog posts from experienced Machine Learning professionals and includes insights on best practices, tools and techniques in machine learning explainability, reproducibility, model evaluation, feature analysis and beyond.

- Montreal AI Ethics Institute Weekly AI Ethics newsletter - A weekly newsletter curated by Abhishek Gupta and his team at the Montreal AI Ethics Institute that presents accessible summaries of technical and academic research papers along with commentary on the latest in the domain of AI ethics.

- AI Safety Newsletter - A weekly newsletter from the Center for AI Safety providing updates on AI research, policy, and other areas for a non-technical audience.

- ML Safety Newsletter - A newsletter from the Center for AI Safety providing occasional deep-dives on key results in technical AI research.

- Artificial Intelligence Mission Austria 2030 - Shaping the Future of Artificial Intelligence in Austria. The Austrian ministry for Innovation and Technology published their vision for AI until 2030.

- Beijing AI Principles - initiative for the research, development, use, governance and long-term planning of AI, calling for its healthy development to support the construction of a human community with a shared future, and the realization of beneficial AI for humankind and nature.

- China Internet Security Law - China's law, enacted to increase cybersecurity and national security, safeguard cyberspace sovereignty and public interest, protect the legitimate rights and interests of citizens, legal persons and other organisations, and promote healthy economic and social development (the Chinese ministry for industry and information argued that this law justified the means of pursuing the "Going Out" strategy China has persisted with since 1999). KPMG's summary of the Cybersecurity Law. Center for Strategic & International Studies overview of China's new Data Privacy law.

- China's Personal Information Security Specification (Translation) - The Chinese Government's first major digital privacy rules, which took effect in May 2018 and lay out granular guidelines for consent and how personal data should be collected, used and shared. Center for Strategic & International Studies overview of the specification.

- China's Administrative Provisions on Information Services on Microblogs - China's provisions which require microblogging sites (social media sites) to obtain relevant credentials by law, verify users' real identities, establish mechanisms for dispelling and refuting rumors, etc. Summary of rules by the US Law Library of Congress.

- Decision on strengthening the protection of online information - The Standing Committee of the National People's Congress (NPC) of the People's Republic of China adopted the decision on strengthening the protection of online information - this is an act that contains 12 clauses applicable to entities both in the public and private sectors in respect to the collection and processing of electronic personal information on the internet.

- Personal Data Protection Act - The personal data protection act of the Republic of China, which is enacted to regulate the collection, processing and use of personal data so as to prevent harm to personality rights, and to facilitate the proper use of personal data.

- Smart Dubai Artificial Intelligence Principles and Ethics - Ethical AI Toolkit - created to provide practical help across a city ecosystem. It supports industry, academia and individuals in understanding how AI systems can be used responsibly. It consists of principles and guidelines, and a self-assessment tool for developers to assess their platforms.

- Ethics Guidelines for Trustworthy AI - European Commission document prepared by the High-Level Expert Group on Artificial Intelligence (AI HLEG).

- General Data Protection Regulation GDPR - Legal text for the EU GDPR regulation 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC

- GDPR.EU Guide - A project co-funded by the Horizon 2020 Framework programme of the EU which provides a resource for organisations and individuals researching GDPR, including a library of straightforward and up-to-date information to help organisations achieve GDPR compliance (Legal Text).

- Data Protection Act 2012 - The Personal Data Protection Act 2012 (the "Act") sets out the law on data protection in Singapore. Apart from establishing a general data protection regime, the Act also regulates telemarketing practices.

- Protection from Online Falsehoods and Manipulation Act 2019 - An act to prevent the electronic communication in Singapore of false statements of fact, to suppress support for and counteract the effects of such communication, to safeguard against the use of online accounts for such communication and for information manipulation, to enable measures to be taken to enhance transparency of online political advertisements and for related matters.

- The White House Executive Order in AI - The USA Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence

- California Consumer Privacy Act (CCPA) - Legal text for California's consumer privacy act

- EU-U.S. and Swiss-U.S. Privacy Shield Frameworks - The EU-U.S. and Swiss-U.S. Privacy Shield Frameworks were designed by the U.S. Department of Commerce and the European Commission and Swiss Administration to provide companies on both sides of the Atlantic with a mechanism to comply with data protection requirements when transferring personal data from the European Union and Switzerland to the United States in support of transatlantic commerce.

- Fair Credit Reporting Act 2018 - The Fair Credit Reporting Act is a federal law that regulates the collection of consumers' credit information and access to their credit reports.

- Gramm-Leach-Bliley Act (for financial institutions) - The Gramm-Leach-Bliley Act requires financial institutions (companies that offer consumers financial products or services like loans, financial or investment advice, or insurance) to explain their information-sharing practices to their customers and to safeguard sensitive data.

- Health Insurance Portability and Accountability Act of 1996 - HIPAA required the Secretary of the US Department of Health and Human Services (HHS) to develop regulations protecting the privacy and security of certain health information. HHS then published what are known as the HIPAA Privacy Rule and the HIPAA Security Rule.

- Executive Order on Maintaining American Leadership in AI - Official mandate by the President of the US to

- Privacy Act of 1974 - The Privacy Act of 1974 establishes a code of fair information practices that governs the collection, maintenance, use and dissemination of information about individuals that is maintained in systems of records by federal agencies.

- Privacy Protection Act of 1980 - The Privacy Protection Act of 1980 protects journalists from being required to turn over to law enforcement any work product and documentary materials, including sources, before it is disseminated to the public.

- DoD's Ethical Principles for AI - The U.S.A. Department of Defense responsible AI guidelines for tech contractors. The guidelines provide a step-by-step process to follow during the planning, development, and deployment phases of the technical lifecycle.

- UK Data Protection Act of 2018 - The DPA 2018 enacts the GDPR into UK law; however, in doing so it has included various "derogations" as permitted by the GDPR, resulting in some key differences (which, although small, are not insignificant and may have a greater impact after Brexit).

- The Information Commissioner's Office guide to Data Protection - This guide is for data protection officers and others who have day-to-day responsibility for data protection. It is aimed at small and medium-sized organisations, but it may be useful for larger organisations too.

Similar Open Source Tools

kaapana

Kaapana is an open-source toolkit for state-of-the-art platform provisioning in the field of medical data analysis. The applications comprise AI-based workflows and federated learning scenarios with a focus on radiological and radiotherapeutic imaging. Obtaining large amounts of medical data necessary for developing and training modern machine learning methods is an extremely challenging effort that often fails in a multi-center setting, e.g. due to technical, organizational and legal hurdles. A federated approach where the data remains under the authority of the individual institutions and is only processed on-site is, in contrast, a promising approach ideally suited to overcome these difficulties. Following this federated concept, the goal of Kaapana is to provide a framework and a set of tools for sharing data processing algorithms, for standardized workflow design and execution as well as for performing distributed method development. This will facilitate data analysis in a compliant way enabling researchers and clinicians to perform large-scale multi-center studies. By adhering to established standards and by adopting widely used open technologies for private cloud development and containerized data processing, Kaapana integrates seamlessly with the existing clinical IT infrastructure, such as the Picture Archiving and Communication System (PACS), and ensures modularity and easy extensibility.

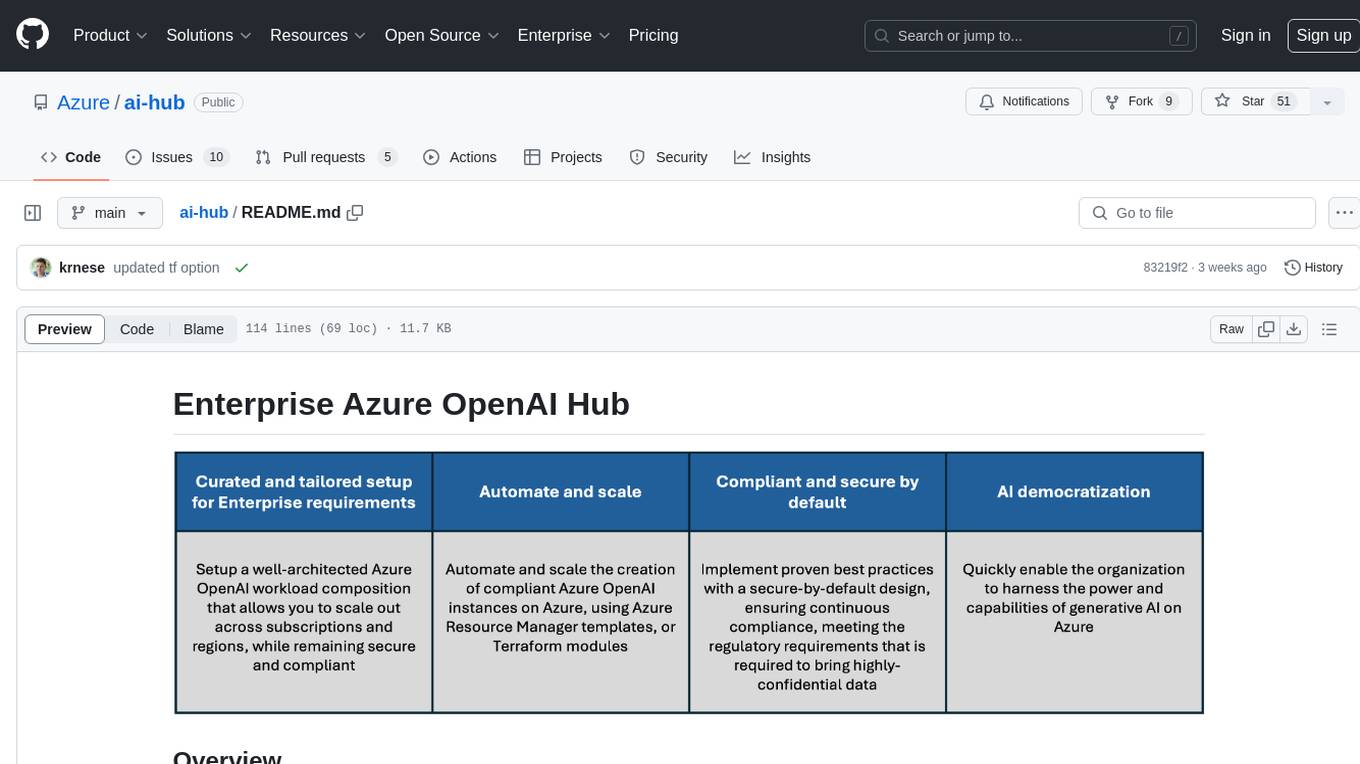

ai-hub

The Enterprise Azure OpenAI Hub is a comprehensive repository designed to guide users through the world of Generative AI on the Azure platform. It offers a structured learning experience to accelerate the transition from concept to production in an Enterprise context. The hub empowers users to explore various use cases with Azure services, ensuring security and compliance. It provides real-world examples and playbooks for practical insights into solving complex problems and developing cutting-edge AI solutions. The repository also serves as a library of proven patterns, aligning with industry standards and promoting best practices for secure and compliant AI development.

cogai

The W3C Cognitive AI Community Group focuses on advancing Cognitive AI through collaboration on defining use cases, open source implementations, and application areas. The group aims to demonstrate the potential of Cognitive AI in various domains such as customer services, healthcare, cybersecurity, online learning, autonomous vehicles, manufacturing, and web search. They work on formal specifications for chunk data and rules, plausible knowledge notation, and neural networks for human-like AI. The group positions Cognitive AI as a combination of symbolic and statistical approaches inspired by human thought processes. They address research challenges including mimicry, emotional intelligence, natural language processing, and common sense reasoning. The long-term goal is to develop cognitive agents that are knowledgeable, creative, collaborative, empathic, and multilingual, capable of continual learning and self-awareness.

RecAI

RecAI is a project that explores the integration of Large Language Models (LLMs) into recommender systems, addressing the challenges of interactivity, explainability, and controllability. It aims to bridge the gap between general-purpose LLMs and domain-specific recommender systems, providing a holistic perspective on the practical requirements of LLM4Rec. The project investigates various techniques, including Recommender AI agents, selective knowledge injection, fine-tuning language models, evaluation, and LLMs as model explainers, to create more sophisticated, interactive, and user-centric recommender systems.

Build-Modern-AI-Apps

This repository serves as a hub for Microsoft Official Build & Modernize AI Applications reference solutions and content. It provides access to projects demonstrating how to build Generative AI applications using Azure services like Azure OpenAI, Azure Container Apps, Azure Kubernetes, and Azure Cosmos DB. The solutions include Vector Search & AI Assistant, Real-Time Payment and Transaction Processing, and Medical Claims Processing. Additionally, there are workshops like the Intelligent App Workshop for Microsoft Copilot Stack, focusing on infusing intelligence into traditional software systems using foundation models and design thinking.

awesome-RLAIF

Reinforcement Learning from AI Feedback (RLAIF) is a concept that describes a type of machine learning approach where **an AI agent learns by receiving feedback or guidance from another AI system**. This concept is closely related to the field of Reinforcement Learning (RL), which is a type of machine learning where an agent learns to make a sequence of decisions in an environment to maximize a cumulative reward.

In traditional RL, an agent interacts with an environment and receives feedback in the form of rewards or penalties based on the actions it takes. It learns to improve its decision-making over time to achieve its goals. In the context of Reinforcement Learning from AI Feedback, the AI agent still aims to learn optimal behavior through interactions, but **the feedback comes from another AI system rather than from the environment or human evaluators**. This can be **particularly useful in situations where it may be challenging to define clear reward functions or when it is more efficient to use another AI system to provide guidance**.

The feedback from the AI system can take various forms, such as:

- **Demonstrations**: The AI system provides demonstrations of desired behavior, and the learning agent tries to imitate these demonstrations.
- **Comparison Data**: The AI system ranks or compares different actions taken by the learning agent, helping it to understand which actions are better or worse.
- **Reward Shaping**: The AI system provides additional reward signals to guide the learning agent's behavior, supplementing the rewards from the environment.

This approach is often used in scenarios where the RL agent needs to learn from **limited human or expert feedback or when the reward signal from the environment is sparse or unclear**. It can also be used to **accelerate the learning process and make RL more sample-efficient**.

Reinforcement Learning from AI Feedback is an area of ongoing research and has applications in various domains, including robotics, autonomous vehicles, and game playing, among others.
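The "comparison data" form of AI feedback described above can be illustrated with a toy sketch. Everything here is an assumption made for illustration and is not taken from the awesome-RLAIF repository: `ai_judge` is a hypothetical stand-in for an AI feedback model (a simple heuristic, not a real model), and the Bradley-Terry style logistic fit is just one common way to turn pairwise preferences into scalar rewards.

```python
import math


def ai_judge(response: str) -> float:
    """Hypothetical AI feedback model: a heuristic that favours longer, polite answers."""
    score = min(len(response.split()), 20) / 20.0
    if "please" in response.lower() or "thanks" in response.lower():
        score += 0.5
    return score


def label_pairs(responses):
    """Build comparison data as (winner_index, loser_index) pairs ranked by the judge."""
    pairs = []
    for i in range(len(responses)):
        for j in range(i + 1, len(responses)):
            s_i, s_j = ai_judge(responses[i]), ai_judge(responses[j])
            if s_i == s_j:
                continue  # the judge expresses no preference for ties
            pairs.append((i, j) if s_i > s_j else (j, i))
    return pairs


def fit_rewards(n_items, pairs, lr=0.1, epochs=200):
    """Fit one scalar reward per response by gradient ascent on a Bradley-Terry likelihood."""
    rewards = [0.0] * n_items
    for _ in range(epochs):
        for winner, loser in pairs:
            # Probability the current rewards assign to the judge's preferred outcome.
            p = 1.0 / (1.0 + math.exp(-(rewards[winner] - rewards[loser])))
            step = lr * (1.0 - p)
            rewards[winner] += step
            rewards[loser] -= step
    return rewards


responses = [
    "No.",
    "Here is the answer you asked for.",
    "Thanks for asking, here is a detailed answer with the steps explained.",
]
pairs = label_pairs(responses)
rewards = fit_rewards(len(responses), pairs)
for text, reward in sorted(zip(responses, rewards), key=lambda item: -item[1]):
    print(f"{reward:+.2f}  {text}")
```

In a real RLAIF setup, the learned rewards would then drive a policy update; this sketch stops at the reward-fitting step and uses no human labels.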

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

dioptra

Dioptra is a software test platform for assessing the trustworthy characteristics of artificial intelligence (AI). It supports the NIST AI Risk Management Framework by providing functionality to assess, analyze, and track identified AI risks. Dioptra provides a REST API and can be controlled via a web interface or Python client for designing, managing, executing, and tracking experiments. It aims to be reproducible, traceable, extensible, interoperable, modular, secure, interactive, shareable, and reusable.

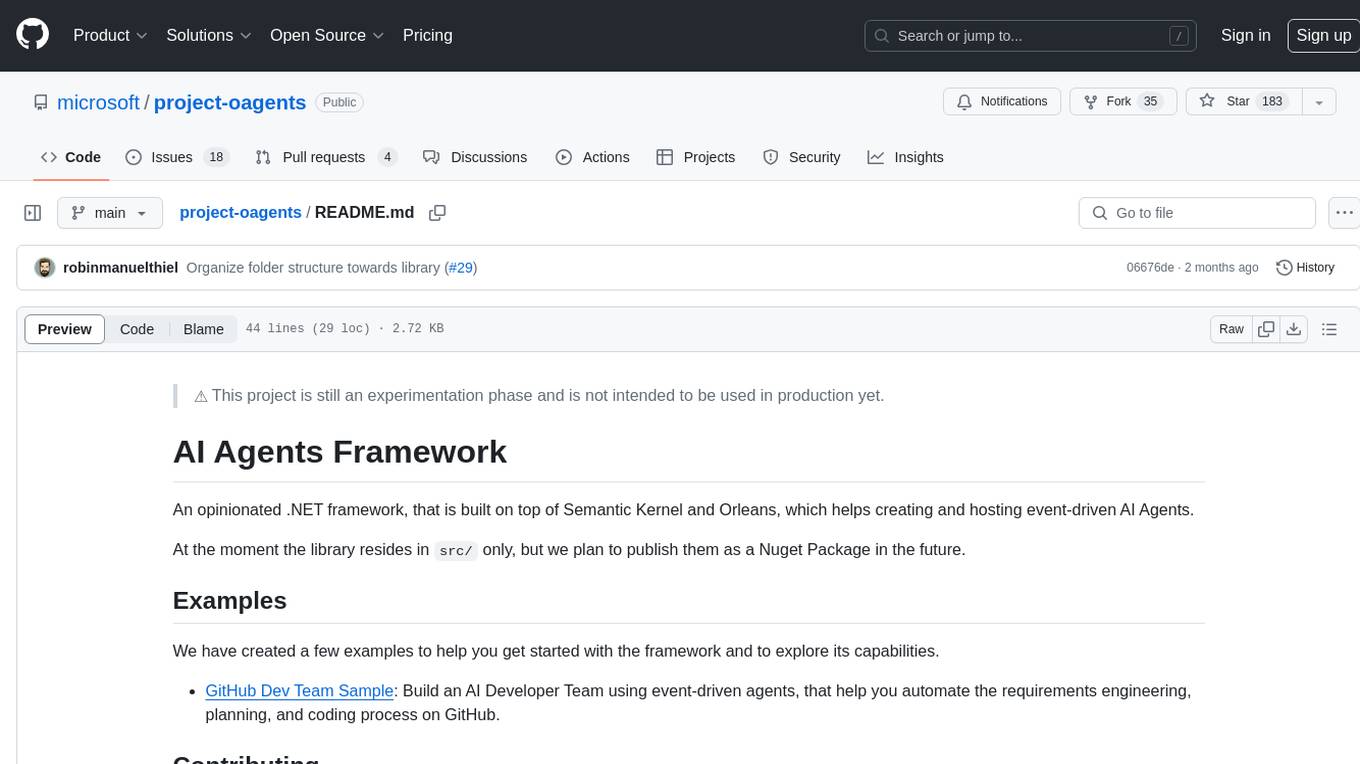

project-oagents

AI Agents Framework is a .NET framework built on Semantic Kernel and Orleans for creating and hosting event-driven AI Agents. It is currently in an experimental phase and not recommended for production use. The framework aims to automate requirements engineering, planning, and coding processes using event-driven agents.

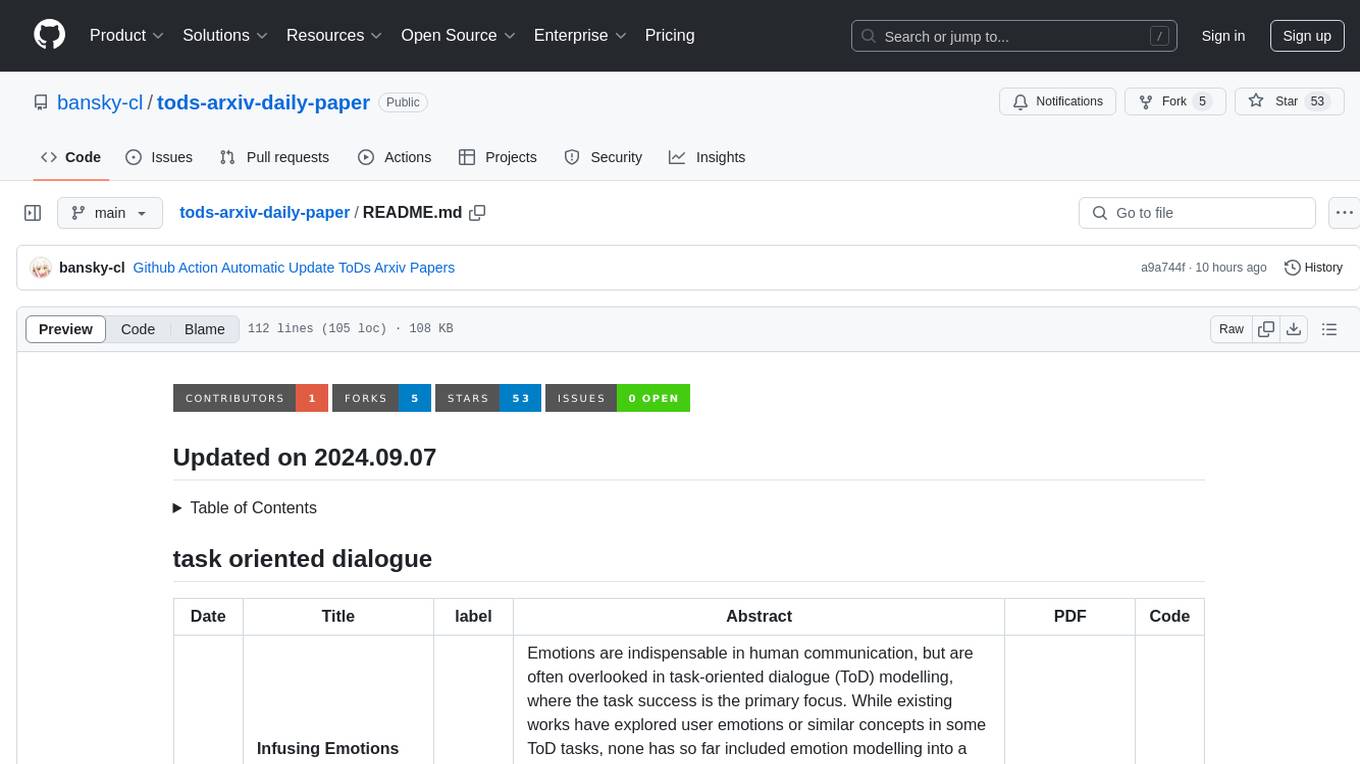

tods-arxiv-daily-paper

This repository provides a tool for fetching and summarizing daily papers from the arXiv repository. It allows users to stay updated with the latest research in various fields by automatically retrieving and summarizing papers on a daily basis. The tool simplifies the process of accessing and digesting academic papers, making it easier for researchers and enthusiasts to keep track of new developments in their areas of interest.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

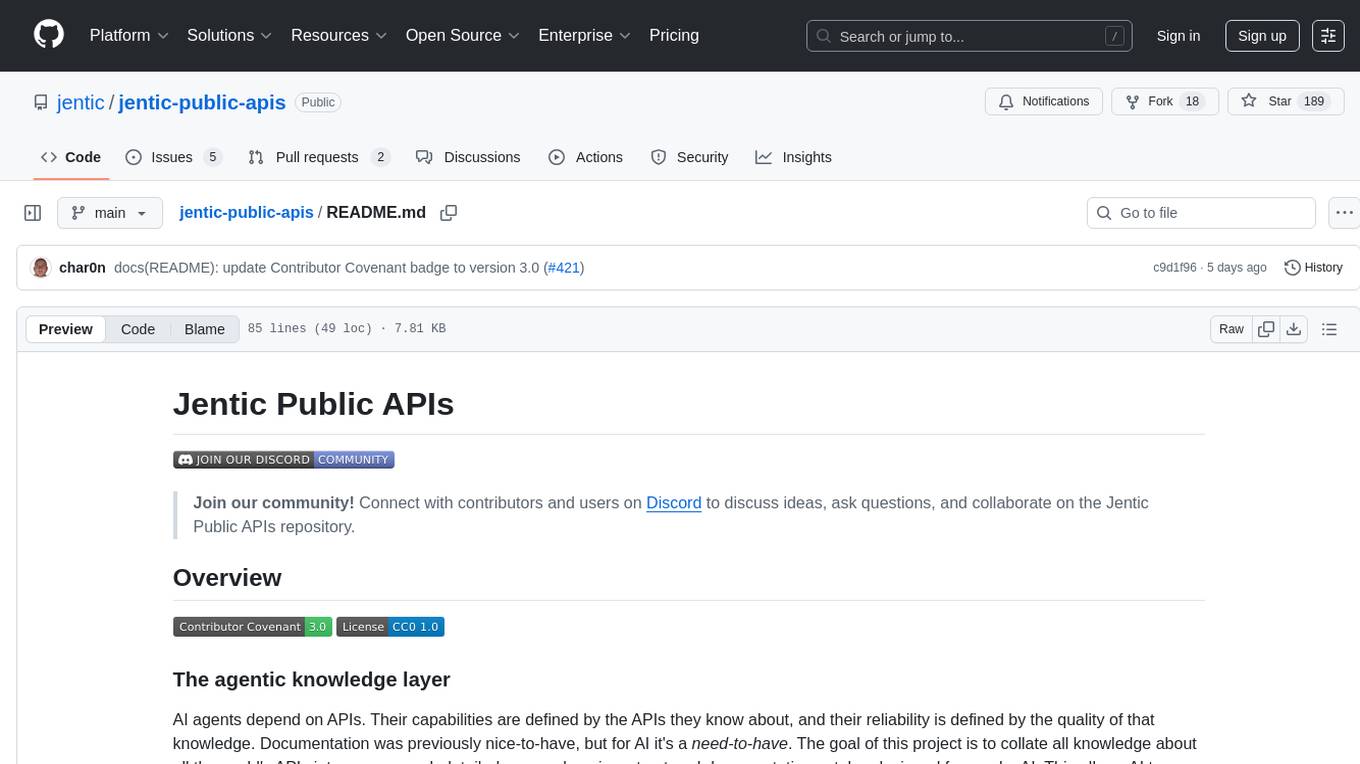

jentic-public-apis

The Jentic Public APIs repository aims to collate all knowledge about the world's APIs into a detailed, comprehensive, structured documentation catalog designed for use by AI. It focuses on standardized OpenAPI specifications, Arazzo workflows, associated tooling, evaluations, and RFCs for extensions to open formats. The project is in ALPHA stage and welcomes contributions to accelerate the effort of building an open knowledge foundation for AI agents.

PromptChains

ChatGPT Queue Prompts is a collection of prompt chains designed to enhance interactions with large language models like ChatGPT. These prompt chains help build context for the AI before performing specific tasks, improving performance. Users can copy and paste prompt chains into the ChatGPT Queue extension to process prompts in sequence. The repository includes example prompt chains for tasks like conducting AI company research, building SEO optimized blog posts, creating courses, revising resumes, enriching leads for CRM, personal finance document creation, workout and nutrition plans, marketing plans, and more.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

For similar tasks

awesome-artificial-intelligence-guidelines

The 'Awesome AI Guidelines' repository aims to simplify the ecosystem of guidelines, principles, codes of ethics, standards, and regulations around artificial intelligence. It provides a comprehensive collection of resources addressing ethical and societal challenges in AI systems, including high-level frameworks, principles, processes, checklists, interactive tools, industry standards initiatives, online courses, research, and industry newsletters, as well as regulations and policies from various countries. The repository serves as a valuable reference for individuals and teams designing, building, and operating AI systems to navigate the complex landscape of AI ethics and governance.

aiverify

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against a set of internationally recognised principles through standardised tests. AI Verify is consistent with international AI governance frameworks such as those from the European Union, the OECD and Singapore. It is a single integrated toolkit that operates within an enterprise environment. It can perform technical tests on common supervised learning classification and regression models for most tabular and image datasets. However, it does not define AI ethical standards and does not guarantee that any AI system tested will be free from risks or biases or is completely safe.

For similar jobs

LLM-and-Law

This repository is dedicated to summarizing papers on large language models in the field of law. It covers applications of large language models in legal tasks, legal agents, legal problems of large language models, data resources for large language models in law, law LLMs, and evaluation of large language models in the legal domain.

start-llms

This repository is a comprehensive guide for individuals looking to start and improve their skills in Large Language Models (LLMs) without an advanced background in the field. It provides free resources, online courses, books, articles, and practical tips to become an expert in machine learning. The guide covers topics such as terminology, transformers, prompting, retrieval augmented generation (RAG), and more. It also includes recommendations for podcasts, YouTube videos, and communities to stay updated with the latest news in AI and LLMs.

aiverify

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against internationally recognised principles through standardised tests. It offers a new API Connector feature to bypass size limitations, test various AI frameworks, and configure connection settings for batch requests. The toolkit operates within an enterprise environment, conducting technical tests on common supervised learning models for tabular and image datasets. It does not define AI ethical standards or guarantee complete safety from risks or biases.

Awesome-LLM-Watermark

This repository contains a collection of research papers related to watermarking techniques for text and images, specifically focusing on large language models (LLMs). The papers cover various aspects of watermarking LLM-generated content, including robustness, statistical understanding, topic-based watermarks, quality-detection trade-offs, dual watermarks, watermark collision, and more. Researchers have explored different methods and frameworks for watermarking LLMs to protect intellectual property, detect machine-generated text, improve generation quality, and evaluate watermarking techniques. The repository serves as a valuable resource for those interested in the field of watermarking for LLMs.

LLM-LieDetector

This repository contains code for reproducing experiments on lie detection in black-box LLMs by asking unrelated questions. It includes Q/A datasets, prompts, and fine-tuning datasets for generating lies with language models. The lie detectors rely on asking binary 'elicitation questions' to diagnose whether the model has lied. The code covers generating lies from language models, training and testing lie detectors, and generalization experiments. It requires access to GPUs and OpenAI API calls for running experiments with open-source models. Results are stored in the repository for reproducibility.
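
The idea of diagnosing a lie from binary elicitation questions can be sketched as follows. This is a minimal illustration under stated assumptions, not the repository's code: the yes/no answers are made-up toy data, the three questions are hypothetical, and using a small logistic regression over the answers is an assumption about how such a detector could be built.

```python
import math

# Toy, made-up data: each row holds yes(1)/no(0) answers that a model gave to
# three hypothetical elicitation questions asked after its original answer.
ANSWERS = [
    [1, 1, 0], [1, 0, 0], [1, 1, 0], [1, 1, 1],  # after truthful answers
    [0, 0, 1], [0, 1, 1], [0, 0, 1], [0, 0, 0],  # after lies
]
LIED = [0, 0, 0, 0, 1, 1, 1, 1]  # 1 = the model had lied


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_detector(features, labels, epochs=500, lr=0.5):
    """Fit logistic regression with plain full-batch gradient descent."""
    n = len(features)
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        # Step along the negative mean gradient of the log loss.
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def lie_probability(weights, bias, answers):
    """Estimated probability that the model lied, given its yes/no answers."""
    return sigmoid(sum(w * a for w, a in zip(weights, answers)) + bias)


weights, bias = train_detector(ANSWERS, LIED)
print(round(lie_probability(weights, bias, [0, 0, 1]), 3))
print(round(lie_probability(weights, bias, [1, 1, 0]), 3))
```

The detector only sees the answers to the follow-up questions, never the model's internals, which is what makes the approach applicable to black-box LLMs.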

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

langtest

LangTest is a comprehensive evaluation library for custom LLM and NLP models. It aims to deliver safe and effective language models by providing tools to test model quality, augment training data, and support popular NLP frameworks. LangTest comes with benchmark datasets for challenging and improving language models across a range of linguistic tasks. The tool offers more than 60 distinct types of tests that can be run with one line of code, covering aspects like robustness, bias, representation, fairness, and accuracy. It supports testing LLMs for question answering, toxicity, clinical tests, legal support, factuality, sycophancy, and summarization.