gaianet-node

Install and run your own AI agent service

Stars: 4870

GaiaNet-node is a tool that lets users run their own GaiaNet node and interact with the AI agent it hosts. It provides commands to install the default node software stack, initialize the node with model files and vector database files, start the node, stop the node, and update its configuration. Users can initialize a node from a pre-set configuration or pass a URL to a custom one. Once the node is running, users can chat with the AI agent and view node information in a browser. Installation normally requires sudo privileges, but the node can also be installed without sudo using specific commands.

README:

Japanese (日本語) | Chinese (中文) | Korean (한국어) | Turkish (Türkçe) | Farsi (فارسی) | Arabic (العربية) | Indonesian | Russian (русский) | Portuguese (português) | We need your help to translate this README into your native language.

Like our work? ⭐ Star us!

Check out our official docs and a Manning ebook on how to customize open-source models.

Install the default node software stack with a single command on Mac, Linux, or Windows WSL.

curl -sSfL 'https://github.com/GaiaNet-AI/gaianet-node/releases/latest/download/install.sh' | bash

Then follow the prompt on your screen to set up the environment path. The command it asks you to run begins with source.
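If the shell reports that gaianet is not found after installation, a likely cause is that the source command from the previous step has not been run in the current shell. The function below is a hypothetical helper. The name require_cmd is ours and is not part of GaiaNet. It fails early with a hint and relies only on the standard command -v builtin.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of GaiaNet): check that a command is on PATH.
require_cmd() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "error: '$1' not found on PATH." >&2
    echo "hint: run the 'source ...' command printed by the installer, or open a new shell." >&2
    return 1
  fi
}

# Example use before running the node commands:
# require_cmd gaianet && gaianet init
```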

Initialize the node. This downloads the model files and vector database files specified in the $HOME/gaianet/config.json file. It can take a few minutes because the files are large.

gaianet init

Start the node.

gaianet start

The script prints the official node address on the console, as follows. Open that URL in a browser to see the node information and chat with the AI agent on the node.

... ... https://0xf63939431ee11267f4855a166e11cc44d24960c0.us.gaianet.network
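When you start the node from a script rather than by hand, you may want to capture that address from the console output. The sketch below makes two assumptions:

* The address always has the shape shown above: https://0x followed by the hex node id, optional subdomains, and gaianet.network.
* gaianet start returns once the services are up, as the sample output suggests.

The name node_url is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first GaiaNet node address found on stdin.
# Assumes the address format shown in the sample output above.
node_url() {
  grep -oE 'https://0x[0-9a-fA-F]+\.([A-Za-z0-9-]+\.)*gaianet\.network' | head -n 1
}

# Example use (assumes `gaianet start` exits after launching the services):
# gaianet start 2>&1 | tee start.log
# url="$(node_url < start.log)"
# echo "Open $url in a browser"
```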

To stop the node, run the following command.

gaianet stop

Install the software

curl -sSfL 'https://raw.githubusercontent.com/GaiaNet-AI/gaianet-node/main/install.sh' | bash

The output should look like this:

[+] Downloading default config file ...

[+] Downloading nodeid.json ...

[+] Installing WasmEdge with wasi-nn_ggml plugin ...

Info: Detected Linux-x86_64

Info: WasmEdge Installation at /home/azureuser/.wasmedge

Info: Fetching WasmEdge-0.13.5

/tmp/wasmedge.2884467 ~/gaianet

######################################################################## 100.0%

~/gaianet

Info: Fetching WasmEdge-GGML-Plugin

Info: Detected CUDA version:

/tmp/wasmedge.2884467 ~/gaianet

######################################################################## 100.0%

~/gaianet

Installation of wasmedge-0.13.5 successful

WasmEdge binaries accessible

The WasmEdge Runtime wasmedge version 0.13.5 is installed in /home/azureuser/.wasmedge/bin/wasmedge.

[+] Installing Qdrant binary...

* Download Qdrant binary

################################################################################################## 100.0%

* Initialize Qdrant directory

[+] Downloading the rag-api-server.wasm ...

################################################################################################## 100.0%

[+] Downloading dashboard ...

################################################################################################## 100.0%

By default, it installs into the $HOME/gaianet directory. You can also choose to install into an alternative directory.

curl -sSfL 'https://raw.githubusercontent.com/GaiaNet-AI/gaianet-node/main/install.sh' | bash -s -- --base $HOME/gaianet.alt

Initialize the node:

gaianet init

The output should look like this:

[+] Downloading Llama-2-7b-chat-hf-Q5_K_M.gguf ...

############################################################################################################################## 100.0%

[+] Downloading all-MiniLM-L6-v2-ggml-model-f16.gguf ...

############################################################################################################################## 100.0%

[+] Creating 'default' collection in the Qdrant instance ...

* Start a Qdrant instance ...

* Remove the existed 'default' Qdrant collection ...

* Download Qdrant collection snapshot ...

############################################################################################################################## 100.0%

* Import the Qdrant collection snapshot ...

* Recovery is done successfully

The init command initializes the node according to the $HOME/gaianet/config.json file. You can also use one of our pre-set configurations. For example, the command below initializes a node with the Llama-3 8B model and a London guidebook as its knowledge base.

gaianet init --config https://raw.githubusercontent.com/GaiaNet-AI/node-configs/main/llama-3-8b-instruct_london/config.json

To see a list of pre-set configurations, run gaianet init --help.

Besides pre-set configurations like gaianet_docs, you can also pass a URL to your own config.json to initialize the node to the state you'd like.
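init accepts either a pre-set configuration or a config.json URL. A small wrapper can make that choice, and the optional base directory, explicit in scripts. This is a sketch only, with these assumptions:

* init_node and the GAIANET override variable are hypothetical names.
* Pre-set names are also passed via --config.
* --config and --base can be combined in a single gaianet init call. The examples in this README show them only separately.

```shell
#!/usr/bin/env bash
# Sketch: initialize a node from a pre-set name or a config.json URL.
# GAIANET is a hypothetical override (e.g. GAIANET=echo for a dry run).
GAIANET="${GAIANET:-gaianet}"

# Usage: init_node <config-url-or-preset> [base-dir]
init_node() {
  local config="${1:-}" base="${2:-}"
  if [ -z "$config" ]; then
    echo "usage: init_node <config-url-or-preset> [base-dir]" >&2
    return 2
  fi
  # Assumption: --config and --base may be combined in one call.
  if [ -n "$base" ]; then
    "$GAIANET" init --config "$config" --base "$base"
  else
    "$GAIANET" init --config "$config"
  fi
}

# Example:
# init_node https://raw.githubusercontent.com/GaiaNet-AI/node-configs/main/llama-3-8b-instruct_london/config.json "$HOME/gaianet.alt"
```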

To initialize a node installed in an alternative directory, run:

gaianet init --base $HOME/gaianet.alt

Start the node:

gaianet start

The output should look like this:

[+] Starting Qdrant instance ...

Qdrant instance started with pid: 39762

[+] Starting LlamaEdge API Server ...

Run the following command to start the LlamaEdge API Server:

wasmedge --dir .:./dashboard --nn-preload default:GGML:AUTO:Llama-2-7b-chat-hf-Q5_K_M.gguf --nn-preload embedding:GGML:AUTO:all-MiniLM-L6-v2-ggml-model-f16.gguf rag-api-server.wasm --model-name Llama-2-7b-chat-hf-Q5_K_M,all-MiniLM-L6-v2-ggml-model-f16 --ctx-size 4096,384 --prompt-template llama-2-chat --qdrant-collection-name default --web-ui ./ --socket-addr 0.0.0.0:8080 --log-prompts --log-stat --rag-prompt "Use the following pieces of context to answer the user's question.\nIf you don't know the answer, just say that you don't know, don't try to make up an answer.\n----------------\n"

LlamaEdge API Server started with pid: 39796

You can also start the node for local use only. It is then accessible only via localhost, not on any of the GaiaNet domain's public URLs.

gaianet start --local-only

You can also start a node installed in an alternative base directory.

gaianet start --base $HOME/gaianet.alt

Stop the node:

gaianet stop

The output should look like this:

[+] Stopping WasmEdge, Qdrant and frpc ...

To stop a node installed in an alternative base directory, run:

gaianet stop --base $HOME/gaianet.alt

The gaianet config subcommand updates the key fields defined in the config.json file. You MUST run gaianet init again after you update the configuration.
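Every gaianet config change must be followed by gaianet init, so a scripted update can bundle the steps. The sketch below is a convenience built on assumptions, not an official workflow:

* It stops the node first and starts it again afterwards. This is our precaution; the README does not require it.
* It appends --base when the hypothetical GAIANET_BASE variable is set. It assumes every subcommand accepts --base after its other options.
* It uses a hypothetical GAIANET override so it can be dry-run with GAIANET=echo.

```shell
#!/usr/bin/env bash
# Sketch: apply `gaianet config` options, then re-initialize and restart.
# GAIANET and GAIANET_BASE are hypothetical variables, not part of GaiaNet.
GAIANET="${GAIANET:-gaianet}"

# Run a gaianet subcommand, appending --base when GAIANET_BASE is set.
gn() {
  if [ -n "${GAIANET_BASE:-}" ]; then
    "$GAIANET" "$@" --base "$GAIANET_BASE"
  else
    "$GAIANET" "$@"
  fi
}

# Usage: reconfigure_node <config options...>
reconfigure_node() {
  gn stop || true          # precaution; ignore a failed stop (e.g. node not running)
  gn config "$@" || return # stop here if the config update fails
  gn init || return        # required after any config change
  gn start
}

# Example:
# reconfigure_node --chat-ctx-size 5120
```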

To update the chat field, for example, use the following command:

gaianet config --chat-url "https://huggingface.co/second-state/Llama-2-13B-Chat-GGUF/resolve/main/Llama-2-13b-chat-hf-Q5_K_M.gguf"

To update the chat_ctx_size field, for example, use the following command:

gaianet config --chat-ctx-size 5120

Below are all options of the config subcommand.

$ gaianet config --help

Usage: gaianet config [OPTIONS]

Options:

--chat-url <url> Update the url of chat model.

--chat-ctx-size <val> Update the context size of chat model.

--embedding-url <url> Update the url of embedding model.

--embedding-ctx-size <val> Update the context size of embedding model.

--prompt-template <val> Update the prompt template of chat model.

--port <val> Update the port of LlamaEdge API Server.

--system-prompt <val> Update the system prompt.

--rag-prompt <val> Update the rag prompt.

--rag-policy <val> Update the rag policy [Possible values: system-message, last-user-message].

--reverse-prompt <val> Update the reverse prompt.

--domain <val> Update the domain of GaiaNet node.

--snapshot <url> Update the Qdrant snapshot.

--qdrant-limit <val> Update the max number of result to return.

--qdrant-score-threshold <val> Update the minimal score threshold for the result.

--base <path> The base directory of GaiaNet node.

--help Show this help message

Have fun!
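The start log above shows the LlamaEdge API server listening on --socket-addr 0.0.0.0:8080. You can change the port with gaianet config --port. Assuming the server exposes the OpenAI-compatible /v1/chat/completions endpoint that LlamaEdge API servers provide, you can also query a node from the command line. This is a sketch with these limitations:

* chat_request and ask_node are hypothetical helpers.
* The model name in the example is taken from the sample log.
* The JSON escaping handles only double quotes and backslashes, not newlines or other control characters.

```shell
#!/usr/bin/env bash
# Sketch: send one chat message to a running node's API server.
# Assumes an OpenAI-compatible /v1/chat/completions endpoint.

# Escape backslashes and double quotes for a JSON string (no newline handling).
json_escape() {
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# Build a minimal chat-completions request body.
chat_request() {
  local model="$1" message="$2"
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}' \
    "$(json_escape "$model")" "$(json_escape "$message")"
}

# Usage: ask_node <model> <message> [base-url]
ask_node() {
  local model="$1" message="$2" base="${3:-http://localhost:8080}"
  curl -sSf "$base/v1/chat/completions" \
    -H 'Content-Type: application/json' \
    -d "$(chat_request "$model" "$message")"
}

# Example:
# ask_node Llama-2-7b-chat-hf-Q5_K_M "What is GaiaNet?"
```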


Alternative AI tools for gaianet-node

Similar Open Source Tools


blog

This repository contains a simple blog application built using Python and Flask framework. It allows users to create, read, update, and delete blog posts. The application uses SQLite database for storing blog data and provides a basic user interface for interacting with the blog. The code is well-organized and easy to understand, making it suitable for beginners looking to learn web development with Python and Flask.

Drag-and-Drop-LLMs

Drag-and-Drop LLMs (DnD) is a prompt-conditioned parameter generator that eliminates per-task training by mapping task prompts directly to LoRA weight updates. It uses a lightweight text encoder to distill prompt batches into condition embeddings, transformed by a cascaded hyper convolutional decoder into LoRA matrices. DnD offers up to 12,000× lower overhead than full fine-tuning, gains up to 30% in performance over strong LoRAs on various tasks, and shows robust cross-domain generalization. It provides a rapid way to specialize large language models without gradient-based adaptation.

Tegridy-MIDI-Dataset

Tegridy MIDI Dataset is an ultimate multi-instrumental MIDI dataset designed for Music Information Retrieval (MIR) and Music AI purposes. It provides a comprehensive collection of MIDI datasets and essential software tools for MIDI editing, rendering, transcription, search, classification, comparison, and various other MIDI applications.

aipan-netdisk-search

Aipan-Netdisk-Search is a free and open-source web project for searching netdisk resources. Because it relies on third-party APIs with IP access restrictions, self-deployment is recommended. The project can be easily deployed on Vercel and provides instructions for manual deployment. Users can clone the project, install dependencies, run it locally, and access it in the browser at localhost:3001. The project also includes documentation for deploying on personal servers using Nuxt.js. Additionally, there are options for donations and communication via WeChat.

weixin-dyh-ai

WeiXin-Dyh-AI is a backend management system that supports integrating WeChat subscription accounts with AI services. It currently supports integration with Ali AI, Moonshot, and Tencent Hunyuan. Users can configure different AI models to simulate and interact with AI in multiple modes: text-based knowledge Q&A, text-to-image drawing, image description, and text-to-voice conversion, enabling human-AI conversations on WeChat. The system allows hierarchical AI prompt settings at the system, subscription account, and WeChat user levels. Users can configure AI model types, providers, and specific instances. The system also supports rules for allocating models and keys at different levels. It addresses limitations of WeChat's messaging system and offers features like text-based commands and voice support for interactions with AI.

Comfyui-zhenzhen

Comfyui-zhenzhen is a repository that provides various API nodes for generating images, videos, and text using different AI models. It includes nodes for models like Nano Banana, Veo3, Sora2, and more. The repository offers tools for image editing, video creation, and text generation, with options for customizing parameters and outputs. Users can access different AI models for specific tasks like image generation, video editing, and text creation.

rime_wanxiang_pro

Rime Wanxiang Pro is an enhanced version of Wanxiang, supporting the 9, 14, and 18-key layouts. It features a pinyin library with optimized word and language models, supporting accurate sentence output with tones. The tool also allows for mixed Chinese and English input, offering various usage scenarios. Users can customize their input method by selecting different decoding and auxiliary code rules, enabling flexible combinations of pinyin and auxiliary codes. The tool simplifies the complex configuration of Rime and provides a unified word library for multiple input methods, enhancing input efficiency and user experience.

fastllm

FastLLM is a high-performance large model inference library implemented in pure C++ with no third-party dependencies. Models of 6-7B size can run smoothly on Android devices. Deployment communication QQ group: 831641348

AHU-AI-Repository

This repository is dedicated to the learning and exchange of resources for the School of Artificial Intelligence at Anhui University. Notes are published first on this website, https://www.aoaoaoao.cn, and synchronized to the repository regularly. You can also contact me by email.

nonebot_plugin_naturel_gpt

NoneBotPluginNaturelGPT is a plugin for NoneBot that enhances the GPT chat AI with more human-like characteristics. It supports multiple customizable personalities, preset sharing, and various features to improve chat interactions. Users can create personalized chat experiences, enable context-aware conversations, and benefit from features like long-term memory, user-specific impressions, and data persistence. The plugin also allows for personality switching, custom trigger words, content blocking, and more. It offers extensive capabilities for enhancing chat interactions and enabling AI to actively participate in conversations.

unity-AI-Chat-Toolkit

The Unity-AI-Chat-Toolkit is a toolset for Unity developers to quickly implement AI chat-related functions. Currently, this library includes code implementations for API calls to large language models such as ChatGPT, RWKV, and ChatGLM, as well as web API access to Microsoft Azure and Baidu AI for speech synthesis and speech recognition. With this library, developers can quickly build cross-platform applications in Unity.

LLMAI-writer

LLMAI-writer is a powerful AI tool for assisting in novel writing, utilizing state-of-the-art large language models to help writers brainstorm, plan, and create novels. Whether you are an experienced writer or a beginner, LLMAI-writer can help you efficiently complete the writing process.

meet-libai

The 'meet-libai' project aims to promote and popularize the cultural heritage of the Chinese poet Li Bai by constructing a knowledge graph of Li Bai and training a specialized AI agent using large models. The project includes features such as data preprocessing, knowledge graph construction, question-answering system development, and visual exploration of the graph structure. It also provides code implementations for large models and RAG (retrieval-augmented generation).

ezwork-ai-doc-translation

EZ-Work AI Document Translation is an AI document translation assistant accessible to everyone. It enables quick and cost-effective use of major language model APIs like OpenAI to translate documents in formats such as TXT, Word, CSV, Excel, PDF, and PPT. It supports scanned PDFs, OpenAI-format endpoints via an intermediary API, batch operations, multi-threading, and Docker deployment.

For similar tasks


airo

Airo is a tool designed to simplify the process of deploying containers to self-hosted servers. It allows users to focus on building their products without the complexity of Kubernetes or CI/CD pipelines. With Airo, users can easily build and push Docker images, deploy instantly with a single command, update configurations securely using SSH, and set up HTTPS and reverse proxy automatically using Caddy.

amazon-sagemaker-llm-fine-tuning-remote-decorator

This repository provides interactive fine-tuning of Foundation Models with Amazon SageMaker Training using the @remote decorator. It showcases the use of SageMaker AI capabilities for Small/Large Language Models fine-tuning by employing different distribution techniques like FSDP and DDP. Users can run the repository from Amazon SageMaker Studio or a local IDE. The notebooks cover various supervised and self-supervised fine-tuning scenarios for different models, along with instructions for updating configurations based on the AWS region and Python version compatibility.

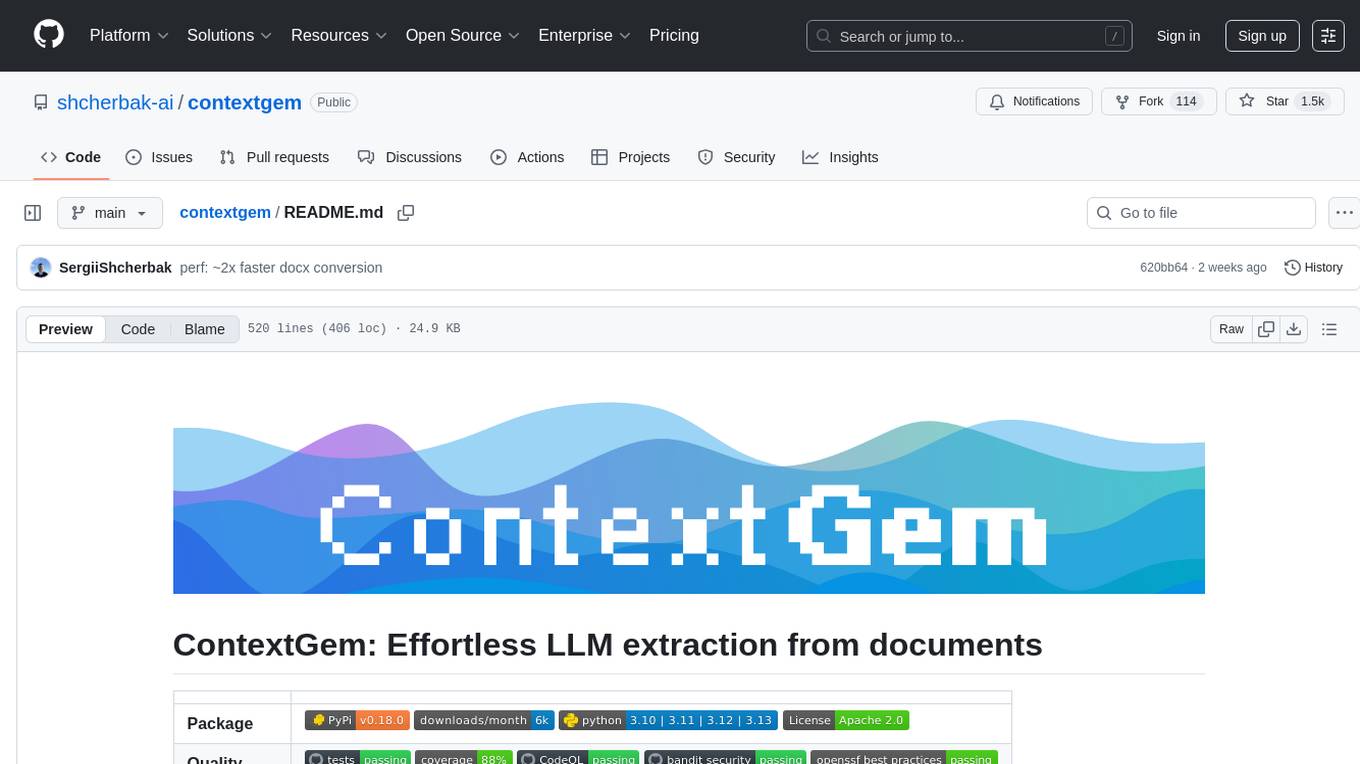

contextgem

Contextgem is a Ruby gem that provides a simple way to manage context-specific configurations in your Ruby applications. It allows you to define different configurations based on the context in which your application is running, such as development, testing, or production. This helps you keep your configuration settings organized and easily accessible, making it easier to maintain and update your application. With Contextgem, you can easily switch between different configurations without having to modify your code, making it a valuable tool for managing complex applications with multiple environments.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.