Awesome-CVPR2024-AIGC

A Collection of Papers and Codes for CVPR2024 AIGC

Stars: 251

A compilation of this year's CVPR AIGC-related papers and code, listed below.

Please feel free to star, fork, or open a PR if you find this helpful~

CVPR 2024 official website: https://cvpr.thecvf.com/Conferences/2024

Full list of CVPR 2024 accepted papers: https://cvpr.thecvf.com/Conferences/2024/AcceptedPapers

Conference dates: June 17-21, 2024

Paper acceptance announcement: February 27, 2024

【Contents】

- 1. Image Generation / Image Synthesis

- 2. Image Editing

- 3. Video Generation / Video Synthesis

- 4. Video Editing

- 5. 3D Generation / 3D Synthesis

- 6. 3D Editing

- 7. Multi-Modal Large Language Models

- 8. Others (Other Tasks)

Arbitrary-Scale Image Generation and Upsampling using Latent Diffusion Model and Implicit Neural Decoder

- Paper: https://arxiv.org/abs/2403.10255

- Code:

CHAIN: Enhancing Generalization in Data-Efficient GANs via lipsCHitz continuity constrAIned Normalization

- Paper: https://arxiv.org/abs/2404.00521

- Code:

- Paper: https://arxiv.org/abs/2311.15773

- Code:

- Paper: https://arxiv.org/abs/2312.15905

- Code:

- Paper:

- Code: https://github.com/haofengl/DragNoise

DreamMatcher: Appearance Matching Self-Attention for Semantically-Consistent Text-to-Image Personalization

- Paper: https://arxiv.org/abs/2311.18822

- Code: https://github.com/MoayedHajiAli/ElasticDiffusion-official

- Paper:

- Code:

- Paper:

- Code:

FaceChain-SuDe: Building Derived Class to Inherit Category Attributes for One-shot Subject-Driven Generation

- Paper: https://arxiv.org/abs/2403.06775

- Code:

- Paper:

- Code: https://github.com/aim-uofa/FreeCustom

- Paper: https://www.cs.jhu.edu/~alanlab/Pubs24/chen2024towards.pdf

- Code: https://github.com/MrGiovanni/DiffTumor

- Paper: https://arxiv.org/abs/2404.13353

- Code:

- Paper: https://arxiv.org/abs/2311.10329

- Code: https://github.com/CodeGoat24/Face-diffuser?tab=readme-ov-file

- Paper:

- Code: https://github.com/xiefan-guo/initno

- Paper: https://arxiv.org/abs/2401.01952

- Code:

Intriguing Properties of Diffusion Models: An Empirical Study of the Natural Attack Capability in Text-to-Image Generative Models

LAKE-RED: Camouflaged Images Generation by Latent Background Knowledge Retrieval-Augmented Diffusion

- Paper:

- Code: https://github.com/PanchengZhao/LAKE-RED

- Paper: https://arxiv.org/abs/2312.07330

- Code: https://github.com/cvlab-stonybrook/Large-Image-Diffusion

- Paper: https://arxiv.org/abs/2402.08654

- Code: https://github.com/ttchengab/continuous_3d_words_code/

- Paper: https://arxiv.org/abs/2311.15841

- Code:

LeftRefill: Filling Right Canvas based on Left Reference through Generalized Text-to-Image Diffusion Model

- Paper:

- Code: https://github.com/ewrfcas/LeftRefill

- Paper: https://arxiv.org/abs/2404.02883

- Code:

Perturbing Attention Gives You More Bang for the Buck: Subtle Imaging Perturbations That Efficiently Fool Customized Diffusion Models

- Paper: https://arxiv.org/abs/2404.15081

- Code:

- Paper:

- Code: https://github.com/cszy98/PLACE

- Paper:

- Code: https://github.com/WUyinwei-hah/RRNet

- Paper: https://arxiv.org/abs/2401.09603

- Code: https://github.com/google-research/google-research/tree/master/cmmd

- Paper: https://arxiv.org/abs/2401.08053

- Code:

- Paper: https://arxiv.org/abs/2308.09972

- Code: https://github.com/bcmi/Object-Shadow-Generation-Dataset-DESOBAv2

- Paper: https://arxiv.org/abs/2402.17563

- Code:

- Paper: https://arxiv.org/abs/2403.18978

- Code:

Towards Effective Usage of Human-Centric Priors in Diffusion Models for Text-based Human Image Generation

- Paper: https://arxiv.org/abs/2404.00922

- Code:

- Paper:

- Code: https://github.com/Snowfallingplum/CSD-MT

- Paper: https://arxiv.org/abs/2311.18608

- Code: https://github.com/HyelinNAM/ContrastiveDenoisingScore

- Paper:

- Code: https://github.com/HansSunY/DiffAM

- Paper: https://arxiv.org/abs/2312.10113

- Code: https://github.com/guoqincode/Focus-on-Your-Instruction

- Paper: https://arxiv.org/abs/2403.09632

- Code: https://github.com/guoqincode/Focus-on-Your-Instruction

- Paper: https://arxiv.org/abs/2312.04965

- Code: https://github.com/sled-group/InfEdit

Person in Place: Generating Associative Skeleton-Guidance Maps for Human-Object Interaction Image Editing

- Paper: https://arxiv.org/abs/2303.17546

- Code: https://github.com/YangChangHee/CVPR2024_Person-In-Place_RELEASE?tab=readme-ov-file

- Paper: https://arxiv.org/abs/2403.00483

- Code:

Style Injection in Diffusion: A Training-free Approach for Adapting Large-scale Diffusion Models for Style Transfer

SwitchLight: Co-design of Physics-driven Architecture and Pre-training Framework for Human Portrait Relighting

- Paper: https://arxiv.org/abs/2402.18848

- Code:

BIVDiff: A Training-Free Framework for General-Purpose Video Synthesis via Bridging Image and Video Diffusion Models

DiffPerformer: Iterative Learning of Consistent Latent Guidance for Diffusion-based Human Video Generation

- Paper: https://arxiv.org/abs/2404.00234

- Code: https://github.com/taegyeong-lee/Grid-Diffusion-Models-for-Text-to-Video-Generation

Lodge: A Coarse to Fine Diffusion Network for Long Dance Generation guided by the Characteristic Dance Primitives

- Paper: https://arxiv.org/abs/2311.17590

- Code:

A Video is Worth 256 Bases: Spatial-Temporal Expectation-Maximization Inversion for Zero-Shot Video Editing

- Paper:

- Code: https://github.com/zhangguiwei610/CAMEL

VMC: Video Motion Customization using Temporal Attention Adaption for Text-to-Video Diffusion Models

- Paper: https://arxiv.org/abs/2312.00845

- Code: https://github.com/HyeonHo99/Video-Motion-Customization

Consistent3D: Towards Consistent High-Fidelity Text-to-3D Generation with Deterministic Sampling Prior

DiffSHEG: A Diffusion-Based Approach for Real-Time Speech-driven Holistic 3D Expression and Gesture Generation

- Paper: https://paperswithcode.com/paper/diffusion-time-step-curriculum-for-one-image

- Code: https://github.com/yxymessi/DTC123

- Paper: https://arxiv.org/abs/2312.12274

- Code: https://github.com/Peter-Kocsis/IntrinsicImageDiffusion

- Paper: https://arxiv.org/abs/2402.05746

- Code: https://github.com/yifanlu0227/ChatSim?tab=readme-ov-file

One-2-3-45++: Fast Single Image to 3D Objects with Consistent Multi-View Generation and 3D Diffusion

Paint-it: Text-to-Texture Synthesis via Deep Convolutional Texture Map Optimization and Physically-Based Rendering

- Paper: https://arxiv.org/abs/2403.07773

- Code: https://github.com/zoomin-lee/SemCity?tab=readme-ov-file

- Paper: https://arxiv.org/abs/2312.09250

- Code: https://github.com/google-research/google-research/tree/master/mesh_diffusion

- Paper: https://cvlab.cse.msu.edu/pdfs/Ren_Kim_Liu_Liu_TIGER_supp.pdf

- Code: https://github.com/Zhiyuan-R/Tiger-Diffusion

Chat-UniVi: Unified Visual Representation Empowers Large Language Models with Image and Video Understanding

- Paper: https://arxiv.org/abs/2312.02974

- Code: https://github.com/Understanding-Visual-Datasets/VisDiff

- Paper: https://arxiv.org/abs/2404.11207

- Code: https://github.com/zycheiheihei/transferable-visual-prompting

- Paper: https://arxiv.org/abs/2403.19949

- Code: https://github.com/Harvard-Ophthalmology-AI-Lab/FairCLIP

FairDeDup: Detecting and Mitigating Vision-Language Fairness Disparities in Semantic Dataset Deduplication

- Paper: https://arxiv.org/abs/2404.16123

- Code:

- Paper: https://arxiv.org/abs/2404.00909

- Code:

Let's Think Outside the Box: Exploring Leap-of-Thought in Large Language Models with Creative Humor Generation

Mitigating Object Hallucinations in Large Vision-Language Models through Visual Contrastive Decoding

MoPE-CLIP: Structured Pruning for Efficient Vision-Language Models with Module-wise Pruning Error Metric

- Paper: https://arxiv.org/abs/2403.07839

- Code:

OPERA: Alleviating Hallucination in Multi-Modal Large Language Models via Over-Trust Penalty and Retrospection-Allocation

- Paper: https://arxiv.org/abs/2404.09011

- Code:

- Paper: https://arxiv.org/abs/2404.01156

- Code:

- Paper: https://arxiv.org/abs/2403.12532

- Code:

AEROBLADE: Training-Free Detection of Latent Diffusion Images Using Autoencoder Reconstruction Error

Continuously updated~

Alternative AI tools for Awesome-CVPR2024-AIGC

Similar Open Source Tools

aigc-platform-server

This project aims to integrate mainstream open-source large models to achieve the coordination and cooperation between different types of large models, providing comprehensive and flexible AI content generation services.

MachineLearning

MachineLearning is a repository focused on practical applications in various algorithm scenarios such as ship, education, and enterprise development. It covers a wide range of topics from basic machine learning and deep learning to object detection and the latest large models. The project utilizes mature third-party libraries, open-source pre-trained models, and the latest technologies from related papers to document the learning process and facilitate direct usage by a wider audience.

100-days-of-code

100 Days of Code is a repository containing 100 frontend challenges with professional designs, user stories, and acceptance criteria. It provides a platform for developers to practice coding daily, from beginner-friendly cards to advanced dashboards. The challenges are structured for AI collaboration, allowing users to work with coding agents like Claude, Cursor, and GitHub Copilot. The repository also includes an AI Collaboration Log template to document the use of AI tools and showcase effective collaboration. Developers can replicate design mockups, add interactivity with JavaScript, and share their solutions on social media platforms.

awesome-chatgpt-zh

The Awesome ChatGPT Chinese Guide project aims to help Chinese users understand and use ChatGPT. It collects various free and paid ChatGPT resources, as well as methods to communicate more effectively with ChatGPT in Chinese. The repository contains a rich collection of ChatGPT tools, applications, and examples.

ai-enablement-stack

The AI Enablement Stack is a curated collection of venture-backed companies, tools, and technologies that enable developers to build, deploy, and manage AI applications. It provides a structured view of the AI development ecosystem across five key layers: Agent Consumer Layer, Observability and Governance Layer, Engineering Layer, Intelligence Layer, and Infrastructure Layer. Each layer focuses on specific aspects of AI development, from end-user interaction to model training and deployment. The stack aims to help developers find the right tools for building AI applications faster and more efficiently, assist engineering leaders in making informed decisions about AI infrastructure and tooling, and help organizations understand the AI development landscape to plan technology adoption.

ShitCodify

ShitCodify is an AI-powered tool that transforms normal, readable, and maintainable code into hard-to-understand, hard-to-maintain 'shit code'. It uses large language models like GPT-4 to analyze code and apply various 'anti-patterns' and bad practices to reduce code readability and maintainability while keeping the code functional.

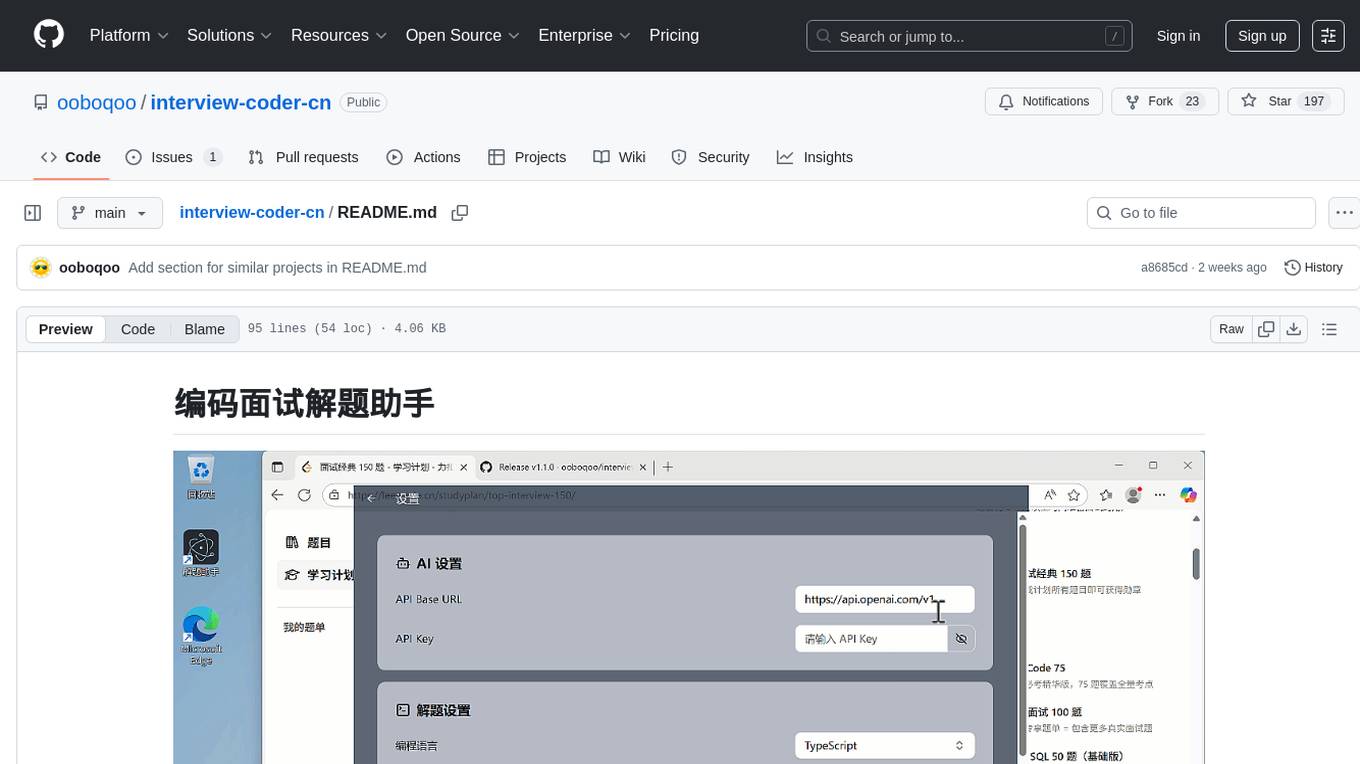

interview-coder-cn

This is a coding problem-solving assistant for Chinese users, tailored to the domestic AI ecosystem, simple and easy to use. It provides real-time problem-solving ideas and code analysis for coding interviews, avoiding detection during screen sharing. Users can also extend its functionality for other scenarios by customizing prompt words. The tool supports various programming languages and has stealth capabilities to hide its interface from interviewers even when screen sharing.

ComfyUI-BRIA_AI-RMBG

ComfyUI-BRIA_AI-RMBG is an unofficial implementation of the BRIA Background Removal v1.4 model for ComfyUI. The tool supports batch processing, including video background removal, and introduces a new mask output feature. Users can install the tool using ComfyUI Manager or manually by cloning the repository. The tool includes nodes for automatically loading the Removal v1.4 model and removing backgrounds. Updates include support for batch processing and the addition of a mask output feature.

claude-code-engingeering

Claude Code is an advanced AI Agent framework that goes beyond a smart command-line tool. It is programmable, extensible, and composable, allowing users to teach it project specifications, split tasks into sub-agents, provide domain skills, automate responses to specific events, and integrate it into CI/CD pipelines for unmanned operation. The course aims to transform users from 'users' of Claude Code to 'masters' who can design agent 'memories', delegate tasks to sub-agents, build reusable skill packages, drive automation workflows with code, and collaborate with intelligent agents in a dance of development.

MathModelAgent

MathModelAgent is an agent designed specifically for mathematical modeling tasks. It automates the process of mathematical modeling and generates a complete paper that can be directly submitted. The tool features automatic problem analysis, code writing, error correction, and paper writing. It supports various models, offers low costs, and allows customization through prompt inject. The tool is ideal for individuals or teams working on mathematical modeling projects.

Stable-Diffusion

Stable Diffusion is a text-to-image AI model that can generate realistic images from a given text prompt. It is a powerful tool that can be used for a variety of creative and practical applications, such as generating concept art, creating illustrations, and designing products. Stable Diffusion is also a great tool for learning about AI and machine learning. This repository contains a collection of tutorials and resources on how to use Stable Diffusion.

llm-dev

The 'llm-dev' repository contains source code and resources for the book 'Practical Projects of Large Models: Multi-Domain Intelligent Application Development'. It covers topics such as language model basics, application architecture, working modes, environment setup, model installation, fine-tuning, quantization, multi-modal model applications, chat applications, programming large model applications, VS Code plugin development, enhanced generation applications, translation applications, intelligent agent applications, speech model applications, digital human applications, model training applications, and AI town applications.

Awesome-Lists

Awesome-Lists is a curated list of awesome lists across various domains of computer science and beyond, including programming languages, web development, data science, and more. It provides a comprehensive index of articles, books, courses, open source projects, and other resources. The lists are organized by topic and subtopic, making it easy to find the information you need. Awesome-Lists is a valuable resource for anyone looking to learn more about a particular topic or to stay up-to-date on the latest developments in the field.

azooKey-Desktop

azooKey-Desktop is an open-source Japanese input system for macOS that incorporates the high-precision neural kana-kanji conversion engine 'Zenzai'. It offers features such as neural kana-kanji conversion, profile prompt, history learning, user dictionary, integration with personal optimization system 'Tuner', 'nice feeling conversion' with LLM, live conversion, and native support for AZIK. The tool is currently in alpha version, and its operation is not guaranteed. Users can install it via `.pkg` file or Homebrew. Development contributions are welcome, and the project has received support from the Information-technology Promotion Agency, Japan (IPA) for the 2024 fiscal year's untapped IT human resources discovery and nurturing project.