LLM-And-More

LLM-And-More is a professional, plug-and-play, llm trainer and application builder that guides you through the complete LLM workflow from data to evaluation, from training to deployment, from idea to sevice. / LLM-And-More 是一个专业、开箱即用的大模型训练及应用构建一站式解决方案,包含从数据到评估、从训练到部署、从想法到服务的全流程最佳实践。

Stars: 447

LLM-And-More is a one-stop solution for training and applying large models, covering the entire process from data processing to model evaluation, from training to deployment, and from idea to service. In this project, users can easily train models through this project and generate the required product services with one click.

README:

LLM-And-More 是一站式大模型训练及应用构建的解决方案,其覆盖了从数据处理到模型评估、从训练到部署、从想法到服务等整个流程。在本项目中,用户可以轻松地通过本项目进行模型训练并一键生成所需的产品服务。

本项目的优势主要体现在以下三点:

- 总结了不同应用场景下的专业知识和最佳实践,以保证模型在实际生产中的表现优异。

- 集成了高性能模型并行框架,有效地减少了训练和推理时的算力开销。

- 用户可以基于自身需要定制化模型及服务,便捷且自由度高。

https://github.com/IceBearAI/LLM-And-More/assets/9782796/cc410f8e-00eb-46d3-820f-03860ea4f743

如有任何问题,欢迎加入我们的微信群交流!

LLM-And-More致力于为专业开发者和一线业务人员提供专业、易用的LLM应用构建方案。LLM-And-More将LLM应用开发过程分解为以下六个模块:

这些模块涵盖了开发一个LLM产品服务的所有细节。通过注入专业知识和性能优化组件,全流程协助用户构建符合自身需求的LLM应用。

数据模块是构建LLM应用的首要组成部分,直接影响最终效果。LLM-And-More提供全面的数据标注平台,包括任务管理、分配和自主标注功能。标注完成后,数据自动转换为模型可处理的jsonl格式,并存入本地数据库,以便后续训练和评估模块使用。此外,LLM-And-More还提供了数据质量一键检测,用户可通过检测报告发现标注过程中可能存在的错误,从而提升模型训练的最终效果。

训练模块LLM应用构建中最关键、最复杂的部分。LLM-And-More提供了一个即开即用的高性能模型训练框架。该框架使用户无需深入了解深度学习,即可轻松实现最佳实践。在训练模块中,用户可以自由调整基座模型、训练方式、批处理大小、学习率等超参数。如果用户对此不甚了解,LLM-And-More提供智能默认参数,帮助用户选择和调整参数。此外,LLM-And-More还自动为用户提供DeepSpeed多卡多机加速适配,节省训练时间,最大程度利用算力资源。

在训练过程中,用户可能会面临无法清晰观察模型性能变化的困扰。LLM-And-More提供智能化的模型训练监控模块,实时可视化显示CPU、GPU等核心算力资源的使用情况,并监控模型损失、学习率、训练步数的变化。此外,监控模块会智能提示用户可能存在的模型性能风险,并提供适当的解决方案建议。例如,当系统提示“过拟合风险”时,建议“停止训练,降低学习率或增大数据量”,用户可以据此调整训练策略,避免不必要的算力浪费,更准确地把握模型性能。

训练结束后,LLM-And-More提供评估模块,其分为两个主要任务:一是评估模型在训练任务上的性能,检验其在特定任务上的表现;二是评估模型在五个通用维度上的能力,包括推理、阅读理解、语言理解、指令遵从和创新。用户可根据两方面的评估结果调整训练数据和迭代次数,选择最适合的模型应用于线上系统。

除了简单的提供输入输出训练LLM应用外,我们还提供了丰富的场景支持,可以帮助用户更好的解决在工作生产当中遇到的复杂问题。例如,用户可以基于FAQ场景适配方案,直接构建一个客服,协助完成识别客户意图、解决淘宝店铺自动回复的问题;或是基于RAG场景方案,构建一套企业内部规章制度问答机器人。所有场景均具备独立的六个功能模块,但拥有深度定制的UI和专业Know-How。LLM-And-More支持以下场景:

该场景可以接受任意的输入输出,这是最基础的训练场景。在该场景中,我们没有针对数据特征、应用范围等做任何假设,在数据、训练、监控、评估、部署、交互模块中的各项参数均调整至最均衡的水平,并适配了任何场景均有收益的专业Know-How辅助模型训练。总的来说,如果用户不确定应该使用哪个场景,或认为LLM-And-More提供的任何场景均不符合您的要求,您可以选用该场景来开始您的构建。

该场景适用于FAQ客服或FAQ问答机器人。FAQ(Frequently Asked Questions) 即常见问题,通常应用于客服、快速助手、和在线论坛等场景,在这些场景中常见问题往往会反复出现,例如,用户经常以各种不同的表达方式询问发货时间。在FAQ场景中,用户的问题往往被归类,并对于每一类问题有一个统一的回答,例如针对询问发货时间的问题,统一回复“我们将尽快安排发货,请及时查看物流信息”。直接尝试使用大模型生成这些回答往往是事倍功半的,因为没有充分利用数据的特征,很难让大模型回复保持稳定,且常常会发生幻觉现象。为此,我们为FAQ场景设计了涵盖全部六个模块的全流程解决方案,主要引入用户意图识别,让LLM预测用户意图(在上述例子中,“查询物流信息”),而不是直接预测回复(在上述例子中,“我们将尽快安排发货,请及时查看物流信息”)。我们在大量FAQ场景中的实验表明,该解决方案可以提升30%以上的回复准确率,并显著降低模型过拟合风险与幻觉现象。

为了支持更广泛的应用,LLM-And-More支持多种多样的模型,并支持用户选择多种不同的训练方式。

| 模型名 | 模型大小 | 支持的训练方法 |

|---|---|---|

| Baichuan2 | 7B/13B | 全参数训练/Lora |

| ChatGLM3 | 6B | 全参数训练/Lora |

| LLaMA | 7B/13B/33B/65B | 全参数训练/Lora |

| LLaMA-2 | 7B/13B/70B | 全参数训练/Lora |

| Qwen | 0.5B/1.8B/4B/7B/14B/72B | 全参数训练/Lora |

为了实现模型的微调,您可以参考我们的详细指南:模型微调。

您可以将模型部署到任意配备GPU的节点上,无论是私有的K8s集群、Docker集群,还是云服务商提供的K8s集群,均能轻松完成对接。

- 克隆项目:

git clone https://github.com/IceBearAI/LLM-And-More.git - 进入项目:

cd aigc-web

该系统依赖Docker需要安装此服务

推理或训练节点只需要安装Docker和Nvidia-Docker 即可。NVIDIA Container Toolkit

golang版本请安装go1.22以上版本

- 安装依赖包:

go mod tidy - 本地启动:

make run - build成x86 Linux可执行文件:

make build-linux - build成当前电脑可执行文件:

make build

build完通常会保存在 $(GOPATH)/bin/ 目录下

安装docker和docker-compose可以参考官网教程:Install Docker Engine

需要在 当前目录下增加 .env 文件来设置相关的环境变量。

执行命令启动全部服务

$ docker-compose up

如果不需要执行build流程,可以进入到docker目录下执行docker-compose up即可。或把docker-compose.yaml的build注释掉。

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LLM-And-More

Similar Open Source Tools

LLM-And-More

LLM-And-More is a one-stop solution for training and applying large models, covering the entire process from data processing to model evaluation, from training to deployment, and from idea to service. In this project, users can easily train models through this project and generate the required product services with one click.

ai-paint-today-BE

AI Paint Today is an API server repository that allows users to record their emotions and daily experiences, and based on that, AI generates a beautiful picture diary of their day. The project includes features such as generating picture diaries from written entries, utilizing DALL-E 2 model for image generation, and deploying on AWS and Cloudflare. The project also follows specific conventions and collaboration strategies for development.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.

Long-Novel-GPT

Long-Novel-GPT is a long novel generator based on large language models like GPT. It utilizes a hierarchical outline/chapter/text structure to maintain the coherence of long novels. It optimizes API calls cost through context management and continuously improves based on self or user feedback until reaching the set goal. The tool aims to continuously refine and build novel content based on user-provided initial ideas, ultimately generating long novels at the level of human writers.

wechat-robot-client

The Wechat Robot Client is an intelligent robot management system that provides rich interactive experiences. It includes features such as AI chat, drawing, voice, group chat functionalities, song requests, daily summaries, friend circle viewing, friend adding, group chat management, file messaging, multiple login methods support, and more. The system also supports features like sending files, various login methods, and integration with other apps like '王者荣耀' and '吃鸡'. It offers a comprehensive solution for managing Wechat interactions and automating various tasks.

ZcChat

ZcChat is an AI desktop pet suitable for Galgame characters, featuring long-term memory, expressive actions, control over the computer, and voice functions. It utilizes Letta for AI long-term memory, Galgame-style character illustrations for more actions and expressions, and voice interaction with support for various voice synthesis tools like Vits. Users can configure characters, install Letta, set up voice synthesis and input, and control the pet to interact with the computer. The tool enhances visual and auditory experiences for users interested in AI desktop pets.

Code-Review-GPT-Gitlab

A project that utilizes large models to help with Code Review on Gitlab, aimed at improving development efficiency. The project is customized for Gitlab and is developing a Multi-Agent plugin for collaborative review. It integrates various large models for code security issues and stays updated with the latest Code Review trends. The project architecture is designed to be powerful, flexible, and efficient, with easy integration of different models and high customization for developers.

FisherAI

FisherAI is a Chrome extension designed to improve learning efficiency. It supports automatic summarization, web and video translation, multi-turn dialogue, and various large language models such as gpt/azure/gemini/deepseek/mistral/groq/yi/moonshot. Users can enjoy flexible and powerful AI tools with FisherAI.

AIClient-2-API

AIClient-2-API is a versatile and lightweight API proxy designed for developers, providing ample free API request quotas and comprehensive support for various mainstream large models like Gemini, Qwen Code, Claude, etc. It converts multiple backend APIs into standard OpenAI format interfaces through a Node.js HTTP server. The project adopts a modern modular architecture, supports strategy and adapter patterns, comes with complete test coverage and health check mechanisms, and is ready to use after 'npm install'. By easily switching model service providers in the configuration file, any OpenAI-compatible client or application can seamlessly access different large model capabilities through the same API address, eliminating the hassle of maintaining multiple sets of configurations for different services and dealing with incompatible interfaces.

LLM-TPU

LLM-TPU project aims to deploy various open-source generative AI models on the BM1684X chip, with a focus on LLM. Models are converted to bmodel using TPU-MLIR compiler and deployed to PCIe or SoC environments using C++ code. The project has deployed various open-source models such as Baichuan2-7B, ChatGLM3-6B, CodeFuse-7B, DeepSeek-6.7B, Falcon-40B, Phi-3-mini-4k, Qwen-7B, Qwen-14B, Qwen-72B, Qwen1.5-0.5B, Qwen1.5-1.8B, Llama2-7B, Llama2-13B, LWM-Text-Chat, Mistral-7B-Instruct, Stable Diffusion, Stable Diffusion XL, WizardCoder-15B, Yi-6B-chat, Yi-34B-chat. Detailed model deployment information can be found in the 'models' subdirectory of the project. For demonstrations, users can follow the 'Quick Start' section. For inquiries about the chip, users can contact SOPHGO via the official website.

ChuanhuChatGPT

Chuanhu Chat is a user-friendly web graphical interface that provides various additional features for ChatGPT and other language models. It supports GPT-4, file-based question answering, local deployment of language models, online search, agent assistant, and fine-tuning. The tool offers a range of functionalities including auto-solving questions, online searching with network support, knowledge base for quick reading, local deployment of language models, GPT 3.5 fine-tuning, and custom model integration. It also features system prompts for effective role-playing, basic conversation capabilities with options to regenerate or delete dialogues, conversation history management with auto-saving and search functionalities, and a visually appealing user experience with themes, dark mode, LaTeX rendering, and PWA application support.

ezwork-ai-doc-translation

EZ-Work AI Document Translation is an AI document translation assistant accessible to everyone. It enables quick and cost-effective utilization of major language model APIs like OpenAI to translate documents in formats such as txt, word, csv, excel, pdf, and ppt. The tool supports AI translation for various document types, including pdf scanning, compatibility with OpenAI format endpoints via intermediary API, batch operations, multi-threading, and Docker deployment.

XianyuAutoAgent

Xianyu AutoAgent is an AI customer service robot system specifically designed for the Xianyu platform, providing 24/7 automated customer service, supporting multi-expert collaborative decision-making, intelligent bargaining, and context-aware conversations. The system includes intelligent conversation engine with features like context awareness and expert routing, business function matrix with modules like core engine, bargaining system, technical support, and operation monitoring. It requires Python 3.8+ and NodeJS 18+ for installation and operation. Users can customize prompts for different experts and contribute to the project through issues or pull requests.

happy-llm

Happy-LLM is a systematic learning tutorial for Large Language Models (LLM) that covers NLP research methods, LLM architecture, training process, and practical applications. It aims to help readers understand the principles and training processes of large language models. The tutorial delves into Transformer architecture, attention mechanisms, pre-training language models, building LLMs, training processes, and practical applications like RAG and Agent technologies. It is suitable for students, researchers, and LLM enthusiasts with programming experience, Python knowledge, and familiarity with deep learning and NLP concepts. The tutorial encourages hands-on practice and participation in LLM projects and competitions to deepen understanding and contribute to the open-source LLM community.

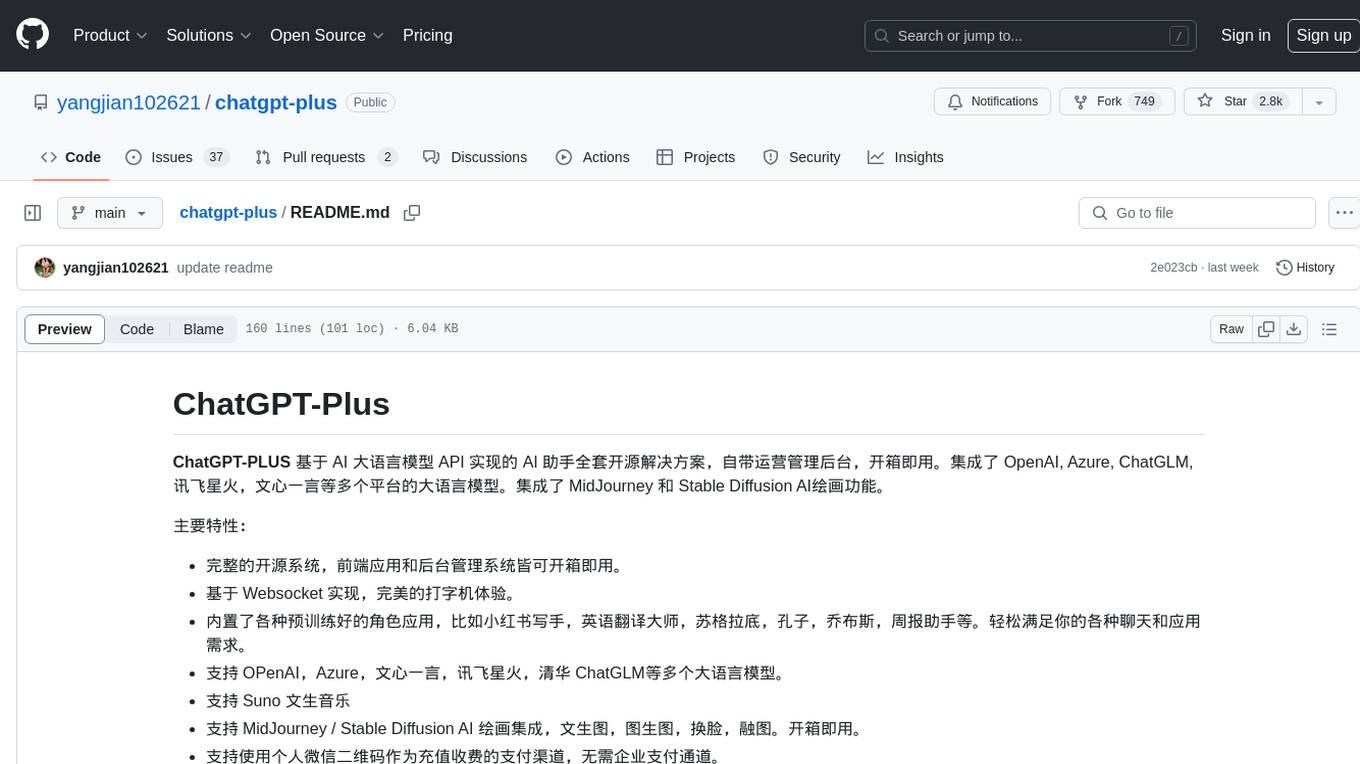

chatgpt-plus

ChatGPT-PLUS is an open-source AI assistant solution based on AI large language model API, with a built-in operational management backend for easy deployment. It integrates multiple large language models from platforms like OpenAI, Azure, ChatGLM, Xunfei Xinghuo, and Wenxin Yanyan. Additionally, it includes MidJourney and Stable Diffusion AI drawing features. The system offers a complete open-source solution with ready-to-use frontend and backend applications, providing a seamless typing experience via Websocket. It comes with various pre-trained role applications such as Xiaohongshu writer, English translation master, Socrates, Confucius, Steve Jobs, and weekly report assistant to meet various chat and application needs. Users can enjoy features like Suno Wensheng music, integration with MidJourney/Stable Diffusion AI drawing, personal WeChat QR code for payment, built-in Alipay and WeChat payment functions, support for various membership packages and point card purchases, and plugin API integration for developing powerful plugins using large language model functions.

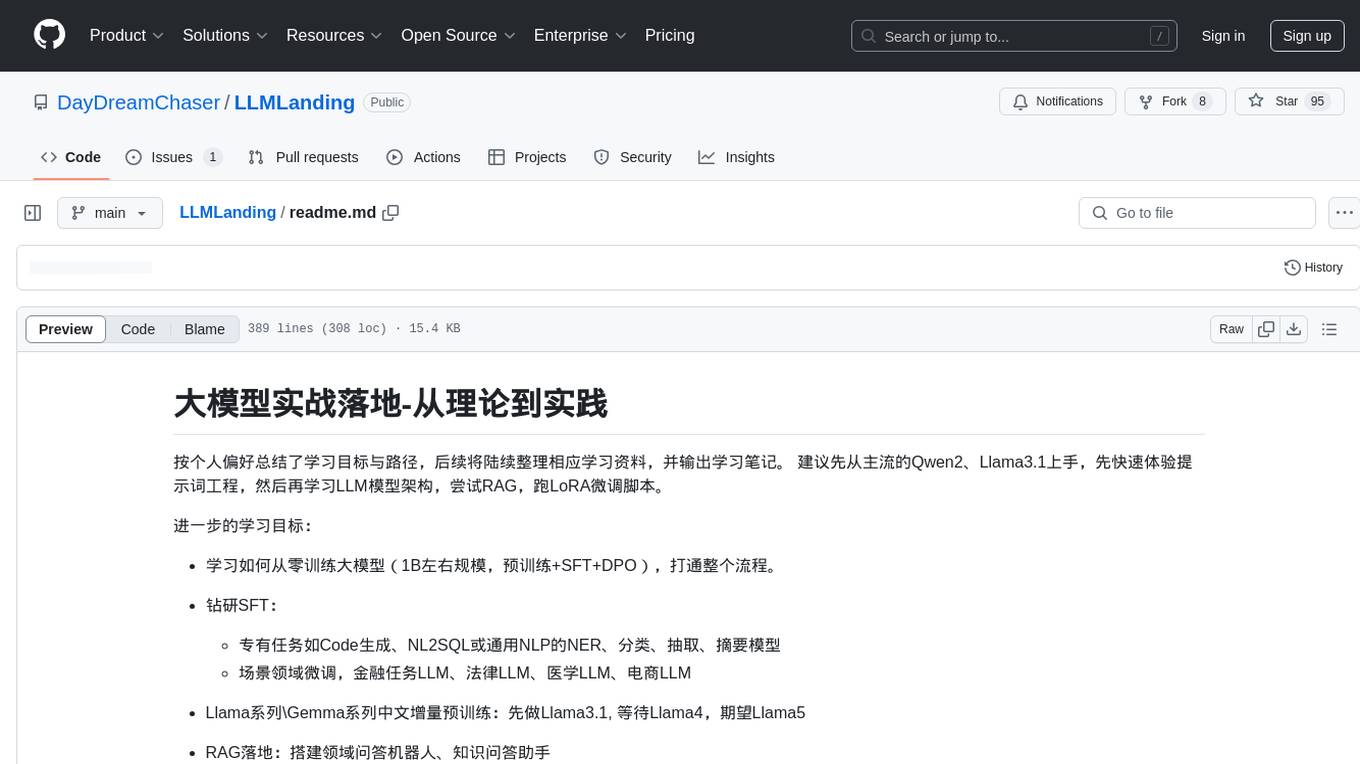

LLMLanding

LLMLanding is a repository focused on practical implementation of large models, covering topics from theory to practice. It provides a structured learning path for training large models, including specific tasks like training 1B-scale models, exploring SFT, and working on specialized tasks such as code generation, NLP tasks, and domain-specific fine-tuning. The repository emphasizes a dual learning approach: quickly applying existing tools for immediate output benefits and delving into foundational concepts for long-term understanding. It offers detailed resources and pathways for in-depth learning based on individual preferences and goals, combining theory with practical application to avoid overwhelm and ensure sustained learning progress.

For similar tasks

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

mindsdb

MindsDB is a platform for customizing AI from enterprise data. You can create, serve, and fine-tune models in real-time from your database, vector store, and application data. MindsDB "enhances" SQL syntax with AI capabilities to make it accessible for developers worldwide. With MindsDB’s nearly 200 integrations, any developer can create AI customized for their purpose, faster and more securely. Their AI systems will constantly improve themselves — using companies’ own data, in real-time.

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

activepieces

Activepieces is an open source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in Typescript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow rapidly, thanks to valuable contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and they are versioned and published directly to npmjs.com upon contributions. If you cannot find a specific piece on the pieces roadmap, please submit a request by visiting the following link: Request Piece Alternatively, if you are a developer, you can quickly build your own piece using our TypeScript framework. For guidance, please refer to the following guide: Contributor's Guide

superagent-js

Superagent is an open source framework that enables any developer to integrate production ready AI Assistants into any application in a matter of minutes.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.