ai-enablement-stack

A Community-Driven Mapping of AI Development Tools

Stars: 351

The AI Enablement Stack is a curated collection of venture-backed companies, tools, and technologies that enable developers to build, deploy, and manage AI applications. It provides a structured view of the AI development ecosystem across five key layers: Agent Consumer Layer, Observability and Governance Layer, Engineering Layer, Intelligence Layer, and Infrastructure Layer. Each layer focuses on specific aspects of AI development, from end-user interaction to model training and deployment. The stack aims to help developers find the right tools for building AI applications faster and more efficiently, assist engineering leaders in making informed decisions about AI infrastructure and tooling, and help organizations understand the AI development landscape to plan technology adoption.

README:

The AI Enablement Stack is a curated collection of venture-backed companies, tools and technologies that enable developers to build, deploy, and manage AI applications. It provides a structured view of the AI development ecosystem across five key layers:

AGENT CONSUMER LAYER: Where AI meets end-users through autonomous agents, assistive tools, and specialized solutions. This layer showcases ready-to-use AI applications and agentic tools.

OBSERVABILITY AND GOVERNANCE LAYER: Tools for monitoring, securing, and managing AI systems in production. Essential for maintaining reliable and compliant AI operations.

ENGINEERING LAYER: Development resources for building production-ready AI applications, including training tools, testing frameworks, and quality assurance solutions.

INTELLIGENCE LAYER: The cognitive foundation featuring frameworks, knowledge engines, and specialized models that power AI capabilities.

INFRASTRUCTURE LAYER: The computing backbone that enables AI development and deployment, from development environments to model serving platforms.

- For Developers: Find the right tools to build AI applications faster and more efficiently

- For Engineering Leaders: Make informed decisions about AI infrastructure and tooling

- For Organizations: Understand the AI development landscape and plan technology adoption

Each tool in this stack is carefully selected based on:

- Production readiness

- Enterprise-grade capabilities

- Active development and support

- Venture backing or significant market presence

To contribute to this list:

- Read the CONTRIBUTING.md

- Fork the repository

- Add your tool's logo under the ./public/images/ folder

- Add your tool in the appropriate category in the file ai-enablement-stack.json

- Submit a PR with a compelling rationale for its acceptance

Self-operating AI systems that can complete complex tasks independently

AGENT CONSUMER LAYER - Autonomous Agents

Cognition develops Devin, which it describes as the world's first AI software engineer, designed to work as a collaborative teammate that helps engineering teams scale their capabilities through parallel task execution and comprehensive development support.

AGENT CONSUMER LAYER - Autonomous Agents

Bolt.new is a web-based development platform that enables in-browser application development with AI assistance (Claude 3.5 Sonnet), featuring real-time execution, one-click Netlify deployment, and a development environment that requires no setup. It is particularly suited for rapid prototyping and non-technical founders.

AGENT CONSUMER LAYER - Autonomous Agents

Vercel v0 is an AI-powered UI generation platform that enables developers to create React components through natural language prompts, featuring integration with Tailwind CSS and Shadcn/UI, rapid prototyping capabilities, and production-ready code generation with customization options.

AGENT CONSUMER LAYER - Autonomous Agents

Morph AI delivers an enterprise-grade developer assistant that automates engineering tasks across multiple languages and frameworks, enabling developers to focus on high-impact work while ensuring code quality through automated testing and compliance.

AGENT CONSUMER LAYER - Autonomous Agents

Coworked created Harmony, billed as the most comprehensive AI Project Manager coworker, designed to work as a teammate that enhances project management capacity, streamlines execution, and enables teams to deliver complex projects with greater efficiency and confidence.

AI tools that enhance human capabilities and workflow efficiency

AGENT CONSUMER LAYER - Assistive Agents

Sourcegraph's Cody is an AI coding assistant that combines the latest LLMs (including Claude 3 and GPT-4) with comprehensive codebase context to help developers write, understand, and fix code across multiple IDEs, while offering enterprise-grade security and flexible deployment options.

AGENT CONSUMER LAYER - Assistive Agents

Tabnine provides a privacy-focused AI code assistant that offers personalized code generation, testing, and review capabilities, featuring bespoke models trained on team codebases, zero data retention, and enterprise-grade security with support for on-premises deployment.

AGENT CONSUMER LAYER - Assistive Agents

Supermaven provides ultra-fast code completion and assistance with a 1M token context window, supporting multiple IDEs (VS Code, JetBrains, Neovim) and LLMs (GPT-4, Claude 3.5), featuring a real-time chat interface, codebase scanning, and a claimed 3x faster response time than competitors.

AGENT CONSUMER LAYER - Assistive Agents

Windsurf provides an agentic IDE that combines copilot and agent capabilities through 'Flows', featuring Cascade for deep contextual awareness, multi-file editing, command suggestions, and LLM-based search tools, all integrated into a VS Code-based editor for seamless AI-human collaboration.

AGENT CONSUMER LAYER - Assistive Agents

Goose is an open-source autonomous developer agent that operates directly on your machine, capable of executing shell commands, debugging code, managing dependencies, and interacting with development tools like GitHub and Jira, featuring extensible toolkits and support for multiple LLM providers.

AGENT CONSUMER LAYER - Assistive Agents

Hex provides an AI-powered analytics platform featuring Magic AI for query writing, chart building, and debugging, combining LLM capabilities with data warehouse context and semantic models to assist with SQL, Python, and visualization tasks while maintaining enterprise-grade security.

AGENT CONSUMER LAYER - Assistive Agents

Bloop.ai provides a code understanding and modernization platform with AI-powered code conversion from legacy languages to modern ones, featuring automatic behavioral validation, offline operation, continuous delivery support, and enhanced developer productivity through AI assistance.

AGENT CONSUMER LAYER - Assistive Agents

Fabi.ai combines SQL, Python and AI automation into one collaborative platform to help you conquer complex and ad hoc analyses, turning questions into answers.

AGENT CONSUMER LAYER - Assistive Agents

Augment Code provides an AI-powered development assistant that deeply understands codebases, featuring context-aware chat, guided multi-file edits, and intelligent completions, with built-in documentation integration and SOC 2 Type II compliance for enterprise security.

AGENT CONSUMER LAYER - Assistive Agents

Trae from ByteDance provides an adaptive AI-powered IDE that combines multimodal understanding, context-aware code completion, and project building capabilities, featuring image-to-code conversion and real-time collaborative assistance through an integrated chat interface.

Purpose-built AI agents designed for specific functions, such as PR reviews.

AGENT CONSUMER LAYER - Specialized Agents

Ellipsis provides AI-powered code reviews and automated bug fixes for GitHub repositories, offering features like style guide enforcement, code generation, and automated testing while maintaining SOC 2 Type I compliance and secure processing without data retention.

AGENT CONSUMER LAYER - Specialized Agents

Codeflash is a CI tool that keeps your Python code performant by using AI to automatically find the most optimized version of your code through benchmarking and verifying the new code for correctness.
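
The benchmark-and-verify idea described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Codeflash's implementation: the function names, the candidate implementations, and the input set are all hypothetical, and correctness is checked only by comparing outputs against the original on sample inputs.

```python
import timeit
from typing import Callable, Sequence


def baseline_sum_squares(n: int) -> int:
    """Original implementation: explicit loop over range(n)."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def closed_form_sum_squares(n: int) -> int:
    """Hypothetical candidate: closed form for the same sum."""
    return (n - 1) * n * (2 * n - 1) // 6


def buggy_sum_squares(n: int) -> int:
    """Hypothetical candidate with an off-by-one error; it must be rejected."""
    return n * (n + 1) * (2 * n + 1) // 6


def is_equivalent(candidate: Callable[[int], int],
                  reference: Callable[[int], int],
                  inputs: Sequence[int]) -> bool:
    """Check that the candidate matches the reference on every sample input."""
    return all(candidate(x) == reference(x) for x in inputs)


def pick_fastest_correct(reference: Callable[[int], int],
                         candidates: Sequence[Callable[[int], int]],
                         inputs: Sequence[int],
                         repeats: int = 5) -> Callable[[int], int]:
    """Return the fastest candidate that agrees with the reference.

    Falls back to the reference when no candidate is both correct and faster.
    """
    def best_time(fn: Callable[[int], int]) -> float:
        # Use the minimum over several runs to reduce timing noise.
        return min(timeit.repeat(lambda: [fn(x) for x in inputs],
                                 number=20, repeat=repeats))

    best_fn, best = reference, best_time(reference)
    for candidate in candidates:
        if not is_equivalent(candidate, reference, inputs):
            continue  # reject incorrect candidates before timing them
        elapsed = best_time(candidate)
        if elapsed < best:
            best_fn, best = candidate, elapsed
    return best_fn


SAMPLE_INPUTS = [0, 1, 10, 1000]
```

Calling `pick_fastest_correct(baseline_sum_squares, [closed_form_sum_squares, buggy_sum_squares], SAMPLE_INPUTS)` never returns the buggy candidate, because it disagrees with the baseline at `n = 1`. Output comparison on sample inputs is only evidence of equivalence, not proof, so a real tool would need broader verification.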

AGENT CONSUMER LAYER - Specialized Agents

Superflex is a VS Code extension that builds features from Figma designs, images, and text prompts, while maintaining your design standards and code style and reusing your UI components.

AGENT CONSUMER LAYER - Specialized Agents

Codemod provides AI-powered code migration agents that automate framework migrations, API upgrades, and refactoring at scale, featuring a community registry of migration recipes, AI-assisted codemod creation, and comprehensive migration management capabilities.

AGENT CONSUMER LAYER - Specialized Agents

Codegen provides enterprise-grade static analysis and codemod capabilities for large-scale code transformations, featuring advanced visualization tools, automated documentation generation, and platform engineering templates, with SOC 2 Type II certification for secure refactoring at scale.

Tools for managing and monitoring AI application lifecycles

OBSERVABILITY AND GOVERNANCE LAYER - Development Pipeline

Portkey provides a comprehensive AI gateway and control panel that enables teams to route to 200+ LLMs, implement guardrails, manage prompts, and monitor AI applications with detailed observability features while maintaining SOC2 compliance and HIPAA/GDPR standards.

OBSERVABILITY AND GOVERNANCE LAYER - Development Pipeline

Baseten provides high-performance inference infrastructure featuring up to 1,500 tokens/second throughput, sub-100ms latency, and GPU autoscaling, with Truss open-source model packaging, enterprise security (SOC2, HIPAA), and support for deployment in customer clouds or self-hosted environments.

OBSERVABILITY AND GOVERNANCE LAYER - Development Pipeline

Stack AI provides an enterprise generative AI platform for building and deploying AI applications with a no-code interface, offering pre-built templates, workflow automation, enterprise security features (SOC2, HIPAA, GDPR), and on-premise deployment options with support for multiple AI models and data sources.

Systems for tracking AI performance and behavior

OBSERVABILITY AND GOVERNANCE LAYER - Evaluation & Monitoring

Cleanlab provides an AI-powered data curation platform that helps organizations improve their GenAI and ML solutions by automatically detecting and fixing data quality issues, reducing hallucinations, and enabling trustworthy AI deployment while offering VPC integration for enhanced security.

OBSERVABILITY AND GOVERNANCE LAYER - Evaluation & Monitoring

Patronus provides a comprehensive AI evaluation platform built on industry-leading research, offering features for testing hallucinations, security risks, alignment, and performance monitoring, with both pre-built evaluators and custom evaluation capabilities for RAG systems and AI agents.

OBSERVABILITY AND GOVERNANCE LAYER - Evaluation & Monitoring

Log10 provides an end-to-end AI accuracy platform for evaluating and monitoring LLM applications in high-stakes industries, featuring expert-driven evaluation, automated feedback systems, real-time monitoring, and continuous improvement workflows with built-in security and compliance features.

OBSERVABILITY AND GOVERNANCE LAYER - Evaluation & Monitoring

Traceloop provides open-source LLM monitoring through OpenLLMetry, offering real-time hallucination detection, output quality monitoring, and prompt debugging capabilities across 22+ LLM providers with zero-intrusion integration.

OBSERVABILITY AND GOVERNANCE LAYER - Evaluation & Monitoring

WhyLabs provides a comprehensive AI Control Center for monitoring, securing, and optimizing AI applications, offering real-time LLM monitoring, security guardrails, and privacy-preserving observability with SOC 2 Type 2 compliance and support for multiple modalities.

OBSERVABILITY AND GOVERNANCE LAYER - Evaluation & Monitoring

OpenLLMetry provides an open-source observability solution for LLMs built on OpenTelemetry standards, offering easy integration with major observability platforms like Datadog, New Relic, and Grafana, requiring just two lines of code to implement.

OBSERVABILITY AND GOVERNANCE LAYER - Evaluation & Monitoring

LangWatch provides a comprehensive LLMOps platform for optimizing and monitoring LLM performance, featuring automated prompt optimization using DSPy, quality evaluations, performance monitoring, and collaborative tools for AI teams, with enterprise-grade security and self-hosting options.

OBSERVABILITY AND GOVERNANCE LAYER - Evaluation & Monitoring

Elastic Observability is a full-stack observability solution that includes Log Monitoring and Analytics, Cloud and Infrastructure Monitoring, Application Performance Monitoring, Digital Experience Monitoring, Continuous Profiling, AIOps, and LLM Observability.

Frameworks for ensuring responsible AI use and regulatory compliance

OBSERVABILITY AND GOVERNANCE LAYER - Risk & Compliance

Socket provides a developer-first security platform that protects against supply chain attacks by scanning dependencies and AI model files for malicious code, featuring real-time detection of 70+ risk signals and integration with major package registries. It is trusted by leading AI companies including OpenAI and Anthropic.

Tools for protecting AI systems and managing access and user permissions

OBSERVABILITY AND GOVERNANCE LAYER - Security & Access Control

LiteLLM provides a unified API gateway for managing 100+ LLM providers with OpenAI-compatible formatting, offering features like authentication, load balancing, spend tracking, and monitoring integrations, available both as an open-source solution and enterprise service.

OBSERVABILITY AND GOVERNANCE LAYER - Security & Access Control

Martian provides an intelligent LLM routing system that dynamically selects the optimal model for each request, featuring performance prediction, automatic failover, cost optimization (up to 98% savings), and simplified integration, outperforming single models like GPT-4 while ensuring high uptime.
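
As a rough illustration of per-request routing with automatic failover, the sketch below picks the cheapest model that clears a quality threshold and falls through to the next one when a call raises. It is not Martian's API or algorithm: `ModelOption`, `route`, the quality scores, and the costs are hypothetical names and values introduced only for this example, and the model calls are stubbed.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class ModelOption:
    name: str                    # hypothetical model label
    predicted_quality: float     # assumed output of some predictor, in [0, 1]
    cost_per_call: float         # assumed cost in arbitrary units
    call: Callable[[str], str]   # function that sends the prompt to the model


def route(prompt: str, options: Sequence[ModelOption],
          min_quality: float) -> tuple[str, str]:
    """Return (model name, response) from the cheapest model that works."""
    eligible = [o for o in options if o.predicted_quality >= min_quality]
    # Cheapest first; ties are broken in favor of higher predicted quality.
    ordered = sorted(eligible,
                     key=lambda o: (o.cost_per_call, -o.predicted_quality))
    errors = []
    for option in ordered:
        try:
            return option.name, option.call(prompt)
        except Exception as exc:  # failover: record the error and try the next
            errors.append(f"{option.name}: {exc}")
    raise RuntimeError("all eligible models failed: " + "; ".join(errors))


def _simulated_outage(prompt: str) -> str:
    """Stub standing in for a provider that is currently down."""
    raise TimeoutError("simulated outage")


def _echo(prompt: str) -> str:
    """Stub standing in for a healthy provider."""
    return f"echo: {prompt}"


OPTIONS = [
    ModelOption("cheap", 0.8, 1.0, _simulated_outage),
    ModelOption("mid", 0.9, 2.0, _echo),
    ModelOption("weak", 0.3, 0.1, _echo),
]
```

With these stubs, `route("hi", OPTIONS, min_quality=0.7)` skips `weak` on quality, tries `cheap` first, and fails over to `mid` after the simulated outage.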

Resources for customizing and optimizing AI models

ENGINEERING LAYER - Training & Fine-Tuning

Provides tools for efficient fine-tuning of large language models, including techniques like quantization and memory optimization.

ENGINEERING LAYER - Training & Fine-Tuning

Platform for building and deploying machine learning models, with a focus on simplifying the development process and enabling faster iteration.

ENGINEERING LAYER - Training & Fine-Tuning

Modal offers a serverless cloud platform for AI and ML applications that enables developers to deploy and scale workloads instantly with simple Python code, featuring high-performance GPU infrastructure and pay-per-use pricing.

ENGINEERING LAYER - Training & Fine-Tuning

Lightning AI provides a comprehensive platform for building AI products, featuring GPU access, development environments, training capabilities, and deployment tools, with support for enterprise-grade security, multi-cloud deployment, and team collaboration, used by major organizations like NVIDIA and Microsoft.

Development utilities, libraries and services for building AI applications

ENGINEERING LAYER - Tools

CopilotKit is an open-source framework for building custom AI copilots and assistants into any application. Features include In-App AI Chatbot, Generative UI, Copilot Tasks, and RAG capabilities, with easy integration and full customization options.

ENGINEERING LAYER - Tools

Ant Design X is a brand new AGI component library from Ant Design, designed to help developers build user interfaces for AI products more easily. Building on Ant Design, Ant Design X further expands the design specifications for AI products, offering developers more powerful tools and resources.

ENGINEERING LAYER - Tools

Relevance AI provides a no-code AI workforce platform that enables businesses to build, customize, and manage AI agents and tools for various functions like sales and support, featuring Bosh, their AI Sales Agent, while ensuring enterprise-grade security and compliance.

ENGINEERING LAYER - Tools

Greptile provides an AI-powered code analysis platform that helps software teams ship faster by offering intelligent code reviews, codebase chat, and custom dev tools with full contextual understanding, while maintaining SOC2 Type II compliance and optional self-hosting capabilities.

ENGINEERING LAYER - Tools

Sourcegraph provides a code intelligence platform featuring Cody, an AI coding assistant, and advanced code search capabilities that help developers navigate, understand, and modify complex codebases while automating routine tasks across enterprise environments.

ENGINEERING LAYER - Tools

PromptLayer provides a comprehensive prompt engineering platform that enables technical and non-technical teams to collaboratively edit, evaluate, and deploy LLM prompts through a visual CMS, while offering version control, A/B testing, and monitoring capabilities with SOC 2 Type 2 compliance.

ENGINEERING LAYER - Tools

JigsawStack provides a comprehensive suite of AI APIs including web scraping, translation, speech-to-text, OCR, prediction, and prompt optimization, offering globally distributed infrastructure with type-safe SDKs and built-in monitoring capabilities across 99+ locations.

Systems for validating AI performance and reliability

ENGINEERING LAYER - Testing & Quality Assurance

Adaline is the single platform to iterate, evaluate, deploy, and monitor prompts for your LLM applications.

ENGINEERING LAYER - Testing & Quality Assurance

Langfuse is an Open Source LLM Engineering platform with a focus on LLM Observability, Evaluation, and Prompt Management. Use automated evaluators or the Langfuse Playground to iteratively test and improve an LLM application. Langfuse is SOC2/ISO27001 certified and can be easily self-hosted at scale.

ENGINEERING LAYER - Testing & Quality Assurance

Confident AI provides an LLM evaluation platform that enables organizations to benchmark, unit test, and monitor their LLM applications through automated regression testing, A/B testing, and synthetic dataset generation, while offering research-backed evaluation metrics and comprehensive observability features.

ENGINEERING LAYER - Testing & Quality Assurance

AI agent specifically designed for software testing and quality assurance, automating the testing process and providing comprehensive test coverage.

ENGINEERING LAYER - Testing & Quality Assurance

Braintrust provides an end-to-end platform for evaluating and testing LLM applications, offering features like prompt testing, custom scoring, dataset management, real-time tracing, and production monitoring, with support for both UI-based and SDK-driven workflows.

ENGINEERING LAYER - Testing & Quality Assurance

Athina is a collaborative AI development platform designed for your team to build, test and monitor AI features.

Core libraries and building blocks for AI application development

INTELLIGENCE LAYER - Frameworks

Provides an agent development platform with advanced memory management for LLMs, enabling developers to build, deploy, and scale production-ready AI agents with transparent reasoning and model-agnostic flexibility.

INTELLIGENCE LAYER - Frameworks

Langbase provides a serverless AI development platform featuring BaseAI (Web AI Framework), composable AI Pipes for agent development, 50-100x cheaper serverless RAG, unified LLM API access, and collaboration tools, with enterprise-grade security and observability.

INTELLIGENCE LAYER - Frameworks

Framework for developing LLM applications with multiple conversational agents that collaborate and interact with humans.

INTELLIGENCE LAYER - Frameworks

A framework for creating and managing workflows and tasks for AI agents.

INTELLIGENCE LAYER - Frameworks

Toolhouse provides a cloud infrastructure platform and universal SDK that enables developers to equip LLMs with actions and knowledge through a Tool Store, offering pre-built optimized functions, low-latency execution, and cross-LLM compatibility with just three lines of code.

INTELLIGENCE LAYER - Frameworks

Composio provides an integration platform for AI agents and LLMs with 250+ pre-built tools, managed authentication, and RPA capabilities, enabling developers to easily connect their AI applications with various services while maintaining SOC-2 compliance and supporting multiple agent frameworks.

INTELLIGENCE LAYER - Frameworks

CrewAI provides a comprehensive platform for building, deploying, and managing multi-agent AI systems, offering both open-source framework and enterprise solutions with support for any LLM and cloud platform, enabling organizations to create automated workflows across various industries.

INTELLIGENCE LAYER - Frameworks

AI Suite provides a unified interface for multiple LLM providers (OpenAI, Anthropic, Azure, Google, AWS, Groq, Mistral, etc.), offering standardized API access with OpenAI-compatible syntax, easy provider switching, and seamless integration capabilities, available as an open-source MIT-licensed framework.

INTELLIGENCE LAYER - Frameworks

Promptflow is Microsoft's open-source development framework for LLM applications, offering tools for flow creation, testing, evaluation, and deployment, featuring visual flow design through VS Code extension, built-in evaluation metrics, and CI/CD integration capabilities.

INTELLIGENCE LAYER - Frameworks

LLMStack is an open-source platform for building AI agents, workflows, and applications, featuring model chaining across major providers, data integration from multiple sources (PDFs, URLs, Audio, Drive), and collaborative development capabilities with granular permissions.

INTELLIGENCE LAYER - Frameworks

Graphlit is a serverless, batteries-included, RAG-as-a-Service platform. Graphlit manages data ingestion, vector embeddings, and LLM flows — allowing teams to quickly build AI apps and agents without the burden of complex data infrastructure.

INTELLIGENCE LAYER - Frameworks

Griptape provides an enterprise AI development platform featuring Off-Prompt™ technology, combining a Python framework for predictable AI development with cloud infrastructure for ETL, RAG, and agent deployment, offering built-in monitoring and policy enforcement capabilities.

Databases and systems for managing and retrieving information

INTELLIGENCE LAYER - Knowledge Engines

Epsilla provides an open-source, high-performance vector database and an all-in-one platform for RAG and AI agents powered by your private data and knowledge.

INTELLIGENCE LAYER - Knowledge Engines

Supabase Vector provides an open-source vector database built on Postgres and pgvector, offering scalable embedding storage, indexing, and querying capabilities with integrated AI tooling for OpenAI and Hugging Face, featuring enterprise-grade security and global deployment options.

INTELLIGENCE LAYER - Knowledge Engines

Contextual AI provides enterprise-grade RAG (Retrieval-Augmented Generation) solutions that enable organizations in regulated industries to build and deploy production-ready AI applications for searching and analyzing large volumes of business-critical documents.

INTELLIGENCE LAYER - Knowledge Engines

Platform for working with unstructured data, offering tools for data pre-processing, ETL, and integration with LLMs.

INTELLIGENCE LAYER - Knowledge Engines

SciPhi offers R2R, an all-in-one RAG (Retrieval Augmented Generation) solution that enables developers to build and scale AI applications with advanced features including document management, hybrid vector search, and knowledge graphs, while providing superior ingestion performance compared to competitors.

INTELLIGENCE LAYER - Knowledge Engines

pgAI is a PostgreSQL extension that enables AI capabilities directly in the database, featuring automated vector embedding creation, RAG implementation, semantic search, and LLM integration (OpenAI, Claude, Cohere, Llama) with support for high-performance vector operations through pgvector and pgvectorscale.

INTELLIGENCE LAYER - Knowledge Engines

Zep is a memory layer for AI agents that continuously learns from user interactions and changing business data. Zep ensures that your Agent has a complete and holistic view of the user, enabling you to build more personalized and accurate user experiences.

INTELLIGENCE LAYER - Knowledge Engines

FalkorDB provides a graph database platform optimized for AI applications, featuring GraphRAG technology for knowledge graph creation, sub-millisecond querying, and advanced relationship modeling, enabling more accurate and contextual LLM responses through graph-based data relationships.

INTELLIGENCE LAYER - Knowledge Engines

Superduper provides a platform for building and deploying AI applications directly with existing databases, featuring integration with multiple AI frameworks and databases, support for RAG, vector search, and ML workflows, while enabling deployment on existing infrastructure without data movement or ETL pipelines.

INTELLIGENCE LAYER - Knowledge Engines

Elasticsearch's open source vector database offers an efficient way to create, store, and search vector embeddings. Combine text search and vector search for hybrid retrieval, resulting in the best of both capabilities for greater relevance and accuracy.
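
One common way to combine a text-search ranking with a vector-search ranking is reciprocal rank fusion (RRF). The sketch below is a plain-Python illustration of that technique and makes no claim about how Elasticsearch implements hybrid retrieval; the document ids and the constant `k = 60` are assumptions chosen for the example.

```python
from collections import defaultdict
from typing import Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]],
                           k: int = 60) -> list[str]:
    """Fuse several ranked lists of document ids into one ranking.

    Each document scores 1 / (k + rank) per list it appears in, with
    ranks starting at 1; documents found by both retrievers accumulate
    a higher fused score.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by id for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword query and a vector query.
text_hits = ["doc_a", "doc_b", "doc_c"]
vector_hits = ["doc_c", "doc_a", "doc_d"]
fused = reciprocal_rank_fusion([text_hits, vector_hits])
```

Here `doc_a` and `doc_c` rise to the top because both retrievers return them, while `doc_b` and `doc_d` each appear in only one list.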

INTELLIGENCE LAYER - Knowledge Engines

Exa provides business-grade search and web crawling capabilities through meaning-based search, featuring neural search APIs, content scraping, and Websets for creating custom datasets, with seamless integration for RAG applications and LLM contextualization.

INTELLIGENCE LAYER - Knowledge Engines

Firebase Data Connect enables vector similarity search leveraging its underlying PostgreSQL database and Google Vertex AI embeddings.
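
For readers unfamiliar with vector similarity search, the idea can be shown with a brute-force, in-memory sketch that ranks stored embeddings by cosine similarity to a query. This is not the Firebase Data Connect API, and real systems use database indexes rather than a linear scan; the tiny three-dimensional vectors and the names below are made up for illustration.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float],
          index: dict[str, Sequence[float]],
          k: int = 2) -> list[tuple[str, float]]:
    """Return the k stored items most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query, vector))
              for doc_id, vector in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical embeddings; real ones come from an embedding model.
INDEX = {
    "cats": [1.0, 0.0, 0.0],
    "dogs": [0.9, 0.1, 0.0],
    "cars": [0.0, 0.0, 1.0],
}
nearest = top_k([1.0, 0.0, 0.0], INDEX, k=2)
```

The query vector points in the same direction as `cats`, so `cats` ranks first, followed by the nearby `dogs` vector, while the orthogonal `cars` vector is excluded from the top two.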

AI models optimized for software development

INTELLIGENCE LAYER - Specialized Coding Models

Codestral is Mistral AI's specialized 22B code generation model supporting 80+ programming languages, featuring a 32k context window, fill-in-the-middle capabilities, and state-of-the-art performance on coding benchmarks, available through API endpoints and IDE integrations.

INTELLIGENCE LAYER - Specialized Coding Models

Claude 3.5 Sonnet is Anthropic's frontier AI model offering state-of-the-art performance in reasoning, coding, and vision tasks, featuring a 200K token context window, computer use capabilities, and enhanced safety measures, available through multiple platforms including Claude.ai and major cloud providers.

INTELLIGENCE LAYER - Specialized Coding Models

Qwen2.5-Coder is a specialized code-focused model matching GPT-4's coding capabilities, featuring 32B parameters, 128K token context window, support for 80+ programming languages, and state-of-the-art performance on coding benchmarks, available as an open-source Apache 2.0 licensed model.

INTELLIGENCE LAYER - Specialized Coding Models

Poolside Malibu is an enterprise-focused code generation model trained using Reinforcement Learning from Code Execution Feedback (RLCEF), featuring 100K token context, custom fine-tuning capabilities, and deep integration with development environments, available through Amazon Bedrock for secure deployment.

Development environments for sandboxing and building AI applications

INFRASTRUCTURE LAYER - AI Workspaces

Daytona.io is an open-source Development Environment Manager designed to simplify the setup and management of development environments across various platforms, including local, remote, and cloud infrastructures.

INFRASTRUCTURE LAYER - AI Workspaces

Runloop provides a secure, high-performance infrastructure platform that enables developers to build, scale, and deploy AI-powered coding solutions with seamless integration and real-time monitoring capabilities.

INFRASTRUCTURE LAYER - AI Workspaces

E2B provides an open-source runtime platform that enables developers to securely execute AI-generated code in cloud sandboxes, supporting multiple languages and frameworks for AI-powered development use cases.

INFRASTRUCTURE LAYER - AI Workspaces

Morph Labs provides infrastructure for developing and deploying autonomous software engineers at scale, offering Infinibranch for Morph Cloud and focusing on advanced infrastructure for AI-powered development, backed by partnerships with Together AI, Nomic AI, and other leading AI companies.

Services for preparing data for AI applications and training

INFRASTRUCTURE LAYER - Data Ingestion & Transformation

Confluent is a cloud-native data streaming platform that helps companies access, store, and manage data, bringing real-time, contextual, well-governed, and trustworthy data to their AI systems and applications.

Services for deploying and running AI models

INFRASTRUCTURE LAYER - Model Access & Deployment

OpenAI develops advanced artificial intelligence systems like ChatGPT, GPT-4, and Sora, focusing on creating safe AGI that benefits humanity through products spanning language models, image generation, and video creation while maintaining leadership in AI research and safety.

INFRASTRUCTURE LAYER - Model Access & Deployment

Deepseek develops advanced AI systems capable of performing a wide range of tasks with human-like or superior intelligence. Moving beyond narrow AI, Deepseek focuses on creating generalizable, autonomous systems that can learn, adapt, and apply knowledge across domains. With cutting-edge research in machine learning, deep learning, natural language processing, and robotics, Deepseek aims to push the boundaries of AI innovation. Its applications span various industries, delivering intelligent solutions for complex challenges.

INFRASTRUCTURE LAYER - Model Access & Deployment

Anthropic provides frontier AI models through the Claude family, emphasizing safety and reliability, with offerings including Claude 3.5 Sonnet and Haiku. Their models feature advanced capabilities in reasoning, coding, and computer use, while maintaining strong safety standards through Constitutional AI and comprehensive testing.

INFRASTRUCTURE LAYER - Model Access & Deployment

Mistral AI provides frontier AI models with emphasis on openness and portability, offering both open-weight models (Mistral 7B, Mixtral 8x7B) and commercial models (Mistral Large 2), available through multiple deployment options including serverless APIs, cloud services, and on-premise deployment.

INFRASTRUCTURE LAYER - Model Access & Deployment

Groq provides ultra-fast AI inference infrastructure for openly available models like Llama 3.1, Mixtral, and Gemma, offering OpenAI-compatible API endpoints with industry-leading speed and simple three-line integration for existing applications.

INFRASTRUCTURE LAYER - Model Access & Deployment

AI21 Labs delivers enterprise-grade generative AI solutions through its Jamba foundation model and RAG engine, enabling organizations to build secure, production-ready AI applications with flexible deployment options and dedicated integration support.

INFRASTRUCTURE LAYER - Model Access & Deployment

Cohere provides an enterprise AI platform featuring advanced language models, embedding, and retrieval capabilities that enables businesses to build production-ready AI applications with flexible deployment options across cloud or on-premises environments.

INFRASTRUCTURE LAYER - Model Access & Deployment

Hugging Face provides fully managed inference infrastructure for ML models with support for multiple hardware options (CPU, GPU, TPU) across various cloud providers, offering autoscaling and dedicated deployments with enterprise-grade security.

INFRASTRUCTURE LAYER - Model Access & Deployment

Cartesia AI delivers real-time multimodal intelligence through state space models that enable fast, private, and offline inference capabilities across devices, offering streaming-first solutions with constant memory usage and low latency.

INFRASTRUCTURE LAYER - Model Access & Deployment

Provides easy access to open-source language models through a simple API, similar to offerings from closed-source providers.

INFRASTRUCTURE LAYER - Model Access & Deployment

Offers an API for accessing and running open-source LLMs, facilitating seamless integration into AI applications.

INFRASTRUCTURE LAYER - Model Access & Deployment

End-to-end platform for deploying and managing AI models, including LLMs, with integrated tools for monitoring, versioning, and scaling.

INFRASTRUCTURE LAYER - Model Access & Deployment

Amazon Bedrock is a fully managed service that provides access to leading foundation models through a unified API, featuring customization capabilities through fine-tuning and RAG, managed AI agents for workflow automation, and enterprise-grade security with HIPAA and GDPR compliance.

INFRASTRUCTURE LAYER - Model Access & Deployment

Serverless platform for running machine learning models, allowing developers to deploy and scale models without managing infrastructure.

INFRASTRUCTURE LAYER - Model Access & Deployment

SambaNova provides custom AI infrastructure featuring their SN40L Reconfigurable Dataflow Unit (RDU), offering world-record inference speeds for large language models, with integrated fine-tuning capabilities and enterprise-grade security, delivered through both cloud and on-premises solutions.

INFRASTRUCTURE LAYER - Model Access & Deployment

BentoML provides an open-source unified inference platform that enables organizations to build, deploy, and scale AI systems across any cloud with high performance and flexibility, while offering enterprise features like auto-scaling, rapid iteration, and SOC 2 compliance.

INFRASTRUCTURE LAYER - Model Access & Deployment

OpenRouter provides a unified OpenAI-compatible API for accessing 282+ models across multiple providers, offering standardized access, provider routing, and model rankings, with support for multiple SDKs and framework integrations.
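One practical benefit of reaching many models through a single API is that a client can fall back from one model to another without changing its request format. The sketch below shows that idea on the client side only. It is not OpenRouter's own routing mechanism, and the function names, the `provider/model` identifiers, and the stand-in transport `fake_send` are all hypothetical.

```python
from typing import Callable, Sequence, Tuple


class AllModelsFailed(RuntimeError):
    """Raised when every candidate model returned an error."""


def complete_with_fallback(
    prompt: str,
    models: Sequence[str],
    send: Callable[[str, str], str],
) -> Tuple[str, str]:
    """Try each model in order; return (model_used, reply) from the first success."""
    errors = []
    for model in models:
        try:
            return model, send(model, prompt)
        except Exception as exc:  # a real client would catch narrower errors
            errors.append(f"{model}: {exc}")
    raise AllModelsFailed("; ".join(errors))


# Stand-in transport for demonstration: the first model is "down".
def fake_send(model: str, prompt: str) -> str:
    if model == "provider-a/model-x":
        raise TimeoutError("upstream timeout")
    return f"[{model}] echo: {prompt}"


used, reply = complete_with_fallback(
    "ping", ["provider-a/model-x", "provider-b/model-y"], fake_send
)
print(used, reply)
```

In a real application, `send` would wrap an HTTP call to the unified endpoint, and the candidate list would hold actual model identifiers.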

Computing infrastructure that powers AI systems and their workspaces

INFRASTRUCTURE LAYER - Cloud Providers

Koyeb provides a high-performance serverless platform specifically optimized for AI workloads, offering GPU/NPU infrastructure, global deployment across 50+ locations, and seamless scaling capabilities for ML model inference and training with built-in observability.

INFRASTRUCTURE LAYER - Cloud Providers

Hyperbolic provides a decentralized GPU marketplace for AI compute and inference, offering up to 80% cost reduction compared to traditional providers, featuring high-throughput inference services, pay-as-you-go GPU access, and compute monetization capabilities with hardware-agnostic support.

INFRASTRUCTURE LAYER - Cloud Providers

Prime Intellect provides a unified GPU marketplace aggregating multiple cloud providers, featuring competitive pricing for various GPUs (H100, A100, RTX series), decentralized training capabilities across distributed clusters, and tools for collaborative AI model development with a focus on open-source innovation.

INFRASTRUCTURE LAYER - Cloud Providers

CoreWeave is an AI-focused cloud provider offering Kubernetes-native infrastructure optimized for GPU workloads, featuring 11+ NVIDIA GPU types, up to 35x faster performance and 80% cost reduction compared to traditional providers, with specialized solutions for ML/AI, VFX, and inference at scale.

INFRASTRUCTURE LAYER - Cloud Providers

Nebius provides an AI-optimized cloud platform featuring the latest NVIDIA GPUs (H200, H100, L40S) with InfiniBand networking, offering managed Kubernetes and Slurm clusters, MLflow integration, and specialized infrastructure for AI training, fine-tuning, and inference workloads.

Please read the contribution guidelines before submitting a pull request.

This project is licensed under the Apache 2.0 License - see the LICENSE file for details

Alternative AI tools for ai-enablement-stack

Similar Open Source Tools

llm-dev

The 'llm-dev' repository contains source code and resources for the book 'Practical Projects of Large Models: Multi-Domain Intelligent Application Development'. It covers topics such as language model basics, application architecture, working modes, environment setup, model installation, fine-tuning, quantization, multi-modal model applications, chat applications, programming large model applications, VS Code plugin development, enhanced generation applications, translation applications, intelligent agent applications, speech model applications, digital human applications, model training applications, and AI town applications.

lowcode-vscode

This repository is a low-code tool that supports ChatGPT and other LLM models. It provides functionalities such as OCR translation, generating JSON in a specified format, translating Chinese to camel case, translating the current directory to English, and quickly creating code templates. Users can also generate CRUD operations for managing backend list pages. The tool allows users to select templates, initialize query form configurations using OCR, initialize table configurations using OCR, translate Chinese fields using ChatGPT, and generate code without writing a single line. It aims to enhance productivity by simplifying code generation and development processes.

MachineLearning

MachineLearning is a repository focused on practical applications in various algorithm scenarios such as ship, education, and enterprise development. It covers a wide range of topics from basic machine learning and deep learning to object detection and the latest large models. The project utilizes mature third-party libraries, open-source pre-trained models, and the latest technologies from related papers to document the learning process and facilitate direct usage by a wider audience.

100-days-of-code

100 Days of Code is a repository containing 100 frontend challenges with professional designs, user stories, and acceptance criteria. It provides a platform for developers to practice coding daily, from beginner-friendly cards to advanced dashboards. The challenges are structured for AI collaboration, allowing users to work with coding agents like Claude, Cursor, and GitHub Copilot. The repository also includes an AI Collaboration Log template to document the use of AI tools and showcase effective collaboration. Developers can replicate design mockups, add interactivity with JavaScript, and share their solutions on social media platforms.

AIHub

AIHub is a client that integrates the capabilities of multiple large models, allowing users to quickly and easily build their own personalized AI assistants. It supports custom plugins for endless possibilities. The tool provides powerful AI capabilities, rich configuration options, customization of AI assistants for text and image conversations, AI drawing, installation of custom plugins, personal knowledge base building, AI calendar generation, support for AI mini programs, and ongoing development of additional features. Users can download the application package from the release section, resolve issues related to macOS app installation, and contribute ideas by submitting issues. The project development involves installation, development, and building processes for different operating systems.

AgentVerse

AgentVerse is an open-source ecosystem for intelligent agents, supporting multiple mainstream AI models to facilitate autonomous discussions, thought collisions, and knowledge exploration. Each intelligent agent can play a unique role here, collectively creating wisdom beyond individuals.

AIBotPublic

AIBotPublic is an open-source version of AIBotPro, a comprehensive AI tool that provides various features such as knowledge base construction, AI drawing, API hosting, and more. It supports custom plugins and parallel processing of multiple files. The tool is built using bootstrap4 for the frontend, .NET6.0 for the backend, and utilizes technologies like SqlServer, Redis, and Milvus for database and vector database functionalities. It integrates third-party dependencies like Baidu AI OCR, Milvus C# SDK, Google Search, and more to enhance its capabilities.

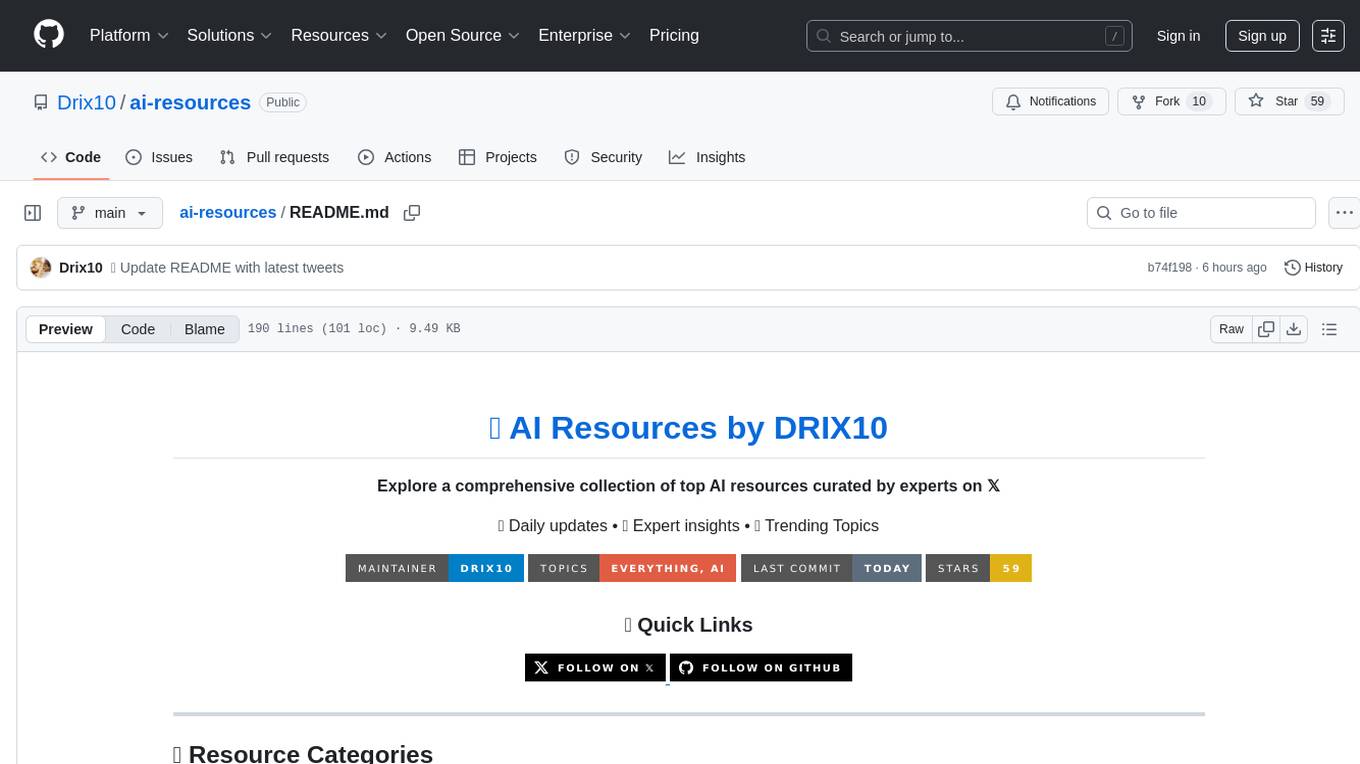

ai-resources

AI Resources by DRIX10 is a comprehensive collection of top AI resources curated by experts. It provides daily updates, expert insights, and trending topics across various categories such as AI Developer Tools, AI Education, AI Artists and Creators, AI Companies and Ventures, and more. Users can explore resources related to different aspects of AI, including healthcare, music generation, real estate, content creation, climate technology, cybersecurity, and more.

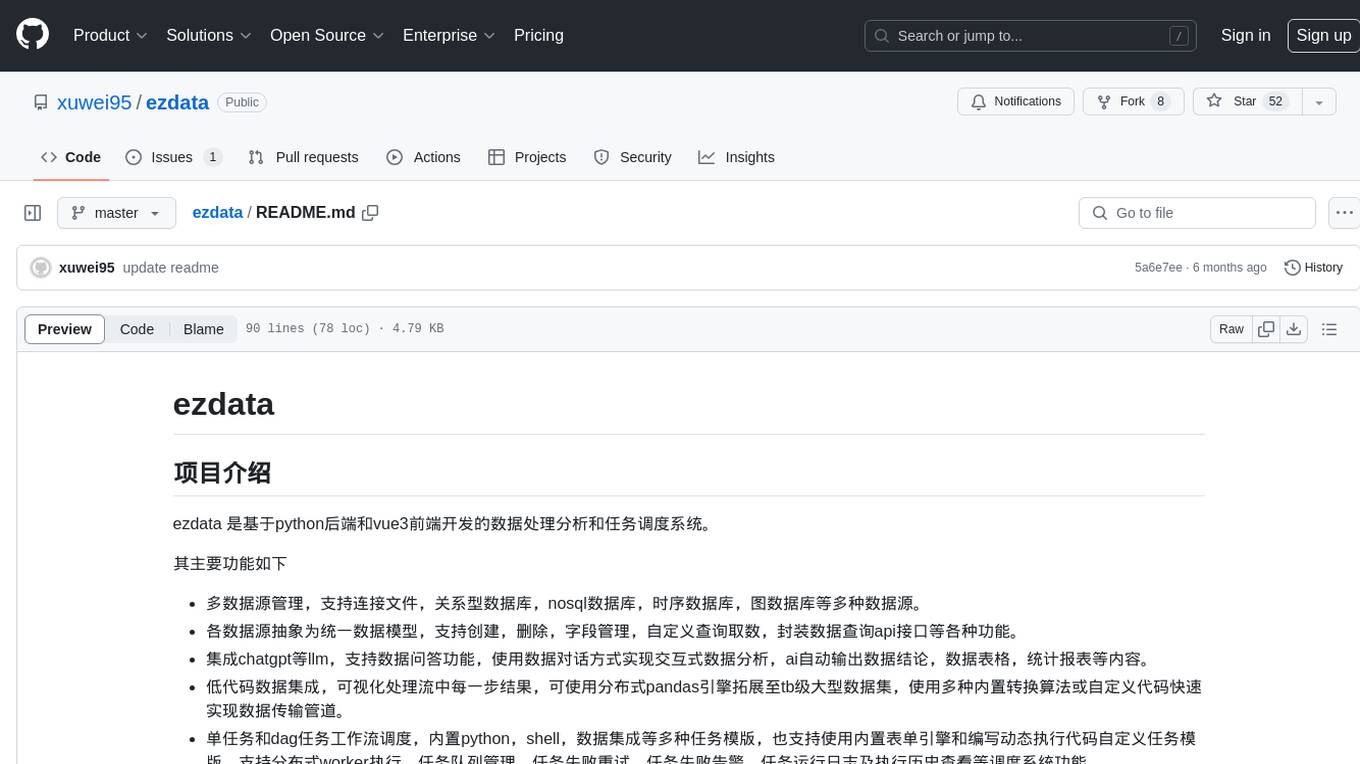

ezdata

Ezdata is a data processing and task scheduling system developed based on Python backend and Vue3 frontend. It supports managing multiple data sources, abstracting various data sources into a unified data model, integrating chatgpt for data question and answer functionality, enabling low-code data integration and visualization processing, scheduling single and dag tasks, and integrating a low-code data visualization dashboard system.

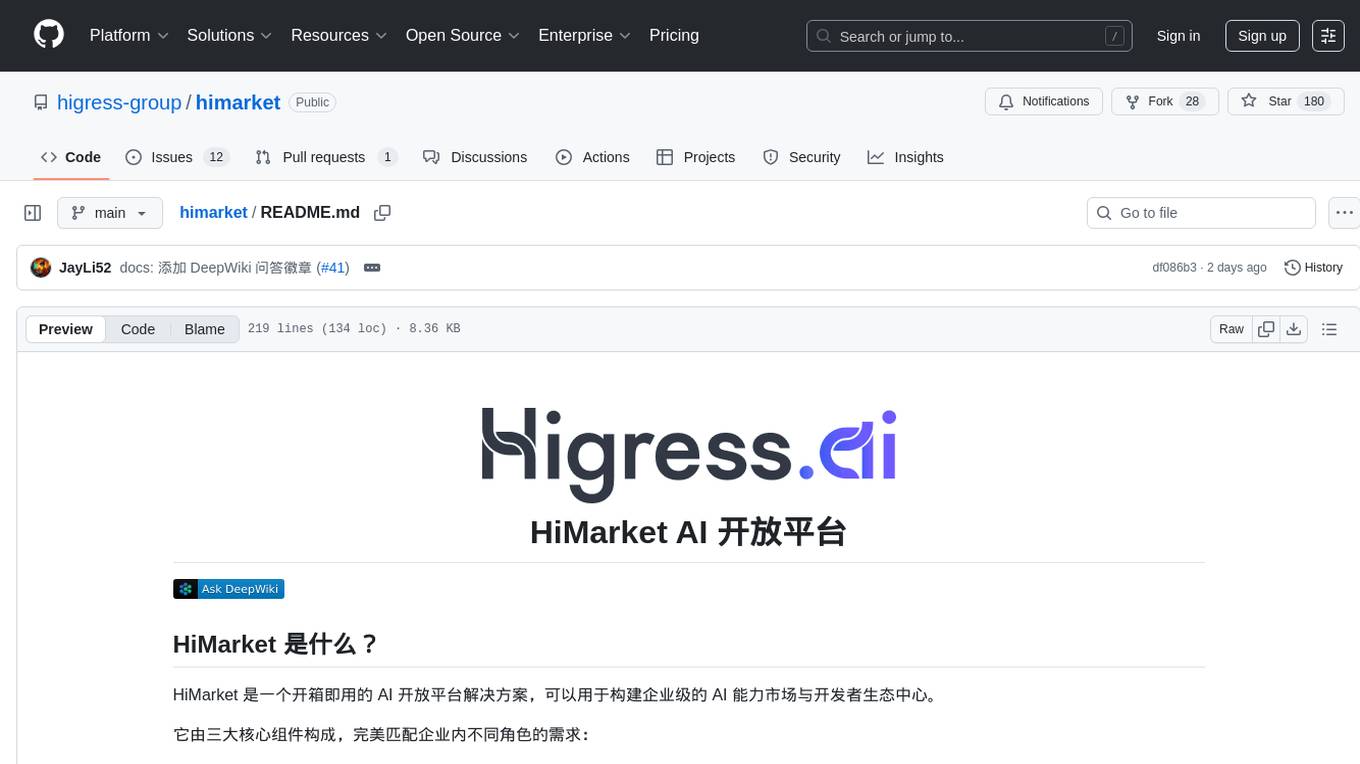

himarket

HiMarket is an out-of-the-box AI open platform solution that can be used to build enterprise-level AI capability markets and developer ecosystem centers. It consists of three core components tailored to different roles within the enterprise: 1. AI open platform management backend (for administrators/operators) for easy packaging of diverse AI capabilities such as model services, MCP Server, Agent, etc., into standardized 'AI products' in API form with comprehensive documentation and examples for one-click publishing to the portal. 2. AI open platform portal (for developers/internal users) as a 'storefront' for developers to complete registration, create consumers, obtain credentials, browse and subscribe to AI products, test online, and monitor their own call status and costs clearly. 3. AI Gateway: As a subproject of the Higress community, the Higress AI Gateway carries out all AI call authentication, security, flow control, protocol conversion, and observability capabilities.

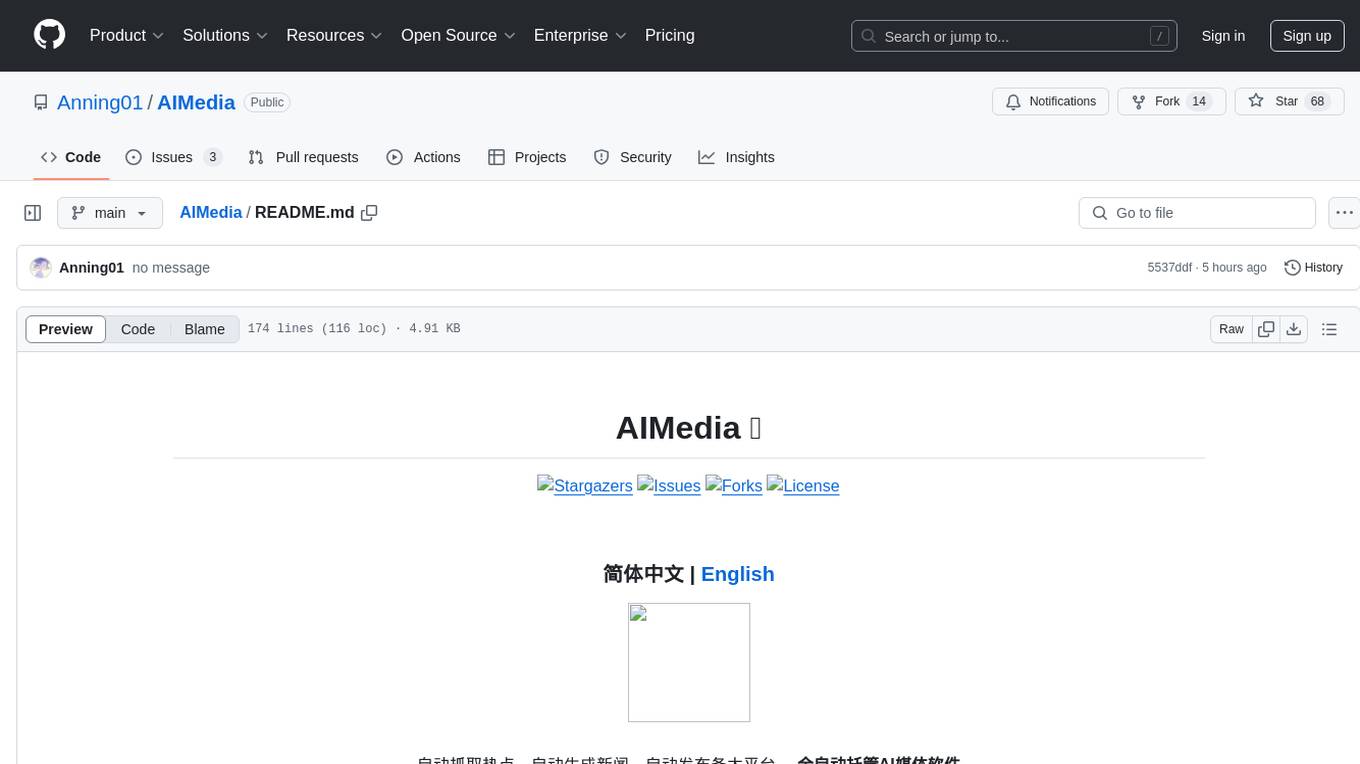

AIMedia

AIMedia is a fully automated AI media tool that fetches trending news, generates articles, and publishes them on various platforms. It supports fetching trending news from platforms such as Douyin, NetEase News, Weibo, The Paper, China Daily, and Sohu News. It can also add AI-generated images to text-only news to improve originality and the reading experience. The tool is currently commercialized, with plans to support automatic video generation for platform publishing in the future. It requires a CPU with at least 4 cores, 8GB of RAM, and Windows 10 or later. Users can deploy the tool by cloning the repository, modifying the configuration file, creating a virtual environment using Conda, and starting the web interface. Feedback and suggestions can be submitted through issues or pull requests.

claude-code-engingeering

Claude Code is an advanced AI Agent framework that goes beyond a smart command-line tool. It is programmable, extensible, and composable, allowing users to teach it project specifications, split tasks into sub-agents, provide domain skills, automate responses to specific events, and integrate it into CI/CD pipelines for unmanned operation. The course aims to transform users from 'users' of Claude Code to 'masters' who can design agent 'memories', delegate tasks to sub-agents, build reusable skill packages, drive automation workflows with code, and collaborate with intelligent agents in a dance of development.

bedrock-book

This repository contains sample code for hands-on exercises related to the book 'Amazon Bedrock 生成AIアプリ開発入門'. It allows readers to easily access and copy the code. The repository also includes directories for each chapter's hands-on code, settings, and a 'requirements.txt' file listing necessary Python libraries. Updates and error fixes will be provided as needed. Users can report issues in the repository's 'Issues' section, and errata will be published on the SB Creative official website.

awesome-chatgpt-zh

The Awesome ChatGPT Chinese Guide project aims to help Chinese users understand and use ChatGPT. It collects various free and paid ChatGPT resources, as well as methods to communicate more effectively with ChatGPT in Chinese. The repository contains a rich collection of ChatGPT tools, applications, and examples.

For similar tasks

python-tutorial-notebooks

This repository contains Jupyter-based tutorials for NLP, ML, AI in Python for classes in Computational Linguistics, Natural Language Processing (NLP), Machine Learning (ML), and Artificial Intelligence (AI) at Indiana University.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

MoonshotAI-Cookbook

The MoonshotAI-Cookbook provides example code and guides for accomplishing common tasks with the MoonshotAI API. To run these examples, you'll need a MoonshotAI account and associated API key. Most code examples are written in Python, though the concepts can be applied in any language.

AHU-AI-Repository

This repository is dedicated to the learning and exchange of resources for the School of Artificial Intelligence at Anhui University. Notes will be published on this website first: https://www.aoaoaoao.cn and will be synchronized to the repository regularly.

modern_ai_for_beginners

This repository provides a comprehensive guide to modern AI for beginners, covering both theoretical foundations and practical implementation. It emphasizes the importance of understanding both the mathematical principles and the code implementation of AI models. The repository includes resources on PyTorch, deep learning fundamentals, mathematical foundations, transformer-based LLMs, diffusion models, software engineering, and full-stack development. It also features tutorials on natural language processing with transformers, reinforcement learning, and practical deep learning for coders.

Building-AI-Applications-with-ChatGPT-APIs

This repository is for the book 'Building AI Applications with ChatGPT APIs' published by Packt. It provides code examples and instructions for mastering ChatGPT, Whisper, and DALL-E APIs through building innovative AI projects. Readers will learn to develop AI applications using ChatGPT APIs, integrate them with frameworks like Flask and Django, create AI-generated art with DALL-E APIs, and optimize ChatGPT models through fine-tuning.

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.

lingoose

LinGoose is a modular Go framework designed for building AI/LLM applications. It offers the flexibility to import only the necessary modules, abstracts features for customization, and provides a comprehensive solution for developing AI/LLM applications from scratch. The framework simplifies the process of creating intelligent applications by allowing users to choose preferred implementations or create their own. LinGoose empowers developers to leverage its capabilities to streamline the development of cutting-edge AI and LLM projects.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.
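To make the idea of unit testing LLM outputs concrete, here is a minimal standard-library sketch: score an output with a metric, then assert that the score clears a threshold. It deliberately does not use DeepEval's API. The toy `keyword_coverage` metric, the `assert_passes` helper, and the canned output are hypothetical stand-ins for the richer metrics the entry describes.

```python
from typing import Sequence


def keyword_coverage(output: str, required: Sequence[str]) -> float:
    """Fraction of required keywords that appear in the output (case-insensitive)."""
    if not required:
        return 1.0
    text = output.lower()
    hits = sum(1 for word in required if word.lower() in text)
    return hits / len(required)


def assert_passes(output: str, required: Sequence[str], threshold: float = 0.8) -> None:
    """Fail, as a unit test would, when the metric falls below the threshold."""
    score = keyword_coverage(output, required)
    assert score >= threshold, f"score {score:.2f} below threshold {threshold}"


# A canned string stands in for a real model response.
canned_output = "Paris is the capital of France."
assert_passes(canned_output, ["Paris", "France"])
```

Because the check is an ordinary assertion, it can run under any test runner or CI job. A real evaluation suite would swap the keyword metric for something more robust, such as a relevancy or hallucination score.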

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), in a version for NVIDIA GPUs. It supports knowledge-base chat through fastgpt, using a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. It connects to bilibili live streams to reply to bullet comments (danmaku) and to greet viewers entering the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS. It supports expression control with Vtuber Studio, image generation with stable-diffusion-webui output to an OBS live room, NSFW image detection with public-NSFW-y-distinguish, image search through duckduckgo (requires a proxy) or Baidu image search (no proxy required), an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, and a playlist [html plug-in]. It also supports dancing, expression video playback, head-touching and gift-smashing actions, automatic dancing when singing starts, automatic looping sway motion during chat and singing, switching between multiple scenes and background music, automatic day and night scene switching, and open-ended singing and painting, with the AI automatically judging the content.