OSHW-SenseCAP-Watcher

SenseCAP Watcher: Intelligent ESP32S3-based device with Himax WiseEye2 AI, capable of seeing, hearing, and interacting using advanced AI and the LLM-enabled SenseCraft suite. Perfect for environment-aware applications in automation and security.

Stars: 77

SenseCAP Watcher is a monitoring device built on ESP32S3 with Himax WiseEye2 HX6538 AI chip, excelling in image and vector data processing. It features a camera, microphone, and speaker for visual, auditory, and interactive capabilities. With LLM-enabled SenseCraft suite, it understands commands, perceives surroundings, and triggers actions. The repository provides firmware, hardware documentation, and applications for the Watcher, along with detailed guides for setup, task assignment, and firmware flashing.

README:

SenseCAP Watcher is built on ESP32S3, incorporating a Himax WiseEye2 HX6538 AI chip with Arm Cortex-M55 & Ethos-U55, excelling in image and vector data processing. Equipped with a camera, microphone, speaker, SenseCAP Watcher can see, hear, talk. Plus, with LLM-enabled SenseCraft suite, SenseCAP Watcher understands your commands, perceives its surroundings, and triggers actions accordingly.

- 📁 Firmwave: Watcher's factory firmware bin files.

- 📁 Hardware: Open source hardware documentation available for Watcher.

  - 📎 SenseCAP Watcher v1.0 Schematic

- 📁 Documentation: All Wiki documents about Watcher.

- 📁 Applications: Application projects using SenseCAP Watcher will be continuously updated.

- 📁 assets: Image material used in the README.

Before using Watcher as a space assistant, complete the following three steps successfully; all subsequent steps depend on them.

The Watcher comes with a unique packaging that doubles as a stylish, calendar-like stand. By placing the Watcher inside this stand, you can create an attractive decoration for your home. Follow the step-by-step instructions below and refer to the accompanying video for a visual guide on how to install your Watcher in its stand.

https://files.seeedstudio.com/wiki/watcher_getting_started/watcher-packaging.mp4

To power on your Watcher device, press and hold the wheel button located on the upper right corner for approximately 3 seconds until the Seeed Studio logo appears on the screen.

Once the logo is displayed, release the button and allow the device to complete its initialization process. After a few moments, the Watcher will display its main menu, indicating that it is ready for use.

If the device does not turn on after a long press, the battery may be low. Connect a power cable to the Watcher before trying to wake it again.

SenseCraft Pro will automatically activate the trial service when your device is tethered to the SenseCraft APP.

After powering on your Watcher, if it has not been previously bound to a device, it will prompt you to connect to the SenseCraft app. Alternatively, you can find the "Connect to APP" option in the Settings menu. The Watcher will then display a QR code to download the SenseCraft app.

You can either scan the QR code or use the provided link to download the app.

To proceed with binding your Watcher to the SenseCraft app, follow these steps:

1. On your Watcher, rotate the wheel button clockwise to enter the QR code interface for binding the device.

2. Open the SenseCraft app on your mobile device and tap the plus sign (+) in the top right corner to add your Watcher by scanning the QR code.

Ensure that your mobile device's Bluetooth permissions are enabled, as the binding process requires a Bluetooth connection.

3. After successfully scanning the QR code, the app will navigate to the network configuration page. Select a 2.4GHz Wi-Fi network to connect your Watcher to the internet.

Tap the "Next" button to proceed.

4. Choose a name for your Watcher and select an appropriate group for it.

Tap the "Finish" button to complete the setup process.

5. The SenseCraft app will display a tutorial page, providing guidance on how to use your Watcher. Take a moment to familiarize yourself with the instructions.

Once the setup is complete, the app will open a chat window to communicate with your Watcher, while the Watcher will return to its main menu.

With the binding process finished, your Watcher is now connected to the SenseCraft app, and you can start exploring its features and capabilities. The app serves as a convenient way to interact with your Watcher, adjust settings, and receive notifications remotely.

Next, select the method you want to use to schedule an executable task for Watcher.

To run a task from the Task Templates on your Watcher, follow these steps:

1. From the main menu, use the wheel button to navigate to the Task Templates option.

2. Press the wheel button to enter the Task Templates submenu.

3. Scroll through the available task templates using the wheel button until you find the desired model task.

4. Press the wheel button to select and start running the chosen task.

Once the task begins, the Watcher will display an animated emoji face on the screen. This emoji indicates that the device is actively monitoring for the target object specified by the selected task template.

When the Watcher detects the target object, the display will switch from the emoji animation to a real-time view of the detected object. This allows you to see what the Watcher has identified.

If the target object moves out of the Watcher's view or is no longer detected, the display will automatically return to the animated emoji face, signifying that the device is still monitoring for the target.

Task Templates:

- 👨🦰 Human Detection:

  - This task template is designed to detect the presence of human beings.

  - When the Watcher identifies a person, it will trigger an alarm notification.

- 😼 Pet Detection:

  - The Pet Detection task template focuses on recognizing cats or dogs.

  - If the Watcher detects a cat, it will trigger an alarm notification.

- 🖖 Gesture Detection:

  - This task template is configured to identify the "paper" hand gesture.

  - When the Watcher recognizes the paper gesture, it will trigger an alarm notification.

Each of these task templates has specific alarm triggering conditions based on the detection of their respective targets: humans, cats, or the paper gesture. By using these templates, you can quickly set up the Watcher to monitor for the desired object without the need for extensive configuration.
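The triggering rules described above can be summarised as a small lookup table. The following is a minimal sketch for illustration only: the label strings (`"person"`, `"cat"`, `"dog"`, `"paper"`) and the names `TASK_TEMPLATES` and `should_alarm` are assumptions, not identifiers taken from the Watcher firmware.

```python
# Illustrative model of the three built-in task templates.
# "recognizes" lists what the template looks for; "alarm_on" lists what
# actually triggers the alarm notification, as described in this README.
TASK_TEMPLATES = {
    "Human Detection": {"recognizes": {"person"}, "alarm_on": {"person"}},
    "Pet Detection": {"recognizes": {"cat", "dog"}, "alarm_on": {"cat"}},
    "Gesture Detection": {"recognizes": {"paper"}, "alarm_on": {"paper"}},
}


def should_alarm(template_name, detected_labels):
    """Return True if any detected label is an alarm trigger for the template."""
    template = TASK_TEMPLATES[template_name]
    return bool(template["alarm_on"] & set(detected_labels))


if __name__ == "__main__":
    print(should_alarm("Pet Detection", ["dog"]))
    print(should_alarm("Pet Detection", ["cat"]))
```

Keeping the recognised targets separate from the alarm triggers makes the Pet Detection case explicit: the template recognises cats and dogs, but the alarm condition stated above is the cat.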

Fees may apply for some of SenseCraft AI's services. For details, please refer to the following documentation: SenseCraft AI for Watcher Plans and Benefits

The SenseCraft APP allows you to send tasks to your Watcher device. This example demonstrates the process using one of the sample tasks provided by Watcher, the command "If you see a candles, please notify me."

1. Open the SenseCraft APP and navigate to the chat window for your connected Watcher device.

2. In the chat window, either select the desired task from the available options or manually type in the command "If you see a candles, please notify me." Send the command to your Watcher by tapping the send button or pressing enter.

3. Upon receiving the command, Watcher will interpret it and break it down into a task flow consisting of When, Do, and Capture Frequency components.

Review the parsed task flow to ensure that Watcher has correctly understood your command. The app will display the interpreted task details for your verification. If any part of the task flow does not align with your intended command, you can modify the task details by accessing the Detail Config section within the app.

4. Once you have confirmed or adjusted the task details, click the Run button to send the finalized task to your Watcher.

Watcher will download the task instructions, and once the download is complete, it will transform into a vigilant monitoring system, ready to detect any instances of candles.

5. If Watcher identifies a candle, it will send an alert based on the predefined settings, which may include flashing lights, audible alarms, and notifications through the SenseCraft APP.

Please note that due to the time constraints of the task flow, there will be a minimum interval between consecutive alerts to avoid excessive notifications.

By following these steps, you can effectively send commands to your Watcher using the SenseCraft APP, enabling it to perform specific monitoring tasks and notify you when the specified conditions are met.

Remember to regularly review and adjust your Watcher's settings and task flows to ensure optimal performance and alignment with your monitoring requirements. For a more detailed description and explanation of the APP's tasks and options, as well as a detailed description of the intervals, please read Watcher Task Assignment Guideline to learn more.
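The minimum interval between consecutive alerts mentioned above behaves like a simple throttle. The sketch below is a hypothetical illustration of that idea, not the firmware's implementation: the class name `AlertThrottle` and the 60-second value are assumptions, and the real interval rules are described in the Watcher Task Assignment Guideline.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AlertThrottle:
    """Suppress alerts that arrive sooner than min_interval_s after the last one."""

    min_interval_s: float
    last_alert_at: Optional[float] = None

    def should_alert(self, now: float) -> bool:
        # Drop the alert if the previous one was sent too recently.
        if (self.last_alert_at is not None
                and now - self.last_alert_at < self.min_interval_s):
            return False
        # Otherwise let it through and remember when it was sent.
        self.last_alert_at = now
        return True


if __name__ == "__main__":
    throttle = AlertThrottle(min_interval_s=60.0)  # assumed value
    for t in (0.0, 30.0, 60.0):
        print(t, throttle.should_alert(t))
```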

Fees may apply for some of SenseCraft AI's services. For details, please refer to the following documentation: SenseCraft AI for Watcher Plans and Benefits

The Watcher offers a convenient and intuitive way to send tasks or engage in conversation using voice commands, thanks to its "Push to Talk" feature. This functionality is accessible from any screen or interface on the device, making it easy to interact with the Watcher without navigating through menus. Here's a step-by-step guide on how to use this feature:

- Activate Push to Talk:

  - Locate the Wheel Button on the top-right corner of the Watcher.

  - Press and hold the Wheel Button to enter the voice input interface.

- Speak Your Command or Message:

  - While holding the Wheel Button, clearly speak your task or message to the Watcher.

  - You can assign tasks, such as "Tell me if the baby is crying" or "If the dog is stealing food, say stop Copper". (Copper is the name of my dog.)

- Release the Wheel Button:

  - Once you have finished speaking, release the Wheel Button.

  - The Watcher will process your voice input and determine whether it is a task assignment.

- Task Assignment:

  - If the Watcher recognizes your voice input as a task assignment, it will automatically break down your task into relevant components.

  - The Watcher will display cards on its screen, showing the Object (what to monitor), Behavior (what action to look for), Notification (how to alert you), Time Range (when to monitor), and Frequency (how often to monitor).

  - Review the displayed information to ensure it accurately represents your intended task.

  - If the details are correct, confirm the task, and the Watcher will begin executing it according to the specified parameters.

  - If the Watcher misunderstands your task, long press the wheel button and continue the dialogue to correct its understanding of the task. If the Watcher still does not interpret the task correctly after several attempts, we recommend using the SenseCraft APP to assign the task.
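The five cards listed above can be pictured as one record per task. The following sketch is purely illustrative: `ParsedTask`, `CARD_LABELS`, and `cards_needing_review` are hypothetical names, and the example values are made up rather than actual output from the Watcher.

```python
from dataclasses import dataclass

# Card titles as named in this README, paired with the field that holds them.
CARD_LABELS = [
    ("Object", "target_object"),
    ("Behavior", "behavior"),
    ("Notification", "notification"),
    ("Time Range", "time_range"),
    ("Frequency", "frequency"),
]


@dataclass
class ParsedTask:
    target_object: str  # what to monitor
    behavior: str       # what action to look for
    notification: str   # how to alert you
    time_range: str     # when to monitor
    frequency: str      # how often to monitor


def cards_needing_review(task):
    """Return the titles of cards that came back empty and should be corrected."""
    return [label for label, attr in CARD_LABELS if not getattr(task, attr).strip()]


if __name__ == "__main__":
    # Made-up parse of "Tell me if the baby is crying".
    task = ParsedTask("baby", "crying", "", "all day", "")
    print(cards_needing_review(task))
```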

Tips for Optimal Usage:

- Speak clearly and at a moderate pace to ensure accurate voice recognition.

- Speak as close to the Watcher as possible; recognition accuracy is best at a distance of about 3 to 10 cm.

- Minimize background noise to improve the Watcher's ability to understand your voice commands.

- Be specific and concise when assigning tasks to help the Watcher accurately interpret your intentions.

By leveraging the Push to Talk feature, you can effortlessly send tasks and engage in conversations with the Watcher, making your interaction with the device more natural and efficient.

If you encounter error 0x7002, the Watcher's network connection is poor and the audio service call has failed. Change the network or move to a different location, then try again.

- Tap the screen once or press the wheel button.

- A confirmation popup will appear with two options: Main Menu and End Task. To terminate the task, either tap End Task on the screen or use the scroll wheel to navigate to "End Task" and press the scroll wheel button to confirm.

- Alternatively, you can end the task via the SenseCraft APP on your mobile device by pressing the End button on the task card.

The firmware download for SenseCAP Watcher contains two different firmware images, so take care to distinguish them.

- ESP32 Firmware

- Himax Firmware

Please be especially careful with the partition addresses when flashing firmware, so that you do not erase the SenseCAP Watcher's own device information (EUI, etc.); otherwise the device may not be able to connect to the SenseCraft server properly! Make a note of the necessary device information before flashing so that there is a way to recover it!

You will see these messages when the device is powered up and switched on.

Please also keep your information about these devices safe to avoid losing them!
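In addition to noting the device information by hand, one possible safeguard is to read the flash contents to a file before writing anything. The sketch below only builds an `esptool.py read_flash` command line for review; this README does not prescribe it. It assumes the 32MB flash size used elsewhere in this document, that the installed esptool can read the full range, and the port and output file names are placeholders.

```python
# Flash size matches the --flash_size 32MB option used in this README.
FLASH_SIZE_BYTES = 32 * 1024 * 1024


def build_backup_args(port, out_file="watcher_backup.bin"):
    """Return an esptool.py command that dumps the whole flash to out_file."""
    return [
        "esptool.py", "--chip", "esp32s3", "--port", port,
        "read_flash", "0x0", hex(FLASH_SIZE_BYTES), out_file,
    ]


if __name__ == "__main__":
    # /dev/ttyACM0 is a placeholder; use the serial port of your device.
    print(" ".join(build_backup_args("/dev/ttyACM0")))
```

Printing the command rather than executing it leaves room to check the port and address range first. Reading the full flash can take a while.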

If you are using Linux/MacOS, you can flash Watcher's firmware using the command line.

You can use the following two commands to complete the ESP32 firmware flash.

pip3 install --upgrade esptool

esptool.py --chip esp32s3 -b 2000000 --before default_reset --after hard_reset write_flash --flash_mode dio --flash_size 32MB --flash_freq 80m 0x0 bootloader/bootloader.bin 0x8000 partition_table/partition-table.bin 0x10d000 ota_data_initial.bin 0x110000 factory_firmware.bin 0x1910000 srmodels/srmodels.bin 0x1a10000 storage.bin
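The long command above pairs each flash offset with one image file. As a minimal sketch, the same command line can be assembled from a table, which makes each offset visible and lets the ordering be checked before anything is written. The names `FLASH_LAYOUT` and `build_write_flash_args` are illustrative and not part of the repository; the offsets and file names are copied from the command above.

```python
# (offset, file) pairs taken from the esptool.py command in this README.
FLASH_LAYOUT = [
    (0x0, "bootloader/bootloader.bin"),
    (0x8000, "partition_table/partition-table.bin"),
    (0x10D000, "ota_data_initial.bin"),
    (0x110000, "factory_firmware.bin"),
    (0x1910000, "srmodels/srmodels.bin"),
    (0x1A10000, "storage.bin"),
]


def build_write_flash_args(layout, baud=2000000):
    """Return the esptool.py argument list for the given (offset, file) pairs."""
    offsets = [offset for offset, _ in layout]
    # Reject a table whose offsets are not strictly increasing; a swapped
    # pair is an easy way to write an image to the wrong address.
    if any(b <= a for a, b in zip(offsets, offsets[1:])):
        raise ValueError("flash offsets must be strictly increasing")
    args = [
        "esptool.py", "--chip", "esp32s3", "-b", str(baud),
        "--before", "default_reset", "--after", "hard_reset",
        "write_flash", "--flash_mode", "dio",
        "--flash_size", "32MB", "--flash_freq", "80m",
    ]
    for offset, path in layout:
        args += [hex(offset), path]
    return args


if __name__ == "__main__":
    # Print the command instead of running it, so it can be reviewed first.
    print(" ".join(build_write_flash_args(FLASH_LAYOUT)))
```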

If you are using Windows, you can use esptool.

You will need to flash 5 firmware files for the SenseCAP Watcher. When the Watcher is connected to the computer, you will find two serial ports (one for the ESP32, another for the Himax chip). The serial port used for flashing could be either of these.

Open esptool and select the MCU model:

Follow the diagram below to enter the correct address and select the correct firmware.

If you find that there is no progress when flashing, then the wrong flash serial port may be selected and you may need to switch to another one.
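Because either of the two serial ports may be the right one, the "try one, then switch" advice above can be expressed as a simple loop. This is a hedged sketch only: `flash_with_fallback` and the `try_flash` callable are hypothetical, and `try_flash` stands in for whatever flashing tool you use, returning True on success.

```python
from typing import Callable, Iterable, Optional


def flash_with_fallback(ports: Iterable[str],
                        try_flash: Callable[[str], bool]) -> Optional[str]:
    """Try each candidate port in order and return the first one that works."""
    for port in ports:
        if try_flash(port):
            return port
    # No port accepted the flash attempt.
    return None


if __name__ == "__main__":
    # Stand-in for a real flashing routine: pretend only COM4 responds.
    def fake_flash(port: str) -> bool:
        return port == "COM4"

    print(flash_with_fallback(["COM3", "COM4"], fake_flash))
```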

We do not recommend that any user make any kind of modifications to the Himax firmware. The methods we provide are limited to use for restoring the firmware in special cases.

Next, switch to the other serial port, which belongs to the Himax chip, and carry out the following steps on that port.

Make sure your Python version is above 3.8.

Execute the following commands to install python-sscma and flash the firmware and model. Only model files supported by SenseCraft AI can be used.

pip3 install python-sscma

sscma.cli flasher -f firmware.img
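The Python version requirement above can be checked before installing python-sscma. A minimal sketch follows; the helper name `python_is_supported` is illustrative, and treating 3.8 itself as acceptable is an assumption, since the text only says "above 3.8".

```python
import sys


def python_is_supported(version_info=sys.version_info, minimum=(3, 8)):
    """Return True if the interpreter's major.minor version meets the minimum."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    if python_is_supported():
        print("Python version is sufficient for python-sscma")
    else:
        print("Please upgrade Python before installing python-sscma")
```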

SenseCAP Watcher source repository address:

https://github.com/Seeed-Studio/SenseCAP-Watcher-Firmware

The project provides basic SDK for the SenseCAP Watcher, as well as the examples for getting started. It is based on the ESP-IDF.

Follow the instructions in the ESP-IDF - Get Started guide to set up the build toolchain used by SSCMA examples. Currently we're using the latest version v5.1.

- Clone our repository.

git clone https://github.com/Seeed-Studio/SenseCAP-Watcher

- Go to the SenseCAP-Watcher folder.

cd SenseCAP-Watcher

- Fetch the submodules.

git submodule update --init

- Go to the examples folder and list all available examples.

cd examples && ls

- Choose factory_firmware and enter its folder.

cd factory_firmware

- Generate build config using ESP-IDF.

# set build target
idf.py set-target esp32s3

- Build the demo firmware.

idf.py build

- Flash the demo firmware to the device and run it.

To flash (the target serial port may vary depending on your operating system; please replace /dev/ttyACM0 with your device serial port):

idf.py --port /dev/ttyACM0 flash

Monitor the serial output.

idf.py --port /dev/ttyACM0 monitor

- Use Ctrl+] to exit monitor.

- The previous two commands can be combined.

idf.py --port /dev/ttyACM0 flash monitor
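Since the serial port differs between machines, it can help to generate the idf.py steps above from a single port setting. The following is a small sketch under that assumption; `idf_commands` is a hypothetical helper, and it only prints the commands rather than running them.

```python
def idf_commands(port="/dev/ttyACM0", target="esp32s3"):
    """Return the idf.py invocations from this README as argument lists."""
    return [
        ["idf.py", "set-target", target],                 # generate build config
        ["idf.py", "build"],                              # build the firmware
        ["idf.py", "--port", port, "flash", "monitor"],   # flash, then monitor
    ]


if __name__ == "__main__":
    # Replace the port with the one your operating system assigns.
    for cmd in idf_commands(port="/dev/ttyACM0"):
        print(" ".join(cmd))
```

Run the printed commands from inside the factory_firmware folder, in an ESP-IDF environment.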

Introducing the Watcher Product Catalogue, your comprehensive guide to setting up, using, and maintaining your Watcher device. Discover the key features, customization options, and mounting possibilities that make Watcher the perfect monitoring solution. With detailed instructions, troubleshooting tips, and specifications, this catalogue empowers you to fully utilize your Watcher and experience peace of mind through advanced surveillance technology.

This project is licensed under the Apache License, Version 2.0 (the "License");

You may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and limitations under the License.

Please see the LICENSE file for more details.

Special thanks to all contributors and supporters that starred this repository.

Do you like this project? Please join us or give a ⭐. This will help to attract more contributors.

Do you have an idea or found a bug? Please open an issue or start a discussion.

Alternative AI tools for OSHW-SenseCAP-Watcher

Similar Open Source Tools

burpference

Burpference is an open-source extension designed to capture in-scope HTTP requests and responses from Burp's proxy history and send them to a remote LLM API in JSON format. It automates response capture, integrates with APIs, optimizes resource usage, provides color-coded findings visualization, offers comprehensive logging, supports native Burp reporting, and allows flexible configuration. Users can customize system prompts, API keys, and remote hosts, and host models locally to prevent high inference costs. The tool is ideal for offensive web application engagements to surface findings and vulnerabilities.

agent-lightning

Agent Lightning is a lightweight and efficient tool for automating repetitive tasks in the field of data analysis and machine learning. It provides a user-friendly interface to create and manage automated workflows, allowing users to easily schedule and execute data processing, model training, and evaluation tasks. With its intuitive design and powerful features, Agent Lightning streamlines the process of building and deploying machine learning models, making it ideal for data scientists, machine learning engineers, and AI enthusiasts looking to boost their productivity and efficiency in their projects.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

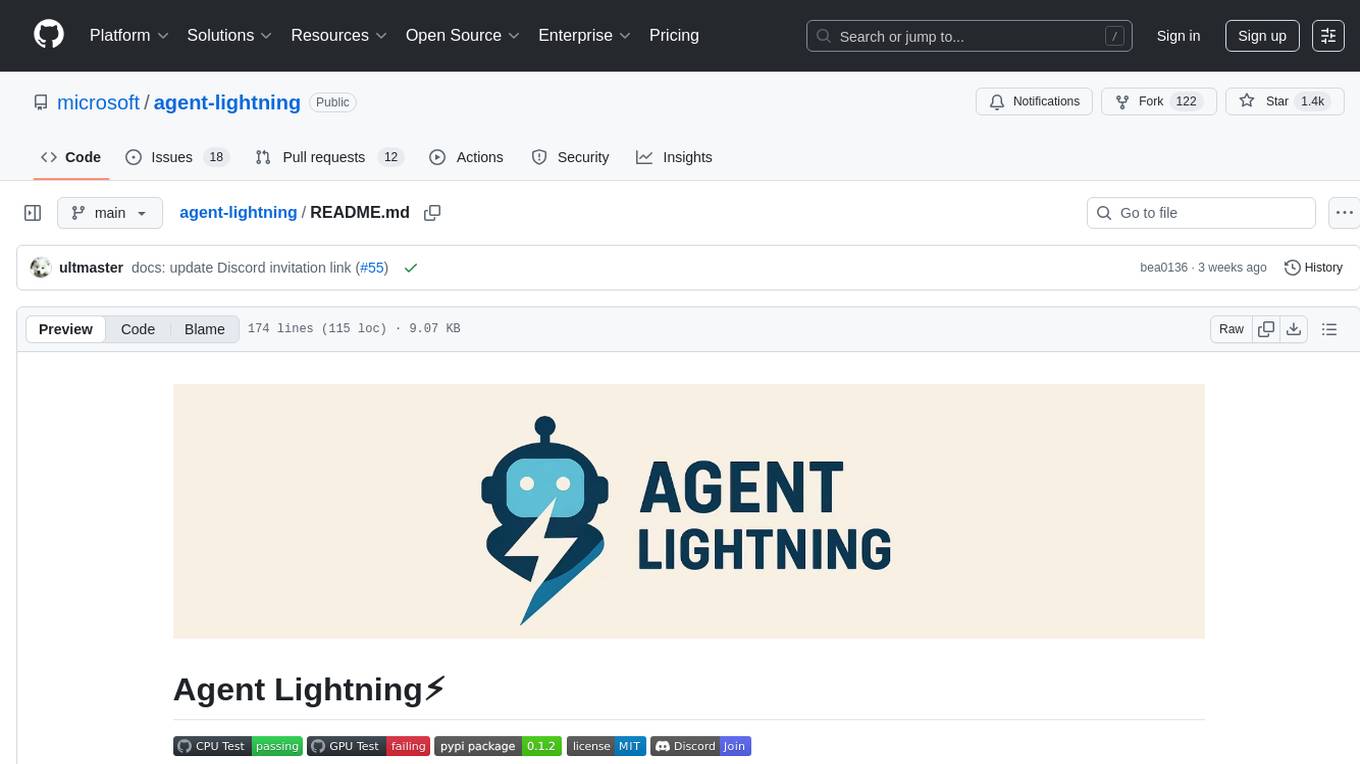

AI-Player

AI-Player is a Minecraft mod that adds an 'intelligent' second player to the game to combat loneliness while playing solo. It aims to enhance gameplay by providing companionship and interactive features. The mod leverages advanced AI algorithms and integrates with external tools to enhance the player experience. Developed with a focus on addressing the social aspect of gaming, AI-Player is a community-driven project that continues to evolve with user feedback and contributions.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

stride-gpt

STRIDE GPT is an AI-powered threat modelling tool that leverages Large Language Models (LLMs) to generate threat models and attack trees for a given application based on the STRIDE methodology. Users provide application details, such as the application type, authentication methods, and whether the application is internet-facing or processes sensitive data. The model then generates its output based on the provided information. It features a simple and user-friendly interface, supports multi-modal threat modelling, generates attack trees, suggests possible mitigations for identified threats, and does not store application details. STRIDE GPT can be accessed via OpenAI API, Azure OpenAI Service, Google AI API, or Mistral API. It is available as a Docker container image for easy deployment.

AutoGPT

AutoGPT is a revolutionary tool that empowers everyone to harness the power of AI. With AutoGPT, you can effortlessly build, test, and delegate tasks to AI agents, unlocking a world of possibilities. Our mission is to provide the tools you need to focus on what truly matters: innovation and creativity.

LLM-Minutes-of-Meeting

LLM-Minutes-of-Meeting is a project showcasing NLP & LLM's capability to summarize long meetings and automate the task of delegating Minutes of Meeting(MoM) emails. It converts audio/video files to text, generates editable MoM, and aims to develop a real-time python web-application for meeting automation. The tool features keyword highlighting, topic tagging, export in various formats, user-friendly interface, and uses Celery for asynchronous processing. It is designed for corporate meetings, educational institutions, legal and medical fields, accessibility, and event coverage.

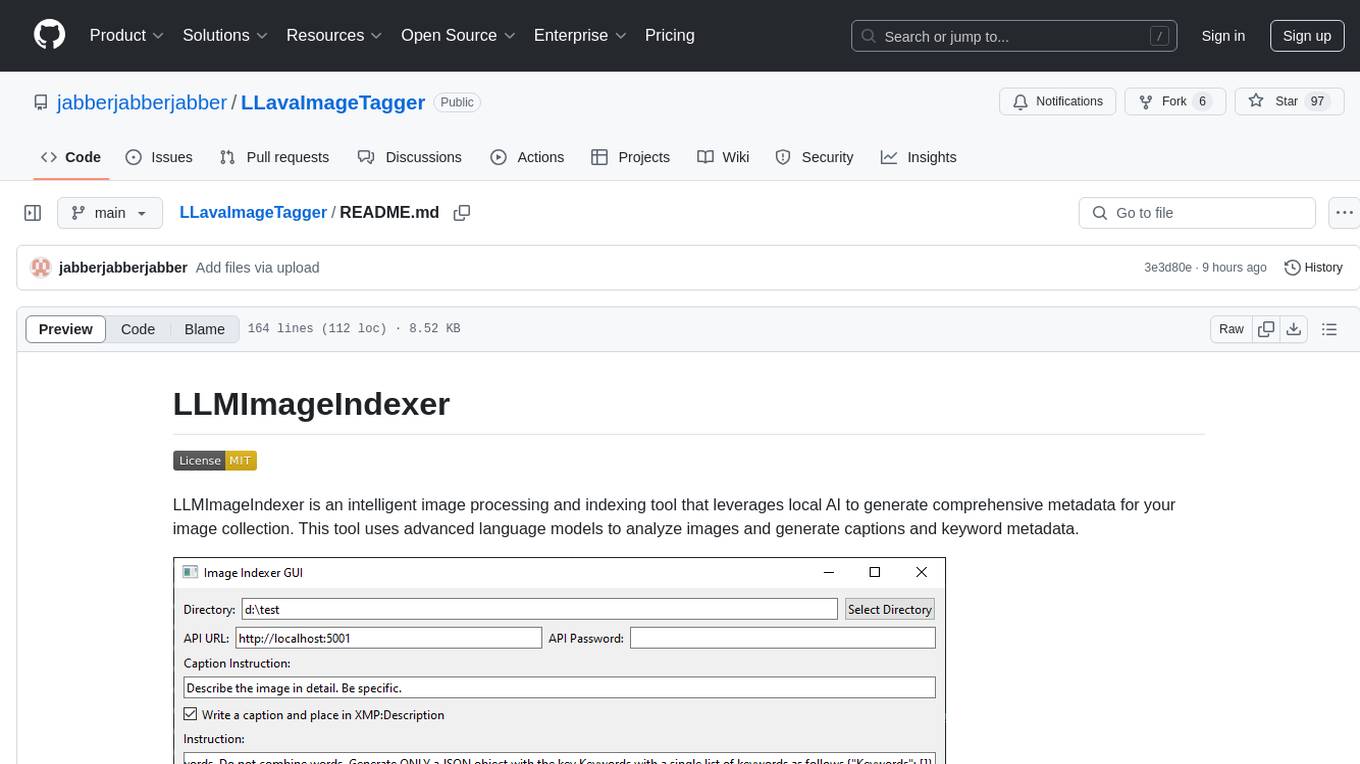

LLavaImageTagger

LLMImageIndexer is an intelligent image processing and indexing tool that leverages local AI to generate comprehensive metadata for your image collection. It uses advanced language models to analyze images and generate captions and keyword metadata. The tool offers features like intelligent image analysis, metadata enhancement, local processing, multi-format support, user-friendly GUI, GPU acceleration, cross-platform support, stop and start capability, and keyword post-processing. It operates directly on image file metadata, allowing users to manage files, add new files, and run the tool multiple times without reprocessing previously keyworded files. Installation instructions are provided for Windows, macOS, and Linux platforms, along with usage guidelines and configuration options.

Sentient

Sentient is a personal, private, and interactive AI companion developed by Existence. The project aims to build a completely private AI companion that is deeply personalized and context-aware of the user. It utilizes automation and privacy to create a true companion for humans. The tool is designed to remember information about the user and use it to respond to queries and perform various actions. Sentient features a local and private environment, MBTI personality test, integrations with LinkedIn, Reddit, and more, self-managed graph memory, web search capabilities, multi-chat functionality, and auto-updates for the app. The project is built using technologies like ElectronJS, Next.js, TailwindCSS, FastAPI, Neo4j, and various APIs.

OpenCopilot

OpenCopilot allows you to have your own product's AI copilot. It integrates with your underlying APIs and can execute API calls whenever needed. It uses LLMs to determine if the user's request requires calling an API endpoint. Then, it decides which endpoint to call and passes the appropriate payload based on the given API definition.

azure-search-openai-demo

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access a GPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval. The repo includes sample data so it's ready to try end to end. In this sample application we use a fictitious company called Contoso Electronics, and the experience allows its employees to ask questions about the benefits, internal policies, as well as job descriptions and roles.

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

Local-Multimodal-AI-Chat

Local Multimodal AI Chat is a multimodal chat application that integrates various AI models to manage audio, images, and PDFs seamlessly within a single interface. It offers local model processing with Ollama for data privacy, integration with OpenAI API for broader AI capabilities, audio chatting with Whisper AI for accurate voice interpretation, and PDF chatting with Chroma DB for efficient PDF interactions. The application is designed for AI enthusiasts and developers seeking a comprehensive solution for multimodal AI technologies.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

For similar tasks

human

AI-powered 3D Face Detection & Rotation Tracking, Face Description & Recognition, Body Pose Tracking, 3D Hand & Finger Tracking, Iris Analysis, Age & Gender & Emotion Prediction, Gaze Tracking, Gesture Recognition, Body Segmentation

mediapipe-rs

MediaPipe-rs is a Rust library designed for MediaPipe tasks on WasmEdge WASI-NN. It offers easy-to-use low-code APIs similar to mediapipe-python, with low overhead and flexibility for custom media input. The library supports various tasks like object detection, image classification, gesture recognition, and more, including TfLite models, TF Hub models, and custom models. Users can create task instances, run sessions for pre-processing, inference, and post-processing, and speed up processing by reusing sessions. The library also provides support for audio tasks using audio data from symphonia, ffmpeg, or raw audio. Users can choose between CPU, GPU, or TPU devices for processing.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.