lawyer-llama

中文法律LLaMA (LLaMA for Chinese legel domain)

Stars: 751

Lawyer LLaMA is a large language model that has been specifically trained on legal data, including Chinese laws, regulations, and case documents. It has been fine-tuned on a large dataset of legal questions and answers, enabling it to understand and respond to legal inquiries in a comprehensive and informative manner. Lawyer LLaMA is designed to assist legal professionals and individuals with a variety of law-related tasks, including: * **Legal research:** Quickly and efficiently search through vast amounts of legal information to find relevant laws, regulations, and case precedents. * **Legal analysis:** Analyze legal issues, identify potential legal risks, and provide insights on how to proceed. * **Document drafting:** Draft legal documents, such as contracts, pleadings, and legal opinions, with accuracy and precision. * **Legal advice:** Provide general legal advice and guidance on a wide range of legal matters, helping users understand their rights and options. Lawyer LLaMA is a powerful tool that can significantly enhance the efficiency and effectiveness of legal research, analysis, and decision-making. It is an invaluable resource for lawyers, paralegals, law students, and anyone else who needs to navigate the complexities of the legal system.

README:

[ZH] [EN]

通过指令微调,LLaMA 模型在通用领域展现出了非常好的表现。但由于缺少合适的数据,少有人探究LLaMA在法律领域的能力。为了弥补这一空白,我们提出了Lawyer LLaMA,一个在法律领域数据上进行了额外训练的模型。

Lawyer LLaMA 首先在大规模法律语料上进行了continual pretraining,让它系统的学习中国的法律知识体系。 在此基础上,我们借助ChatGPT收集了一批对中国国家统一法律职业资格考试客观题(以下简称法考)的分析和对法律咨询的回答,利用收集到的数据对模型进行指令微调,让模型习得将法律知识应用到具体场景中的能力。

我们的模型能够:

-

掌握中国法律知识: 能够正确的理解民法、刑法、行政法、诉讼法等常见领域的法律概念。例如,掌握了刑法中的犯罪构成理论,能够从刑事案件的事实描述中识别犯罪主体、犯罪客体、犯罪行为、主观心理状态等犯罪构成要件。模型利用学到的法律概念与理论,能够较好回答法考中的大部分题目。

-

应用于中国法律实务:能够以通俗易懂的语言解释法律概念,并且进行基础的法律咨询,涵盖婚姻、借贷、海商、刑事等法律领域。

为了给中文法律大模型的开放研究添砖加瓦,本项目将开源一系列法律领域的指令微调数据和基于LLaMA训练的中文法律大模型的参数 。

[2024/4/25] 🆕 发布了新版Lawyer LLaMA 2 (lawyer-llama-13b-v2)模型参数,以及更高质量的法律指令微调数据。

[2023/10/14] 更新了Lawyer LLaMA技术报告Lawyer LLaMA: Enhancing LLMs with Legal Knowledge,提供了更多的技术细节和研究发现。

[2023/6/8] 开源了lawyer-llama-13b-beta1.0模型参数。

[2023/5/25] 开源了更大规模的指令微调数据,发布了包含和用户问题相关法条的对话数据。

[2023/5/25] 发布了Lawyer LLaMA技术报告。

[2023/4/13] 开源了由ChatGPT生成的指令微调数据,包含2k条法考题目的解答及5k条法律咨询回复。

我们收集了部分公开的法律数据用于模型的continual training,包括法律条文、裁判文书等。

Alpaca-GPT4 52k 中文,52k 英文。

| 文件名 | 规模 | 描述 |

|---|---|---|

| 🆕 fakao_gpt4.json | 1,000 | GPT-4生成的法考题解答 |

| 🆕 zixun_gpt4.json | 1,000 | GPT-4基于法条生成的法律咨询回复 |

| judical_examination.json | 2,000 | GPT-3.5生成的法考题解答 |

| judical_examination_v2.json | 5,000 | GPT-3.5生成的法考题解答(第二批) |

| legal_advice.json | 5,000 | GPT-3.5生成的法律咨询回复 |

| legal_counsel_v2.json | 8,000 | GPT-3.5生成的法律咨询回复(第二批) |

| legal_counsel_with_article_v2.json | 1,000 | GPT-3.5基于法条生成的法律咨询回复 |

| legal_counsel_multi_turn_with_article_v2.json | 476 | GPT-3.5基于法条生成的多轮法律咨询对话 |

在Lawyer LLaMA 2 (lawyer-llama-13b-v2)的训练中我们使用了新版的法律微调数据,以下是一些新旧数据的对比。

我们将JEC-QA中国法考数据集中的试题输入ChatGPT,让其生成对每个答案的解析。

以下对比了新旧版本Lawyer LLaMA使用的数据:

| GPT-3.5生成 | 🆕 GPT-4生成 |

|---|---|

|

问题: 根据《物权法》,下列关于物权变动的特别规定,表述正确吗? 因继承或者受遗赠取得物权的,自继承或者受遗赠开始时发生效力 请给出详细的推理过程之后再给出答案。 回复: 这个表述是正确的。 根据《物权法》第X条规定:“因继承或者受遗赠取得物权的,自继承或者受遗赠开始时发生效力。”这意味着,当一个人通过继承或受遗赠获得物权时,这个物权的效力是从继承或受遗赠开始时就生效的,而不是从物权转移的时间开始生效。这是因为,继承或受遗赠是一种法定的方式来获得物权,因此,物权的效力应该从这个法定的时间点开始生效。 |

问题: 情景分析:张某欠李某2万元,张某有一辆出租车价值4万元,现张某将该车赠与其友王五;李某发现张某无其他值钱的东西,这一赠与会影响张某偿还债务,于是向法院起诉请求撤销张某的赠与行为。法院对该赠与行为的效力应认定。 回复: 张某欠李某2万元,而张某将其价值4万元的出租车赠与给王五,这一行为在法律上可能构成恶意转移财产,目的在于逃避债务。李某因此向法院提起诉讼,请求撤销张某的赠与行为,以保护自己的债权不受损害。 首先,根据《中华人民共和国民法典》第二百五十四条规定,债务人恶意转移财产,损害债权人利益的,债权人有权请求人民法院撤销债务人的行为。在本案中,张某的赠与行为可能被视为恶意转移财产,因为这一行为发生在张某欠债的情况下,且张某无其他值钱的东西,这一赠与行为很可能导致李某的债权无法实现。 其次,根据《中华人民共和国民法典》第二百五十五条规定,请求撤销权的行使,不得损害善意第三人的权益。然而,在本案中,王五作为接受赠与的一方,是否具有善意以及是否知道张某的赠与行为会损害债权人(李某)的利益,对于判断赠与行为是否有效是关键。如果王五知道张某的赠与行为是为了逃避偿还债务而进行的,则王五不能被视为善意第三人。 因此,结合上述分析,张某的赠与行为损害了债权人李某的利益,且在债务人恶意转移财产的情况下进行,根据《中华人民共和国民法典》相关规定,法院应当认定该赠与行为无效。这样的判决旨在保护债权人的利益,防止债务人通过转移财产逃避债务的行为。因此,法院对该赠与行为的效力应认定为无效。 |

我们从开放数据集中收集了一些咨询问题,输入ChatGPT,让ChatGPT扮演律师解答问题。将相关法条一同输入,可以提升ChatGPT生成的质量。

以下对比了新旧版本Lawyer LLaMA使用的数据:

| GPT-3.5生成 | 🆕 GPT-4生成 |

|---|---|

|

问题: 你好,离婚后夫妻的财产是怎么分配的 回复: 您好,根据《民法典》的相关规定,离婚后夫妻的财产分配可以通过夫妻双方协商处理,如果没有协商一致,则需要由法院根据财产的具体情况,按照照顾子女、女方和无过错方权益的原则进行判决。对于夫妻在婚姻关系存续期间所得的共同财产,包括工资、奖金、劳务报酬、生产、经营、投资的收益、知识产权的收益、继承或者受赠的财产以及其他应当归共同所有的财产,应当平分。而夫妻在婚姻关系存续期间所获得的个人财产,包括婚前财产、因受到人身损害获得的赔偿或者补偿、遗嘱或者赠与合同中确定只归一方的财产、一方专用的生活用品,应当归该方所有。请问你们在婚姻关系存续期间所得的财产情况如何呢? |

问题: 我老公经常不回家,我知道他在外面有人,同时干了许多坏事,一年365天,这个情况怎么办呢? 回复: 根据您提供的情况,您老公长期不回家且外面有其他人,这可能触及到《民法典》第一千零四十二条中关于“禁止有配偶者与他人同居”的规定。若您的老公确实与他人同居,这在法律上构成违法行为,您作为受害一方,有权采取相应的法律行动。 首先,您需要收集证据。这包括但不限于您老公与他人同居的证据、他不回家的时间以及他所做的“许多坏事”的相关证据。证据可以是照片、视频、通讯记录、目击者的证词等,这对于您后续的法律行动至关重要。 其次,若您老公的行为给您造成了精神伤害或经济损失,根据《民法典》的相关规定,您可以要求赔偿。婚姻法内还规定了夫妻之间忠诚的义务,一旦违反,受损害一方有权要求精神损害赔偿。 第三,考虑到您提到的您老公“干了许多坏事”,具体情况需要进一步明确。这些行为是涉及违法犯罪还是道德层面的“坏事”?如果涉及违法犯罪,您可以考虑向相关部门举报。这不仅是维护自己权益的行为,也是对社会责任的履行。 最后,关于是否结束婚姻,这是一个需要认真考虑的问题。不忠是许多人选择离婚的重要原因之一,根据您收集的证据,如果决定采取此步骤,可以向人民法院提起离婚诉讼,请求分割夫妻共同财产、争取子女抚养权(如果有)等。 在这个过程中,考虑到法律问题的复杂性,您可能需要寻求专业律师的帮助,他们可以为您提供更专业的法律意见,并代表您进行诉讼活动。 总而言之,您面临的情况较为复杂,但您完全有权利采取行动保护自己的合法权益。关键在于搜集证据、评估情况,并在必要时寻求专业法律帮助。 |

目前公开了以下版本的Lawyer LLaMA:

- 🆕

lawyer-llama-13b-v2: 以quzhe/llama_chinese_13B(对LLaMA-2进行了中文持续预训练)为基础,使用通用instruction和GPT-4生成的法律instruction进行SFT,配有婚姻相关法律检索模块。下载地址和使用方法见demo/run_inference_v2.md。 -

lawyer-llama-13b-beta1.0: 以Chinese-LLaMA-13B为基础,使用通用instruction和GPT-3.5生成的法律instruction进行SFT,配有婚姻相关法律检索模块。下载地址和使用方法见demo/run_inference_v1.md。

我们使用Chain-of-Discussion中的200条婚姻家事相关的咨询问题,对以下模型进行测试:

- 🆕 Lawyer LLaMA 2 (

lawyer-llama-13b-v2) - Lawyer LLaMA 1 (

lawyer-llama-13b-beta1.0) - DISC-LawLLM

- GPT-3.5-Turbo

- Gemini-1.0-Pro

使用GPT-4对咨询回复质量打分(满分10分)。在评分的过程中,我们要求GPT-4模型围绕着文本的流畅性、逻辑性、是否引用了无关法条、是否与律师撰写的金标准回复在语义上一致等角度进行评分。各模型表现如下:

| 模型 | 得分 |

|---|---|

| 🆕 Lawyer LLaMA 2 | 6.71 |

| Lawyer LLaMA 1 | 5.61 |

| DISC-LawLLM | 5.70 |

| GPT-3.5-Turbo | 6.90 |

| Gemini-1.0-Pro | 6.94 |

关于旧版模型lawyer-llama-13b-beta1.0的表现,请参考旧版README.

下面展示了Lawyer LLaMA 2、Lawyer LLaMA 1和DISC-LawLLM对于同一问题的输出。

问题: 没有赡养老人就无法继承财产吗?

相关法条:

- 《民法典》第一千一百三十条:同一顺序继承人继承遗产的份额,一般应当均等。对生活有特殊困难又缺乏劳动能力的继承人,分配遗产时,应当予以照顾。对被继承人尽了主要扶养义务或者与被继承人共同生活的继承人,分配遗产时,可以多分。有扶养能力和有扶养条件的继承人,不尽扶养义务的,分配遗产时,应当不分或者少分。继承人协商同意的,也可以不均等。

- 《民法典》第一千一百二十五条:继承人有下列行为之一的,丧失继承权:(一)故意杀害被继承人;(二)为争夺遗产而杀害其他继承人;(三)遗弃被继承人,或者虐待被继承人情节严重;(四)伪造、篡改、隐匿或者销毁遗嘱,情节严重;(五)以欺诈、胁迫手段迫使或者妨碍被继承人设立、变更或者撤回遗嘱,情节严重。继承人有前款第三项至第五项行为,确有悔改表现,被继承人表示宽恕或者事后在遗嘱中将其列为继承人的,该继承人不丧失继承权。受遗赠人有本条第一款规定行为的,丧失受遗赠权。

- 《民法典》第一千一百二十七条:遗产按照下列顺序继承:(一)第一顺序:配偶、子女、父母;(二)第二顺序:兄弟姐妹、祖父母、外祖父母。继承开始后,由第一顺序继承人继承,第二顺序继承人不继承;没有第一顺序继承人继承的,由第二顺序继承人继承。本编所称子女,包括婚生子女、非婚生子女、养子女和有扶养关系的继子女。本编所称父母,包括生父母、养父母和有扶养关系的继父母。本编所称兄弟姐妹,包括同父母的兄弟姐妹、同父异母或者同母异父的兄弟姐妹、养兄弟姐妹、有扶养关系的继兄弟姐妹。

模型输出:

| DISC-LawLLM | Lawyer LLaMA 1 | 🆕 Lawyer LLaMA 2 |

|---|---|---|

|

GPT-4评分:4分 根据《民法典》规定,子女对父母有赡养扶助的义务。如果成年子女不履行赡养义务的话,缺乏劳动能力或者生活困难的父母,有要求成年子女给付赡养费的权利。所以,在法律上,未尽到赡养老人的义务是会丧失继承权的。 但是需要注意的是,是否存在“丧失继承权”的情况应当由法院根据具体案件情况来进行认定,而不能直接将所有未赡养老人的行为一概而论。 |

GPT-4评分:3分 您好,根据中国的法律规定,没有赡养老人就不能成为继承人,但是如果被继承人已经去世,其财产将按照法定继承顺序分配给合法的继承人。同时,如果被继承人在生前曾经给予过抚养或扶养,则在分配遗产时,可以给予一定的照顾。此外,如果被继承人遗嘱中有关于财产分配的规定,则应按照遗嘱执行。请问您的具体情况是什么呢? |

GPT-4评分:8分 依据您的咨询,关于是否因为没有赡养老就不能继承财产的问题,我们可以从《民法典》的相关规定进行分析。 首先,根据《民法典》第一千一百三十条的规定,继承人在继承遗产时,如果对被继承人尽了主要扶养义务或者与被继承人共同生活的继承人,可以多分遗产。这意味着,如果继承人在被继承人生前对其进行了经济上的支持或生活上的照料,那么在继承遗产时可能会得到更多的份额。 其次,《民法典》第一千一百二十五条明确指出,继承人有遗弃被继承人,或者虐待被继承人情节严重的,将丧失继承权。这表明,如果继承人在被继承人生前对其进行了遗弃或虐待,导致其生活处于困境,那么在继承遗产时可能会受到限制。 然而,《民法典》并没有直接规定说没有赡养老就不能继承财产。继承权的获取主要基于血缘关系和法律规定的继承人范围,而不是直接取决于赡养老的行为。因此,即使在没有履行赡养老义务的情况下,继承人仍然有权继承遗产。 综上所述,虽然赡养老行为可能会影响继承权的行使,但不赡养老并不直接剥夺继承权。继承权的获得主要取决于血缘关系和法律规定的继承人资格。如果您有继承权的疑问,建议详细了解自己的继承权情况,并考虑是否有遗弃或虐待行为,以免影响继承权利的行使。 |

本项目的开放过程中,获得了以下项目的帮助,在此表示感谢。

https://opendata.pku.edu.cn/dataset.xhtml?persistentId=doi:10.18170/DVN/OLO4G8

https://github.com/tatsu-lab/stanford_alpaca

https://github.com/LianjiaTech/BELLE

https://github.com/ymcui/Chinese-LLaMA-Alpaca

https://github.com/pointnetwork/point-alpaca

本项目贡献人员:

黄曲哲*,陶铭绪*,张晨*,安震威*,姜聪,陈智斌,伍子睿,冯岩松

* Equal Contribution

本项目是在冯岩松教授的指导下进行的。

本项目内容仅供用于学术研究,不得用于商业以及其他会对社会带来危害的用途。使用涉及第三方代码的部分时,请严格遵循相应的开源协议。

本项目中使用的数据由ChatGPT生成,未经严格验证,可能会存在错误内容,在使用时请注意甄别。

本项目中的模型输出并非专业法律咨询结果,可能会包含错误内容。如需法律援助,请从专业人士处获得帮助。

如果您使用了本项目的内容,或者认为本项目对您的研究有帮助,请引用本项目。

@misc{huang2023lawyer,

title={Lawyer LLaMA Technical Report},

author={Quzhe Huang and Mingxu Tao and Chen Zhang and Zhenwei An and Cong Jiang and Zhibin Chen and Zirui Wu and Yansong Feng},

year={2023},

eprint={2305.15062},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@misc{Lawyer-LLama,

title={Lawyer Llama},

author={Quzhe Huang and Mingxu Tao and Chen Zhang and Zhenwei An and Cong Jiang and Zhibin Chen and Zirui Wu and Yansong Feng},

year={2023},

publisher={GitHub},

journal={GitHub repository},

howpublished={\url{https://github.com/AndrewZhe/lawyer-llama}},

}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for lawyer-llama

Similar Open Source Tools

lawyer-llama

Lawyer LLaMA is a large language model that has been specifically trained on legal data, including Chinese laws, regulations, and case documents. It has been fine-tuned on a large dataset of legal questions and answers, enabling it to understand and respond to legal inquiries in a comprehensive and informative manner. Lawyer LLaMA is designed to assist legal professionals and individuals with a variety of law-related tasks, including: * **Legal research:** Quickly and efficiently search through vast amounts of legal information to find relevant laws, regulations, and case precedents. * **Legal analysis:** Analyze legal issues, identify potential legal risks, and provide insights on how to proceed. * **Document drafting:** Draft legal documents, such as contracts, pleadings, and legal opinions, with accuracy and precision. * **Legal advice:** Provide general legal advice and guidance on a wide range of legal matters, helping users understand their rights and options. Lawyer LLaMA is a powerful tool that can significantly enhance the efficiency and effectiveness of legal research, analysis, and decision-making. It is an invaluable resource for lawyers, paralegals, law students, and anyone else who needs to navigate the complexities of the legal system.

HaE

HaE is a framework project in the field of network security (data security) that combines artificial intelligence (AI) large models to achieve highlighting and information extraction of HTTP messages (including WebSocket). It aims to reduce testing time, focus on valuable and meaningful messages, and improve vulnerability discovery efficiency. The project provides a clear and visual interface design, simple interface interaction, and centralized data panel for querying and extracting information. It also features built-in color upgrade algorithm, one-click export/import of data, and integration of AI large models API for optimized data processing.

UltraRAG

The UltraRAG framework is a researcher and developer-friendly RAG system solution that simplifies the process from data construction to model fine-tuning in domain adaptation. It introduces an automated knowledge adaptation technology system, supporting no-code programming, one-click synthesis and fine-tuning, multidimensional evaluation, and research-friendly exploration work integration. The architecture consists of Frontend, Service, and Backend components, offering flexibility in customization and optimization. Performance evaluation in the legal field shows improved results compared to VanillaRAG, with specific metrics provided. The repository is licensed under Apache-2.0 and encourages citation for support.

XianyuAutoAgent

Xianyu AutoAgent is an AI customer service robot system specifically designed for the Xianyu platform, providing 24/7 automated customer service, supporting multi-expert collaborative decision-making, intelligent bargaining, and context-aware conversations. The system includes intelligent conversation engine with features like context awareness and expert routing, business function matrix with modules like core engine, bargaining system, technical support, and operation monitoring. It requires Python 3.8+ and NodeJS 18+ for installation and operation. Users can customize prompts for different experts and contribute to the project through issues or pull requests.

motia

Motia is an AI agent framework designed for software engineers to create, test, and deploy production-ready AI agents quickly. It provides a code-first approach, allowing developers to write agent logic in familiar languages and visualize execution in real-time. With Motia, developers can focus on business logic rather than infrastructure, offering zero infrastructure headaches, multi-language support, composable steps, built-in observability, instant APIs, and full control over AI logic. Ideal for building sophisticated agents and intelligent automations, Motia's event-driven architecture and modular steps enable the creation of GenAI-powered workflows, decision-making systems, and data processing pipelines.

Open-dLLM

Open-dLLM is the most open release of a diffusion-based large language model, providing pretraining, evaluation, inference, and checkpoints. It introduces Open-dCoder, the code-generation variant of Open-dLLM. The repo offers a complete stack for diffusion LLMs, enabling users to go from raw data to training, checkpoints, evaluation, and inference in one place. It includes pretraining pipeline with open datasets, inference scripts for easy sampling and generation, evaluation suite with various metrics, weights and checkpoints on Hugging Face, and transparent configs for full reproducibility.

bailing

Bailing is an open-source voice assistant designed for natural conversations with users. It combines Automatic Speech Recognition (ASR), Voice Activity Detection (VAD), Large Language Model (LLM), and Text-to-Speech (TTS) technologies to provide a high-quality voice interaction experience similar to GPT-4o. Bailing aims to achieve GPT-4o-like conversation effects without the need for GPU, making it suitable for various edge devices and low-resource environments. The project features efficient open-source models, modular design allowing for module replacement and upgrades, support for memory function, tool integration for information retrieval and task execution via voice commands, and efficient task management with progress tracking and reminders.

ChuanhuChatGPT

Chuanhu Chat is a user-friendly web graphical interface that provides various additional features for ChatGPT and other language models. It supports GPT-4, file-based question answering, local deployment of language models, online search, agent assistant, and fine-tuning. The tool offers a range of functionalities including auto-solving questions, online searching with network support, knowledge base for quick reading, local deployment of language models, GPT 3.5 fine-tuning, and custom model integration. It also features system prompts for effective role-playing, basic conversation capabilities with options to regenerate or delete dialogues, conversation history management with auto-saving and search functionalities, and a visually appealing user experience with themes, dark mode, LaTeX rendering, and PWA application support.

go-interview-practice

The Go Interview Practice repository is a comprehensive platform designed to help users practice and master Go programming through interactive coding challenges. It offers an interactive web interface with a code editor, testing experience, and competitive leaderboard. Users can practice with challenges categorized by difficulty levels, contribute solutions, and track their progress. The repository also features AI-powered interview simulation, real-time code review, dynamic interview questions, and progressive hints. Users can showcase their achievements with auto-updating profile badges and contribute to the project by submitting solutions or adding new challenges.

Video-ChatGPT

Video-ChatGPT is a video conversation model that aims to generate meaningful conversations about videos by combining large language models with a pretrained visual encoder adapted for spatiotemporal video representation. It introduces high-quality video-instruction pairs, a quantitative evaluation framework for video conversation models, and a unique multimodal capability for video understanding and language generation. The tool is designed to excel in tasks related to video reasoning, creativity, spatial and temporal understanding, and action recognition.

langtrace

Langtrace is an open source observability software that lets you capture, debug, and analyze traces and metrics from all your applications that leverage LLM APIs, Vector Databases, and LLM-based Frameworks. It supports Open Telemetry Standards (OTEL), and the traces generated adhere to these standards. Langtrace offers both a managed SaaS version (Langtrace Cloud) and a self-hosted option. The SDKs for both Typescript/Javascript and Python are available, making it easy to integrate Langtrace into your applications. Langtrace automatically captures traces from various vendors, including OpenAI, Anthropic, Azure OpenAI, Langchain, LlamaIndex, Pinecone, and ChromaDB.

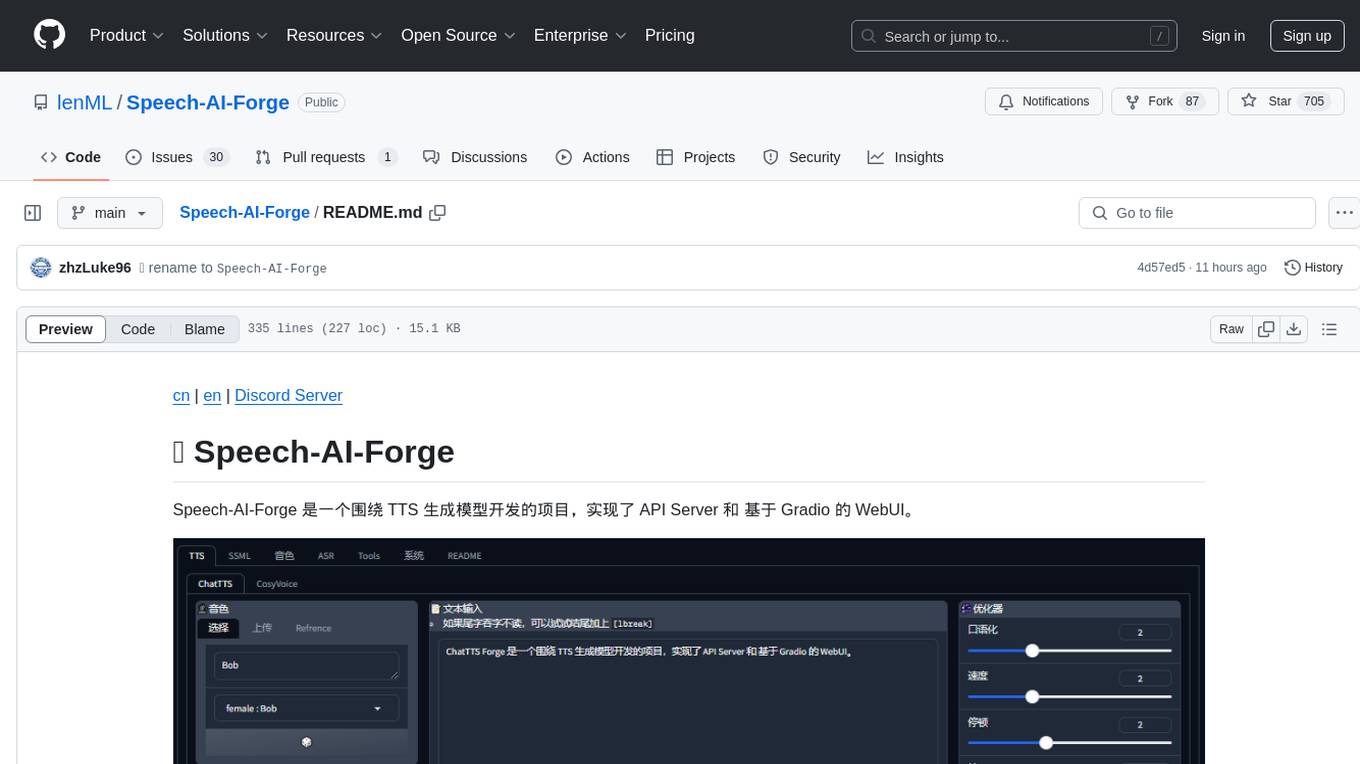

Speech-AI-Forge

Speech-AI-Forge is a project developed around TTS generation models, implementing an API Server and a WebUI based on Gradio. The project offers various ways to experience and deploy Speech-AI-Forge, including online experience on HuggingFace Spaces, one-click launch on Colab, container deployment with Docker, and local deployment. The WebUI features include TTS model functionality, speaker switch for changing voices, style control, long text support with automatic text segmentation, refiner for ChatTTS native text refinement, various tools for voice control and enhancement, support for multiple TTS models, SSML synthesis control, podcast creation tools, voice creation, voice testing, ASR tools, and post-processing tools. The API Server can be launched separately for higher API throughput. The project roadmap includes support for various TTS models, ASR models, voice clone models, and enhancer models. Model downloads can be manually initiated using provided scripts. The project aims to provide inference services and may include training-related functionalities in the future.

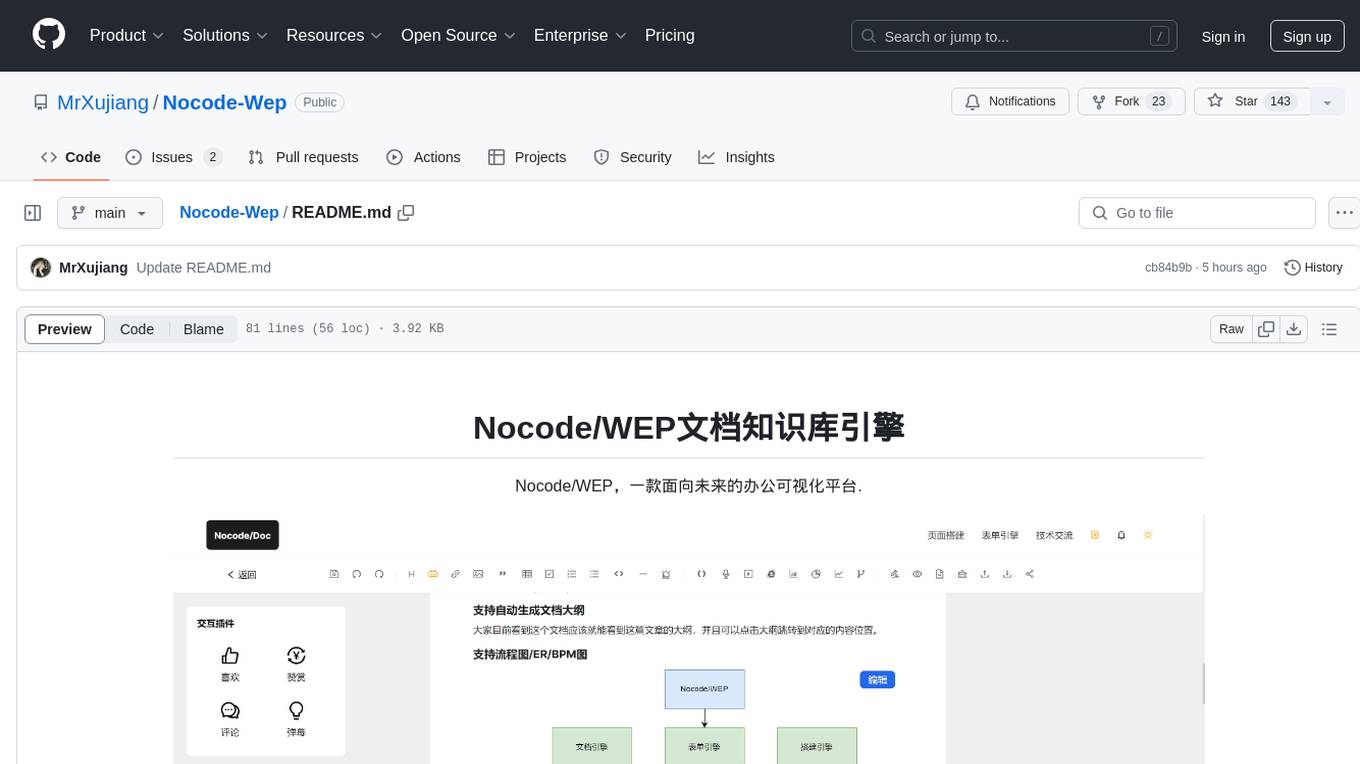

Nocode-Wep

Nocode/WEP is a forward-looking office visualization platform that includes modules for document building, web application creation, presentation design, and AI capabilities for office scenarios. It supports features such as configuring bullet comments, global article comments, multimedia content, custom drawing boards, flowchart editor, form designer, keyword annotations, article statistics, custom appreciation settings, JSON import/export, content block copying, and unlimited hierarchical directories. The platform is compatible with major browsers and aims to deliver content value, iterate products, share technology, and promote open-source collaboration.

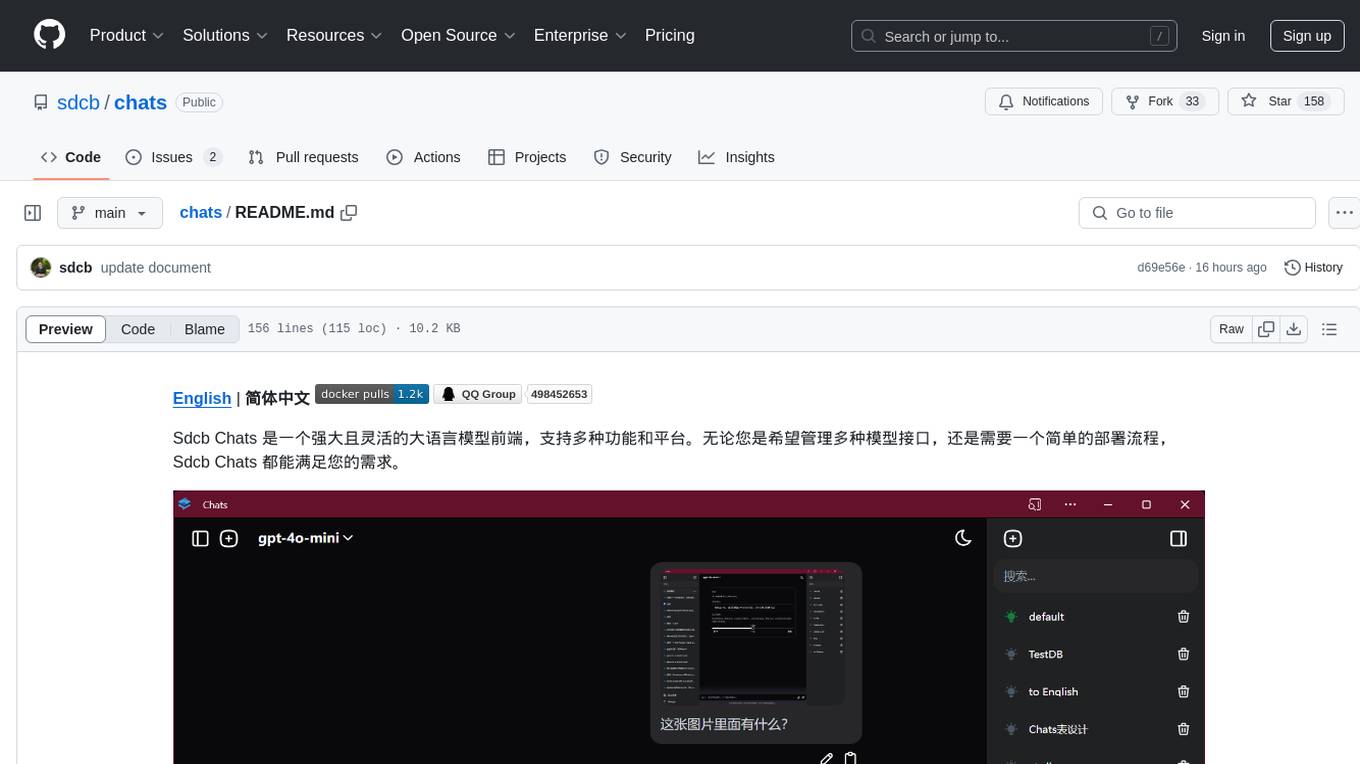

chats

Sdcb Chats is a powerful and flexible frontend for large language models, supporting multiple functions and platforms. Whether you want to manage multiple model interfaces or need a simple deployment process, Sdcb Chats can meet your needs. It supports dynamic management of multiple large language model interfaces, integrates visual models to enhance user interaction experience, provides fine-grained user permission settings for security, real-time tracking and management of user account balances, easy addition, deletion, and configuration of models, transparently forwards user chat requests based on the OpenAI protocol, supports multiple databases including SQLite, SQL Server, and PostgreSQL, compatible with various file services such as local files, AWS S3, Minio, Aliyun OSS, Azure Blob Storage, and supports multiple login methods including Keycloak SSO and phone SMS verification.

general

General is a DART & Flutter library created by AZKADEV to speed up development on various platforms and CLI easily. It allows access to features such as camera, fingerprint, SMS, and MMS. The library is designed for Dart language and provides functionalities for app background, text to speech, speech to text, and more.

agentica

Agentica is a human-centric framework for building large language model agents. It provides functionalities for planning, memory management, tool usage, and supports features like reflection, planning and execution, RAG, multi-agent, multi-role, and workflow. The tool allows users to quickly code and orchestrate agents, customize prompts, and make API calls to various services. It supports API calls to OpenAI, Azure, Deepseek, Moonshot, Claude, Ollama, and Together. Agentica aims to simplify the process of building AI agents by providing a user-friendly interface and a range of functionalities for agent development.

For similar tasks

lawyer-llama

Lawyer LLaMA is a large language model that has been specifically trained on legal data, including Chinese laws, regulations, and case documents. It has been fine-tuned on a large dataset of legal questions and answers, enabling it to understand and respond to legal inquiries in a comprehensive and informative manner. Lawyer LLaMA is designed to assist legal professionals and individuals with a variety of law-related tasks, including: * **Legal research:** Quickly and efficiently search through vast amounts of legal information to find relevant laws, regulations, and case precedents. * **Legal analysis:** Analyze legal issues, identify potential legal risks, and provide insights on how to proceed. * **Document drafting:** Draft legal documents, such as contracts, pleadings, and legal opinions, with accuracy and precision. * **Legal advice:** Provide general legal advice and guidance on a wide range of legal matters, helping users understand their rights and options. Lawyer LLaMA is a powerful tool that can significantly enhance the efficiency and effectiveness of legal research, analysis, and decision-making. It is an invaluable resource for lawyers, paralegals, law students, and anyone else who needs to navigate the complexities of the legal system.

ChatLaw

ChatLaw is an open-source legal large language model tailored for Chinese legal scenarios. It aims to combine LLM and knowledge bases to provide solutions for legal scenarios. The models include ChatLaw-13B and ChatLaw-33B, trained on various legal texts to construct dialogue data. The project focuses on improving logical reasoning abilities and plans to train models with parameters exceeding 30B for better performance. The dataset consists of forum posts, news, legal texts, judicial interpretations, legal consultations, exam questions, and court judgments, cleaned and enhanced to create dialogue data. The tool is designed to assist in legal tasks requiring complex logical reasoning, with a focus on accuracy and reliability.

For similar jobs

lawyer-llama

Lawyer LLaMA is a large language model that has been specifically trained on legal data, including Chinese laws, regulations, and case documents. It has been fine-tuned on a large dataset of legal questions and answers, enabling it to understand and respond to legal inquiries in a comprehensive and informative manner. Lawyer LLaMA is designed to assist legal professionals and individuals with a variety of law-related tasks, including: * **Legal research:** Quickly and efficiently search through vast amounts of legal information to find relevant laws, regulations, and case precedents. * **Legal analysis:** Analyze legal issues, identify potential legal risks, and provide insights on how to proceed. * **Document drafting:** Draft legal documents, such as contracts, pleadings, and legal opinions, with accuracy and precision. * **Legal advice:** Provide general legal advice and guidance on a wide range of legal matters, helping users understand their rights and options. Lawyer LLaMA is a powerful tool that can significantly enhance the efficiency and effectiveness of legal research, analysis, and decision-making. It is an invaluable resource for lawyers, paralegals, law students, and anyone else who needs to navigate the complexities of the legal system.

DISC-LawLLM

DISC-LawLLM is a legal domain large model that aims to provide professional, intelligent, and comprehensive **legal services** to users. It is developed and open-sourced by the Data Intelligence and Social Computing Lab (Fudan-DISC) at Fudan University.

ChatLaw

ChatLaw is an open-source legal large language model tailored for Chinese legal scenarios. It aims to combine LLM and knowledge bases to provide solutions for legal scenarios. The models include ChatLaw-13B and ChatLaw-33B, trained on various legal texts to construct dialogue data. The project focuses on improving logical reasoning abilities and plans to train models with parameters exceeding 30B for better performance. The dataset consists of forum posts, news, legal texts, judicial interpretations, legal consultations, exam questions, and court judgments, cleaned and enhanced to create dialogue data. The tool is designed to assist in legal tasks requiring complex logical reasoning, with a focus on accuracy and reliability.

lawglance

LawGlance is an AI-powered legal assistant that aims to bridge the gap between people and legal access. It is a free, open-source initiative designed to provide quick and accurate legal support tailored to individual needs. The project covers various laws, with plans for international expansion in the future. LawGlance utilizes AI-powered Retriever-Augmented Generation (RAG) to deliver legal guidance accessible to both laypersons and professionals. The tool is developed with support from mentors and experts at Data Science Academy and Curvelogics.

Free-LLM-Collection

Free-LLM-Collection is a curated list of free resources for mastering the Legal Language Model (LLM) technology. It includes datasets, research papers, tutorials, and tools to help individuals learn and work with LLM models. The repository aims to provide a comprehensive collection of materials to support researchers, developers, and enthusiasts interested in exploring and leveraging LLM technology for various applications in the legal domain.

LLM-and-Law

This repository is dedicated to summarizing papers related to large language models with the field of law. It includes applications of large language models in legal tasks, legal agents, legal problems of large language models, data resources for large language models in law, law LLMs, and evaluation of large language models in the legal domain.

AutoPatent

AutoPatent is a multi-agent framework designed for automatic patent generation. It challenges large language models to generate full-length patents based on initial drafts. The framework leverages planner, writer, and examiner agents along with PGTree and RRAG to craft lengthy, intricate, and high-quality patent documents. It introduces a new metric, IRR (Inverse Repetition Rate), to measure sentence repetition within patents. The tool aims to streamline the patent generation process by automating the creation of detailed and specialized patent documents.

OpenContracts

OpenContracts is an Apache-2 licensed enterprise document analytics tool that supports multiple formats, including PDF and txt-based formats. It features multiple document ingestion pipelines with a pluggable architecture for easy format and ingestion engine support. Users can create custom document analytics tools with beautiful result displays, support mass document data extraction with a LlamaIndex wrapper, and manage document collections, layout parsing, automatic vector embeddings, and human annotation. The tool also offers pluggable parsing pipelines, human annotation interface, LlamaIndex integration, data extraction capabilities, and custom data extract pipelines for bulk document querying.