llmstxt-generator

None

Stars: 284

llms.txt Generator is a tool designed for LLM (Legal Language Model) training and inference. It crawls websites to combine content into consolidated text files, offering both standard and full versions. Users can access the tool through a web interface or API without requiring an API key. Powered by Firecrawl for web crawling and GPT-4-mini for text processing.

README:

Generate consolidated text files from websites for LLM training and inference. Powered by @firecrawl_dev for web crawling and GPT-4-mini for text processing.

- Crawls websites and combines content into a single text file

- Generates both standard (

llms.txt) and full (llms-full.txt) versions - Web interface and API access available

- No API key required for basic usage

Visit llmstxt.firecrawl.dev to generate files through the browser.

GET https://llmstxt.firecrawl.dev/[YOUR_URL_HERE]

Note: Processing may take several minutes due to crawling and LLM operations.

Create a .env file with the following variables:

FIRECRAWL_API_KEY=

SUPABASE_URL=

SUPABASE_KEY=

OPENAI_API_KEY=

npm install

npm run devFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for llmstxt-generator

Similar Open Source Tools

llmstxt-generator

llms.txt Generator is a tool designed for LLM (Legal Language Model) training and inference. It crawls websites to combine content into consolidated text files, offering both standard and full versions. Users can access the tool through a web interface or API without requiring an API key. Powered by Firecrawl for web crawling and GPT-4-mini for text processing.

OmniSteward

OmniSteward is an AI-powered steward system based on large language models that can interact with users through voice or text to help control smart home devices and computer programs. It supports multi-turn dialogue, tool calling for complex tasks, multiple LLM models, voice recognition, smart home control, computer program management, online information retrieval, command line operations, and file management. The system is highly extensible, allowing users to customize and share their own tools.

gemini_multipdf_chat

Gemini PDF Chatbot is a Streamlit-based application that allows users to chat with a conversational AI model trained on PDF documents. The chatbot extracts information from uploaded PDF files and answers user questions based on the provided context. It features PDF upload, text extraction, conversational AI using the Gemini model, and a chat interface. Users can deploy the application locally or to the cloud, and the project structure includes main application script, environment variable file, requirements, and documentation. Dependencies include PyPDF2, langchain, Streamlit, google.generativeai, and dotenv.

mdserve

Markdown preview server for AI coding agents. mdserve is a tool that allows AI agents to write markdown and see it rendered live in the browser. It features zero configuration, single binary installation, instant live reload via WebSocket, ephemeral sessions, and agent-friendly content support. It is not a documentation site generator, static site server, or general-purpose markdown authoring tool. mdserve is designed for AI coding agents to produce content like tables, diagrams, and code blocks.

DeepBI

DeepBI is an AI-native data analysis platform that leverages the power of large language models to explore, query, visualize, and share data from any data source. Users can use DeepBI to gain data insight and make data-driven decisions.

verbis

Verbis AI is a secure and fully local AI assistant for MacOS that indexes data from various SaaS applications securely on the user's system. It provides a single interface powered by GenAI models to query and manage information. Users can connect Verbis to apps like Google Drive, Outlook, Gmail, and Slack, and use it as a chatbot to search across their data without data leaving their device. The tool is powered by Ollama and Weaviate, utilizing models like Mistral 7B, ms-marco-MiniLM-L-12-v2, and nomic-embed-text. Verbis AI requires Apple Silicon Mac (m1+) and has minimal system resource utilization requirements.

next-ai-draw-io

Next AI Draw.io is a next.js web application that integrates AI capabilities with draw.io diagrams. It allows users to create, modify, and enhance diagrams through natural language commands and AI-assisted visualization. Features include LLM-Powered Diagram Creation, Image-Based Diagram Replication, Diagram History, Interactive Chat Interface, and Smart Editing. The application uses Next.js for frontend framework, @ai-sdk/react for chat interface and AI interactions, and react-drawio for diagram representation and manipulation. Diagrams are represented as XML that can be rendered in draw.io, with AI processing commands to generate or modify the XML accordingly.

OllamaKit

OllamaKit is a Swift library designed to simplify interactions with the Ollama API. It handles network communication and data processing, offering an efficient interface for Swift applications to communicate with the Ollama API. The library is optimized for use within Ollamac, a macOS app for interacting with Ollama models.

sosumi.ai

sosumi.ai provides Apple Developer documentation in an AI-readable format by converting JavaScript-rendered pages into Markdown. It offers an HTTP API to access Apple docs, supports external Swift-DocC sites, integrates with MCP server, and provides tools like searchAppleDocumentation and fetchAppleDocumentation. The project can be self-hosted and is currently hosted on Cloudflare Workers. It is built with Hono and supports various runtimes. The application is designed for accessibility-first, on-demand rendering of Apple Developer pages to Markdown.

aisdk-prompt-optimizer

AISDK Prompt Optimizer is an open-source tool designed to transform AI interactions by optimizing prompts. It utilizes the GEPA reflective optimizer to evolve textual components of AI systems, providing features such as reflective prompt mutation, rich textual feedback, and Pareto-based selection. Users can teach their AI desired behaviors, collect ideal samples, run optimization to generate optimized prompts, and deploy the results in their applications. The tool leverages advanced optimization algorithms to guide AI through interactive conversations and refine prompt candidates for improved performance.

repo2txt

The GitHub Repo to Text Converter is a web-based tool that converts GitHub repository contents into a formatted text file for Large Language Model (LLM) prompts. It streamlines the process of transforming repository data into LLM-friendly input. The tool displays the GitHub repository structure, allows users to select files/directories to include, generates a formatted text file, enables copying text to clipboard, supports downloading generated text, and works with private repositories. It ensures data security by running entirely in the browser without server-side processing.

AppFlowy-Cloud

AppFlowy Cloud is a secure user authentication, file storage, and real-time WebSocket communication tool written in Rust. It is part of the AppFlowy ecosystem, providing an efficient and collaborative user experience. The tool offers deployment guides, development setup with Rust and Docker, debugging tips for components like PostgreSQL, Redis, Minio, and Portainer, and guidelines for contributing to the project.

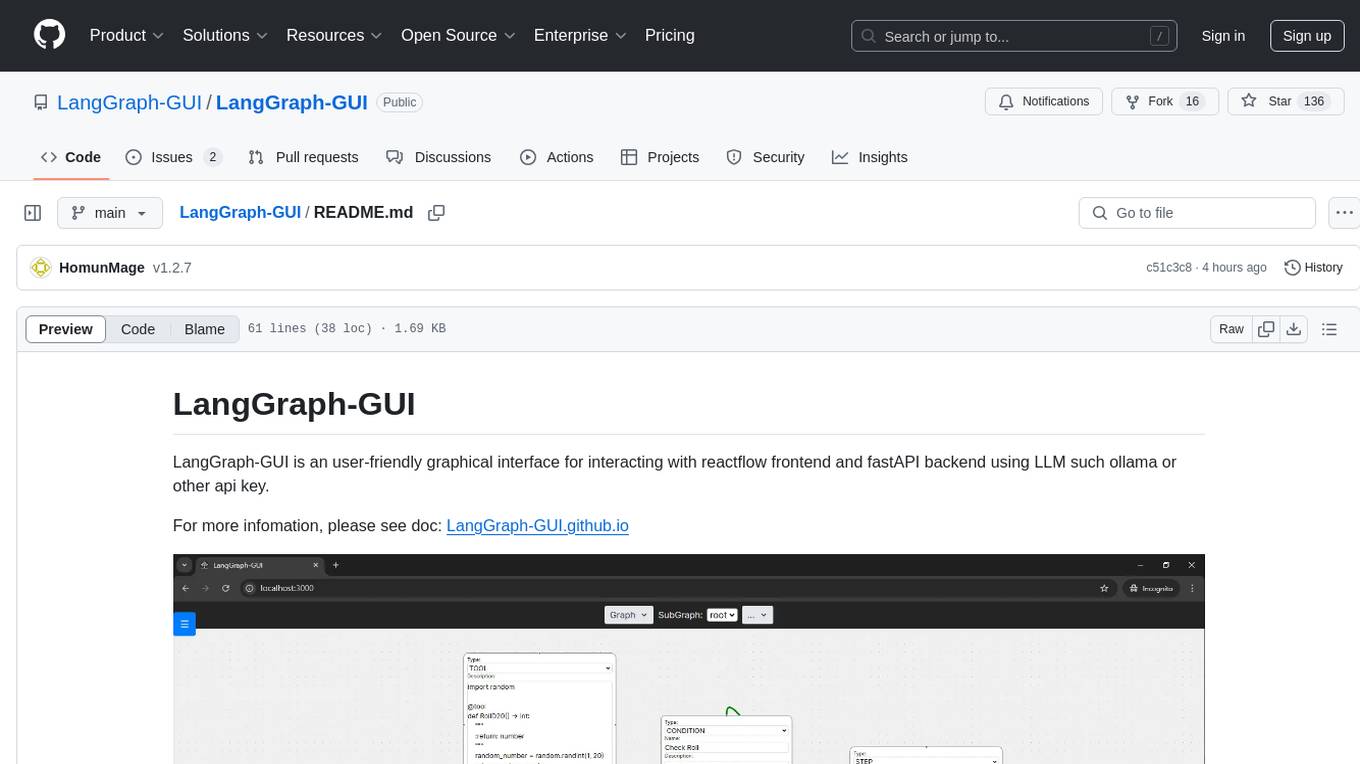

LangGraph-GUI

LangGraph-GUI is a user-friendly graphical interface for interacting with reactflow frontend and fastAPI backend using LLM such as ollama or other API key. It provides a convenient way to work with language models and APIs, offering a seamless experience for users to visualize and interact with the data flow. The tool simplifies the process of setting up the environment and accessing the application, making it easier for users to leverage the power of language models in their projects.

Roo-Code-Docs

Roo Code Docs is a website built using Docusaurus, a modern static website generator. It serves as a documentation platform for Roo Code, accessible at https://docs.roocode.com. The website provides detailed information and guides for users to navigate and utilize Roo Code effectively. With a clean and user-friendly interface, it offers a seamless experience for developers and users seeking information about Roo Code.

document-ai-samples

The Google Cloud Document AI Samples repository contains code samples and Community Samples demonstrating how to analyze, classify, and search documents using Google Cloud Document AI. It includes various projects showcasing different functionalities such as integrating with Google Drive, processing documents using Python, content moderation with Dialogflow CX, fraud detection, language extraction, paper summarization, tax processing pipeline, and more. The repository also provides access to test document files stored in a publicly-accessible Google Cloud Storage Bucket. Additionally, there are codelabs available for optical character recognition (OCR), form parsing, specialized processors, and managing Document AI processors. Community samples, like the PDF Annotator Sample, are also included. Contributions are welcome, and users can seek help or report issues through the repository's issues page. Please note that this repository is not an officially supported Google product and is intended for demonstrative purposes only.

ai-chatbot-svelte

SvelteKit AI Chatbot is an open-source template built with SvelteKit and the AI SDK by Vercel. It provides a unified API for generating text, structured objects, and tool calls with LLMs. The template includes hooks for building dynamic chat and generative user interfaces, supports various model providers, and offers styling with Tailwind CSS. Data persistence is ensured with Vercel Postgres and Blob for saving chat history and user data. Users can easily deploy their own version of the chatbot to Vercel with one click and run it locally using the provided environment variables.

For similar tasks

llmstxt-generator

llms.txt Generator is a tool designed for LLM (Legal Language Model) training and inference. It crawls websites to combine content into consolidated text files, offering both standard and full versions. Users can access the tool through a web interface or API without requiring an API key. Powered by Firecrawl for web crawling and GPT-4-mini for text processing.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in Typescript and Javascript.

RTL-Coder

RTL-Coder is a tool designed to outperform GPT-3.5 in RTL code generation by providing a fully open-source dataset and a lightweight solution. It targets Verilog code generation and offers an automated flow to generate a large labeled dataset with over 27,000 diverse Verilog design problems and answers. The tool addresses the data availability challenge in IC design-related tasks and can be used for various applications beyond LLMs. The tool includes four RTL code generation models available on the HuggingFace platform, each with specific features and performance characteristics. Additionally, RTL-Coder introduces a new LLM training scheme based on code quality feedback to further enhance model performance and reduce GPU memory consumption.

AI-System-School

AI System School is a curated list of research in machine learning systems, focusing on ML/DL infra, LLM infra, domain-specific infra, ML/LLM conferences, and general resources. It provides resources such as data processing, training systems, video systems, autoML systems, and more. The repository aims to help users navigate the landscape of AI systems and machine learning infrastructure, offering insights into conferences, surveys, books, videos, courses, and blogs related to the field.

long-context-attention

Long-Context-Attention (YunChang) is a unified sequence parallel approach that combines the strengths of DeepSpeed-Ulysses-Attention and Ring-Attention to provide a versatile and high-performance solution for long context LLM model training and inference. It addresses the limitations of both methods by offering no limitation on the number of heads, compatibility with advanced parallel strategies, and enhanced performance benchmarks. The tool is verified in Megatron-LM and offers best practices for 4D parallelism, making it suitable for various attention mechanisms and parallel computing advancements.

Graph-Reasoning-LLM

This repository, GraphWiz, focuses on developing an instruction-following Language Model (LLM) for solving graph problems. It includes GraphWiz LLMs with strong graph problem-solving abilities, GraphInstruct dataset with over 72.5k training samples across nine graph problem tasks, and models like GPT-4 and Mistral-7B for comparison. The project aims to map textual descriptions of graphs and structures to solve various graph problems explicitly in natural language.

effective_llm_alignment

This is a super customizable, concise, user-friendly, and efficient toolkit for training and aligning LLMs. It provides support for various methods such as SFT, Distillation, DPO, ORPO, CPO, SimPO, SMPO, Non-pair Reward Modeling, Special prompts basket format, Rejection Sampling, Scoring using RM, Effective FAISS Map-Reduce Deduplication, LLM scoring using RM, NER, CLIP, Classification, and STS. The toolkit offers key libraries like PyTorch, Transformers, TRL, Accelerate, FSDP, DeepSpeed, and tools for result logging with wandb or clearml. It allows mixing datasets, generation and logging in wandb/clearml, vLLM batched generation, and aligns models using the SMPO method.

self-learn-llms

Self Learn LLMs is a repository containing resources for self-learning about Large Language Models. It includes theoretical and practical hands-on resources to facilitate learning. The repository aims to provide a clear roadmap with milestones for proper understanding of LLMs. The owner plans to refactor the repository to remove irrelevant content, organize model zoo better, and enhance the learning experience by adding contributors and hosting notes, tutorials, and open discussions.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.