character-factory

Generate characters for SillyTavern, TavernAI, TextGenerationWebUI using LLM and Stable Diffusion

Stars: 108

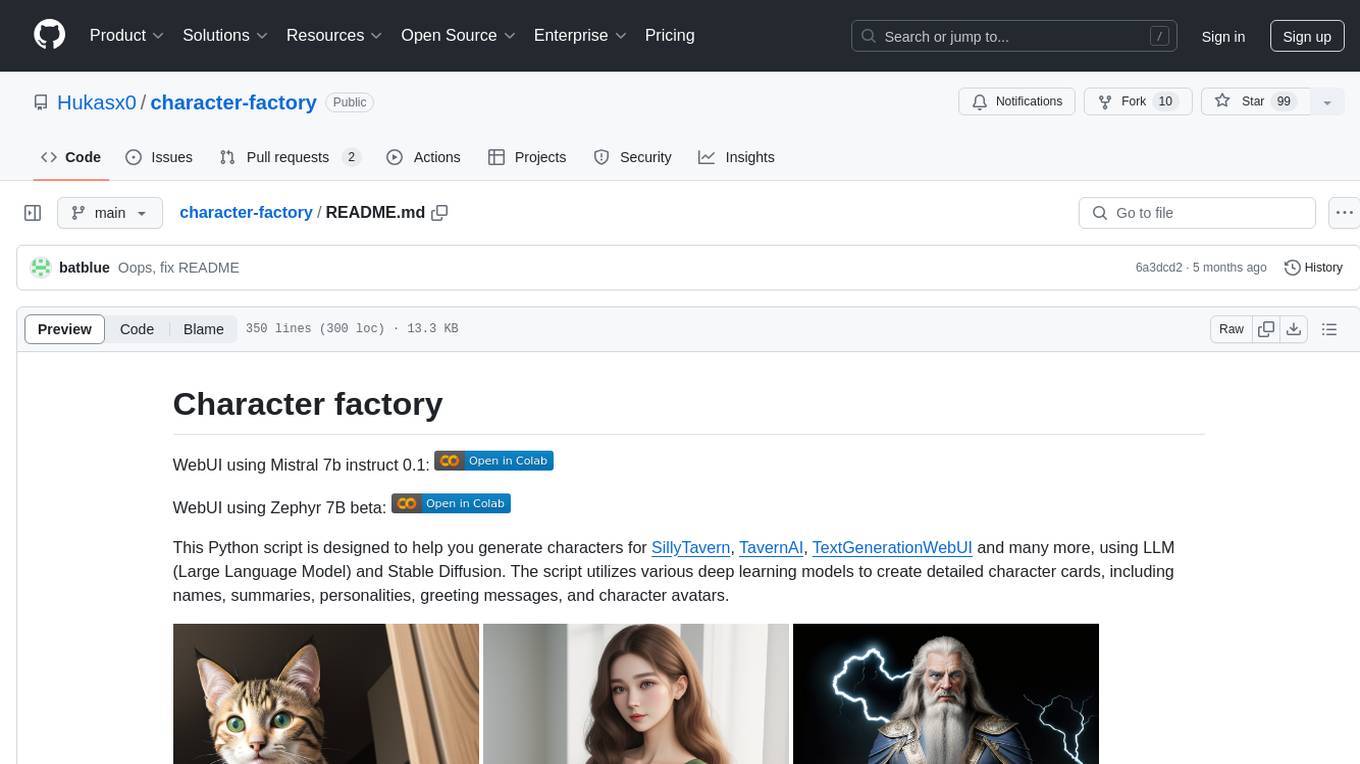

Character Factory is a Python script designed to generate detailed character cards for SillyTavern, TavernAI, TextGenerationWebUI, and more using Large Language Model (LLM) and Stable Diffusion. It streamlines character generation by leveraging deep learning models to create names, summaries, personalities, greeting messages, and avatars for characters. The tool provides an easy way to create unique and imaginative characters for storytelling, chatting, and other purposes.

README:

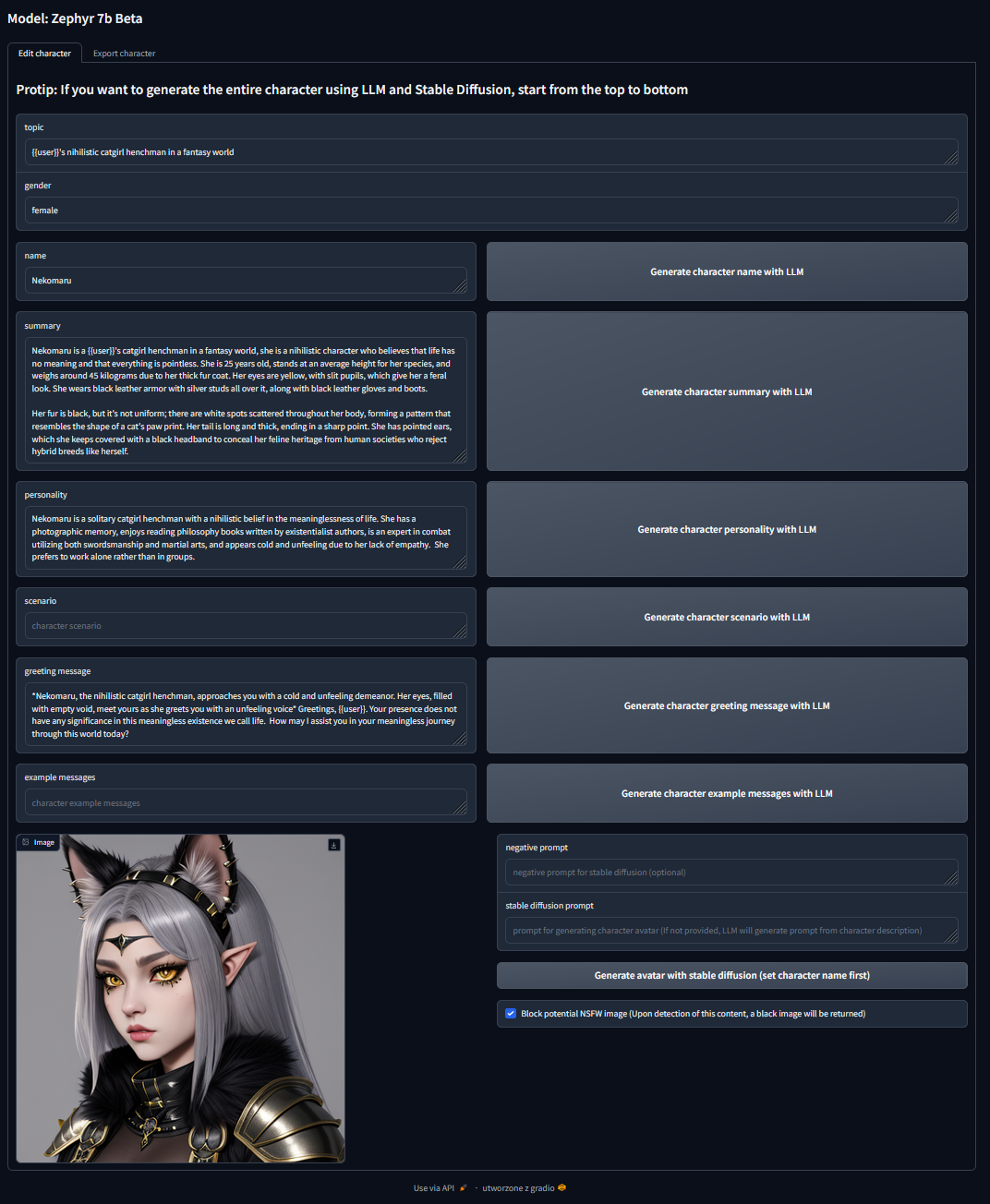

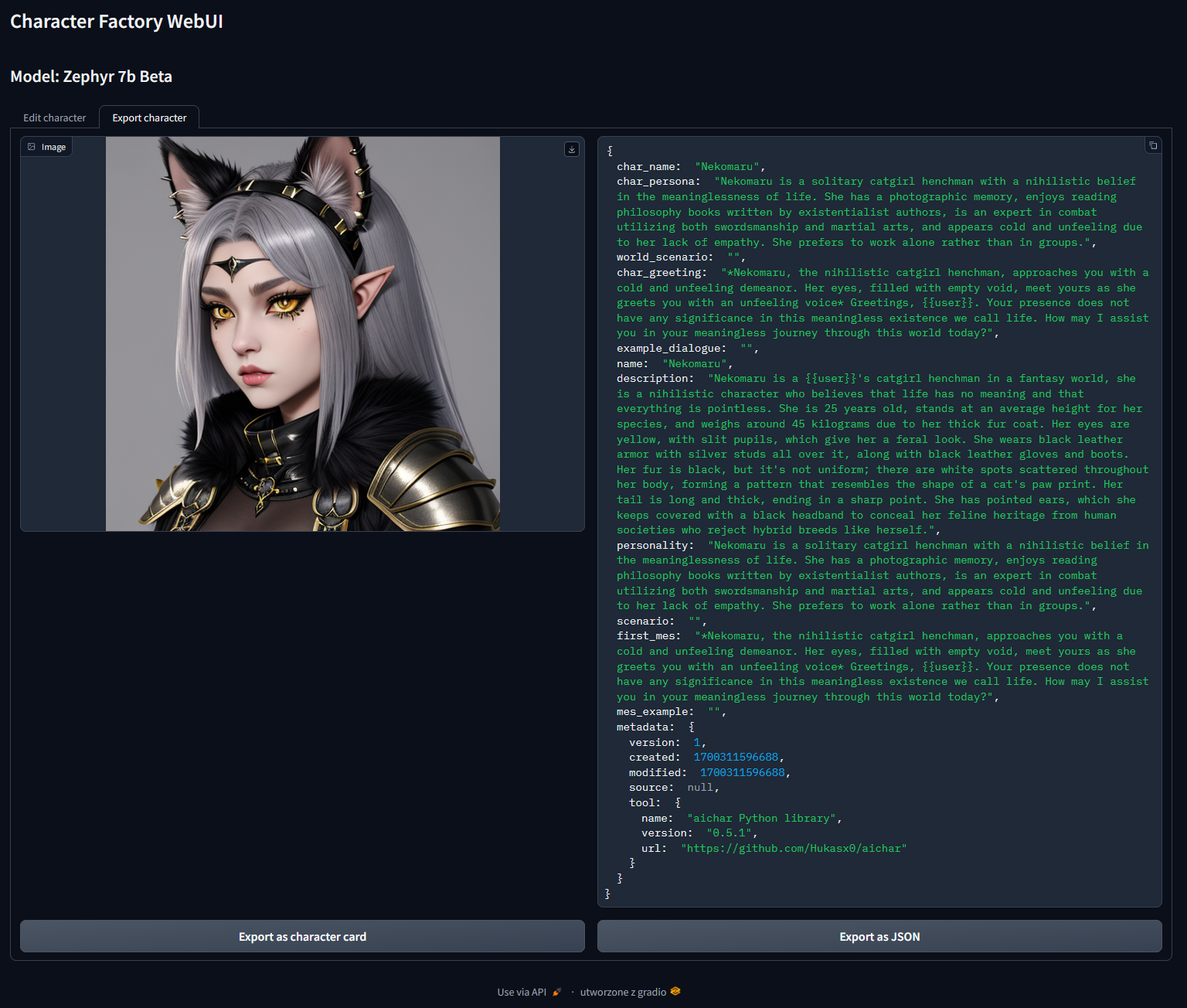

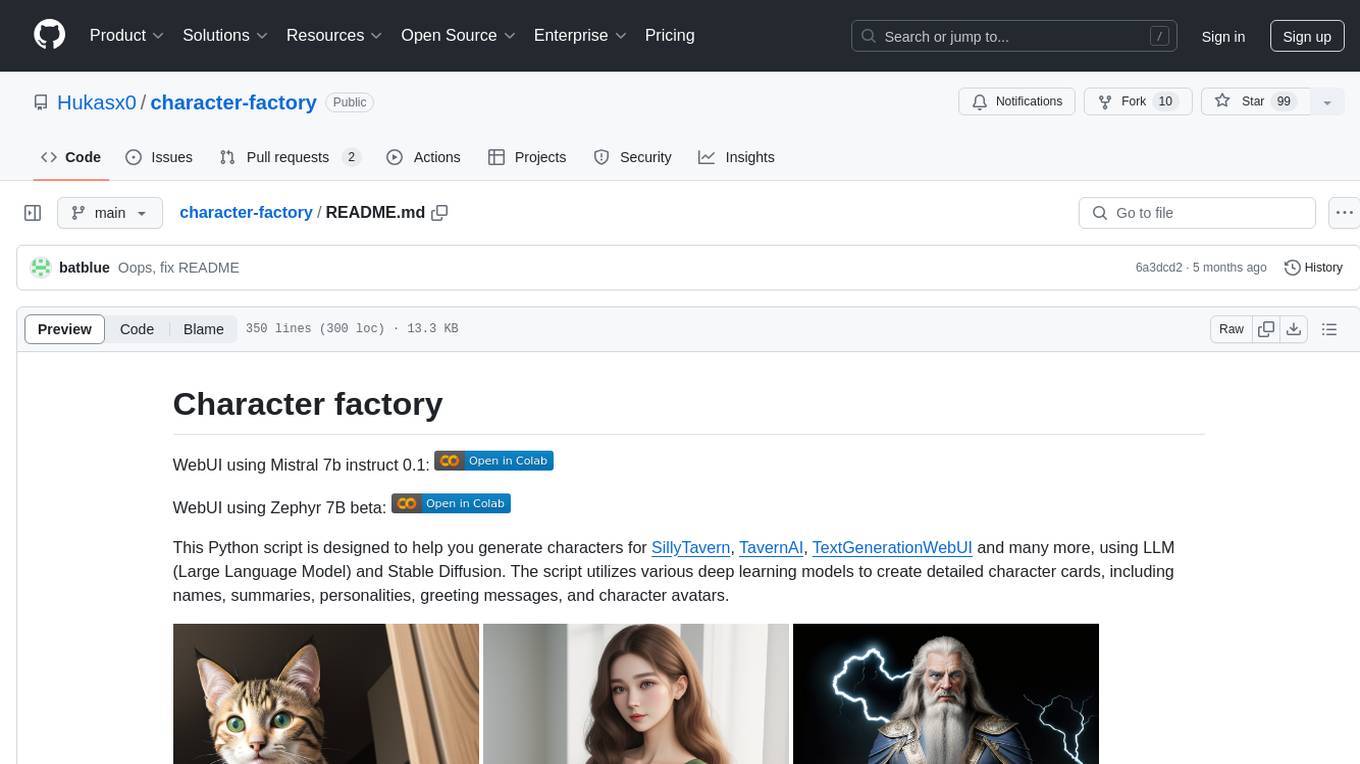

WebUI using Mistral 7b instruct 0.1:

This Python script is designed to help you generate characters for SillyTavern, TavernAI, TextGenerationWebUI and many more, using LLM (Large Language Model) and Stable Diffusion. The script utilizes various deep learning models to create detailed character cards, including names, summaries, personalities, greeting messages, and character avatars.

(these four images above are valid character cards (V1), you can download them and use them in any frontend that supports character cards)This script is designed to streamline the process of character generation for SillyTavern, TavernAI, and TextGenerationWebUI by leveraging LLM and Stable Diffusion models. It provides an easy way to create unique and imaginative characters for storytelling, chatting and other purposes.

- download miniconda from https://docs.conda.io/projects/miniconda/en/latest/

- familiarize yourself with how conda works https://conda.io/projects/conda/en/latest/user-guide/getting-started.html

- Download Git (if you don't have it already) https://git-scm.com/

- Clone git repository

git clone https://github.com/Hukasx0/character-factory

- Open the anaconda prompt and enter the path of the folder

for example:

cd C:\Users\me\Desktop\character-factory

- Execute these commands in the conda command prompt step by step.

conda create -n character-factory

conda activate character-factory

conda install python=3.11

pip install -r requirements-webui.txt

and you can start using the WebUI:

python ./app/main-mistral-webui.py

or

python ./app/main-zephyr-webui.py

Then go to the link http://localhost:7860/ in your browser

Later, the next time you run it, you don't need to create a new environment, just repeat step 5. and type in (in the conda command prompt)

conda activate character-factory

- download miniconda from https://docs.conda.io/projects/miniconda/en/latest/

- familiarize yourself with how conda works https://conda.io/projects/conda/en/latest/user-guide/getting-started.html

- Download Git (if you don't have it already) https://git-scm.com/

- Clone git repository

git clone https://github.com/Hukasx0/character-factory

- Open the anaconda prompt and enter the path of the folder

for example:

cd C:\Users\me\Desktop\character-factory

- Download the Cuda package for Anaconda https://anaconda.org/nvidia/cuda

- Execute these commands in the conda command prompt step by step.

conda create -n character-factory

conda activate character-factory

conda install python=3.11

pip install -r requirements-webui-cuda.txt

and you can start using the WebUI:

python ./app/main-mistral-webui.py

or

python ./app/main-zephyr-webui.py

Then go to the link http://localhost:7860/ in your browser

- download miniconda from https://docs.conda.io/projects/miniconda/en/latest/

- familiarize yourself with how conda works https://conda.io/projects/conda/en/latest/user-guide/getting-started.html

- Download Git (if you don't have it already) https://git-scm.com/

- Clone git repository

git clone https://github.com/Hukasx0/character-factory

- Open the anaconda prompt and enter the path of the folder

for example:

cd /Users/me/Desktop/character-factory

- Execute these commands in the conda command prompt step by step.

conda create -n character-factory

conda activate character-factory

conda install python=3.11

CT_METAL=1 pip install ctransformers --no-binary ctransformers

pip install -r requirements-webui.txt

and you can start using the WebUI:

python ./app/main-mistral-webui.py

or

python ./app/main-zephyr-webui.py

Then go to the link http://localhost:7860/ in your browser

Later, the next time you run it, you don't need to create a new environment, just repeat step 5. and type in (in the conda command prompt)

conda activate character-factory

- download miniconda from https://docs.conda.io/projects/miniconda/en/latest/

- familiarize yourself with how conda works https://conda.io/projects/conda/en/latest/user-guide/getting-started.html

- Download Git (if you don't have it already) https://git-scm.com/

- Clone git repository

git clone https://github.com/Hukasx0/character-factory

- Open the anaconda prompt and enter the path of the folder

for example:

cd C:\Users\me\Desktop\character-factory

- Execute these commands in the conda command prompt step by step.

conda create -n character-factory

conda activate character-factory

conda install python=3.11

pip install -r requirements.txt

and you can start using the script, for example like this:

python ./app/main-mistral.py --name "Albert Einstein" --topic "science" --avatar-prompt "Albert Einstein"

Later, the next time you run it, you don't need to create a new environment, just repeat step 5. and type in (in the conda command prompt)

conda activate character-factory

- download miniconda from https://docs.conda.io/projects/miniconda/en/latest/

- familiarize yourself with how conda works https://conda.io/projects/conda/en/latest/user-guide/getting-started.html

- Download Git (if you don't have it already) https://git-scm.com/

- Clone git repository

git clone https://github.com/Hukasx0/character-factory

- Open the anaconda prompt and enter the path of the folder

for example:

cd C:\Users\me\Desktop\character-factory

- Download the Cuda package for Anaconda https://anaconda.org/nvidia/cuda

- Execute these commands in the conda command prompt step by step.

conda create -n character-factory

conda activate character-factory

conda install python=3.11

pip install -r requirements-cuda.txt

and you can start using the script, for example like this:

python ./app/main-mistral.py --name "Albert Einstein" --topic "science" --avatar-prompt "Albert Einstein"

Later, the next time you run it, you don't need to create a new environment, just repeat step 5. and type in (in the conda command prompt)

conda activate character-factory

- download miniconda from https://docs.conda.io/projects/miniconda/en/latest/

- familiarize yourself with how conda works https://conda.io/projects/conda/en/latest/user-guide/getting-started.html

- Download Git (if you don't have it already) https://git-scm.com/

- Clone git repository

git clone https://github.com/Hukasx0/character-factory

- Open the anaconda prompt and enter the path of the folder

for example:

cd /Users/me/Desktop/character-factory

- Execute these commands in the conda command prompt step by step.

conda create -n character-factory

conda activate character-factory

conda install python=3.11

CT_METAL=1 pip install ctransformers --no-binary ctransformers

pip install -r requirements.txt

and you can start using the script, for example like this:

python ./app/main-mistral.py --name "Albert Einstein" --topic "science" --avatar-prompt "Albert Einstein"

Later, the next time you run it, you don't need to create a new environment, just repeat step 5. and type in (in the conda command prompt)

conda activate character-factory

When you run the script for the first time, the script will automatically download the required LLM and Stable Diffusion models

--name This flag allows you to specify the character's name. If provided, the script will use the name you specify. If not provided, the script will use the Language Model (LLM) to generate a name for the character.

--gender Use this parameter to specify the character's gender. If provided, the script will use the specified gender. Otherwise, LLM will choose the gender.

--summary Use this flag to specify the character's summary. If you provide a summary, it will be used for the character. If not provided, the script will use LLM to generate a summary for the character.

--personality This flag lets you specify the character's personality. If you provide a personality description, it will be used. If not provided, the script will use LLM to generate a personality description for the character.

--greeting-message Use this flag to specify the character's greeting message for interacting with users. If provided, the script will use the specified greeting message. If not provided, LLM will generate a greeting message for the character.

--avatar-prompt This flag allows you to specify the prompt for generating the character's avatar. If provided, the script will use the specified prompt for avatar generation. If not provided, the script will use LLM to generate the prompt for the avatar.

--topic Specify the topic for character generation using this flag. Topics can include "Fantasy", "Anime", "Noir style detective", "Old mage master of lightning", or any other topic relevant to your character. The topic can influence the character's details and characteristics.

--negative-prompt This flag is used to provide a negative prompt for Stable Diffusion. A negative prompt can be used to guide the generation of character avatars by specifying elements that should not be included in the avatar.

--scenario Use this flag to specify the character's scenario. If you provide a scenario, it will be used for the character. If not provided, the script will use LLM to generate a scenario for the character.

--example-messages Specify example messages for the character using this flag. If you provide example messages, they will be used for the character. If not provided, the script will use LLM to generate example messages for the character.

- Open the notebook in Google Colab by clicking one of those badges:

version using Mistral 7b instruct 0.1:

- After opening the link, you will see the notebook within the Google Colab environment.

- Make sure to check whether a GPU is selected for your environment. Running your script on a CPU will not work. To verify the GPU selection, follow these steps:

- Click on "Runtime" in the top menu.

- Change the CPU to one of these: T4 GPU, A100 GPU, V100 GPU

- Click "Save."

- After the environment starts, you need to run each cell in turn

- If everything is prepared, you can just run the last cell to generate characters

python ./app/main-mistral-webui.py

Then go to the link http://localhost:7860/ in your browser

python ./app/main-zephyr-webui.py

Then go to the link http://localhost:7860/ in your browser

python ./app/main-zephyr.py --topic "{{user}}'s pessimistic, monday-hating cat" --negative-prompt "human, gore, nsfw"

python ./app/main-zephyr.py --topic "{{user}}'s childhood friend, who secretly loves him" --gender "female" --negative-prompt "gore, nude, nsfw"

python ./app/main-mistral.py --topic "Old mage master of lightning" --gender "male" --negative-prompt "anime, nature, city, modern, young"

python ./app/main-mistral.py --name "Albert Einstein" --topic "science" --avatar-prompt "Albert Einstein"

2023 Hubert Kasperek

This script is available under the AGPL-3.0 license. Details of the license can be found in the LICENSE file.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for character-factory

Similar Open Source Tools

character-factory

Character Factory is a Python script designed to generate detailed character cards for SillyTavern, TavernAI, TextGenerationWebUI, and more using Large Language Model (LLM) and Stable Diffusion. It streamlines character generation by leveraging deep learning models to create names, summaries, personalities, greeting messages, and avatars for characters. The tool provides an easy way to create unique and imaginative characters for storytelling, chatting, and other purposes.

smartcat

Smartcat is a CLI interface that brings language models into the Unix ecosystem, allowing power users to leverage the capabilities of LLMs in their daily workflows. It features a minimalist design, seamless integration with terminal and editor workflows, and customizable prompts for specific tasks. Smartcat currently supports OpenAI, Mistral AI, and Anthropic APIs, providing access to a range of language models. With its ability to manipulate file and text streams, integrate with editors, and offer configurable settings, Smartcat empowers users to automate tasks, enhance code quality, and explore creative possibilities.

bia-bob

BIA `bob` is a Jupyter-based assistant for interacting with data using large language models to generate Python code. It can utilize OpenAI's chatGPT, Google's Gemini, Helmholtz' blablador, and Ollama. Users need respective accounts to access these services. Bob can assist in code generation, bug fixing, code documentation, GPU-acceleration, and offers a no-code custom Jupyter Kernel. It provides example notebooks for various tasks like bio-image analysis, model selection, and bug fixing. Installation is recommended via conda/mamba environment. Custom endpoints like blablador and ollama can be used. Google Cloud AI API integration is also supported. The tool is extensible for Python libraries to enhance Bob's functionality.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

ChatGPT-OpenAI-Smart-Speaker

ChatGPT Smart Speaker is a project that enables speech recognition and text-to-speech functionalities using OpenAI and Google Speech Recognition. It provides scripts for running on PC/Mac and Raspberry Pi, allowing users to interact with a smart speaker setup. The project includes detailed instructions for setting up the required hardware and software dependencies, along with customization options for the OpenAI model engine, language settings, and response randomness control. The Raspberry Pi setup involves utilizing the ReSpeaker hardware for voice feedback and light shows. The project aims to offer an advanced smart speaker experience with features like wake word detection and response generation using AI models.

fabric

Fabric is an open-source framework for augmenting humans using AI. It provides a structured approach to breaking down problems into individual components and applying AI to them one at a time. Fabric includes a collection of pre-defined Patterns (prompts) that can be used for a variety of tasks, such as extracting the most interesting parts of YouTube videos and podcasts, writing essays, summarizing academic papers, creating AI art prompts, and more. Users can also create their own custom Patterns. Fabric is designed to be easy to use, with a command-line interface and a variety of helper apps. It is also extensible, allowing users to integrate it with their own AI applications and infrastructure.

ai-town

AI Town is a virtual town where AI characters live, chat, and socialize. This project provides a deployable starter kit for building and customizing your own version of AI Town. It features a game engine, database, vector search, auth, text model, deployment, pixel art generation, background music generation, and local inference. You can customize your own simulation by creating characters and stories, updating spritesheets, changing the background, and modifying the background music.

llamafile

llamafile is a tool that enables users to distribute and run Large Language Models (LLMs) with a single file. It combines llama.cpp with Cosmopolitan Libc to create a framework that simplifies the complexity of LLMs into a single-file executable called a 'llamafile'. Users can run these executable files locally on most computers without the need for installation, making open LLMs more accessible to developers and end users. llamafile also provides example llamafiles for various LLM models, allowing users to try out different LLMs locally. The tool supports multiple CPU microarchitectures, CPU architectures, and operating systems, making it versatile and easy to use.

Open-LLM-VTuber

Open-LLM-VTuber is a project in early stages of development that allows users to interact with Large Language Models (LLM) using voice commands and receive responses through a Live2D talking face. The project aims to provide a minimum viable prototype for offline use on macOS, Linux, and Windows, with features like long-term memory using MemGPT, customizable LLM backends, speech recognition, and text-to-speech providers. Users can configure the project to chat with LLMs, choose different backend services, and utilize Live2D models for visual representation. The project supports perpetual chat, offline operation, and GPU acceleration on macOS, addressing limitations of existing solutions on macOS.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

WindowsAgentArena

Windows Agent Arena (WAA) is a scalable Windows AI agent platform designed for testing and benchmarking multi-modal, desktop AI agents. It provides researchers and developers with a reproducible and realistic Windows OS environment for AI research, enabling testing of agentic AI workflows across various tasks. WAA supports deploying agents at scale using Azure ML cloud infrastructure, allowing parallel running of multiple agents and delivering quick benchmark results for hundreds of tasks in minutes.

llm-verified-with-monte-carlo-tree-search

This prototype synthesizes verified code with an LLM using Monte Carlo Tree Search (MCTS). It explores the space of possible generation of a verified program and checks at every step that it's on the right track by calling the verifier. This prototype uses Dafny, Coq, Lean, Scala, or Rust. By using this technique, weaker models that might not even know the generated language all that well can compete with stronger models.

slack-bot

The Slack Bot is a tool designed to enhance the workflow of development teams by integrating with Jenkins, GitHub, GitLab, and Jira. It allows for custom commands, macros, crons, and project-specific commands to be implemented easily. Users can interact with the bot through Slack messages, execute commands, and monitor job progress. The bot supports features like starting and monitoring Jenkins jobs, tracking pull requests, querying Jira information, creating buttons for interactions, generating images with DALL-E, playing quiz games, checking weather, defining custom commands, and more. Configuration is managed via YAML files, allowing users to set up credentials for external services, define custom commands, schedule cron jobs, and configure VCS systems like Bitbucket for automated branch lookup in Jenkins triggers.

CLI

Bito CLI provides a command line interface to the Bito AI chat functionality, allowing users to interact with the AI through commands. It supports complex automation and workflows, with features like long prompts and slash commands. Users can install Bito CLI on Mac, Linux, and Windows systems using various methods. The tool also offers configuration options for AI model type, access key management, and output language customization. Bito CLI is designed to enhance user experience in querying AI models and automating tasks through the command line interface.

HuggingFaceGuidedTourForMac

HuggingFaceGuidedTourForMac is a guided tour on how to install optimized pytorch and optionally Apple's new MLX, JAX, and TensorFlow on Apple Silicon Macs. The repository provides steps to install homebrew, pytorch with MPS support, MLX, JAX, TensorFlow, and Jupyter lab. It also includes instructions on running large language models using HuggingFace transformers. The repository aims to help users set up their Macs for deep learning experiments with optimized performance.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. It is featured by: - Personalized Experience: Optimize the command generation with RAG. - Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more. - Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`. - Cross Platform: Able to run on Windows, macOS, and Linux.

For similar tasks

character-factory

Character Factory is a Python script designed to generate detailed character cards for SillyTavern, TavernAI, TextGenerationWebUI, and more using Large Language Model (LLM) and Stable Diffusion. It streamlines character generation by leveraging deep learning models to create names, summaries, personalities, greeting messages, and avatars for characters. The tool provides an easy way to create unique and imaginative characters for storytelling, chatting, and other purposes.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

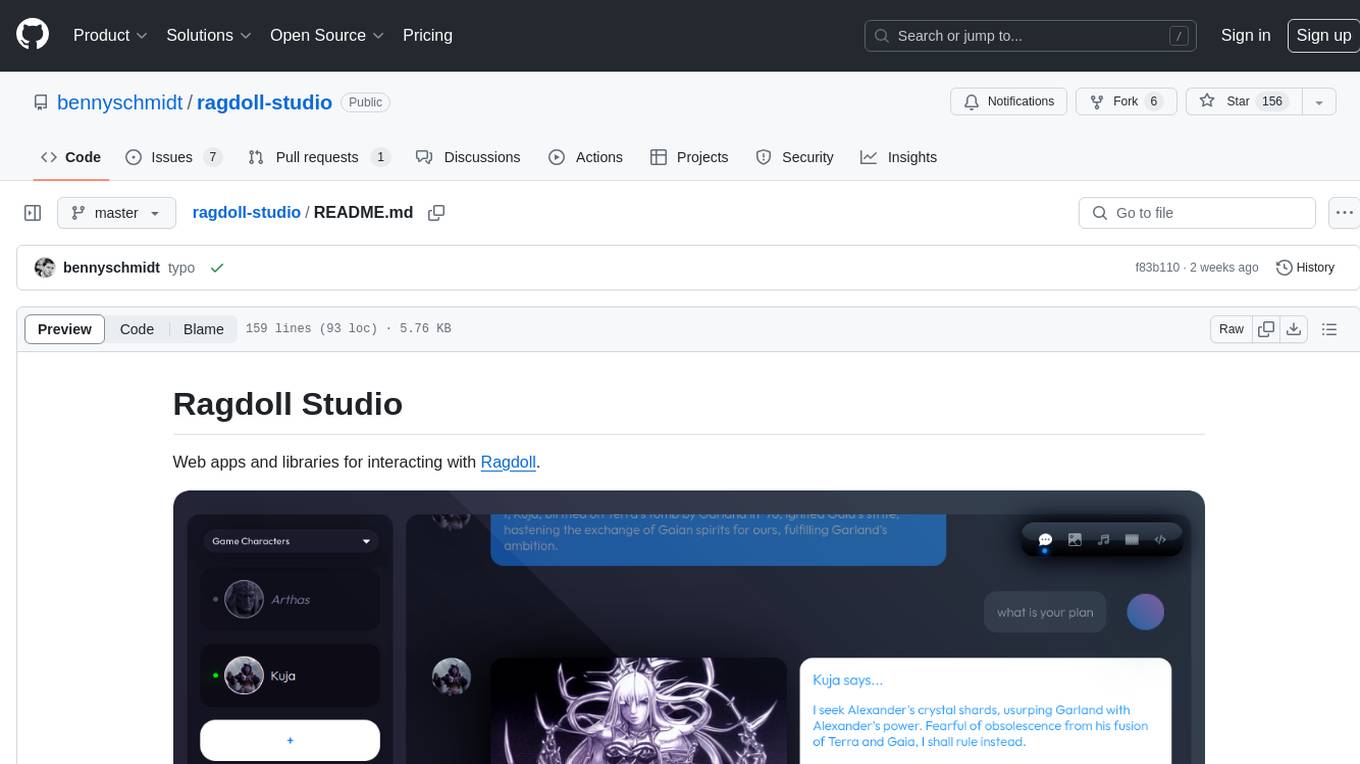

ragdoll-studio

Ragdoll Studio is a platform offering web apps and libraries for interacting with Ragdoll, enabling users to go beyond fine-tuning and create flawless creative deliverables, rich multimedia, and engaging experiences. It provides various modes such as Story Mode for creating and chatting with characters, Vector Mode for producing vector art, Raster Mode for producing raster art, Video Mode for producing videos, Audio Mode for producing audio, and 3D Mode for producing 3D objects. Users can export their content in various formats and share their creations on the community site. The platform consists of a Ragdoll API and a front-end React application for seamless usage.

LLMUnity

LLM for Unity enables seamless integration of Large Language Models (LLMs) within the Unity engine, allowing users to create intelligent characters for immersive player interactions. The tool supports major LLM models, runs locally without internet access, offers fast inference on CPU and GPU, and is easy to set up with a single line of code. It is free for both personal and commercial use, tested on Unity 2021 LTS, 2022 LTS, and 2023. Users can build multiple AI characters efficiently, use remote servers for processing, and customize model settings for text generation.

ai-anime-art-generator

AI Anime Art Generator is an AI-driven cutting-edge tool for anime arts creation. Perfect for beginners to easily create stunning anime art without any prior experience. It allows users to create detailed character designs, custom avatars for social media, and explore new artistic styles and ideas. Built on Next.js, TailwindCSS, Google Analytics, Vercel, Replicate, CloudFlare R2, and Clerk.

TavernAI

TavernAI is an atmospheric frontend tool for chat and storywriting, compatible with various backends. It offers features like character creation, online character database, group chat, story mode, world info, message swiping, configurable settings, interface themes, backgrounds, message editing, GPT-4.5, and Claude picture recognition. The tool supports backends like Kobold series, Oobabooga's Text Generation Web UI, OpenAI, NovelAI, and Claude. Users can easily install TavernAI on different operating systems and start using it for interactive storytelling and chat experiences.

Character-Engine-Discord

Character Engine is a Discord bot that aggregates various online platforms to create AI-driven characters using Discord Webhooks and LLM chatbots. It allows users to bring life and joy to their server by spawning characters, exploring embedded characters, and configuring settings on a per-server, per-channel, and per-character basis.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.