ai-gateway

Govern, Secure, and Optimize your AI Traffic. AI Gateway provides a unified interface to all LLMs using the OpenAI API format, with a focus on performance and reliability. Built in Rust.

Stars: 675

LangDB AI Gateway is an open-source enterprise AI gateway built in Rust. It provides a unified interface to all LLMs using the OpenAI API format, focusing on high performance, enterprise readiness, and data control. The gateway offers features like comprehensive usage analytics, cost tracking, rate limiting, data ownership, and detailed logging. It supports various LLM providers and provides OpenAI-compatible endpoints for chat completions, model listing, embeddings generation, and image generation. Users can configure advanced settings, such as rate limiting, cost control, dynamic model routing, and observability with OpenTelemetry tracing. The gateway can be run with Docker Compose and integrated with MCP tools for server communication.

README:

Govern, Secure, and Optimize your AI Traffic. LangDB AI Gateway provides a unified interface to all LLMs using the OpenAI API format. Built with performance and reliability in mind.

🚀 High Performance

- Built in Rust for maximum speed and reliability

- Seamless integration with any framework (Langchain, Vercel AI SDK, CrewAI, etc.)

- Integrates with any MCP server (https://docs.langdb.ai/ai-gateway/features/mcp-support)

📊 Enterprise Ready

- Comprehensive usage analytics and cost tracking

- Rate limiting and cost control

- Advanced routing, load balancing and failover

- Evaluations

🔒 Data Control

- Full ownership of your LLM usage data

- Detailed logging and tracing

🌟 Hosted Version - Get started in minutes with our fully managed solution

- Zero infrastructure management

- Automatic updates and maintenance

- Pay-as-you-go pricing

💼 Enterprise Version - Enhanced features for large-scale deployments

- Advanced team management and access controls

- Custom security guardrails and compliance features

- Intuitive monitoring dashboard

- Priority support and SLA guarantees

- Custom deployment options

Contact our team to learn more about enterprise solutions.

Choose one of these installation methods:

docker run -it \
  -p 8080:8080 \
  -e LANGDB_KEY=your-langdb-key-here \
  langdb/ai-gateway serve

Install from crates.io:

export RUSTFLAGS="--cfg tracing_unstable --cfg aws_sdk_unstable"
cargo install ai-gateway
export LANGDB_KEY=your-langdb-key-here
ai-gateway serve

Test the gateway with a simple chat completion:

# Chat completion with GPT-4o mini
curl http://localhost:8080/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gpt-4o-mini",
    "messages": [{"role": "user", "content": "What is the capital of France?"}]
  }'

# Or try Claude
curl http://localhost:8080/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "claude-3-opus",
    "messages": [
      {"role": "user", "content": "What is the capital of France?"}
    ]
  }'

LangDB AI Gateway currently supports the following LLM providers. Find all the available models here.

| Provider |
|---|
| OpenAI |
| Google Gemini |
| Anthropic |
| DeepSeek |
| TogetherAI |
| XAI |
| Meta (provided by Bedrock) |
| Cohere (provided by Bedrock) |
| Mistral (provided by Bedrock) |

The gateway provides the following OpenAI-compatible endpoints:

- `POST /v1/chat/completions` - Chat completions
- `GET /v1/models` - List available models
- `POST /v1/embeddings` - Generate embeddings
- `POST /v1/images/generations` - Generate images
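Because these endpoints follow the OpenAI API format, a client only has to send a JSON body to the right path. The following is a minimal Python sketch using only the standard library. It assumes the gateway is listening on http://localhost:8080, as in the curl examples; the helper name `build_chat_request` is hypothetical and not part of the gateway.

```python
import json
import urllib.request

GATEWAY_URL = "http://localhost:8080"  # assumption: local gateway, as in the curl examples


def build_chat_request(model: str, prompt: str, base_url: str = GATEWAY_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request for /v1/chat/completions."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("gpt-4o-mini", "What is the capital of France?")
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would send it to a running gateway. Building the request separately keeps the sketch usable without a live server.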

Create a config.yaml file:

providers:
  openai:
    api_key: "your-openai-key-here"
  anthropic:
    api_key: "your-anthropic-key-here"
  # Supports mustache style variables
  gemini:
    api_key: {{LANGDB_GEMINI_API_KEY}}

http:
  host: "0.0.0.0"
  port: 8080

# Run with custom host and port
ai-gateway serve --host 0.0.0.0 --port 3000

# Run with CORS origins
ai-gateway serve --cors-origins "http://localhost:3000,http://example.com"

# Run with rate limiting
ai-gateway serve --rate-hourly 1000

# Run with cost limits
ai-gateway serve --cost-daily 100.0 --cost-monthly 1000.0

# Run with custom database connections
ai-gateway serve --clickhouse-url "clickhouse://localhost:9000"

Download the sample configuration from our repo.

- Copy the example config file:

curl -sL https://raw.githubusercontent.com/langdb/ai-gateway/main/config.sample.yaml -o config.sample.yaml
cp config.sample.yaml config.yaml

Command line options will override corresponding config file settings when both are specified.
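The precedence rule can be pictured as a simple merge. This Python sketch only illustrates the rule as stated here; it is not the gateway's Rust implementation, and the function and key names are hypothetical.

```python
from typing import Any, Dict, Optional


def merge_settings(file_config: Dict[str, Any], cli_options: Dict[str, Optional[Any]]) -> Dict[str, Any]:
    """Return file settings overridden by any CLI option that was actually provided."""
    merged = dict(file_config)
    for key, value in cli_options.items():
        if value is not None:  # None means the flag was not passed
            merged[key] = value
    return merged


file_config = {"host": "0.0.0.0", "port": 8080}  # values from config.yaml
cli_options = {"host": None, "port": 3000}       # only --port 3000 was given
print(merge_settings(file_config, cli_options))
# → {'host': '0.0.0.0', 'port': 3000}
```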

Rate limiting helps prevent API abuse by limiting the number of requests within a time window. Configure rate limits using:

# Limit to 1000 requests per hour
ai-gateway serve --rate-hourly 1000

Or in config.yaml:

rate_limit:
  hourly: 1000
  daily: 10000
  monthly: 100000

Cost control helps manage API spending by setting daily, monthly, or total cost limits. Configure cost limits using:

# Set daily, monthly, and total limits
ai-gateway serve \
  --cost-daily 100.0 \
  --cost-monthly 1000.0 \
  --cost-total 5000.0

Or in config.yaml:

cost_control:
  daily: 100.0 # $100 per day
  monthly: 1000.0 # $1000 per month
  total: 5000.0 # $5000 total

When a cost limit is reached, the API will return a 429 response with a message indicating the limit has been exceeded.

When a rate limit is exceeded, the API will return a 429 (Too Many Requests) response.
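Clients usually need to handle these 429 responses themselves. Below is a minimal Python sketch of client-side retry with exponential backoff. The `send` callable is a hypothetical stand-in for whatever HTTP call your application makes, and the delay values are assumptions, not gateway defaults.

```python
import time
from typing import Callable, Tuple


def call_with_backoff(
    send: Callable[[], Tuple[int, str]],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Call send() and retry with exponential backoff while it returns HTTP 429."""
    status, body = send()
    for attempt in range(1, max_attempts):
        if status != 429:
            break
        sleep(base_delay * 2 ** (attempt - 1))  # 1s, 2s, 4s, ...
        status, body = send()
    return status, body


# Fake transport for illustration: two rate-limited replies, then success.
responses = iter([(429, "rate limit exceeded"), (429, "rate limit exceeded"), (200, "ok")])
delays = []
print(call_with_backoff(lambda: next(responses), sleep=delays.append))  # → (200, 'ok')
print(delays)  # → [1.0, 2.0]
```

Retrying suits rate limits, which clear when the time window rolls over. A 429 caused by a cost limit will not clear on a short retry, so it is worth inspecting the response message before backing off.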

LangDB AI Gateway empowers you to implement sophisticated routing strategies for your LLM requests. By utilizing features such as fallback routing, script-based routing, and latency-based routing, you can optimize your AI traffic to balance cost, speed, and availability.

Here's an example of a dynamic routing configuration:

{
  "model": "router/dynamic",
  "messages": [
    { "role": "system", "content": "You are a helpful assistant." },
    { "role": "user", "content": "What is the formula of a square plot?" }
  ],
  "router": {
    "router": "router",
    "type": "fallback",
    "targets": [
      { "model": "openai/gpt-4o-mini", "temperature": 0.9, "max_tokens": 500, "top_p": 0.9 },
      { "model": "deepseek/deepseek-chat", "frequency_penalty": 1, "presence_penalty": 0.6 }
    ]
  },
  "stream": false
}

This configuration shows how to define multiple targets, each with its own parameters, so that requests are handled by the most suitable models. The router type can be fallback, script, optimized, percentage, or latency. For more detailed information, explore our routing documentation.
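When request bodies like the one above are generated programmatically, a small helper can keep the JSON strict and catch typos in the router type early. This is a Python sketch: the helper name is hypothetical, the list of types is taken from the example above, and the check for at least one target is an assumption rather than documented gateway behavior.

```python
import json
from typing import Any, Dict, List

# Router types named in the example above
ROUTER_TYPES = {"fallback", "script", "optimized", "percentage", "latency"}


def build_router_request(
    router_type: str,
    targets: List[Dict[str, Any]],
    messages: List[Dict[str, str]],
) -> str:
    """Serialize a router/dynamic request body as strict JSON."""
    if router_type not in ROUTER_TYPES:
        raise ValueError(f"unknown router type: {router_type!r}")
    if not targets:  # assumption: a router needs at least one target
        raise ValueError("at least one target is required")
    payload = {
        "model": "router/dynamic",
        "messages": messages,
        "router": {"router": "router", "type": router_type, "targets": targets},
        "stream": False,
    }
    return json.dumps(payload, indent=2)


body = build_router_request(
    "fallback",
    targets=[
        {"model": "openai/gpt-4o-mini", "temperature": 0.9, "max_tokens": 500, "top_p": 0.9},
        {"model": "deepseek/deepseek-chat", "frequency_penalty": 1, "presence_penalty": 0.6},
    ],
    messages=[{"role": "user", "content": "What is the formula of a square plot?"}],
)
print(body)
```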

The gateway supports OpenTelemetry tracing with ClickHouse as the storage backend. All traces are stored in the langdb.traces table.

- Create the traces table in ClickHouse:

# Create langdb database if it doesn't exist
clickhouse-client --query "CREATE DATABASE IF NOT EXISTS langdb"

# Import the traces table schema
clickhouse-client --query "$(cat sql/traces.sql)"

- Enable tracing by providing the ClickHouse URL when running the server:

ai-gateway serve --clickhouse-url "clickhouse://localhost:9000"

You can also set the URL in your config.yaml:

clickhouse:
  url: "http://localhost:8123"

The traces are stored in the langdb.traces table. Here are some example queries:

-- Get recent traces
SELECT
  trace_id,
  operation_name,
  start_time_us,
  finish_time_us,
  (finish_time_us - start_time_us) AS duration_us
FROM langdb.traces
WHERE finish_date >= today() - 1
ORDER BY finish_time_us DESC
LIMIT 10;

Did you know you can call LangDB APIs directly within ClickHouse? Check out our UDF documentation to learn how to use LLMs in your SQL queries!
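The recent-traces query above can also be issued from a script through ClickHouse's HTTP interface, which accepts the SQL in a `query` URL parameter. This Python sketch assumes ClickHouse is reachable at http://localhost:8123, as in the config.yaml example; the helper name is hypothetical.

```python
from urllib.parse import urlencode

CLICKHOUSE_HTTP_URL = "http://localhost:8123"  # assumption: same URL as the config.yaml example


def recent_traces_url(limit: int = 10, base_url: str = CLICKHOUSE_HTTP_URL) -> str:
    """Build a ClickHouse HTTP URL that returns the most recent traces as JSON lines."""
    query = (
        "SELECT trace_id, operation_name, "
        "(finish_time_us - start_time_us) AS duration_us "
        "FROM langdb.traces "
        "WHERE finish_date >= today() - 1 "
        "ORDER BY finish_time_us DESC "
        f"LIMIT {int(limit)} "
        "FORMAT JSONEachRow"
    )
    return f"{base_url}/?{urlencode({'query': query})}"


print(recent_traces_url(limit=5))
```

Fetching the URL with `urllib.request.urlopen` against a running ClickHouse server yields one JSON object per line, which can be parsed with `json.loads`.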

For a complete setup including ClickHouse for analytics and tracing, follow these steps:

- Start the services using Docker Compose:

docker-compose up -d

This will start:

- ClickHouse server on port 8123 (HTTP)
- All necessary configurations will be loaded from docker/clickhouse/server/config.d

- Build and run the gateway:

ai-gateway

The gateway will now be running with full analytics and logging capabilities, storing data in ClickHouse.

The following request passes an MCP server to the gateway through the mcp_servers field:

curl http://localhost:8080/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gpt-4o-mini",
    "messages": [{"role": "user", "content": "Ping the server using the tool and return the response"}],
    "mcp_servers": [{"server_url": "http://localhost:3004"}]
  }'

To get started with development:

- Clone the repository
- Copy `config.sample.yaml` to `config.yaml` and configure as needed
- Run `cargo build` to compile
- Run `cargo test` to run tests

We welcome contributions! Please check out our Contributing Guide for guidelines on:

- How to submit issues

- How to submit pull requests

- Code style conventions

- Development workflow

- Testing requirements

The gateway uses tracing for logging. Set the RUST_LOG environment variable to control log levels:

RUST_LOG=debug cargo run serve # For detailed logs
RUST_LOG=info cargo run serve # For standard logs

This project is released under the Apache License 2.0. See the license file for more information.

Alternative AI tools for ai-gateway

Similar Open Source Tools

open-responses

OpenResponses API provides enterprise-grade AI capabilities through a powerful API, simplifying development and deployment while ensuring complete data control. It offers automated tracing, integrated RAG for contextual information retrieval, pre-built tool integrations, self-hosted architecture, and an OpenAI-compatible interface. The toolkit addresses development challenges like feature gaps and integration complexity, as well as operational concerns such as data privacy and operational control. Engineering teams can benefit from improved productivity, production readiness, compliance confidence, and simplified architecture by choosing OpenResponses.

open-edison

OpenEdison is a secure MCP control panel that connects AI to data/software with additional security controls to reduce data exfiltration risks. It helps address the lethal trifecta problem by providing visibility, monitoring potential threats, and alerting on data interactions. The tool offers features like data leak monitoring, controlled execution, easy configuration, visibility into agent interactions, a simple API, and Docker support. It integrates with LangGraph, LangChain, and plain Python agents for observability and policy enforcement. OpenEdison helps gain observability, control, and policy enforcement for AI interactions with systems of records, existing company software, and data to reduce risks of AI-caused data leakage.

tuui

TUUI is a desktop MCP client designed for accelerating AI adoption through the Model Context Protocol (MCP) and enabling cross-vendor LLM API orchestration. It is an LLM chat desktop application based on MCP, created using AI-generated components with strict syntax checks and naming conventions. The tool integrates AI tools via MCP, orchestrates LLM APIs, supports automated application testing, TypeScript, multilingual, layout management, global state management, and offers quick support through the GitHub community and official documentation.

context7

Context7 is a powerful tool for analyzing and visualizing data in various formats. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With advanced features such as data filtering, aggregation, and customization, Context7 is suitable for both beginners and experienced data analysts. The tool supports a wide range of data sources and formats, making it versatile for different use cases. Whether you are working on exploratory data analysis, data visualization, or data storytelling, Context7 can help you uncover valuable insights and communicate your findings effectively.

ai-dial-sdk

AI DIAL Python SDK is a framework designed to create applications and model adapters for AI DIAL API, which is based on Azure OpenAI API. It provides a user-friendly interface for routing requests to applications. The SDK includes features for chat completions, response generation, and API interactions. Developers can easily build and deploy AI-powered applications using this SDK, ensuring compatibility with the AI DIAL platform.

claude-task-master

Claude Task Master is a task management system designed for AI-driven development with Claude, seamlessly integrating with Cursor AI. It allows users to configure tasks through environment variables, parse PRD documents, generate structured tasks with dependencies and priorities, and manage task status. The tool supports task expansion, complexity analysis, and smart task recommendations. Users can interact with the system through CLI commands for task discovery, implementation, verification, and completion. It offers features like task breakdown, dependency management, and AI-driven task generation, providing a structured workflow for efficient development.

scylla

Scylla is an intelligent proxy pool tool designed for humanities, enabling users to extract content from the internet and build their own Large Language Models in the AI era. It features automatic proxy IP crawling and validation, an easy-to-use JSON API, a simple web-based user interface, HTTP forward proxy server, Scrapy and requests integration, and headless browser crawling. Users can start using Scylla with just one command, making it a versatile tool for various web scraping and content extraction tasks.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

mLoRA

mLoRA (Multi-LoRA Fine-Tune) is an open-source framework for efficient fine-tuning of multiple Large Language Models (LLMs) using LoRA and its variants. It allows concurrent fine-tuning of multiple LoRA adapters with a shared base model, efficient pipeline parallelism algorithm, support for various LoRA variant algorithms, and reinforcement learning preference alignment algorithms. mLoRA helps save computational and memory resources when training multiple adapters simultaneously, achieving high performance on consumer hardware.

trapster-community

Trapster Community is a low-interaction honeypot designed for internal networks or credential capture. It monitors and detects suspicious activities, providing a deceptive security layer. Features include mimicking network services, an asynchronous framework, easy configuration, expandable services, and an HTTP honeypot engine with AI capabilities that can emulate websites using YAML configuration. Supported protocols include DNS, HTTP/HTTPS, FTP, LDAP, MSSQL, POSTGRES, RDP, SNMP, SSH, TELNET, VNC, and RSYNC. The tool generates various types of logs. Contributions are welcome under the AGPLv3+ license.

plexe

Plexe is a tool that allows users to create machine learning models by describing them in plain language. Users can explain their requirements, provide a dataset, and the AI-powered system will build a fully functional model through an automated agentic approach. It supports multiple AI agents and model building frameworks like XGBoost, CatBoost, and Keras. Plexe also provides Docker images with pre-configured environments, YAML configuration for customization, and support for multiple LiteLLM providers. Users can visualize experiment results using the built-in Streamlit dashboard and extend Plexe's functionality through custom integrations.

mcpdoc

The MCP LLMS-TXT Documentation Server is an open-source server that provides developers full control over tools used by applications like Cursor, Windsurf, and Claude Code/Desktop. It allows users to create a user-defined list of `llms.txt` files and use a `fetch_docs` tool to read URLs within these files, enabling auditing of tool calls and context returned. The server supports various applications and provides a way to connect to them, configure rules, and test tool calls for tasks related to documentation retrieval and processing.

yomo

YoMo is an open-source LLM Function Calling Framework for building Geo-distributed AI applications. It is built atop QUIC Transport Protocol and Stateful Serverless architecture, making AI applications low-latency, reliable, secure, and easy. The framework focuses on providing low-latency, secure, stateful serverless functions that can be distributed geographically to bring AI inference closer to end users. It offers features such as low-latency communication, security with TLS v1.3, stateful serverless functions for faster GPU processing, geo-distributed architecture, and a faster-than-real-time codec called Y3. YoMo enables developers to create and deploy stateful serverless functions for AI inference in a distributed manner, ensuring quick responses to user queries from various locations worldwide.

clewdr

Clewdr is a collaborative platform for data analysis and visualization. It allows users to upload datasets, perform various data analysis tasks, and create interactive visualizations. The platform supports multiple users working on the same project simultaneously, enabling real-time collaboration and sharing of insights. Clewdr is designed to streamline the data analysis process and facilitate communication among team members. With its user-friendly interface and powerful features, Clewdr is suitable for data scientists, analysts, researchers, and anyone working with data to gain valuable insights and make informed decisions.

ruby_llm-monitoring

RubyLLM::Monitoring is a tool designed to monitor LLM (large language model) usage within a Rails application. It provides a dashboard to display metrics such as Throughput, Cost, Response Time, and Error Rate. Users can customize the displayed metrics and add their own custom metrics. The tool also supports setting up alerts based on predefined conditions, such as monitoring cost and errors. Authentication and authorization are left to the user, allowing for flexibility in securing the monitoring dashboard. Overall, RubyLLM::Monitoring aims to provide a comprehensive solution for monitoring and analyzing LLM usage in a Rails application.

For similar tasks

devopness

Devopness is a tool that simplifies the management of cloud applications and multi-cloud infrastructure for both AI agents and humans. It provides role-based access control, permission management, cost control, and visibility into DevOps and CI/CD workflows. The tool allows provisioning and deployment to major cloud providers like AWS, Azure, DigitalOcean, and GCP. Devopness aims to make software deployment and cloud infrastructure management accessible and affordable to all involved in software projects.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

huf

HUF is an AI-native engine designed to centralize intelligence and execution into a single engine, enabling AI to operate inside real business systems. It offers multi-provider AI connectivity, intelligent tools, knowledge grounding, event-driven execution, visual workflow builder, full auditability, and cost control. HUF can be used as AI infrastructure for products, internal intelligence platform, automation & orchestration engine, embedded AI layer for SaaS, and enterprise AI control plane. Core capabilities include agent system, knowledge management, trigger system, visual flow builder, and observability. The tech stack includes Frappe Framework, Python 3.10+, LiteLLM, SQLite FTS5, React 18, TypeScript, Tailwind CSS, and MariaDB.

paddler

Paddler is an open-source LLM load balancer and serving platform designed for digital products and users who prioritize privacy, reliability, cost control, and independence from closed-source model providers. It allows running inference, deploying, and scaling LLMs on personal infrastructure, offering a seamless developer experience. Key features include inference through llama.cpp engine, LLM-specific load balancing, dynamic model swapping, request buffering, and built-in web admin panel for management and monitoring. Paddler is suitable for product teams needing LLM inference, DevOps/LLMOps teams deploying LLMs at scale, organizations handling sensitive data, and product leaders aiming for predictable LLM costs and reliable model performance.

AIG-ModelMatching-For-MSFS

This tool is an AIG install for MSFS ONLY EXCLUDING offline AI flight plans. It provides a solution to model matching for online networks along with providing a tool to inject live traffic to your simulator, directly from Flightradar24. The tool is designed for use with online virtual traffic networks like VATSIM, but it will also work for offline traffic. A VMR File for VATSIM usage has been included in the folder.

aigne-hub

AIGNE Hub is a unified AI gateway that manages connections to multiple LLM and AIGC providers, eliminating the complexity of handling API keys, usage tracking, and billing across different AI services. It provides self-hosting capabilities, multi-provider management, unified security, usage analytics, flexible billing, and seamless integration with the AIGNE framework. The tool supports various AI providers and deployment scenarios, catering to both enterprise self-hosting and service provider modes. Users can easily deploy and configure AI providers, enable billing, and utilize core capabilities such as chat completions, image generation, embeddings, and RESTful APIs. AIGNE Hub ensures secure access, encrypted API key management, user permissions, and audit logging. Built with modern technologies like AIGNE Framework, Node.js, TypeScript, React, SQLite, and Blocklet for cloud-native deployment.

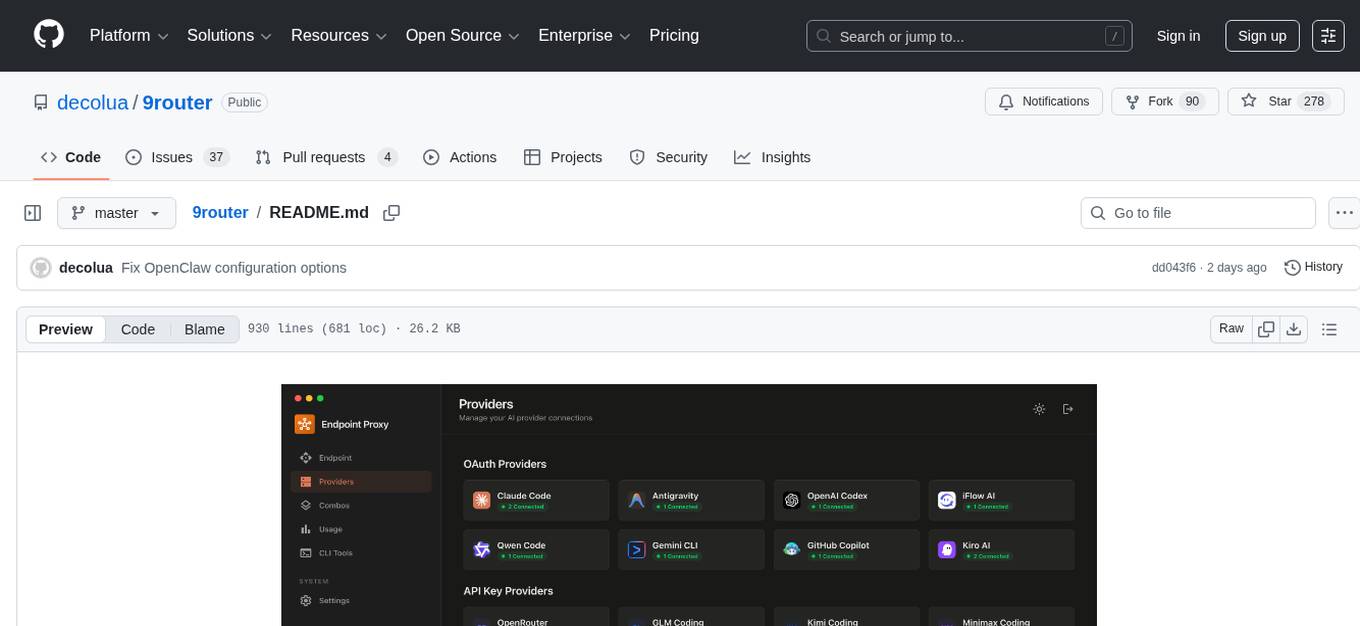

9router

9Router is a free AI router tool designed to help developers maximize their AI subscriptions, auto-route to free and cheap AI models with smart fallback, and avoid hitting limits and wasting money. It offers features like real-time quota tracking, format translation between OpenAI, Claude, and Gemini, multi-account support, auto token refresh, custom model combinations, request logging, cloud sync, usage analytics, and flexible deployment options. The tool supports various providers like Claude Code, Codex, Gemini CLI, GitHub Copilot, GLM, MiniMax, iFlow, Qwen, and Kiro, and allows users to create combos for different scenarios. Users can connect to the tool via CLI tools like Cursor, Claude Code, Codex, OpenClaw, and Cline, and deploy it on VPS, Docker, or Cloudflare Workers.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.