uni-api

This is a project that unifies the management of LLM APIs. It can call multiple backend services through a unified API interface, convert them to the OpenAI format uniformly, and support load balancing. Currently supported backend services include: OpenAI, Anthropic, DeepBricks, OpenRouter, Gemini, Vertex, etc.

Stars: 1098

uni-api is a project that unifies the management of large language model APIs, allowing you to call multiple backend services through a single unified API interface, converting them all to OpenAI format, and supporting load balancing. It supports various backend services such as OpenAI, Anthropic, Gemini, Vertex, Azure, xai, Cohere, Groq, Cloudflare, OpenRouter, and more. The project offers features like no front-end, pure configuration file setup, unified management of multiple backend services, support for multiple standard OpenAI format interfaces, rate limiting, automatic retry, channel cooling, fine-grained model timeout settings, and fine-grained permission control.

README:

For personal use, one-api and new-api are too complex, with many commercial features that individuals don't need. If you don't want a complicated frontend interface and prefer support for more models, you can try uni-api. This is a project that unifies the management of large language model APIs, allowing you to call multiple backend services through a single unified API interface, converting them all to OpenAI format, and supporting load balancing. Currently supported backend services include: OpenAI, Anthropic, Gemini, Vertex, Azure, AWS, xai, Cohere, Groq, Cloudflare, OpenRouter, 302.AI and more.

- No front-end, pure configuration file to configure API channels. You can run your own API station just by writing a file, and the documentation has a detailed configuration guide, beginner-friendly.

- Unified management of multiple backend services, supporting providers such as OpenAI, Deepseek, OpenRouter, and other APIs in OpenAI format. Supports OpenAI Dalle-3 image generation.

- Simultaneously supports Anthropic, Gemini, Vertex AI, Azure, AWS, xai, Cohere, Groq, Cloudflare, 302.AI. Vertex simultaneously supports Claude and Gemini API.

- Support OpenAI, Anthropic, Gemini, Vertex, Azure, AWS, xai native tool use function calls.

- Support OpenAI, Anthropic, Gemini, Vertex, Azure, AWS, xai native image recognition API.

- Support four types of load balancing.

- Supports channel-level weighted load balancing, allowing requests to be distributed according to different channel weights. It is not enabled by default and requires configuring channel weights.

- Support Vertex regional load balancing and high concurrency, which can increase Gemini and Claude concurrency by up to (number of APIs * number of regions) times. Automatically enabled without additional configuration.

- Except for Vertex region-level load balancing, all APIs support channel-level sequential load balancing, enhancing the immersive translation experience. It is not enabled by default and requires configuring SCHEDULING_ALGORITHM as round_robin.

- Support automatic API key-level round-robin load balancing for multiple API Keys in a single channel.

- Support automatic retry: when an API channel response fails, the request is automatically retried on the next API channel.

- Support channel cooling: when an API channel response fails, the channel is automatically excluded and cooled for a period of time, and no requests are sent to it. After the cooling period ends, the channel is automatically restored until it fails again, at which point it is cooled again.

- Support fine-grained model timeout settings, allowing different timeout durations for each model.

- Support fine-grained permission control. Support using wildcards to set specific models available for API key channels.

- Support rate limiting. A limit is written as an integer number of requests per time unit, such as 2/min (2 times per minute), 5/hour (5 times per hour), 10/day (10 times per day), 10/month (10 times per month), or 10/year (10 times per year). Default is 60/min.

- Supports multiple standard OpenAI format interfaces: /v1/chat/completions, /v1/images/generations, /v1/audio/transcriptions, /v1/moderations, /v1/models.

- Support OpenAI moderation moral review, which can conduct moral reviews of user messages. If inappropriate messages are found, an error message will be returned. This reduces the risk of the backend API being banned by providers.
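The rate-limit strings mentioned in the list above (such as 2/min or 5/hour, and combined forms such as 15/min,10/day used in the configuration) can be read as a set of count-per-window constraints. The following is a minimal Python sketch of that idea, not uni-api's actual implementation: the names `UNIT_SECONDS`, `parse_rate_limit` and `SlidingWindowLimiter` are hypothetical, the sliding-window strategy is an assumption, and month and year are approximated as 30 and 365 days.

```python
import time
from collections import deque
from typing import Optional

# Seconds per unit. The month and year lengths are approximations
# chosen for this sketch, not values taken from uni-api.
UNIT_SECONDS = {
    "min": 60,
    "hour": 3600,
    "day": 86400,
    "month": 30 * 86400,
    "year": 365 * 86400,
}


def parse_rate_limit(spec: str) -> list:
    """Turn a string like '15/min,10/day' into [(15, 60), (10, 86400)]."""
    limits = []
    for part in spec.split(","):
        count, unit = part.strip().split("/")
        limits.append((int(count), UNIT_SECONDS[unit]))
    return limits


class SlidingWindowLimiter:
    """Accept a request only if every (count, window) constraint has room."""

    def __init__(self, spec: str):
        self.limits = parse_rate_limit(spec)
        self.history = deque()  # timestamps of accepted requests, oldest first

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        longest = max(window for _, window in self.limits)
        # Drop timestamps that are older than the longest window.
        while self.history and now - self.history[0] >= longest:
            self.history.popleft()
        for count, window in self.limits:
            recent = sum(1 for t in self.history if now - t < window)
            if recent >= count:
                return False  # this constraint is already exhausted
        self.history.append(now)
        return True
```

Passing `now` explicitly keeps the limiter easy to test; in a real service the default `time.time()` would be used. Other units that appear in the configuration comments, such as tpr, are outside the scope of this sketch.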

To start uni-api, a configuration file must be used. There are two ways to start with a configuration file:

- The first method is to use the CONFIG_URL environment variable to fill in the configuration file URL, which will be automatically downloaded when uni-api starts.

- The second method is to mount a configuration file named api.yaml into the container.

You must prepare the configuration file before starting uni-api, and it must be named api.yaml. You can configure multiple models, each model can be served by multiple backend services, and load balancing is supported. Below is an example of the minimum api.yaml configuration file that can be run:

providers:
  - provider: provider_name # Service provider name, such as openai, anthropic, gemini, openrouter, can be any name, required
    base_url: https://api.your.com/v1/chat/completions # Backend service API address, required
    api: sk-YgS6GTi0b4bEabc4C # Provider's API Key, required, automatically uses base_url and api to get all available models through the /v1/models endpoint.
  # Multiple providers can be configured here, each provider can configure multiple API Keys, and each provider can configure multiple models.
api_keys:
  - api: sk-Pkj60Yf8JFWxfgRmXQFWyGtWUddGZnmi3KlvowmRWpWpQxx # API Key, user request uni-api requires API key, required
  # This API Key can use all models, that is, it can use all models in all channels set under providers, without needing to add available channels one by one.

Detailed advanced configuration of api.yaml:

providers:
  - provider: provider_name # Service provider name, such as openai, anthropic, gemini, openrouter, can be any name, required
    base_url: https://api.your.com/v1/chat/completions # Backend service API address, required
    api: sk-YgS6GTi0b4bEabc4C # Provider's API Key, required
    model: # Optional, if model is not configured, all available models will be automatically obtained through base_url and api via the /v1/models endpoint.
      - gpt-4o # Usable model name, required
      - claude-3-5-sonnet-20240620: claude-3-5-sonnet # Rename model, claude-3-5-sonnet-20240620 is the provider's model name, claude-3-5-sonnet is the renamed name, you can use a simple name to replace the original complex name, optional
      - dall-e-3

  - provider: anthropic
    base_url: https://api.anthropic.com/v1/messages
    api: # Supports multiple API Keys, multiple keys automatically enable polling load balancing, at least one key, required
      - sk-ant-api03-bNnAOJyA-xQw_twAA
      - sk-ant-api02-bNnxxxx
    model:
      - claude-3-7-sonnet-20240620: claude-3-7-sonnet # Rename model, claude-3-7-sonnet-20240620 is the provider's model name, claude-3-7-sonnet is the renamed name, you can use a simple name to replace the original complex name, optional
      - claude-3-7-sonnet-20250219: claude-3-7-sonnet-think # Rename model, claude-3-7-sonnet-20250219 is the provider's model name, claude-3-7-sonnet-think is the renamed name, if "think" is in the renamed name, it will be automatically converted to claude think model, default think token limit is 4096. Optional
    tools: true # Whether to support tools, such as generating code, generating documents, etc., default is true, optional
    preferences:
      post_body_parameter_overrides: # Support customizing request body parameters
        claude-3-7-sonnet-think: # Add custom request body parameters to the model claude-3-7-sonnet-think
          tools:
            - type: code_execution_20250522 # Add code_execution tool to the model claude-3-7-sonnet-think
              name: code_execution
            - type: web_search_20250305 # Add web_search tool to the model claude-3-7-sonnet-think, max_uses means to use up to 5 times
              name: web_search
              max_uses: 5

  - provider: gemini
    base_url: https://generativelanguage.googleapis.com/v1beta # base_url supports v1beta/v1, only for Gemini model use, required
    api: # Supports multiple API Keys, multiple keys automatically enable polling load balancing, at least one key, required
      - AIzaSyAN2k6IRdgw123
      - AIzaSyAN2k6IRdgw456
      - AIzaSyAN2k6IRdgw789
    model:
      - gemini-2.5-pro
      - gemini-2.5-flash: gemini-2.5-flash # After renaming, the original model name gemini-2.5-flash cannot be used, if you want to use the original name, you can add the original name in the model, just add the line below to use the original name
      - gemini-2.5-flash
      - gemini-2.5-pro: gemini-2.5-pro-search # To enable search for a model, rename it with the -search suffix and set custom request body parameters for this model in `post_body_parameter_overrides`.
      - gemini-2.5-flash: gemini-2.5-flash-think-24576-search # To enable search for a model, rename it with the -search suffix and set custom request body parameters for this model in post_body_parameter_overrides. Additionally, you can customize the inference budget using -think-number. These options can be used together or separately.
      - gemini-2.5-flash: gemini-2.5-flash-think-0 # Renaming a model with the -think-number suffix sets the reasoning budget; if the number is 0, reasoning is turned off.
      - gemini-embedding-001
      - text-embedding-004
    tools: true
    preferences:
      api_key_rate_limit: 15/min # Each API Key can request up to 15 times per minute, optional. The default is 999999/min. Supports multiple frequency constraints: 15/min,10/day
      # api_key_rate_limit: # You can set different frequency limits for each model
      #   gemini-2.5-flash: 10/min,500/day
      #   gemini-2.5-pro: 5/min,25/day,1048576/tpr # 1048576/tpr means the token limit per request is 1,048,576 tokens.
      #   default: 4/min # If the model does not set the frequency limit, use the frequency limit of default
      api_key_cooldown_period: 60 # Each API Key will be cooled down for 60 seconds after encountering a 429 error. Optional, the default is 0 seconds. When set to 0, the cooling mechanism is not enabled. When there are multiple API keys, the cooling mechanism will take effect.
      api_key_schedule_algorithm: round_robin # Set the request order of multiple API Keys, optional. The default is round_robin, and the optional values are: round_robin, random, fixed_priority, smart_round_robin. It will take effect when there are multiple API keys. round_robin is polling load balancing, and random is random load balancing. fixed_priority is fixed priority scheduling, always use the first available API key. `smart_round_robin` is an intelligent scheduling algorithm based on historical success rates, see FAQ for details.
      model_timeout: # Model timeout, in seconds, default 100 seconds, optional
        gemini-2.5-pro: 500 # Model gemini-2.5-pro timeout is 500 seconds
        gemini-2.5-flash: 500 # Model gemini-2.5-flash timeout is 500 seconds
        default: 10 # Model does not have a timeout set, use the default timeout of 10 seconds, when requesting a model not in model_timeout, the timeout is also 10 seconds, if default is not set, uni-api will use the default timeout set by the environment variable TIMEOUT, the default timeout is 100 seconds
      keepalive_interval: # Heartbeat interval, in seconds, default 99999 seconds, optional. Suitable for when uni-api is hosted on cloudflare and uses inference models. Priority is higher than the global configuration keepalive_interval.
        gemini-2.5-pro: 50 # Model gemini-2.5-pro heartbeat interval is 50 seconds, this value must be less than the model_timeout set timeout, otherwise it will be ignored.
      proxy: socks5://[username]:[password]@[ip]:[port] # Proxy address, optional. Supports socks5 and http proxies, default is not used.
      headers: # Add custom http request headers, optional
        Custom-Header-1: Value-1
        Custom-Header-2: Value-2
      post_body_parameter_overrides: # Support customizing request body parameters
        gemini-2.5-flash-search: # Add custom request body parameters to the model gemini-2.5-flash-search
          tools:
            - google_search: {} # Add google_search tool to the model gemini-2.5-flash-search
            - url_context: {} # Add url_context tool to the model gemini-2.5-flash-search

  - provider: vertex
    project_id: gen-lang-client-xxxxxxxxxxxxxx # Description: Your Google Cloud project ID. Format: String, usually composed of lowercase letters, numbers, and hyphens. How to obtain: You can find your project ID in the project selector of the Google Cloud Console.
    private_key: "-----BEGIN PRIVATE KEY-----\nxxxxx\n-----END PRIVATE" # Description: Private key for Google Cloud Vertex AI service account. Format: A JSON formatted string containing the private key information of the service account. How to obtain: Create a service account in Google Cloud Console, generate a JSON formatted key file, and then set its content as the value of this environment variable.
    client_email: [email protected] # Description: Email address of the Google Cloud Vertex AI service account. Format: Usually a string like "[email protected]". How to obtain: Generated when creating a service account, or you can view the service account details in the "IAM and Admin" section of the Google Cloud Console.
    model:
      - gemini-2.5-flash
      - gemini-2.5-pro
      - gemini-2.5-pro: gemini-2.5-pro-search # To enable search for a model, rename it with the -search suffix and set custom request body parameters for this model in `post_body_parameter_overrides`. Not setting post_body_parameter_overrides will not enable search.
      - claude-3-5-sonnet@20240620: claude-3-5-sonnet
      - claude-3-opus@20240229: claude-3-opus
      - claude-3-sonnet@20240229: claude-3-sonnet
      - claude-3-haiku@20240307: claude-3-haiku
      - gemini-embedding-001
      - text-embedding-004
    tools: true
    notes: https://xxxxx.com/ # You can put the provider's website, notes, official documentation, optional
    preferences:
      post_body_parameter_overrides: # Support customizing request body parameters
        gemini-2.5-pro-search: # Add custom request body parameters to the model gemini-2.5-pro-search
          tools:
            - google_search: {} # Add google_search tool to the model gemini-2.5-pro-search
        gemini-2.5-flash:
          generationConfig:
            thinkingConfig:
              includeThoughts: True
              thinkingBudget: 24576
            maxOutputTokens: 65535
        gemini-2.5-flash-search:
          tools:
            - google_search: {}
            - url_context: {}

  - provider: cloudflare
    api: f42b3xxxxxxxxxxq4aoGAh # Cloudflare API Key, required
    cf_account_id: 8ec0xxxxxxxxxxxxe721 # Cloudflare Account ID, required
    model:
      - '@cf/meta/llama-3.1-8b-instruct': llama-3.1-8b # Rename model, @cf/meta/llama-3.1-8b-instruct is the provider's original model name, must be enclosed in quotes, otherwise yaml syntax error, llama-3.1-8b is the renamed name, you can use a simple name to replace the original complex name, optional
      - '@cf/meta/llama-3.1-8b-instruct' # Must be enclosed in quotes, otherwise yaml syntax error

  - provider: azure
    base_url: https://your-endpoint.openai.azure.com
    api: your-api-key
    model:
      - gpt-4o
    preferences:
      post_body_parameter_overrides: # Support customizing request body parameters
        key1: value1 # Force the request to add "key1": "value1" parameter
        key2: value2 # Force the request to add "key2": "value2" parameter
        stream_options:
          include_usage: true # Force the request to add "stream_options": {"include_usage": true} parameter
      cooldown_period: 0 # When cooldown_period is set to 0, the cooling mechanism is not enabled, the priority is higher than the global configuration cooldown_period.

  - provider: databricks
    base_url: https://xxx.azuredatabricks.net
    api:
      - xxx
    model:
      - databricks-claude-sonnet-4: claude-sonnet-4
      - databricks-claude-opus-4: claude-opus-4
      - databricks-claude-3-7-sonnet: claude-3-7-sonnet

  - provider: aws
    base_url: https://bedrock-runtime.us-east-1.amazonaws.com
    aws_access_key: xxxxxxxx
    aws_secret_key: xxxxxxxx
    model:
      - anthropic.claude-3-5-sonnet-20240620-v1:0: claude-3-5-sonnet

  - provider: vertex-express
    base_url: https://aiplatform.googleapis.com/
    project_id:
      - xxx # project_id of key1
      - xxx # project_id of key2
    api:
      - xx.xxx # api of key1
      - xx.xxx # api of key2
    model:
      - gemini-2.5-pro-preview-06-05

  - provider: other-provider
    base_url: https://api.xxx.com/v1/messages
    api: sk-bNnAOJyA-xQw_twAA
    model:
      - causallm-35b-beta2ep-q6k: causallm-35b
      - anthropic/claude-3-5-sonnet
    tools: false
    engine: openrouter # Force the use of a specific message format, currently supports gpt, claude, gemini, openrouter native format, optional

api_keys:
  - api: sk-KjjI60Yf0JFWxfgRmXqFWyGtWUd9GZnmi3KlvowmRWpWpQRo # API Key, required for users to use this service
    model: # Models that can be used by this API Key, optional. Default channel-level polling load balancing is enabled, and each request model is requested in sequence according to the model configuration. It is not related to the original channel order in providers. Therefore, you can set different request sequences for each API key.
      - gpt-4o # Usable model name, can use all gpt-4o models provided by providers
      - claude-3-5-sonnet # Usable model name, can use all claude-3-5-sonnet models provided by providers
      - gemini/* # Usable model name, can only use all models provided by providers named gemini, where gemini is the provider name, * represents all models
    role: admin # Set the alias of the API key, optional. The request log will display the alias of the API key. If role is admin, only this API key can request the /v1/stats and /v1/generate-api-key endpoints. If no API key has role set to admin, the first API key is set as admin and has permission to request the /v1/stats and /v1/generate-api-key endpoints.

  - api: sk-pkhf60Yf0JGyJxgRmXqFQyTgWUd9GZnmi3KlvowmRWpWqrhy
    model:
      - anthropic/claude-3-5-sonnet # Usable model name, can only use the claude-3-5-sonnet model provided by the provider named anthropic. Models with the same name from other providers cannot be used. This syntax will not match the model named anthropic/claude-3-5-sonnet provided by other-provider.
      - <anthropic/claude-3-5-sonnet> # By adding angle brackets on both sides of the model name, it will not search for the claude-3-5-sonnet model under the channel named anthropic, but will take the entire anthropic/claude-3-5-sonnet as the model name. This syntax can match the model named anthropic/claude-3-5-sonnet provided by other-provider. But it will not match the claude-3-5-sonnet model under anthropic.
      - openai-test/text-moderation-latest # When message moderation is enabled, the text-moderation-latest model under the channel named openai-test can be used for moderation.
      - sk-KjjI60Yd0JFWtxxxxxxxxxxxxxxwmRWpWpQRo/* # Support using other API keys as channels
    preferences:
      SCHEDULING_ALGORITHM: fixed_priority # When SCHEDULING_ALGORITHM is fixed_priority, use fixed priority scheduling, always execute the channel of the first model with a request. Default is enabled, SCHEDULING_ALGORITHM default value is fixed_priority. SCHEDULING_ALGORITHM optional values are: fixed_priority, round_robin, weighted_round_robin, lottery, random.
      # When SCHEDULING_ALGORITHM is random, use random polling load balancing, randomly request the channel of the model with a request.
      # When SCHEDULING_ALGORITHM is round_robin, use polling load balancing, request the channel of the model used by the user in order.
      AUTO_RETRY: true # Whether to automatically retry, automatically retry the next provider, true for automatic retry, false for no automatic retry, default is true. Also supports setting a number, indicating the number of retries.
      rate_limit: 15/min # Supports rate limiting, each API Key can request up to 15 times per minute, optional. The default is 999999/min. Supports multiple frequency constraints: 15/min,10/day
      # rate_limit: # You can set different frequency limits for each model
      #   gemini-2.5-flash: 10/min,500/day
      #   gemini-2.5-pro: 5/min,25/day
      #   default: 4/min # If the model does not set the frequency limit, use the frequency limit of default
      ENABLE_MODERATION: true # Whether to enable message moderation, true for enable, false for disable, default is false, when enabled, it will moderate the user's message, if inappropriate messages are found, an error message will be returned.

  # Channel-level weighted load balancing configuration example
  - api: sk-KjjI60Yd0JFWtxxxxxxxxxxxxxxwmRWpWpQRo
    model:
      - gcp1/*: 5 # The number after the colon is the weight, weight only supports positive integers.
      - gcp2/*: 3 # The size of the number represents the weight, the larger the number, the greater the probability of the request.
      - gcp3/*: 2 # In this example, there are a total of 10 weights for all channels, and 10 requests will have 5 requests for the gcp1/* model, 3 requests for the gcp2/* model, and 2 requests for the gcp3/* model.
    preferences:
      SCHEDULING_ALGORITHM: weighted_round_robin # Only when SCHEDULING_ALGORITHM is weighted_round_robin and the above channel has weights, it will request according to the weighted order. Use weighted polling load balancing, request the channel of the model with a request according to the weight order. When SCHEDULING_ALGORITHM is lottery, use lottery polling load balancing, request the channel of the model with a request according to the weight randomly. Channels without weights automatically fall back to round_robin polling load balancing.
      AUTO_RETRY: true
      credits: 10 # Supports setting balance, the number set here represents that the API Key can use 10 dollars, optional. The default is unlimited balance, when set to 0, the key cannot be used. When the user has used up the balance, subsequent requests will be blocked.
      created_at: 2024-01-01T00:00:00+08:00 # When the balance is set, created_at must be set, indicating that the usage cost starts from the time set in created_at. Optional. The default is 30 days before the current time.

preferences: # Global configuration
  model_timeout: # Model timeout, in seconds, default 100 seconds, optional
    gpt-4o: 10 # Model gpt-4o timeout is 10 seconds, gpt-4o is the model name, when requesting models like gpt-4o-2024-08-06, the timeout is also 10 seconds
    claude-3-5-sonnet: 10 # Model claude-3-5-sonnet timeout is 10 seconds, when requesting models like claude-3-5-sonnet-20240620, the timeout is also 10 seconds
    default: 10 # Model does not have a timeout set, use the default timeout of 10 seconds, when requesting a model not in model_timeout, the default timeout is 10 seconds, if default is not set, uni-api will use the default timeout set by the environment variable TIMEOUT, the default timeout is 100 seconds
    o1-mini: 30 # Model o1-mini timeout is 30 seconds, when requesting models starting with o1-mini, the timeout is 30 seconds
    o1-preview: 100 # Model o1-preview timeout is 100 seconds, when requesting models starting with o1-preview, the timeout is 100 seconds
  cooldown_period: 300 # Channel cooldown time, in seconds, default 300 seconds, optional. When a model request fails, the channel will be automatically excluded and cooled down for a period of time, and will not request the channel again. After the cooldown time ends, the model will be automatically restored until the request fails again, and it will be cooled down again. When cooldown_period is set to 0, the cooling mechanism is not enabled.
  rate_limit: 999999/min # uni-api global rate limit, in times/minute, supports multiple frequency constraints, such as: 15/min,10/day. Default 999999/min, optional.
  keepalive_interval: # Heartbeat interval, in seconds, default 99999 seconds, optional. Suitable for when uni-api is hosted on cloudflare and uses inference models.
    gemini-2.5-pro: 50 # Model gemini-2.5-pro heartbeat interval is 50 seconds, this value must be less than the model_timeout set timeout, otherwise it will be ignored.
  error_triggers: # Error triggers, when the message returned by the model contains any of the strings in the error_triggers, the channel will return an error. Optional
    - The bot's usage is covered by the developer
    - process this request due to overload or policy
  proxy: socks5://[username]:[password]@[ip]:[port] # Proxy address, optional.
  model_price: # Model price, in dollars/M tokens, optional. Default price is 1,2, which means input 1 dollar/1M tokens, output 2 dollars/1M tokens.
    gpt-4o: 1,2
    claude-3-5-sonnet: 0.12,0.48
    default: 1,2

Mount the configuration file and start the uni-api docker container:

docker run --user root -p 8001:8000 --name uni-api -dit \
  -v ./api.yaml:/home/api.yaml \
  yym68686/uni-api:latest

For method one, write the configuration file, upload it to a cloud drive, get the file's direct link, and then use the CONFIG_URL environment variable to start the uni-api docker container:

docker run --user root -p 8001:8000 --name uni-api -dit \
  -e CONFIG_URL=http://file_url/api.yaml \
  yym68686/uni-api:latest

Environment variables:

- CONFIG_URL: The download address of the configuration file, which can be a local file or a remote file, optional

- DEBUG: Whether to enable debug mode, default is false, optional. When enabled, more logs will be printed, which can be used when submitting issues.

- TIMEOUT: Request timeout, default is 100 seconds. The timeout can control the time needed to switch to the next channel when one channel does not respond. Optional

- DISABLE_DATABASE: Whether to disable the database, default is false, optional

- DB_TYPE: Database type, default is sqlite, optional. Supports sqlite and postgres.

When DB_TYPE is postgres, the following environment variables need to be set:

- DB_USER: Database user name, default is postgres, optional

- DB_PASSWORD: Database password, default is mysecretpassword, optional

- DB_HOST: Database host, default is localhost, optional

- DB_PORT: Database port, default is 5432, optional

- DB_NAME: Database name, default is postgres, optional
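To make the defaults in the list above concrete, here is a minimal Python sketch that gathers the database-related variables into one settings dictionary. It is only an illustration under stated assumptions: the function name `database_settings` and the returned dictionary layout are hypothetical, and treating the string "true" (case-insensitive) as the only way to enable DISABLE_DATABASE is an assumption about parsing, not documented uni-api behavior.

```python
import os
from typing import Mapping, Optional


def database_settings(env: Mapping[str, str] = os.environ) -> Optional[dict]:
    """Collect database settings, falling back to the defaults listed above."""
    # Assumption: only the literal string "true" disables the database.
    if env.get("DISABLE_DATABASE", "false").lower() == "true":
        return None
    db_type = env.get("DB_TYPE", "sqlite")
    if db_type != "postgres":
        # sqlite needs none of the DB_* connection variables.
        return {"type": "sqlite"}
    return {
        "type": "postgres",
        "user": env.get("DB_USER", "postgres"),
        "password": env.get("DB_PASSWORD", "mysecretpassword"),
        "host": env.get("DB_HOST", "localhost"),
        "port": int(env.get("DB_PORT", "5432")),
        "name": env.get("DB_NAME", "postgres"),
    }
```

Calling `database_settings({"DB_TYPE": "postgres", "DB_HOST": "db"})` overrides only the host and keeps every other default, which mirrors how the optional variables are described.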

Click the button below to automatically use the built uni-api docker image to deploy:

There are two ways to let Koyeb read the configuration file, choose one of them:

- Fill in the environment variable CONFIG_URL with the direct link of the configuration file.

- Paste the api.yaml file content. If you paste the api.yaml content directly into the Koyeb environment variable file setting, then after pasting the text in the text box, enter /home/api.yaml as the api.yaml path in the path field.

Then click the Deploy button.

In the repository's Releases, find the latest binary file for your platform, for example, a file named uni-api-linux-x86_64-0.0.99.pex. Download the binary file on the server and run it:

wget https://github.com/yym68686/uni-api/releases/download/v0.0.99/uni-api-linux-x86_64-0.0.99.pex
chmod +x uni-api-linux-x86_64-0.0.99.pex
./uni-api-linux-x86_64-0.0.99.pex

To deploy on serv00, first log in to the panel. In Additional services, click on the tab Run your own applications to enable the option to run your own programs, then go to the panel Port reservation to randomly open a port.

If you don't have your own domain name, go to the panel WWW websites and delete the default domain name provided. Then create a new domain with the Domain being the one you just deleted. After clicking Advanced settings, set the Website type to Proxy domain, and the Proxy port should point to the port you just opened. Do not select Use HTTPS.

Log in to the serv00 server over SSH and execute the following commands:

git clone --depth 1 -b main --quiet https://github.com/yym68686/uni-api.git
cd uni-api
python -m venv uni-api
source uni-api/bin/activate
pip install --upgrade pip
cpuset -l 0 pip install -vv -r requirements.txt

The installation takes about 10 minutes from start to finish. After the installation is complete, execute the following commands:

tmux new -A -s uni-api
source uni-api/bin/activate
export CONFIG_URL=http://file_url/api.yaml
export DISABLE_DATABASE=true
# Modify the port, xxx is the port, modify it yourself, corresponding to the port opened in the panel Port reservation
sed -i '' 's/port=8000/port=xxx/' main.py
sed -i '' 's/reload=True/reload=False/' main.py
python main.py

Use ctrl+b d to exit tmux, allowing the program to run in the background. At this point, you can use uni-api in other chat clients. curl test script:

curl -X POST https://xxx.serv00.net/v1/chat/completions \
  -H 'Content-Type: application/json' \
  -H 'Authorization: Bearer sk-xxx' \
  -d '{"model": "gpt-4o","messages": [{"role": "user","content": "Hello"}]}'

Reference documents:

https://docs.serv00.com/Python/

https://linux.do/t/topic/201181

https://linux.do/t/topic/218738
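The curl test in this section can also be issued from Python using only the standard library. The sketch below builds the same POST request (same path, headers and JSON body as the curl command); the helper names `build_chat_request` and `send` are hypothetical, the host and key are the placeholders from the curl example, and the 100-second timeout simply mirrors the documented TIMEOUT default.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build the same POST request as the curl test, without sending it."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 100.0) -> dict:
    """Send the request and decode the JSON body of a non-streaming response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

A call such as `send(build_chat_request("https://xxx.serv00.net", "sk-xxx", "gpt-4o", "Hello"))` would perform the request once the placeholders are replaced with a real host and API key. Building and sending are kept separate so the request can be inspected without network access.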

Start the container

# CONFIG_URL is not needed if the local configuration file is already mounted.
# The api.yaml mount is not needed if CONFIG_URL is already set.
# The uniapi_db mount is only needed if you want to save statistical data.
docker run --user root -p 8001:8000 --name uni-api -dit \
  -e CONFIG_URL=http://file_url/api.yaml \
  -v ./api.yaml:/home/api.yaml \
  -v ./uniapi_db:/home/data \
  yym68686/uni-api:latest

Or if you want to use Docker Compose, here is a docker-compose.yml example:

services:
  uni-api:
    container_name: uni-api
    image: yym68686/uni-api:latest
    environment:
      - CONFIG_URL=http://file_url/api.yaml # If a local configuration file is already mounted, there is no need to set CONFIG_URL
    ports:
      - 8001:8000
    volumes:
      - ./api.yaml:/home/api.yaml # If CONFIG_URL is already set, there is no need to mount the configuration file
      - ./uniapi_db:/home/data # If you do not want to save statistical data, there is no need to mount this folder

CONFIG_URL is the URL of the remote configuration file that can be automatically downloaded. For example, if you are not comfortable modifying the configuration file on a certain platform, you can upload the configuration file to a hosting service and provide a direct link to uni-api to download, which is the CONFIG_URL. If you are using a local mounted configuration file, there is no need to set CONFIG_URL. CONFIG_URL is used when it is not convenient to mount the configuration file.
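The choice between CONFIG_URL and a mounted file can be pictured with a small Python sketch. This is not uni-api's startup code: the function name `load_config_text` is hypothetical, and giving CONFIG_URL precedence when both sources are present is an assumption made for the example, since the text above only says that one of the two is enough.

```python
import os
import urllib.request
from pathlib import Path
from typing import Mapping


def load_config_text(env: Mapping[str, str] = os.environ,
                     local_path: str = "/home/api.yaml") -> str:
    """Return the api.yaml text from CONFIG_URL if set, else from the mounted file."""
    url = env.get("CONFIG_URL")
    if url:
        # Assumption: a configured URL wins over a mounted file.
        with urllib.request.urlopen(url, timeout=30) as response:
            return response.read().decode("utf-8")
    return Path(local_path).read_text(encoding="utf-8")
```

Because `urllib.request.urlopen` also accepts file:// URLs, the same function covers the case where CONFIG_URL points at a local file rather than a remote one.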

Run Docker Compose container in the background

docker-compose pull
docker-compose up -d

Docker build

docker buildx build --platform linux/amd64,linux/arm64 -t yym68686/uni-api:latest --push .
docker pull yym68686/uni-api:latest

# test image
docker buildx build --platform linux/amd64,linux/arm64 -t yym68686/uni-api:test -f Dockerfile.debug --push .
docker pull yym68686/uni-api:test

One-Click Restart Docker Image

set -eu
docker pull yym68686/uni-api:latest
docker rm -f uni-api
docker run --user root -p 8001:8000 -dit --name uni-api \
  -e CONFIG_URL=http://file_url/api.yaml \
  -v ./api.yaml:/home/api.yaml \
  -v ./uniapi_db:/home/data \
  yym68686/uni-api:latest
docker logs -f uni-api

RESTful curl test

curl -X POST http://127.0.0.1:8000/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer ${API}" \
  -d '{"model": "gpt-4o","messages": [{"role": "user", "content": "Hello"}],"stream": true}'

pex linux packaging:

VERSION=$(cat VERSION)
pex -D . -r requirements.txt \
  -c uvicorn \
  --inject-args 'main:app --host 0.0.0.0 --port 8000' \
  --platform linux_x86_64-cp-3.10.12-cp310 \
  --interpreter-constraint '==3.10.*' \
  --no-strip-pex-env \
  -o uni-api-linux-x86_64-${VERSION}.pex

macOS packaging:

VERSION=$(cat VERSION)
pex -r requirements.txt \
  -c uvicorn \
  --inject-args 'main:app --host 0.0.0.0 --port 8000' \
  -o uni-api-macos-arm64-${VERSION}.pex

WARNING: Please be aware of the risk of key leakage in remote deployments. Do not abuse the service to avoid account suspension.

The Space repository requires three files: Dockerfile, README.md, and entrypoint.sh.

To run the program, you also need api.yaml (I'll use the example of storing it entirely in secrets, but you can also implement it via HTTP download). Access matching, model and channel configurations are all in the configuration file.

Operation Steps:

- Visit https://huggingface.co/new-space to create a new space. It should be a public repository; the open source license/name/description can be filled as desired.

- Visit your space's files page at https://huggingface.co/spaces/your-name/your-space-name/tree/main and upload the three files (Dockerfile, README.md, entrypoint.sh).

- Visit your space's settings page at https://huggingface.co/spaces/your-name/your-space-name/settings, find the Secrets section and create a new secret called API_YAML_CONTENT (note the uppercase). Write your api.yaml locally, then copy it directly into the secret field using UTF-8 encoding.

- Still in settings, find Factory rebuild and let it rebuild. If you modify secrets or files, or manually restart the Space, it may get stuck with no logs. Use this method to resolve such issues.

- In the upper right corner of the settings page, find the three-dot button and select "Embed this Space" to get the public link for your Space. The format is https://(your-name)-(your-space-name).hf.space (remove the parentheses).

Related File Codes:

# Dockerfile,del this line

# Use the uni-api official image

FROM yym68686/uni-api:latest

# Create data directory and set permissions

RUN mkdir -p /data && chown -R 1000:1000 /data

# Set up user and working directory

RUN useradd -m -u 1000 user

USER user

ENV HOME=/home/user \
PATH=/home/user/.local/bin:$PATH \
DISABLE_DATABASE=true

# Copy entrypoint script

COPY --chown=user entrypoint.sh /home/user/entrypoint.sh

RUN chmod +x /home/user/entrypoint.sh

# Ensure /home directory is writable (this is important!)

USER root

RUN chmod 777 /home

USER user

# Set working directory

WORKDIR /home/user

# Entry point

ENTRYPOINT ["/home/user/entrypoint.sh"]

---
title: Uni API
emoji: 🌍
colorFrom: gray
colorTo: yellow
sdk: docker
app_port: 8000
pinned: false
license: gpl-3.0
---

# entrypoint.sh,del this line

#!/bin/sh

set -e

CONFIG_FILE_PATH="/home/api.yaml" # Note this is changed to /home/api.yaml

echo "DEBUG: Entrypoint script started."

# Check if Secret exists

if [ -z "$API_YAML_CONTENT" ]; then

echo "ERROR: Secret 'API_YAML_CONTENT' does not exist or is empty. Exiting."

exit 1

else

echo "DEBUG: API_YAML_CONTENT secret found. Preparing to write..."

printf '%s\n' "$API_YAML_CONTENT" > "$CONFIG_FILE_PATH"

echo "DEBUG: Attempted to write to $CONFIG_FILE_PATH."

if [ -f "$CONFIG_FILE_PATH" ]; then

echo "DEBUG: File $CONFIG_FILE_PATH created successfully. Size: $(wc -c < "$CONFIG_FILE_PATH") bytes."

# Display the first few lines for debugging (be careful not to display sensitive information)

echo "DEBUG: First few lines (without sensitive info):"

# '|| true' keeps 'set -e' from aborting the script when grep filters out every line

head -n 3 "$CONFIG_FILE_PATH" | grep -v "api:" | grep -v "password" || true

else

echo "ERROR: File $CONFIG_FILE_PATH was NOT created."

exit 1

fi

fi

echo "DEBUG: About to execute python main.py..."

# No need to use the --config parameter as the program has a default path

cd /home

exec python main.py "$@"

You can deploy the uni-api frontend yourself, address: https://github.com/yym68686/uni-api-web

You can also use the frontend I deployed, address: https://uni-api-web.pages.dev/

We thank the following sponsors for their support:

- @PowerHunter: ¥2000

- @IM4O4: ¥100

- @ioi: ¥50

If you would like to support our project, you can sponsor us in the following ways:

- USDT-TRC20, USDT-TRC20 wallet address: TLFbqSv5pDu5he43mVmK1dNx7yBMFeN7d8

Thank you for your support!

- Why does the error "Error processing request or performing moral check: 404: No matching model found" always appear?

Setting ENABLE_MODERATION to false will fix this issue. When ENABLE_MODERATION is true, the API must be able to use the text-moderation-latest model, and if you have not provided text-moderation-latest in the provider model settings, an error will occur indicating that the model cannot be found.

- How to prioritize requests for a specific channel, how to set the priority of a channel?

Directly set the channel order in the api_keys. No other settings are required. Sample configuration file:

providers:
  - provider: ai1
    base_url: https://xxx/v1/chat/completions
    api: sk-xxx
  - provider: ai2
    base_url: https://xxx/v1/chat/completions
    api: sk-xxx

api_keys:
  - api: sk-1234
    model:
      - ai2/*
      - ai1/*

With this configuration, uni-api requests ai2 first and, if that fails, requests ai1.

- What is the behavior behind various scheduling algorithms? For example, fixed_priority, weighted_round_robin, lottery, random, round_robin, smart_round_robin?

All scheduling algorithms need to be enabled by setting api_keys.(api).preferences.SCHEDULING_ALGORITHM in the configuration file to any of the values: fixed_priority, weighted_round_robin, lottery, random, round_robin, smart_round_robin.

- fixed_priority: Fixed priority scheduling. Every request goes to the first channel that provides the requested model. In case of an error, it switches to the next channel. This is the default scheduling algorithm.

- weighted_round_robin: Weighted round-robin load balancing. Requests the channels that provide the requested model according to the weights set in api_keys.(api).model in the configuration file.

- lottery: Lottery load balancing. Randomly picks a channel that provides the requested model according to the weights set in api_keys.(api).model in the configuration file.

- round_robin: Round-robin load balancing. Requests the channels that provide the requested model in the order configured in api_keys.(api).model in the configuration file. You can check the previous question on how to set the priority of channels.

- smart_round_robin: Intelligent success rate scheduling. This is an advanced scheduling algorithm designed for channels with a large number of API Keys (hundreds, thousands, or even tens of thousands). Its core mechanism is:

  - Sorting based on historical success rate: The algorithm dynamically sorts API Keys based on their actual request success rate over the past 72 hours.

  - Intelligent grouping and load balancing: To prevent traffic from always concentrating on a few "optimal" keys, the algorithm divides all keys (including unused ones) into several groups. It distributes the keys with the highest success rates to the beginning of each group, the next highest to the second position, and so on. This ensures that the load is evenly distributed among different tiers of keys and also guarantees that new or historically underperforming keys have a chance to be tried (exploration).

  - Periodic automatic updates: After all keys in a channel have been polled once, the system automatically triggers a re-sorting, pulling the latest success rate data from the database to generate a new key sequence. The update frequency is adaptive: the larger the key pool and the lower the request volume, the longer the update cycle, and vice versa.

  - Applicable scenarios: It is highly recommended for users with a large number of API Keys to enable this algorithm to maximize the utilization of the key pool and the success rate of requests.
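The ordering ideas behind these algorithms can be sketched in a few lines of Python. This is only an illustration of the descriptions above, not uni-api's actual implementation: all function names are hypothetical, the weights and success rates are passed in as plain dictionaries, and the grouping rule (key i of the ranking goes to group i modulo the group count) is an assumption about how "best keys at the start of each group" could be realized.

```python
import random


def fixed_priority_order(channels):
    """Always try channels in their configured order (hypothetical helper)."""
    return list(channels)


def round_robin_order(channels, turn):
    """Rotate the configured order so each request starts one channel later."""
    start = turn % len(channels)
    return channels[start:] + channels[:start]


def lottery_pick(weights, rng=random):
    """Pick one channel at random, with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def smart_group_order(success_rates, group_count):
    """Rank keys by success rate, then deal them into groups like cards.

    The best keys end up first in each group, the next-best second, and so on.
    The final sequence is the groups concatenated.
    """
    ranked = sorted(success_rates, key=success_rates.get, reverse=True)
    groups = [ranked[g::group_count] for g in range(group_count)]
    return [key for group in groups for key in group]
```

For example, with four keys ranked a, b, c, d and two groups, the groups are [a, c] and [b, d], so the weaker keys c and d are still visited in every polling cycle rather than starved by a and b.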

- How should the base_url be filled in correctly?

Except for some special channels shown in the advanced configuration, all OpenAI format providers need to fill in the base_url completely, which means the base_url must end with /v1/chat/completions. If you are using GitHub models, the base_url should be filled in as https://models.inference.ai.azure.com/chat/completions, not Azure's URL.

For Azure channels, the base_url accepts the following formats: https://your-endpoint.services.ai.azure.com/models/chat/completions?api-version=2024-05-01-preview, https://your-endpoint.services.ai.azure.com/models/chat/completions, and https://your-endpoint.openai.azure.com. The first format is recommended. If api-version is not explicitly specified, the default is 2024-10-21.

- How does the model timeout time work? What is the priority of the channel-level timeout setting and the global model timeout setting?

The channel-level timeout setting has higher priority than the global model timeout setting. The priority order is: channel-level model timeout setting > channel-level default timeout setting > global model timeout setting > global default timeout setting > environment variable TIMEOUT.

By adjusting the model timeout time, you can avoid the error of some channels timing out. If you encounter the error {'error': '500', 'details': 'fetch_response_stream Read Response Timeout'}, please try to increase the model timeout time.
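The priority chain above can be read as "take the first setting that exists". The following Python sketch illustrates that lookup under stated assumptions: the function name, the use of a "default" key for the default timeout, exact model-name lookup, and the final fallback of 100 seconds are all hypothetical and are not taken from uni-api's code.

```python
import os


def resolve_timeout(model, channel_timeouts, global_timeouts, env=os.environ, fallback=100):
    """Return the first timeout found, walking the documented priority order.

    channel_timeouts / global_timeouts are plain dicts mapping a model name
    (or the assumed key "default") to a number of seconds.
    """
    candidates = (
        channel_timeouts.get(model),      # channel-level model timeout
        channel_timeouts.get("default"),  # channel-level default timeout
        global_timeouts.get(model),       # global model timeout
        global_timeouts.get("default"),   # global default timeout
        env.get("TIMEOUT"),               # environment variable TIMEOUT
    )
    for value in candidates:
        if value is not None:
            return int(value)
    return fallback  # hypothetical last resort when nothing is configured
```

With this ordering, a channel that sets only a default timeout still overrides a global per-model value, which matches the priority order stated above.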

- How does api_key_rate_limit work? How do I set the same rate limit for multiple models?

If you want to set the same frequency limit for the four models gemini-1.5-pro-latest, gemini-1.5-pro, gemini-1.5-pro-001, gemini-1.5-pro-002 simultaneously, you can set it like this:

api_key_rate_limit:
  gemini-1.5-pro: 1000/min

This matches every model whose name contains the string gemini-1.5-pro, so the frequency limit for all four models (gemini-1.5-pro-latest, gemini-1.5-pro, gemini-1.5-pro-001, gemini-1.5-pro-002) is set to 1000/min. The matching logic of the api_key_rate_limit field is illustrated by the following sample configuration:

api_key_rate_limit:
  gemini-1.5-pro: 1000/min
  gemini-1.5-pro-002: 500/min

Suppose a request arrives for the model gemini-1.5-pro-002. uni-api first tries to match the model name exactly in api_key_rate_limit. Because a rate limit is set for gemini-1.5-pro-002, that limit, 500/min, applies. If the requested model is instead gemini-1.5-pro-latest, which has no entry of its own in api_key_rate_limit, uni-api looks for a configured model name that shares a prefix with gemini-1.5-pro-latest, so the rate limit for gemini-1.5-pro-latest is 1000/min.
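The lookup described here (exact match first, then a partial match) can be sketched as follows. This is a minimal illustration, not uni-api's code: the function names are hypothetical, and preferring the longest configured name when several partial matches exist is an assumption made to keep the result deterministic.

```python
def match_rate_limit(model, limits):
    """Return the rate limit string for a model, or None if nothing matches."""
    # 1. An exact entry always wins.
    if model in limits:
        return limits[model]
    # 2. Otherwise fall back to configured names contained in the model name.
    partial = [name for name in limits if name in model]
    if partial:
        # Assumption: the longest (most specific) configured name is preferred.
        return limits[max(partial, key=len)]
    return None


def parse_rate_limit(limit):
    """Split a value such as '1000/min' into (1000, 'min')."""
    count, unit = limit.split("/")
    return int(count), unit


limits = {"gemini-1.5-pro": "1000/min", "gemini-1.5-pro-002": "500/min"}
print(match_rate_limit("gemini-1.5-pro-002", limits))     # exact entry
print(match_rate_limit("gemini-1.5-pro-latest", limits))  # partial match
```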

- I want to set channel 1 and channel 2 to random round-robin, and uni-api will request channel 3 after channel 1 and channel 2 failure. How do I set it?

uni-api supports api key as a channel, and can use this feature to manage channels by grouping them.

api_keys:
  - api: sk-xxx1
    model:
      - sk-xxx2/* # channel 1 2 use random round-robin, request channel 3 after failure
      - aws/* # channel 3
    preferences:
      SCHEDULING_ALGORITHM: fixed_priority # always request api key: sk-xxx2 first, then request channel 3 after failure
  - api: sk-xxx2
    model:
      - anthropic/claude-3-7-sonnet # channel 1
      - openrouter/claude-3-7-sonnet # channel 2
    preferences:
      SCHEDULING_ALGORITHM: random # channel 1 2 use random round-robin

- I want to use Cloudflare AI Gateway, how should I fill in the base_url?

For gemini channels, the base_url for Cloudflare AI Gateway should be filled in as https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_name}/google-ai-studio/v1beta/openai/chat/completions , where {account_id} and {gateway_name} need to be replaced with your Cloudflare account ID and Gateway name.

For Vertex channels, the base_url for Cloudflare AI Gateway should be filled in as https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_name}/google-vertex-ai , where {account_id} and {gateway_name} need to be replaced with your Cloudflare account ID and Gateway name.

- When does the api key have management permissions?

- When there is only one key, uni-api assumes personal use: that single key has management permissions and can see all sensitive channel information through the frontend.

- When there are two or more keys, you must give one or more keys the admin role. Only keys with the admin role can access sensitive information. This requirement exists to prevent the users of the other keys from also accessing sensitive information.
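The two rules above amount to a small decision function. The sketch below is illustrative only: the function name is hypothetical, and it assumes each api_keys entry is a dict with an "api" field and an optional "role" field whose value is exactly "admin", which may differ from how uni-api stores roles.

```python
def admin_keys(api_keys):
    """Return the keys that have management permissions.

    api_keys is a list of dicts shaped like the api_keys entries of the
    configuration file (assumed fields: "api" and optional "role").
    """
    # A single key means personal use: that key is the administrator.
    if len(api_keys) == 1:
        return [api_keys[0]["api"]]
    # With several keys, only those explicitly marked as admin qualify.
    return [entry["api"] for entry in api_keys if entry.get("role") == "admin"]
```

An empty result for a multi-key configuration indicates that no key has been given the admin role yet.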

- When using koyeb to deploy uni-api, if the configuration file channel does not write the model field, the startup will report an error. How to solve it?

When deploying uni-api on koyeb, if the configuration file channel does not include the model field, it will report an error on startup. This is because the default permission of api.yaml on koyeb is 0644, and uni-api does not have write permission. When uni-api tries to obtain the model field, it will attempt to modify the configuration file, which will result in an error. You can resolve this by entering chmod 0777 api.yaml in the console to grant uni-api write permission.

Load testing tool: locust

Load testing script: test/locustfile.py

mock_server: test/mock_server.go

Start load testing:

go run test/mock_server.go

# 100 10 120s

locust -f test/locustfile.py

python main.py

Load testing result:

| Type | Name | 50% | 66% | 75% | 80% | 90% | 95% | 98% | 99% | 99.9% | 99.99% | 100% | # reqs |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| POST | /v1/chat/completions (stream) | 18 | 23 | 29 | 35 | 83 | 120 | 140 | 160 | 220 | 270 | 270 | 6948 |

| | Aggregated | 18 | 23 | 29 | 35 | 83 | 120 | 140 | 160 | 220 | 270 | 270 | 6948 |

We take security seriously. If you discover any security issues, please contact us at [email protected].

Acknowledgments:

We would like to thank @ryougishiki214 for reporting a security issue, which has been resolved in v1.5.1.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for uni-api

Similar Open Source Tools

uni-api

uni-api is a project that unifies the management of large language model APIs, allowing you to call multiple backend services through a single unified API interface, converting them all to OpenAI format, and supporting load balancing. It supports various backend services such as OpenAI, Anthropic, Gemini, Vertex, Azure, xai, Cohere, Groq, Cloudflare, OpenRouter, and more. The project offers features like no front-end, pure configuration file setup, unified management of multiple backend services, support for multiple standard OpenAI format interfaces, rate limiting, automatic retry, channel cooling, fine-grained model timeout settings, and fine-grained permission control.

kafka-ml

Kafka-ML is a framework designed to manage the pipeline of Tensorflow/Keras and PyTorch machine learning models on Kubernetes. It enables the design, training, and inference of ML models with datasets fed through Apache Kafka, connecting them directly to data streams like those from IoT devices. The Web UI allows easy definition of ML models without external libraries, catering to both experts and non-experts in ML/AI.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

LlamaEdge

The LlamaEdge project makes it easy to run LLM inference apps and create OpenAI-compatible API services for the Llama2 series of LLMs locally. It provides a Rust+Wasm stack for fast, portable, and secure LLM inference on heterogeneous edge devices. The project includes source code for text generation, chatbot, and API server applications, supporting all LLMs based on the llama2 framework in the GGUF format. LlamaEdge is committed to continuously testing and validating new open-source models and offers a list of supported models with download links and startup commands. It is cross-platform, supporting various OSes, CPUs, and GPUs, and provides troubleshooting tips for common errors.

lingo

Lingo is a lightweight ML model proxy that runs on Kubernetes, allowing you to run text-completion and embedding servers without changing OpenAI client code. It supports serving OSS LLMs, is compatible with OpenAI API, plug-and-play with messaging systems, scales from zero based on load, and has zero dependencies. Namespaced with no cluster privileges needed.

ersilia

The Ersilia Model Hub is a unified platform of pre-trained AI/ML models dedicated to infectious and neglected disease research. It offers an open-source, low-code solution that provides seamless access to AI/ML models for drug discovery. Models housed in the hub come from two sources: published models from literature (with due third-party acknowledgment) and custom models developed by the Ersilia team or contributors.

langchainjs-quickstart-demo

Discover the journey of building a generative AI application using LangChain.js and Azure. This demo explores the development process from idea to production, using a RAG-based approach for a Q&A system based on YouTube video transcripts. The application allows to ask text-based questions about a YouTube video and uses the transcript of the video to generate responses. The code comes in two versions: local prototype using FAISS and Ollama with LLaMa3 model for completion and all-minilm-l6-v2 for embeddings, and Azure cloud version using Azure AI Search and GPT-4 Turbo model for completion and text-embedding-3-large for embeddings. Either version can be run as an API using the Azure Functions runtime.

Guardrails

Guardrails is a security tool designed to help developers identify and fix security vulnerabilities in their code. It provides automated scanning and analysis of code repositories to detect potential security issues, such as sensitive data exposure, injection attacks, and insecure configurations. By integrating Guardrails into the development workflow, teams can proactively address security concerns and reduce the risk of security breaches. The tool offers detailed reports and actionable recommendations to guide developers in remediation efforts, ultimately improving the overall security posture of the codebase. Guardrails supports multiple programming languages and frameworks, making it versatile and adaptable to different development environments. With its user-friendly interface and seamless integration with popular version control systems, Guardrails empowers developers to prioritize security without compromising productivity.

MegatronApp

MegatronApp is a toolchain built around the Megatron-LM training framework, offering performance tuning, slow-node detection, and training-process visualization. It includes modules like MegaScan for anomaly detection, MegaFBD for forward-backward decoupling, MegaDPP for dynamic pipeline planning, and MegaScope for visualization. The tool aims to enhance large-scale distributed training by providing valuable capabilities and insights.

llm-benchmark

LLM SQL Generation Benchmark is a tool for evaluating different Large Language Models (LLMs) on their ability to generate accurate analytical SQL queries for Tinybird. It measures SQL query correctness, execution success, performance metrics, error handling, and recovery. The benchmark includes an automated retry mechanism for error correction. It supports various providers and models through OpenRouter and can be extended to other models. The benchmark is based on a GitHub dataset with 200M rows, where each LLM must produce SQL from 50 natural language prompts. Results are stored in JSON files and presented in a web application. Users can benchmark new models by following provided instructions.

vscode-pddl

The vscode-pddl extension provides comprehensive support for Planning Domain Description Language (PDDL) in Visual Studio Code. It enables users to model planning domains, validate them, industrialize planning solutions, and run planners. The extension offers features like syntax highlighting, auto-completion, plan visualization, plan validation, plan happenings evaluation, search debugging, and integration with Planning.Domains. Users can create PDDL files, run planners, visualize plans, and debug search algorithms efficiently within VS Code.

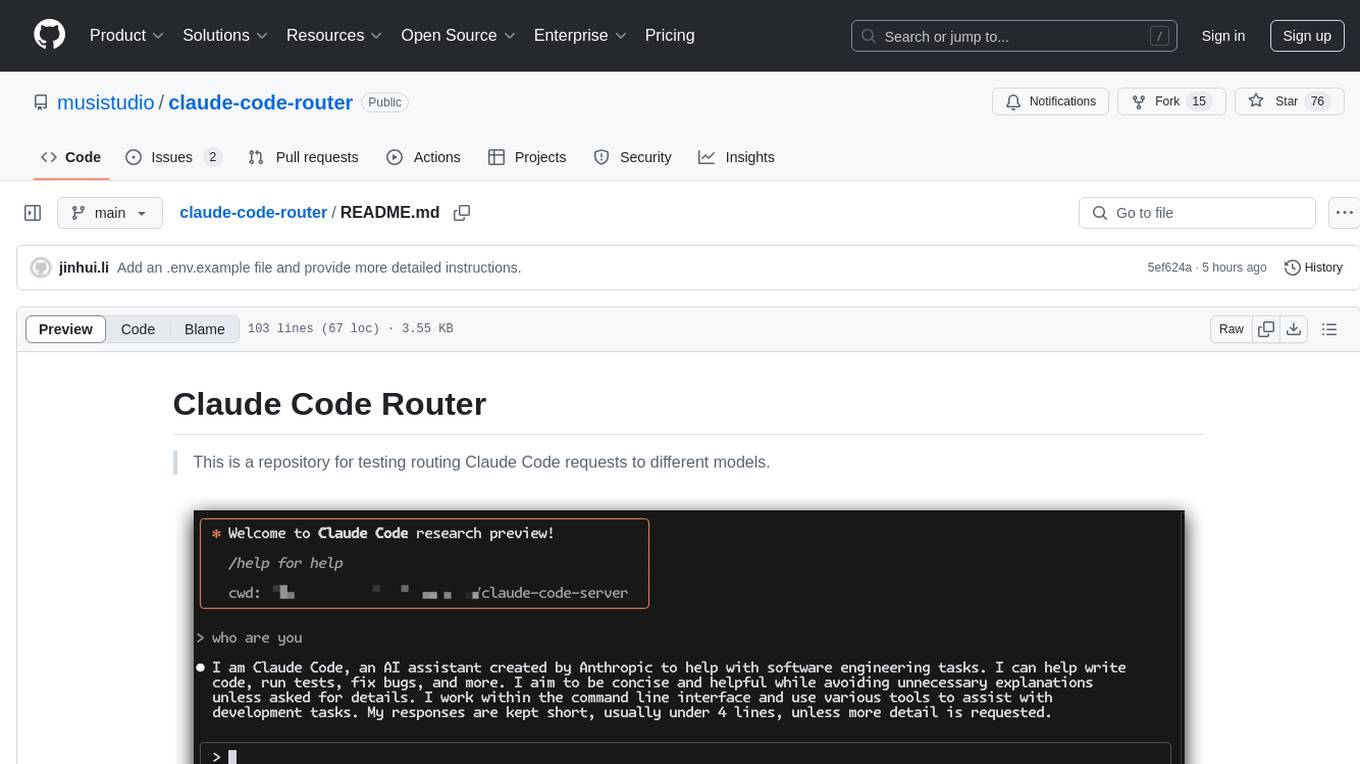

claude-code-router

This repository is for testing routing Claude Code requests to different models. It implements Normal Mode and Router Mode, using various models like qwen2.5-coder-3b-instruct, qwen-max-0125, deepseek-v3, and deepseek-r1. The project aims to reduce the cost of using Claude Code by leveraging free models and KV-Cache. Users can set appropriate ignorePatterns for the project. The Router Mode allows for the separation of tool invocation from coding tasks by using multiple models for different purposes.

MARS5-TTS

MARS5 is a novel English speech model (TTS) developed by CAMB.AI, featuring a two-stage AR-NAR pipeline with a unique NAR component. The model can generate speech for various scenarios like sports commentary and anime with just 5 seconds of audio and a text snippet. It allows steering prosody using punctuation and capitalization in the transcript. Speaker identity is specified using an audio reference file, enabling 'deep clone' for improved quality. The model can be used via torch.hub or HuggingFace, supporting both shallow and deep cloning for inference. Checkpoints are provided for AR and NAR models, with hardware requirements of 750M+450M params on GPU. Contributions to improve model stability, performance, and reference audio selection are welcome.

vespa

Vespa is a platform that performs operations such as selecting a subset of data in a large corpus, evaluating machine-learned models over the selected data, organizing and aggregating it, and returning it, typically in less than 100 milliseconds, all while the data corpus is continuously changing. It has been in development for many years and is used on a number of large internet services and apps which serve hundreds of thousands of queries from Vespa per second.

CoLLM

CoLLM is a novel method that integrates collaborative information into Large Language Models (LLMs) for recommendation. It converts recommendation data into language prompts, encodes them with both textual and collaborative information, and uses a two-step tuning method to train the model. The method incorporates user/item ID fields in prompts and employs a conventional collaborative model to generate user/item representations. CoLLM is built upon MiniGPT-4 and utilizes pretrained Vicuna weights for training.

aici

The Artificial Intelligence Controller Interface (AICI) lets you build Controllers that constrain and direct output of a Large Language Model (LLM) in real time. Controllers are flexible programs capable of implementing constrained decoding, dynamic editing of prompts and generated text, and coordinating execution across multiple, parallel generations. Controllers incorporate custom logic during the token-by-token decoding and maintain state during an LLM request. This allows diverse Controller strategies, from programmatic or query-based decoding to multi-agent conversations to execute efficiently in tight integration with the LLM itself.

For similar tasks

uni-api

uni-api is a project that unifies the management of large language model APIs, allowing you to call multiple backend services through a single unified API interface, converting them all to OpenAI format, and supporting load balancing. It supports various backend services such as OpenAI, Anthropic, Gemini, Vertex, Azure, xai, Cohere, Groq, Cloudflare, OpenRouter, and more. The project offers features like no front-end, pure configuration file setup, unified management of multiple backend services, support for multiple standard OpenAI format interfaces, rate limiting, automatic retry, channel cooling, fine-grained model timeout settings, and fine-grained permission control.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.