gptauthor

GPTAuthor is an AI tool for writing long form, multi-chapter stories given a story prompt.

Stars: 73

GPT Author is a command-line tool designed to help users write long form, multi-chapter stories by providing a story prompt and generating a synopsis and subsequent chapters using ChatGPT. Users can review and make changes to the generated content before finalizing the story output in Markdown and HTML formats. The tool aims to unleash storytelling genius by combining human input with AI-generated content, offering a seamless writing experience for creating engaging narratives.

README:

Unleash your storytelling genius: GPTAuthor is an easy to use command-line tool for writing long form, multi-chapter stories given a story prompt.

- Human written story description: You describe your story outline, writing style, characters etc in a story prompt (see an example).

- Run GPTAuthor: As described below, choosing model, temperature, and number of chapters to write.

- AI generated synopsis: Given the story prompt, GPTAuthor uses ChatGPT to automatically turn this into a synopsis that has chapter summaries for the number of chapters you specify.

- Human review of synopsis: You are given a chance to review the synopsis and (optionally) make changes, only proceeding to the next step if/when you are happy with it. If it isn't what you want, you can generate another before proceeding.

-

AI generated story: Each chapter is iteratively written by ChatGPT given the common synopsis and previous chapter. (This approach is to keep token count within limits).

The full story is written as Markdown and HTML to an

./_output/folder for your reading pleasure.

The Nov 2023 OpenAI leadership crisis - "In the heart of San Francisco, nestled among the city's tech giants and start-up hopefuls, stood the OpenAI office. A hive of activity, it buzzed with the sound of keyboards clacking, coffee machines hissing, and the occasional drone of a philosophical debate about whether AI could develop a taste for late-night taco runs. It was a typical day, or so everyone thought. Sam Altman, the CEO of OpenAI, was in his office, a space that looked more like a teenager's bedroom than the office of a tech mogul, with posters of space exploration and vintage computers adorning the walls. He was in the middle of explaining to a rubber duck on his desk the intricacies of AI alignment, a method he found surprisingly effective, when his phone buzzed with an email notification." continue reading...

Echoes of Atlantis (based on a blogpost) - "In the dimly lit halls of the British Museum, Aria Seaborne's heart raced with anticipation. Her fingers, delicate yet steady, brushed against the surface of an ancient artifact that had long eluded understanding. It was a curious object, seemingly part of a larger mechanism, its origins shrouded in mystery and its purpose lost to time. Aria, with her keen eye for the arcane, noticed an almost imperceptible seam along its side. With a gentle nudge, the artifact sprang open, revealing a compartment that housed a parchment, brittle with age." continue reading...

You can install gptauthor using pip, ideally into a Python virtual environment.

pip install gptauthorAlternatively, checkout an example notebook that uses gptauthor and you can run directly in Google Colab.

This example reads the story prompt from the example prompts-openai-drama.yaml file and writes 3 chapters using the gpt-3.5-turbo model with a temperature of 0.1. Note that you will need to locally set your OpenAI API Key environment variable.

It's recommended to experiment using the default gpt-3.5-turbo model as generating a few chapters will only cost a couple cents (as of Jan 2024). Once you are happy with the results you can try one of the more expensive gpt-4 models which will produce better quality results, be slower, and cost more to run. See the OpenAI pricing page for more details.

Set your OpenAI API Key on MacOS/Linux:

export OPENAI_API_KEY=sk-<yourkey>Or, set your OpenAI API Key on Windows:

setx OPENAI_API_KEY "sk-<yourkey>"Then run the gptauthor command:

gptauthor --story prompts-openai-drama --total-chapters 3 --llm-model gpt-3.5-turbo --llm-temperature 0.1-

--story TEXT: The name of the yaml file defining the story and prompts

-

--llm-model TEXT: The model name [default: gpt-3.5-turbo] -

--llm-temperature FLOAT: LLM temperature value (0 to 2, OpenAI default is 1) [default: 1] -

--llm-top-p FLOAT: LLM top_p probability value (0 to 2, OpenAI default is 1) [default: 1] -

--llm-use-localhost INTEGER: LLM use localhost:8081 instead of openai [default: 0] -

--total-chapters INTEGER: Total chapters to write [default: 3] -

--allow-user-input / --no-allow-user-input: Allow command line user input [default: allow-user-input] -

--version: Display gptauthor version -

--help: Show usage help

While running the app tells your the input paramers, the progress of the writing, and where the output is written to.

In progress and final output is written to the ./_output/ directory, in the sub-folders ./_output/<prompt-file-name>/<model-name>/<datetime>-<parameters>-<book-name>/.

There are several files, the main output being a Markdown version of the whole book _whole_book.md and an HTML version of the same _whole_book.html.

The prompts for creating your own story are defined in a yaml file, for example see: prompts-openai-drama.yaml.

Make a copy, fill in your story details and describe the writing style (while retaining the same structure), and run the app in the same folder as your new yaml file.

For example, if your yaml prompt file is called prompts-my-really-great-story.yaml:

export OPENAI_API_KEY=sk-<your key>

gptauthor --story prompts-my-really-great-story --total-chapters 5 --llm-model gpt-3.5-turbo --llm-temperature 0.1- The OpenAI API sometimes returns an error, in which case the app will exit. You can restart it and it will continue from where it left off as responses are cached (assuming same arguments).

- Continuity. Since each chapter only knows about the common synopsis and the previous chapter, there can be issues of continuity between chapters.

- Not tested on Windows so there could be path issues here.

- Cost estimation is based on total token but should treat input and output token counts seperately.

- The use of AI to write stories is a controversial topic. This is a tool to test the capabilities of ChatGPT and should be used responsibly.

Contributions are welcome. Please follow these steps:

- Fork the repository.

- Create a new branch for your feature or bug fix.

- Make your changes and commit them.

- Create a pull request with a description of your changes.

While an effort is made to count tokens and estimate OpenAI API costs for each run, they are just estimated and can be wrong. Check your OpenAI billing page to confirm the actual costs.

I'm sure there are bugs, please report them on the Github issues page

Have fun! And please share your results with me if you can (perhaps as a Github documentation issue), I'd love to see them.

This project is MIT licensed.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for gptauthor

Similar Open Source Tools

gptauthor

GPT Author is a command-line tool designed to help users write long form, multi-chapter stories by providing a story prompt and generating a synopsis and subsequent chapters using ChatGPT. Users can review and make changes to the generated content before finalizing the story output in Markdown and HTML formats. The tool aims to unleash storytelling genius by combining human input with AI-generated content, offering a seamless writing experience for creating engaging narratives.

Mapperatorinator

Mapperatorinator is a multi-model framework that uses spectrogram inputs to generate fully featured osu! beatmaps for all gamemodes and assist modding beatmaps. The project aims to automatically generate rankable quality osu! beatmaps from any song with a high degree of customizability. The tool is built upon osuT5 and osu-diffusion, utilizing GPU compute and instances on vast.ai for development. Users can responsibly use AI in their beatmaps with this tool, ensuring disclosure of AI usage. Installation instructions include cloning the repository, creating a virtual environment, and installing dependencies. The tool offers a Web GUI for user-friendly experience and a Command-Line Inference option for advanced configurations. Additionally, an Interactive CLI script is available for terminal-based workflow with guided setup. The tool provides generation tips and features MaiMod, an AI-driven modding tool for osu! beatmaps. Mapperatorinator tokenizes beatmaps, utilizes a model architecture based on HF Transformers Whisper model, and offers multitask training format for conditional generation. The tool ensures seamless long generation, refines coordinates with diffusion, and performs post-processing for improved beatmap quality. Super timing generator enhances timing accuracy, and LoRA fine-tuning allows adaptation to specific styles or gamemodes. The project acknowledges credits and related works in the osu! community.

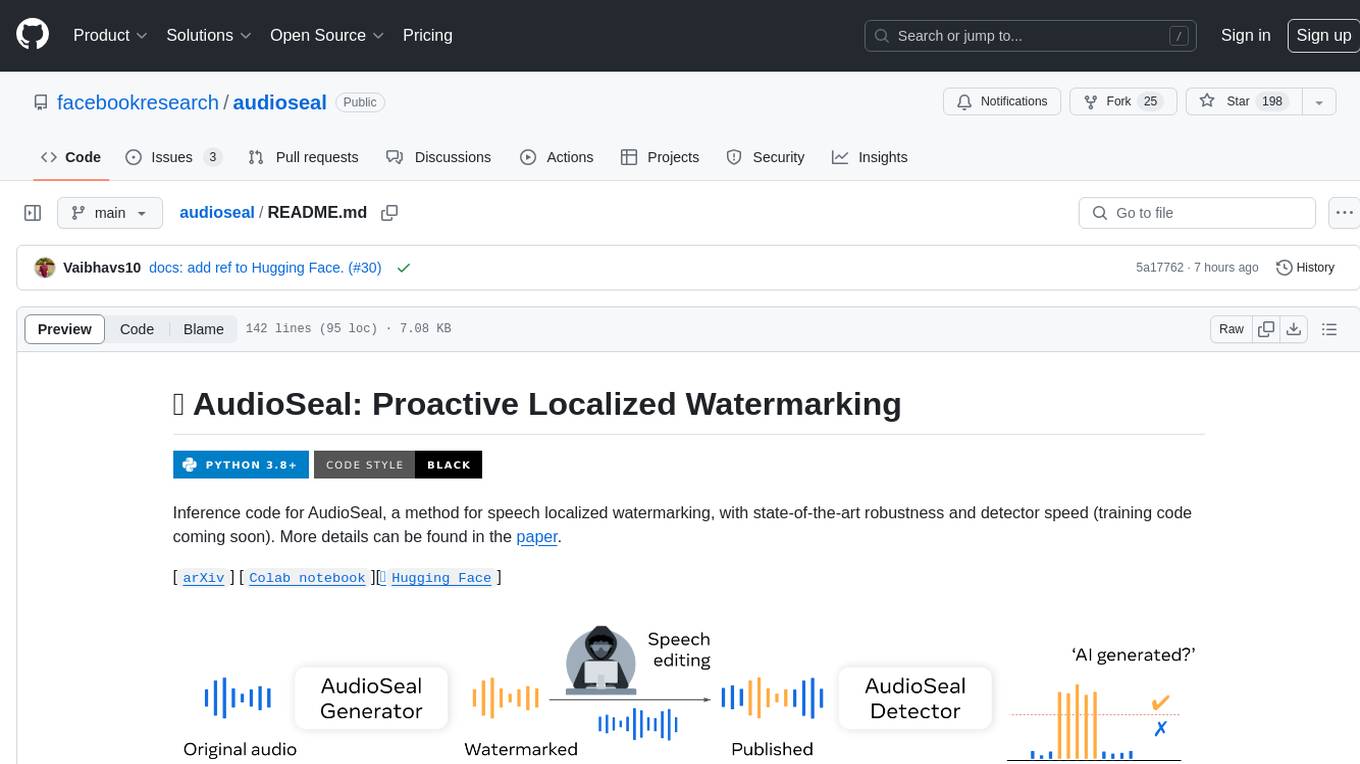

audioseal

AudioSeal is a method for speech localized watermarking, designed with state-of-the-art robustness and detector speed. It jointly trains a generator to embed a watermark in audio and a detector to detect watermarked fragments in longer audios, even in the presence of editing. The tool achieves top-notch detection performance at the sample level, generates minimal alteration of signal quality, and is robust to various audio editing types. With a fast, single-pass detector, AudioSeal surpasses existing models in speed, making it ideal for large-scale and real-time applications.

GhostOS

GhostOS is an AI Agent framework designed to replace JSON Schema with a Turing-complete code interaction interface (Moss Protocol). It aims to create intelligent entities capable of continuous learning and growth through code generation and project management. The framework supports various capabilities such as turning Python files into web agents, real-time voice conversation, body movements control, and emotion expression. GhostOS is still in early experimental development and focuses on out-of-the-box capabilities for AI agents.

llm.c

LLM training in simple, pure C/CUDA. There is no need for 245MB of PyTorch or 107MB of cPython. For example, training GPT-2 (CPU, fp32) is ~1,000 lines of clean code in a single file. It compiles and runs instantly, and exactly matches the PyTorch reference implementation. I chose GPT-2 as the first working example because it is the grand-daddy of LLMs, the first time the modern stack was put together.

MARS5-TTS

MARS5 is a novel English speech model (TTS) developed by CAMB.AI, featuring a two-stage AR-NAR pipeline with a unique NAR component. The model can generate speech for various scenarios like sports commentary and anime with just 5 seconds of audio and a text snippet. It allows steering prosody using punctuation and capitalization in the transcript. Speaker identity is specified using an audio reference file, enabling 'deep clone' for improved quality. The model can be used via torch.hub or HuggingFace, supporting both shallow and deep cloning for inference. Checkpoints are provided for AR and NAR models, with hardware requirements of 750M+450M params on GPU. Contributions to improve model stability, performance, and reference audio selection are welcome.

chronon

Chronon is a platform that simplifies and improves ML workflows by providing a central place to define features, ensuring point-in-time correctness for backfills, simplifying orchestration for batch and streaming pipelines, offering easy endpoints for feature fetching, and guaranteeing and measuring consistency. It offers benefits over other approaches by enabling the use of a broad set of data for training, handling large aggregations and other computationally intensive transformations, and abstracting away the infrastructure complexity of data plumbing.

aici

The Artificial Intelligence Controller Interface (AICI) lets you build Controllers that constrain and direct output of a Large Language Model (LLM) in real time. Controllers are flexible programs capable of implementing constrained decoding, dynamic editing of prompts and generated text, and coordinating execution across multiple, parallel generations. Controllers incorporate custom logic during the token-by-token decoding and maintain state during an LLM request. This allows diverse Controller strategies, from programmatic or query-based decoding to multi-agent conversations to execute efficiently in tight integration with the LLM itself.

nanoPerplexityAI

nanoPerplexityAI is an open-source implementation of a large language model service that fetches information from Google. It involves a simple architecture where the user query is checked by the language model, reformulated for Google search, and an answer is generated and saved in a markdown file. The tool requires minimal setup and is designed for easy visualization of answers.

ezkl

EZKL is a library and command-line tool for doing inference for deep learning models and other computational graphs in a zk-snark (ZKML). It enables the following workflow: 1. Define a computational graph, for instance a neural network (but really any arbitrary set of operations), as you would normally in pytorch or tensorflow. 2. Export the final graph of operations as an .onnx file and some sample inputs to a .json file. 3. Point ezkl to the .onnx and .json files to generate a ZK-SNARK circuit with which you can prove statements such as: > "I ran this publicly available neural network on some private data and it produced this output" > "I ran my private neural network on some public data and it produced this output" > "I correctly ran this publicly available neural network on some public data and it produced this output" In the backend we use the collaboratively-developed Halo2 as a proof system. The generated proofs can then be verified with much less computational resources, including on-chain (with the Ethereum Virtual Machine), in a browser, or on a device.

BentoDiffusion

BentoDiffusion is a BentoML example project that demonstrates how to serve and deploy diffusion models in the Stable Diffusion (SD) family. These models are specialized in generating and manipulating images based on text prompts. The project provides a guide on using SDXL Turbo as an example, along with instructions on prerequisites, installing dependencies, running the BentoML service, and deploying to BentoCloud. Users can interact with the deployed service using Swagger UI or other methods. Additionally, the project offers the option to choose from various diffusion models available in the repository for deployment.

brokk

Brokk is a code assistant designed to understand code semantically, allowing LLMs to work effectively on large codebases. It offers features like agentic search, summarizing related classes, parsing stack traces, adding source for usages, and autonomously fixing errors. Users can interact with Brokk through different panels and commands, enabling them to manipulate context, ask questions, search codebase, run shell commands, and more. Brokk helps with tasks like debugging regressions, exploring codebase, AI-powered refactoring, and working with dependencies. It is particularly useful for making complex, multi-file edits with o1pro.

generative-ai-sagemaker-cdk-demo

This repository showcases how to deploy generative AI models from Amazon SageMaker JumpStart using the AWS CDK. Generative AI is a type of AI that can create new content and ideas, such as conversations, stories, images, videos, and music. The repository provides a detailed guide on deploying image and text generative AI models, utilizing pre-trained models from SageMaker JumpStart. The web application is built on Streamlit and hosted on Amazon ECS with Fargate. It interacts with the SageMaker model endpoints through Lambda functions and Amazon API Gateway. The repository also includes instructions on setting up the AWS CDK application, deploying the stacks, using the models, and viewing the deployed resources on the AWS Management Console.

FigStep

FigStep is a black-box jailbreaking algorithm against large vision-language models (VLMs). It feeds harmful instructions through the image channel and uses benign text prompts to induce VLMs to output contents that violate common AI safety policies. The tool highlights the vulnerability of VLMs to jailbreaking attacks, emphasizing the need for safety alignments between visual and textual modalities.

ersilia

The Ersilia Model Hub is a unified platform of pre-trained AI/ML models dedicated to infectious and neglected disease research. It offers an open-source, low-code solution that provides seamless access to AI/ML models for drug discovery. Models housed in the hub come from two sources: published models from literature (with due third-party acknowledgment) and custom models developed by the Ersilia team or contributors.

CoLLM

CoLLM is a novel method that integrates collaborative information into Large Language Models (LLMs) for recommendation. It converts recommendation data into language prompts, encodes them with both textual and collaborative information, and uses a two-step tuning method to train the model. The method incorporates user/item ID fields in prompts and employs a conventional collaborative model to generate user/item representations. CoLLM is built upon MiniGPT-4 and utilizes pretrained Vicuna weights for training.

For similar tasks

Long-Novel-GPT

Long-Novel-GPT is a long novel generator based on large language models like GPT. It utilizes a hierarchical outline/chapter/text structure to maintain the coherence of long novels. It optimizes API calls cost through context management and continuously improves based on self or user feedback until reaching the set goal. The tool aims to continuously refine and build novel content based on user-provided initial ideas, ultimately generating long novels at the level of human writers.

gptauthor

GPT Author is a command-line tool designed to help users write long form, multi-chapter stories by providing a story prompt and generating a synopsis and subsequent chapters using ChatGPT. Users can review and make changes to the generated content before finalizing the story output in Markdown and HTML formats. The tool aims to unleash storytelling genius by combining human input with AI-generated content, offering a seamless writing experience for creating engaging narratives.

LLMAI-writer

LLMAI-writer is a powerful AI tool for assisting in novel writing, utilizing state-of-the-art large language models to help writers brainstorm, plan, and create novels. Whether you are an experienced writer or a beginner, LLMAI-writer can help you efficiently complete the writing process.

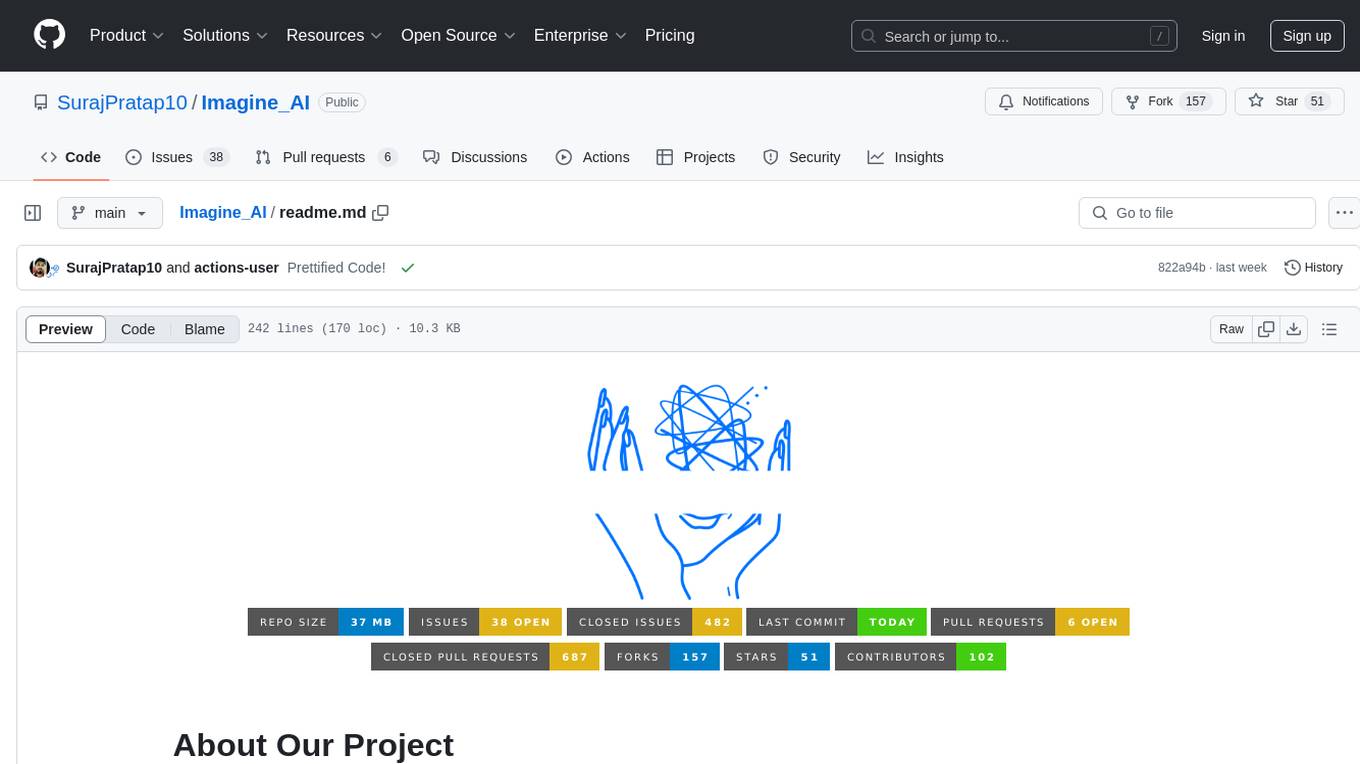

Imagine_AI

IMAGINE - AI is a groundbreaking image generator tool that leverages the power of OpenAI's DALL-E 2 API library to create extraordinary visuals. Developed using Node.js and Express, this tool offers a transformative way to unleash artistic creativity and imagination by generating unique and captivating images through simple prompts or keywords.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

ComfyUI-IF_AI_tools

ComfyUI-IF_AI_tools is a set of custom nodes for ComfyUI that allows you to generate prompts using a local Large Language Model (LLM) via Ollama. This tool enables you to enhance your image generation workflow by leveraging the power of language models.

ai-game-development-tools

Here we will keep track of the AI Game Development Tools, including LLM, Agent, Code, Writer, Image, Texture, Shader, 3D Model, Animation, Video, Audio, Music, Singing Voice and Analytics. 🔥 * Tool (AI LLM) * Game (Agent) * Code * Framework * Writer * Image * Texture * Shader * 3D Model * Avatar * Animation * Video * Audio * Music * Singing Voice * Speech * Analytics * Video Tool

For similar jobs

gptauthor

GPT Author is a command-line tool designed to help users write long form, multi-chapter stories by providing a story prompt and generating a synopsis and subsequent chapters using ChatGPT. Users can review and make changes to the generated content before finalizing the story output in Markdown and HTML formats. The tool aims to unleash storytelling genius by combining human input with AI-generated content, offering a seamless writing experience for creating engaging narratives.

LLMAI-writer

LLMAI-writer is a powerful AI tool for assisting in novel writing, utilizing state-of-the-art large language models to help writers brainstorm, plan, and create novels. Whether you are an experienced writer or a beginner, LLMAI-writer can help you efficiently complete the writing process.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save it to JSON and Excel files, and perform initial data analysis and image captions. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.