guidance-for-a-multi-tenant-generative-ai-gateway-with-cost-and-usage-tracking-on-aws

This Guidance demonstrates how to build an internal Software-as-a-Service (SaaS) platform that provides access to foundation models, like those available through Amazon Bedrock, to different business units or teams within your organization.


This repository provides guidance on building a multi-tenant SaaS solution for accessing foundation models using Amazon Bedrock and Amazon SageMaker. It helps enterprise IT teams track usage and costs of foundation models, regulate access, and provide visibility to cost centers. The solution includes an API Gateway design pattern for standardization and governance, enabling loose coupling between model consumers and endpoint services. The CDK Stack deploys resources for private networking, API Gateway, Lambda functions, a DynamoDB table, EventBridge, S3 buckets, and CloudWatch Logs.

README:

In this repository, we show you how to build a multi-tenant SaaS solution to access foundation models with Amazon Bedrock and Amazon SageMaker.

Enterprise IT teams may need to track the usage of foundation models (FMs) across teams, charge back costs, and provide visibility to the relevant cost center in the line of business (LOB). They may also need to regulate access to different models per team, for example when only specific FMs are approved for use.

An internal software as a service (SaaS) for foundation models can address governance requirements while providing a simple and consistent interface for end users. An API gateway is a common design pattern that enables consumption of services with standardization and governance. It can provide loose coupling between model consumers and the model endpoint service, which gives flexibility to adapt to changing model versions, architectures, and invocation methods.

Multiple tenants within an enterprise could simply correspond to multiple teams or projects accessing LLMs via REST APIs, just like other SaaS services. IT teams can add governance and controls over this SaaS layer. In this CDK example, we focus specifically on showcasing multiple tenants with different cost centers accessing the service via API Gateway. An internal service is responsible for tracking usage and cost per tenant and aggregating that cost for reporting. The CDK template provided here deploys all the required resources to the AWS account.

The CDK Stack provides the following deployments:

- Private Networking environment with VPC, Private Subnets, VPC Endpoints for Lambda, API Gateway, and Amazon Bedrock

- API Gateway REST API

- API Gateway Usage Plan

- API Gateway Key

- Lambda functions to list foundation models on Bedrock

- Lambda functions to invoke models on Bedrock and SageMaker

- Lambda functions to invoke models on Bedrock and SageMaker with streaming response

- DynamoDB table for saving streaming responses asynchronously

- Lambda function to aggregate usage and cost tracking

- EventBridge to trigger the cost aggregation on a regular frequency

- S3 buckets to store the cost tracking logs

- CloudWatch Logs to collect logs from Lambda invocations


The sample notebook in the notebooks folder can be used to invoke Bedrock as any one of the teams/cost centers. API Gateway then routes the request to the Lambda function that invokes Bedrock models or SageMaker-hosted models and logs the usage metrics to CloudWatch. EventBridge triggers the cost-tracking Lambda function at a regular frequency to aggregate metrics from the CloudWatch logs and generate aggregate usage and cost metrics for the chosen granularity level. The metrics are stored in S3 and can be further visualized with custom reports.
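The aggregation step described above can be sketched as follows. This is a minimal illustration rather than the repository's Lambda code: the per-invocation record layout (a dict with `team_id`, `model_id`, `input_tokens`, and `output_tokens`) and the function name `aggregate_usage` are assumptions made for the example.

```python
from collections import defaultdict


def aggregate_usage(records):
    """Sum tokens and count invocations per (team_id, model_id) pair.

    `records` is an iterable of dicts. The field names are assumed for
    this sketch and are not taken from the repository's log format.
    """
    totals = defaultdict(
        lambda: {"input_tokens": 0, "output_tokens": 0, "invocations": 0}
    )
    for rec in records:
        key = (rec["team_id"], rec["model_id"])
        totals[key]["input_tokens"] += rec["input_tokens"]
        totals[key]["output_tokens"] += rec["output_tokens"]
        totals[key]["invocations"] += 1
    return dict(totals)


# Hypothetical records, as they might look after parsing the usage logs.
sample_records = [
    {"team_id": "tenant1", "model_id": "anthropic.claude-v2",
     "input_tokens": 100, "output_tokens": 200},
    {"team_id": "tenant1", "model_id": "anthropic.claude-v2",
     "input_tokens": 2, "output_tokens": 0},
    {"team_id": "tenant2", "model_id": "anthropic.claude-v2",
     "input_tokens": 54, "output_tokens": 220},
]

usage = aggregate_usage(sample_records)
```

Grouping by the `(team_id, model_id)` pair keeps one row per tenant and model, which is the granularity a per-cost-center report needs.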

The CDK Stack creates a REST API compliant with the OpenAPI specification.

The solution currently supports both REST invocation and streaming invocation with long polling for Bedrock and SageMaker.

    openapi: 3.0.1
    info:
      title: "<REST_API_NAME>"
      version: '2023-12-13T12:12:15Z'
    servers:
    - url: https://<HOST>.execute-api.<REGION>.amazonaws.com/{basePath}
      variables:
        basePath:
          default: prod
    paths:
      "/list_foundation_models":
        get:
          responses:
            '401':
              description: 401 response
              headers:
                Access-Control-Allow-Origin:
                  schema:
                    type: string
              content:
                application/json:
                  schema:
                    "$ref": "#/components/schemas/Error"
          security:
          - api_key: []
      "/invoke_model":
        post:  # assumed HTTP method, implied by the request body
          requestBody:
            required: true
            content:
              application/json:
                schema:
                  $ref: '#/components/schemas/InvokeModelRequest'
          parameters:
          - name: model_id
            in: query
            required: true
            schema:
              type: string
            description: Id of the base model to invoke
          - name: model_arn
            in: query
            required: true
            schema:
              type: string
            description: ARN of the custom model in Amazon Bedrock
          - name: requestId
            in: query
            required: false
            schema:
              type: string
            description: Request ID for long-polling functionality. Requires streaming=true
          - name: team_id
            in: header
            required: true
            schema:
              type: string
          - name: messages_api
            in: header
            required: false
            schema:
              type: string
          - name: streaming
            in: header
            required: false
            schema:
              type: string
          - name: type
            in: header
            required: false
            schema:
              type: string
          responses:
            '401':
              description: 401 response
              headers:
                Access-Control-Allow-Origin:
                  schema:
                    type: string
              content:
                application/json:
                  schema:
                    "$ref": "#/components/schemas/Error"
          security:
          - api_key: []
    components:
      schemas:
        InvokeModelRequest:
          type: object
          required:
          - inputs
          - parameters
          properties:
            inputs:
              $ref: '#/components/schemas/Prompt'
            parameters:
              $ref: '#/components/schemas/ModelParameters'
        Prompt:
          type: object
          example:
          - role: 'user'
            content: 'What is Amazon Bedrock?'
        ModelParameters:
          type: object
          properties:
            maxTokens:
              type: integer
              required: false
            temperature:
              type: number
              required: false
            topP:
              type: number
              required: false
            stopSequences:
              type: array
              required: false
              items:
                type: string
            system:
              type: string
              required: false
        Error:
          title: Error Schema
          type: object
          properties:
            message:
              type: string
      securitySchemes:
        api_key:
          type: apiKey
          name: x-api-key
          in: header
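As a rough illustration of the specification above, the following sketch builds (but does not send) a request to `/invoke_model` using only the Python standard library. The host name, API key value, and the helper name `build_invoke_request` are placeholders invented for the example. The sketch also assumes that the operation is a POST and that supplying `model_id` alone is enough for a base model, even though the specification marks `model_arn` as required too.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request


def build_invoke_request(base_url, api_key, team_id, model_id, prompt, parameters=None):
    """Build a POST request for /invoke_model without sending it."""
    url = f"{base_url}/invoke_model?{urlencode({'model_id': model_id})}"
    body = {"inputs": prompt, "parameters": parameters or {}}
    headers = {
        "Content-Type": "application/json",
        "x-api-key": api_key,  # API key header from the security scheme
        "team_id": team_id,    # required header identifying the tenant
    }
    return Request(url, data=json.dumps(body).encode("utf-8"),
                   headers=headers, method="POST")


request = build_invoke_request(
    # Placeholder host; use the URL of your deployed stage.
    base_url="https://example-host.execute-api.us-east-1.amazonaws.com/prod",
    api_key="EXAMPLE_KEY",
    team_id="tenant1",
    model_id="anthropic.claude-v2",
    prompt=[{"role": "user", "content": "What is Amazon Bedrock?"}],
    parameters={"maxTokens": 256, "temperature": 0.2},
)
# To send it: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes it easy to inspect the URL, headers, and body before calling the gateway.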

| team_id | model_id | input_tokens | output_tokens | invocations | input_cost | output_cost |
|---|---|---|---|---|---|---|
| tenant1 | amazon.titan-tg1-large | 24000 | 2473 | 1000 | 0.0072 | 0.00099 |
| tenant1 | anthropic.claude-v2 | 2448 | 4800 | 24 | 0.02698 | 0.15686 |
| tenant2 | amazon.titan-tg1-large | 35000 | 52500 | 350 | 0.0105 | 0.021 |
| tenant2 | ai21.j2-grande-instruct | 4590 | 9000 | 45 | 0.05738 | 0.1125 |
| tenant2 | anthropic.claude-v2 | 1080 | 4400 | 20 | 0.0119 | 0.14379 |
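The cost columns in the table above are consistent with multiplying each token count by a price per 1,000 tokens and rounding to five decimal places. The sketch below reproduces that arithmetic. The prices in `PRICE_PER_1K` are back-calculated from the sample rows for illustration only; they are assumptions, not a statement of current pricing, and `token_cost` is a hypothetical helper name.

```python
from decimal import Decimal, ROUND_HALF_UP

# (input price, output price) per 1,000 tokens, inferred from the sample rows.
PRICE_PER_1K = {
    "amazon.titan-tg1-large": (Decimal("0.0003"), Decimal("0.0004")),
    "anthropic.claude-v2": (Decimal("0.01102"), Decimal("0.03268")),
    "ai21.j2-grande-instruct": (Decimal("0.0125"), Decimal("0.0125")),
}


def token_cost(model_id, input_tokens, output_tokens):
    """Return (input_cost, output_cost), each rounded to five decimal places."""
    in_price, out_price = PRICE_PER_1K[model_id]
    precision = Decimal("0.00001")
    input_cost = (Decimal(input_tokens) / 1000 * in_price).quantize(
        precision, rounding=ROUND_HALF_UP
    )
    output_cost = (Decimal(output_tokens) / 1000 * out_price).quantize(
        precision, rounding=ROUND_HALF_UP
    )
    return input_cost, output_cost


# Matches the tenant1 / anthropic.claude-v2 row: 0.02698 and 0.15686.
claude_costs = token_cost("anthropic.claude-v2", 2448, 4800)
# Matches the tenant2 / ai21.j2-grande-instruct row: 0.05738 and 0.1125.
j2_costs = token_cost("ai21.j2-grande-instruct", 4590, 9000)
```

`Decimal` is used instead of `float` so that values sitting exactly on a rounding boundary, such as 0.057375, round the same way every time.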

The following examples illustrate the structure of the configuration file. Refer to setup/configs.json for the most up-to-date version of the file. The # comments in the examples are explanatory only; JSON does not support comments, so leave them out of the actual file.

Edit the global configs used in the CDK Stack. For each organizational unit that requires a dedicated multi-tenant SaaS environment, create an entry in setup/configs.json:

    [
      {
        "STACK_PREFIX": "", # unit 1 with dedicated SaaS resources
        "BEDROCK_ENDPOINT": "https://bedrock-runtime.{}.amazonaws.com", # bedrock-runtime endpoint used for invoking Amazon Bedrock
        "BEDROCK_REQUIREMENTS": "boto3>=1.34.62 awscli>=1.32.62 botocore>=1.34.62", # Requirements for Amazon Bedrock
        "LANGCHAIN_REQUIREMENTS": "aws-lambda-powertools langchain==0.1.12 pydantic PyYaml", # python modules installed for langchain layer
        "PANDAS_REQUIREMENTS": "pandas", # python modules installed for pandas layer
        "VPC_CIDR": "10.10.0.0/16", # CIDR used for the private VPC environment
        "API_THROTTLING_RATE": 10000, # Throttling limit assigned to the usage plan
        "API_BURST_RATE": 5000 # Burst limit assigned to the usage plan
      },
      {
        "STACK_PREFIX": "", # unit 2 with dedicated SaaS resources
        "BEDROCK_ENDPOINT": "https://bedrock-runtime.{}.amazonaws.com", # bedrock-runtime endpoint used for invoking Amazon Bedrock
        "BEDROCK_REQUIREMENTS": "boto3>=1.34.62 awscli>=1.32.62 botocore>=1.34.62", # Requirements for Amazon Bedrock
        "LANGCHAIN_REQUIREMENTS": "aws-lambda-powertools langchain==0.1.12 pydantic PyYaml", # python modules installed for langchain layer
        "PANDAS_REQUIREMENTS": "pandas", # python modules installed for pandas layer
        "VPC_CIDR": "10.20.0.0/16", # CIDR used for the private VPC environment
        "API_THROTTLING_RATE": 10000,
        "API_BURST_RATE": 5000
      }
    ]
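Before deploying, it can help to sanity-check the entries. The sketch below is one possible approach, not part of the repository: the set of keys treated as required, the function name `check_configs`, and the `unit1`/`unit2` prefixes are assumptions. It also assumes that the `{}` placeholder in `BEDROCK_ENDPOINT` is filled with the AWS Region.

```python
import ipaddress
import json

# Keys this sketch treats as mandatory (an assumption for illustration).
REQUIRED_KEYS = {
    "STACK_PREFIX", "BEDROCK_ENDPOINT", "VPC_CIDR",
    "API_THROTTLING_RATE", "API_BURST_RATE",
}


def check_configs(entries, region):
    """Validate config entries and resolve the Region-specific endpoint."""
    resolved = []
    for entry in entries:
        missing = REQUIRED_KEYS - entry.keys()
        if missing:
            raise ValueError(f"missing keys: {sorted(missing)}")
        # ip_network raises ValueError when the CIDR is malformed.
        network = ipaddress.ip_network(entry["VPC_CIDR"])
        # Assumption: "{}" is replaced by the Region name.
        endpoint = entry["BEDROCK_ENDPOINT"].format(region)
        resolved.append({
            "prefix": entry["STACK_PREFIX"],
            "network": network,
            "endpoint": endpoint,
        })
    return resolved


# Comment-free JSON, as it would appear in the actual file.
raw = """
[
  {"STACK_PREFIX": "unit1",
   "BEDROCK_ENDPOINT": "https://bedrock-runtime.{}.amazonaws.com",
   "VPC_CIDR": "10.10.0.0/16",
   "API_THROTTLING_RATE": 10000, "API_BURST_RATE": 5000},
  {"STACK_PREFIX": "unit2",
   "BEDROCK_ENDPOINT": "https://bedrock-runtime.{}.amazonaws.com",
   "VPC_CIDR": "10.20.0.0/16",
   "API_THROTTLING_RATE": 10000, "API_BURST_RATE": 5000}
]
"""

configs = check_configs(json.loads(raw), region="us-east-1")
```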

Execute the following commands:

    chmod +x deploy_stack.sh
    ./deploy_stack.sh

Edit the global configs used in the CDK Stack. For each organizational unit that requires a dedicated API key associated with an already created API Gateway REST API, create an entry in setup/configs.json by specifying API_GATEWAY_ID and API_GATEWAY_RESOURCE_ID:

    [
      {
        "STACK_PREFIX": "", # unit 1 with dedicated SaaS resources
        "API_GATEWAY_ID": "", # REST API ID
        "API_GATEWAY_RESOURCE_ID": "", # Resource ID of the REST API
        "API_THROTTLING_RATE": 10000, # Throttling limit assigned to the usage plan
        "API_BURST_RATE": 5000 # Burst limit assigned to the usage plan
      }
    ]

Edit the global configs used in the CDK Stack. For each organizational unit that requires a dedicated API key associated with an already created API Gateway REST API, create an entry in setup/configs.json by specifying PARENT_STACK_PREFIX:

    [
      {
        "STACK_PREFIX": "", # unit 1 with dedicated SaaS resources
        "PARENT_STACK_PREFIX": "", # parent unit whose configuration you want to import
        "API_THROTTLING_RATE": 10000, # Throttling limit assigned to the usage plan
        "API_BURST_RATE": 5000 # Burst limit assigned to the usage plan
      }
    ]

Execute the following commands:

    chmod +x deploy_stack.sh
    ./deploy_stack.sh

Add FMs through Amazon SageMaker:

We can expose foundation models hosted on Amazon SageMaker by providing the endpoint names as a dictionary serialized in a string, as shown in the example below:

    [
      {
        "STACK_PREFIX": "", # unit 1 with dedicated SaaS resources
        "BEDROCK_ENDPOINT": "https://bedrock-runtime.{}.amazonaws.com", # bedrock-runtime endpoint used for invoking Amazon Bedrock
        "BEDROCK_REQUIREMENTS": "boto3>=1.34.62 awscli>=1.32.62 botocore>=1.34.62", # Requirements for Amazon Bedrock
        "LANGCHAIN_REQUIREMENTS": "aws-lambda-powertools langchain==0.1.12 pydantic PyYaml", # python modules installed for langchain layer
        "PANDAS_REQUIREMENTS": "pandas", # python modules installed for pandas layer
        "VPC_CIDR": "10.10.0.0/16", # CIDR used for the private VPC environment
        "API_THROTTLING_RATE": 10000, # Throttling limit assigned to the usage plan
        "API_BURST_RATE": 5000, # Burst limit assigned to the usage plan
        "SAGEMAKER_ENDPOINTS": "{'Mixtral 8x7B': 'Mixtral-SM-Endpoint'}" # List of SageMaker endpoints
      }
    ]
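Note that the `SAGEMAKER_ENDPOINTS` value uses single quotes inside the string, so it is not valid JSON on its own and `json.loads` would reject it. One way to turn it into a dictionary is Python's `ast.literal_eval`, as in the sketch below. How the stack itself parses the value is not shown here, and `parse_sagemaker_endpoints` is a hypothetical helper name.

```python
import ast


def parse_sagemaker_endpoints(value):
    """Parse the SAGEMAKER_ENDPOINTS string into a dict of names."""
    if not value:
        return {}
    # literal_eval only evaluates Python literals, never arbitrary code.
    endpoints = ast.literal_eval(value)
    if not isinstance(endpoints, dict):
        raise ValueError("SAGEMAKER_ENDPOINTS must describe a dictionary")
    return endpoints


endpoints = parse_sagemaker_endpoints("{'Mixtral 8x7B': 'Mixtral-SM-Endpoint'}")
```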

We can provide the InferenceComponentName specification for the model invocation. Refer to the notebook 01_bedrock_api.ipynb for an example.

Amazon SageMaker Hosting provides flexibility in the definition of the inference container. This solution currently supports the general-purpose inference scripts provided by SageMaker JumpStart and the Hugging Face TGI container.

For custom inference scripts, you must adapt the Lambda functions invoke_model and invoke_model_streaming.
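To give a sense of what such an adaptation involves, the sketch below translates the gateway's request parameters into a Hugging Face TGI-style payload and reads a TGI-style response. It is an illustration under assumptions, not the repository's code: the function names and the parameter mapping are hypothetical, and a custom inference script would need its own pair of translation functions.

```python
# Gateway parameter name -> TGI parameter name (mapping assumed for this sketch).
PARAMETER_MAPPING = {
    "maxTokens": "max_new_tokens",
    "temperature": "temperature",
    "topP": "top_p",
    "stopSequences": "stop",
}


def to_tgi_payload(prompt, parameters):
    """Translate gateway-style parameters into a TGI-style request body."""
    tgi_parameters = {
        PARAMETER_MAPPING[name]: value
        for name, value in parameters.items()
        if name in PARAMETER_MAPPING  # unsupported parameters are dropped
    }
    return {"inputs": prompt, "parameters": tgi_parameters}


def from_tgi_response(response):
    """Extract generated text from a TGI-style response list."""
    return response[0]["generated_text"]


payload = to_tgi_payload(
    "What is Amazon Bedrock?",
    {"maxTokens": 128, "topP": 0.9, "system": "not forwarded in this sketch"},
)
text = from_tgi_response([{"generated_text": "Amazon Bedrock is a managed service."}])
```

Isolating the request and response translation in two small functions keeps the rest of the invocation logic unchanged when the container format differs.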


For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for guidance-for-a-multi-tenant-generative-ai-gateway-with-cost-and-usage-tracking-on-aws

Similar Open Source Tools

guidance-for-a-multi-tenant-generative-ai-gateway-with-cost-and-usage-tracking-on-aws

This repository provides guidance on building a multi-tenant SaaS solution for accessing foundation models using Amazon Bedrock and Amazon SageMaker. It helps enterprise IT teams track usage and costs of foundation models, regulate access, and provide visibility to cost centers. The solution includes an API Gateway design pattern for standardization and governance, enabling loose coupling between model consumers and endpoint services. The CDK Stack deploys resources for private networking, API Gateway, Lambda functions, DynamoDB table, EventBridge, S3 buckets, and Cloudwatch logs.

VectorETL

VectorETL is a lightweight ETL framework designed to assist Data & AI engineers in processing data for AI applications quickly. It streamlines the conversion of diverse data sources into vector embeddings and storage in various vector databases. The framework supports multiple data sources, embedding models, and vector database targets, simplifying the creation and management of vector search systems for semantic search, recommendation systems, and other vector-based operations.

ModelCache

Codefuse-ModelCache is a semantic cache for large language models (LLMs) that aims to optimize services by introducing a caching mechanism. It helps reduce the cost of inference deployment, improve model performance and efficiency, and provide scalable services for large models. The project facilitates sharing and exchanging technologies related to large model semantic cache through open-source collaboration.

open-responses

OpenResponses API provides enterprise-grade AI capabilities through a powerful API, simplifying development and deployment while ensuring complete data control. It offers automated tracing, integrated RAG for contextual information retrieval, pre-built tool integrations, self-hosted architecture, and an OpenAI-compatible interface. The toolkit addresses development challenges like feature gaps and integration complexity, as well as operational concerns such as data privacy and operational control. Engineering teams can benefit from improved productivity, production readiness, compliance confidence, and simplified architecture by choosing OpenResponses.

CodeFuse-ModelCache

Codefuse-ModelCache is a semantic cache for large language models (LLMs) that aims to optimize services by introducing a caching mechanism. It helps reduce the cost of inference deployment, improve model performance and efficiency, and provide scalable services for large models. The project caches pre-generated model results to reduce response time for similar requests and enhance user experience. It integrates various embedding frameworks and local storage options, offering functionalities like cache-writing, cache-querying, and cache-clearing through RESTful API. The tool supports multi-tenancy, system commands, and multi-turn dialogue, with features for data isolation, database management, and model loading schemes. Future developments include data isolation based on hyperparameters, enhanced system prompt partitioning storage, and more versatile embedding models and similarity evaluation algorithms.

llm-structured-output

This repository contains a library for constraining LLM generation to structured output, enforcing a JSON schema for precise data types and property names. It includes an acceptor/state machine framework, JSON acceptor, and JSON schema acceptor for guiding decoding in LLMs. The library provides reference implementations using Apple's MLX library and examples for function calling tasks. The tool aims to improve LLM output quality by ensuring adherence to a schema, reducing unnecessary output, and enhancing performance through pre-emptive decoding. Evaluations show performance benchmarks and comparisons with and without schema constraints.

claim-ai-phone-bot

AI-powered call center solution with Azure and OpenAI GPT. The bot can answer calls, understand the customer's request, and provide relevant information or assistance. It can also create a todo list of tasks to complete the claim, and send a report after the call. The bot is customizable, and can be used in multiple languages.

call-center-ai

Call Center AI is an AI-powered call center solution that leverages Azure and OpenAI GPT. It is a proof of concept demonstrating the integration of Azure Communication Services, Azure Cognitive Services, and Azure OpenAI to build an automated call center solution. The project showcases features like accessing claims on a public website, customer conversation history, language change during conversation, bot interaction via phone number, multiple voice tones, lexicon understanding, todo list creation, customizable prompts, content filtering, GPT-4 Turbo for customer requests, specific data schema for claims, documentation database access, SMS report sending, conversation resumption, and more. The system architecture includes components like RAG AI Search, SMS gateway, call gateway, moderation, Cosmos DB, event broker, GPT-4 Turbo, Redis cache, translation service, and more. The tool can be deployed remotely using GitHub Actions and locally with prerequisites like Azure environment setup, configuration file creation, and resource hosting. Advanced usage includes custom training data with AI Search, prompt customization, language customization, moderation level customization, claim data schema customization, OpenAI compatible model usage for the LLM, and Twilio integration for SMS.

otto-m8

otto-m8 is a flowchart based automation platform designed to run deep learning workloads with minimal to no code. It provides a user-friendly interface to spin up a wide range of AI models, including traditional deep learning models and large language models. The tool deploys Docker containers of workflows as APIs for integration with existing workflows, building AI chatbots, or standalone applications. Otto-m8 operates on an Input, Process, Output paradigm, simplifying the process of running AI models into a flowchart-like UI.

empower-functions

Empower Functions is a family of large language models (LLMs) that provide GPT-4 level capabilities for real-world 'tool using' use cases. These models offer compatibility support to be used as drop-in replacements, enabling interactions with external APIs by recognizing when a function needs to be called and generating JSON containing necessary arguments based on user inputs. This capability is crucial for building conversational agents and applications that convert natural language into API calls, facilitating tasks such as weather inquiries, data extraction, and interactions with knowledge bases. The models can handle multi-turn conversations, choose between tools or standard dialogue, ask for clarification on missing parameters, integrate responses with tool outputs in a streaming fashion, and efficiently execute multiple functions either in parallel or sequentially with dependencies.

llm-web-api

LLM Web API is a tool that provides a web page to API interface for ChatGPT, allowing users to bypass Cloudflare challenges, switch models, and dynamically display supported models. It uses Playwright to control a fingerprint browser, simulating user operations to send requests to the OpenAI website and converting the responses into API interfaces. The API currently supports the OpenAI-compatible /v1/chat/completions API, accessible using OpenAI or other compatible clients.

google-cloud-gcp-openai-api

This project provides a drop-in replacement REST API for Google Cloud Vertex AI (PaLM 2, Codey, Gemini) that is compatible with the OpenAI API specifications. It aims to make Google Cloud Platform Vertex AI more accessible by translating OpenAI API calls to Vertex AI. The software is developed in Python and based on FastAPI and LangChain, designed to be simple and customizable for individual needs. It includes step-by-step guides for deployment, supports various OpenAI API services, and offers configuration through environment variables. Additionally, it provides examples for running locally and usage instructions consistent with the OpenAI API format.

fractl

Fractl is a programming language designed for generative AI, making it easier for developers to work with AI-generated code. It features a data-oriented and declarative syntax, making it a better fit for generative AI-powered code generation. Fractl also bridges the gap between traditional programming and visual building, allowing developers to use multiple ways of building, including traditional coding, visual development, and code generation with generative AI. Key concepts in Fractl include a graph-based hierarchical data model, zero-trust programming, declarative dataflow, resolvers, interceptors, and entity-graph-database mapping.

ai-dev-2024-ml-workshop

The 'ai-dev-2024-ml-workshop' repository contains materials for the Deploy and Monitor ML Pipelines workshop at the AI_dev 2024 conference in Paris, focusing on deployment designs of machine learning pipelines using open-source applications and free-tier tools. It demonstrates automating data refresh and forecasting using GitHub Actions and Docker, monitoring with MLflow and YData Profiling, and setting up a monitoring dashboard with Quarto doc on GitHub Pages.

langcorn

LangCorn is an API server that enables you to serve LangChain models and pipelines with ease, leveraging the power of FastAPI for a robust and efficient experience. It offers features such as easy deployment of LangChain models and pipelines, ready-to-use authentication functionality, high-performance FastAPI framework for serving requests, scalability and robustness for language processing applications, support for custom pipelines and processing, well-documented RESTful API endpoints, and asynchronous processing for faster response times.

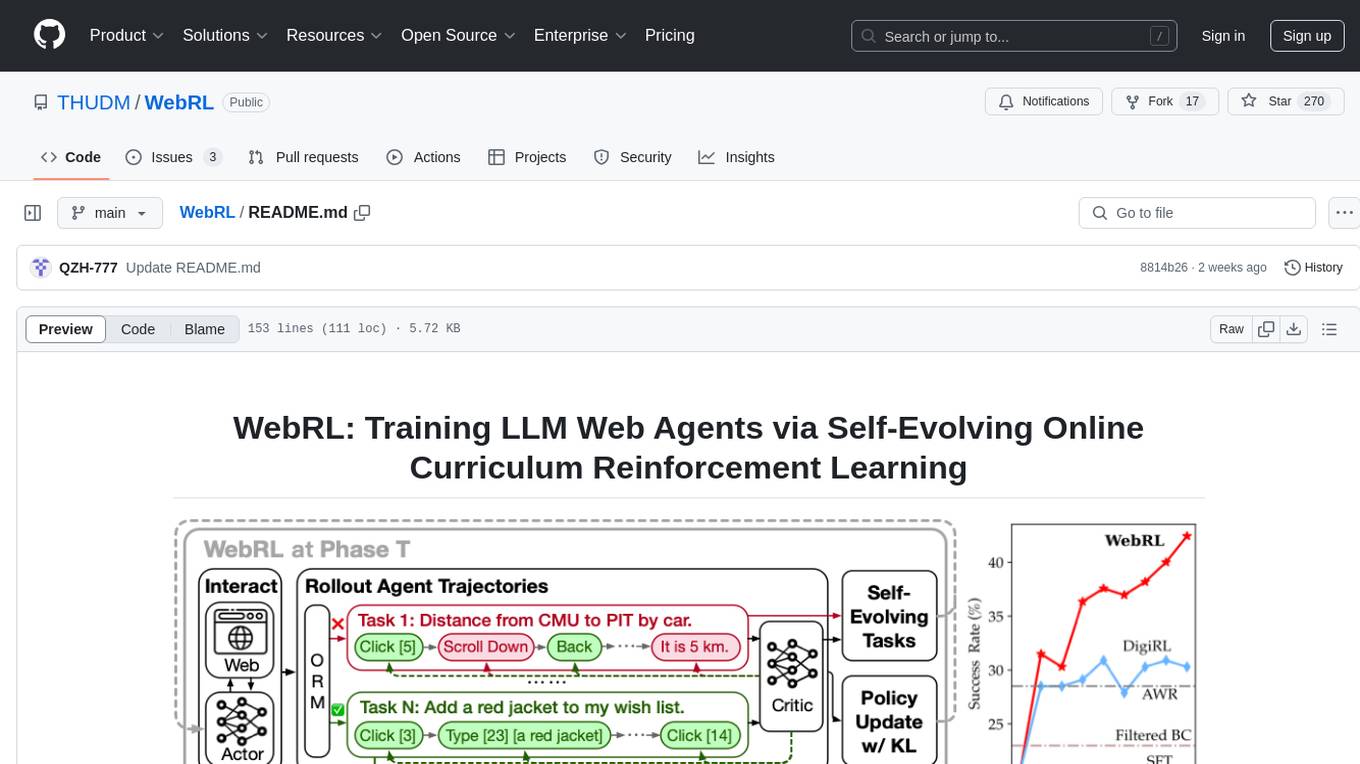

WebRL

WebRL is a self-evolving online curriculum learning framework designed for training web agents in the WebArena environment. It provides model checkpoints, training instructions, and evaluation processes for training the actor and critic models. The tool enables users to generate new instructions and interact with WebArena to configure tasks for training and evaluation.

For similar tasks

guidance-for-a-multi-tenant-generative-ai-gateway-with-cost-and-usage-tracking-on-aws

This repository provides guidance on building a multi-tenant SaaS solution for accessing foundation models using Amazon Bedrock and Amazon SageMaker. It helps enterprise IT teams track usage and costs of foundation models, regulate access, and provide visibility to cost centers. The solution includes an API Gateway design pattern for standardization and governance, enabling loose coupling between model consumers and endpoint services. The CDK Stack deploys resources for private networking, API Gateway, Lambda functions, DynamoDB table, EventBridge, S3 buckets, and Cloudwatch logs.

truss-examples

Truss is the simplest way to serve AI/ML models in production. This repository provides dozens of example models, each ready to deploy as-is or adapt to your needs. To get started, clone the repository, install Truss, and pick a model to deploy by passing a path to that model. Truss will prompt you for an API Key, which can be obtained from the Baseten API keys page. Invocation depends on the model's input and output specifications. Refer to individual model READMEs for invocation details. Contributions of new models and improvements to existing models are welcome. See CONTRIBUTING.md for details.

truss

Truss is a tool that simplifies the process of serving AI/ML models in production. It provides a consistent and easy-to-use interface for packaging, testing, and deploying models, regardless of the framework they were created with. Truss also includes a live reload server for fast feedback during development, and a batteries-included model serving environment that eliminates the need for Docker and Kubernetes configuration.

openmeter

OpenMeter is a real-time and scalable usage metering tool for AI, usage-based billing, infrastructure, and IoT use cases. It provides a REST API for integrations and offers client SDKs in Node.js, Python, Go, and Web. OpenMeter is licensed under the Apache 2.0 License.

opcode

opcode is a powerful desktop application built with Tauri 2 that serves as a command center for interacting with Claude Code. It offers a visual GUI for managing Claude Code sessions, creating custom agents, tracking usage, and more. Users can navigate projects, create specialized AI agents, monitor usage analytics, manage MCP servers, create session checkpoints, edit CLAUDE.md files, and more. The tool bridges the gap between command-line tools and visual experiences, making AI-assisted development more intuitive and productive.

BoxPwnr

BoxPwnr is a tool designed to test the performance of different agentic architectures using Large Language Models (LLMs) to autonomously solve HackTheBox machines. It provides a plug and play system with various strategies and platforms supported. BoxPwnr uses an iterative process where LLMs receive system prompts, suggest commands, execute them in a Docker container, analyze outputs, and repeat until the flag is found. The tool automates commands, saves conversations and commands for analysis, and tracks usage statistics. With recent advancements in LLM technology, BoxPwnr aims to evaluate AI systems' reasoning capabilities, creative thinking, security understanding, problem-solving skills, and code generation abilities.

For similar jobs

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

azure-search-vector-samples

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

booster

Booster is a powerful inference accelerator designed for scaling large language models within production environments or for experimental purposes. It is built with performance and scaling in mind, supporting various CPUs and GPUs, including Nvidia CUDA, Apple Metal, and OpenCL cards. The tool can split large models across multiple GPUs, offering fast inference on machines with beefy GPUs. It supports both regular FP16/FP32 models and quantised versions, along with popular LLM architectures. Additionally, Booster features proprietary Janus Sampling for code generation and non-English languages.

xFasterTransformer

xFasterTransformer is an optimized solution for Large Language Models (LLMs) on the X86 platform, providing high performance and scalability for inference on mainstream LLM models. It offers C++ and Python APIs for easy integration, along with example codes and benchmark scripts. Users can prepare models in a different format, convert them, and use the APIs for tasks like encoding input prompts, generating token ids, and serving inference requests. The tool supports various data types and models, and can run in single or multi-rank modes using MPI. A web demo based on Gradio is available for popular LLM models like ChatGLM and Llama2. Benchmark scripts help evaluate model inference performance quickly, and MLServer enables serving with REST and gRPC interfaces.

amazon-transcribe-live-call-analytics

The Amazon Transcribe Live Call Analytics (LCA) with Agent Assist Sample Solution is designed to help contact centers assess and optimize caller experiences in real time. It leverages Amazon machine learning services like Amazon Transcribe, Amazon Comprehend, and Amazon SageMaker to transcribe and extract insights from contact center audio. The solution provides real-time supervisor and agent assist features, integrates with existing contact centers, and offers a scalable, cost-effective approach to improve customer interactions. The end-to-end architecture includes features like live call transcription, call summarization, AI-powered agent assistance, and real-time analytics. The solution is event-driven, ensuring low latency and seamless processing flow from ingested speech to live webpage updates.

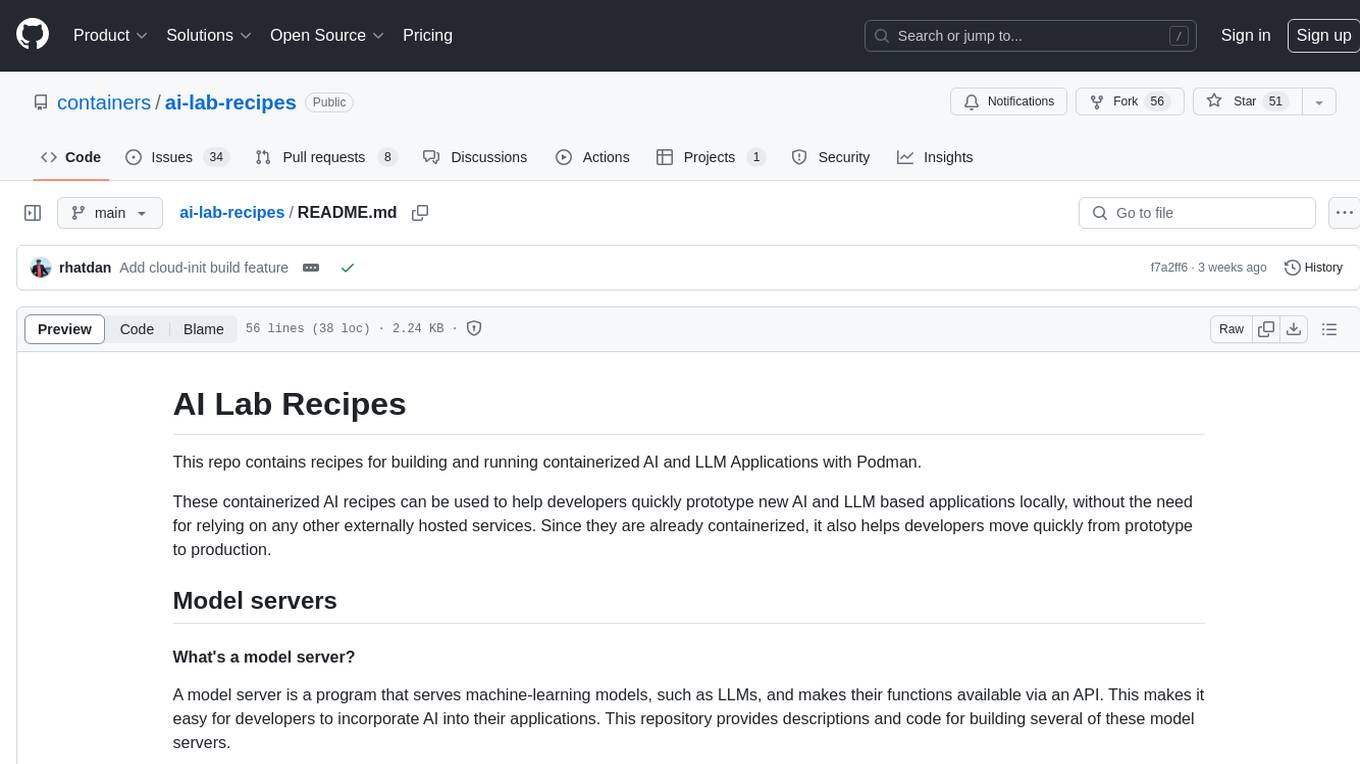

ai-lab-recipes

This repository contains recipes for building and running containerized AI and LLM applications with Podman. It provides model servers that serve machine-learning models via an API, allowing developers to quickly prototype new AI applications locally. The recipes include components like model servers and AI applications for tasks such as chat, summarization, object detection, etc. Images for sample applications and models are available in `quay.io`, and bootable containers for AI training on Linux OS are enabled.

XLearning

XLearning is a scheduling platform for big data and artificial intelligence, supporting various machine learning and deep learning frameworks. It runs on Hadoop Yarn and integrates frameworks like TensorFlow, MXNet, Caffe, Theano, PyTorch, Keras, XGBoost. XLearning offers scalability, compatibility, multiple deep learning framework support, unified data management based on HDFS, visualization display, and compatibility with code at native frameworks. It provides functions for data input/output strategies, container management, TensorBoard service, and resource usage metrics display. XLearning requires JDK >= 1.7 and Maven >= 3.3 for compilation, and deployment on CentOS 7.2 with Java >= 1.7 and Hadoop 2.6, 2.7, 2.8.